Network model

In computing, the network model is a database model conceived as a flexible way of representing objects and their relationships. Its distinguishing feature is that the schema, viewed as a graph in which object types are nodes and relationship types are arcs, is not restricted to being a hierarchy or lattice.

The network model was adopted by the CODASYL Data Base Task Group in 1969 and underwent a major update in 1971. It is sometimes known as the CODASYL model for this reason. A number of network database systems became popular on mainframe and minicomputers through the 1970s before being widely replaced by relational databases in the 1980s.

Overview

While the hierarchical database model structures data as a tree of records, with each record having one parent record and many children, the network model allows each record to have multiple parent and child records, forming a generalized graph structure. This property applies at two levels: the schema is a generalized graph of record types connected by relationship types (called "set types" in CODASYL), and the database itself is a generalized graph of record occurrences connected by relationships (CODASYL "sets"). Cycles are permitted at both levels.

The chief argument in favour of the network model, in comparison to the hierarchical model, was that it allowed a more natural modeling of relationships between entities. Although the model was widely implemented and used, it failed to become dominant for two main reasons. Firstly, IBM chose to stick to the hierarchical model with semi-network extensions in their established products such as IMS and DL/I. Secondly, it was eventually displaced by the relational model, which offered a higher-level, more declarative interface. Until the early 1980s the performance benefits of the low-level navigational interfaces offered by hierarchical and network databases were persuasive for many large-scale applications, but as hardware became faster, the extra productivity and flexibility of the relational model led to the gradual obsolescence of the network model in corporate enterprise usage.

History

The network model's original inventor was Charles Bachman, and it was developed into a standard specification published in 1969 by the Conference on Data Systems Languages (CODASYL) Consortium. This was followed by a second publication in 1971, which became the basis for most implementations. Subsequent work continued into the early 1980s, culminating in an ISO specification, but this had little influence on products.

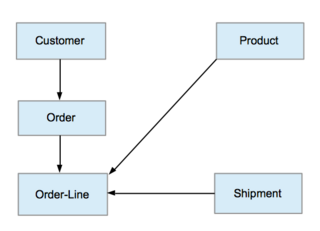

Bachman's influence is recognized in the term Bachman diagram, a diagrammatic notation that represents a database schema expressed using the network model. In a Bachman diagram, named rectangles represent record types, and arrows represent one-to-many relationship types between records (CODASYL set types).

Database systems

Some well-known database systems that use the network model include:

Further reading

- Charles W. Bachman, The Programmer as Navigator. Turing Award lecture, Communications of the ACM, Volume 16, Issue 11, 1973, pp. 653–658, ISSN 0001-0782, doi:10.1145/355611.362534

External links

- CODASYL Systems Committee, "Survey of Data Base Systems" (PDF). 1968-09-03. Archived from the original (PDF) on 2007-10-12.

- Network (CODASYL) Data Model

- SIBAS Database running on Norsk Data Servers

Core Concepts

Definition and Structure

The network model is a database architecture that organizes data in a graph-like structure, where records function as nodes and sets serve as directed edges to represent relationships between them. This approach facilitates the modeling of complex interconnections, including many-to-many relationships, by allowing records to participate in multiple linkages without the rigid parent-child hierarchy of tree structures.[1]

The fundamental structural principle of the network model revolves around owner-member relationships, in which one record type is designated as the owner (or parent) of a set occurrence, and one or more other record types act as members (or children). Each set occurrence links a single owner to zero or more members, enabling flexible navigation across the data graph while maintaining directed associations that support efficient querying of interrelated records. Unlike simpler models limited to single-parentage, this principle allows members to connect to multiple owners through different sets, thereby accommodating real-world scenarios with multifaceted dependencies.[6][1]

Key components of the network model include record types, which are structural definitions analogous to entities and comprise one or more data items (fields) that store specific attribute values, such as names or identifiers. Set types, in turn, specify the relationships between record types, implemented through pointer-based links that physically connect occurrences of owner and member records in storage. These pointers enable direct traversal from owners to members and, in some cases, vice versa, forming the backbone of data access.[1][6]

In graphical representations, the network model's structure is commonly illustrated via data-structure diagrams, which depict record types as rectangular boxes and set relationships as arrows or lines indicating the direction from owner to member. These diagrams highlight the interconnected nature of the database, showing how pointers facilitate navigation paths akin to traversing a directed graph, thus providing a visual schema for understanding the overall topology.[1][6]

Records, Sets, and Relationships
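The owner-member structure of sets can be sketched in Python. This is only an in-memory illustration, not how any CODASYL system stores data: the `Record` class, the `connect` helper, and the SUPPLIER, PART, and SUPPLY names are all assumptions made for the example. It shows one record belonging to two different set types, which is how the model expresses a many-to-many relationship through an intermediate record.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)
class Record:
    """One record occurrence: a record type name plus its data items."""
    record_type: str
    items: dict
    # Owner of this record in each set type it belongs to, keyed by set type.
    owners: dict = field(default_factory=dict)
    # Members this record owns, keyed by set type.
    members: dict = field(default_factory=dict)


def connect(set_type: str, owner: Record, member: Record) -> None:
    """Link member to owner within one set type (at most one owner per set type)."""
    if set_type in member.owners:
        raise ValueError(f"{member.record_type} already has an owner in {set_type}")
    member.owners[set_type] = owner
    owner.members.setdefault(set_type, []).append(member)


# Hypothetical record and set type names, chosen only for illustration.
supplier = Record("SUPPLIER", {"name": "Acme"})
part = Record("PART", {"name": "Bolt"})
supply = Record("SUPPLY", {"qty": 500})  # intermediate record joining the two

connect("SUPPLIER-SUPPLY", supplier, supply)
connect("PART-SUPPLY", part, supply)

# The SUPPLY record has two owners, one per set type.
owner_names = sorted(o.items["name"] for o in supply.owners.values())
print(owner_names)  # ['Acme', 'Bolt']
```

Keeping the owner link on the member and the member list on the owner mirrors the two directions of traversal that set pointers provide.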

In the CODASYL network model, data is organized into records, which serve as the fundamental units of storage and retrieval. A record type defines the structure of a group of similar records, consisting of named data items or fields that hold atomic values, such as strings or numbers.[1] Records are distinguished as logical or physical: logical records represent the conceptual view accessible to applications, while physical records handle the underlying storage, often implemented as blocks or pages in files.[7] Within relationships, records are further classified as owner records, which act as parents in a set, or member records, which act as children linked to one or more owners.[6]

Sets form the core mechanism for expressing relationships between record types in the network model. A set occurrence is an ordered collection that links one owner record to zero or more member records of another type, establishing a one-to-many association from owner to members.[1] Set types specify this linkage, with ordering modes such as insertion at the first or last position, or sorting by a key field, to determine the sequence of members.[6] Within a single set type, a member is restricted to exactly one owner occurrence. Multi-parent structures are achieved indirectly by allowing a member record type to participate in multiple set types, each with a different owner, thus enabling complex many-to-many relationships through intermediary records if needed.[7]

Navigation through these relationships relies on pointers embedded within records, forming circular linked lists or rings that connect an owner to its members for efficient traversal.[7] Currency indicators maintain the position during operations, tracking the current record of a specific record type, of a set type, or of the entire run unit (a transaction-like scope), allowing commands to find and retrieve related data by moving forward, backward, or to the first or last position in a set.[6] This pointer-based approach facilitates direct access without full scans, with each set occurrence represented as a self-contained structure.[1]

Several constraints ensure data integrity in sets. Uniqueness rules prohibit a member record from belonging to more than one occurrence of the same set type, preventing duplicates within a single relationship while allowing participation in multiple set types.[7] Cardinality is enforced as one-to-many per set occurrence, with exactly one owner per occurrence and a variable number of members (zero or more), though system limits may cap the maximum number of members to manage storage.[6] Additional options like mandatory membership require every member to connect to an owner, while optional membership allows standalone records.[7]

Historical Development

Origins and Early Influences

The network database model emerged in the early 1960s, drawing foundational influences from graph theory, which provided a conceptual framework for representing complex interconnections between data entities as nodes and edges. This mathematical approach, developed in the 18th and 19th centuries but increasingly applied in computer science by the mid-20th century, enabled more flexible modeling of relationships compared to linear or tree-like structures prevalent in earlier file systems.[8] Charles Bachman, while working at General Electric, leveraged these graph-theoretic principles to create the Integrated Data Store (IDS) in 1963, marking the first direct-access database management system and laying the groundwork for the network model.[9] IDS represented data as records linked through sets, allowing navigation across multifaceted associations in a graph-like manner.[10]

Early motivations for the network model stemmed from the growing demands of business computing in the 1960s, where organizations required integrated systems to manage intricate, many-to-many relationships in operational data, such as those in supply chains and production processes, that rigid file management techniques could not efficiently handle. At the time, data storage relied heavily on sequential tape systems or early navigational databases, but these lacked the ability to support shared data access across departments without redundancy. IBM's Information Management System (IMS), introduced in 1966 as a hierarchical model for the Apollo space program, further highlighted the limitations of tree-structured hierarchies, which struggled with non-parent-child linkages common in real-world business scenarios.[9]

Bachman, as a key figure, pioneered the model's development through IDS and its companion Integrated File System (FS), aiming to enable company-wide data sharing at GE's manufacturing divisions. His innovations, including data structure diagrams for visualizing relationships, earned him the 1973 ACM Turing Award for contributions to database technology.[11]

Initial adoption of the network model occurred in mid-1960s mainframe environments, particularly for manufacturing and inventory management applications where complex interdependencies between parts, suppliers, and production lines necessitated graph-based navigation. GE implemented IDS across its facilities to streamline appliance production data, demonstrating the model's practicality for large-scale, integrated operations on systems like the GE-600 series computers. This early use case influenced subsequent implementations at other industrial firms, establishing the network approach as a viable alternative for handling enterprise-scale data before broader standardization efforts.[12]

CODASYL Standardization

The Conference on Data Systems Languages (CODASYL), established in 1959 by the U.S. Department of Defense to standardize programming languages such as COBOL, turned its attention to database management in the 1960s.[13] Building on early influences like Charles Bachman's Integrated Data Store (IDS) system, CODASYL formed the List Processing Task Force in 1965, which was renamed the Data Base Task Group (DBTG) in May 1967, to develop specifications for a common database management system compatible with COBOL and other languages.[9] The DBTG stabilized its membership in January 1969 under chairman A. Metaxides and published its first proposals that October, laying the groundwork for the network database model.[14]

The pivotal 1971 DBTG Report, released in April and reviewed by the CODASYL Programming Language Committee in May, formalized the network model by defining key components including the schema for overall database structure, subschema for user views, and data storage mechanisms using records and sets to represent complex relationships.[14] This report incorporated 130 of 179 submitted proposals, emphasizing a three-level architecture to separate conceptual, external, and internal data representations.[14] The June 1973 Journal of Development, produced by the Data Description Language Committee (DDLC) formed in 1971, provided updates refining these elements, particularly introducing privacy locks for controlling access to records and items, as well as module concepts for organizing subschemas to enhance security and modularity.[14]

Central to these standards were the Data Definition Language (DDL) specifications for describing database structures independently of host languages, and the Data Manipulation Language (DML) for operations like storing, retrieving, and updating data, both designed to ensure portability across diverse systems and vendors.[14] These vendor-neutral features promoted interoperability, reducing proprietary lock-in and facilitating program migration.[14]

The CODASYL standards significantly influenced the database industry, driving widespread adoption of network model systems in government agencies and large enterprises during the 1970s, as evidenced by commercial implementations like Cullinet's IDMS and other COBOL-integrated solutions that supported complex, many-to-many data relationships in mainframe environments.[15] This peaked in the mid-1970s, with the standards enabling scalable data management for critical applications in sectors requiring robust, pointer-based navigation.[16]

Technical Implementation

Data Definition Language

The Data Definition Language (DDL) in the CODASYL network model provides a formal syntax for defining the logical and physical structure of the database, primarily through schema, subschema, and storage specifications.[14] The schema DDL establishes the overall database blueprint, including record types, data items, and set types that model relationships between records.[17]

Schema definition begins with the RECORD entry, which declares a record type and its constituent data items. The basic syntax is RECORD NAME IS record-name, followed by subentries for data items using level numbers (e.g., 01 for the record, 02 for subgroups) and clauses like PICTURE for formatting or TYPE for data categories such as arithmetic, character string, or database key.[14] For example:

RECORD NAME IS EMPLOYEE

01 EMP-ID PICTURE IS "9(6)"

01 EMP-NAME PICTURE IS "X(30)"

01 SALARY TYPE IS DECIMAL(7,2).

Set types are declared with a SET entry of the form SET NAME IS set-name OWNER IS owner-record MEMBER IS member-record, optionally including ORDER (e.g., ASCENDING on a key) or membership rules (e.g., MANDATORY AUTOMATIC for DBMS-managed links).[14] An example is:

SET NAME IS EMPLOYEE-DEPT

OWNER IS DEPARTMENT

MEMBER IS EMPLOYEE

MANDATORY AUTOMATIC

ORDER IS ASCENDING DEPT-NO.

The subschema DDL defines an application-specific view over part of the schema. Declared with SUBSCHEMA NAME IS subschema-name WITHIN SCHEMA schema-name PRIVACY KEY IS 'password', it supports modifications such as redefining vectors as fixed arrays or applying privacy locks to restrict access.[17] This enables controlled views without altering the underlying schema.

Storage schema details the physical organization, starting with AREA entries like AREA NAME IS area-name [TEMPORARY], which divide the database into logical storage regions where records are assigned via a WITHIN clause in the RECORD definition (e.g., WITHIN MAIN-AREA).[14] Pages serve as fixed-length physical units within areas, managed automatically by the DBMS for record placement.[17] Indexing is handled through clauses like INDEXED in SET entries or SEARCH KEY in records, enabling efficient retrieval based on specified keys.[14]

Key DDL elements include locators, implemented as database keys (DBKEYs), which act as unique pointers to record occurrences for direct access; these are declared with TYPE IS DATA-BASE-KEY and managed by the DBMS.[17] Calculated fields, or calc keys, support computed values for storage or access, using LOCATION MODE IS CALC USING key-fields in the RECORD entry to derive record positions dynamically (e.g., hashing on a name field).[14] Rename clauses appear in subschemas to alias data items or records, such as redefining a field name for application-specific use without impacting the schema.[17]
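The idea behind LOCATION MODE IS CALC can be illustrated with a short Python sketch. Everything here is an assumption made for the example: the area size, the use of CRC-32 as the hash, and the `store` and `find_calc` helpers. Real systems choose their own hashing and page layout. The point is only that the key value determines the page, so a lookup inspects one page instead of scanning the area.

```python
import zlib

PAGES_IN_AREA = 8  # hypothetical number of pages in one area


def calc_page(key: str, pages_in_area: int = PAGES_IN_AREA) -> int:
    """Hash a CALC key value to a page number within the area."""
    return zlib.crc32(key.encode("utf-8")) % pages_in_area


# The area is modelled as a mapping from page number to the records on that page.
area = {page: [] for page in range(PAGES_IN_AREA)}


def store(record: dict, calc_key: str) -> tuple:
    """Place the record on its hashed page and return a (page, slot) locator."""
    page = calc_page(record[calc_key])
    area[page].append(record)
    return (page, len(area[page]) - 1)


def find_calc(value: str, calc_key: str):
    """Search only the page that the key value hashes to."""
    for record in area[calc_page(value)]:
        if record[calc_key] == value:
            return record
    return None


db_key = store({"EMP-NAME": "SMITH", "EMP-ID": 100001}, "EMP-NAME")
found = find_calc("SMITH", "EMP-NAME")
print(found["EMP-ID"])  # 100001
```

The returned `(page, slot)` pair plays the role of a database key in this sketch: a direct locator for one record occurrence.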

Data Manipulation Language

The Data Manipulation Language (DML) in the network model, as defined by the CODASYL Data Base Task Group (DBTG), is a procedural interface embedded within a host programming language such as COBOL or PL/I, enabling applications to navigate and manipulate records through explicit commands that manage currency pointers and status indicators.[18] Unlike declarative query languages, it requires programmers to specify step-by-step operations, including loops and conditional checks, to traverse the complex graph of records and sets, reflecting the model's emphasis on direct pointer-based access for efficiency in hierarchical or many-to-many relationships.[7]

Navigation in the network model relies on commands that position currency indicators, which point to the current record of the run unit (CRU), of each record type, and of each set type, to facilitate traversal. The FIND command locates a specific record or set element, setting the appropriate currency; for example, FIND ANY CUSTOMER USING CUSTOMER-NAME retrieves the first matching customer record, while FIND OWNER DEPOSITOR positions to the owner record in the depositor set, and FIND NEXT MEMBER WITHIN DEPOSITOR advances to the subsequent member record linked to the current owner.[18] Once positioned, the GET command transfers the current record's data into the program's user work area (UWA) for processing, as in GET CUSTOMER after a FIND operation.[6] The READY command prepares database areas or realms for access, specifying modes like retrieval or update to enable subsequent operations on sets, ensuring controlled concurrency.[19]

Insertion operations use the STORE command to add new records to the database, populating the UWA with data before execution; for instance, STORE ACCOUNT creates a new account record, automatically connecting it to an owner set if schema rules dictate mandatory membership.[7] Set membership updates accompany this via CONNECT, which links the new record as a member to a specified owner, such as CONNECT ACCOUNT TO DEPOSITOR after storing an account under a customer.[18] Deletion employs the ERASE command to remove the current record, with options like ERASE ALL CUSTOMER recursively deleting the owner and all connected members; prior to erasure, DISCONNECT severs set links, e.g., DISCONNECT ACCOUNT FROM DEPOSITOR, to maintain referential integrity without cascading deletes unless specified.[6]
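The effect of STORE, CONNECT, DISCONNECT, and ERASE ALL on set membership can be mimicked with a small in-memory Python model. This is a sketch under stated assumptions, not a DBMS interface: the `SetLinks` class, the `store` and `erase_all` functions, and the record identifiers C1, A1, and A2 are invented for illustration, with DEPOSITOR borrowed from the examples above as the set name.

```python
class SetLinks:
    """Owner/member links for one set type (a hypothetical helper class)."""

    def __init__(self, name):
        self.name = name
        self.owner_of = {}    # member id -> owner id
        self.members_of = {}  # owner id -> ordered list of member ids

    def connect(self, member, owner):
        # A member may belong to only one occurrence of a given set type.
        if member in self.owner_of:
            raise ValueError("member already belongs to an occurrence of this set")
        self.owner_of[member] = owner
        self.members_of.setdefault(owner, []).append(member)

    def disconnect(self, member):
        owner = self.owner_of.pop(member)
        self.members_of[owner].remove(member)


records = {}                       # record id -> data items
depositor = SetLinks("DEPOSITOR")


def store(record_id, items):
    """Add a record occurrence, loosely analogous to STORE."""
    records[record_id] = items


def erase_all(owner):
    """Remove the owner and every member still connected to it."""
    for member in list(depositor.members_of.get(owner, [])):
        depositor.disconnect(member)
        del records[member]
    depositor.members_of.pop(owner, None)
    del records[owner]


store("C1", {"CUSTOMER-NAME": "Hayes"})
store("A1", {"BALANCE": 400})
store("A2", {"BALANCE": 900})
depositor.connect("A1", "C1")
depositor.connect("A2", "C1")

depositor.disconnect("A2")  # A2 stays stored but no longer has an owner
erase_all("C1")             # removes C1 and its remaining member A1
print(sorted(records))      # ['A2']
```

Disconnecting A2 first is what keeps it out of the cascade, which is the distinction the paragraph above draws between DISCONNECT and ERASE ALL.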

Updates are handled by the MODIFY command, which alters data items in the current record after a positioning FIND and GET; for example, after FIND FOR UPDATE CUSTOMER and GET CUSTOMER, MODIFY CUSTOMER can change an address field, with the system updating currency pointers to reflect the modified instance.[18] This requires explicit "for update" clauses in FIND to lock the record, preventing concurrent modifications.

The procedural essence of the DML demands application code to orchestrate navigation, often through iterative constructs like while loops checking status flags (e.g., DB-STATUS for success or end-of-set), contrasting sharply with declarative paradigms where queries abstract away pointer management.[7] This approach, while verbose, allows fine-grained control suited to the network model's linked structures.
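A Python sketch of this loop-and-check style follows. The `RunUnit` class and its `find_any`, `find_next`, and `get` methods are assumptions that loosely imitate FIND ANY, FIND NEXT, and GET; the status strings are placeholders rather than codes from any real system. The program, not the database, drives the traversal and tests the status after every step.

```python
OK = "OK"
END_OF_SET = "END-OF-SET"  # placeholder status values, not real DBMS codes
NOT_FOUND = "NOT-FOUND"


class RunUnit:
    """Holds currency for one owner and one position within its set."""

    def __init__(self, members_by_owner):
        self.members_by_owner = members_by_owner  # owner name -> member records
        self.current_owner = None                 # currency: owner of the set
        self.position = -1                        # currency: place within the set
        self.db_status = OK

    def find_any(self, owner_name):
        """Position on an owner record, or report that it does not exist."""
        if owner_name in self.members_by_owner:
            self.current_owner, self.position = owner_name, -1
            self.db_status = OK
        else:
            self.db_status = NOT_FOUND

    def find_next(self):
        """Advance to the next member of the current owner's set."""
        members = self.members_by_owner.get(self.current_owner, [])
        if self.position + 1 < len(members):
            self.position += 1
            self.db_status = OK
        else:
            self.db_status = END_OF_SET

    def get(self):
        """Copy the current member into the caller's work area."""
        return dict(self.members_by_owner[self.current_owner][self.position])


run = RunUnit({"Hayes": [{"BALANCE": 400}, {"BALANCE": 900}]})

run.find_any("Hayes")
total = 0
run.find_next()
while run.db_status == OK:      # the application checks status after each step
    total += run.get()["BALANCE"]
    run.find_next()

print(total)  # 1300
```

A declarative query would express the same total as a single statement; here the loop, the position tracking, and the end-of-set test are all the programmer's responsibility.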