OpenVMS

| OpenVMS | |

|---|---|

| |

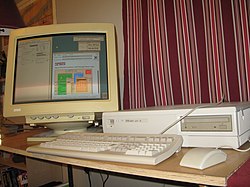

OpenVMS V7.3-1 running the CDE-based DECwindows "New Desktop" GUI | |

| Developer | VMS Software Inc (VSI)[1] (previously Digital Equipment Corporation, Compaq, Hewlett-Packard) |

| Written in | Primarily C, BLISS, VAX MACRO, DCL.[2] Other languages also used.[3] |

| Working state | Current |

| Source model | Closed-source with open-source components. Formerly source available[4][5] |

| Initial release | Announced: October 25, 1977 V1.0 / August 1978 |

| Latest release | V9.2-3 / November 20, 2024 |

| Marketing target | Servers (historically Minicomputers, Workstations) |

| Available in | English, Japanese.[6] Historical support for Chinese (both Traditional and Simplified characters), Korean, Thai.[7] |

| Update method | Concurrent upgrades, rolling upgrades |

| Package manager | PCSI and VMSINSTAL |

| Supported platforms | VAX, Alpha, Itanium, x86-64 |

| Kernel type | Monolithic kernel with loadable modules |

| Influenced | VAXELN, MICA, Windows NT |

| Influenced by | RSX-11M |

| Default user interface | DCL CLI and DECwindows GUI |

| License | Proprietary |

| Official website | vmssoftware |

OpenVMS, often referred to as just VMS,[8] is a multi-user, multiprocessing and virtual memory-based operating system. It is designed to support time-sharing, batch processing, transaction processing and workstation applications.[9] Customers using OpenVMS include banks and financial services, hospitals and healthcare, telecommunications operators, network information services, and industrial manufacturers.[10][11] During the 1990s and 2000s, there were approximately half a million VMS systems in operation worldwide.[12][13][14]

It was first announced by Digital Equipment Corporation (DEC) as VAX/VMS (Virtual Address eXtension/Virtual Memory System[15]) alongside the VAX-11/780 minicomputer in 1977.[16][17][18] OpenVMS has subsequently been ported to run on DEC Alpha systems, the Itanium-based HPE Integrity Servers,[19] and select x86-64 hardware and hypervisors.[20] Since 2014, OpenVMS has been developed and supported by VMS Software Inc. (VSI).[21][22] OpenVMS offers high availability through clustering, the ability to distribute the system over multiple physical machines.[23] This allows clustered applications and data to remain continuously available while operating system software and hardware maintenance and upgrades are performed,[24] or if part of the cluster is destroyed.[25] VMS cluster uptimes of 17 years have been reported.[26]

History

Origin and name changes

In April 1975, Digital Equipment Corporation (DEC) embarked on a project to design a 32-bit extension to its PDP-11 computer line. The hardware component was code named Star; the operating system was code named Starlet. Roger Gourd was the project lead for VMS. Software engineers Dave Cutler, Dick Hustvedt, and Peter Lipman acted as technical project leaders.[27] To avoid a repetition of PDP-11's many incompatible operating systems, the new operating system would be capable of real-time, time-sharing, and transaction processing.[28] The Star and Starlet projects culminated in the VAX-11/780 computer and the VAX/VMS operating system. The Starlet project's code name survives in VMS in the name of several of the system libraries, including STARLET.OLB and STARLET.MLB.[29] VMS was mostly written in VAX MACRO with some components written in BLISS.[8]

One of the original goals for VMS was backward compatibility with DEC's existing RSX-11M operating system.[8] Prior to the V4.0 release, VAX/VMS included a compatibility layer named the RSX Application Migration Executive (RSX AME), which allowed user-mode RSX-11M software to be run unmodified on top of VMS.[30] The RSX AME played an important role on early versions of VAX/VMS, which used certain RSX-11M user-mode utilities before native VAX versions had been developed.[8] By the V3.0 release, all compatibility-mode utilities were replaced with native implementations.[31] In VAX/VMS V4.0, RSX AME was removed from the base system, and replaced with an optional layered product named VAX-11 RSX.[32]

By the early 1980s, VAX/VMS was very successful in the market. Although Ingres was created on Unix running on DEC systems, its developers ported it to VMS, believing that doing so was necessary for commercial success. Demand for the VMS version was so much greater that they neglected the Unix version.[35] A number of distributions of VAX/VMS were created:

- MicroVMS was a distribution of VAX/VMS designed for MicroVAX and VAXstation hardware, which had less memory and disk space than larger VAX systems of the time.[36] MicroVMS split up VAX/VMS into multiple kits, which a customer could use to install a subset of VAX/VMS tailored to their specific requirements.[37] MicroVMS releases were produced for each of the V4.x releases of VAX/VMS, and MicroVMS was discontinued when VAX/VMS V5.0 was released.[38][39]

- Desktop-VMS was a short-lived distribution of VAX/VMS sold with VAXstation systems. It consisted of a single CD-ROM containing a bundle of VMS, DECwindows, DECnet, VAXcluster support, and a setup process designed for non-technical users.[40][41] Desktop-VMS could either be run directly from the CD or could be installed onto a hard drive.[42] Desktop-VMS had its own versioning scheme beginning with V1.0, which corresponded to the V5.x releases of VMS.[43]

- An unofficial derivative of VAX/VMS named MOS VP (Russian: Многофункциональная операционная система с виртуальной памятью, МОС ВП, lit. 'Multifunctional Operating System with Virtual Memory')[44] was created in the Soviet Union during the 1980s for the SM 1700 line of VAX clone hardware.[45][46] MOS VP added support for the Cyrillic script and translated parts of the user interface into Russian.[47] Similar derivatives of MicroVMS known as MicroMOS VP (Russian: МикроМОС ВП) or MOS-32M (Russian: МОС-32М) were also created.

With the V5.0 release in April 1988, DEC began to refer to VAX/VMS as simply VMS in its documentation.[48] In July 1992,[49] DEC renamed VAX/VMS to OpenVMS as an indication of its support of open systems industry standards such as POSIX and Unix compatibility,[50] and to drop the VAX connection since a migration to a different architecture was underway. The OpenVMS name was first used with the OpenVMS AXP V1.0 release in November 1992. DEC began using the OpenVMS VAX name with the V6.0 release in June 1993.[51]

Port to Alpha

During the 1980s, DEC planned to replace the VAX platform and the VMS operating system with the PRISM architecture and the MICA operating system.[53] When these projects were cancelled in 1988, a team was set up to design new VAX/VMS systems of comparable performance to RISC-based Unix systems.[54] After a number of failed attempts to design a faster VAX-compatible processor, the group demonstrated the feasibility of porting VMS and its applications to a RISC architecture based on PRISM.[55] This led to the creation of the Alpha architecture.[56] The project to port VMS to Alpha began in 1989, and first booted on a prototype Alpha EV3-based Alpha Demonstration Unit in early 1991.[55][57]

The main challenge in porting VMS to a new architecture was that VMS and the VAX were designed together, meaning that VMS was dependent on certain details of the VAX architecture.[58] Furthermore, a significant amount of the VMS kernel, layered products, and customer-developed applications were implemented in VAX MACRO assembly code.[8] Some of the changes needed to decouple VMS from the VAX architecture included the creation of the MACRO-32 compiler, which treated VAX MACRO as a high-level language and compiled it to Alpha object code,[59] and the emulation in PALcode of certain low-level details of the VAX architecture, such as interrupt handling and atomic queue instructions.

The VMS port to Alpha resulted in the creation of two separate codebases: one for VAX, and another for Alpha.[4] The Alpha code library was based on a snapshot of the VAX/VMS code base circa V5.4-2.[60] 1992 saw the release of the first version of OpenVMS for Alpha AXP systems, designated OpenVMS AXP V1.0. In 1994, with the release of OpenVMS V6.1, feature (and version number) parity between the VAX and Alpha variants was achieved; this was the so-called Functional Equivalence release.[60] The decision to use the 1.x version numbering stream for the pre-production quality releases of OpenVMS AXP confused some customers, and was not repeated in the subsequent ports of OpenVMS to new platforms.[58]

When VMS was ported to Alpha, it was initially left as a 32-bit only operating system.[59] This was done to ensure backwards compatibility with software written for the 32-bit VAX. 64-bit addressing was first added for Alpha in the V7.0 release.[61] In order to allow 64-bit code to interoperate with older 32-bit code, OpenVMS does not create a distinction between 32-bit and 64-bit executables, but instead allows for both 32-bit and 64-bit pointers to be used within the same code.[62] This is known as mixed pointer support. The 64-bit OpenVMS Alpha releases support a maximum virtual address space size of 8 TiB (a 43-bit address space), which is the maximum supported by the Alpha 21064 and Alpha 21164.[63]
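The address-space sizes above follow from simple powers of two. The sketch below, written in Python purely for illustration, checks that 43 address bits give 8 TiB and 32 bits give the 4 GiB limit of the VAX, and shows one way a 32-bit pointer can be widened to 64 bits by sign extension, which is how Alpha's longword load instruction widens 32-bit values. The function names are hypothetical, and the sketch does not model how OpenVMS actually lays out its address space.

```python
TIB = 2 ** 40  # bytes in one tebibyte
GIB = 2 ** 30  # bytes in one gibibyte


def address_space_bytes(bits: int) -> int:
    """Number of distinct byte addresses reachable with `bits` address bits."""
    return 2 ** bits


def sign_extend_32(value: int) -> int:
    """Widen a 32-bit value to 64 bits by copying bit 31 into bits 32-63."""
    value &= 0xFFFF_FFFF
    if value & 0x8000_0000:
        value |= 0xFFFF_FFFF_0000_0000
    return value


if __name__ == "__main__":
    print(address_space_bytes(43) // TIB)      # 8 (TiB, the 43-bit Alpha limit)
    print(address_space_bytes(32) // GIB)      # 4 (GiB, the 32-bit VAX limit)
    print(hex(sign_extend_32(0x7FFF_0000)))    # 0x7fff0000 (bit 31 clear: unchanged)
    print(hex(sign_extend_32(0x8000_0000)))    # 0xffffffff80000000
```

Under this scheme a 32-bit pointer with bit 31 clear lands in the lowest 2 GiB of the 64-bit space and one with bit 31 set lands in the highest 2 GiB, so both kinds of pointer can name the same locations.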

One of the more noteworthy Alpha-only features of OpenVMS was OpenVMS Galaxy, which allowed the partitioning of a single SMP server to run multiple instances of OpenVMS. Galaxy supported dynamic resource allocation to running partitions, and the ability to share memory between partitions.[64][65]

Port to Intel Itanium

In 2001, prior to its acquisition by Hewlett-Packard, Compaq announced the port of OpenVMS to the Intel Itanium architecture.[66] The Itanium port was the result of Compaq's decision to discontinue future development of the Alpha architecture in favour of adopting the then-new Itanium architecture.[67] The porting began in late 2001, and the first boot took place on January 31, 2003.[68] The first boot consisted of booting a minimal system configuration on an HP i2000 workstation, logging in as the SYSTEM user, and running the DIRECTORY command. The Itanium port of OpenVMS supports specific models and configurations of HPE Integrity Servers.[9] The Itanium releases were originally named HP OpenVMS Industry Standard 64 for Integrity Servers, although the names OpenVMS I64 or OpenVMS for Integrity Servers are more commonly used.[69]

The Itanium port was accomplished using source code maintained in common within the OpenVMS Alpha source code library, with the addition of conditional code and additional modules where changes specific to Itanium were required.[58] This required certain architectural dependencies of OpenVMS to be replaced, or emulated in software. Some of the changes included using the Extensible Firmware Interface (EFI) to boot the operating system,[70] reimplementing the functionality previously provided by Alpha PALcode inside the kernel,[71] using new executable file formats (Executable and Linkable Format and DWARF),[72] and adopting IEEE 754 as the default floating point format.[73]

As with the VAX to Alpha port, a binary translator for Alpha to Itanium was made available, allowing user-mode OpenVMS Alpha software to be ported to Itanium in situations where it was not possible to recompile the source code. This translator is known as the Alpha Environment Software Translator (AEST), and it also supported translating VAX executables which had already been translated to Alpha with VEST, the VAX-to-Alpha translator.[74]

Two pre-production releases, OpenVMS I64 V8.0 and V8.1, were made available on June 30, 2003, and December 18, 2003, respectively. These releases were intended for HP organizations and third-party vendors involved with porting software packages to OpenVMS I64. The first production release, V8.2, was released in February 2005. V8.2 was also released for Alpha; subsequent V8.x releases of OpenVMS have maintained feature parity between the Alpha and Itanium architectures.[75]

Port to x86-64

When VMS Software Inc. (VSI) announced that they had secured the rights to develop the OpenVMS operating system from HP, they also announced their intention to port OpenVMS to the x86-64 architecture.[76] The porting effort ran concurrently with the establishment of the company, as well as the development of VSI's own Itanium and Alpha releases of OpenVMS V8.4-x.

The x86-64 port is targeted for specific servers from HPE and Dell, as well as certain virtual machine hypervisors.[77] Initial support was targeted for KVM and VirtualBox. Support for VMware was announced in 2020, and Hyper-V is being explored as a future target.[78] In 2021, the x86-64 port was demonstrated running on an Intel Atom-based single-board computer.[79]

As with the Alpha and Itanium ports, the x86-64 port made some changes to simplify porting and supporting OpenVMS on the new platform including: replacing the proprietary GEM compiler backend used by the VMS compilers with LLVM,[80] changing the boot process so that OpenVMS is booted from a memory disk,[81] and simulating the four privilege levels of OpenVMS in software since only two of x86-64's privilege levels are usable by OpenVMS.[71]

The first boot was announced on May 14, 2019. This involved booting OpenVMS on VirtualBox and successfully running the DIRECTORY command.[82] In May 2020, the V9.0 Early Adopter's Kit release was made available to a small number of customers. This consisted of the OpenVMS operating system running in a VirtualBox VM with certain limitations; most significantly, few layered products were available, and code could only be compiled for x86-64 using cross compilers which ran on Itanium-based OpenVMS systems.[20] Following the V9.0 release, VSI released a series of updates on a monthly or bimonthly basis which added additional functionality and hypervisor support. These were designated V9.0-A through V9.0-H.[83] In June 2021, VSI released the V9.1 Field Test, making it available to VSI's customers and partners.[84] V9.1 shipped as an ISO image which can be installed onto a variety of hypervisors, and onto HPE ProLiant DL380 servers starting with the V9.1-A release.[85]

Influence

During the 1980s, the MICA operating system for the PRISM architecture was intended to be the eventual successor to VMS. MICA was designed to maintain backwards compatibility with VMS applications while also supporting Ultrix applications on top of the same kernel.[86] MICA was ultimately cancelled along with the rest of the PRISM platform, leading Dave Cutler to leave DEC for Microsoft. At Microsoft, Cutler led the creation of the Windows NT operating system, which was heavily inspired by the architecture of MICA.[87] As a result, VMS is considered an ancestor of Windows NT, together with RSX-11, VAXELN and MICA, and many similarities exist between VMS and NT.[88]

A now-defunct project named FreeVMS attempted to develop an open-source operating system following VMS conventions.[89][90] FreeVMS was built on top of the L4 microkernel and supported the x86-64 architecture. Earlier, DEC employees, with assistance from Carnegie Mellon University, had investigated implementing VMS on a microkernel-based architecture as a prototyping exercise, using the Mach 3.0 microkernel ported to VAXstation 3100 hardware and adopting a multiserver architectural model.[91]

Architecture

The OpenVMS operating system has a layered architecture, consisting of a privileged Executive, an intermediately privileged Command Language Interpreter, and unprivileged utilities and run-time libraries (RTLs).[92] Unprivileged code typically invokes the functionality of the Executive through system services (equivalent to system calls in other operating systems).

OpenVMS' layers and mechanisms are built around certain features of the VAX architecture, including:[92][93]

- The availability of four processor access modes (named Kernel, Executive, Supervisor and User, in order of decreasing privilege). Each mode has its own stack, and each memory page can have memory protections specified per-mode.

- A virtual address space which is partitioned between process-private space sections, and system space sections which are common to all processes.

- 32 interrupt priority levels which are used for synchronization.

- Hardware support for delivering asynchronous system traps to processes.

These VAX architecture mechanisms are implemented on Alpha, Itanium and x86-64 by either mapping to corresponding hardware mechanisms on those architectures, or through emulation (via PALcode on Alpha, or in software on Itanium and x86-64).[71]
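The synchronization role of interrupt priority levels can be illustrated with a toy model: a request is delivered only when its level is above the processor's current IPL, so raising the IPL holds lower-priority requests pending until it is lowered again. This is a minimal Python sketch, not OpenVMS code; the class and method names are hypothetical, and the model is deliberately simplified (handlers are treated as completing instantly, and the IPL is not raised while one runs).

```python
class InterruptController:
    """Toy model of VAX-style interrupt priority levels (IPL 0-31).

    A request is delivered only if its level is above the current IPL;
    otherwise it stays pending until the IPL is lowered.
    """

    def __init__(self) -> None:
        self.ipl = 0
        self.pending: set[int] = set()
        self.delivered: list[int] = []

    def request(self, level: int) -> None:
        """Post an interrupt request at `level`."""
        if not 0 <= level <= 31:
            raise ValueError("IPL must be in the range 0..31")
        self.pending.add(level)
        self._dispatch()

    def set_ipl(self, level: int) -> int:
        """Set the current IPL and return the previous one, so a caller can
        raise the IPL around a critical section and restore it afterwards."""
        if not 0 <= level <= 31:
            raise ValueError("IPL must be in the range 0..31")
        old, self.ipl = self.ipl, level
        self._dispatch()
        return old

    def _dispatch(self) -> None:
        # Deliver pending requests, highest level first, while they exceed the IPL.
        while self.pending and max(self.pending) > self.ipl:
            level = max(self.pending)
            self.pending.remove(level)
            self.delivered.append(level)
```

For example, after `old = ic.set_ipl(8)`, a request at level 4 stays pending while a request at level 20 is delivered at once; calling `ic.set_ipl(old)` then releases the level-4 request, leaving `ic.delivered` as `[20, 4]`.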

Executive and Kernel

The OpenVMS Executive comprises the privileged code and data structures which reside in the system space. The Executive is further subdivided between the Kernel, which consists of the code which runs at the kernel access mode, and the less-privileged code outside of the Kernel which runs at the executive access mode.[92]

The components of the Executive which run at executive access mode include the Record Management Services, and certain system services such as image activation. The main distinction between the kernel and executive access modes is that most of the operating system's core data structures can be read from executive mode, but require kernel mode to be written to.[93] Code running at executive mode can switch to kernel mode at will, meaning that the barrier between the kernel and executive modes is intended as a safeguard against accidental corruption as opposed to a security mechanism.[94]

The Kernel comprises the operating system's core data structures (e.g. page tables, the I/O database and scheduling data), and the routines which operate on these structures. The Kernel is typically described as having three major subsystems: I/O; process and time management; and memory management.[92][93] In addition, other functionality such as logical name management, synchronization and system service dispatch is implemented inside the Kernel.

OpenVMS allows user-mode code with suitable privileges to switch to executive or kernel mode using the $CMEXEC and $CMKRNL system services, respectively.[95] This allows code outside of system space to have direct access to the Executive's routines and system services. In addition to allowing third-party extensions to the operating system, Privileged Images are used by core operating system utilities to manipulate operating system data structures through undocumented interfaces.[96]
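The interplay of access modes, per-mode page protection and mode switching described above can be sketched as a small Python model. It assumes a simplified protection scheme in which each page records the least-privileged mode allowed to read it and the least-privileged mode allowed to write it. CMKRNL and CMEXEC are the names of the VMS privileges that go with the `$CMKRNL` and `$CMEXEC` services, but `change_mode` and the other names here are hypothetical stand-ins, not the real system service interfaces.

```python
from enum import IntEnum


class AccessMode(IntEnum):
    """VMS processor access modes; a lower value means more privilege."""
    KERNEL = 0
    EXECUTIVE = 1
    SUPERVISOR = 2
    USER = 3


class PageProtection:
    """Simplified per-page protection: the least-privileged mode that may
    read and the least-privileged mode that may write. Any more privileged
    mode is allowed as well."""

    def __init__(self, read: AccessMode, write: AccessMode) -> None:
        if write > read:
            raise ValueError("a mode that can write must also be able to read")
        self.read = read
        self.write = write

    def can_read(self, mode: AccessMode) -> bool:
        return mode <= self.read

    def can_write(self, mode: AccessMode) -> bool:
        return mode <= self.write


# Core Executive data: readable from executive mode, writable only in kernel mode.
EXEC_DATA = PageProtection(read=AccessMode.EXECUTIVE, write=AccessMode.KERNEL)

# Privilege a less-privileged caller must hold to enter each target mode.
REQUIRED_PRIVILEGE = {
    AccessMode.KERNEL: "CMKRNL",
    AccessMode.EXECUTIVE: "CMEXEC",
}


def change_mode(current: AccessMode, privileges: set[str],
                target: AccessMode) -> AccessMode:
    """Hypothetical stand-in for $CMKRNL/$CMEXEC: return the new mode or raise."""
    needed = REQUIRED_PRIVILEGE.get(target)
    if needed is None:
        raise ValueError("only kernel or executive mode can be requested")
    # Simplification: code already in executive or kernel mode switches freely.
    if current <= AccessMode.EXECUTIVE:
        return target
    if needed not in privileges:
        raise PermissionError(f"{needed} privilege required")
    return target
```

Because the executive-to-kernel switch needs no check in this model, the read/write split on `EXEC_DATA` guards only against accidental writes from executive mode, not against deliberate ones.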

File system

The typical user and application interface into the file system is the Record Management Services (RMS), although applications can interface directly with the underlying file system through the QIO system services.[97] The file systems supported by VMS are referred to as the Files-11 On-Disk Structures (ODS), the most significant of which are ODS-2 and ODS-5.[98] VMS is also capable of accessing files on ISO 9660 CD-ROMs and magnetic tape with ANSI tape labels.[99]

Files-11 is limited to 2 TiB volumes.[98] DEC attempted to replace it with a log-structured file system named Spiralog, first released in 1995.[100] However, Spiralog was discontinued due to a variety of problems, including issues with handling full volumes.[100] Instead, there has been discussion of porting the open-source GFS2 file system to OpenVMS.[101]
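The 2 TiB ceiling is consistent with block-addressed storage that uses 32-bit logical block numbers and 512-byte disk blocks. Both parameters are assumptions of this short Python sketch rather than statements from the sources cited above.

```python
TIB = 2 ** 40  # bytes in one tebibyte


def max_volume_bytes(lbn_bits: int = 32, block_size: int = 512) -> int:
    """Largest volume addressable with `lbn_bits`-wide block numbers,
    assuming every block number refers to one `block_size`-byte block."""
    return (2 ** lbn_bits) * block_size


print(max_volume_bytes() // TIB)  # 2, since 2**32 * 512 == 2**41
```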

Command Language Interpreter

An OpenVMS Command Language Interpreter (CLI) implements a command-line interface for OpenVMS, responsible for executing individual commands and command procedures (equivalent to shell scripts or batch files).[102] The standard CLI for OpenVMS is the DIGITAL Command Language, although other options are available.

Unlike Unix shells, which typically run in their own isolated process and behave like any other user-mode program, OpenVMS CLIs are an optional component of a process, which exist alongside any executable image which that process may run.[103] Whereas a Unix shell will typically run executables by creating a separate process using fork-exec, an OpenVMS CLI will typically load the executable image into the same process, transfer control to the image, and ensure that control is transferred back to the CLI once the image has exited and that the process is returned to its original state.[92]
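The in-process model can be illustrated with a toy Python class in which an "image" is simply a callable that runs inside the CLI's own process. Control passes to the image and always returns to the CLI, and the process state is restored afterwards, even if the image fails. Everything here is hypothetical and heavily simplified; in particular, the toy restores every field of its state, which is a stronger guarantee than the real image rundown provides.

```python
from typing import Callable, Dict

# An "image" here is any callable that receives the process state and
# returns a completion status (a hypothetical stand-in for an executable).
Image = Callable[[Dict[str, object]], int]


class ToyCLI:
    """Toy OpenVMS-style CLI: images run inside the CLI's own process
    instead of in a separately created child process."""

    def __init__(self) -> None:
        # Per-process state that an image may modify while it runs.
        self.process_state: Dict[str, object] = {}
        self.last_status = 0

    def run(self, image: Image) -> int:
        saved = dict(self.process_state)  # state before image activation
        try:
            # "Image activation": control passes to the image in this process.
            self.last_status = image(self.process_state)
        finally:
            # "Image rundown": restore the earlier state and return control
            # to the CLI, even if the image raised an exception.
            self.process_state.clear()
            self.process_state.update(saved)
        return self.last_status
```

A fork-exec shell gets a similar clean-up effect for free, because whatever the child process changes disappears when it exits. The in-process design has to undo the changes explicitly, but it avoids creating a new process for every command.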

Because the CLI is loaded into the same address space as user code, and the CLI is responsible for invoking image activation and image rundown, the CLI is mapped into the process address space at supervisor access mode, a higher level of privilege than most user code. This is in order to prevent accidental or malicious manipulation of the CLI's code and data structures by user-mode code.[92][103]

Features

Clustering

OpenVMS supports clustering (first called VAXcluster and later VMScluster), where multiple computers run their own instance of the operating system. Clustered computers (nodes) may be fully independent from each other, or they may share devices like disk drives and printers. Communication across nodes provides a single system image abstraction.[104] Nodes may be connected to each other via a proprietary hardware connection called Cluster Interconnect or via a standard Ethernet LAN.

OpenVMS supports up to 96 nodes in a single cluster. It also allows mixed-architecture clusters.[23] OpenVMS clusters allow applications to function during planned or unplanned outages.[105] Planned outages include hardware and software upgrades.[24]

Networking

The DECnet protocol suite is tightly integrated into VMS, allowing remote logins, as well as transparent access to files, printers and other resources on VMS systems over a network.[106] VAX/VMS V1.0 featured support for DECnet Phase II,[107] and modern versions of VMS support both the traditional Phase IV DECnet protocol, as well as the OSI-compatible Phase V (also known as DECnet-Plus).[108] Support for TCP/IP is provided by the optional TCP/IP Services for OpenVMS layered product (originally known as the VMS/ULTRIX Connection, then as the ULTRIX Communications Extensions or UCX).[109][110] TCP/IP Services is based on a port of the BSD network stack to OpenVMS,[111] along with support for common protocols such as SSH, DHCP, FTP and SMTP.

DEC sold a software package named PATHWORKS (originally known as the Personal Computer Systems Architecture or PCSA) which allowed personal computers running MS-DOS, Microsoft Windows or OS/2, or the Apple Macintosh to serve as a terminal for VMS systems, or to use VMS systems as a file or print server.[112] PATHWORKS was later renamed to Advanced Server for OpenVMS, and was eventually replaced with a VMS port of Samba at the time of the Itanium port.[113]

DEC provided the Local Area Transport (LAT) protocol which allowed remote terminals and printers to be attached to a VMS system through a terminal server such as one of the DECserver family.[114]

Programming

DEC (and its successor companies) provided a wide variety of programming languages for VMS. Officially supported languages on VMS, either current or historical, include:[115][116][117]

Among OpenVMS's notable features is the Common Language Environment, a strictly defined standard that specifies calling conventions for functions and routines, including use of stacks, registers, etc., independent of programming language.[118] Because of this, it is possible to call a routine written in one language (for example, Fortran) from another (for example, COBOL), without needing to know the implementation details of the target language. OpenVMS itself is implemented in a variety of different languages and the common language environment and calling standard supports freely mixing these languages.[119] DEC created a tool named the Structure Definition Language (SDL), which allowed data type definitions to be generated for different languages from a common definition.[120]
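The OpenVMS calling standard itself cannot be shown outside OpenVMS, but the underlying idea can be illustrated on a modern POSIX system. There, the platform's C calling convention plays a similar role, which lets Python call a C library routine through `ctypes` once the routine's signature has been declared, without knowing how the routine is implemented. This is an analogy, not OpenVMS code; it assumes a POSIX system on which the C runtime can be loaded as shown.

```python
import ctypes
import ctypes.util

# Load the C runtime library. If find_library returns None, CDLL(None)
# falls back to the symbols already loaded into this process (POSIX only).
libc = ctypes.CDLL(ctypes.util.find_library("c") or None)

# Declare the routine's interface: size_t strlen(const char *s)
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t


def c_strlen(text: str) -> int:
    """Call C's strlen from Python via the platform's calling convention."""
    return libc.strlen(text.encode("utf-8"))


print(c_strlen("OpenVMS"))  # 7
```

In OpenVMS the agreement is wider: the calling standard fixes how arguments, stacks and registers are used for every DEC language, so any pair of languages can interoperate directly, not only a scripting language and C.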

The set of languages available directly with the operating system is restricted to C, Fortran, Pascal, BASIC, C++, BLISS and COBOL. Freely available open source languages include Lua, PHP, Python, Scala and Java.[121]

Development tools

DEC provided a collection of software development tools in a layered product named DECset (originally named VAXset).[115] This consisted of the following tools:[122]

- Language-Sensitive Editor (LSE)

- Code Management System (CMS), a version control system

- Module Management System (MMS), a build tool

- Source Code Analyzer (SCA), a static analyzer

- Performance and Coverage Analyzer (PCA), a profiler

- Digital Test Manager (DTM), a test manager

In addition, a number of text editors are included in the operating system, including EDT, EVE and TECO.[123]

The OpenVMS Debugger supports all DEC compilers and many third-party languages. It allows breakpoints, watchpoints and interactive runtime program debugging using either a command line or graphical user interface.[124] A pair of lower-level debuggers, named DELTA and XDELTA, can be used to debug privileged code in addition to normal application code.[125]

In 2019, VSI released an officially supported Integrated Development Environment for VMS based on Visual Studio Code.[77] This allows VMS applications to be developed and debugged remotely from a Microsoft Windows, macOS or Linux workstation.[126]

Database management

DEC created a number of optional database products for VMS, some of which were marketed as the VAX Information Architecture family.[127] These products included:

- Rdb – A relational database system which originally used the proprietary Relational Data Operator (RDO) query interface, but later gained SQL support.[128]

- DBMS – A database management system which uses the CODASYL network model and Data Manipulation Language (DML).

- Digital Standard MUMPS (DSM) – an integrated programming language and key-value database.[115]

- Common Data Dictionary (CDD) – a central database schema repository, which allowed schemas to be shared between different applications, and data definitions to be generated for different programming languages.

- DATATRIEVE – a query and reporting tool which could access data from RMS files as well as Rdb and DBMS databases.

- Application Control Management System (ACMS) – A transaction processing monitor, which allows applications to be created using a high-level Task Description Language (TDL). Individual steps of a transaction can be implemented using DCL commands, or Common Language Environment procedures. User interfaces can be implemented using TDMS, DECforms or Digital's ALL-IN-1 office automation product.[129]

- RALLY, DECadmire – Fourth-generation programming languages (4GLs) for generating database-backed applications.[130] DECadmire featured integration with ACMS, and later provided support for generating Visual Basic client-server applications for Windows PCs.[131]

In 1994, DEC sold Rdb, DBMS and CDD to Oracle, where they remain under active development.[132] In 1995, DEC sold DSM to InterSystems, who renamed it Open M, and eventually replaced it with their Caché product.[133]

Examples of third-party database management systems for OpenVMS include MariaDB,[134] Mimer SQL[135] (Itanium and x86-64[136]), and System 1032.[137]

User interfaces

VMS was originally designed to be used and managed interactively using DEC's text-based video terminals such as the VT100, or hardcopy terminals such as the DECwriter series. Since the introduction of the VAXstation line in 1984, VMS has optionally supported graphical user interfaces for use with workstations or X terminals such as the VT1000 series.

Text-based user interfaces

The DIGITAL Command Language (DCL) has served as the primary command language interpreter (CLI) of OpenVMS since the first release.[138][30][9] Other official CLIs available for VMS include the RSX-11 Monitor Console Routine (MCR) (VAX only), and various Unix shells.[115] DEC provided tools for creating text-based user interface applications – the Form Management System (FMS) and Terminal Data Management System (TDMS), later succeeded by DECforms.[139][140][141] A lower level interface named Screen Management Services (SMG$), comparable to Unix curses, also exists.[142]

Graphical user interfaces

Over the years, VMS has gone through a number of different GUI toolkits and interfaces:

- The original graphical user interface for VMS was a proprietary windowing system known as the VMS Workstation Software (VWS), which was first released for the VAXstation I in 1984.[143] It exposed an API called the User Interface Services (UIS).[144] It ran on a limited selection of VAX hardware.[145]

- In 1989, DEC replaced VWS with a new X11-based windowing system named DECwindows.[146] It was first included in VAX/VMS V5.1.[147] Early versions of DECwindows featured an interface built on top of a proprietary toolkit named the X User Interface (XUI). A layered product named UISX was provided to allow VWS/UIS applications to run on top of DECwindows.[148] Parts of XUI were subsequently used by the Open Software Foundation as the foundation of the Motif toolkit.[149]

- In 1991, DEC replaced XUI with the Motif toolkit, creating DECwindows Motif.[150][151] As a result, the Motif Window Manager became the default DECwindows interface in OpenVMS V6.0,[147] although the XUI window manager remained as an option.

- In 1996, as part of OpenVMS V7.1,[147] DEC released the New Desktop interface for DECwindows Motif, based on the Common Desktop Environment (CDE).[152] On Alpha and Itanium systems, it is still possible to select the older MWM-based UI (referred to as the "DECwindows Desktop") at login time. The New Desktop was never ported to the VAX releases of OpenVMS.

Versions of VMS running on DEC Alpha workstations in the 1990s supported OpenGL[153] and Accelerated Graphics Port (AGP) graphics adapters. VMS also provides support for older graphics standards such as GKS and PHIGS.[154][155] Modern versions of DECwindows are based on X.Org Server.[9]

Security

OpenVMS provides various security features and mechanisms, including security identifiers, resource identifiers, subsystem identifiers, ACLs, intrusion detection and detailed security auditing and alarms.[156] Specific versions were evaluated at Trusted Computer System Evaluation Criteria (TCSEC) Class C2 and, in the case of the SEVMS security-enhanced release, at Class B1.[157] OpenVMS also holds an ITSEC E3 rating (see NCSC and Common Criteria).[158] Passwords are hashed using the Purdy Polynomial.

Vulnerabilities

- Early versions of VMS included a number of privileged user accounts (including SYSTEM, FIELD, SYSTEST and DECNET) with default passwords which were often left unchanged by system managers.[159][160] A number of computer worms for VMS, including the WANK worm and the Father Christmas worm, exploited these default passwords to gain access to nodes on DECnet networks.[161] This issue was also described by Clifford Stoll in The Cuckoo's Egg as a means by which Markus Hess gained unauthorized access to VAX/VMS systems.[162] In V5.0, the default passwords were removed, and it became mandatory to provide passwords for these accounts during system setup.[39]

- A 33-year-old vulnerability in VMS on VAX and Alpha was discovered in 2017 and assigned the CVE ID CVE-2017-17482. On the affected platforms, this vulnerability allowed an attacker with access to the DCL command line to carry out a privilege escalation attack. The vulnerability relies on exploiting a buffer overflow bug in the DCL command processing code, the ability for a user to interrupt a running image (program executable) with CTRL/Y and return to the DCL prompt, and the fact that DCL retains the privileges of the interrupted image.[163] The buffer overflow bug allowed shellcode to be executed with the privileges of an interrupted image. This could be used in conjunction with an image installed with higher privileges than the attacker's account to bypass system security.[164]

POSIX compatibility

Various official Unix and POSIX compatibility layers were created for VMS. The first of these was DEC/Shell, which was a layered product consisting of ports of the Bourne shell from Version 7 Unix and several other Unix utilities to VAX/VMS.[115] In 1992, DEC released the POSIX for OpenVMS layered product, which included a shell based on the KornShell.[165] POSIX for OpenVMS was later replaced by the open-source GNV (GNU's not VMS) project, which was first included in OpenVMS media in 2002.[166] Amongst other GNU tools, GNV includes a port of the Bash shell to VMS.[167] Examples of third-party Unix compatibility layers for VMS include Eunice.[168]

Hobbyist programs

In 1997, OpenVMS and a number of layered products were made available free of charge for hobbyist, non-commercial use as part of the OpenVMS Hobbyist Program.[169] Since then, several companies producing OpenVMS software, such as Process Software, have made their products available under the same terms.[170] Prior to the x86-64 port, the age and cost of hardware capable of running OpenVMS made emulators such as SIMH a common choice for hobbyist installations.[171]

In March 2020, HPE announced the end of the OpenVMS Hobbyist Program.[172] This was followed by VSI's announcement of the Community License Program (CLP) in April 2020, which was intended as a replacement for the HPE Hobbyist Program.[173] The CLP was launched in July 2020, and provides licenses for VSI OpenVMS releases on Alpha, Integrity and x86-64 systems.[174] OpenVMS for VAX is not covered by the CLP, since there are no VSI releases of OpenVMS VAX, and the old versions are still owned by HPE.[175]

Release history

| Version | Vendor | Release date[176][8][177] | End of support[178][179][180] | Platform | Significant changes, new hardware support[181][147] |
|---|---|---|---|---|---|
| X0.5[n 1] | DEC | April 1978[182] | ? | VAX | First version shipped to customers[29] |
| V1.0 | DEC | August 1978 | ? | VAX | First production release |
| V1.01 | DEC | ?[n 2] | ? | VAX | Bug fixes[183] |
| V1.5 | DEC | February 1979[n 3] | ? | VAX | Support for native COBOL, BLISS compilers[183] |
| V1.6 | DEC | August 1979 | ? | VAX | RMS-11 updates[184] |
| V2.0 | DEC | April 1980 | ? | VAX | VAX-11/750, new utilities including EDT |
| V2.1 | DEC | ?[n 4] | ? | VAX | ? |
| V2.2 | DEC | April 1981 | ? | VAX | Process limit increased to 8,192[186] |
| V2.3 | DEC | May 1981[187] | ? | VAX | Security enhancements[188] |
| V2.4 | DEC | ? | ? | VAX | ? |
| V2.5 | DEC | ? | ? | VAX | BACKUP utility[189] |
| V3.0 | DEC | April 1982 | ? | VAX | VAX-11/730, VAX-11/725, VAX-11/782, ASMP |
| V3.1 | DEC | August 1982 | ? | VAX | PL/I runtime bundled with base OS[190] |
| V3.2 | DEC | December 1982 | ? | VAX | Support for RA60, RA80, RA81 disks[191] |
| V3.3 | DEC | April 1983 | ? | VAX | HSC50 disk controller, BACKUP changes[192] |
| V3.4 | DEC | June 1983 | ? | VAX | Ethernet support for DECnet,[193] VAX-11/785 |
| V3.5 | DEC | November 1983 | ? | VAX | Support for new I/O devices[194] |
| V3.6 | DEC | April 1984 | ? | VAX | Bug fixes[195] |
| V3.7 | DEC | August 1984 | ? | VAX | Support for new I/O devices[196] |
| V4.0 | DEC | September 1984 | ? | VAX | VAX 8600, MicroVMS, VAXclusters[197] |
| V4.1 | DEC | January 1985 | ? | VAX | MicroVAX/VAXstation I, II[198] |
| V4.2 | DEC | October 1985 | ? | VAX | Text Processing Utility |
| V4.3 | DEC | December 1985 | ? | VAX | DELUA Ethernet adapter support |
| V4.3A | DEC | January 1986 | ? | VAX | VAX 8200 |
| V4.4 | DEC | July 1986 | ? | VAX | VAX 8800/8700/85xx, Volume Shadowing |
| V4.5 | DEC | November 1986 | ? | VAX | Support for more memory in MicroVAX II |
| V4.5A | DEC | December 1986 | ? | VAX | Ethernet VAXclusters |
| V4.5B | DEC | March 1987 | ? | VAX | VAXstation/MicroVAX 2000 |
| V4.5C | DEC | May 1987 | ? | VAX | MicroVAX 2000 cluster support |
| V4.6 | DEC | August 1987 | ? | VAX | VAX 8250/8350/8530, RMS Journalling |
| V4.7 | DEC | January 1988 | ? | VAX | First release installable from CD-ROM |
| V4.7A | DEC | March 1988 | ? | VAX | VAXstation 3200/3500, MicroVAX 3500/3600 |
| V5.0 | DEC | April 1988 | ? | VAX | VAX 6000, SMP, LMF, Modular Executive |
| V5.0-1 | DEC | August 1988 | ? | VAX | Bug fixes |
| V5.0-2 | DEC | October 1988 | ? | VAX | Bug fixes |
| V5.0-2A | DEC | October 1988 | ? | VAX | MicroVAX 3300/3400 |
| V5.1 | DEC | February 1989 | ? | VAX | DECwindows |
| V5.1-B | DEC | February 1989 | ? | VAX | VAXstation 3100 30/40, Desktop-VMS |
| V5.1-1 | DEC | June 1989 | ? | VAX | VAXstation 3520/3540, MicroVAX 3800/3900 |
| V5.2 | DEC | September 1989 | ? | VAX | Cluster-wide process visibility/management |
| V5.2-1 | DEC | October 1989 | ? | VAX | VAXstation 3100 38/48 |
| V5.3 | DEC | January 1990 | ? | VAX | Support for third-party SCSI devices |
| V5.3-1 | DEC | April 1990 | ? | VAX | Support for VAXstation SPX graphics |
| V5.3-2 | DEC | May 1990 | ? | VAX | Support for new I/O devices |
| V5.4 | DEC | October 1990 | ? | VAX | VAX 65xx, VAX Vector Architecture |
| V5.4-0A | DEC | October 1990 | ? | VAX | VAX 9000, bug fixes for VAX 6000 systems |
| V5.4-1 | DEC | November 1990 | ? | VAX | New models of VAX 9000, VAXstation, VAXft |
| V5.4-1A | DEC | January 1991 | ? | VAX | VAX 6000-400 |
| V5.4-2 | DEC | March 1991 | ? | VAX | VAX 4000 Model 200, new I/O devices |
| V5.4-3 | DEC | October 1991 | ? | VAX | FDDI adapter support |
| V5.5 | DEC | November 1991 | ? | VAX | Cluster-wide batch queue, new VAX models |
| A5.5 | DEC | November 1991 | ? | VAX | Same as V5.5 but without new batch queue |
| V5.5-1 | DEC | July 1992 | ? | VAX | Bug fixes for batch/print queue |
| V5.5-2HW | DEC | September 1992 | ? | VAX | VAX 7000/10000, and other new VAX hardware |
| V5.5-2 | DEC | November 1992 | September 1995 | VAX | Consolidation of previous hardware releases |
| V5.5-2H4 | DEC | August 1993 | September 1995 | VAX | New VAX 4000 models, additional I/O devices |
| V5.5-2HF | DEC | ? | September 1995 | VAX | VAXft 810 |
| V1.0[n 5] | DEC | November 1992 | September 1995 | Alpha | First release for Alpha architecture |
| V1.5 | DEC | May 1993 | September 1995 | Alpha | Cluster and SMP support for Alpha |
| V1.5-1H1 | DEC | October 1993 | September 1995 | Alpha | New DEC 2000, DEC 3000 models |
| V6.0 | DEC | June 1993 | September 1995 | VAX | TCSEC C2 compliance, ISO 9660, Motif |
| V6.1 | DEC | April 1994 | September 1995 | VAX, Alpha | Merger of VAX and Alpha releases, PCSI |
| V6.1-1H1 | DEC | September 1994 | September 1995 | Alpha | New AlphaStation, AlphaServer models |
| V6.1-1H2 | DEC | November 1994 | September 1995 | Alpha | New AlphaStation, AlphaServer models |
| V6.2 | DEC | June 1995 | March 1998 | VAX, Alpha | Command Recall, DCL$PATH, SCSI clusters |
| V6.2-1H1 | DEC | December 1995 | March 1998 | Alpha | New AlphaStation, AlphaServer models |
| V6.2-1H2 | DEC | March 1996 | March 1998 | Alpha | New AlphaStation, AlphaServer models |
| V6.2-1H3 | DEC | May 1996 | March 1998 | Alpha | New AlphaStation, AlphaServer models |
| V7.0 | DEC | January 1996 | March 1998 | VAX, Alpha | 64-bit addressing, Fast I/O, Kernel Threads |
| V7.1 | DEC | January 1997 | July 2000 | VAX, Alpha | Very Large Memory support, DCL PIPE, CDE |
| V7.1-1H1 | DEC | November 1997 | July 2000 | Alpha | AlphaServer 800 5/500, 1200 |
| V7.1-1H2 | DEC | April 1998 | July 2000 | Alpha | Support for booting from third-party devices |
| V7.1-2 | Compaq | December 1998 | July 2000 | Alpha | Additional I/O device support |
| V7.2 | Compaq | February 1999 | June 2002 | VAX, Alpha | OpenVMS Galaxy, ODS-5, DCOM |
| V7.2-1 | Compaq | July 1999 | June 2002 | Alpha | AlphaServer GS140, GS60, Tsunami |
| V7.2-1H1 | Compaq | June 2000 | June 2002 | Alpha | AlphaServer GS160, GS320 |
| V7.2-2 | Compaq | September 2001 | December 2002 | Alpha | Minicopy support for Volume Shadowing |
| V7.2-6C1 | Compaq | August 2001 | ? | Alpha | DII COE conformance[199] |
| V7.2-6C2 | Compaq | July 2002 | ? | Alpha | DII COE conformance |
| V7.3 | Compaq | June 2001 | December 2012 | VAX | Final release for VAX architecture |
| V7.3 | Compaq | June 2001 | June 2004 | Alpha | ATM and GBE clusters, Extended File Cache |
| V7.3-1 | HP | August 2002 | December 2004 | Alpha | Security and performance improvements |
| V7.3-2 | HP | December 2003 | December 2006 | Alpha | AlphaServer GS1280, DS15 |
| V8.0 | HP | June 2003 | December 2003 | IA64 | Evaluation release for Integrity servers |
| V8.1 | HP | December 2003 | February 2005 | IA64 | Second evaluation release for Integrity servers |
| V8.2 | HP | February 2005 | June 2010 | Alpha, IA64 | Production release for Integrity servers |
| V8.2-1 | HP | September 2005 | June 2010 | IA64 | Support for HP Superdome, rx7620, rx8620 |
| V8.3 | HP | August 2006 | December 2015 | Alpha, IA64 | Support for additional Integrity server models |
| V8.3-1H1 | HP | November 2007 | December 2015 | IA64 | Support for HP BL860c, dual-core Itanium |
| V8.4 | HP | June 2010 | December 2020 | Alpha, IA64 | Support for HPVM, clusters over TCP/IP[200] |
| V8.4-1H1 | VSI | May 2015 | December 2022 | IA64 | Support for Poulson processors[201] |
| V8.4-2 | VSI | March 2016 | December 2022 | IA64 | Support for HPE BL890c systems, UEFI 2.3 |
| V8.4-2L1 | VSI | September 2016 | December 2024 | IA64 | OpenSSL updated to 1.0.2[202] |
| V8.4-2L1 | VSI | January 2017[203] | December 2035 | Alpha | OpenSSL updated to 1.0.2 |
| V8.4-2L2 | VSI | July 2017 | December 2035 | Alpha | Final release for Alpha architecture[204] |
| V8.4-2L3 | VSI | April 2021 | December 2035 | IA64 | Final release for Integrity servers[204] |
| V9.0 | VSI | May 2020 | June 2021 | x86-64 | x86-64 Early Adopter's Kit[205] |
| V9.1 | VSI | June 2021 | September 2021 | x86-64 | x86-64 Field Test[84] |
| V9.1-A | VSI | September 2021 | April 2022 | x86-64 | DECnet-Plus for x86-64[85] |
| V9.2 | VSI | July 2022 | June 2023 | x86-64 | x86-64 Limited Production Release[206] |
| V9.2-1 | VSI | June 2023 | June 2025 | x86-64 | AMD CPUs, OpenSSL 3.0, native compilers[207] |
| V9.2-2 | VSI | January 2024 | December 2027 | x86-64 | Bug fixes[208] |
| V9.2-3 | VSI | November 2024 | December 2028 | x86-64 | VMware vMotion, VMDirectPath |
| V9.2-4 | VSI | June 2026 | TBA | x86-64 | iSCSI support |


- ^ X0.5 was also known as "Base Level 5".[182]

- ^ While an exact release date is unknown, the V1.01 change log dates in the release notes for V1.5 suggest it was released some time after November 1978.[183]

- ^ For some of the early VAX/VMS releases where an official release date is not known, the date of the Release Notes has been used as an approximation.

- ^ The existence of releases V2.0 through V2.5 is documented in the V3.0 release notes.[185]

- ^ While the versioning scheme reset to V1.0 for the first AXP (Alpha) releases, these releases were contemporaneous with the V5.x releases and had a similar feature set.

References

[edit]- ^ Patrick Thibodeau (July 31, 2014). "HP gives OpenVMS new life". Computerworld. Retrieved October 21, 2021.

- ^ Camiel Vanderhoeven (May 30, 2021). "How much of VMS is still in MACRO-32?". Newsgroup: comp.os.vms. Retrieved October 21, 2021.

- ^ "2.7 In what language is OpenVMS written?". The OpenVMS Frequently Asked Questions (FAQ). Hewlett Packard Enterprise. Archived from the original on August 10, 2018.

- ^ a b "Access to OpenVMS Source Code?". HP OpenVMS Systems ask the wizard. September 2, 1999. Archived from the original on October 28, 2017.

- ^ "Webinar 16: x86 Update". VSI. October 15, 2021. Archived from the original on December 11, 2021. Retrieved November 2, 2021.

- ^ "Japanese OpenVMS OS (JVMS)". VSI. Archived from the original on February 22, 2024. Retrieved February 5, 2021.

- ^ Michael M. T. Yau (1993). "Supporting the Chinese, Japanese, and Korean Languages in the OpenVMS Operating System" (PDF). Digital Technical Journal. 5 (3): 63–79. Retrieved October 21, 2021.

- ^ a b c d e f "OpenVMS at 20 Nothing stops it" (PDF). Digital Equipment Corporation. October 1997. Retrieved February 12, 2021.

- ^ a b c d "Software Product Description and QuickSpecs - VSI OpenVMS Version 8.4-2L1 for Integrity servers" (PDF). VMS Software Inc. July 2019. Retrieved January 2, 2021.

- ^ "VSI Business & New Products Update – April 9, 2019" (PDF). VSI. April 2019. Retrieved May 4, 2021.

- ^ Charles Babcock (November 1, 2007). "VMS Operating System Is 30 Years Old; Customers Believe It Can Last Forever". InformationWeek. Retrieved February 19, 2021.

- ^ Drew Robb (November 1, 2004). "OpenVMS survives and thrives". computerworld.com. Retrieved December 31, 2020.

- ^ Tao Ai Lei (May 30, 1998). "Digital tries to salvage OpenVMS". computerworld.co.nz. Archived from the original on September 25, 2021. Retrieved December 31, 2020.

- ^ Jesse Lipcon (October 1997). "OpenVMS: 20 Years of Renewal". Digital Equipment Corporation. Archived from the original on February 17, 2006. Retrieved February 12, 2021.

- ^ "VAX-11/780 Hardware Handbook" (PDF). Digital Equipment Corporation. 1979. Retrieved October 17, 2022.

- ^ Patrick Thibodeau (June 11, 2013). "OpenVMS, R.I.P. 1977-2020?". Computerworld. Retrieved April 27, 2024.

- ^ Tom Merritt (2012). Chronology of Tech History. Lulu.com. p. 104. ISBN 978-1300253075.

- ^ "VAX 11/780 - OLD-COMPUTERS.COM : HISTORY / detailed info". Archived from the original on September 26, 2023. Retrieved April 25, 2020.

- ^ "Supported Platforms". VSI.

- ^ a b "Rollout of V9.0 and Beyond" (PDF). VSI. May 19, 2020. Retrieved May 4, 2021.

- ^ "HP hands off OpenVMS development to VSI". Tech Times. August 1, 2014. Retrieved April 27, 2024.

- ^ "VMS Software, Inc. Named Exclusive Developer of Future Versions of OpenVMS Operating System" (Press release). Retrieved October 27, 2017.

- ^ a b "VSI Products - Clusters". VSI. Archived from the original on May 16, 2021. Retrieved May 4, 2021.

- ^ a b "Cluster Uptime". November 28, 2003. Archived from the original on February 29, 2012. Retrieved December 20, 2020.

- ^ "Commerzbank Survives 9/11 with OpenVMS Clusters" (PDF). July 2009. Retrieved April 27, 2024.

- ^ "February 2018 Business & Technical Update" (PDF). VSI. February 2018. Retrieved May 4, 2021.

- ^ Cutler, Dave (February 25, 2016). "Dave Cutler Oral History". youtube.com (Interview). Interviewed by Grant Saviers. Computer History Museum. Archived from the original on December 11, 2021. Retrieved February 26, 2021.

- ^ Bell, Gordon; Strecker, W.D. What Have We Learned from the PDP-11 - What We Have Learned from VAX and Alpha (PDF) (Report). Retrieved June 26, 2025.

- ^ a b Stephen Hoffman (September 2006). "What is OpenVMS? What is its history?". hoffmanlabs.com. Archived from the original on May 18, 2021. Retrieved January 3, 2021.

- ^ a b "Software Product Description – VAX/VMS Operating System, Version 1.0" (PDF). Digital Equipment Corporation. September 1978. Retrieved October 21, 2021.

- ^ "a simple question: what the h*ll is MCR?". Newsgroup: comp.os.vms. September 14, 2004. Retrieved December 31, 2020.

- ^ "Software Product Description VAX-11 RSX, Version 1.0" (PDF). Digital Equipment Corporation. October 1984. Retrieved September 20, 2021.

- ^ "Hello from....well what used to be SpitBrook". openvmshobbyist.com. February 27, 2007. Retrieved January 24, 2021.

- ^ "Computer system VAX/VMS". altiq.se. Archived from the original on February 1, 2021. Retrieved January 24, 2021.

- ^ "RDBMS Workshop: Ingres and Sybase" (PDF) (Interview). Interviewed by Doug Jerger. Computer History Museum. June 13, 2007. Retrieved May 30, 2025.

- ^ Michael D Duffy (2002). Getting Started with OpenVMS: A Guide for New Users. Elsevier. ISBN 978-0080507354.

- ^ "Micro VMS operating system". Computerworld. June 18, 1984. p. 7.

The Micro VMS operating system announced last week by Digital Equipment Corp. for its Microvax I family of microcomputers is a prepackaged version of ...

- ^ Kathleen D. Morse. "The VMS/MicroVMS merge". DEC Professional Magazine. pp. 74–84.

- ^ a b "VMS Version 5.0 Release Notes" (PDF). DEC. April 1988. Retrieved July 21, 2021.

- ^ Bob McCormick (January 11, 1989). "DECUServe WORKSTATIONS Conference 8". home.iae.nl. Archived from the original on July 10, 2022. Retrieved December 22, 2020.

- ^ "Office Archaeology". blog.nozell.com. February 24, 2004. Retrieved December 22, 2020.

- ^ "Software Product Description - Desktop-VMS, Version 1.2" (PDF). Digital. January 1991. Archived from the original (PDF) on August 16, 2000. Retrieved February 2, 2022.

- ^ "OpenVMS pages of proGIS Germany". vaxarchive.org. Retrieved December 22, 2020.

- ^ D.O. Andrievskaya, ed. (May 1989). "Computer Complexes, Technical Equipment, Software And Support Of The System Of Small Electronic Computer Machines (SM Computer)" (PDF) (in Russian). Soviet Union Research Institute of Information and Economics. Retrieved October 16, 2021.

- ^ Prokhorov N.L.; Gorskiy V.E. "Basic software for 32-bit SM computer models". Software Systems Journal (in Russian). 1988 (3). Retrieved October 15, 2021.

- ^ Egorov G.A.; Ostapenko G.P.; Stolyar N.G.; Shaposhnikov V.A. "Multifunctional operating system that supports virtual memory for 32-bit computers". Software Systems Journal (in Russian). 1988 (4). Retrieved October 15, 2021.

- ^ "Installing OS MOS-32M" (PDF). pdp-11.ru (in Russian). June 16, 2012. Archived (PDF) from the original on October 27, 2021. Retrieved October 15, 2021.

- ^ "VMS Version 5.0 Release Notes" (PDF). Digital Equipment Corporation. April 1988. Retrieved October 27, 2021.

- ^ "Digital Introduces First Generation of OpenVMS Alpha-Ready Systems". Digital Equipment Corporation. July 15, 1992. Retrieved January 25, 2021.

- ^ "OpenVMS Definition from PC Magazine Encyclopedia".

- ^ Arne Vajhøj (November 29, 1999). "OpenVMS FAQ - What is the difference between VMS and OpenVMS?". vaxmacro.de. Archived from the original on September 24, 2021. Retrieved January 25, 2021.

- ^ "History of the Vernon the VMS shark". vaxination.ca. Retrieved January 24, 2021.

- ^ Dave Cutler (May 30, 1988). "DECwest/SDT Agenda" (PDF). bitsavers.org.

- ^ "EV-4 (1992)". February 24, 2008.

- ^ a b Comerford, R. (July 1992). "How DEC developed Alpha". IEEE Spectrum. 29 (7): 26–31. doi:10.1109/6.144508.

- ^ "Managing Technological Leaps: A study of DEC's Alpha Design Team" (PDF). April 1993.

- ^ Supnik, Robert M. (1993). "Digital's Alpha project". Communications of the ACM. 36 (2): 30–32. doi:10.1145/151220.151223. ISSN 0001-0782. S2CID 32694010.

- ^ a b c Clair Grant (June 2005). "Porting OpenVMS to HP Integrity Servers" (PDF). OpenVMS Technical Journal. 6.

- ^ a b Nancy P. Kronenberg; Thomas R. Benson; Wayne M. Cardoza; Ravindran Jagannathan; Benjamin J. Thomas III (1992). "Porting OpenVMS from VAX to Alpha AXP" (PDF). Digital Technical Journal. 4 (4): 111–120. Retrieved April 27, 2024.

- ^ a b "OpenVMS Compatibility Between VAX and Alpha". Digital Equipment Corporation. May 1995. Retrieved October 22, 2021.

- ^ "Extending OpenVMS for 64-bit Addressable Virtual Memory" (PDF). Digital Technical Journal. 8 (2): 57–71. 1996. S2CID 9618620.

- ^ "The OpenVMS Mixed Pointer Size Environment" (PDF). Digital Technical Journal. 8 (2): 72–82. 1996. S2CID 14874367. Archived from the original (PDF) on February 19, 2020.

- ^ "VSI OpenVMS Programming Concepts Manual, Vol. 1" (PDF). VSI. April 2020. Retrieved October 7, 2020.

- ^ "HP OpenVMS Alpha Partitioning and Galaxy Guide". HP. September 2003. Retrieved October 22, 2021.

- ^ James Niccolai (October 14, 1998). "Compaq details strategy for OpenVMS". Australian Reseller News. Archived from the original on April 4, 2023. Retrieved January 14, 2021.

- ^ "Compaq OpenVMS Times" (PDF). January 2002. Archived from the original (PDF) on March 2, 2006.

- ^ Andrew Orlowski (June 25, 2001). "Farewell then, Alpha – Hello, Compaq the Box Shifter". theregister.com. Retrieved December 21, 2020.

- ^ Sue Skonetski (January 31, 2003). "OpenVMS Boots on Itanium on Friday Jan 31". Newsgroup: comp.os.vms. Retrieved December 21, 2020.

- ^ "HP C Installation Guide for OpenVMS Industry Standard 64 Systems" (PDF). HP. June 2007. Retrieved March 2, 2021.

- ^ Thomas Siebold (2005). "OpenVMS Integrity Boot Environment" (PDF). decus.de. Retrieved December 21, 2020.

- ^ a b c Camiel Vanderhoeven (October 8, 2017). Re-architecting SWIS for X86-64. YouTube. Archived from the original on December 11, 2021. Retrieved October 21, 2021.

- ^ Gaitan D’Antoni (2005). "Porting OpenVMS Applications to Itanium" (PDF). hp-user-society.de. Retrieved December 21, 2020.

- ^ "OpenVMS floating-point arithmetic on the Intel Itanium architecture" (PDF). decus.de. 2003. Retrieved December 21, 2020.

- ^ Thomas Siebold (2005). "OpenVMS Moving Custom Code" (PDF). decus.de. Retrieved December 21, 2020.

- ^ Paul Lacombe (2005). "HP OpenVMS Strategy and Futures" (PDF). de.openvms.org. Archived from the original (PDF) on February 7, 2021. Retrieved December 21, 2020.

- ^ "VMS Software, Inc. Named Exclusive Developer of Future Versions of OpenVMS Operating System" (Press release). July 31, 2014. Archived from the original on August 10, 2014.

- ^ a b "OpenVMS Rolling Roadmap" (PDF). VSI. December 2019. Archived from the original (PDF) on June 10, 2020. Retrieved May 4, 2021.

- ^ "VSI V9.0 Q&A". VSI. Retrieved April 27, 2024.

- ^ VSI (June 1, 2021). OpenVMS x64 Atom Project. YouTube. Archived from the original on December 11, 2021. Retrieved June 2, 2021.

- ^ 2017 LLVM Developers' Meeting: J. Reagan "Porting OpenVMS using LLVM". YouTube. October 31, 2017. Archived from the original on December 11, 2021.

- ^ "State of the Port to x86_64 January 2017" (PDF). January 6, 2017. Archived from the original (PDF) on November 4, 2019.

- ^ "VMS Software Inc. Announces First Boot on x86 Architecture". VSI. May 14, 2019. Retrieved May 4, 2021.

- ^ "State of the Port". VSI. Archived from the original on April 18, 2021. Retrieved April 16, 2021.

- ^ a b "OpenVMS 9.1". VSI. June 30, 2021. Archived from the original on June 30, 2021. Retrieved June 30, 2021.

- ^ a b "VMS Software Releases OpenVMS V9.1-A". VSI. September 30, 2021. Retrieved September 30, 2021.

- ^ Catherine Richardson; Terry Morris; Rockie Morgan; Reid Brown; Donna Meikle (March 1987). "MICA Software Business Plan" (PDF). Bitsavers. Retrieved January 4, 2021.

- ^ Zachary, G. Pascal (2014). Showstopper!: The Breakneck Race to Create Windows NT and the Next Generation at Microsoft. Open Road Media. ISBN 978-1-4804-9484-8. Retrieved January 4, 2021.

- ^ Mark Russinovich (October 30, 1998). "Windows NT and VMS: The Rest of the Story". ITPro Today. Retrieved January 4, 2021.

- ^ Eugenia Loli (November 23, 2004). "FreeVMS 0.1.0 Released". OSnews. Retrieved April 2, 2022.

- ^ "FreeVMS official web page". Archived from the original on September 8, 2018.

- ^ Wiecek, Cheryl A.; Kaler, Christopher G.; Fiorelli, Stephen; Davenport, Jr., William C.; Chen, Robert C. (April 1992). "A Model and Prototype of VMS Using the Mach 3.0 Kernel". Proceedings of the USENIX Workshop on Micro-Kernels and Other Kernel Architectures: 187–203. Retrieved September 20, 2021.

- ^ a b c d e f Ruth E. Goldenberg; Lawrence J. Kenah; Denise E. Dumas (1991). VAX/VMS Internals and Data Structures, Version 5.2. Digital Press. ISBN 978-1555580599.

- ^ a b c Hunter Goatley; Edward A. Heinrich. "Writing VMS Privileged Code Part I: The Fundamentals, Part 1". hunter.goatley.com. Retrieved January 31, 2021.

- ^ Paul A. Karger; Mary Ellen Zurko; Douglas W. Bonin; Andrew H. Mason; Clifford E. Kahn (May 7–9, 1990). A VMM security kernel for the VAX architecture (PDF). Proceedings. 1990 IEEE Computer Society Symposium on Research in Security and Privacy. IEEE. doi:10.1109/RISP.1990.63834. Retrieved January 31, 2021.

- ^ "VSI OpenVMS System Services Reference Manual: A–GETUAI" (PDF). VSI. June 2020. Retrieved February 15, 2021.

- ^ Wayne Sewell (1992). Inside VMS: The System Manager's and System Programmer's Guide to VMS Internals. Van Nostrand Reinhold. ISBN 0-442-00474-5.

- ^ "VSI OpenVMS I/O User's Reference Manual" (PDF). VSI. August 2019. Retrieved January 13, 2021.

- ^ a b "Andy Goldstein on Files-11, the OpenVMS File Systems". VSI Official Channel. July 25, 2019. Archived from the original on January 12, 2021. Retrieved January 3, 2021.

- ^ "VSI OpenVMS Guide to OpenVMS File Applications" (PDF). VSI. July 23, 2019. Retrieved January 13, 2021.

- ^ a b "Why was Spiralog retired?". Hewlett Packard Enterprise Community - Operating System - OpenVMS. January 10, 2006. Retrieved January 13, 2021.

- ^ "VSI OpenVMS Software Roadmap 2020" (PDF). September 2020. Archived from the original (PDF) on December 7, 2020. Retrieved September 23, 2020.

- ^ "OpenVMS User's Manual" (PDF). VSI. July 2020. Chapter 14, Advanced Programming with DCL. Retrieved April 9, 2021.

- ^ a b Simon Clubley (July 3, 2017). "How dangerous is it to be able to get into DCL supervisor mode?". Newsgroup: comp.os.vms. Retrieved February 1, 2021.

- ^ "VSI OpenVMS Cluster Systems" (PDF). VSI. August 2019. Retrieved January 13, 2021.

- ^ "Building Dependable Systems: The OpenVMS Approach" (PDF). DEC. May 1994. Retrieved July 31, 2021.

- ^ "DECnet for OpenVMS Guide to Networking" (PDF). VSI. August 2020. Archived from the original (PDF) on January 21, 2021. Retrieved January 14, 2021.

- ^ "Software Product Description: DECnet-VAX, Version 1" (PDF). DEC. September 1978. Retrieved May 23, 2023.

- ^ "VSI Products - DECnet". VSI. Archived from the original on January 27, 2021. Retrieved January 14, 2021.

- ^ "VMS/ULTRIX System Manager's Guide". Digital Equipment Corporation. September 1990. Retrieved January 21, 2021.

- ^ "VSI OpenVMS TCP/IP User's Guide" (PDF). VSI. August 2019. Retrieved January 14, 2021.

- ^ Robert Rappaport; Yanick Pouffary; Steve Lieman; Mary J. Marotta (2004). "Parallelism and Performance in the OpenVMS TCP/IP Kernel". OpenVMS Technical Journal. 4.

- ^ Alan Abrahams; David A. Low (1992). "An Overview of the PATHWORKS Product Family" (PDF). Digital Technical Journal. 4 (1): 8–14. Retrieved April 27, 2024.

- ^ Andy Goldstein (2005). "Samba and OpenVMS" (PDF). de.openvms.org. Archived from the original (PDF) on February 7, 2021. Retrieved January 1, 2021.

- ^ "Local Area Transport Network Concepts" (PDF). DEC. June 1988. Retrieved January 14, 2021.

- ^ a b c d e "VAX/VMS Software Language and Tools Handbook" (PDF). bitsavers.org. 1985. Retrieved December 31, 2020.

- ^ "VSI List of Products". VSI. Archived from the original on May 16, 2021. Retrieved May 4, 2021.

- ^ "Lua". VMS Software, Inc. Retrieved June 27, 2025.

- ^ "VSI OpenVMS Calling Standard" (PDF). January 2021. Retrieved May 4, 2021.

- ^ "VSI OpenVMS Programming Concepts Manual, Volume II" (PDF). VSI. April 2020. Retrieved May 4, 2021.

- ^ "SDL, LANGUAGE, Data Structure/Interface Definition Language". digiater.nl. November 1996. Retrieved January 3, 2021.

- ^ "VMS Software / Documentation". VSI. Retrieved May 12, 2025.

- ^ "DECset". VSI. Archived from the original on January 7, 2021. Retrieved January 2, 2021.

- ^ "VSI OpenVMS DCL Dictionary: A–M" (PDF). VSI. April 2020. Retrieved January 2, 2021.

- ^ "VSI OpenVMS Debugger Manual" (PDF). VSI. June 2020. Retrieved May 4, 2021.

- ^ "VSI OpenVMS Delta/XDelta Debugger Manual" (PDF). VSI. August 2019. Retrieved December 31, 2020.

- ^ "VMS IDE". Visual Studio Marketplace. Retrieved January 2, 2021.

- ^ "VAX/VMS Software Information Management Handbook" (PDF). Digital Equipment Corporation. 1985. Retrieved January 24, 2021.

- ^ Ian Smith (2004). "Rdb's First 20 Years: Memories and Highlights" (PDF). Archived from the original (PDF) on November 3, 2005. Retrieved January 24, 2021.

- ^ "Compaq ACMS for OpenVMS Getting Started". Compaq. December 1999. Retrieved January 24, 2021.

- ^ "Building Dependable Systems: The OpenVMS Approach". Digital Equipment Corporation. March 1994. Retrieved October 17, 2022.

- ^ "Cover Letter for DECADMIRE V2.1 MUP Kit - DECADMIRE V2.1A". Digital Equipment Corporation. 1995. Retrieved January 24, 2021.[permanent dead link]

- ^ Kevin Duffy; Philippe Vigier (2004). "Oracle Rdb Status and Direction" (PDF). Retrieved January 24, 2021.

- ^ Larry Goelz; John Paladino (May 31, 1999). "Cover Letter re DSM". Compaq. Retrieved January 24, 2021.[permanent dead link]

- ^ Neil Rieck (June 29, 2020). "OpenVMS Notes MySQL and MariaDB". Archived from the original on January 31, 2021. Retrieved January 24, 2021.

- ^ Bengt Gunne (2017). "Mimer SQL on OpenVMS Present and Future" (PDF). Retrieved April 27, 2024.

- ^ "Mimer SQL is now available for OpenVMS on x86". Mimer Information Technology AB. 2023.

- ^ "Rocket Software System 1032". Rocket Software. Archived from the original on January 22, 2021. Retrieved January 24, 2021.

- ^ Hoffman, Stephen; Anagnostopoulos, Paul (1999). Writing Real Programs in DCL (2nd ed.). Digital Press. ISBN 1-55558-191-9.

- ^ "Software Product Description HP DECforms for OpenVMS, Version 4.0" (PDF). Hewlett Packard Enterprise. August 2006. Retrieved January 1, 2021.[permanent dead link]

- ^ "Software Product Description HP FMS for OpenVMS, Version 2.5" (PDF). Hewlett Packard Enterprise. January 2005. Retrieved January 1, 2021.[permanent dead link]

- ^ "Compaq TDMS for OpenVMS VAX, Version 1.9B" (PDF). Hewlett Packard Enterprise. July 2002. Retrieved January 1, 2021.[permanent dead link]

- ^ "OpenVMS RTL Screen Management (SMG$) Manual". Hewlett Packard Enterprise. 2001. Archived from the original on December 4, 2020. Retrieved January 1, 2021.

- ^ Rick Spitz; Peter George; Stephen Zalewski (1986). "The Making of a Micro VAX Workstation" (PDF). Digital Technical Journal. 1 (2). Retrieved October 21, 2021.

- ^ "MicroVMS Workstation Graphics Programming Guide" (PDF). Digital Equipment Corporation. May 1986. Retrieved October 21, 2021.

- ^ Fred Kleinsorge (January 4, 2007). "comp.os.vms - Dec VWS Internals". Newsgroup: comp.os.vms. Retrieved February 27, 2021.

- ^ Scott A. McGregor (1990). "An Overview of the DECwindows Architecture" (PDF). Digital Technical Journal. 2 (3). Digital Equipment Corporation. Retrieved October 21, 2021.

- ^ a b c d "(Open)VMS(/ VAX), Version overview". vaxmacro.de. Archived from the original on October 22, 2020. Retrieved October 21, 2021.

- ^ "Migrating VWS/UIS Applications to DECwindows?". HP OpenVMS ask the wizard. November 9, 2004. Archived from the original on September 15, 2018.

- ^ Janet Dobbs (August 1989). "Strategies for Writing Graphical UNIX Applications Productively and Portably" (PDF). AUUG Newsletter. 10 (4): 50. Retrieved December 29, 2021.

- ^ "Using DECwindows Motif for OpenVMS" (PDF). VSI. October 2019. Retrieved October 21, 2020.

- ^ S. Kadantsev; M. Mouat. Early Experience With DECwindows/Motif In the TRIUMF Central Control System (PDF). 13th International Conference on Cyclotrons and their Applications. pp. 676–677. Archived from the original (PDF) on November 25, 2017. Retrieved August 28, 2019.

- ^ "Getting Started With the New Desktop". Digital Equipment Corporation. May 1996. Retrieved October 21, 2021.

- ^ OpenGL Frequently Asked Questions (FAQ) [1/3]. Faqs.org. Retrieved on July 17, 2013.

- ^ "Software Product Description VSI Graphical Kernel System" (PDF). VSI. 2017. Retrieved January 2, 2021.

- ^ "Software Product Description DEC PHIGS Version 3.1 for OpenVMS VAX" (PDF). Hewlett Packard Enterprise. April 1995. Retrieved January 2, 2021.[permanent dead link]

- ^ "VSI OpenVMS Guide to System Security" (PDF). VSI. December 2019. Retrieved April 26, 2021.

- ^ National Computer Security Center (NCSC) Trusted Product Evaluation List (TPEL)

- ^ "HP OpenVMS Guide to System Security". Hewlett Packard. September 2003. Retrieved October 21, 2021.

- ^ Green, James L.; Sisson, Patricia L. (June 1989). "The "Father Christmas" Worm" (PDF). 12th National Computer Security Conference Proceedings. Retrieved November 23, 2015.

- ^ Kevin Rich (November 2004). "Security Audit on OpenVMS: An Internal Auditor's Perspective". SANS Institute. Retrieved July 21, 2021.

- ^ Claes Nyberg; Christer Oberg; James Tusini (January 20, 2011). "DEFCON 16: Hacking OpenVMS". YouTube. Archived from the original on December 11, 2021. Retrieved July 21, 2021.

- ^ Stoll, Clifford (1989). The Cuckoo's Egg : tracking a spy through the maze of computer espionage (1st ed.). New York: Doubleday. ISBN 0-385-24946-2.

- ^ On the internal workings of the CTRL-Y mechanism, see: OpenVMS AXP Internals and Data Structures, Version 1.5, sections 30.6.5.1 (CTRL/Y Processing) and 30.6.5.4 (CONTINUE Command) at pp. 1074–1076.

- ^ John Leyden (February 6, 2018). "Ghost in the DCL shell: OpenVMS, touted as ultra reliable, had a local root hole for 30 years". theregister.com. Retrieved January 13, 2021.

- ^ Digital Equipment Corporation (1994). Software Product Description - POSIX for OpenVMS 2.0.

- ^ "OpenVMS Alpha Version 7.3-1 New Features and Documentation Overview Begin Index". June 2002.[permanent dead link]

- ^ "GNV". VSI Products. Retrieved June 27, 2025.

- ^ "ϕnix: a Unix emulator for VAX/VMS" (PDF). August 10, 1987. Archived from the original (PDF) on January 22, 2004.

- ^ "Compaq and DECUS expand Free License OpenVMS Hobbyist Program". Compaq. March 10, 1999. Retrieved August 1, 2021.

- ^ "Hobbyist Program". Process Software. Retrieved April 24, 2020.

- ^ Bill Pedersen; John Malmberg. "VMS Hardware". vms-ports. Retrieved July 30, 2021.

- ^ "HPE sets end date for hobbyist licenses for OpenVMS". Archived from the original on July 4, 2020. Retrieved July 4, 2020.

- ^ "VMS Software Announces Community License". VSI. April 22, 2020. Retrieved May 4, 2021.

- ^ "VMS Software Community License Available". VSI. July 28, 2020. Retrieved May 4, 2021.

- ^ "VSI Announces Community License Updates". VSI. June 11, 2020. Retrieved May 4, 2021.

- ^ "HP OpenVMS Systems - OpenVMS Release History". June 21, 2010. Archived from the original on October 7, 2018.

- ^ "OpenVMS – A guide to the strategy and roadmap". VSI. Retrieved September 27, 2021.

- ^ "HP OpenVMS Systems - Supported Software Versions - January 2014". Archived from the original on October 14, 2018.

- ^ "VSI OpenVMS Software Roadmap 2021" (PDF). VSI. Retrieved September 30, 2021.

- ^ "OpenVMS Software Technical Support Service" (PDF). hp.com. Hewlett Packard. October 2003. Retrieved February 1, 2022.

- ^ "OpenVMS Release History". Bitsavers. HP. Retrieved January 23, 2022.

- ^ a b Andy Goldstein (September 16, 1997). "When Did VMS First Come Out?". Newsgroup: comp.os.vms. Retrieved March 5, 2022.

- ^ a b c VAX/VMS Release Notes Version 1.5. DEC. February 1979. AA-D015B-TE.

- ^ VAX/VMS Release Notes Version 1.6. DEC. August 1979. AA-J039A-TE.

- ^ "VAX/VMS Release Notes Version 3.0" (PDF). DEC. May 1982. AA-D015D-TE. Retrieved February 6, 2022.

- ^ "VAX/VMS Internals and Data Structures" (PDF). DEC. April 1981. Retrieved February 6, 2022.

- ^ "VAX-11 Information Directory and Index" (PDF). DEC. May 1981. AA-D016D-TE. Retrieved February 6, 2022.

- ^ "GRPNAM SECURITY HOLE IN LOGIN". DEC. Retrieved February 6, 2022.

- ^ Bob Boyd (September 18, 1987). "First Introduction of BACKUP utility". Newsgroup: comp.os.vms. Retrieved February 6, 2022.

- ^ VAX/VMS Release Notes Version 3.1. DEC. August 1982. AA-N472A-TE.

- ^ VAX/VMS Release Notes Version 3.2. DEC. December 1982. AA-P763A-TE.

- ^ VAX/VMS Release Notes Version 3.3. DEC. April 1983. AA-P764A-TE.

- ^ VAX/VMS Release Notes Version 3.4. DEC. June 1983. AA-P765A-TE.

- ^ VAX/VMS Release Notes Version 3.5. DEC. November 1983. AA-P766A-TE.

- ^ VAX/VMS Release Notes Version 3.6. DEC. April 1984. AA-V332A-TE.

- ^ VAX/VMS Release Notes Version 3.7. DEC. August 1984. AA-CJ33A-TE.

- ^ vms-source-listings

- ^ vms-source-listings

- ^ "Commitment to DII COE initiative provides longterm support and application portability for OpenVMS customers". Hewlett Packard Enterprise. Archived from the original on September 7, 2023. Retrieved September 7, 2023.

- ^ "HP OpenVMS Systems - OpenVMS Version 8.4". Archived from the original on September 2, 2010.

- ^ "VMS Software, Inc. Launches New Version of OpenVMS Operating System Worldwide" (PDF) (Press release). June 1, 2015. Archived from the original (PDF) on August 7, 2015. Retrieved June 4, 2015.

- ^ "VMS Software, Inc. Launches New Version 8.4-2L1 of OpenVMS Operating System Worldwide". VSI (Press release). September 23, 2016. Retrieved May 4, 2021.

- ^ "VMS Software, Inc. Launches VSI OpenVMS Alpha V8.4-2L1 for Alpha Hardware". VSI (Press release). January 27, 2017. Retrieved May 4, 2021.

- ^ a b "Roadmap Update". VSI. September 2020. Archived from the original on September 27, 2020. Retrieved September 23, 2020.

- ^ "OpenVMS for x86 V9.0 EAK goes to first customer on May 15, 2020". VSI. April 24, 2020. Retrieved May 4, 2021.

- ^ "VSI OpenVMS v9.2 Released". VSI. July 14, 2022. Retrieved July 14, 2022.

- ^ "OpenVMS V9.2-1 Final Release". VSI. June 15, 2023. Retrieved June 15, 2023.

- ^ "OpenVMS V9.2-2 public availability". VSI. January 25, 2024. Retrieved January 25, 2024.

Further reading

- Getting Started with OpenVMS, Michael D. Duffy, ISBN 1-55558-279-6

- Introduction to OpenVMS, 5th Edition, Lesley Ogilvie Rice, ISBN 1-55558-194-3

- Ruth Goldenberg; Saro Saravanan (1994). OpenVMS AXP Internals and Data Structures: Version 1.5. Digital Press. ISBN 978-1555581206.

- OpenVMS Alpha Internals and Data Structures: Memory Management, Ruth Goldenberg, ISBN 1-55558-159-5

- OpenVMS Alpha Internals and Data Structures: Scheduling and Process Control: Version 7.0, Ruth Goldenberg, Saro Saravanan, Denise Dumas, ISBN 1-55558-156-0

- VAX/VMS Internals and Data Structures: Version 5.2 ("IDSM"), Ruth Goldenberg, Saro Saravanan, Denise Dumas, ISBN 1-55558-059-9

- Writing Real Programs in DCL, second edition, Stephen Hoffman, Paul Anagnostopoulos, ISBN 1-55558-191-9

- Writing OpenVMS Alpha Device Drivers in C, Margie Sherlock, Leonard Szubowicz, ISBN 1-55558-133-1

- OpenVMS Performance Management, Joginder Sethi, ISBN 1-55558-126-9

- Getting Started with OpenVMS System Management, 2nd Edition, David Donald Miller, Stephen Hoffman, Lawrence Baldwin, ISBN 1-55558-243-5

- The OpenVMS User's Guide, Second Edition, Patrick Holmay, ISBN 1-55558-203-6

- Using DECwindows Motif for OpenVMS, Margie Sherlock, ISBN 1-55558-114-5

- Wayne Sewell (1992). Inside VMS: The System Manager's and System Programmer's Guide to VMS Internals. Van Nostrand Reinhold. ISBN 0-442-00474-5.

- The Hitchhiker's Guide to VMS: An Unsupported-Undocumented-Can-Go-Away-at-Any-Time Feature of VMS, Bruce Ellis, ISBN 1-878956-00-0

- Roland Hughes (December 2006). The Minimum You Need to Know to Be an OpenVMS Application Developer. Logikal Solutions. ISBN 978-0-9770866-0-3.

External links

- VMS Software: Current Roadmap and Future Releases

- VMS Software: Documentation

- HP OpenVMS FAQ at the Wayback Machine (archived January 12, 2020)

- comp.os.vms Usenet group, archives on Google Groups

History

Origins and Early Development

The development of VMS originated in 1975 at Digital Equipment Corporation (DEC), driven by the limitations of the 16-bit PDP-11 architecture and the need for a more advanced operating system to accompany the forthcoming 32-bit VAX minicomputer line.[8] The effort built on DEC's earlier real-time and time-sharing systems, including RSX-11 for multiprogramming on PDP-11s and RSTS/E for multiuser environments, adapting their concepts to support virtual memory and larger-scale computing.[9] The project, approved by DEC's vice president of engineering Gordon Bell in April 1975, had software architect Dave Cutler as one of its lead designers; Cutler drew on his experience with RSX-11 to design a robust multiuser system emphasizing reliability and extensibility.[8] VMS Version 1.0 was announced alongside the VAX-11/780 minicomputer on October 25, 1977, and shipped in August 1978 as DEC's first 32-bit operating system, developed in tandem with the VAX hardware.[3] It supported up to 8 MB of physical memory, demand-paged virtual memory within a 32-bit (4 GB) virtual address space, half of which was private to each process, and time-sharing for multiple interactive users, positioning it as a commercial alternative to Unix on minicomputers.[8] Core to its design were Record Management Services (RMS), a record-oriented file access layer that gave business applications structured access to data, and the Digital Command Language (DCL) interpreter, a scriptable command-line interface for system administration and user tasks.[3] Basic utilities like the BACKUP command for volume archiving were included from this version, facilitating data protection in enterprise settings. Subsequent releases through 1985 extended hardware support and added functionality.
Version 2.0 (April 1980) added support for the VAX-11/750 and improved DECnet networking with Phase III connectivity.[3] Version 3.0 (April 1982) extended support to the VAX-11/730, introducing lock management services for coordinating concurrent access and support for larger disk drives such as the RA81.[3] By Version 4.0 (September 1984), VMS incorporated clustering via VAXclusters, allowing multiple VAX systems to share resources such as disks, with access coordinated by the Distributed Lock Manager; this release also added security enhancements and MicroVMS for smaller configurations.[3] Version 4.2 (October 1985) further improved reliability with volume shadowing for disk redundancy and RMS journaling to protect against data corruption during failures.[3] In 1991, DEC renamed the operating system OpenVMS to signal its support for open standards such as POSIX and to drop the VAX hardware association from its name as the port to the 64-bit Alpha architecture got under way, although the system itself remained proprietary.[3]

Architectural Ports and Transitions