Recent from talks

Nothing was collected or created yet.

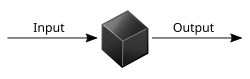

Black-box testing

| Black box systems | |
|---|---|
| System | Black box, Oracle machine |
| Methods and techniques | Black-box testing, Blackboxing |
| Related techniques | Feed forward, Obfuscation, Pattern recognition, White box, White-box testing, Gray-box testing, System identification |
| Fundamentals | A priori information, Control systems, Open systems, Operations research, Thermodynamic systems |

Black-box testing, sometimes referred to as specification-based testing,[1] is a method of software testing that examines the functionality of an application without peering into its internal structures or workings. This method of testing can be applied to virtually every level of software testing: unit, integration, system and acceptance. Black-box testing is also used as a method in penetration testing, where an ethical hacker simulates an external hacking or cyber warfare attack with no knowledge of the system being attacked.

Test procedures

Specification-based testing aims to test the functionality of software according to the applicable requirements.[2] This level of testing usually requires thorough test cases to be provided to the tester, who can then simply verify that, for a given input, the output value (or behavior) either "is" or "is not" the same as the expected value specified in the test case.
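This "is" or "is not" comparison can be sketched as a small table-driven harness in Python. It is a minimal illustration under stated assumptions, not a prescribed procedure. It treats Python's built-in `round()` as the black box and takes the expected values from its documented behavior (halfway cases round to the nearest even integer), not from its source code. The `run_cases` helper and the case list are hypothetical.

```python
from typing import Any, Callable, Sequence


def run_cases(func: Callable[..., Any], cases: Sequence[tuple[tuple, Any]]) -> list[dict]:
    """Call func on each input and record whether the output "is" or "is not" the expected value."""
    results = []
    for args, expected in cases:
        actual = func(*args)  # only the external interface is used
        results.append(
            {"input": args, "expected": expected, "actual": actual, "passed": actual == expected}
        )
    return results


# Expected outputs come from the specification ("round half to even"),
# not from inspecting how round() is implemented.
ROUND_CASES = [
    ((0.5,), 0),
    ((1.5,), 2),
    ((2.5,), 2),
    ((-2.5,), -2),
    ((3.7,), 4),
]

if __name__ == "__main__":
    for r in run_cases(round, ROUND_CASES):
        verdict = "is" if r["passed"] else "is not"
        print(f"round{r['input']} -> {r['actual']} {verdict} the expected {r['expected']}")
```

The harness never reads the implementation. If a case's expected value were taken from a misreading of the specification (for example, expecting `round(2.5)` to be 3), the case would simply be reported as "is not".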

Specific knowledge of the application's code and internal structure, and programming knowledge in general, is not required.[3] The tester knows what the software is supposed to do but not how it does it. For instance, the tester knows that a particular input returns a certain, invariable output, but not how the software produces that output in the first place.[4]

Test cases

Test cases are built around specifications and requirements, i.e., what the application is supposed to do. Test cases are generally derived from external descriptions of the software, including specifications, requirements and design parameters. Although the tests used are primarily functional in nature, non-functional tests may also be used. The test designer selects both valid and invalid inputs and determines the correct output, often with the help of a test oracle or a previous result that is known to be good, without any knowledge of the test object's internal structure.
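A test oracle can be sketched as a trusted reference whose outputs are compared with those of the test object. In the hypothetical Python example below, `insertion_sort` stands in for the software under test and the built-in `sorted()` serves as the known-good oracle. The tester only calls the two functions. The valid inputs are random integer lists. The invalid input is a list that mixes numbers and strings: it cannot be ordered, so it is expected to raise `TypeError`. The function names, value ranges, seed and trial count are assumptions made for illustration.

```python
import random


def insertion_sort(items):
    """Hypothetical implementation under test; the tester treats it as a black box."""
    result = list(items)
    for i in range(1, len(result)):
        key = result[i]
        j = i - 1
        while j >= 0 and result[j] > key:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = key
    return result


def check_against_oracle(func, oracle, trials=200, seed=0):
    """Compare func with a known-good oracle on random valid inputs; return a counterexample or None."""
    rng = random.Random(seed)
    for _ in range(trials):
        data = [rng.randint(-50, 50) for _ in range(rng.randint(0, 20))]
        if func(data) != oracle(data):
            return data
    return None


def rejects_invalid(func):
    """Invalid input: values that cannot be compared should raise TypeError."""
    try:
        func([3, "a", 1])
    except TypeError:
        return True
    return False


if __name__ == "__main__":
    print("counterexample:", check_against_oracle(insertion_sort, sorted))
    print("rejects invalid input:", rejects_invalid(insertion_sort))
```

Comparing against an oracle means the expected output does not have to be written by hand for every generated input. The oracle itself must, however, be trusted for the inputs used.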

Test design techniques

Typical black-box test design techniques include decision table testing, all-pairs testing, equivalence partitioning, boundary value analysis, cause–effect graphing, error guessing, state transition testing, use case testing, user story testing, domain analysis, and syntax testing.[5][6]
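Of the techniques listed, all-pairs (pairwise) testing can be shown most compactly. The sketch below uses a simple greedy generator, which is an assumption rather than any specific published algorithm. It first enumerates every pair of values for every pair of parameters. It then repeatedly picks the full combination that covers the most still-uncovered pairs. The parameter names and values are hypothetical. Because it enumerates the full Cartesian product, it is practical only for small models, and it assumes at least two parameters.

```python
from itertools import combinations, product


def pairs_of(case: dict, names: list[str]) -> set[tuple]:
    """All (param_a, value_a, param_b, value_b) pairs exercised by one test case."""
    return {(a, case[a], b, case[b]) for a, b in combinations(names, 2)}


def all_pairs(params: dict[str, list]) -> list[dict]:
    """Greedy pairwise suite: every value pair of every two parameters appears in some case."""
    names = list(params)
    uncovered = {
        (a, va, b, vb)
        for a, b in combinations(names, 2)
        for va in params[a]
        for vb in params[b]
    }
    # Candidates are the full Cartesian product (acceptable for small models only).
    candidates = [dict(zip(names, values)) for values in product(*params.values())]
    suite = []
    while uncovered:
        # Pick the candidate covering the most pairs not yet covered.
        best = max(candidates, key=lambda c: len(pairs_of(c, names) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best, names)
    return suite


# Hypothetical configuration model: 2 x 2 x 2 = 8 exhaustive combinations.
PARAMS = {
    "browser": ["Chrome", "Firefox"],
    "os": ["Windows", "Linux"],
    "locale": ["en", "de"],
}

if __name__ == "__main__":
    for case in all_pairs(PARAMS):
        print(case)
```

For the three two-valued parameters above, there are 12 value pairs in total. The greedy pass covers all of them with 4 cases instead of the 8 exhaustive combinations.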

Test coverage

Test coverage refers to the percentage of software requirements that are tested by black-box testing for a system or application.[7] This is in contrast with code coverage, which examines the inner workings of a program and measures the degree to which the source code of a program is executed when a test suite is run.[8] Measuring test coverage makes it possible to quickly detect and eliminate defects, to create a more comprehensive test suite, and to remove tests that are not relevant for the given requirements.[8][9]
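The requirements-based coverage figure can be sketched as a simple traceability calculation. In this hypothetical Python example, each test is linked to the requirement IDs it checks; the IDs and test names are invented for illustration. The function returns three results, matching the three uses of coverage measurement mentioned above:

- the percentage of requirements exercised by at least one test;
- the requirements that are still untested;
- the tests that trace to no current requirement.

```python
def requirements_coverage(requirements: set[str], test_links: dict[str, set[str]]) -> dict:
    """Black-box test coverage based on which requirements each test is linked to."""
    # Requirements exercised by at least one test.
    tested = set().union(*test_links.values()) & requirements
    percent = 100 * len(tested) / len(requirements) if requirements else 0.0
    return {
        "percent": percent,
        "untested": sorted(requirements - tested),
        # Tests linked to no current requirement are candidates for removal.
        "irrelevant_tests": sorted(t for t, reqs in test_links.items() if not reqs & requirements),
    }


# Hypothetical traceability data.
REQUIREMENTS = {"REQ-1", "REQ-2", "REQ-3", "REQ-4"}
TEST_LINKS = {
    "test_login_valid": {"REQ-1"},
    "test_login_invalid": {"REQ-1", "REQ-2"},
    "test_export_csv": {"REQ-3"},
    "test_legacy_fax": {"REQ-99"},
}

if __name__ == "__main__":
    print(requirements_coverage(REQUIREMENTS, TEST_LINKS))
```

Here three of the four requirements are linked to a test, so the coverage is 75%. `REQ-4` is reported as untested, and `test_legacy_fax` is flagged because it traces to no listed requirement.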

Effectiveness

Black-box testing may be necessary to assure correct functionality, but it is insufficient to guard against complex or high-risk situations.[10] An advantage of the black-box technique is that no programming knowledge is required. Whatever biases the programmers may have had, the tester likely has a different set and may emphasize different areas of functionality. On the other hand, black-box testing has been said to be "like a walk in a dark labyrinth without a flashlight."[11] Because testers do not examine the source code, they may write many test cases to check something that could have been tested by only one test case, or leave some parts of the program untested.


References

1. Gao, Jerry; Tsao, H.-S. J.; Wu, Ye (2003). Testing and Quality Assurance for Component-based Software. Artech House. p. 170. ISBN 978-1-58053-735-3.
2. Laycock, Gilbert T. (1993). The Theory and Practice of Specification Based Software Testing (thesis). Department of Computer Science, University of Sheffield. Retrieved January 2, 2018.
3. Limaye, Milind G. (2009). Software Testing. Tata McGraw-Hill Education. p. 216. ISBN 978-0-07-013990-9.
4. Patton, Ron (2005). Software Testing (2nd ed.). Indianapolis: Sams Publishing. ISBN 978-0672327988.
5. Forgács, István; Kovács, Attila (2019). Practical Test Design: Selection of Traditional and Automated Test Design Techniques. BCS Learning & Development Limited. ISBN 978-1780174723.
6. Black, R. (2011). Pragmatic Software Testing: Becoming an Effective and Efficient Test Professional. John Wiley & Sons. pp. 44–46. ISBN 978-1-118-07938-6.
7. IEEE Standard Glossary of Software Engineering Terminology (Technical report). IEEE. 1990. IEEE Std 610.12-1990.
8. "Code Coverage vs Test Coverage". BrowserStack. Retrieved April 13, 2024.
9. Andrades, Geosley (December 16, 2023). "Top 8 Test Coverage Techniques in Software Testing". ACCELQ Inc. Retrieved April 13, 2024.
10. Bach, James (June 1999). "Risk and Requirements-Based Testing". Computer. 32 (6): 113–114. Retrieved August 19, 2008.
11. Savenkov, Roman (2008). How to Become a Software Tester. Roman Savenkov Consulting. p. 159. ISBN 978-0-615-23372-7.

External links

- BCS SIGIST (British Computer Society Specialist Interest Group in Software Testing): Standard for Software Component Testing, Working Draft 3.4, 27 April 2001.


Overview

Definition and Principles

Black-box testing is a software testing methodology that evaluates the functionality of an application based solely on its specifications, inputs, and outputs, without any knowledge of the internal code structure or implementation details.[1] This approach, also known as specification-based testing, treats the software component or system as a "black box," focusing exclusively on whether the observed behavior matches the expected results defined in the requirements.[7] It can encompass both functional testing, which verifies specific behaviors, and non-functional testing, such as performance or usability assessments, all derived from external specifications.[8]

The foundational principles of black-box testing emphasize independence from internal design choices. Tests validate the software's adherence to user requirements and expected external interfaces rather than how those requirements are met internally.[9] Central to this is requirement-based validation: test cases are derived directly from documented specifications to confirm that the software produces correct outputs for given inputs, including both valid and invalid scenarios. This prioritizes the end-user perspective and overall system correctness.[10] Another key principle is coverage of probable events. Tests aim to exercise the most critical paths in the specification to detect deviations in behavior, without relying on code paths or algorithms.[7]

Black-box testing applies across all levels of the software testing lifecycle:

- unit testing for individual components;
- integration testing for component interactions;
- system testing for the complete integrated system;
- acceptance testing to confirm alignment with business needs.[11]

For instance, in testing a login function, a black-box approach supplies valid credentials to verify that access is granted with the appropriate privileges. It supplies invalid credentials to check for appropriate error messages and denial of access. None of this requires examining the underlying authentication code.[2]

Historical Development

The roots of black-box testing trace back to control systems engineering in the 1950s and 1960s. Software testing in that era was rudimentary and often manual. Even so, the control-systems practice of viewing components as opaque "black boxes" began to influence software verification, shifting its focus to external behavior rather than internal structure.[4]

A key milestone came in the 1960s, when the approach was adopted in high-reliability domains such as military and aerospace projects. NASA, for instance, incorporated functional testing (essentially black-box methods) in early space programs. It validated software against requirements specifications, ensuring that outputs met mission-critical needs without delving into implementation details.[12] The approach gained traction as software complexity grew with projects like the Apollo missions. There, rigorous external validation helped mitigate risks in unproven computing environments.[13]

The 1970s and 1980s brought formalization. Glenford J. Myers' 1979 book The Art of Software Testing presented black-box testing techniques and distinguished them from white-box approaches.[14] The IEEE 829 standard for software test documentation, first published in 1983, provided a framework for documenting tests derived from requirements and user needs, independent of internal code.[14] This shift carried black-box practices from specialized sectors into broader software engineering. In the 1980s, commercial tools also introduced automated oracles for verifying expected outputs in GUI and system-level tests.[14]

By the 1990s, black-box testing was being integrated with iterative development paradigms, particularly as agile methodologies emerged. Capture-and-replay tools dominated black-box practice. They enabled rapid functional validation in cycles that suited agile's emphasis on continuous feedback and adaptability.[15] In agile frameworks such as Extreme Programming, black-box testing was used to verify user stories without exposing code.[16]

Into the 2020s, black-box testing has received heightened emphasis in DevOps and cloud-native environments. It underpins automated pipelines for scalable, containerized applications, although the underlying approach has not fundamentally changed. Its focus on end-to-end functionality remains vital for ensuring reliability in dynamic, microservices-based systems.[17]

Comparisons

With White-box Testing

White-box testing, also known as structural or glass-box testing, derives and selects test cases by examining the internal code paths, structures, and logic of a software component or system. This approach requires detailed knowledge of the program's implementation. With that knowledge, testers can verify how data flows through the code and whether all branches and conditions are adequately exercised.[18] In contrast, black-box testing treats the software as an opaque entity. It focuses solely on inputs, outputs, and external behaviors, without accessing or analyzing the internal code structure.[1]

The primary methodological difference lies in perspective and access. Black-box testing adopts an external, specification-based view akin to an end user's interaction and requires no programming knowledge or code visibility. White-box testing provides a transparent internal view and requires expertise in the codebase to identify logic flaws.[19] This distinction shapes test design. Black-box methods rely on requirements and use cases, while white-box methods target code coverage criteria such as statement or branch coverage.[20]

Black-box testing is particularly suited to validating end-user functionality and system-level requirements, such as ensuring that user interfaces respond correctly to inputs during integration or acceptance testing.[21] Conversely, white-box testing excels in developer-level debugging and unit testing. There, the goal is to uncover defects in code logic, optimize algorithm performance, or confirm adherence to design specifications.[22] The choice between the two depends on testing objectives, available resources, and project stage. Black-box testing is often applied later in the development lifecycle to simulate real-world usage.

These approaches are complementary and are frequently combined in hybrid strategies to achieve comprehensive coverage. White-box testing reveals internal issues that black-box testing might overlook, while black-box testing ensures overall functional alignment.[23] Consider a login feature in a web application. Black-box testing might verify that the login UI correctly handles valid and invalid credentials by checking response messages. White-box testing could instead inspect the authentication algorithm's efficiency, tracing execution paths to ensure that no redundant computations occur under load.[24]

With Grey-box Testing

Grey-box testing, also known as gray-box testing, is a software testing approach that incorporates partial knowledge of the internal structures or workings of the application under test. This knowledge may include database schemas, API endpoints, or high-level architecture. The tester still treats the system largely as a black box.[25] This hybrid method allows testers to design more informed test cases without requiring full access to the source code. It can therefore evaluate both functional behavior and certain structural elements.[26]

Black-box testing relies solely on external inputs and outputs, with no knowledge of the internal implementation. Grey-box testing uses limited structural insight to guide testing, resulting in more targeted and efficient exploration of potential defects.[27] Black-box testing emphasizes behavioral validation from an end-user viewpoint and can uncover usability issues that internal knowledge might overlook. Grey-box testing leverages partial information to improve coverage of areas such as data flows and integration points, bridging the gap between purely functional checks and code-level scrutiny.[28]

Black-box testing excels at providing an unbiased, realistic simulation of user interactions. This makes it well suited to validating system requirements without preconceptions about internals, though it may miss subtle structural flaws. Conversely, grey-box testing is more efficient in scenarios such as security auditing or integration testing. There, partial knowledge of details such as user roles or session management lets testers prioritize high-risk paths. They can then detect vulnerabilities such as injection attacks more effectively than with black-box testing alone.[27]

A representative example illustrates the distinction. In black-box testing of a web application's login feature, the tester verifies only external behaviors: successful authentication with valid credentials, or error messages for invalid ones. No backend details are accessed. In grey-box testing of the same feature, the tester uses known information, such as session variable structures or database query patterns, to probe deeper. For instance, the tester might inject malformed session data to check that unauthorized access attempts are handled properly.[29] Grey-box testing emerged as a practical evolution in the 1990s and early 2000s, particularly for web and distributed applications. There, increasingly complex integrations called for a balance between black-box realism and white-box depth.[30]

Design Techniques

Test Case Creation

Test case creation in black-box testing begins with identifying the functional and non-functional requirements of the software under test. These requirements serve as the foundation for deriving test conditions without considering the internal code structure. Testers then define inputs, including both valid and invalid variations, to explore the system's behavior across expected scenarios. For each input, they specify the expected output based on the requirements.[31] These cases are documented in a traceable format, such as spreadsheets or specialized tools, to ensure clarity and maintainability throughout the testing lifecycle.[32]

A typical black-box test case comprises several key components that together provide a complete, executable specification:

- Preconditions: Conditions that must be met before executing the test, such as system state or data setup.[31]

- Steps: Sequential instructions outlining how to perform the test, focusing on user interactions or inputs.[32]

- Inputs: Specific data values, ranges, or actions provided to the system, encompassing valid entries and potential error-inducing invalid ones.[31]

- Expected Results: Anticipated system responses or outputs that align with the requirements, including any observable behaviors or messages.[32]

- Postconditions: The expected state of the system or data after test execution, verifying overall impact.[31]
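These components can be captured in a simple record type. The Python sketch below is one possible layout, not a standard schema. The field names, the `requirement_id` traceability field and the sample login case are hypothetical. The `missing_components` check flags a case whose listed components are left empty, so that incomplete cases can be caught before execution.

```python
from dataclasses import dataclass


@dataclass
class BlackBoxTestCase:
    """One test case holding the components listed above; field names are illustrative."""
    case_id: str
    requirement_id: str             # traceability link back to the specification
    preconditions: list[str]
    steps: list[str]
    inputs: dict[str, str]
    expected_results: list[str]
    postconditions: list[str]

    def missing_components(self) -> list[str]:
        """Names of components left empty, in the order they are listed."""
        components = {
            "preconditions": self.preconditions,
            "steps": self.steps,
            "inputs": self.inputs,
            "expected_results": self.expected_results,
            "postconditions": self.postconditions,
        }
        return [name for name, value in components.items() if not value]


# Hypothetical invalid-credentials case for a login feature.
LOGIN_INVALID = BlackBoxTestCase(
    case_id="TC-LOGIN-002",
    requirement_id="REQ-AUTH-1",
    preconditions=["User account 'alice' exists", "User is logged out"],
    steps=["Open the login page", "Enter the username and password", "Submit the form"],
    inputs={"username": "alice", "password": "wrong-password"},
    expected_results=["An 'invalid credentials' error message is shown", "Access is denied"],
    postconditions=["User remains logged out"],
)

if __name__ == "__main__":
    print(LOGIN_INVALID.missing_components())
```

Nothing in the record refers to the implementation. Every field is phrased in terms of observable setup, actions and outcomes.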

Key Methods

Black-box testing employs several key methods to generate efficient test cases by focusing on inputs, outputs, and system behavior without regard to internal code structure. These techniques aim to maximize coverage while minimizing redundancy, drawing on established software engineering principles to identify defects systematically. Among the most widely adopted are equivalence partitioning, boundary value analysis, decision table testing, state transition testing, use case testing, and error guessing. Each targets a different aspect of functional validation.

Equivalence partitioning divides the input domain into partitions, or classes, within which the software is expected to behave the same way for all values. Testers then select one representative value per partition, which reduces the number of test cases required. The method assumes that if one value in a partition causes an error, other values in the same partition will too, which streamlines testing of large input ranges. It is particularly effective for handling both valid and invalid inputs, such as categorizing user ages into groups like under 18, 18-65, and over 65.[8]

Boundary value analysis complements equivalence partitioning by focusing on the edges of these partitions. Errors are more likely to occur at the extremes of valid ranges, just inside or just outside them. For instance, consider an age field that accepts values from 18 to 65. Testers would examine boundary values such as 17 (just below the minimum), 18 (the minimum), 65 (the maximum), and 66 (just above the maximum). These values expose off-by-one errors and other range mishandling. This technique, rooted in domain testing strategies, improves defect detection by prioritizing critical transition points in input domains.
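The age-field example can be sketched in Python to show how the two techniques complement each other. The sketch rests on several assumptions made for illustration. The validators are hypothetical: `buggy_accepts` deliberately uses `<` instead of `<=` at the lower bound. The choice of representatives (the midpoint, and values 10 outside each end) is also arbitrary. The expected verdicts come from the specification "18 to 65 inclusive", encoded in `spec_accepts`.

```python
LOW, HIGH = 18, 65  # valid age range from the example specification (inclusive)


def spec_accepts(age: int) -> bool:
    """Oracle derived from the specification: ages 18 to 65 inclusive are valid."""
    return LOW <= age <= HIGH


def equivalence_representatives(low: int, high: int) -> list[int]:
    """One representative per partition: below the range, inside it, above it."""
    return [low - 10, (low + high) // 2, high + 10]


def boundary_values(low: int, high: int) -> list[int]:
    """Values just outside and exactly on each boundary."""
    return [low - 1, low, high, high + 1]


def failing_inputs(validator, inputs: list[int]) -> list[int]:
    """Inputs where the validator's verdict differs from the specification."""
    return [age for age in inputs if validator(age) != spec_accepts(age)]


def buggy_accepts(age: int) -> bool:
    """Hypothetical faulty implementation: off-by-one error at the lower bound."""
    return LOW < age <= HIGH


if __name__ == "__main__":
    print(equivalence_representatives(LOW, HIGH))  # [8, 41, 75]
    print(boundary_values(LOW, HIGH))              # [17, 18, 65, 66]
    print(failing_inputs(buggy_accepts, equivalence_representatives(LOW, HIGH)))  # []
    print(failing_inputs(buggy_accepts, boundary_values(LOW, HIGH)))              # [18]
```

The three partition representatives all pass against the faulty validator. The boundary value 18 exposes the off-by-one error, which is why the two techniques are usually applied together.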
Decision table testing represents complex business rules in tabular form. The table has rows for input conditions and resulting actions, and one column per rule, so that combinations of conditions are covered systematically. Each column (rule) corresponds to a unique test case. This makes the technique well suited to systems with multiple interdependent conditions, such as loan approval processes involving factors like credit score, income, and employment status. The table structure ensures that every listed condition-action mapping is tested.[37]

| Conditions | Rule 1 | Rule 2 | Rule 3 | Rule 4 |
|---|---|---|---|---|
| Credit Score > 700 | Y | Y | N | N |
| Income > $50K | Y | N | Y | N |
| Employed | Y | Y | N | N |
| Actions | | | | |
| Approve Loan | X | - | - | - |
| Request More Info | - | X | X | - |
| Reject Loan | - | - | - | X |
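The loan table can be turned into executable tests with one test case per rule. In this Python sketch, `decide_loan` is a hypothetical system under test; its logic is an assumption chosen to agree with the four listed rules. The concrete input values (750 or 650 for credit score, 60,000 or 40,000 for income) are also arbitrary choices that make each condition true or false.

```python
# Each rule: (credit score > 700, income > $50K, employed) -> expected action.
DECISION_TABLE = {
    (True, True, True): "Approve Loan",         # Rule 1
    (True, False, True): "Request More Info",   # Rule 2
    (False, True, False): "Request More Info",  # Rule 3
    (False, False, False): "Reject Loan",       # Rule 4
}


def decide_loan(credit_score: int, income: int, employed: bool) -> str:
    """Hypothetical system under test; its logic is an assumption, not taken from the source."""
    good_credit = credit_score > 700
    high_income = income > 50_000
    if good_credit and high_income and employed:
        return "Approve Loan"
    if not good_credit and not high_income and not employed:
        return "Reject Loan"
    return "Request More Info"


def inputs_for(rule: tuple[bool, bool, bool]) -> tuple[int, int, bool]:
    """Concrete input values that make each condition true or false."""
    credit_ok, income_ok, employed = rule
    return (750 if credit_ok else 650, 60_000 if income_ok else 40_000, employed)


def run_decision_table(system) -> list[tuple]:
    """Run one test per rule; return (rule, expected, actual) for every mismatch."""
    failures = []
    for rule, expected in DECISION_TABLE.items():
        actual = system(*inputs_for(rule))
        if actual != expected:
            failures.append((rule, expected, actual))
    return failures


if __name__ == "__main__":
    print(run_decision_table(decide_loan))
```

The table lists only 4 of the 2^3 = 8 possible combinations of the three conditions. Combinations such as a good credit score and high income without employment have no rule, so a complete table would need further columns. Likewise, the sketch's handling of those combinations is not specified by the table.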