Phrase structure rules

Phrase structure rules are a type of rewrite rule used to describe a given language's syntax and are closely associated with the early stages of transformational grammar, proposed by Noam Chomsky in 1957.[1] They are used to break down a natural language sentence into its constituent parts, which are labeled with syntactic categories, including both lexical categories (parts of speech) and phrasal categories. A grammar that uses phrase structure rules is a type of phrase structure grammar. Phrase structure rules as they are commonly employed operate according to the constituency relation, and a grammar that employs phrase structure rules is therefore a constituency grammar; as such, it stands in contrast to dependency grammars, which are based on the dependency relation.[2]

Definition and examples

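Phrase structure rules are usually of the following form:

$$A \longrightarrow B\ C$$

meaning that the constituent $A$ is separated into the two subconstituents $B$ and $C$. Some examples for English are as follows:

$$\mathrm{S} \longrightarrow \mathrm{NP}\ \mathrm{VP}$$

$$\mathrm{NP} \longrightarrow (\mathrm{Det})\ \mathrm{N}_1$$

$$\mathrm{N}_1 \longrightarrow (\mathrm{AP})\ \mathrm{N}_1\ (\mathrm{PP})$$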

The first rule reads: An S (sentence) consists of an NP (noun phrase) followed by a VP (verb phrase). The second rule reads: A noun phrase consists of an optional Det (determiner) followed by an N (noun). The third rule means that an N (noun) can be preceded by an optional AP (adjective phrase) and followed by an optional PP (prepositional phrase). The round brackets indicate optional constituents.
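Because each bracketed constituent may be present or absent, one rule with optional elements abbreviates several plain rules. A minimal Python sketch of that expansion, assuming rules are stored as (left-hand side, right-hand side) pairs and that "N1" stands for the subscripted N category; the helper names are illustrative, not part of any standard tool:

```python
from itertools import product

# The three example rules as (left-hand side, right-hand side) pairs.
# "N1" stands for the subscripted N category; "(X)" marks an optional constituent.
RULES = [
    ("S", ["NP", "VP"]),
    ("NP", ["(Det)", "N1"]),
    ("N1", ["(AP)", "N1", "(PP)"]),
]


def is_optional(symbol):
    """An optional constituent is written in round brackets, e.g. "(Det)"."""
    return symbol.startswith("(") and symbol.endswith(")")


def expand_optional(lhs, rhs):
    """Return the plain rules obtained by keeping or dropping each optional symbol.

    The vacuous rule lhs -> lhs (every optional symbol dropped from a recursive
    rule) is skipped, since it would only add a useless cycle to the grammar.
    """
    # Each position offers one or two choices: the symbol itself, or None (dropped).
    choices = [[sym[1:-1], None] if is_optional(sym) else [sym] for sym in rhs]
    expanded = []
    for combo in product(*choices):
        kept = [sym for sym in combo if sym is not None]
        if kept != [lhs]:
            expanded.append((lhs, kept))
    return expanded


if __name__ == "__main__":
    for lhs, rhs in RULES:
        for _, alternative in expand_optional(lhs, rhs):
            print(lhs, "->", " ".join(alternative))
```

Run as a script, this prints six plain rules, from `S -> NP VP` to `N1 -> N1 PP`. Note that the three rules as given contain no rule rewriting N1 as a bare noun, so a working grammar built from them would still need such a lexical rule.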

Beginning with the sentence symbol S, applying the phrase structure rules successively, and finally applying replacement rules to substitute actual words for the abstract symbols, it is possible to generate many proper sentences of English (or of whichever language the rules are specified for). If the rules are correct, then any sentence produced in this way ought to be grammatically (syntactically) correct. It is also to be expected that the rules will generate syntactically correct but semantically nonsensical sentences, such as the following well-known example:

Colorless green ideas sleep furiously.

This sentence was constructed by Noam Chomsky as an illustration that phrase structure rules are capable of generating syntactically correct but semantically incorrect sentences. Phrase structure rules break sentences down into their constituent parts. These constituents are often represented as tree structures (dendrograms). The tree for Chomsky's sentence can be rendered as follows:

A constituent is any word or combination of words that is dominated by a single node. Thus each individual word is a constituent. Further, the subject NP Colorless green ideas, the minor NP green ideas, and the VP sleep furiously are constituents. Phrase structure rules and the tree structures that are associated with them are a form of immediate constituent analysis.
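The definition of a constituent (whatever a single node dominates) can be checked mechanically. The Python sketch below encodes one plausible tree for the sentence as nested `(label, child, ...)` tuples and lists the word string under every node; the category labels are assumptions chosen to match the subject NP, minor NP and VP named above, and the helper names are hypothetical:

```python
# One plausible tree for the sentence, as nested tuples (label, child, ...).
# The category labels are an assumption of this sketch.
TREE = (
    "S",
    ("NP", ("AP", "colorless"), ("NP", ("AP", "green"), ("N", "ideas"))),
    ("VP", ("V", "sleep"), ("Adv", "furiously")),
)


def is_preterminal(node):
    """A preterminal is a category whose only child is a word, e.g. ("N", "ideas")."""
    return len(node) == 2 and isinstance(node[1], str)


def words(node):
    """Return the words dominated by a node, in left-to-right order."""
    if is_preterminal(node):
        return [node[1]]
    result = []
    for child in node[1:]:
        result.extend(words(child))
    return result


def constituents(node):
    """Yield (label, word string) for every node; each node dominates one constituent."""
    yield node[0], " ".join(words(node))
    if not is_preterminal(node):
        for child in node[1:]:
            yield from constituents(child)


if __name__ == "__main__":
    for label, text in constituents(TREE):
        print(f"{label:4} {text}")
```

Every word shows up as a constituent of its own, and so do "colorless green ideas", "green ideas" and "sleep furiously", while a string such as "ideas sleep" never appears because no single node dominates exactly those two words.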

In transformational grammar, systems of phrase structure rules are supplemented by transformation rules, which act on an existing syntactic structure to produce a new one (performing such operations as negation, passivization, etc.). These transformations are not strictly required for generation, as the sentences they produce could be generated by a suitably expanded system of phrase structure rules alone, but transformations provide greater economy and enable significant relations between sentences to be reflected in the grammar.

Top down

An important aspect of phrase structure rules is that they view sentence structure from the top down. The category on the left of the arrow is a greater constituent and the immediate constituents to the right of the arrow are lesser constituents. Constituents are successively broken down into their parts as one moves down a list of phrase structure rules for a given sentence. This top-down view of sentence structure stands in contrast to much work done in modern theoretical syntax. In Minimalism,[3] for instance, sentence structure is generated from the bottom up: the operation Merge combines smaller constituents to create greater constituents until the greatest constituent (i.e. the sentence) is reached. In this regard, theoretical syntax abandoned phrase structure rules long ago, although their importance for computational linguistics seems to remain intact.
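To make the bottom-up direction concrete, here is a minimal Python sketch that models Merge as forming an unordered two-member set from two syntactic objects. It is a simplification under stated assumptions: labels, features and all other conditions on Merge are left out, and the function name `merge` is illustrative:

```python
def merge(alpha, beta):
    """Combine two syntactic objects into the unordered set {alpha, beta}.

    Simplified sketch: no labels or features. Merging an object with itself
    would collapse to a one-member set here, a corner case ignored below.
    """
    return frozenset([alpha, beta])


# Build the structure bottom-up: smaller constituents first, the sentence last.
green_ideas = merge("green", "ideas")
subject = merge("colorless", green_ideas)
predicate = merge("sleep", "furiously")
sentence = merge(subject, predicate)
```

Each call creates a greater constituent out of two lesser ones, and nothing corresponding to a rule such as S → NP VP is consulted; the sentence is simply the last object built.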

Alternative approaches

Constituency vs. dependency

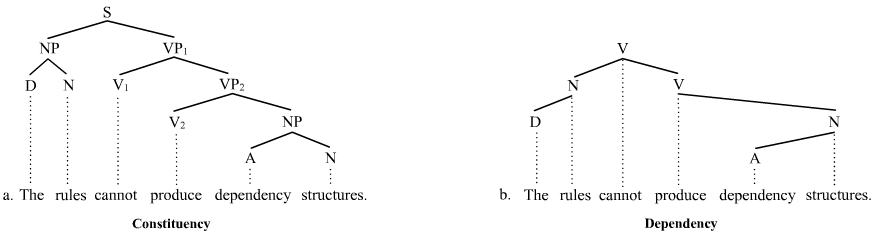

Phrase structure rules as they are commonly employed result in a view of sentence structure that is constituency-based. Thus, grammars that employ phrase structure rules are constituency grammars (= phrase structure grammars), as opposed to dependency grammars,[4] which view sentence structure as dependency-based. This means that phrase structure rules are applicable only if one adopts a constituency-based understanding of sentence structure. The constituency relation is a one-to-one-or-more correspondence: for every word in a sentence, there is at least one node in the syntactic structure that corresponds to that word. The dependency relation, in contrast, is a one-to-one relation: for every word in the sentence, there is exactly one node in the syntactic structure that corresponds to that word. The distinction is illustrated with the following trees:

The constituency tree on the left could be generated by phrase structure rules. The sentence S is broken down into smaller and smaller constituent parts. The dependency tree on the right could not, in contrast, be generated by phrase structure rules (at least not as they are commonly interpreted).
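The one-to-one-or-more versus one-to-one contrast can be seen by counting nodes. This Python sketch compares a constituency tree and a dependency structure for "colorless green ideas sleep furiously"; both analyses are assumptions made for illustration, a preterminal plus its word is counted as one node, and the dependency encoding keys nodes by word, so it assumes no word repeats:

```python
# Constituency analysis (labels assumed) as nested tuples (label, child, ...).
CONSTITUENCY = (
    "S",
    ("NP", ("AP", "colorless"), ("NP", ("AP", "green"), ("N", "ideas"))),
    ("VP", ("V", "sleep"), ("Adv", "furiously")),
)

# Dependency analysis: each word maps to the word it depends on (None marks the root).
DEPENDENCY = {
    "sleep": None,
    "ideas": "sleep",
    "colorless": "ideas",
    "green": "ideas",
    "furiously": "sleep",
}


def count_nodes(tree):
    """Count constituency nodes, treating each preterminal plus its word as one node."""
    if len(tree) == 2 and isinstance(tree[1], str):
        return 1
    return 1 + sum(count_nodes(child) for child in tree[1:])


if __name__ == "__main__":
    print("words:", len(DEPENDENCY))
    print("constituency nodes:", count_nodes(CONSTITUENCY))  # at least one node per word
    print("dependency nodes:", len(DEPENDENCY))              # exactly one node per word
```

Five words yield nine constituency nodes but exactly five dependency nodes, and the dependency structure has no phrasal nodes for phrase structure rules to rewrite.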

Representational grammars

A number of representational phrase structure theories of grammar never acknowledged phrase structure rules, but have instead pursued an understanding of sentence structure in terms of the notion of schema. Here phrase structures are not derived from rules that combine words, but from the specification or instantiation of syntactic schemata or configurations, often expressing some kind of semantic content independently of the specific words that appear in them. This approach is essentially equivalent to a system of phrase structure rules combined with a noncompositional semantic theory, since grammatical formalisms based on rewriting rules are generally equivalent in power to those based on substitution into schemata.

In this type of approach, instead of being derived from the application of a number of phrase structure rules, the sentence Colorless green ideas sleep furiously would be generated by filling the words into the slots of a schema having the following structure:

- [NP[ADJ N] VP[V] AP[ADV]]

The schema would express the following conceptual content:

- X DOES Y IN THE MANNER OF Z
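A schema-based generator fills slots rather than applying rules one at a time. The Python sketch below hard-codes the schema and its conceptual content as shown, and instantiates both at once. Two points are assumptions of this sketch, not claims about any particular framework: a slot may hold more than one word (so both adjectives fit the single ADJ slot), and X, Y and Z are bound to the words of the NP, VP and AP parts:

```python
# The schema [NP[ADJ N] VP[V] AP[ADV]] as ordered (phrase, slots) pairs.
SCHEMA = [("NP", ["ADJ", "N"]), ("VP", ["V"]), ("AP", ["ADV"])]
# Conceptual content; binding X, Y, Z to the NP, VP, AP words is an assumption.
MEANING = "{X} DOES {Y} IN THE MANNER OF {Z}"


def instantiate(fillers):
    """Fill every slot with its words; return (labeled bracketing, conceptual content).

    `fillers` maps each slot name to a list of words.
    """
    parts = []
    phrase_text = {}
    for phrase, slots in SCHEMA:
        inner = " ".join(f"{slot}[{' '.join(fillers[slot])}]" for slot in slots)
        parts.append(f"{phrase}[{inner}]")
        phrase_text[phrase] = " ".join(w for slot in slots for w in fillers[slot])
    bracketing = "[" + " ".join(parts) + "]"
    meaning = MEANING.format(X=phrase_text["NP"], Y=phrase_text["VP"], Z=phrase_text["AP"])
    return bracketing, meaning


if __name__ == "__main__":
    print(*instantiate({"ADJ": ["colorless", "green"], "N": ["ideas"],
                        "V": ["sleep"], "ADV": ["furiously"]}), sep="\n")
```

The structure and the meaning come from the schema itself; the words only occupy slots, which is the sense in which such models are non-compositional.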

Though they are non-compositional, such models are monotonic. This approach is highly developed within Construction Grammar[5] and has had some influence in Head-Driven Phrase Structure Grammar[6] and Lexical Functional Grammar,[7] the latter two clearly qualifying as phrase structure grammars.

Notes

- [1] For general discussions of phrase structure rules, see for instance Borsley (1991:34ff.), Brinton (2000:165), Falk (2001:46ff.).

- [2] Dependency grammars are associated above all with the work of Lucien Tesnière (1959).

- [3] See for instance Chomsky (1995).

- [4] The most comprehensive source on dependency grammar is Ágel et al. (2003/6).

- [5] Concerning Construction Grammar, see Goldberg (2006).

- [6] Concerning Head-Driven Phrase Structure Grammar, see Pollard and Sag (1994).

- [7] Concerning Lexical Functional Grammar, see Bresnan (2001).

References

- Ágel, V., Ludwig Eichinger, Hans-Werner Eroms, Peter Hellwig, Hans Heringer, and Hennig Lobin (eds.) 2003/6. Dependency and Valency: An International Handbook of Contemporary Research. Berlin: Walter de Gruyter.

- Borsley, R. 1991. Syntactic theory: A unified approach. London: Edward Arnold.

- Bresnan, J. 2001. Lexical-Functional Syntax.

- Brinton, L. 2000. The structure of modern English. Amsterdam: John Benjamins Publishing Company.

- Carnie, A. 2013. Syntax: A Generative Introduction, 3rd edition. Oxford: Blackwell Publishing.

- Chomsky, N. 1957. Syntactic Structures. The Hague/Paris: Mouton.

- Chomsky, N. 1995. The Minimalist Program. Cambridge, Mass.: The MIT Press.

- Falk, Y. 2001. Lexical-Functional Grammar: An introduction to parallel constraint-based syntax. Stanford, CA: CSLI Publications.

- Goldberg, A. 2006. Constructions at Work: The Nature of Generalization in Language. Oxford University Press.

- Pollard, C. and I. Sag 1994. Head-driven phrase structure grammar. Chicago: University of Chicago Press.

- Tesnière, L. 1959. Éléments de syntaxe structurale. Paris: Klincksieck.

Fundamentals

Definition and Purpose

Phrase structure rules are formal rewrite rules employed in generative grammar to generate hierarchical syntactic structures in natural language. These rules start from an initial non-terminal symbol, such as S (representing a sentence), and systematically expand non-terminal symbols into sequences of other non-terminals and terminal symbols until only terminals, corresponding to actual words, remain, thereby producing well-formed sentences while capturing the constituent structure of the language.[6]

The primary purpose of phrase structure rules is to model the combinatorial processes by which words form phrases and sentences in human languages, enabling the description of an infinite array of grammatical utterances from a finite set of rules. In generative linguistics, they provide a mechanistic framework for understanding syntax as a generative system, distinct from semantics or phonology, and serve as the foundational component for deriving the structural descriptions necessary for further linguistic analysis.[7][6]

Key components of phrase structure rules include non-terminal symbols, which denote syntactic categories like S for sentence or NP for noun phrase and can be further rewritten; terminal symbols, which are the lexical items or words that cannot be expanded; and the arrow notation (→), which specifies the rewriting operation, as in the general form X → Y Z, where X is replaced by the sequence Y Z.[8][7]

These rules were introduced by Noam Chomsky in the 1950s, particularly in his 1957 work Syntactic Structures, to provide a rigorous formalization of syntax that addressed the shortcomings of earlier approaches like immediate constituent analysis, which struggled with recursion, ambiguity, and structural dependencies by relying on overly simplistic or ad hoc divisions.[6]

Basic Examples

A foundational set of phrase structure rules for modeling simple English sentences, as proposed by Noam Chomsky in his seminal work Syntactic Structures (1957), includes the following: S → NP VP, NP → Det N, and VP → V NP, or VP → V for intransitive verbs. These rules specify how larger syntactic units, such as sentences (S), are rewritten as combinations of noun phrases (NP) and verb phrases (VP), with NPs expanding to determiners (Det) and nouns (N), and VPs to verbs (V) optionally followed by NPs.

To illustrate their application, consider the derivation of the sentence "The cat sleeps" using a top-down process. Start with the initial symbol S and apply the rules sequentially: (1) S → NP VP; (2) NP → Det N, where Det is realized as "the" and N as "cat"; (3) VP → V, where V is realized as "sleeps". This yields the terminal string "the cat sleeps", demonstrating how the rules generate a well-formed declarative sentence by successively substituting non-terminals with their constituents until only lexical items remain.[9]

Phrase structure rules also incorporate recursion to account for embedding, allowing unlimited complexity in syntactic structures. For example, extending the NP rule to NP → Det N PP (where PP is a prepositional phrase, rewritten as PP → P NP) enables constructions like "the cat on the mat", derived as NP ⇒ Det N PP ⇒ "the" "cat" P NP ⇒ "the" "cat" "on" "the mat" (with the inner NP realized as "the mat"). Because the PP contains another NP, which can in turn contain a PP, this recursive property permits nested phrases, as in "the cat on the mat in the house", reflecting the generative power of the system to produce hierarchically structured phrases of arbitrary depth.

However, simple phrase structure rules exhibit limitations in capturing certain phenomena, particularly structural ambiguity, where a single sentence admits multiple parses. For instance, "I saw the man with the telescope" can be derived in two ways: one attaching the prepositional phrase "with the telescope" to the verb phrase (meaning the speaker used a telescope to see the man), or to the noun phrase (meaning the man is holding a telescope).
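The two derivations of the telescope sentence can be counted mechanically. The sketch below is a CKY-style chart that records, for every span of words, how many distinct trees each category can have over it. The grammar and lexicon are hypothetical and kept in Chomsky normal form, so the sketch uses the recursive rule NP → NP PP in place of NP → Det N PP; that recursive rule is also what makes the noun-phrase attachment possible:

```python
from collections import defaultdict

# Hypothetical toy grammar in Chomsky normal form: binary rules A -> B C ...
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # PP attached to the verb phrase
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),   # PP attached to the noun phrase (recursive)
    ("PP", "P", "NP"),
]
# ... and lexical rules A -> word.
LEXICON = {
    "I": ["NP"],
    "saw": ["V"],
    "the": ["Det"],
    "man": ["N"],
    "telescope": ["N"],
    "with": ["P"],
}


def count_parses(words, start="S"):
    """Count distinct trees rooted in `start` that span all of `words`."""
    n = len(words)
    # chart[i][j][A] = number of trees with root A spanning words[i:j]
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for category in LEXICON.get(word, []):
            chart[i][i + 1][category] += 1
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):  # split point between the two daughters
                for parent, left, right in BINARY_RULES:
                    ways = chart[i][k][left] * chart[k][j][right]
                    if ways:
                        chart[i][j][parent] += ways
    return chart[0][n][start]


if __name__ == "__main__":
    print(count_parses("I saw the man with the telescope".split()))
```

For the full sentence the count is 2, one tree per attachment site, while "I saw the man" has exactly one parse. The chart shows that the rules license both structures; nothing in it chooses between them.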
Such ambiguities highlight the need for additional mechanisms beyond basic rules to fully describe English syntax: the rules generate multiple valid structures but do not by themselves specify which meaning is intended.[10]

Theoretical Foundations

Origins in Generative Grammar

Phrase structure rules were first systematically introduced by Noam Chomsky in his 1957 book Syntactic Structures, where they served as the core of the phrase-structure component in transformational-generative grammar.[11] These rules generate hierarchical syntactic structures by rewriting categories into sequences of constituents, providing a formal mechanism to describe the well-formed sentences of a language.[11] Chomsky positioned this generative approach as a departure from earlier taxonomic linguistics, aiming to model speakers' innate linguistic competence rather than merely classifying observed data.[11]

This innovation contrasted sharply with the Bloomfieldian structuralist tradition, exemplified by immediate constituent analysis, which focused on binary divisions of sentences into immediate constituents without formal generative procedures.[12] Chomsky argued that such methods, rooted in behaviorist principles, failed to capture the creative aspect of language use and the underlying regularities of syntax, as they lacked the predictive power needed for a full theory of grammar.[12] Instead, phrase structure rules enabled the explicit enumeration of possible structures, forming the input to transformational rules that derive actual utterances.[11]

Through the 1960s, Chomsky's framework evolved into what became known as the Standard Theory, detailed in Aspects of the Theory of Syntax (1965), where phrase structure rules were refined as part of the grammar's base component.[13] In this model, the rules generate deep structures (abstract representations tied to semantic interpretation) before transformations produce surface structures, addressing limitations in earlier formulations by incorporating lexical insertion and categorial specifications.[13] This development emphasized the rules' role in achieving descriptive adequacy and in explaining how languages conform to universal principles.[13]

The introduction and evolution of phrase structure rules profoundly influenced early computational linguistics, offering a rigorous basis for algorithmic sentence analysis in natural language processing systems of the 1960s.[14] Researchers adapted these rules to compile formal grammars for machine translation and parsing, as seen in efforts to verify syntactic consistency through computational derivation of phrase markers.[14] This integration bridged theoretical syntax with practical computation, laying groundwork for later developments in automated language understanding.[15]

Relation to Context-Free Grammars

Phrase structure rules form the core productions of context-free grammars (CFGs), defined as rules of the form A → α, where A is a single nonterminal symbol and α is a (possibly empty) string consisting of terminal and nonterminal symbols. These rules generate hierarchical constituent structures for sentences in natural languages, capturing the recursive nature of syntax without dependence on surrounding context. In generative linguistics, such rules directly correspond to the mechanisms of CFGs, enabling the formal description of phrase-level organizations like noun phrases and verb phrases.[16][17]

Within the Chomsky hierarchy of formal grammars, CFGs, and thus the phrase structure rules they employ, occupy Type-2, generating context-free languages that exhibit balanced nesting but no inherent sensitivity to arbitrary contexts. These languages are equivalently recognized by nondeterministic pushdown automata, which use a stack to manage recursive structures, providing a computational model for parsing syntactic hierarchies. The hierarchy positions Type-2 grammars between the more restrictive Type-3 regular grammars and the more permissive Type-1 context-sensitive grammars, highlighting the adequacy of phrase structure rules for modeling core aspects of human language syntax.[18][19]

The equivalence between phrase structure grammars and CFGs holds for grammars whose rules rewrite a single nonterminal regardless of its context; such grammars yield properly nested trees without crossing dependencies. Describing crossing dependencies would require formal power beyond Type-2, so standard linguistic applications of phrase structure rules avoid them to maintain context-free tractability. This equivalence is established by the direct mapping of phrase structure productions to CFG rules, with proofs relying on constructive conversions that preserve the generated languages.[20][17]

This formal connection has key implications for resolving syntactic ambiguity, as CFGs allow multiple valid derivations for the same string, corresponding to distinct phrase structures (e.g., "I saw the man with the telescope" admitting attachment ambiguities). Because a context-free grammar can assign several structures to one string, computational linguistics approaches rely on probabilistic parsing or additional constraints for disambiguation.[17]

Representation Methods

Rule Notation and Syntax

Phrase structure rules in generative linguistics are commonly notated using a convention akin to the Backus-Naur Form (BNF), originally developed for formal language description, where a non-terminal category on the left side of an arrow rewrites to a string of terminals and/or non-terminals on the right, expressed as X → Y Z.[7] This notation, adapted from computer science, allows for the recursive expansion of syntactic categories to generate well-formed sentences, with the arrow symbolizing derivation.[21] In this system, non-terminal symbols, which represent phrasal categories such as S (sentence) or NP (noun phrase), are conventionally written in uppercase letters, while terminals (words or morphemes like "the" or "dog") appear in lowercase or enclosed in quotation marks to distinguish lexical items from abstract categories.[7]

Variants of BNF notation accommodate linguistic complexities, such as optionality and repetition. Optional elements are often enclosed in parentheses, as in VP → V (NP), indicating that the noun phrase may or may not appear, while repetition is handled via the Kleene star symbol (*), denoting zero or more occurrences; in practice, however, linguists frequently use recursive rules (e.g., NP → NP PP) to capture iterative structures like stacked prepositional phrases.[7] These extensions maintain the formalism's precision while enabling concise representation of natural language variability.

To encode additional syntactic features or constituency, phrase structures are often depicted through labeled bracketing, a linear notation using square brackets with category labels, such as [S [NP [Det the] [N dog]] [VP [V chased] [NP [Det the] [N cat]]]], which mirrors hierarchical organization without visual trees.[22] This method shows dominance and immediate constituency relations directly in text form.

Notational practices vary across theoretical frameworks within generative grammar. In Generalized Phrase Structure Grammar (GPSG), rules incorporate feature specifications in which bar levels and features such as head (H) constrain expansions, and metarules generalize over sets of rules.[23] By contrast, the Minimalist Program largely eschews explicit rewrite rules in favor of bare phrase structure notation derived from the Merge operation, using set-theoretic representations to denote projections without bar levels or fixed schemata.[24]

Phrase Structure Trees

Phrase structure trees, also referred to as phrase markers, provide a graphical representation of the hierarchical organization of sentences according to phrase structure rules in generative grammar. Each node in the tree denotes a syntactic constituent, such as a phrase or word, while branches depict the expansion of non-terminal categories into their subconstituents via rule applications; the terminal nodes at the leaves correspond to lexical items or words in their surface order. This structure captures the recursive embedding of phrases, enabling a clear depiction of how rules generate well-formed sentences from an initial symbol like S (sentence).[25]

The construction of a phrase structure tree begins with the root node labeled S and proceeds downward by applying relevant rules to expand each non-terminal node until only terminals remain. For instance, a basic rule set might include S → NP VP, NP → Det N, and VP → V NP, leading to successive branchings that group words into phrases. Consider the sentence "The dog chased the cat": the tree starts with S branching to NP ("The dog") and VP ("chased the cat"); the first NP further branches to Det ("The") and N ("dog"); the VP branches to V ("chased") and another NP ("the cat"), which in turn branches to Det ("the") and N ("cat"). This results in a binary branching structure where all words appear as leaves from left to right, preserving their linear sequence.[26]

Key properties of phrase structure trees include constituency, dominance, and precedence. Constituency refers to the grouping of words into subtrees that function as single units, such as noun phrases or verb phrases, which can be tested through syntactic behaviors like substitution or movement. Dominance is the hierarchical relation in which a node dominates (contains) all descendant nodes in the subtree below it, ensuring that larger phrases encompass their internal components. Precedence captures the linear ordering: sister nodes (sharing the same parent) maintain the left-to-right sequence of their terminals, reflecting the word order of the language.[27]

These trees are instrumental in identifying ungrammaticality and structural ambiguity. A sentence is ungrammatical with respect to a grammar if no valid tree can be constructed under its rules, as the words fail to form coherent constituents. For structural ambiguity, multiple possible trees may exist for the same string, arising from different rule applications; a classic case is prepositional phrase (PP) attachment, as in "I saw the man with the telescope," where one tree attaches the PP to the verb phrase (indicating the speaker used a telescope) and another to the noun phrase (indicating the man held the telescope). Trees constructed via top-down derivation from phrase structure rules highlight such alternatives by showing distinct hierarchical attachments.[28]

Derivation and Parsing

Top-Down Derivation

Top-down derivation in phrase structure grammars begins with the start symbol, typically denoted as S (for sentence), and proceeds recursively by applying rewrite rules to expand non-terminal symbols into sequences of non-terminals and terminals until only terminal symbols remain, generating a complete sentence.[6] This process models the generative aspect of syntax, where abstract syntactic categories are successively refined to produce concrete linguistic forms.[13]

A representative example illustrates this algorithm using a simple set of phrase structure rules, such as S → NP VP, NP → Det N, VP → V NP, Det → the, N → man | ball, and V → hit. The derivation for the sentence "the man hit the ball" unfolds as follows:

- S

- NP VP (applying S → NP VP)

- Det N VP (applying NP → Det N)

- Det N V NP (applying VP → V NP)

- the N V NP (applying Det → the)

- the man V NP (applying N → man)

- the man hit NP (applying V → hit)

- the man hit Det N (applying NP → Det N)

- the man hit the N (applying Det → the)

- the man hit the ball (applying N → ball)
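The listed derivation can be replayed step by step. In this Python sketch the sequence of rule applications is supplied by hand (it is not chosen by a parser), and each rule rewrites the leftmost occurrence of its left-hand side; under that assumption the printed lines match the derivation above. The names `STEPS` and `derive` are illustrative:

```python
# The rule applications listed above, in order, as (left-hand side, right-hand side).
STEPS = [
    ("S", ["NP", "VP"]),
    ("NP", ["Det", "N"]),
    ("VP", ["V", "NP"]),
    ("Det", ["the"]),
    ("N", ["man"]),
    ("V", ["hit"]),
    ("NP", ["Det", "N"]),
    ("Det", ["the"]),
    ("N", ["ball"]),
]


def derive(steps, start="S"):
    """Apply each rule to the leftmost occurrence of its left-hand side.

    Returns every line of the derivation, starting with the start symbol.
    """
    form = [start]
    lines = [list(form)]
    for lhs, rhs in steps:
        i = form.index(lhs)  # raises ValueError if the symbol is not present
        form = form[:i] + list(rhs) + form[i + 1:]
        lines.append(list(form))
    return lines


if __name__ == "__main__":
    for line in derive(STEPS):
        print(" ".join(line))
```

The derivation stops once every symbol is a terminal; a full generator would also have to choose which rule to apply at each step, for example between N → man and N → ball.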

Bottom-Up Parsing

Bottom-up parsing with phrase structure rules constructs syntactic structure by starting from the terminal symbols (words) of an input sentence and progressively combining them into larger non-terminal constituents using the inverse of production rules, until the start symbol S is reached. This approach reverses the generative process defined by the rules, recognizing sequences that match the right-hand sides (RHS) of productions and replacing them with the corresponding left-hand side (LHS) non-terminal. Unlike generative derivation, it analyzes surface forms to build phrase structure trees incrementally from the bottom up, providing a foundation for computational syntax analysis in context-free grammars underlying phrase structure rules.[30]

A simple example illustrates the process for the sentence "The cat sleeps," using the following phrase structure rules (the unary rule VP → V means this grammar is not strictly in Chomsky normal form):

S → NP VP

NP → Det N

VP → V

Det → the

N → cat

V → sleeps
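A naive shift-reduce recognizer shows the bottom-up direction on this grammar. The sketch shifts one word at a time onto a stack and reduces greedily whenever the top of the stack matches a right-hand side. This greedy strategy is an assumption that happens to work for this tiny grammar; it is not a general parser, since with a rule such as VP → V NP it could reduce V to VP too early, which is why practical bottom-up parsers use lookahead or charts. The names are illustrative:

```python
# The grammar above as (left-hand side, right-hand side) pairs.
RULES = [
    ("S", ("NP", "VP")),
    ("NP", ("Det", "N")),
    ("VP", ("V",)),
    ("Det", ("the",)),
    ("N", ("cat",)),
    ("V", ("sleeps",)),
]


def shift_reduce(words, start="S"):
    """Greedy shift-reduce recognition; returns (accepted, trace of actions)."""
    stack, trace = [], []
    for word in words:
        stack.append(word)
        trace.append(("shift", word, tuple(stack)))
        reduced = True
        while reduced:  # reduce as long as some right-hand side matches the stack top
            reduced = False
            for lhs, rhs in RULES:
                n = len(rhs)
                if tuple(stack[-n:]) == rhs:
                    stack[-n:] = [lhs]
                    trace.append(("reduce", f"{lhs} -> {' '.join(rhs)}", tuple(stack)))
                    reduced = True
                    break
    return stack == [start], trace


if __name__ == "__main__":
    accepted, trace = shift_reduce(["the", "cat", "sleeps"])
    for action in trace:
        print(*action)
    print("accepted:", accepted)
```

The trace ends with the reduction S → NP VP leaving only S on the stack, which is the point at which the sentence is recognized.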


Advanced Concepts

X-bar Theory

X-bar theory represents a significant extension of basic phrase structure rules within generative grammar, introducing a hierarchical template that captures the universal structure of phrases across languages. Developed by Noam Chomsky in the 1970s, particularly in his 1970 work "Remarks on Nominalization," the theory aimed to formalize the endocentric nature of phrases—where every phrase is built around a head—and to account for cross-linguistic similarities in how specifiers, heads, complements, and adjuncts are organized.[34] This approach reformulated earlier ideas from Zellig Harris on category structures while emphasizing that phrases are not arbitrary but follow a consistent schema that generalizes across syntactic categories like nouns, verbs, and prepositions.[35] Ray Jackendoff further elaborated these ideas in his 1977 monograph, providing detailed applications and refinements that solidified X-bar as a core component of transformational syntax.[36] The core principles of X-bar theory are expressed through a set of generalized phrase structure rules, known as the X-bar schema, which impose a three-level hierarchy on phrases using bar notation to indicate projection levels: X^0 (the head), X' (the intermediate projection), and XP or X'' (the maximal projection). 
The basic schema consists of the following rules:

XP → (Specifier) X'

X' → Adjunct X' (or X' → X' Adjunct)

X' → X^0 (Complement)

Here, the head X^0 (e.g., a noun or verb) projects to X', which can combine with a complement (a phrase selected by the head) or an adjunct (a modifier that adds information without being subcategorized), and the full XP includes an optional specifier (often a phrase providing additional specification, like a determiner).[35] These rules replace language-specific rewrite rules with a universal template, allowing recursion through multiple adjuncts or specifiers in some extensions, while ensuring endocentricity by requiring every phrase to have a head of the same category.[36] The bar levels (0, 1, 2) distinguish the lexical head from its phrasal projections, providing a uniform way to represent constituency that extends basic phrase structure trees by adding intermediate structure.[35]

In application to English phrases, X-bar theory structures noun phrases (NPs) as N-bar projections, where the noun serves as the head, complemented by phrases like prepositional phrases, modified by adjuncts such as adjectives or relative clauses, and specified by determiners in the specifier position. For example, in the phrase "the old house near the river," "house" is the N^0 head, "old" is an AP adjunct to N', "near the river" is a PP that the noun does not select and is therefore likewise an adjunct attached to N', and "the" occupies Spec-NP as a specifier.[37] This N-bar structure captures how modifiers and specifiers cluster around the head, explaining phenomena like word order constraints and agreement without proliferating idiosyncratic rules.
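Since the schema is the same for every category, one function can build any XP. This Python sketch produces a labeled bracketing from a head, an optional specifier, an optional complement, and adjunct lists, following the three rules given above. The function name and parameters are hypothetical, daughters are passed as ready-made bracket strings, and attaching left-hand adjuncts above right-hand ones is an arbitrary choice of the sketch:

```python
def xbar(cat, head, spec=None, complement=None, left_adjuncts=(), right_adjuncts=()):
    """Build a labeled bracketing for an XP of category `cat` following the X-bar schema."""
    # X' -> X^0 (Complement): the head and its complement are sisters.
    bar = f"[{cat}' [{cat}0 {head}]" + (f" {complement}" if complement else "") + "]"
    # X' -> X' Adjunct: each right-hand adjunct adds another X' layer.
    for adjunct in right_adjuncts:
        bar = f"[{cat}' {bar} {adjunct}]"
    # X' -> Adjunct X': reversed so that the first adjunct ends up outermost (leftmost).
    for adjunct in reversed(left_adjuncts):
        bar = f"[{cat}' {adjunct} {bar}]"
    # XP -> (Specifier) X'
    return f"[{cat}P " + (f"{spec} " if spec else "") + bar + "]"


if __name__ == "__main__":
    # An NP with a specifier and one adjunct, and a PP whose head selects an NP complement.
    print(xbar("N", "house", spec="[Det the]", left_adjuncts=["[AP old]"]))
    print(xbar("P", "near", complement="[NP the river]"))
```

The same function yields VPs, APs and PPs by changing only `cat`, which is the cross-categorial uniformity the theory claims. Whether a PP inside an NP attaches as a sister of N^0 or of N' is decided by the caller through `complement` versus `right_adjuncts`.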
Similar patterns apply to verb phrases (VPs), adjective phrases (APs), and prepositional phrases (PPs), demonstrating the theory's cross-categorial uniformity in English syntax.[36]

Despite its influence, X-bar theory has faced criticisms for potential overgeneration, as the recursive adjunction rule (X' → Adjunct X') permits unlimited stacking of modifiers without inherent limits, leading to structurally possible but ungrammatical or semantically implausible outputs unless constrained by other grammatical modules like theta-theory or binding principles.[38] Additionally, the fixed three-level hierarchy (X^0, X', XP) has been seen as overly rigid, failing to accommodate variations in phrase complexity across languages. These issues prompted revisions in Chomsky's Minimalist Program (1995), which replaces the X-bar schema with "bare phrase structure," eliminating bar levels and fixed templates in favor of a simpler Merge operation that builds phrases dynamically from lexical items, deriving endocentricity from general computational principles rather than stipulated rules.[24]

Head-Driven Phrase Structure Grammar

Head-Driven Phrase Structure Grammar (HPSG) is a constraint-based grammatical framework developed by Carl Pollard and Ivan Sag, initially presented in their 1987 work as an evolution of phrase structure rules that prioritizes lexical information and feature unification over transformational operations. In HPSG, syntactic structures are represented as typed feature structures, where phrase structure rules function as declarative constraints that enforce compatibility between daughters and the mother node through unification, rather than generative rewriting.[39] This approach draws on X-bar theory's emphasis on headedness but extends it by making all constraints, including those on phrase structure, lexically driven and non-transformational.

A core mechanism in HPSG is the Head Feature Principle (the counterpart of the Head Feature Convention of GPSG), which stipulates that the head features of a phrasal category are identical to those of its head daughter, ensuring that properties like part of speech and agreement percolate from the head without additional stipulations.[39] Subcategorization is integrated via the valence features, which list required complements and specifiers in lexical entries, allowing the grammar to license structures based on lexical specifications rather than separate rules. Representative schemas include the Head-Complement Schema, which combines a head with zero or more complements satisfying its valence requirements, and the Head-Specifier Schema, which combines a head with its specifier:

Head-Complement Schema:

SYNSEM | LOC | CAT | HEAD → HD

SYNSEM | LOC | CAT | VAL | COMPS ⊇ CMP

DTRS < HD, CMP₁, ..., CMPₙ >

Head-Specifier Schema:

SYNSEM | LOC | CAT | HEAD → HD

SYNSEM | LOC | CAT | VAL | SPR ⊇ SPR₁

DTRS < SPR₁, HD >
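The interplay of unification, valence and head-feature sharing can be approximated in a few lines. This Python sketch uses plain nested dictionaries in place of typed feature structures and copies values instead of modeling structure sharing (reentrancy), so it is a simplification rather than an HPSG implementation. The feature names POS, VFORM and AGR, the toy entries, and the function `head_complement` are illustrative assumptions:

```python
def unify(a, b):
    """Unify two feature structures (nested dicts or atomic values).

    Returns the combined structure, or None when two values clash.
    """
    if isinstance(a, dict) and isinstance(b, dict):
        result = dict(a)
        for feature, value in b.items():
            if feature in result:
                merged = unify(result[feature], value)
                if merged is None:
                    return None
                result[feature] = merged
            else:
                result[feature] = value
        return result
    return a if a == b else None


def head_complement(head, complements):
    """Hypothetical Head-Complement combination over simplified, untyped structures."""
    required = head["COMPS"]
    if len(required) != len(complements):
        return None  # every complement requirement must be met
    for requirement, complement in zip(required, complements):
        if unify(requirement, complement) is None:
            return None  # the complement is incompatible with the requirement
    # Head feature sharing: the mother's HEAD value is the head daughter's HEAD value.
    return {"HEAD": head["HEAD"], "SPR": head["SPR"], "COMPS": []}


# Toy lexical entries (feature names and values are illustrative assumptions).
CHASED = {
    "HEAD": {"POS": "verb", "VFORM": "fin"},
    "SPR": [{"HEAD": {"POS": "noun"}}],
    "COMPS": [{"HEAD": {"POS": "noun"}}],
}
THE_CAT = {"HEAD": {"POS": "noun", "AGR": "3sg"}, "SPR": [], "COMPS": []}

if __name__ == "__main__":
    print(head_complement(CHASED, [THE_CAT]))
```

Combining `CHASED` with `THE_CAT` yields a phrase whose HEAD value is the verb's and whose COMPS list is empty, while its SPR requirement is still open for a Head-Specifier combination; offering an adjective-headed complement fails unification and returns None.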
