
| byte | |
|---|---|
| Unit system | unit derived from bit |
| Unit of | digital information, data size |
| Symbol | B, o (when 8 bits) |

The byte is a unit of digital information that most commonly consists of eight bits. Historically, the byte was the number of bits used to encode a single character of text in a computer[1][2] and for this reason it is the smallest addressable unit of memory in many computer architectures. To disambiguate arbitrarily sized bytes from the common 8-bit definition, network protocol documents such as the Internet Protocol (RFC 791) refer to an 8-bit byte as an octet.[3] Those bits in an octet are usually counted with numbering from 0 to 7 or 7 to 0 depending on the bit endianness.

The size of the byte has historically been hardware-dependent and no definitive standards existed that mandated the size. Sizes from 1 to 48 bits have been used.[4][5][6][7] The six-bit character code was an often-used implementation in early encoding systems, and computers using six-bit and nine-bit bytes were common in the 1960s. These systems often had memory words of 12, 18, 24, 30, 36, 48, or 60 bits, corresponding to 2, 3, 4, 5, 6, 8, or 10 six-bit bytes, and persisted, in legacy systems, into the twenty-first century. In this era, bit groupings in the instruction stream were often referred to as syllables[a] or slab, before the term byte became common.

The modern de facto standard of eight bits, as documented in ISO/IEC 2382-1:1993, is a convenient power of two permitting the binary-encoded values 0 through 255 for one byte, as 2 to the power of 8 is 256.[8] The international standard IEC 80000-13 codified this common meaning. Many types of applications use information representable in eight or fewer bits and processor designers commonly optimize for this usage. The popularity of major commercial computing architectures has aided in the ubiquitous acceptance of the 8-bit byte.[9] Modern architectures typically use 32- or 64-bit words, built of four or eight bytes, respectively.
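As a quick check of the arithmetic above, the following Python sketch counts the values an eight-bit byte can hold and the number of bytes in 32- and 64-bit words. The names `BITS_PER_BYTE` and `bytes_per_word` are illustrative only and do not come from any standard.

```python
BITS_PER_BYTE = 8  # the common modern definition

# An n-bit field can take 2**n distinct values.
distinct_values = 2 ** BITS_PER_BYTE
print(f"{distinct_values} values, from 0 to {distinct_values - 1}")


def bytes_per_word(word_bits: int) -> int:
    """Number of 8-bit bytes in a word of the given width."""
    return word_bits // BITS_PER_BYTE


for word_bits in (32, 64):
    print(f"{word_bits}-bit word = {bytes_per_word(word_bits)} bytes")

# Python's bytes type enforces the same 0..255 range per element.
try:
    bytes([256])
except ValueError:
    print("256 does not fit in one byte")
```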

The unit symbol for the byte was designated as the upper-case letter B by the International Electrotechnical Commission (IEC) and Institute of Electrical and Electronics Engineers (IEEE).[10] Internationally, the unit octet explicitly defines a sequence of eight bits, eliminating the potential ambiguity of the term "byte".[11][12] The symbol for octet, 'o', also conveniently eliminates the ambiguity in the symbol 'B' between byte and bel.

Etymology and history

The term byte was coined by Werner Buchholz in June 1956,[4][13][14][b] during the early design phase for the IBM Stretch[15][16][1][13][14][17][18] computer, which had addressing to the bit and variable field length (VFL) instructions with a byte size encoded in the instruction.[13] It is a deliberate respelling of bite to avoid accidental mutation to bit.[1][13][19][c]

Another origin of byte for bit groups smaller than a computer's word size, and in particular groups of four bits, is on record by Louis G. Dooley, who claimed he coined the term while working with Jules Schwartz and Dick Beeler on an air defense system called SAGE at MIT Lincoln Laboratory in 1956 or 1957, which was jointly developed by Rand, MIT, and IBM.[20][21] Later on, Schwartz's language JOVIAL actually used the term, but the author recalled vaguely that it was derived from AN/FSQ-31.[22][21]

Early computers used a variety of four-bit binary-coded decimal (BCD) representations and the six-bit codes for printable graphic patterns common in the U.S. Army (FIELDATA) and Navy. These representations included alphanumeric characters and special graphical symbols. These sets were expanded in 1963 to seven bits of coding, called the American Standard Code for Information Interchange (ASCII) as the Federal Information Processing Standard, which replaced the incompatible teleprinter codes in use by different branches of the U.S. government and universities during the 1960s. ASCII included the distinction of upper- and lowercase alphabets and a set of control characters to facilitate the transmission of written language as well as printing device functions, such as page advance and line feed, and the physical or logical control of data flow over the transmission media.[18] During the early 1960s, while also active in ASCII standardization, IBM simultaneously introduced in its product line of System/360 the eight-bit Extended Binary Coded Decimal Interchange Code (EBCDIC), an expansion of their six-bit binary-coded decimal (BCDIC) representations[d] used in earlier card punches.[23] The prominence of the System/360 led to the ubiquitous adoption of the eight-bit storage size,[18][16][13] while in detail the EBCDIC and ASCII encoding schemes are different.

In the early 1960s, AT&T introduced digital telephony on long-distance trunk lines. These used the eight-bit μ-law encoding. This large investment promised to reduce transmission costs for eight-bit data.

In Volume 1 of The Art of Computer Programming (first published in 1968), Donald Knuth uses byte in his hypothetical MIX computer to denote a unit which "contains an unspecified amount of information ... capable of holding at least 64 distinct values ... at most 100 distinct values. On a binary computer a byte must therefore be composed of six bits".[24] He notes that "Since 1975 or so, the word byte has come to mean a sequence of precisely eight binary digits...When we speak of bytes in connection with MIX we shall confine ourselves to the former sense of the word, harking back to the days when bytes were not yet standardized."[24]

The development of eight-bit microprocessors in the 1970s popularized this storage size. Microprocessors such as the Intel 8080, the direct predecessor of the 8086, could also perform a small number of operations on the four-bit pairs in a byte, such as the decimal-add-adjust (DAA) instruction. A four-bit quantity is often called a nibble, also nybble, which is conveniently represented by a single hexadecimal digit.
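The relationship between a byte, its two nibbles, and hexadecimal digits can be sketched in a few lines of Python. This is only an illustration of the bit arithmetic; the helper name `split_nibbles` is hypothetical and is not how any particular processor exposes the operation.

```python
def split_nibbles(byte: int) -> tuple[int, int]:
    """Return the (high, low) four-bit halves of an 8-bit value."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value in 0..255")
    return byte >> 4, byte & 0x0F


high, low = split_nibbles(0xA7)
# Each nibble maps to exactly one hexadecimal digit.
print(f"{high:X} {low:X}")  # prints "A 7"
```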

The term octet unambiguously specifies a size of eight bits.[18][12] It is used extensively in protocol definitions.

Historically, the term octad or octade was also used to denote eight bits, at least in Western Europe;[25][26] however, this usage is no longer common. The exact origin of the term is unclear, but it can be found in British, Dutch, and German sources of the 1960s and 1970s, and throughout the documentation of Philips mainframe computers.

Unit symbol

The unit symbol for the byte is specified in IEC 80000-13, IEEE 1541 and the Metric Interchange Format[10] as the upper-case character B.

In the International System of Quantities (ISQ), B is also the symbol of the bel, a unit of logarithmic power ratio named after Alexander Graham Bell, creating a conflict with the IEC specification. However, little danger of confusion exists, because the bel is a rarely used unit. It is used primarily in its decadic fraction, the decibel (dB), for signal strength and sound pressure level measurements, while a unit for one-tenth of a byte, the decibyte, and other fractions, are only used in derived units, such as transmission rates.

The lowercase letter o is defined as the symbol for octet in IEC 80000-13 and is commonly used in languages such as French[27] and Romanian. It is also combined with metric prefixes for multiples, for example ko and Mo.

Multiple-byte units


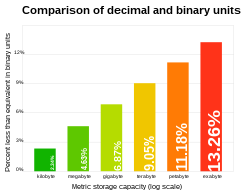

More than one system exists to define unit multiples based on the byte. Some systems are based on powers of 10, following the International System of Units (SI), which defines for example the prefix kilo as 1000 (10³); other systems are based on powers of two. Nomenclature for these systems has led to confusion. Systems based on powers of 10 use standard SI prefixes (kilo, mega, giga, ...) and their corresponding symbols (k, M, G, ...). Systems based on powers of 2, however, might use binary prefixes (kibi, mebi, gibi, ...) and their corresponding symbols (Ki, Mi, Gi, ...) or they might use the prefixes K, M, and G, creating ambiguity when the prefixes M or G are used.

While the difference between the decimal and binary interpretations is relatively small for the kilobyte (about 2% smaller than the kibibyte), the systems deviate increasingly as units grow larger (the relative deviation grows by 2.4% for each three orders of magnitude). For example, a power-of-10-based terabyte is about 9% smaller than power-of-2-based tebibyte.
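The growing gap between the two interpretations can be reproduced with a short calculation. The sketch below, with the illustrative function name `shortfall_percent`, computes how much smaller each power-of-10 unit is than its power-of-2 counterpart.

```python
PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]


def shortfall_percent(n: int) -> float:
    """Percent by which 1000**n is smaller than 1024**n."""
    return (1 - 1000**n / 1024**n) * 100


for n, name in enumerate(PREFIXES, start=1):
    print(f"{name}byte: {shortfall_percent(n):.1f}% smaller than the binary unit")
```

For n = 1 this gives roughly 2.3% and for n = 4 roughly 9.1%, in line with the kilobyte and terabyte figures quoted above.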

Units based on powers of 10

Definition of prefixes using powers of 10, in which 1 kilobyte (symbol kB) is defined to equal 1,000 bytes, is recommended by the International Electrotechnical Commission (IEC).[28] The IEC standard defines eight such multiples, up to 1 yottabyte (YB), equal to 1000⁸ bytes.[29] The additional prefixes ronna- for 1000⁹ and quetta- for 1000¹⁰ were adopted by the International Bureau of Weights and Measures (BIPM) in 2022.[30][31]

This definition is most commonly used for data-rate units in computer networks, internal bus, hard drive and flash media transfer speeds, and for the capacities of most storage media, particularly hard drives,[32] flash-based storage,[33] and DVDs.[34] Operating systems that use this definition include macOS,[35] iOS,[35] Ubuntu,[36] and Debian.[37] It is also consistent with the other uses of the SI prefixes in computing, such as CPU clock speeds or measures of performance.

The IBM System/360 and the related disk and tape systems set the byte at 8 bits and documented capacities in decimal units.[38] The early 8-, 5.25- and 3.5-inch floppies gave capacities in multiples of 1024, using "KB" rather than the more accurate "KiB". The later, larger, 8-, 5.25- and 3.5-inch floppies gave capacities in a hybrid notation, i.e., multiples of 1,024,000, using "KB" = 1024 B and "MB" = 1,024,000 B. Early 5.25-inch disks used decimal even though they used 128-byte and 256-byte sectors.[39] Hard disks used mostly 256-byte and then 512-byte blocks before 4096-byte blocks became standard.[40] RAM was always sold in powers of 2.

Units based on powers of 2

A system of units based on powers of 2 in which 1 kibibyte (KiB) is equal to 1,024 (i.e., 2¹⁰) bytes is defined by international standard IEC 80000-13 and is supported by national and international standards bodies (BIPM, IEC, NIST). The IEC standard defines eight such multiples, up to 1 yobibyte (YiB), equal to 1024⁸ bytes. The natural binary counterparts to ronna- and quetta- were given in a consultation paper of the International Committee for Weights and Measures' Consultative Committee for Units (CCU) as robi- (Ri, 1024⁹) and quebi- (Qi, 1024¹⁰), but have not yet been adopted by the IEC or ISO.[41]

An alternative system of nomenclature for the same units (referred to here as the customary convention), in which 1 kilobyte (KB) is equal to 1,024 bytes,[42][43][44] 1 megabyte (MB) is equal to 1024² bytes and 1 gigabyte (GB) is equal to 1024³ bytes, is mentioned by a 1990s JEDEC standard. Only the first three multiples (up to GB) are mentioned by the JEDEC standard, which makes no mention of TB and larger. While confusing and incorrect,[45] the customary convention is used by the Microsoft Windows operating system[46] and for random-access memory capacity, such as main memory and CPU cache size, and in marketing and billing by telecommunication companies, such as Vodafone,[47] AT&T,[48] Orange[49] and Telstra.[50]

For storage capacity, the customary convention was used by macOS and iOS through Mac OS X 10.5 Leopard and iOS 10, after which they switched to units based on powers of 10.[35]

Parochial units

Various computer vendors have coined terms for data of various sizes, sometimes with different sizes for the same term even within a single vendor. These terms include double word, half word, long word, quad word, slab, superword and syllable. There are also informal terms, e.g., half byte and nybble for 4 bits, and octal K for 1000₈.

History of the conflicting definitions


When I see a disk advertised as having a capacity of one megabyte, what is this telling me? There are three plausible answers, and I wonder if anybody knows which one is correct ... Now this is not a really vital issue, as there is just under 5% difference between the smallest and largest alternatives. Nevertheless, it would [be] nice to know what the standard measure is, or if there is one.

— Allan D. Pratt of Small Computers in Libraries, 1982[51]

Contemporary[e] computer memory has a binary architecture making a definition of memory units based on powers of 2 most practical. The use of the metric prefix kilo for binary multiples arose as a convenience, because 1024 is approximately 1000.[27] This definition was popular in early decades of personal computing, with products like the Tandon 5¼-inch DD floppy format (holding 368640 bytes) being advertised as "360 KB", following the 1024-byte convention. It was not universal, however. The Shugart SA-400 5¼-inch floppy disk held 109,375 bytes unformatted,[52] and was advertised as "110 Kbyte", using the 1000 convention.[53] Likewise, the 8-inch DEC RX01 floppy (1975) held 256256 bytes formatted, and was advertised as "256k".[54] Some devices were advertised using a mixture of the two definitions: most notably, floppy disks advertised as "1.44 MB" have an actual capacity of 1440 KiB, the equivalent of 1.47 MB or 1.41 MiB.
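The mixed convention behind the "1.44 MB" floppy can be worked through numerically. The following sketch uses only the figures quoted above; the variable names are illustrative.

```python
# A "1.44 MB" floppy holds 1440 KiB, i.e. 1440 blocks of 1024 bytes.
capacity_bytes = 1440 * 1024

decimal_mb = capacity_bytes / 1000**2       # SI megabytes
binary_mib = capacity_bytes / 1024**2       # mebibytes
hybrid_mb = capacity_bytes / (1000 * 1024)  # the mixed unit used in marketing

print(f"{capacity_bytes} bytes")
print(f"{decimal_mb:.2f} MB (decimal)")
print(f"{binary_mib:.2f} MiB (binary)")
print(f"{hybrid_mb:.2f} 'MB' (hybrid, 1,024,000 bytes each)")

# The Tandon "360 KB" format follows the pure 1024-byte convention.
print(368640 // 1024)
```

Only the hybrid unit of 1,024,000 bytes reproduces the advertised figure of 1.44.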

In 1995, the International Union of Pure and Applied Chemistry's (IUPAC) Interdivisional Committee on Nomenclature and Symbols attempted to resolve this ambiguity by proposing a set of binary prefixes for the powers of 1024, including kibi (kilobinary), mebi (megabinary), and gibi (gigabinary).[55][56]

In December 1998, the IEC addressed such multiple usages and definitions by adopting the IUPAC's proposed prefixes (kibi, mebi, gibi, etc.) to unambiguously denote powers of 1024.[57] Thus one kibibyte (1 KiB) is 1024¹ bytes = 1024 bytes, one mebibyte (1 MiB) is 1024² bytes = 1048576 bytes, and so on.
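To show how the two prefix families label the same quantity differently, here is a minimal formatting sketch. The function `format_size` and its `binary` flag are hypothetical names chosen for this example and do not correspond to any standard library API.

```python
SI_UNITS = ["B", "kB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]
IEC_UNITS = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]


def format_size(n: int, binary: bool = False) -> str:
    """Format a byte count with SI (base 1000) or IEC (base 1024) prefixes."""
    base, units = (1024, IEC_UNITS) if binary else (1000, SI_UNITS)
    value = float(n)
    index = 0
    # Divide down until the value fits the current unit or units run out.
    while value >= base and index < len(units) - 1:
        value /= base
        index += 1
    return f"{value:.2f} {units[index]}"


print(format_size(1048576, binary=True))  # prints "1.00 MiB"
print(format_size(1048576))               # prints "1.05 MB"
```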

In 1999, Donald Knuth suggested calling the kibibyte a "large kilobyte" (KKB).[58]

Modern standard definitions

The IEC adopted the IUPAC proposal and published the standard in January 1999.[59][60] The IEC prefixes are part of the International System of Quantities. The IEC further specified that the kilobyte should only be used to refer to 1000 bytes.[61]

Lawsuits over definition

Lawsuits arising from alleged consumer confusion over the binary and decimal definitions of multiples of the byte have generally ended in favor of the manufacturers, with courts holding that the legal definition of gigabyte or GB is 1 GB = 1000000000 (10⁹) bytes (the decimal definition), rather than the binary definition (2³⁰, i.e., 1073741824). Specifically, the United States District Court for the Northern District of California held that "the U.S. Congress has deemed the decimal definition of gigabyte to be the 'preferred' one for the purposes of 'U.S. trade and commerce' [...] The California Legislature has likewise adopted the decimal system for all 'transactions in this state.'"[62]

Earlier lawsuits had ended in settlement with no court ruling on the question, such as a lawsuit against drive manufacturer Western Digital.[63][64] Western Digital settled the challenge and added explicit disclaimers to products that the usable capacity may differ from the advertised capacity.[63] Seagate was sued on similar grounds and also settled.[63][65]

Practical examples

| Unit | Approximate equivalent |
|---|---|
| bit | a Boolean variable indicating true (1) or false (0) |
| byte | a basic Latin character |
| kilobyte | text of "Jabberwocky" |
| | a typical favicon |
| megabyte | text of Harry Potter and the Goblet of Fire[66] |
| gigabyte | about half an hour of DVD video[67] |
| | CD-quality uncompressed audio of The Lamb Lies Down on Broadway |
| terabyte | the largest consumer hard drive in 2007[68] |
| | 75 hours of video, encoded at 30 Mbit/second |
| petabyte | 2000 years of MP3-encoded music[69] |
| exabyte | global monthly Internet traffic in 2004[70] |
| zettabyte | global yearly Internet traffic in 2016 (known as the Zettabyte Era)[71] |

Common uses

Many programming languages define the data type byte.

The C and C++ programming languages define byte as an "addressable unit of data storage large enough to hold any member of the basic character set of the execution environment" (clause 3.6 of the C standard). The C standard requires that the integral data type unsigned char must hold at least 256 different values, and is represented by at least eight bits (clause 5.2.4.2.1). Various implementations of C and C++ reserve 8, 9, 16, 32, or 36 bits for the storage of a byte.[72][73][f] In addition, the C and C++ standards require that there be no gaps between two bytes. This means every bit in memory is part of a byte.[74]

Java's primitive data type byte is defined as eight bits. It is a signed data type, holding values from −128 to 127.

.NET programming languages, such as C#, define byte as an unsigned type holding values from 0 to 255, and sbyte as a signed type holding values from −128 to 127.
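The signed and unsigned ranges described above are two readings of the same eight bits. The Python sketch below interprets a few raw byte values both ways; it illustrates two's-complement interpretation in general and is not code from Java or .NET.

```python
raw = bytes([0xFF, 0x80, 0x7F])

# Iterating over a bytes object yields unsigned values in 0..255.
unsigned = list(raw)

# Reinterpret each byte as a signed two's-complement value in -128..127.
signed = [int.from_bytes(bytes([b]), "big", signed=True) for b in raw]

for u, s in zip(unsigned, signed):
    print(f"bits {u:08b}: unsigned {u}, signed {s}")
```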

In data transmission systems, the byte is used as a contiguous sequence of bits in a serial data stream, representing the smallest distinguished unit of data. For asynchronous communication a full transmission unit usually additionally includes a start bit, 1 or 2 stop bits, and possibly a parity bit, and thus its size may vary from seven to twelve bits for five to eight bits of actual data.[75] For synchronous communication the error checking usually uses bytes at the end of a frame.
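The seven-to-twelve-bit range for an asynchronous transmission unit follows from adding up the framing bits. A minimal sketch, assuming exactly one start bit and using the hypothetical helper name `frame_bits`:

```python
def frame_bits(data_bits: int, parity: bool = False, stop_bits: int = 1) -> int:
    """Total bits on the wire for one asynchronous character frame."""
    if data_bits not in (5, 6, 7, 8):
        raise ValueError("data_bits must be between 5 and 8")
    if stop_bits not in (1, 2):
        raise ValueError("stop_bits must be 1 or 2")
    start_bit = 1  # assumption: a single start bit
    return start_bit + data_bits + (1 if parity else 0) + stop_bits


print(frame_bits(5))                            # smallest frame: 7 bits
print(frame_bits(8, parity=True, stop_bits=2))  # largest frame: 12 bits
print(frame_bits(8))                            # 8 data bits, no parity, 1 stop bit: 10 bits
```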

Notes

- ^ The term syllable was used for bytes containing instructions or constituents of instructions, not for data bytes.

- ^ Many sources erroneously date the term byte to July 1956, but Werner Buchholz stated that it was coined in June 1956. The earliest document supporting this dates from 1956-06-11. Buchholz stated that the transition to 8-bit bytes was conceived in August 1956, but the earliest document found using this notion dates from September 1956.

- ^ Some later machines, e.g., Burroughs B1700, CDC 3600, DEC PDP-6, DEC PDP-10 had the ability to operate on arbitrary bytes no larger than the word size.

- ^ There was more than one BCD code page.

- ^ Through the 1970s there were machines with decimal architectures.

- ^ The actual number of bits in a particular implementation is documented as CHAR_BIT as implemented in the file limits.h.

References

- ^ a b c

Blaauw, Gerrit Anne; Brooks, Jr., Frederick Phillips; Buchholz, Werner (1962), "Chapter 4: Natural Data Units" (PDF), in Buchholz, Werner (ed.), Planning a Computer System - Project Stretch, McGraw-Hill Book Company, Inc. / The Maple Press Company, York, PA., pp. 39–40, LCCN 61-10466, archived from the original (PDF) on 2017-04-03, retrieved 2017-04-03

Terms used here to describe the structure imposed by the machine design, in addition to bit, are listed below.

Byte denotes a group of bits used to encode a character, or the number of bits transmitted in parallel to and from input-output units. A term other than character is used here because a given character may be represented in different applications by more than one code, and different codes may use different numbers of bits (i.e., different byte sizes). In input-output transmission the grouping of bits may be completely arbitrary and have no relation to actual characters. (The term is coined from bite, but respelled to avoid accidental mutation to bit.)

A word consists of the number of data bits transmitted in parallel from or to memory in one memory cycle. Word size is thus defined as a structural property of the memory. (The term catena was coined for this purpose by the designers of the Bull GAMMA 60 computer.)

Block refers to the number of words transmitted to or from an input-output unit in response to a single input-output instruction. Block size is a structural property of an input-output unit; it may have been fixed by the design or left to be varied by the program.

- ^ Bemer, Robert William (1959), "A proposal for a generalized card code of 256 characters", Communications of the ACM, 2 (9): 19–23, doi:10.1145/368424.368435, S2CID 36115735

- ^

Postel, J. (September 1981). Internet Protocol DARPA INTERNET PROGRAM PROTOCOL SPECIFICATION. p. 43. doi:10.17487/RFC0791. RFC 791. Retrieved 28 August 2020.

octet An eight bit byte.

- ^ a b

Buchholz, Werner (1956-06-11). "7. The Shift Matrix" (PDF). The Link System. IBM. pp. 5–6. Stretch Memo No. 39G. Archived from the original (PDF) on 2017-04-04. Retrieved 2016-04-04.

[...] Most important, from the point of view of editing, will be the ability to handle any characters or digits, from 1 to 6 bits long.

Figure 2 shows the Shift Matrix to be used to convert a 60-bit word, coming from Memory in parallel, into characters, or 'bytes' as we have called them, to be sent to the Adder serially. The 60 bits are dumped into magnetic cores on six different levels. Thus, if a 1 comes out of position 9, it appears in all six cores underneath. Pulsing any diagonal line will send the six bits stored along that line to the Adder. The Adder may accept all or only some of the bits.

Assume that it is desired to operate on 4 bit decimal digits, starting at the right. The 0-diagonal is pulsed first, sending out the six bits 0 to 5, of which the Adder accepts only the first four (0-3). Bits 4 and 5 are ignored. Next, the 4 diagonal is pulsed. This sends out bits 4 to 9, of which the last two are again ignored, and so on.

It is just as easy to use all six bits in alphanumeric work, or to handle bytes of only one bit for logical analysis, or to offset the bytes by any number of bits. All this can be done by pulling the appropriate shift diagonals. An analogous matrix arrangement is used to change from serial to parallel operation at the output of the adder. [...]

- ^

3600 Computer System - Reference Manual (PDF). K. St. Paul, Minnesota, US: Control Data Corporation (CDC). 1966-10-11 [1965]. 60021300. Archived from the original (PDF) on 2017-04-05. Retrieved 2017-04-05.

Byte - A partition of a computer word.

NB. Discusses 12-bit, 24-bit and 48-bit bytes.

- ^

Rao, Thammavaram R. N.; Fujiwara, Eiji (1989). McCluskey, Edward J. (ed.). Error-Control Coding for Computer Systems. Prentice Hall Series in Computer Engineering (1 ed.). Englewood Cliffs, NJ, US: Prentice Hall. ISBN 0-13-283953-9. LCCN 88-17892.

NB. Example of the usage of a code for "4-bit bytes".

- ^

Tafel, Hans Jörg (1971). Einführung in die digitale Datenverarbeitung [Introduction to digital information processing] (in German). Munich: Carl Hanser Verlag. p. 300. ISBN 3-446-10569-7.

Byte = zusammengehörige Folge von i.a. neun Bits; davon sind acht Datenbits, das neunte ein Prüfbit

NB. Defines a byte as a group of typically 9 bits; 8 data bits plus 1 parity bit.

- ^

ISO/IEC 2382-1: 1993, Information technology - Vocabulary - Part 1: Fundamental terms. 1993.

byte:

A string that consists of a number of bits, treated as a unit, and usually representing a character or a part of a character.

NOTES:

1 The number of bits in a byte is fixed for a given data processing system.

2 The number of bits in a byte is usually 8.

- ^ "Internet History of 1960s # 1964". Computer History Museum. 2017 [2015]. Archived from the original on 2022-06-24. Retrieved 2022-08-17.

- ^ a b Jaffer, Aubrey (2011) [2008]. "Metric-Interchange-Format". Archived from the original on 2017-04-03. Retrieved 2017-04-03.

- ^ Kozierok, Charles M. (2005-09-20) [2001]. "The TCP/IP Guide - Binary Information and Representation: Bits, Bytes, Nibbles, Octets and Characters - Byte versus Octet". 3.0. Archived from the original on 2017-04-03. Retrieved 2017-04-03.

- ^ a b

ISO 2382-4, Organization of data (2 ed.).

byte, octet, 8-bit byte: A string that consists of eight bits.

- ^ a b c d e

Buchholz, Werner (February 1977). "The Word "Byte" Comes of Age..." Byte Magazine. 2 (2): 144.

We received the following from W Buchholz, one of the individuals who was working on IBM's Project Stretch in the mid 1950s. His letter tells the story.

Not being a regular reader of your magazine, I heard about the question in the November 1976 issue regarding the origin of the term "byte" from a colleague who knew that I had perpetrated this piece of jargon [see page 77 of November 1976 BYTE, "Olde Englishe"]. I searched my files and could not locate a birth certificate. But I am sure that "byte" is coming of age in 1977 with its 21st birthday.

Many have assumed that byte, meaning 8 bits, originated with the IBM System/360, which spread such bytes far and wide in the mid-1960s. The editor is correct in pointing out that the term goes back to the earlier Stretch computer (but incorrect in that Stretch was the first, not the last, of IBM's second-generation transistorized computers to be developed).

The first reference found in the files was contained in an internal memo written in June 1956 during the early days of developing Stretch. A byte was described as consisting of any number of parallel bits from one to six. Thus a byte was assumed to have a length appropriate for the occasion. Its first use was in the context of the input-output equipment of the 1950s, which handled six bits at a time. The possibility of going to 8-bit bytes was considered in August 1956 and incorporated in the design of Stretch shortly thereafter.

The first published reference to the term occurred in 1959 in a paper 'Processing Data in Bits and Pieces' by G A Blaauw, F P Brooks Jr and W Buchholz in the IRE Transactions on Electronic Computers, June 1959, page 121. The notions of that paper were elaborated in Chapter 4 of Planning a Computer System (Project Stretch), edited by W Buchholz, McGraw-Hill Book Company (1962). The rationale for coining the term was explained there on page 40 as follows:

Byte denotes a group of bits used to encode a character, or the number of bits transmitted in parallel to and from input-output units. A term other than character is used here because a given character may be represented in different applications by more than one code, and different codes may use different numbers of bits (ie, different byte sizes). In input-output transmission the grouping of bits may be completely arbitrary and have no relation to actual characters. (The term is coined from bite, but respelled to avoid accidental mutation to bit.)

System/360 took over many of the Stretch concepts, including the basic byte and word sizes, which are powers of 2. For economy, however, the byte size was fixed at the 8 bit maximum, and addressing at the bit level was replaced by byte addressing.

Since then the term byte has generally meant 8 bits, and it has thus passed into the general vocabulary.

Are there any other terms coined especially for the computer field which have found their way into general dictionaries of English language?

- ^ a b

"Timeline of the IBM Stretch/Harvest era (1956-1961)". Computer History Museum. June 1956. Archived from the original on 2016-04-29. Retrieved 2017-04-03.

1956 Summer: Gerrit Blaauw, Fred Brooks, Werner Buchholz, John Cocke and Jim Pomerene join the Stretch team. Lloyd Hunter provides transistor leadership.

1956 July [sic]: In a report Werner Buchholz lists the advantages of a 64-bit word length for Stretch. It also supports NSA's requirement for 8-bit bytes. Werner's term "Byte" first popularized in this memo.

NB. This timeline erroneously specifies the birth date of the term "byte" as July 1956, while Buchholz actually used the term as early as June 1956.

- ^

Buchholz, Werner (1956-07-31). "5. Input-Output" (PDF). Memory Word Length. IBM. p. 2. Stretch Memo No. 40. Archived from the original (PDF) on 2017-04-04. Retrieved 2016-04-04.

[...] 60 is a multiple of 1, 2, 3, 4, 5, and 6. Hence bytes of length from 1 to 6 bits can be packed efficiently into a 60-bit word without having to split a byte between one word and the next. If longer bytes were needed, 60 bits would, of course, no longer be ideal. With present applications, 1, 4, and 6 bits are the really important cases.

With 64-bit words, it would often be necessary to make some compromises, such as leaving 4 bits unused in a word when dealing with 6-bit bytes at the input and output. However, the LINK Computer can be equipped to edit out these gaps and to permit handling of bytes which are split between words. [...]

- ^ a b

Buchholz, Werner (1956-09-19). "2. Input-Output Byte Size" (PDF). Memory Word Length and Indexing. IBM. p. 1. Stretch Memo No. 45. Archived from the original (PDF) on 2017-04-04. Retrieved 2016-04-04.

[...] The maximum input-output byte size for serial operation will now be 8 bits, not counting any error detection and correction bits. Thus, the Exchange will operate on an 8-bit byte basis, and any input-output units with less than 8 bits per byte will leave the remaining bits blank. The resultant gaps can be edited out later by programming [...]

- ^ Raymond, Eric Steven (2017) [2003]. "byte definition". Archived from the original on 2017-04-03. Retrieved 2017-04-03.

- ^ a b c d

Bemer, Robert William (2000-08-08). "Why is a byte 8 bits? Or is it?". Computer History Vignettes. Archived from the original on 2017-04-03. Retrieved 2017-04-03.

I came to work for IBM, and saw all the confusion caused by the 64-character limitation. Especially when we started to think about word processing, which would require both upper and lower case.

Add 26 lower case letters to 47 existing, and one got 73 -- 9 more than 6 bits could represent.

I even made a proposal (in view of STRETCH, the very first computer I know of with an 8-bit byte) that would extend the number of punch card character codes to 256 [1].

Some folks took it seriously. I thought of it as a spoof.

So some folks started thinking about 7-bit characters, but this was ridiculous. With IBM's STRETCH computer as background, handling 64-character words divisible into groups of 8 (I designed the character set for it, under the guidance of Dr. Werner Buchholz, the man who DID coin the term "byte" for an 8-bit grouping). [2] It seemed reasonable to make a universal 8-bit character set, handling up to 256. In those days my mantra was "powers of 2 are magic". And so the group I headed developed and justified such a proposal [3].

That was a little too much progress when presented to the standards group that was to formalize ASCII, so they stopped short for the moment with a 7-bit set, or else an 8-bit set with the upper half left for future work.

The IBM 360 used 8-bit characters, although not ASCII directly. Thus Buchholz's "byte" caught on everywhere. I myself did not like the name for many reasons. The design had 8 bits moving around in parallel. But then came a new IBM part, with 9 bits for self-checking, both inside the CPU and in the tape drives. I exposed this 9-bit byte to the press in 1973. But long before that, when I headed software operations for Cie. Bull in France in 1965-66, I insisted that 'byte' be deprecated in favor of "octet".

You can notice that my preference then is now the preferred term.

It is justified by new communications methods that can carry 16, 32, 64, and even 128 bits in parallel. But some foolish people now refer to a "16-bit byte" because of this parallel transfer, which is visible in the UNICODE set. I'm not sure, but maybe this should be called a "hextet".

But you will notice that I am still correct. Powers of 2 are still magic!

- ^ Blaauw, Gerrit Anne; Brooks, Jr., Frederick Phillips; Buchholz, Werner (June 1959). "Processing Data in Bits and Pieces". IRE Transactions on Electronic Computers: 121.

- ^

Dooley, Louis G. (February 1995). "Byte: The Word". BYTE. Ocala, FL, US. Archived from the original on 1996-12-20.

The word byte was coined around 1956 to 1957 at MIT Lincoln Laboratories within a project called SAGE (the North American Air Defense System), which was jointly developed by Rand, Lincoln Labs, and IBM. In that era, computer memory structure was already defined in terms of word size. A word consisted of x number of bits; a bit represented a binary notational position in a word. Operations typically operated on all the bits in the full word.

We coined the word byte to refer to a logical set of bits less than a full word size. At that time, it was not defined specifically as x bits but typically referred to as a set of 4 bits, as that was the size of most of our coded data items. Shortly afterward, I went on to other responsibilities that removed me from SAGE. After having spent many years in Asia, I returned to the U.S. and was bemused to find out that the word byte was being used in the new microcomputer technology to refer to the basic addressable memory unit.

- ^ a b Ram, Stefan (17 January 2003). "Erklärung des Wortes "Byte" im Rahmen der Lehre binärer Codes" (in German). Berlin, Germany: Freie Universität Berlin. Archived from the original on 2021-06-10. Retrieved 2017-04-10.

- ^

Origin of the term "byte", 1956, archived from the original on 2017-04-10, retrieved 2022-08-17

A question-and-answer session at an ACM conference on the history of programming languages included this exchange:

[ John Goodenough:

You mentioned that the term "byte" is used in JOVIAL. Where did the term come from? ]

[ Jules Schwartz (inventor of JOVIAL):

As I recall, the AN/FSQ-31, a totally different computer than the 709, was byte oriented. I don't recall for sure, but I'm reasonably certain the description of that computer included the word "byte," and we used it. ]

[ Fred Brooks:

May I speak to that? Werner Buchholz coined the word as part of the definition of STRETCH, and the AN/FSQ-31 picked it up from STRETCH, but Werner is very definitely the author of that word. ]

[ Schwartz:

That's right. Thank you. ]

- ^ "List of EBCDIC codes by IBM". ibm.com. 2020-01-02. Archived from the original on 2020-07-03. Retrieved 2020-07-03.

- ^ a b Knuth, Donald (1997) [1968]. The Art of Computer Programming: Volume 1: Fundamental Algorithms (3rd ed.). Boston: Addison-Wesley. p. 125. ISBN 9780201896831.

- ^ Williams, R. H. (1969). British Commercial Computer Digest: Pergamon Computer Data Series. Pergamon Press. ISBN 1483122107. ISBN 978-1483122106

- ^ "Philips Data Systems' product range" (PDF). Philips. April 1971. Archived from the original on 2016-03-04. Retrieved 2015-08-03.

- ^ a b "About bits and bytes: prefixes for binary multiples". IEC. https://web.archive.org/web/20090818042050/http://www.iec.ch/online_news/etech/arch_2003/etech_0503/focus.htm; https://www.iec.ch/prefixes-binary-multiples Archived 2021-08-16 at the Wayback Machine.

- ^ Prefixes for Binary Multiples. The NIST Reference on Constants, Units, and Uncertainty. Archived 2007-08-08 at the Wayback Machine.

- ^ Matsuoka, Satoshi; Sato, Hitoshi; Tatebe, Osamu; Koibuchi, Michihiro; Fujiwara, Ikki; Suzuki, Shuji; Kakuta, Masanori; Ishida, Takashi; Akiyama, Yutaka; Suzumura, Toyotaro; Ueno, Koji (2014-09-15). "Extreme Big Data (EBD): Next Generation Big Data Infrastructure Technologies Towards Yottabyte/Year". Supercomputing Frontiers and Innovations. 1 (2): 89–107. doi:10.14529/jsfi140206. ISSN 2313-8734. Archived from the original on 2022-03-13. Retrieved 2022-05-27.

- ^ "List of Resolutions for the 27th meeting of the General Conference on Weights and Measures" (PDF). 2022-11-18. Archived (PDF) from the original on 2022-11-18. Retrieved 2022-11-18.

- ^ Gibney, Elizabeth (18 November 2022). "How many yottabytes in a quettabyte? Extreme numbers get new names". Nature. doi:10.1038/d41586-022-03747-9. ISSN 0028-0836. PMID 36400954. S2CID 253671538. Archived from the original on 16 January 2023. Retrieved 21 November 2022.

- ^ 1977 Disk/Trend Report Rigid Disk Drives, published June 1977

- ^ SanDisk USB Flash Drive Archived 2008-05-13 at the Wayback Machine "Note: 1 megabyte (MB) = 1 million bytes; 1 gigabyte (GB) = 1 billion bytes."

- ^ Bennett, Hugh (1999). "Pioneer DVD-S201 4.7GB DVD-Recorder". EMedia. Vol. 12, no. 12. ISSN 1529-7306.

DVD nomenclature defines one gigabyte as one billion bytes...

- ^ a b c "How iOS and macOS report storage capacity". Apple Support. 27 February 2018. Archived from the original on 9 April 2020. Retrieved 9 January 2022.

- ^ "UnitsPolicy". Ubuntu Wiki. Ubuntu. Archived from the original on 18 November 2021. Retrieved 9 January 2022.

- ^ "ConsistentUnitPrefixes". Debian Wiki. Archived from the original on 3 December 2021. Retrieved 9 January 2022.

- ^ "Main Core Storage" (PDF). IBM System/360 System Summary (PDF). System Reference Library (First ed.). IBM. 1964. p. 6. A22-6810-0. Retrieved May 22, 2025.

- ^ "5.25" Floppy Disk Drive Stock No. 237-088" (PDF). docs.rs-online.com.

- ^ "Advanced Format White Paper" (PDF). documents.westerndigital.com.

- ^ Brown, Richard J. C. (27 April 2022). "Reply to "Facing a shortage of the Latin letters for the prospective new SI symbols: alternative proposal for the new SI prefixes"". Accreditation and Quality Assurance. 27 (3): 143–144. doi:10.1007/s00769-022-01499-7. S2CID 248397680.

- ^ Kilobyte – Definition and More from the Free Merriam-Webster Dictionary Archived 2010-04-09 at the Wayback Machine. Merriam-webster.com (2010-08-13). Retrieved on 2011-01-07.

- ^ Kilobyte – Definition of Kilobyte at Dictionary.com Archived 2010-09-01 at the Wayback Machine. Dictionary.reference.com (1995-09-29). Retrieved on 2011-01-07.

- ^ Definition of kilobyte from Oxford Dictionaries Online Archived 2006-06-25 at the Wayback Machine. Askoxford.com. Retrieved on 2011-01-07.

- ^ Prefixes for Binary and Decimal Multiples: Binary versus decimal

- ^ "Determining Actual Disk Size: Why 1.44 MB Should Be 1.40 MB". Microsoft Support. 2003-05-06. Archived from the original on 2014-02-09. Retrieved 2014-03-25.

- ^ "3G/GPRS data rates". Vodafone Ireland. Archived from the original on 26 October 2016. Retrieved 26 October 2016.

- ^ "Data Measurement Scale". AT&T. Retrieved 26 October 2016.

- ^ "Internet Mobile Access". Orange Romania. Archived from the original on 26 October 2016. Retrieved 26 October 2016.

- ^ "Our Customer Terms" (PDF). Telstra. p. 7. Archived (PDF) from the original on 10 April 2017. Retrieved 26 October 2016.

- ^ Pratt, Allan D. (1982-04-12). "Kwandary". Letters to the Editor. InfoWorld. p. 21. Retrieved 2025-03-16.

- ^ "SA400 minifloppy". Swtpc.com. 2013-08-14. Archived from the original on 2014-05-27. Retrieved 2014-03-25.

- ^ "Shugart Associates SA 400 minifloppy™ Disk Drive" (PDF). Archived from the original (PDF) on 2011-06-08. Retrieved 2011-06-24.

- ^ "RXS/RX11 floppy disk system maintenance manual" (PDF). Maynard, Massachusetts: Digital Equipment Corporation. May 1975. Archived from the original (PDF) on 2011-04-23. Retrieved 2011-06-24.

- ^ IUCr 1995 Report - IUPAC Interdivisional Committee on Nomenclature and Symbols (IDCNS) http://ww1.iucr.org/iucr-top/cexec/rep95/idcns.htm Archived 2020-12-19 at the Wayback Machine

- ^ "Binary Prefix" University of Auckland Department of Computer Science https://wiki.cs.auckland.ac.nz/stageonewiki/index.php/Binary_prefix Archived 2020-10-16 at the Wayback Machine

- ^ National Institute of Standards and Technology. "Prefixes for binary multiples". Archived from the original on 2007-08-08. "In December 1998 the International Electrotechnical Commission (IEC) [...] approved as an IEC International Standard names and symbols for prefixes for binary multiples for use in the fields of data processing and data transmission."

- ^ "What is a kilobyte?". Archived from the original on 2011-06-06. Retrieved 2010-05-20.

- ^ NIST "Prefixes for binary multiples" https://physics.nist.gov/cuu/Units/binary.html Archived 2018-01-14 at the Wayback Machine

- ^ Amendment 2 to IEC International Standard IEC 60027-2: Letter symbols to be used in electrical technology – Part 2: Telecommunications and electronics.

- ^ Barrow, Bruce (January 1997). "A Lesson in Megabytes" (PDF). IEEE. p. 5. Archived from the original (PDF) on 17 December 2005. Retrieved 14 December 2024.

- ^ "Order Granting Motion to Dismiss" (PDF). United States District Court for the Northern District of California. Archived (PDF) from the original on 2021-10-07. Retrieved 2020-01-24.

- ^ a b c Mook, Nate (2006-06-28). "Western Digital Settles Capacity Suit". betanews. Archived from the original on 2009-09-07. Retrieved 2009-03-30.

- ^ Baskin, Scott D. (2006-02-01). "Defendant Western Digital Corporation's Brief in Support of Plaintiff's Motion for Preliminary Approval". Orin Safier v. Western Digital Corporation. Western Digital Corporation. Archived from the original on 2009-01-02. Retrieved 2009-03-30.

- ^ Judge, Peter (2007-10-26). "Seagate pays out over gigabyte definition". ZDNet. Archived from the original on 2014-09-03. Retrieved 2014-09-16.

- ^ Allison Dexter, "How Many Words are in Harry Potter?", [1] Archived 2021-01-25 at the Wayback Machine; shows 190637 words

- ^ "Kilobytes Megabytes Gigabytes Terabytes (Stanford University)". Archived from the original on 2020-11-08. Retrieved 2020-12-12.

- ^ Perenson, Melissa J. (4 January 2007). "Hitachi Introduces 1-Terabyte Hard Drive". www.pcworld.com. Archived from the original on 24 October 2012. Retrieved 5 December 2020.

- ^ "What does a petabyte look like?". Archived from the original on 28 January 2018. Retrieved 19 February 2018.

- ^ Gross, Grant (24 November 2007). "Internet Could Max Out in 2 Years, Study Says". PC World. Archived from the original on 26 November 2007. Retrieved 28 November 2007.

- ^ "The Zettabyte Era Officially Begins (How Much is That?)". Cisco Blogs. 2016-09-09. Archived from the original on 2021-08-02. Retrieved 2021-08-04.

- ^ Cline, Marshall. "I could imagine a machine with 9-bit bytes. But surely not 16-bit bytes or 32-bit bytes, right?". Archived from the original on 2019-03-21. Retrieved 2015-06-18.

- ^ Klein, Jack (2008), Integer Types in C and C++, archived from the original on 2010-03-27, retrieved 2015-06-18

- ^ Cline, Marshall. "C++ FAQ: the rules about bytes, chars, and characters". Archived from the original on 2019-03-21. Retrieved 2015-06-18.

- ^ "External Interfaces/API". Northwestern University. Archived from the original on 2018-08-09. Retrieved 2016-09-02.

Further reading

- "2.5 Byte manipulation" (PDF). Programming with the PDP-10 Instruction Set (PDF). PDP-10 System Reference Manual. Vol. 1. Digital Equipment Corporation (DEC). August 1969. pp. 2-15 – 2-17. Archived (PDF) from the original on 2017-04-05. Retrieved 2017-04-05.

- Ashley Taylor. "Bits and Bytes". Stanford. https://web.stanford.edu/class/cs101/bits-bytes.html

Definition and Fundamentals

Core Definition

A byte is a unit of digital information typically consisting of eight bits, enabling the representation of 256 distinct values ranging from 0 to 255 in decimal notation.[9] This structure allows bytes to serve as a fundamental building block for data storage, processing, and transmission in computing systems. A bit, the smallest unit of digital information, represents a single binary digit that can hold either a value of 0 or 1.[9] By grouping eight such bits into a byte, computers can encode more complex data efficiently, supporting operations like arithmetic calculations and character representation that exceed the limitations of individual bits. The international standard IEC 80000-13:2008 formally defines one byte as exactly eight bits, using the term "byte" (symbol B) as a synonym for "octet" to denote this eight-bit quantity and recommending its use to avoid ambiguity with historical variations.[9] For example, a single byte can store one ASCII character, such as 'A', which corresponds to the decimal value 65.[10]

Relation to Bits
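
As a minimal sketch of how eight bits combine into one byte value, the Python snippet below weights each bit by a power of two and sums the result. The helper name `byte_value` is hypothetical, chosen only for illustration, and the input is assumed to be a string of exactly eight binary digits written most significant bit first.

```python
def byte_value(bits: str) -> int:
    """Return the unsigned value of an 8-digit bit string (MSB first)."""
    if len(bits) != 8 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly eight binary digits")
    # Counting from the right, bit i carries the weight 2**i.
    return sum(int(b) << i for i, b in enumerate(reversed(bits)))


print(byte_value("00000000"))             # 0
print(byte_value("11111111"))             # 255
print(byte_value("10101010"))             # 170
print(ord("A"), format(ord("A"), "08b"))  # 65 01000001
print(2 ** 8)                             # 256 distinct values
```

The same weighting explains why the ASCII character 'A' (decimal 65) is stored as the bit pattern 01000001.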

A byte is an ordered collection of bits, standardized in modern computing to eight bits, that is typically treated as a single binary number representing integer values from 00000000 (0 in decimal) to 11111111 (255 in decimal).[1][5] This structure allows a byte to encode 256 distinct states, as each bit can independently be 0 or 1, yielding $2^8 = 256$ possible combinations.[11] The numerical value of a byte is determined by its binary representation using positional notation, where each bit's position corresponds to a power of 2. The value of an 8-bit byte is calculated as $V = \sum_{i=0}^{7} b_i \cdot 2^i$, where $b_i$ is the value of the $i$-th bit (either 0 or 1), and $b_0$ denotes the least significant bit.[11] For example, the binary byte 10101010 converts to 170 in decimal, computed as $2^7 + 2^5 + 2^3 + 2^1 = 128 + 32 + 8 + 2 = 170$.[11] In computing systems, bytes play a crucial role by serving as the smallest addressable unit of memory, enabling efficient referencing and manipulation of data in larger aggregates beyond individual bits.[12] This byte-addressable design facilitates operations on contiguous blocks of memory, such as loading instructions or storing variables, which would be impractical at the bit level due to the granularity mismatch.[13]

History and Etymology

Origins of the Term

The term "byte" was coined in June 1956 by IBM engineer Werner Buchholz during the early design phase of the IBM Stretch computer, a pioneering supercomputer project aimed at advancing high-performance computing.[3][14] Buchholz introduced the word as a more concise alternative to cumbersome phrases like "binary digit group" or "bit string," which were used to describe groupings of bits in data processing. Etymologically, "byte" is a deliberate respelling of "bite," chosen so that the word could not be accidentally shortened to "bit" while still evoking a larger "bite" of information.[3] This playful yet practical choice reflected the need for a unit that signified a meaningful aggregation of bits, larger than a single binary digit but suitable for computational operations.[15] In its early conceptual role, the byte was proposed as a flexible data-handling unit larger than a bit, specifically to encode characters, perform arithmetic on variable-length fields, and manage instructions in the bit-addressable architecture of mainframes like the Stretch.[16] This addressed the limitations of processing data solely in isolated bits, enabling more efficient handling of textual and numerical information in early computer systems.[17] The first published use of "byte" appeared in the June 1959 technical paper "Processing Data in Bits and Pieces" by Buchholz, Frederick P. Brooks Jr., and Gerrit A. Blaauw, published in the IRE Transactions on Electronic Computers, where it described a unit for variable-length data operations in the context of Stretch's design.[16] Although the term originated three years earlier in internal IBM discussions, this publication marked its entry into the broader technical literature, predating its adoption in the IBM System/360 architecture.[18]

Evolution of Byte Size

In the early days of computing, the size of a byte varied across systems to suit specific hardware architectures and data encoding needs. The IBM 7030 Stretch supercomputer, introduced in 1959, employed a variable-length byte concept, but typically utilized 6-bit bytes for binary-coded decimal (BCD) character representation, allowing efficient packing of decimal digits within its 64-bit words.[19] Similarly, 7-bit bytes were common in telegraphic and communication systems, aligning with the structure of early character codes like the International Telegraph Alphabet No. 5, a 7-bit code supporting 128 characters. Some 36-bit machines, such as the DEC PDP-10 from the late 1960s, commonly used 9-bit bytes to divide 36-bit words into four equal units, facilitating operations on larger datasets like those in time-sharing systems. The transition to an 8-bit byte gained momentum in the mid-1960s, propelled by emerging character encoding standards that required more robust representation. The American Standard Code for Information Interchange (ASCII), standardized in 1963, defined 7 bits for 128 characters, but practical implementations often added an 8th parity bit for error checking in transmission, effectively establishing an 8-bit structure. IBM's Extended Binary Coded Decimal Interchange Code (EBCDIC), developed in 1964 for the System/360 mainframe series, natively used 8 bits to encode 256 possible values, including control characters and punched-card compatibility, influencing enterprise computing architectures.[20] The IBM System/360, announced in 1964, played a crucial role in this standardization by adopting a consistent 8-bit byte across its compatible family of computers, facilitating data interchange and software portability.[21] This shift aligned with the growing need for international character support and efficient data processing beyond decimal-centric designs.
By the 1970s, the 8-bit byte had become the de facto standard, driven by advancements in semiconductor technology and microprocessor design. Early dynamic random-access memory (DRAM) chips, such as Intel's 1103 introduced in 1970, provided 1-kilobit capacities in a 1024 × 1 bit organization. Systems using these chips often combined multiple devices to form 8-bit bytes, aligning with emerging standards for compatibility and efficiency. The Intel 8080 microprocessor, released in 1974, further solidified this by processing data in 8-bit units while addressing memory over a 16-bit address bus, enabling the proliferation of affordable personal computers and embedded systems. This standardization improved memory efficiency, as 8-bit alignments reduced overhead in addressing and arithmetic operations compared to uneven sizes like 6 or 9 bits. Formal standardization affirmed the 8-bit byte in international norms during the late 20th century. The IEEE 754 standard for binary floating-point arithmetic, published in 1985, implicitly relied on 8-bit bytes by defining single-precision formats as 32 bits (four bytes) and double-precision as 64 bits (eight bytes), ensuring portability across hardware. The ISO/IEC 2382-1 vocabulary standard, revised in 1993, explicitly defined a byte as a sequence of eight bits, providing a consistent terminology for information technology.[22] This was reinforced by the International Electrotechnical Commission (IEC) in 1998 through amendments to IEC 60027-2, which integrated the 8-bit byte into binary prefix definitions for data quantities, resolving ambiguities in storage and transmission metrics.

Notation and Standards

Unit Symbols and Abbreviations

The official unit symbol for the byte is the uppercase letter B, as established by international standards to represent a sequence of eight bits. This symbol is defined in IEC 80000-13:2025, which specifies that the byte is synonymous with the octet and uses B to denote this unit in information science and technology contexts. The standard also aligns with earlier guidelines in IEC 60027-2 (2000), which incorporated conventions for binary multiples introduced in 1998 and emphasized consistent notation for bytes and bits.[7] To prevent ambiguity, particularly in data rates and storage metrics, the lowercase b is reserved for the bit or its multiples (e.g., kbit for kilobit), while B exclusively denotes the byte.[7] The National Institute of Standards and Technology (NIST) reinforces this distinction in its guidelines on SI units and binary prefixes, stating that one byte equals 1 B = 8 bits, and recommending B for all byte-related quantities to avoid confusion with bit-based units.[7] Similarly, the International Electrotechnical Commission (IEC) advises against using non-standard symbols like "o" for octet, as it deviates from the unified B notation and could lead to errors in technical documentation. 
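
Because b and B differ by a factor of eight, mixing them up changes a quoted data rate by nearly an order of magnitude. The short Python sketch below illustrates the conversion under the 8-bit byte assumed in this section. The function names `mbit_per_s_to_mbyte_per_s` and `transfer_seconds` are hypothetical, introduced only for this example.

```python
BITS_PER_BYTE = 8  # assumes the 8-bit byte (octet) used throughout this section


def mbit_per_s_to_mbyte_per_s(rate_mbit: float) -> float:
    """Convert a rate in Mbit/s to MB/s (both using decimal mega)."""
    return rate_mbit / BITS_PER_BYTE


def transfer_seconds(size_bytes: int, rate_bit_per_s: float) -> float:
    """Ideal time to move size_bytes over a link of rate_bit_per_s."""
    return size_bytes * BITS_PER_BYTE / rate_bit_per_s


print(mbit_per_s_to_mbyte_per_s(100))                # 12.5
print(transfer_seconds(1_000_000_000, 100_000_000))  # 80.0
```

In this idealized calculation a 100 Mbit/s link moves 12.5 MB per second, so a 1 GB file needs 80 seconds, ignoring protocol overhead.
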
In formal writing and standards-compliant contexts, abbreviations should use B without periods or pluralization (e.g., 8 B for eight bytes), following general SI symbol rules for upright roman type and no modification for plurality.[7] Informal usage in prose often spells out "byte" fully or employs B inline, but avoids ambiguous lowercase "b" for bytes to maintain clarity.[24] For example, storage capacities are expressed as 1 KB = 1024 B in binary contexts, distinguishing from kbit or kb for kilobits (1000 bits).[7] Guidelines from authoritative bodies like NIST and the IEC continue to prioritize B to ensure unambiguous communication in computing and measurement applications.[7] These conventions promote standardized unit symbols to support global interoperability.[25]

Definition of Multiples

Multiples of bytes provide a standardized way to express larger quantities of digital information, commonly applied in contexts such as data storage, memory capacity, and bandwidth measurement. These multiples incorporate prefixes that scale the base unit of one byte (8 bits) by powers of either 10, aligning with the decimal system used in general scientific measurement, or powers of 2, which correspond to the binary nature of computing architectures.[7][24] In 1998, the International Electrotechnical Commission (IEC) established binary prefixes through the amendment to International Standard IEC 60027-2 to clearly denote multiples based on powers of 2, avoiding ambiguity in computing applications, with the latest revision in IEC 80000-13:2025 adding new prefixes for binary multiples. Under this system, the prefix "kibi" (Ki) represents $2^{10}$ bytes, so 1 KiB = $2^{10}$ bytes = 1024 bytes; "mebi" (Mi) represents $2^{20}$ bytes, so 1 MiB = $2^{20}$ bytes = 1,048,576 bytes; and the scale extends through prefixes like gibi (Gi, $2^{30}$), tebi (Ti, $2^{40}$), pebi (Pi, $2^{50}$), exbi (Ei, $2^{60}$), zebi (Zi, $2^{70}$), up to yobi (Yi, $2^{80}$), where 1 YiB = $2^{80}$ bytes.[24][7] Concurrently in 1998, the International System of Units (SI) prefixes were endorsed for decimal multiples of bytes to maintain consistency with metric conventions, defining scales based on powers of 10.
For instance, the prefix "kilo" (k) denotes $10^{3}$ bytes, so 1 kB = $10^{3}$ bytes = 1000 bytes; "mega" (M) denotes $10^{6}$ bytes, so 1 MB = $10^{6}$ bytes = 1,000,000 bytes; and the progression continues with giga (G, $10^{9}$), tera (T, $10^{12}$), peta (P, $10^{15}$), exa (E, $10^{18}$), zetta (Z, $10^{21}$), yotta (Y, $10^{24}$), ronna (R, $10^{27}$), quetta (Q, $10^{30}$), where 1 QB = $10^{30}$ bytes.[7][26] In general, the value of a byte multiple can be expressed as $\text{Value} = \text{prefix\_factor} \times \text{byte\_size}$, where byte_size is 1 byte and prefix_factor equals $10^{n}$ for decimal prefixes or $2^{10k}$ for binary prefixes. For decimal prefixes $n$ is the exponent (e.g., $n = 3$ for kilo), and for binary prefixes $k$ is the level (e.g., $k = 1$ for kibi, corresponding to $2^{10}$).[7][24]

Variations and Conflicts in Multiples
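
The decimal and binary scales defined above can be compared numerically. The sketch below is illustrative only: the names `DECIMAL_PREFIXES`, `BINARY_PREFIXES`, `prefix_factor`, and `to_bytes` are assumptions made for this example, not part of any standard or library. It assigns the factor $10^{3i}$ to the $i$-th decimal prefix and $2^{10i}$ to the $i$-th binary prefix.

```python
# Prefix symbols in increasing order; position i (starting at 1) sets the exponent.
DECIMAL_PREFIXES = ["k", "M", "G", "T", "P", "E", "Z", "Y", "R", "Q"]  # 10**(3*i)
BINARY_PREFIXES = ["Ki", "Mi", "Gi", "Ti", "Pi", "Ei", "Zi", "Yi"]     # 2**(10*i)


def prefix_factor(prefix: str) -> int:
    """Return the number of bytes in one unit with the given prefix."""
    if prefix in DECIMAL_PREFIXES:
        return 10 ** (3 * (DECIMAL_PREFIXES.index(prefix) + 1))
    if prefix in BINARY_PREFIXES:
        return 2 ** (10 * (BINARY_PREFIXES.index(prefix) + 1))
    raise ValueError(f"unknown prefix: {prefix!r}")


def to_bytes(value: float, prefix: str) -> float:
    """Convert a quantity such as (1.5, "Gi") to a byte count."""
    return value * prefix_factor(prefix)


print(to_bytes(1, "k"))    # 1000
print(to_bytes(1, "Ki"))   # 1024
print(to_bytes(1, "Mi"))   # 1048576

# A capacity of 1 TB (decimal) expressed in TiB (binary).
print(round(to_bytes(1, "T") / prefix_factor("Ti"), 4))  # 0.9095
```

The gap widens with scale: a kilobyte is about 2% smaller than a kibibyte, while a terabyte is about 9% smaller than a tebibyte.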

Binary-Based Units

Binary-based units, also referred to as binary prefixes, are measurement units for digital information that are multiples of powers of 2, aligning with the fundamental binary architecture of computers. These units were formalized by the International Electrotechnical Commission (IEC) in its 1998 standard IEC 60027-2, which defines prefixes such as kibi (Ki), mebi (Mi), and gibi (Gi) to denote exact binary multiples of the byte. For instance, 1 kibibyte (KiB) equals $2^{10}$ bytes, while 1 gibibyte (GiB) equals $2^{30}$ bytes. This standardization was later incorporated into the updated IEC 80000-13:2008, emphasizing their role in data processing and transmission.[7][24]

The adoption of binary-based units gained traction for their precision in contexts like random access memory (RAM) capacities and file size reporting, where alignment with hardware addressing is crucial. Operating systems such as Microsoft Windows commonly report file sizes using these binary multiples (for example, displaying 1 KB as 1,024 bytes in File Explorer) to reflect actual storage allocation in binary systems.[24][27] The IEC promoted these units to eliminate ambiguity in computing applications, ensuring that measurements for volatile memory like RAM and non-volatile storage like files accurately represent binary-scaled data.[24]

A key advantage of binary-based units lies in their seamless integration with computer memory addressing, where locations are numbered in powers of 2; for example, $2^{20}$ addressable bytes precisely equals 1 mebibyte (MiB), facilitating efficient hardware design and software calculations without conversion overhead. The general formula for calculating the size in bytes is $2^{10k}$, where $k$ is the prefix order (e.g., $k = 1$ for kibi, $k = 2$ for mebi). Thus, 1 tebibyte (TiB) = $2^{40}$ bytes. Common binary prefixes are summarized below:

| Prefix Name | Symbol | Factor | Bytes (for byte multiples) |
|---|---|---|---|
| kibibyte | KiB | 1,024 | $2^{10}$ |
| mebibyte | MiB | 1,048,576 | $2^{20}$ |
| gibibyte | GiB | 1,073,741,824 | $2^{30}$ |
| tebibyte | TiB | 1,099,511,627,776 | $2^{40}$ |
| pebibyte | PiB | 1,125,899,906,842,624 | $2^{50}$ |