Recent from talks

Contribute something

Nothing was collected or created yet.

Array (data type)

View on WikipediaIn computer science, array is a data type that represents a collection of elements (values or variables), each selected by one or more indices (identifying keys) that can be computed at run time during program execution. Such a collection is usually called an array variable or array value.[1] By analogy with the mathematical concepts vector and matrix, array types with one and two indices are often called vector type and matrix type, respectively. More generally, a multidimensional array type can be called a tensor type, by analogy with the mathematical concept, tensor.[2]

Language support for array types may include certain built-in array data types, some syntactic constructions (array type constructors) that the programmer may use to define such types and declare array variables, and special notation for indexing array elements.[1] For example, in the Pascal programming language, the declaration type MyTable = array [1..4,1..2] of integer, defines a new array data type called MyTable. The declaration var A: MyTable then defines a variable A of that type, which is an aggregate of eight elements, each being an integer variable identified by two indices. In the Pascal program, those elements are denoted A[1,1], A[1,2], A[2,1], …, A[4,2].[3] Special array types are often defined by the language's standard libraries.

Dynamic lists are also more common and easier to implement[dubious – discuss] than dynamic arrays. Array types are distinguished from record types mainly because they allow the element indices to be computed at run time, as in the Pascal assignment A[I,J] := A[N-I,2*J]. Among other things, this feature allows a single iterative statement to process arbitrarily many elements of an array variable.

In more theoretical contexts, especially in type theory and in the description of abstract algorithms, the terms "array" and "array type" sometimes refer to an abstract data type (ADT) also called abstract array or may refer to an associative array, a mathematical model with the basic operations and behavior of a typical array type in most languages – basically, a collection of elements that are selected by indices computed at run-time.

Depending on the language, array types may overlap (or be identified with) other data types that describe aggregates of values, such as lists and strings. Array types are often implemented by array data structures, but sometimes by other means, such as hash tables, linked lists, or search trees.

History

[edit]Heinz Rutishauser's programming language Superplan (1949–1951) included multi-dimensional arrays. However, although Rutishauser described how a compiler for his language should be built, did not implement one.

Assembly languages and low-level languages like BCPL[4] generally have no syntactic support for arrays.

Because of the importance of array structures for efficient computation, the earliest high-level programming languages, including FORTRAN (1957), COBOL (1960), and Algol 60 (1960), provided support for multi-dimensional arrays.

Abstract arrays

[edit]An array data structure can be mathematically modeled as an abstract data structure (an abstract array) with two operations

- get(A, I): the data stored in the element of the array A whose indices are the integer tuple I.

- set(A, I, V): the array that results by setting the value of that element to V.

These operations are required to satisfy the axioms[5]

- get(set(A, I, V), I) = V

- get(set(A, I, V), J) = get(A, J) if I ≠ J

for any array state A, any value V, and any tuples I, J for which the operations are defined.

The first axiom means that each element behaves like a variable. The second axiom means that elements with distinct indices behave as disjoint variables, so that storing a value in one element does not affect the value of any other element.

These axioms do not place any constraints on the set of valid index tuples I, therefore this abstract model can be used for triangular matrices and other oddly-shaped arrays.

Implementations

[edit]In order to effectively implement variables of such types as array structures (with indexing done by pointer arithmetic), many languages restrict the indices to integer data types[6][7] (or other types that can be interpreted as integers, such as bytes and enumerated types), and require that all elements have the same data type and storage size. Most of those languages also restrict each index to a finite interval of integers, that remains fixed throughout the lifetime of the array variable. In some compiled languages, in fact, the index ranges may have to be known at compile time.

On the other hand, some programming languages provide more liberal array types, that allow indexing by arbitrary values, such as floating-point numbers, strings, objects, references, etc.. Such index values cannot be restricted to an interval, much less a fixed interval. So, these languages usually allow arbitrary new elements to be created at any time. This choice precludes the implementation of array types as array data structures. That is, those languages use array-like syntax to implement a more general associative array semantics, and must therefore be implemented by a hash table or some other search data structure.

Language support

[edit]Multi-dimensional arrays

[edit]The number of indices needed to specify an element is called the dimension, dimensionality, or rank of the array type. (This nomenclature conflicts with the concept of dimension in linear algebra, which expresses the shape of a matrix. Thus, an array of numbers with 5 rows and 4 columns, hence 20 elements, is said to have dimension 2 in computing contexts, but represents a matrix that is said to be 4×5-dimensional. Also, the computer science meaning of "rank" conflicts with the notion of tensor rank, which is a generalization of the linear algebra concept of rank of a matrix.)

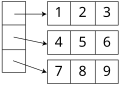

Many languages support only one-dimensional arrays. In those languages, a multi-dimensional array is typically represented by an Iliffe vector, a one-dimensional array of references to arrays of one dimension less. A two-dimensional array, in particular, would be implemented as a vector of pointers to its rows.[8] Thus an element in row i and column j of an array A would be accessed by double indexing (A[i][j] in typical notation). This way of emulating multi-dimensional arrays allows the creation of jagged arrays, where each row may have a different size – or, in general, where the valid range of each index depends on the values of all preceding indices.

This representation for multi-dimensional arrays is quite prevalent in C and C++ software. However, C and C++ will use a linear indexing formula for multi-dimensional arrays that are declared with compile time constant size, e.g. by int A[10][20] or int A[m][n], instead of the traditional int **A.[9]

The C99 standard introduced Variable Length Array types that let define array types with dimensions computed in run time. The dynamic 4D array can be constructed using a pointer to 4d array, e.g. int (*arr)[t][u][v][w] = malloc(sizeof *arr);. The individual elements are accessed by first de-referencing an array pointer followed by indexing, e.g. (*arr)[i][j][k][l]. Alternatively, n-d arrays can be declared as pointers to its first element which is a (n-1) dimensional array, e.g. int (*arr)[u][v][w] = malloc(t * sizeof *arr); and accessed using more idiomatic syntax, e.g. arr[i][j][k][l].

Indexing notation

[edit]Most programming languages that support arrays support the store and select operations, and have special syntax for indexing. Early languages used parentheses, e.g. A(i,j), as in FORTRAN; others choose square brackets, e.g. A[i,j] or A[i][j], as in Algol 60 and Pascal (to distinguish from the use of parentheses for function calls).

Index types

[edit]Array data types are most often implemented as array structures: with the indices restricted to integer (or totally ordered) values, index ranges fixed at array creation time, and multilinear element addressing. This was the case in most "third generation" languages, and is still the case of most systems programming languages such as Ada, C, and C++. In some languages, however, array data types have the semantics of associative arrays, with indices of arbitrary type and dynamic element creation. This is the case in some scripting languages such as Awk and Lua, and of some array types provided by standard C++ libraries.

Bounds checking

[edit]Some languages (like Pascal and Modula) perform bounds checking on every access, raising an exception or aborting the program when any index is out of its valid range. Compilers may allow these checks to be turned off to trade safety for speed. Other languages (like FORTRAN and C) trust the programmer and perform no checks. Good compilers may also analyze the program to determine the range of possible values that the index may have, and this analysis may lead to bounds-checking elimination.

Index origin

[edit]Some languages, such as C, provide only zero-based array types, for which the minimum valid value for any index is 0.[10] This choice is convenient for array implementation and address computations. With a language such as C, a pointer to the interior of any array can be defined that will symbolically act as a pseudo-array that accommodates negative indices. This works only because C does not check an index against bounds when used.

Other languages provide only one-based array types, where each index starts at 1; this is the traditional convention in mathematics for matrices and mathematical sequences. A few languages, such as Pascal and Lua, support n-based array types, whose minimum legal indices are chosen by the programmer. The relative merits of each choice have been the subject of heated debate. Zero-based indexing can avoid off-by-one or fencepost errors.[11]

Highest index

[edit]The relation between numbers appearing in an array declaration and the index of that array's last element also varies by language. In many languages (such as C), one should specify the number of elements contained in the array; whereas in others (such as Pascal and Visual Basic .NET) one should specify the numeric value of the index of the last element. This distinction is not present in languages where the indices start at 1, such as Lua.

Array algebra

[edit]Some programming languages support array programming, where operations and functions defined for certain data types are implicitly extended to arrays of elements of those types. Thus one can write A+B to add corresponding elements of two arrays A and B. Usually these languages provide both the element-by-element multiplication and the standard matrix product of linear algebra, and which of these is represented by the * operator varies by language.

Languages providing array programming capabilities have proliferated since the innovations in this area of APL. These are core capabilities of domain-specific languages such as GAUSS, IDL, Matlab, and Mathematica. They are a core facility in newer languages, such as Julia and recent versions of Fortran. These capabilities are also provided via standard extension libraries for other general purpose programming languages (such as the widely used NumPy library for Python).

String types and arrays

[edit]Many languages provide a built-in string data type, with specialized notation ("string literals") to build values of that type. In some languages (such as C), a string is just an array of characters, or is handled in much the same way. Other languages, like Pascal, may provide vastly different operations for strings and arrays.

Array index range queries

[edit]Some programming languages provide operations that return the size (number of elements) of a vector, or, more generally, range of each index of an array. In C and C++ arrays do not support the size() function, so programmers often have to declare separate variable to hold the size, and pass it to procedures as a separate parameter.

Elements of a newly created array may have undefined values (as in C), or may be defined to have a specific "default" value such as 0 or a null pointer (as in Java).

In C++ a std::vector object supports the store, select, and append operations with the performance characteristics discussed above. Vectors can be queried for their size and can be resized. Slower operations like inserting an element in the middle are also supported.

Slicing

[edit]An array slicing operation takes a subset of the elements of an array-typed entity (value or variable) and then assembles them as another array-typed entity, possibly with other indices. If array types are implemented as array structures, many useful slicing operations (such as selecting a sub-array, swapping indices, or reversing the direction of the indices) can be performed very efficiently by manipulating the dope vector of the structure. The possible slicings depend on the implementation details: for example, Fortran allows slicing off one column of a matrix variable, but not a row, and treat it as a vector.

On the other hand, other slicing operations are possible when array types are implemented in other ways.

Resizing

[edit]Some languages allow dynamic arrays (also called resizable, growable, or extensible): array variables whose index ranges may be expanded at any time after creation, without changing the values of its current elements.

For one-dimensional arrays, this facility may be provided as an operation append(A,x) that increases the size of the array A by one and then sets the value of the last element to x. Other array types (such as Pascal strings) provide a concatenation operator, which can be used together with slicing to achieve that effect and more. In some languages, assigning a value to an element of an array automatically extends the array, if necessary, to include that element. In other array types, a slice can be replaced by an array of different size, with subsequent elements being renumbered accordingly – as in Python's list assignment A[5:5] = [10,20,30], that inserts three new elements (10, 20, and 30) before element "A[5]". Resizable arrays are conceptually similar to lists, and the two concepts are synonymous in some languages.

An extensible array can be implemented as a fixed-size array, with a counter that records how many elements are actually in use. The append operation merely increments the counter; until the whole array is used, when the append operation may be defined to fail. This is an implementation of a dynamic array with a fixed capacity, as in the string type of Pascal. Alternatively, the append operation may re-allocate the underlying array with a larger size, and copy the old elements to the new area.

See also

[edit]References

[edit]- ^ a b Robert W. Sebesta (2001) Concepts of Programming Languages. Addison-Wesley. 4th edition (1998), 5th edition (2001), ISBN 9780201385960

- ^ "Introduction to Tensors | TensorFlow Core". TensorFlow.

- ^ K. Jensen and Niklaus Wirth, PASCAL User Manual and Report. Springer. Paperback edition (2007) 184 pages, ISBN 978-3540069508

- ^ John Mitchell, Concepts of Programming Languages. Cambridge University Press.

- ^ Lukham, Suzuki (1979), "Verification of array, record, and pointer operations in Pascal". ACM Transactions on Programming Languages and Systems 1 (2), 226–244.

- ^ Deitel, Harvey M.; Deitel, Paul J. (2005). C# for Programmers. Prentice Hall Professional. p. 303. ISBN 978-0-13-246591-5. Retrieved 22 May 2024.

- ^ Friesen, Jeff (5 March 2014). Learn Java for Android Development: Java 8 and Android 5 Edition. Apress. p. 56. ISBN 978-1-4302-6455-2. Retrieved 22 May 2024.

- ^ Van der Linden, Peter (1994). Expert C Programming: Deep C Secrets. Englewood Cliffs, NJ: SunSoft Press. ISBN 978-0-13-177429-2.

- ^ Brian W. Kernighan and Dennis M. Ritchie (1988), The C programming Language. Prentice-Hall, p. 81.

- ^ Kernighan, Brian W.; Ritchie, Dennis M. (1988). The C programming language (2nd ed.). Englewood Cliffs, N.J: Prentice Hall. p. 24. ISBN 978-0-13-110370-2.

- ^ Edsger W. Dijkstra, "Why numbering should start at zero"

External links

[edit]Array (data type)

View on GrokipediaFundamentals

Definition and Characteristics

An array is a data structure that represents a fixed-size, ordered collection of elements, all of the same data type, which can be accessed and manipulated using indices that typically start from zero.[1] This structure allows for the storage of multiple values under a single identifier, enabling efficient organization of homogeneous data.[5] Key characteristics of arrays include their homogeneity, requiring all elements to share the identical data type, such as integers or characters, which ensures uniform memory allocation per element.[6] They maintain a logical ordering, often implemented as contiguous blocks in memory for static arrays, where the size is determined at creation and remains immutable during runtime.[7] Access to individual elements occurs directly via index-based addressing, providing constant-time retrieval complexity, O(1), regardless of the array's size.[8] For example, an array declared asint[5] in a programming language like C or Java can hold five integer values, such as {10, 20, 30, 40, 50}, where the element at index 2 is accessed as array[2] to retrieve 30.[9]

Unlike linked lists, which store elements in non-contiguous memory locations connected by pointers and allow dynamic resizing at the cost of slower access times, arrays demand contiguous memory allocation to support their direct indexing, trading flexibility for performance in retrieval operations.[10][11]

Basic Operations

Arrays are created through declaration and allocation, specifying the element type and a fixed size, after which the size cannot be changed. In pseudocode, this is often represented asarray<T> A(n);, where T is the element type and n is the number of elements, allocating contiguous memory and initializing elements to default values such as zero for numeric types or null for references.[12] Alternatively, arrays can be initialized with explicit values at creation, as in array<int> odds = {1, 3, 5, 7};, which sets the size implicitly based on the provided values.[13]

Accessing and modifying elements occurs via zero-based indexing, where elements are referenced by their position starting from 0 up to n-1. Reading an element uses the notation value = A[i], retrieving the value at index i, while writing assigns a new value with A[i] = value, overwriting the existing element at that position.[7] The length of the array, which remains fixed after initialization, can be queried using a built-in property such as A.length, returning the integer n to facilitate operations dependent on the array's size.[12]

Traversal of an array involves sequentially visiting each element, typically implemented with a for-loop iterating from index 0 to n-1, as in:

for i from 0 to A.length - 1:

process A[i]

for i from 0 to A.length - 1:

process A[i]

i < 0 or i >= n, leads to undefined behavior or a runtime error, depending on the language implementation.[7]

Historical Development

Early Concepts

The concept of arrays predates electronic computing, emerging in mathematical notation during the 19th century as a means to represent systems of linear equations and transformations. James Joseph Sylvester introduced the term "matrix" in 1850 to describe an oblong arrangement of terms that facilitated the study of linear relations, while Arthur Cayley formalized matrix theory in 1858, establishing operations like multiplication for these rectangular arrays of numbers.[14] These mathematical arrays provided a foundational abstraction for organizing and manipulating collections of related values, influencing later computational structures. In the 1940s, as electronic computers emerged, arrays were adapted for practical data storage in hardware designed for scientific calculations. The ENIAC, completed in 1945, utilized function tables to store sequences of numerical data, functioning as one-dimensional arrays of up to 104 signed 10-digit numbers for tasks like ballistics trajectory computations.[15] John von Neumann's 1945 "First Draft of a Report on the EDVAC" further conceptualized indexed storage, proposing a memory system with random access and addressing mechanisms to efficiently handle ordered collections of data and instructions in a stored-program architecture.[16] This design emphasized arrays for sequential access, driven by the need to process large datasets in scientific simulations without manual reconfiguration. By the mid-1950s, high-level programming languages began incorporating array support to simplify scientific programming. Fortran, developed by IBM and first implemented in 1957 for the IBM 704, introduced subscripted variables—essentially arrays—as a core feature, allowing programmers to declare and manipulate one- or multi-dimensional collections of data with indexed access.[17] The primary motivation across these early developments was to enable efficient storage and rapid computation of numerical sequences for applications in physics, engineering, and military research, reducing the complexity of handling repetitive data operations.Modern Evolution

The evolution of arrays in the mid-20th century built upon early concepts by incorporating more flexible and type-safe mechanisms in high-level languages. ALGOL 60, published in 1960, formalized arrays as ordered collections with dynamic bounds that could be determined at runtime within block scopes, allowing subscript expressions to vary based on local variables.[18] This design influenced subsequent languages, emphasizing structured data access. In 1972, C introduced arrays closely tied to pointers, where array names decay to pointer types in expressions, enabling efficient but manual memory management without built-in bounds checking. Pascal, released in 1970 by Niklaus Wirth, advanced array handling with strongly typed, fixed-size declarations and support for multi-dimensional structures, though dynamic sizing required heap allocation via procedures like new and dispose in extensions.[19] From the 1990s onward, arrays adapted to object-oriented paradigms and specialized computing needs. Java, launched in 1995, integrated arrays as first-class objects with automatic bounds checking enforced by the virtual machine, preventing common runtime errors like buffer overflows. The C++ Standard Template Library (STL), standardized in 1998, introduced std::vector as a dynamic array container with automatic resizing, iterators, and algorithmic integration, extending C's pointer model into a generic, type-safe framework. In Python, NumPy arrays emerged in 2006, providing multi-dimensional, homogeneous arrays optimized for numerical computations, bridging high-level scripting with efficient vectorized operations for scientific applications. Concurrently, NVIDIA's CUDA framework, introduced in 2006, enabled arrays on graphics processing units (GPUs), supporting parallel data processing across thousands of threads for high-performance computing tasks. These developments were shaped by broader shifts in programming paradigms and computational demands. The rise of object-oriented languages like Java and C++ encapsulated arrays within classes, promoting safer abstraction and integration with inheritance, while functional influences in languages emphasized immutable arrays for concurrency. Handling big data and parallelism drove innovations like NumPy's broadcasting and CUDA's memory hierarchies, addressing scalability in distributed systems and machine learning workloads. Key milestones, such as Java's bounds checking, underscored a move toward reliability in enterprise software, influencing modern standards across ecosystems.Abstract Model

Abstract Array Properties

In computer science, an array is conceptualized as an abstract data type (ADT) that provides a mapping from a consecutive range of integer indices to elements of a homogeneous type, enabling efficient and direct access to any element by its position. This abstract model abstracts away implementation details, focusing instead on the logical structure where indices typically form a contiguous sequence, often starting from 0 or 1, and each index uniquely corresponds to one element. The ADT specification includes operations such as creation with a specified size, access and modification via indexing, and, in some variants, insertion or deletion that may adjust the structure while preserving the mapping property.[20][21] Key properties of the abstract array include contiguous indexing, which ensures that elements are logically positioned in a linear order without gaps in the index domain, facilitating constant-time access in the ideal model; homogeneity, requiring all elements to share the same data type for uniform treatment; and, in static array variants, immutability of size once established, which simplifies reasoning about bounds but limits flexibility. These properties make arrays suitable for representing ordered collections where positional relationships are paramount. Dynamic array abstracts extend this model by allowing runtime resizing, such as through amortized operations that maintain the contiguous indexing while adjusting capacity as needed.[21][22] Conceptually, sparse arrays represent a variant of the array ADT where the mapping applies to a potentially large index domain, but only non-default (e.g., non-zero) elements are considered significant, though the abstract interface still supports full indexing over the domain. This abstraction is useful for space-efficient handling of matrices or sequences with many implicit zeros, without altering the core mapping semantics. In algorithmic contexts, arrays function as sequences that underpin fundamental operations like sorting and searching; for instance, they enable in-place swaps in algorithms such as quicksort or binary search traversals that halve the index range iteratively, leveraging the direct index-to-element correspondence for efficiency.[23][24]Mathematical Formalism

In mathematical formalism, an array of elements of type is defined as a total function , where is a finite consecutive set of integers representing the index domain, with as the lower bound and as the upper bound (assuming ).[25] This models the array as a mapping from each valid index in to exactly one element in , ensuring no undefined positions within the bounds.[25] A key property of this function is totality: for every , is defined and yields an element of .[25] The length of the array, denoted , is the cardinality of the domain, given by the equation [25] In zero-based indexing conventions (where and ), the storage offset for an index simplifies to itself; in general, the offset is .[26] Basic operations on the array are defined functionally. Access retrieves the value at a valid index via evaluation of the function: for .[25] Update modifies the mapping at a specific index, denoted where and , resulting in a new function identical to except at , where .[25] Array algebra extends this model with constructors for compound structures. Concatenation, denoted for arrays and of compatible type , produces a new array whose domain is the disjoint union of the domains of and , with elements sequenced from followed by ; it satisfies associativity: .[26] Slicing extracts a subarray where , yielding a new array with domain and values for in that subdomain; the full array satisfies .[26]Implementations

Memory Representation

Arrays are typically implemented using contiguous allocation in memory, where elements of the same type are stored in sequential addresses starting from a base address.[27] The address of the -th element is calculated as the base address plus times the size of each element type, denoted as , enabling efficient random access through pointer arithmetic.[27] This layout applies to both one-dimensional and multi-dimensional arrays, which are flattened into a single contiguous block in row-major or column-major order, without explicit delimiters between dimensions.[27] To optimize access speed and hardware efficiency, memory systems enforce alignment requirements, where the starting address of each element must be a multiple of its size (e.g., 4-byte alignment for 32-bit integers).[28] Padding bytes are inserted between elements or at the end of structures containing arrays to maintain this alignment, particularly in multi-dimensional arrays or arrays of structs, which can increase the total memory footprint beyond the sum of element sizes.[28] For instance, an array of structs may require padding after each struct to ensure the next struct begins at an aligned boundary, preserving the alignment for all elements.[28] Static arrays are allocated at compile time with a fixed size, typically on the stack for local variables, providing automatic management but limiting flexibility.[27] In contrast, dynamic arrays are allocated at runtime on the heap using functions likemalloc, allowing resizable buffers through reallocation, though this introduces manual memory management responsibilities.[27] The stack offers fast allocation for fixed-size arrays but has size limits, while the heap supports larger, variable-sized allocations at the cost of potential fragmentation.[29]

Endianness affects the byte order of multi-byte elements within arrays, with little-endian systems (common in x86 architectures) storing the least significant byte at the lowest address, and big-endian doing the reverse.[30] This ordering influences how array data is interpreted across platforms, particularly for integers or floats spanning multiple bytes, requiring byte-swapping for interoperability in networked or cross-system applications.[30] For example, a 16-bit value of 258 would appear as bytes 02 01 in little-endian order starting at address 1008.[30]

Dense arrays exhibit minimal space overhead, primarily consisting of the elements themselves with only occasional padding for alignment, making them highly space-efficient compared to structures like linked lists that require pointers per node.[11] This efficiency stems from the contiguous layout, which avoids per-element metadata beyond the base pointer.[11]

Hardware and Low-Level Support

Hardware architectures provide foundational support for array operations through specialized instructions that enable efficient memory access and computation on contiguous data blocks. In x86 architectures, load and store instructions facilitate array indexing by allowing memory operands in the form of [base + index * scale + displacement], where the base register holds the array's starting address, the index register tracks the element offset, the scale factor accounts for element size (e.g., 1, 2, 4, or 8 bytes), and displacement adds a constant offset.[31] The Load Effective Address (LEA) instruction computes this address without performing a memory load, enabling fast index calculations for array traversal or bounds checking, as it executes in a single cycle on modern Intel processors.[31] Contiguous memory allocation for arrays exploits spatial locality in CPU caches, where sequential access patterns prefetch adjacent data blocks into faster cache levels, reducing latency compared to non-contiguous structures. Modern processors, such as those with Intel's inclusive L3 cache hierarchy, load entire cache lines (typically 64 bytes) on a miss, allowing subsequent array elements to be served from L1 or L2 caches with latencies under 10 cycles, versus hundreds for main memory.[32] This locality principle is particularly effective for one-dimensional arrays stored in row-major order, minimizing cache misses during linear traversals.[33] Single Instruction, Multiple Data (SIMD) extensions further accelerate array processing by applying operations across multiple elements simultaneously using vector registers. Intel's Advanced Vector Extensions (AVX) and AVX2 introduce 256-bit YMM registers that can hold up to eight 32-bit floats or integers, enabling instructions like VADDPS to add corresponding elements from two arrays in parallel, achieving up to 8x speedup over scalar operations on aligned data.[34] AVX-512 extends this to 512-bit ZMM registers for even wider parallelism, supporting masked operations to handle irregular array lengths without branching.[34] In parallel architectures like GPUs, arrays are handled via Single Instruction, Multiple Threads (SIMT) models, where thousands of threads execute the same instruction on different data elements within thread blocks. NVIDIA's CUDA architecture organizes threads into warps of 32, scheduling them on streaming multiprocessors (SMs) that process array operations in lockstep, with shared memory providing low-latency access to contiguous array segments for coalesced global memory loads.[35] This SIMT approach suits array-heavy computations in shaders or compute kernels, such as matrix multiplications, by broadcasting instructions across warps while allowing divergence for conditional array access.[35] At the assembly level, array traversal leverages indexing modes for efficient iteration. For example, in x86-64 NASM assembly, a simple loop to sum elements of a 32-bit integer array starting at addressarray with length n might use:

section .data

[array](/page/Array) dd 1, 2, 3, 4 ; Example [array](/page/Array)

n equ 4

section .bss

sum resd 1

section .text

global _start

_start:

mov ecx, n ; Loop counter

mov eax, 0 ; Sum accumulator

mov esi, [array](/page/Array) ; Base [address](/page/Address)

loop:

mov ebx, [esi + ecx*4 - 4] ; Load element: base + (index-1)*scale

add eax, ebx ; Accumulate

loop loop ; Decrement ecx and jump if !=0

mov [sum], eax ; Store result

section .data

[array](/page/Array) dd 1, 2, 3, 4 ; Example [array](/page/Array)

n equ 4

section .bss

sum resd 1

section .text

global _start

_start:

mov ecx, n ; Loop counter

mov eax, 0 ; Sum accumulator

mov esi, [array](/page/Array) ; Base [address](/page/Address)

loop:

mov ebx, [esi + ecx*4 - 4] ; Load element: base + (index-1)*scale

add eax, ebx ; Accumulate

loop loop ; Decrement ecx and jump if !=0

mov [sum], eax ; Store result

Language Support

Single-Dimensional Arrays

Single-dimensional arrays, also known as one-dimensional or linear arrays, provide a fundamental mechanism in many programming languages for storing a fixed sequence of elements of the same type in contiguous memory locations. These arrays are declared with a specified size at compile time or runtime, enabling efficient access via zero-based indexing. Support for single-dimensional arrays varies across languages, with some offering built-in types and others relying on library implementations that mimic array behavior.[37][38] In C, single-dimensional arrays are declared using the syntaxtype name[size];, where size is a compile-time constant integer expression greater than zero, such as int a[10];, which allocates space for 10 integers. Initialization can occur simultaneously, for example, int a[5] = {1, 2, 3};, where the first three elements are set to the specified values and the remaining two default to zero. Partially initialized arrays have the remaining elements default to zero for numeric types, even in local scope. However, fully uninitialized local arrays contain indeterminate values, while those with static or global storage duration are zero-initialized. Java follows a similar built-in approach but uses object-oriented syntax: type[] name = new type[size];, like int[] a = new int[10];, which creates an array of 10 integers initialized to zero by default; alternatively, int[] a = {1, 2, 3}; provides explicit values, with the size inferred.[37][39]

Python does not have a native built-in array type for general use but employs lists as a dynamic proxy for single-dimensional sequences, declared as mylist = [] for an empty list or mylist = [1, 2, 3] for initialization with values; lists start empty with no default element values and support heterogeneous types, though they are often used homogeneously like arrays. In JavaScript, arrays are implemented as objects via the built-in Array constructor or literals, such as let a = new Array(10); for a sparse array of 10 undefined slots or let a = [1, 2, 3]; for explicit initialization; as a library-based structure, it allows mixed types and defaults to undefined for unset elements. These variations highlight built-in support in low-level languages like C and Java for static sizing versus higher-level, object-oriented proxies in Python and JavaScript.[38][40][41]

Single-dimensional arrays are commonly used to represent linear data structures, such as sequences of numbers or characters, and as buffers for temporary storage during computations. For instance, they store ordered collections like month names or precomputed values in algorithms, enabling efficient iteration and lookup by index. In data processing tasks, they hold elements like card decks for operations such as shuffling, providing a simple way to manage homogeneous lists without the overhead of more complex structures.[42][43][42]

Multi-Dimensional Arrays

Multi-dimensional arrays allow elements to be organized in multiple dimensions, extending the single-dimensional structure to represent data in grid-like formats such as matrices or images.[44] These arrays are particularly useful in numerical computing, where they model relationships across rows and columns, as seen in linear algebra operations or pixel data in image processing.[44] However, they often impose limitations like fixed dimensions at declaration time in statically typed languages, which can restrict flexibility for variable-sized data without resorting to dynamic structures.[37] The storage layout of multi-dimensional arrays varies by language, with row-major and column-major orders being the primary conventions. In row-major order, elements within each row are stored contiguously in memory, followed by the next row; this is the approach used in C, where a declaration likeint a[3][4]; allocates space for a 3-by-4 array in this linear fashion. Conversely, Fortran employs column-major order, storing elements of each column contiguously, which aligns with its historical focus on scientific computing and matrix operations.[45] This difference affects traversal efficiency, as accessing elements along the contiguous dimension incurs fewer cache misses.[46]

Access to elements in multi-dimensional arrays typically uses nested indexing, such as A[i][j] for a two-dimensional array, or a flattened index like A[i * cols + j] to map to the underlying one-dimensional storage. In Java, rectangular multi-dimensional arrays maintain uniform row lengths, declared as int[][] a = new int[3][4];, but jagged arrays—arrays of arrays with varying sub-array sizes—offer more flexibility for irregular data.[37] Python implements multi-dimensional arrays through nested lists, enabling dynamic sizing but without built-in contiguous storage guarantees unless using libraries like NumPy.[47] MATLAB provides native support for multi-dimensional arrays beyond two dimensions, optimized for vectorized operations in scientific applications.[44]

Indexing and Access Methods

Arrays employ indexing conventions that specify how elements are addressed, with the index origin determining the starting value for valid indices. In zero-based indexing, the first element is accessed at index 0, a convention adopted in languages like C and Java to align with memory offset calculations from the array's base address.[37] Conversely, one-based indexing begins at index 1, as seen in Fortran and MATLAB, reflecting historical influences from mathematical notation where sequences often start at 1.[48][49] For an array of length , the valid indices span from the origin to in zero-based systems or to in one-based systems, ensuring contiguous access without exceeding the allocated bounds.[50] This highest index of in zero-based arrays facilitates efficient pointer arithmetic, as the offset for the last element is simply element size. Access notations vary across languages but commonly use square brackets for subscripting, such as in C, Java, and Python, where denotes the index.[37] Some object-oriented contexts employ dot notation like for named properties, though this is less typical for numeric arrays and more for associative structures.[51] Functional notations, such as , appear in languages emphasizing pure functions, avoiding mutable subscript operators. Index types are predominantly integers, with languages specifying signed or unsigned variants for safety and range. In Java, indices must be of type , a signed 32-bit integer, while C permits integer expressions but often uses unsigned \text{size_t} for sizes to match allocation functions.[50] Rust enforces , an unsigned pointer-sized integer, for array and vector indexing to prevent underflow issues.[52] Some languages extend indices to enums that coerce to integers, enabling type-safe access in switch-like patterns, though this requires the enum to map to valid integer ranges. Basic range queries specify subarrays via start and end indices, often in loops or declarations, such as iterating from start to end-1 in zero-based systems to select elements to . In multi-dimensional arrays, such ranges apply per dimension, like for row from start_row to end_row and column from start_col to end_col.Bounds Checking and Safety

Bounds checking refers to the verification that array indices fall within the valid range before accessing elements, preventing errors such as buffer overflows that can lead to security vulnerabilities or program crashes.[53] In languages with built-in support, this is typically performed at runtime, though some incorporate static analysis for compile-time guarantees. The primary goal is to ensure memory safety by detecting invalid accesses early, but implementations vary by language to balance reliability and efficiency. In Java, bounds checking is mandatory at runtime for all array accesses, enforced by the Java Virtual Machine (JVM). If an index is invalid, the program throws anArrayIndexOutOfBoundsException, halting execution to prevent further damage.[54] This design prioritizes safety in managed environments, where the overhead is integrated into bytecode instructions like iaload for integer array loads.

C, by contrast, provides no automatic bounds checking, treating out-of-bounds access as undefined behavior (UB), which may result in a segmentation fault if the access violates memory protections enforced by the operating system. Programmers must manually ensure index validity, a common source of vulnerabilities in systems programming.

C++ offers flexibility through its standard library: the std::array container's operator[] performs no bounds check for performance, invoking UB on invalid access, while the at() method includes runtime verification and throws a std::out_of_range exception if the index is invalid. This allows developers to choose safety levels based on context.

Ada enforces bounds checking primarily at runtime, raising a Constraint_Error exception for invalid indices, but supports static analysis via tools like GNATprove in the SPARK subset, which can prove the absence of runtime errors at compile time for critical systems.[55][53]

Rust integrates bounds checking into its indexing operations on arrays and slices, panicking at runtime if an index exceeds the length, which terminates the thread to avoid unsafe memory access.[52] Its ownership model further enhances safety by preventing aliasing and use-after-free errors that could indirectly lead to bounds violations, ensuring compile-time enforcement of borrowing rules without garbage collection.[56]

The trade-off between bounds checking and performance is significant: unchecked access enables optimizations like faster execution in numerical computations, but enabling checks can increase runtime by 10-20% on average in optimized implementations, or over 100% in unoptimized code for array-heavy workloads.[57][58] Techniques such as compiler optimizations, including redundant check elimination and hardware-assisted verification, mitigate this overhead while preserving safety guarantees.[59]

Dynamic and Advanced Features

Dynamic arrays extend the basic array concept by allowing runtime resizing, which is essential for handling variable-sized data collections without predefined limits. In C++, the standard library providesstd::vector as a dynamic array implementation that automatically manages memory allocation and deallocation during resizing operations. When elements are added beyond the current capacity, std::vector reallocates a larger contiguous block of memory, typically doubling the size to achieve amortized constant-time insertions, and copies the existing elements to the new location. This resizing mechanism supports operations like push_back to append elements and pop_back to remove the last element, ensuring efficient growth and shrinkage.

Similarly, Python's built-in list type functions as a dynamic array, supporting append and pop operations that adjust the underlying array size as needed. The append method adds an element to the end of the list, potentially triggering an internal resize if the capacity is exceeded, while pop removes and returns an element, optionally from a specific index, and may shrink the list under certain conditions to optimize memory usage. These operations enable flexible list manipulation in Python, where lists serve as the primary mutable sequence type for dynamic data storage.[60]

Slicing provides a way to extract subarrays or views of an array, facilitating efficient access to contiguous or strided portions without always creating full copies. In Python, list slicing such as a[1:3] produces a new list containing references to the selected elements, resulting in a shallow copy that avoids duplicating the underlying objects but still allocates a new list structure. For more memory-efficient subarray views that do not copy data, NumPy arrays support slicing that returns a view into the original array's memory buffer, allowing modifications to affect the source array directly. This view-based slicing is particularly useful in numerical computing to minimize overhead when working with large datasets.[38][61]

Array algebra introduces higher-level operations on arrays, enabling element-wise computations and shape-compatible manipulations without explicit loops. In NumPy, element-wise operations like addition (+) or multiplication (*) apply the operator to corresponding elements of arrays of the same shape, producing a new array with the results. Broadcasting extends this capability to arrays of different shapes by virtually expanding the smaller array along compatible dimensions, such as adding a scalar to each element of a vector or aligning a row vector with a column vector for outer operations. This mechanism, rooted in vectorized computing, enhances performance in scientific applications by leveraging optimized C-level implementations.[62][63]

Strings often leverage array-like structures for storage and manipulation, treating sequences of characters as arrays in various languages. In C, strings are conventionally represented as null-terminated character arrays (char[]), where the array holds sequential characters ending with a null byte (\0), allowing direct indexing and modification like any array but requiring manual bounds management to prevent overflows. This approach integrates strings seamlessly into array operations, such as iterating over characters via array indices. In contrast, Java's String class models strings as immutable objects backed by a character array, ensuring that once created, the content cannot be altered, which promotes thread safety and enables string interning for memory efficiency. Access to the underlying characters occurs through methods like toCharArray(), which returns a mutable copy, distinguishing it from the fixed internal representation.[64]

Sparse arrays address scenarios where most elements are zero or default values, using non-contiguous storage to save memory by only allocating space for non-zero entries. Languages like Julia provide built-in support through the SparseArrays module, which implements formats such as compressed sparse row (CSR) for efficient access and operations on irregular data distributions. In Python, the SciPy library extends NumPy with sparse array classes like csr_matrix, enabling non-contiguous representations that store indices and values separately, ideal for applications in machine learning and scientific simulations where dense arrays would waste resources. These variants maintain array semantics while optimizing for sparsity, often with specialized arithmetic operations that propagate zeros implicitly.[65][66]

Performance Considerations

Time and Space Complexity

Arrays provide efficient access to elements through direct indexing, achieving constant time complexity of O(1) for retrieving or modifying an element at a known index, as the operation involves a simple arithmetic computation to locate the memory address.[67] Insertion and deletion operations in contiguous arrays, such as adding or removing an element at an arbitrary position, require shifting subsequent elements to maintain contiguity, resulting in O(n time complexity in the worst and average cases, where n is the number of elements.[67] Searching for an element in an unsorted array typically uses linear search, which examines elements sequentially and has O(n) time complexity in the worst and average cases. If the array is sorted, binary search can be employed, reducing the time complexity to O(log n) by repeatedly dividing the search space in half.[68][69] The space complexity of an array is O(n) to store n elements, reflecting the linear allocation of memory for the data itself. For dynamic arrays that support resizing, additional overhead exists due to over-allocation of capacity to amortize growth costs, typically maintaining a constant factor greater than 1 relative to the current size, though the asymptotic space remains O(n).[70] Multi-dimensional arrays, often implemented as flattened one-dimensional arrays in row-major or column-major order, inherit similar complexities: element access remains O(1) after computing the linear index via dimension strides, while insertion or deletion affects O(total elements) time due to shifting in the underlying contiguous storage, and space is O(product of dimensions).[69]| Operation | Time Complexity (Worst/Average) | Space Complexity |

|---|---|---|

| Access by index | O(1) | O(1) auxiliary |

| Insertion (arbitrary) | O(n) | O(1) auxiliary |

| Deletion (arbitrary) | O(n) | O(1) auxiliary |

| Linear search | O(n) | O(1) auxiliary |

| Binary search (sorted) | O(log n) | O(1) auxiliary |

| Storage | N/A | O(n) |