Recent from talks

Nothing was collected or created yet.

Array (data structure)

View on Wikipedia

This article needs additional citations for verification. (September 2008) |

In computer science, an array is a data structure consisting of a collection of elements (values or variables), of same memory size, each identified by at least one array index or key, a collection of which may be a tuple, known as an index tuple. In general, array is mutable and linear collection of same data type elements. An array is stored such that the position (memory address) of each element can be computed from its index tuple by a mathematical formula.[1][2][3] The simplest type of data structure is a linear array, also called a one-dimensional array.

For example, an array of ten 32-bit (4-byte) integer variables, with indices 0 through 9, may be stored as ten words at memory addresses 2000, 2004, 2008, ..., 2036, (in hexadecimal: 0x7D0, 0x7D4, 0x7D8, ..., 0x7F4) so that the element with index i has the address 2000 + (i × 4).[4]

The memory address of the first element of an array is called first address, foundation address, or base address.

Because the mathematical concept of a matrix can be represented as a two-dimensional grid, two-dimensional arrays are also sometimes called "matrices". In some cases the term "vector" is used in computing to refer to an array, although tuples rather than vectors are the more mathematically correct equivalent. Tables are often implemented in the form of arrays, especially lookup tables; the word "table" is sometimes used as a synonym of array.

Arrays are among the oldest and most important data structures, and are used by almost every program. They are also used to implement many other data structures, such as lists and strings. They effectively exploit the addressing logic of computers. In most modern computers and many external storage devices, the memory is a one-dimensional array of words, whose indices are their addresses. Processors, especially vector processors, are often optimized for array operations.

Arrays are useful mostly because the element indices can be computed at run time. Among other things, this feature allows a single iterative statement to process arbitrarily many elements of an array. For that reason, the elements of an array data structure are required to have the same size and should use the same data representation. The set of valid index tuples and the addresses of the elements (and hence the element addressing formula) are usually,[3][5] but not always,[2] fixed while the array is in use.

The term "array" may also refer to an array data type, a kind of data type provided by most high-level programming languages that consists of a collection of values or variables that can be selected by one or more indices computed at run-time. Array types are often implemented by array structures; however, in some languages they may be implemented by hash tables, linked lists, search trees, or other data structures.

The term is also used, especially in the description of algorithms, to mean associative array or "abstract array", a theoretical computer science model (an abstract data type or ADT) intended to capture the essential properties of arrays.

History

[edit]The first digital computers used machine-language programming to set up and access array structures for data tables, vector and matrix computations, and for many other purposes. John von Neumann wrote the first array-sorting program (merge sort) in 1945, during the building of the first stored-program computer.[6] Array indexing was originally done by self-modifying code, and later using index registers and indirect addressing. Some mainframes designed in the 1960s, such as the Burroughs B5000 and its successors, used memory segmentation to perform index-bounds checking in hardware.[7]

Assembly languages generally have no special support for arrays, other than what the machine itself provides. The earliest high-level programming languages, including FORTRAN (1957), Lisp (1958), COBOL (1960), and ALGOL 60 (1960), had support for multi-dimensional arrays, and so has C (1972). In C++ (1983), class templates exist for multi-dimensional arrays whose dimension is fixed at runtime[3][5] as well as for runtime-flexible arrays.[2]

Applications

[edit]Arrays are used to implement mathematical vectors and matrices, as well as other kinds of rectangular tables. Many databases, small and large, consist of (or include) one-dimensional arrays whose elements are records.

Arrays are used to implement other data structures, such as lists, heaps, hash tables, deques, queues, stacks, strings, and VLists. Array-based implementations of other data structures are frequently simple and space-efficient (implicit data structures), requiring little space overhead, but may have poor space complexity, particularly when modified, compared to tree-based data structures (compare a sorted array to a search tree).

One or more large arrays are sometimes used to emulate in-program dynamic memory allocation, particularly memory pool allocation. Historically, this has sometimes been the only way to allocate "dynamic memory" portably.

Arrays can be used to determine partial or complete control flow in programs, as a compact alternative to (otherwise repetitive) multiple IF statements. In this context, they are known as control tables and are used in conjunction with a purpose-built interpreter whose control flow is altered according to values contained in the array. The array may contain subroutine pointers (or relative subroutine numbers that can be acted upon by SWITCH statements) that direct the path of the execution of the program.

Element identifier and addressing formulas

[edit]When data objects are stored in an array, individual objects are selected by an index that is usually a non-negative scalar integer. Indexes are also called subscripts. An index maps the array value to a stored object.

There are three ways in which the elements of an array can be indexed:

- 0 (zero-based indexing)

- The first element of the array is indexed by subscript of 0.[8]

- 1 (one-based indexing)

- The first element of the array is indexed by subscript of 1.

- n (n-based indexing)

- The base index of an array can be freely chosen. Usually programming languages allowing n-based indexing also allow negative index values and other scalar data types like enumerations, or characters may be used as an array index.

Using zero based indexing is the design choice of many influential programming languages, including C, Java and Lisp. This leads to simpler implementation where the subscript refers to an offset from the starting position of an array, so the first element has an offset of zero.

Arrays can have multiple dimensions, thus it is not uncommon to access an array using multiple indices. For example, a two-dimensional array A with three rows and four columns might provide access to the element at the 2nd row and 4th column by the expression A[1][3] in the case of a zero-based indexing system. Thus two indices are used for a two-dimensional array, three for a three-dimensional array, and n for an n-dimensional array.

The number of indices needed to specify an element is called the dimension, dimensionality, or rank of the array.

In standard arrays, each index is restricted to a certain range of consecutive integers (or consecutive values of some enumerated type), and the address of an element is computed by a "linear" formula on the indices.

One-dimensional arrays

[edit]

A one-dimensional array (or single dimension array) is a type of linear array. Accessing its elements involves a single subscript which can either represent a row or column index.

As an example consider the C declaration int anArrayName[10]; which declares a one-dimensional array of ten integers. Here, the array can store ten elements of type int . This array has indices starting from zero through nine. For example, the expressions anArrayName[0] and anArrayName[9] are the first and last elements respectively.

For a vector with linear addressing, the element with index i is located at the address B + c · i, where B is a fixed base address and c a fixed constant, sometimes called the address increment or stride.

If the valid element indices begin at 0, the constant B is simply the address of the first element of the array. For this reason, the C programming language specifies that array indices always begin at 0; and many programmers will call that element "zeroth" rather than "first".

However, one can choose the index of the first element by an appropriate choice of the base address B. For example, if the array has five elements, indexed 1 through 5, and the base address B is replaced by B + 30c, then the indices of those same elements will be 31 to 35. If the numbering does not start at 0, the constant B may not be the address of any element.

Multidimensional arrays

[edit]

For a multidimensional array, the element with indices i,j would have address B + c · i + d · j, where the coefficients c and d are the row and column address increments, respectively.

More generally, in a k-dimensional array, the address of an element with indices i1, i2, ..., ik is

- B + c1 · i1 + c2 · i2 + … + ck · ik.

For example: int a[2][3];

This means that array a has 2 rows and 3 columns, and the array is of integer type. Here we can store 6 elements they will be stored linearly but starting from first row linear then continuing with second row. The above array will be stored as a11, a12, a13, a21, a22, a23.

This formula requires only k multiplications and k additions, for any array that can fit in memory. Moreover, if any coefficient is a fixed power of 2, the multiplication can be replaced by bit shifting.

The coefficients ck must be chosen so that every valid index tuple maps to the address of a distinct element.

If the minimum legal value for every index is 0, then B is the address of the element whose indices are all zero. As in the one-dimensional case, the element indices may be changed by changing the base address B. Thus, if a two-dimensional array has rows and columns indexed from 1 to 10 and 1 to 20, respectively, then replacing B by B + c1 − 3c2 will cause them to be renumbered from 0 through 9 and 4 through 23, respectively. Taking advantage of this feature, some languages (like FORTRAN 77) specify that array indices begin at 1, as in mathematical tradition while other languages (like Fortran 90, Pascal and Algol) let the user choose the minimum value for each index.

Dope vectors

[edit]The addressing formula is completely defined by the dimension d, the base address B, and the increments c1, c2, ..., ck. It is often useful to pack these parameters into a record called the array's descriptor, stride vector, or dope vector.[2][3] The size of each element, and the minimum and maximum values allowed for each index may also be included in the dope vector. The dope vector is a complete handle for the array, and is a convenient way to pass arrays as arguments to procedures. Many useful array slicing operations (such as selecting a sub-array, swapping indices, or reversing the direction of the indices) can be performed very efficiently by manipulating the dope vector.[2]

Compact layouts

[edit]Often the coefficients are chosen so that the elements occupy a contiguous area of memory. However, that is not necessary. Even if arrays are always created with contiguous elements, some array slicing operations may create non-contiguous sub-arrays from them.

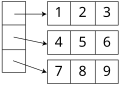

There are two systematic compact layouts for a two-dimensional array. For example, consider the matrix

In the row-major order layout (adopted by C for statically declared arrays), the elements in each row are stored in consecutive positions and all of the elements of a row have a lower address than any of the elements of a consecutive row:

1 2 3 4 5 6 7 8 9

In column-major order (traditionally used by Fortran), the elements in each column are consecutive in memory and all of the elements of a column have a lower address than any of the elements of a consecutive column:

1 4 7 2 5 8 3 6 9

For arrays with three or more indices, "row major order" puts in consecutive positions any two elements whose index tuples differ only by one in the last index. "Column major order" is analogous with respect to the first index.

In systems which use processor cache or virtual memory, scanning an array is much faster if successive elements are stored in consecutive positions in memory, rather than sparsely scattered. This is known as spatial locality, which is a type of locality of reference. Many algorithms that use multidimensional arrays will scan them in a predictable order. A programmer (or a sophisticated compiler) may use this information to choose between row- or column-major layout for each array. For example, when computing the product A·B of two matrices, it would be best to have A stored in row-major order, and B in column-major order.

Resizing

[edit]Static arrays have a size that is fixed when they are created and consequently do not allow elements to be inserted or removed. However, by allocating a new array and copying the contents of the old array to it, it is possible to effectively implement a dynamic version of an array; see dynamic array. If this operation is done infrequently, insertions at the end of the array require only amortized constant time.

Some array data structures do not reallocate storage, but do store a count of the number of elements of the array in use, called the count or size. This effectively makes the array a dynamic array with a fixed maximum size or capacity; Pascal strings are examples of this.

Non-linear formulas

[edit]More complicated (non-linear) formulas are occasionally used. For a compact two-dimensional triangular array, for instance, the addressing formula is a polynomial of degree 2.

Efficiency

[edit]Both store and select take (deterministic worst case) constant time. Arrays take linear (O(n)) space in the number of elements n that they hold.

In an array with element size k and on a machine with a cache line size of B bytes, iterating through an array of n elements requires the minimum of ceiling(nk/B) cache misses, because its elements occupy contiguous memory locations. This is roughly a factor of B/k better than the number of cache misses needed to access n elements at random memory locations. As a consequence, sequential iteration over an array is noticeably faster in practice than iteration over many other data structures, a property called locality of reference (this does not mean however, that using a perfect hash or trivial hash within the same (local) array, will not be even faster - and achievable in constant time). Libraries provide low-level optimized facilities for copying ranges of memory (such as memcpy) which can be used to move contiguous blocks of array elements significantly faster than can be achieved through individual element access. The speedup of such optimized routines varies by array element size, architecture, and implementation.

Memory-wise, arrays are compact data structures with no per-element overhead. There may be a per-array overhead (e.g., to store index bounds) but this is language-dependent. It can also happen that elements stored in an array require less memory than the same elements stored in individual variables, because several array elements can be stored in a single word; such arrays are often called packed arrays. An extreme (but commonly used) case is the bit array, where every bit represents a single element. A single octet can thus hold up to 256 different combinations of up to 8 different conditions, in the most compact form.

Array accesses with statically predictable access patterns are a major source of data parallelism.

Comparison with other data structures

[edit]| Peek (index) |

Mutate (insert or delete) at … | Excess space, average | |||

|---|---|---|---|---|---|

| Beginning | End | Middle | |||

| Linked list | Θ(n) | Θ(1) | Θ(1), known end element; Θ(n), unknown end element |

Θ(n) | Θ(n) |

| Array | Θ(1) | — | — | — | 0 |

| Dynamic array | Θ(1) | Θ(n) | Θ(1) amortized | Θ(n) | Θ(n)[9] |

| Balanced tree | Θ(log n) | Θ(log n) | Θ(log n) | Θ(log n) | Θ(n) |

| Random-access list | Θ(log n)[10] | Θ(1) | —[10] | —[10] | Θ(n) |

| Hashed array tree | Θ(1) | Θ(n) | Θ(1) amortized | Θ(n) | Θ(√n) |

Dynamic arrays or growable arrays are similar to arrays but add the ability to insert and delete elements; adding and deleting at the end is particularly efficient. However, they reserve linear (Θ(n)) additional storage, whereas arrays do not reserve additional storage.

Associative arrays provide a mechanism for array-like functionality without huge storage overheads when the index values are sparse. For example, an array that contains values only at indexes 1 and 2 billion may benefit from using such a structure. Specialized associative arrays with integer keys include Patricia tries, Judy arrays, and van Emde Boas trees.

Balanced trees require O(log n) time for indexed access, but also permit inserting or deleting elements in O(log n) time,[11] whereas growable arrays require linear (Θ(n)) time to insert or delete elements at an arbitrary position.

Linked lists allow constant time removal and insertion in the middle but take linear time for indexed access. Their memory use is typically worse than arrays, but is still linear.

An Iliffe vector is an alternative to a multidimensional array structure. It uses a one-dimensional array of references to arrays of one dimension less. For two dimensions, in particular, this alternative structure would be a vector of pointers to vectors, one for each row(pointer on c or c++). Thus an element in row i and column j of an array A would be accessed by double indexing (A[i][j] in typical notation). This alternative structure allows jagged arrays, where each row may have a different size—or, in general, where the valid range of each index depends on the values of all preceding indices. It also saves one multiplication (by the column address increment) replacing it by a bit shift (to index the vector of row pointers) and one extra memory access (fetching the row address), which may be worthwhile in some architectures.

Dimension

[edit]The dimension of an array is the number of indices needed to select an element. Thus, if the array is seen as a function on a set of possible index combinations, it is the dimension of the space of which its domain is a discrete subset. Thus a one-dimensional array is a list of data, a two-dimensional array is a rectangle of data,[12] a three-dimensional array a block of data, etc.

This should not be confused with the dimension of the set of all matrices with a given domain, that is, the number of elements in the array. For example, an array with 5 rows and 4 columns is two-dimensional, but such matrices form a 20-dimensional space. Similarly, a three-dimensional vector can be represented by a one-dimensional array of size three.

See also

[edit]References

[edit]- ^ Black, Paul E. (13 November 2008). "array". Dictionary of Algorithms and Data Structures. National Institute of Standards and Technology. Retrieved 22 August 2010.

- ^ a b c d e Bjoern Andres; Ullrich Koethe; Thorben Kroeger; Hamprecht (2010). "Runtime-Flexible Multi-dimensional Arrays and Views for C++98 and C++0x". arXiv:1008.2909 [cs.DS].

- ^ a b c d Garcia, Ronald; Lumsdaine, Andrew (2005). "MultiArray: a C++ library for generic programming with arrays". Software: Practice and Experience. 35 (2): 159–188. doi:10.1002/spe.630. ISSN 0038-0644. S2CID 10890293.

- ^ David R. Richardson (2002), The Book on Data Structures. iUniverse, 1112 pages. ISBN 0-595-24039-9, ISBN 978-0-595-24039-5.

- ^ a b Veldhuizen, Todd L. (December 1998). Arrays in Blitz++. Computing in Object-Oriented Parallel Environments. Lecture Notes in Computer Science. Vol. 1505. Berlin: Springer. pp. 223–230. doi:10.1007/3-540-49372-7_24. ISBN 978-3-540-65387-5.[dead link]

- ^ Knuth, Donald (1998). Sorting and Searching. The Art of Computer Programming. Vol. 3. Reading, MA: Addison-Wesley Professional. p. 159.

- ^ Levy, Henry M. (1984), Capability-based Computer Systems, Digital Press, p. 22, ISBN 9780932376220.

- ^ "Array Code Examples - PHP Array Functions - PHP code". Computer Programming Web programming Tips. Archived from the original on 13 April 2011. Retrieved 8 April 2011.

In most computer languages array index (counting) starts from 0, not from 1. Index of the first element of the array is 0, index of the second element of the array is 1, and so on. In array of names below you can see indexes and values.

- ^ Brodnik, Andrej; Carlsson, Svante; Sedgewick, Robert; Munro, JI; Demaine, ED (1999), Resizable Arrays in Optimal Time and Space (Technical Report CS-99-09) (PDF), Department of Computer Science, University of Waterloo

- ^ a b c Chris Okasaki (1995). "Purely Functional Random-Access Lists". Proceedings of the Seventh International Conference on Functional Programming Languages and Computer Architecture: 86–95. doi:10.1145/224164.224187.

- ^ "Counted B-Trees".

- ^ "Two-Dimensional Arrays \ Processing.org". processing.org. Retrieved 1 May 2020.

External links

[edit] Data Structures/Arrays at Wikibooks

Data Structures/Arrays at Wikibooks

Array (data structure)

View on GrokipediaFundamentals

Definition and Characteristics

An array is a data structure consisting of a collection of elements of the same type stored at contiguous memory locations, each identifiable by at least one index.[9][10] Key characteristics of arrays include their fixed size in static implementations, where the capacity is specified at creation and remains immutable, and the homogeneity of elements, requiring all items to share the identical data type to ensure uniform memory allocation per element.[9][10] Arrays enable random access through indices, which conventionally begin at 0 in most modern programming languages such as C++ and Python, although languages like Fortran default to starting at 1.[9][11] The memory layout forms a linear, contiguous block, facilitating direct computation of an element's address from its index and achieving O(1) average-case access time without sequential traversal.[9][10] This contrasts with sequential structures like linked lists, where elements are not stored contiguously and require traversal from a starting point, resulting in O(n) access time in the worst case.[10] Example:In pseudocode, a one-dimensional integer array of size 5 might be declared and initialized as:

int arr[5] = {1, 2, 3, 4, 5};

int arr[5] = {1, 2, 3, 4, 5};

arr[0] retrieves 1, arr[2] retrieves 3, and indices range from 0 to 4.[9]

Multidimensional arrays build upon this linear foundation by logically arranging elements into rows and columns (or higher dimensions) within the same contiguous memory space.[9]

Basic Operations

Arrays provide fundamental operations for managing collections of elements stored in contiguous memory locations. These operations enable efficient manipulation of data while respecting the fixed-size nature of basic arrays. Access and modification are typically constant-time operations, achieving O(1) average-case performance due to direct indexing.[12]Initialization

Initialization involves allocating space for a fixed number of elements of a specified type and setting their initial values. In many programming languages, such as Java, newly created arrays are automatically filled with default values, like zero for numeric types or null for references.[13] Alternatively, explicit values can be provided during declaration to set specific initial contents. This process establishes the array's size, which cannot be changed without reallocation in fixed-size implementations.[14] Pseudocode for basic initialization with defaults:DECLARE array_name AS type[size]

# Elements are set to default values (e.g., 0 for integers)

DECLARE array_name AS type[size]

# Elements are set to default values (e.g., 0 for integers)

DECLARE array_name AS type[] = {value1, value2, ..., valueN}

# Size is inferred from the number of values

DECLARE array_name AS type[] = {value1, value2, ..., valueN}

# Size is inferred from the number of values

Access

Accessing an element in an array uses direct indexing, where the element at position i (starting from 0) is retrieved using the syntaxarray_name[i]. This operation allows reading the value without scanning the entire structure, making it highly efficient for random lookups. Indices range from 0 to size-1, and accessing beyond these bounds results in runtime errors in languages with bounds checking.[15]

Pseudocode for accessing an element:

value = array_name[index]

# Retrieves the element at the specified index

value = array_name[index]

# Retrieves the element at the specified index

Modification

Modification in fixed-size arrays primarily involves updating existing elements via assignment, which replaces the value at a specific index. For insertion at the end, if the array is not full, an assignment can be used; however, insertion in a full fixed-size array is not possible without resizing, which involves creating a new larger array and copying elements (not covered here). For insertions not at the end, even when space is available, shifting subsequent elements is required, which is O(n) time. Deletion at the end simply involves setting the last element to a default value or marking it as unused, but removing from the middle necessitates shifting to fill the gap, limiting practicality in fixed structures. These constraints highlight the trade-offs of fixed sizing for simplicity and speed.[14][15] Pseudocode for updating an element:array_name[index] = new_value

# Assigns the new value to the specified position

array_name[index] = new_value

# Assigns the new value to the specified position

IF current_size < max_size THEN

array_name[current_size] = new_value

current_size = current_size + 1

ELSE

# Error: array is full; insertion requires resizing, not possible in fixed-size arrays

END IF

IF current_size < max_size THEN

array_name[current_size] = new_value

current_size = current_size + 1

ELSE

# Error: array is full; insertion requires resizing, not possible in fixed-size arrays

END IF

IF current_size > 0 THEN

current_size = current_size - 1

# Optional: set array_name[current_size] to default

END IF

IF current_size > 0 THEN

current_size = current_size - 1

# Optional: set array_name[current_size] to default

END IF

Traversal

Traversal systematically visits each element in the array, often using a loop over indices to process or inspect the contents. This is commonly implemented with a for-loop that iterates from the first to the last index, enabling operations like summing values or searching for elements. Traversal takes linear time proportional to the array size but provides sequential access without gaps.[15][14] Pseudocode for basic traversal:FOR i = 0 TO array_name.length - 1

PROCESS array_name[i]

END FOR

# Visits each element in order

FOR i = 0 TO array_name.length - 1

PROCESS array_name[i]

END FOR

# Visits each element in order

Common Errors

A frequent issue in array operations is the off-by-one error, where indices are miscalculated, such as using a loop condition ofi <= length instead of i < length, leading to attempts to access non-existent elements beyond the valid range of 0 to length-1. This can cause runtime exceptions or undefined behavior, emphasizing the importance of careful index management during access, modification, and traversal.[16]

Historical Development

Origins in Early Computing

The concept of arrays in computing traces its roots to 19th-century mathematical notation, particularly the development of matrices as rectangular arrangements of numbers for solving systems of linear equations. British mathematician James Joseph Sylvester coined the term "matrix" in 1850 to describe such oblong arrays, building on earlier work by Arthur Cayley who formalized matrix algebra in 1858, providing a precursor to structured data storage in computational contexts.[17] These mathematical arrays influenced early computing by enabling the representation of multidimensional numerical data, though they remained theoretical until electronic machines emerged in the 1940s. A key milestone in computing-specific array usage occurred with the ENIAC (Electronic Numerical Integrator and Computer), completed in 1945, which employed fixed function tables as one- and two-dimensional arrays to store and retrieve numerical values for ballistic calculations. These tables functioned as static arrays of up to 104 signed 10-digit numbers, programmed via plugboards and switches to hold precomputed data or functions, allowing batch processing of iterative computations without reprogramming the entire machine.[18] Around the same time, John von Neumann's 1945 report on the EDVAC design described the first array-sorting algorithm (merge sort), treating memory as a linear array for efficient data manipulation in stored-program computers. (Note: This links to a discussion referencing the report; original at http://www.virtualtravelog.net/wp/wp-content/media/2003-08-TheFirstDraft.pdf) The introduction of arrays in high-level programming languages solidified their role in early computing, notably with Fortran (Formula Translation) released by IBM in 1957. Fortran provided subscript notation for declaring one-, two-, or three-dimensional arrays as indexable variables, facilitating scientific computations on machines like the IBM 704 by allowing programmers to handle batches of numerical data without assembly-level memory addressing.[19] For instance, early UNIVAC systems, starting with the UNIVAC I in 1951, utilized fixed arrays in their programming for tabulating large numerical datasets during the 1950 U.S. Census, enabling efficient batch processing of population statistics through contiguous memory blocks.[20] Early hardware imposed significant limitations on arrays, primarily through fixed memory allocation that precluded dynamic resizing during execution. In machines like ENIAC and UNIVAC, array sizes had to be predetermined at setup or compile time, leading to inefficient use of scarce resources—either wasting memory with oversized allocations or requiring manual reconfiguration for varying data volumes—without support for runtime expansion seen in later systems.[21]Evolution in Programming Languages

The evolution of arrays in programming languages from the 1960s onward marked a shift toward more flexible, expressive, and safe abstractions, building on early concepts to support diverse computational needs. In ALGOL 60, introduced in 1960, arrays were declared with explicit bound pairs specifying lower and upper limits for each dimension, allowing arbitrary integer bounds rather than a fixed starting point; for instance, an array could be defined asarray a[1:10], with the lower bound typically set to 1 in examples, enabling rectangular multidimensional structures without assuming zero-based indexing.[22] This design emphasized generality for algorithmic expression. By the 1970s, the C language, developed by Dennis Ritchie at Bell Labs between 1971 and 1973, adopted zero-based indexing for arrays, where the first element is accessed at index 0, and innovated by treating array names in expressions as pointers to their first element—e.g., a[i] equivalent to *(a + i)—facilitating efficient memory access and pointer arithmetic while inheriting contiguous storage from predecessors like B.[23]

Indexing conventions diverged across languages, reflecting influences from mathematical traditions and hardware constraints. Zero-based indexing became standard in systems-oriented languages like C and later Java, where arrays start at index 0 to align with pointer offsets and simplify boundary calculations.[23] In contrast, scientific computing languages such as Fortran (from its 1957 origins but standardized in subsequent revisions) and MATLAB default to one-based indexing, matching human intuition for counting and matrix notation; for example, Fortran arrays begin at 1 unless explicitly adjusted, as in INTEGER :: arr(1:5).[24][25]

From the 1980s, languages increasingly supported dynamic arrays to handle variable sizes at runtime, addressing the limitations of static declarations. Java's ArrayList, part of the Java Collections Framework since JDK 1.2 in 1998, implements a resizable array that automatically grows its capacity (initially 10 elements, expanding by 50% increments) when elements are added, providing dynamic behavior over fixed-size arrays while maintaining zero-based indexing. Similarly, Python's lists, introduced in Python 0.9 in 1991, function as dynamic arrays under the hood, supporting append, insert, and resize operations with over-allocation for amortized O(1) additions, and zero-based indexing for access like lst[0].[26] Language-specific features further diversified array handling; C++ allows multidimensional arrays via declarations like int arr[3][4];, computing sizes at compile time with row-major layout, while Python's NumPy library provides built-in support for efficient n-dimensional arrays (ndarrays) since 2006, enabling advanced operations like broadcasting and vectorization on structures like np.array([[1,2],[3,4]]).[27]

Standardization efforts solidified array semantics for portability. The ISO C99 standard (ISO/IEC 9899:1999) formalized fixed-size arrays with zero-based indexing and introduced variable-length arrays (VLAs) whose sizes are determined at runtime, e.g., int n = 5; int arr[n];, though with restrictions on recursion and storage duration.[28] The ISO C++ standards, evolving from 1998 onward, built on this with templates for generic arrays but emphasized manual memory management until later additions like std::array for fixed-size, bounds-checked alternatives. More recent languages like Rust (first stable in 2015) evolved toward safer array types, distinguishing fixed-size arrays [T; N] from dynamic slices &[T], which provide runtime bounds checking to prevent overflows, as in let slice = &arr[0..3];, reducing common errors in imperative code.[29][30]

Arrays also adapted to programming paradigms, influencing mutability models. In imperative languages like C and Java, arrays are mutable by default, allowing in-place modifications for efficiency in loops and algorithms. Functional languages, however, prioritize immutability to enable pure functions and parallelism; for instance, Haskell's array libraries, such as the array package since 1998, implement immutable arrays using persistent data structures that share unchanged parts across updates, ensuring referential transparency without side effects.[31] This contrast highlights arrays' role in balancing performance with safety across paradigms.

Addressing Mechanisms

One-Dimensional Arrays

A one-dimensional array, also known as a linear array, stores its elements in contiguous blocks of memory, enabling direct computation of each element's location using a simple formula. The memory address of the element at index is given by \text{base_address} + i \times \text{element_size}, where \text{base_address} is the starting memory location of the array and \text{element_size} represents the number of bytes per element, such as 4 bytes for an integer type on a 32-bit system.[32] This formula assumes zero-based indexing, which is standard in languages like C and Java, allowing efficient random access without traversing the structure.[33] For example, consider an array of integers with a base address of 1000 bytes and an element size of 4 bytes. The address of the element at index 3 is calculated as .[34] Indices in a one-dimensional array of elements typically range from 0 to , ensuring all positions are uniquely addressable within the allocated space.[35] The contiguous allocation of elements in memory enhances performance by exploiting spatial locality, where accessing one element often prefetches neighboring ones into the processor cache, reducing latency for sequential traversals.[36] In low-level languages like C, this address computation is often handled via pointer arithmetic, as shown in the following pseudocode:#include <stddef.h>

size_t compute_address(void* base, size_t index, size_t element_size) {

return (size_t)((char*)base + index * element_size);

}

#include <stddef.h>

size_t compute_address(void* base, size_t index, size_t element_size) {

return (size_t)((char*)base + index * element_size);

}

Multidimensional Arrays

A multidimensional array logically organizes data into multiple dimensions, such as rows and columns for a two-dimensional array, but is physically stored as a contiguous block in linear memory to facilitate efficient access.[38] This mapping from logical structure to physical layout enables the use of one-dimensional addressing mechanisms while preserving the multi-index abstraction.[39] In row-major order, commonly used in languages like C, the elements of each row are stored contiguously, followed by the next row.[38] The memory address of an element at position in a 2D array with rows and columns (0-based indexing) is calculated as , where is the starting address and is the size of each element in bytes.[38] For example, in a 3×3 array of integers (each 4 bytes) starting at address 0, the element at (1, 1) has address , reflecting a row stride of bytes between corresponding elements in adjacent rows.[38] In contrast, column-major order, as employed in Fortran, stores elements column by column, with the address for given by .[40] This results in contiguous storage along columns, making column-wise traversals more cache-efficient in Fortran programs.[38] For higher-dimensional arrays, the addressing generalizes using strides for each axis, where the stride for dimension is the product of the sizes of all higher dimensions multiplied by the element size.[38] In an -dimensional array with dimensions , the address of element in row-major order is , with and .[38] Column-major order reverses the stride computation, starting from the highest dimension.[40] Memory implications in multidimensional arrays often include padding to maintain alignment, as hardware typically requires elements or rows to start at addresses that are multiples of the element size or cache line boundaries.[41] For instance, in a row-major 2D array, padding may be inserted after each row to align the start of the next row, preventing performance penalties from unaligned access in multi-dimensional structures.[41]Layout Variations

Dope Vectors

A dope vector is an auxiliary data structure, typically implemented as a record or struct, that stores metadata essential for managing and accessing arrays in memory. It includes key elements such as the base pointer to the array's data, the shape (dimensions and extents), strides (the memory offset between consecutive elements along each dimension), and bounds (lower and upper limits for each dimension). This structure allows compilers and runtimes to handle arrays abstractly without embedding all details in the code itself.[42] The primary purpose of a dope vector is to support flexible array operations like dynamic bounds adjustment, slicing, and creating views that reference subsets of the data without copying it, thereby improving efficiency in memory usage and computation. For instance, in NumPy, the ndarray object functions similarly to a dope vector by maintaining attributes like shape, strides, and a data pointer, enabling zero-copy operations such as array slicing where a view shares the underlying buffer but adjusts metadata to represent the subset. This approach is crucial for high-performance numerical computing, as it avoids unnecessary data duplication during manipulations.[27] Typical components of a dope vector encompass: the data pointer (address of the first element), lower and upper bounds for each dimension (to define the valid index range), strides per dimension (to compute offsets for non-contiguous access), the total number of elements (for size checks), and sometimes the element type or rank (number of dimensions). These elements collectively form a compact descriptor that can be passed to functions, allowing them to interpret the array's layout dynamically. In Fortran implementations, for example, the array descriptor (dope vector) for an allocatable array includes fields likebase_addr, dim (array of dimension info with lower bound, extent, and stride), and data for the actual storage.[43]

To illustrate, consider a 2D array A of shape (5, 7) stored in row-major order with a stride of 7 for the first dimension and 1 for the second. A dope vector for the slice A[1:3, 2:4] would update the base pointer to offset by (17 + 21) elements from the original, set lower bounds to (0,0) in the view's local indexing, upper bounds to (2,2), and retain the original strides (7,1) to access elements without copying. This adjustment ensures the view behaves as a standalone (2,2) array while pointing to the original data.

Historically, dope vectors emerged in the implementation of Algol 68, where they served as descriptors to manage flexible array modes, including dynamic sizing and subscripting, by storing bounds and offsets in a runtime structure passed to procedures. This design influenced subsequent languages, with modern usage prominent in Fortran for handling assumed-shape and allocatable arrays, where the compiler generates dope vectors to facilitate interoperability and slicing. Such structures integrate with resizing by updating metadata rather than the data buffer itself.

The advantages of dope vectors include enabling support for irregular arrays (e.g., non-zero lower bounds or strided access) without requiring full data reallocation, facilitating automatic bounds checking to prevent overflows, and simplifying array passing in subroutine calls by encapsulating all necessary layout information. These features enhance both safety and performance in array-centric applications.[42]

Compact Storage Techniques

Compact storage techniques for arrays aim to minimize memory usage by encoding data more densely than standard contiguous layouts, particularly for booleans, small integers, or sparse structures.[44] One prominent method is bit-packing, which stores multiple small values—such as booleans or integers requiring fewer than 8 bits—within a single byte or word by manipulating individual bits./10:_Sets/10.02:_Bit_array_implementation) For instance, eight boolean values can be packed into one byte, where each bit represents true (1) or false (0), using bitwise operations like shifts and masks for packing and extraction.[44] To access a bit-packed boolean array, implementation typically involves calculating the byte index and bit position, then applying masks. The following pseudocode illustrates setting and getting a boolean at indexi in a bit-packed array stored as bytes:

function get_bit(array, i):

byte_index = i // 8

bit_pos = i % 8

return (array[byte_index] & (1 << bit_pos)) != 0

function set_bit(array, i, value):

byte_index = i // 8

bit_pos = i % 8

mask = ~(1 << bit_pos)

if value:

array[byte_index] |= (1 << bit_pos)

else:

array[byte_index] &= mask

function get_bit(array, i):

byte_index = i // 8

bit_pos = i % 8

return (array[byte_index] & (1 << bit_pos)) != 0

function set_bit(array, i, value):

byte_index = i // 8

bit_pos = i % 8

mask = ~(1 << bit_pos)

if value:

array[byte_index] |= (1 << bit_pos)

else:

array[byte_index] &= mask

\0), eliminating the need for explicit length storage and allowing multiple strings to share an array with padding if necessary.[45] Alternatively, length-prefixed packing stores the string length in a preceding field (e.g., a byte or integer), followed by the characters, which supports variable-length strings without scanning for terminators but adds a small overhead for the prefix.[46]

In sparse matrices, diagonal storage techniques further enhance compactness by storing only non-zero elements along the matrix diagonals, using a two-dimensional array where rows represent diagonal offsets and columns hold the values.[47] This format is particularly efficient for banded or diagonally dominant matrices, as it avoids allocating space for zero entries off the diagonals, potentially reducing storage from O(n²) to O(k n) where k is the bandwidth./08:_Sparse_Matrices/8.02:_Sparse_Matrix_Formats)

These techniques trade access speed for space savings: bit operations in packing introduce computational overhead, such as shifts and masks that slow reads and writes compared to byte-aligned access, while diagonal storage may require offset calculations that complicate random access in non-banded cases.[44] Nonetheless, they prove valuable for memory-constrained environments with dense small data or structured sparsity, achieving up to 8x compression for booleans./10:_Sets/10.02:_Bit_array_implementation)

Dynamic Features

Resizing Strategies

Arrays can be classified as static or dynamic based on their size flexibility. Static arrays have a fixed size determined at compile-time or allocation, limiting them to that capacity without runtime adjustment.[48] In contrast, dynamic arrays allow size changes at runtime to accommodate varying numbers of elements, typically through reallocation mechanisms.[48] A common resizing strategy for dynamic arrays employs amortized analysis to achieve efficient average-case performance for insertions. When the array fills to capacity, it reallocates a larger block, often by multiplying the current size by a growth factor such as 2, resulting in capacities that double (e.g., from 4 to 8 to 16). This geometric progression ensures that the amortized cost of appending an element is O(1), as the total cost of n insertions, including occasional resizes, is O(n). The reallocation process involves allocating a new array of size , copying all existing elements to the new array, and freeing the old one. Growth factors between 1.5 and 2 are typical, balancing time and space efficiency; factors closer to the golden ratio can optimize both asymptotically.[48][49] Shrinking strategies complement growth to manage memory when elements are removed. If the array's utilization falls below a load factor threshold, such as 25% full, the capacity is typically halved by reallocating a smaller array, copying elements, and freeing excess space. This prevents excessive memory waste while maintaining amortized O(1) costs for deletions.[50] In practice, these strategies appear in standard library implementations. Java's ArrayList uses a growth factor of 1.5, calculating new capacity as , and shrinks only explicitly via trimToSize, though automatic shrinking is not standard. C++'s std::vector follows the C++ standard's requirement for amortized constant-time insertions but leaves the exact factor to implementations; GNU libstdc++ doubles the capacity (factor of 2), while Microsoft Visual C++ uses 1.5. Resizing incurs O(n) worst-case time due to element copying, but amortization spreads this cost.[51][52][53][54]Bounds and Indexing

Bounds checking in arrays involves verifying that an index value falls within the valid range, typically ensuring , to prevent access to memory outside the allocated array bounds.[55] This verification is crucial for maintaining program safety and avoiding undefined or erroneous behavior during array operations. Without proper checks, attempts to access elements beyond the array's limits can lead to severe issues, including data corruption or security vulnerabilities. Many modern programming languages implement runtime bounds checking automatically. In Java, the Java Virtual Machine enforces bounds validation on every array access, throwing anArrayIndexOutOfBoundsException if the index is negative or exceeds or equals the array length.[56] Similarly, Python performs runtime checks for sequence types like lists, raising an IndexError when an index is out of range, such as attempting to access lst[-1] on an empty list or lst[5] on a list of length 3.[57] These mechanisms add overhead but ensure predictable error handling. In contrast, C does not provide built-in runtime bounds checking; accessing an array out of bounds results in undefined behavior as per the C standard, allowing the compiler to assume valid access and potentially optimize away related code.

Out-of-bounds access can cause critical failures, such as buffer overflows—where data spills into adjacent memory—or segmentation faults, which terminate the program due to invalid memory reads or writes.[58] A notable historical example is the 1988 Morris worm, which exploited a buffer overflow vulnerability in the fingerd daemon on VAX systems; the worm sent a 536-byte input to a 512-byte stack buffer without bounds validation, overwriting return addresses to execute arbitrary code and propagate across networks.[59] Such incidents highlight the risks of unchecked array access in low-level languages.

To promote safe indexing, developers can employ assertions for debugging, which halt execution if preconditions like valid indices are violated, or use checked accessors that explicitly validate before proceeding.[60] In Fortran, bounds checking is not mandated by the language standard but is commonly enabled through compiler options, such as -check bounds in gfortran or Intel Fortran, providing runtime validation optional for performance-critical code; this contrasts with C's reliance on undefined behavior, where no such implicit safeguards exist.[61] Best practices include always consulting length variables or properties—such as sizeof in C for static arrays, Java's array.length, or Python's len()—to manually validate indices before access, thereby mitigating risks even in languages without automatic enforcement.

Performance Evaluation

Time and Space Complexity

Arrays provide efficient random access to elements through direct indexing, allowing both read and write operations at a specific index to complete in constant time, denoted as in asymptotic notation, where the time remains independent of the array size .[62] This efficiency stems from the contiguous memory allocation of arrays, enabling the computation of an element's memory address as a simple offset from the base address, requiring only arithmetic operations regardless of array length. In contrast, inserting or deleting an element at an arbitrary index in the middle of the array requires shifting subsequent elements to maintain contiguity, resulting in time complexity in the worst case, as up to elements may need relocation.[62] For searching an unsorted array, a linear scan must examine each element sequentially, yielding time complexity, as the target could be in the last position or absent.[62] If the array is sorted, binary search can halve the search space iteratively, achieving time by comparing the target to the middle element and recursing on the appropriate half, with the number of steps bounded by the logarithm base 2 of .[63] Appending an element to the end of a dynamic array is amortized , meaning the average time per operation over a sequence is constant; while most appends take by placing the element in the next slot, resizing the array (copying all elements to a larger allocation) occurs infrequently, spreading the cost across many operations.[64] In terms of space complexity, an array storing elements requires space proportional to the number of elements, with each typically occupying a fixed size based on the element type.[62] Dynamic arrays introduce additional overhead, allocating capacity greater than the current size (often doubling it during resizes) to amortize future insertions, resulting in up to roughly twice the space for elements plus metadata like size and capacity pointers.[64] Asymptotic notation like Big-O describes upper bounds on growth rates; for arrays, access exemplifies constant time, ideal for frequent indexing, while insertions highlight linear scaling with size, making arrays unsuitable for frequent mid-array modifications. In practice, the idealized access benefits from spatial locality in cache hierarchies, as contiguous elements load into cache lines together, reducing memory access latency beyond theoretical bounds in real systems.[65]Comparisons to Linked Structures

Arrays offer constant-time random access to elements, achieving O(1) time complexity for indexing, whereas linked lists require traversing from the head or a known position, resulting in O(n) time in the worst case.[66] This makes arrays preferable for scenarios demanding frequent direct access, such as lookup operations in sequential data. In contrast, insertions and deletions in arrays often necessitate shifting elements, leading to O(n) time complexity, while linked lists support O(1) operations at known positions by adjusting pointers.[66] Arrays typically have a fixed size allocated upfront, which can lead to underutilization if the actual number of elements varies, though dynamic arrays (like resizable vectors) mitigate this through reallocation.[67] Linked lists, however, are inherently dynamic, allowing insertions and deletions without predefined capacity limits, making them suitable for applications with unpredictable sizes.[66] Space-wise, arrays benefit from contiguous memory allocation, minimizing fragmentation and overhead, as each element stores only the data itself.[68] Linked lists incur higher space overhead due to storing pointers (typically 4-8 bytes per node) alongside data, potentially doubling or more the memory usage for small elements.[69] Compared to tree structures like binary search trees, arrays are ideal for dense, sequential data where order is fixed and access patterns are uniform, but trees provide O(log n) time for insertions, deletions, and searches in dynamic, sorted datasets.[70] Hash tables, using arrays internally with hashing, achieve average O(1) time for key-value lookups, insertions, and deletions, outperforming arrays for sparse or associative access but requiring more space for collision resolution.[70] Arrays excel in scenarios like representing matrices or images, where two-dimensional indexing enables efficient pixel or element manipulation without pointer overhead.[71] Linked lists or deques built on them are better for queues and stacks involving frequent end modifications, such as task scheduling or undo operations.[72] Hybrid structures combine strengths of both: array-based deques use circular buffers for O(1) amortized insertions and deletions at both ends, avoiding the traversal costs of linked deques while maintaining dynamism.[73] Skip lists layer linked lists with express pointers mimicking array-like jumps, achieving O(log n) expected time for searches and updates in ordered data.[74] The following table summarizes key time complexities for common operations across these structures (assuming standard implementations; worst-case for trees and hashes unless balanced or low-collision):| Operation | Array | Linked List | Binary Search Tree | Hash Table |

|---|---|---|---|---|

| Random Access/Search | O(1) | O(n) | O(log n) | O(1) average |

| Insertion (end/known pos) | O(1) amortized (dynamic) / O(n) | O(1) | O(log n) | O(1) average |

| Deletion (end/known pos) | O(1) amortized (dynamic) / O(n) | O(1) | O(log n) | O(1) average |

Extensions and Dimensions

Array Dimensions

In data structures, the dimensionality of an array refers to the number of indices required to specify an element, known as the rank, which determines its geometric interpretation and utility. A one-dimensional (1D) array, with rank 1, functions as a vector, storing a linear sequence of elements. A two-dimensional (2D) array, with rank 2, operates as a matrix, organizing elements into rows and columns for tabular data. Arrays with three or more dimensions, having higher ranks, are generalized as tensors, enabling representation of complex multi-way relationships such as volumes or higher-order data.[75][76] The shape of an array encapsulates its structure as a tuple of integers, each denoting the extent along a dimension; for instance, a shape of (3, 4) describes a 2D array with 3 elements in the first dimension and 4 in the second. The rank is simply the length of this shape tuple. In programming languages, multidimensional arrays are declared by specifying multiple bracket pairs or dimensions. For example, in Java, a jagged 2D array is declared asint[][] array, allowing rows of varying lengths, while in C++, a fixed-size 2D array uses int array[3][4].[76][77][78][79]

The total number of elements in a multidimensional array scales as the product of its dimension sizes, directly impacting memory allocation; a 2D array of shape (m, n) thus requires m × n elements. Visually, a 1D array resembles a straight line of consecutive items, a 2D array forms a grid or table with rows and columns, and a 3D array appears as a cube or layered stack of 2D grids, facilitating intuitive mapping to spatial data.[80]

Practical limitations on array dimensions arise from hardware and implementation constraints, including maximum rank limited by language standards, such as 7 in Fortran 95 and earlier or 15 in Fortran 2008 and later, and total size bounded by addressable memory. In 64-bit systems, where addresses span up to 264 bytes, the theoretical maximum array size approaches 264 elements for byte-sized data, though actual limits are lower due to overhead, fragmentation, and per-process memory caps.[80][81][82]