Binary search

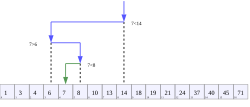

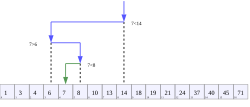

Visualization of the binary search algorithm where 7 is the target value

| Property | Value |
|---|---|
| Class | Search algorithm |
| Data structure | Array |
| Worst-case performance | $O(\log n)$ |
| Best-case performance | $O(1)$ |
| Average performance | $O(\log n)$ |
| Worst-case space complexity | $O(1)$ |
| Optimal | Yes |

In computer science, binary search, also known as half-interval search,[1] logarithmic search,[2] or binary chop,[3] is a search algorithm that finds the position of a target value within a sorted array.[4][5] Binary search compares the target value to the middle element of the array. If they are not equal, the half in which the target cannot lie is eliminated and the search continues on the remaining half, again taking the middle element to compare to the target value, and repeating this until the target value is found. If the search ends with the remaining half being empty, the target is not in the array.

Binary search runs in logarithmic time in the worst case, making $O(\log n)$ comparisons, where $n$ is the number of elements in the array.[a][6] Binary search is faster than linear search except for small arrays. However, the array must be sorted first to be able to apply binary search. There are specialized data structures designed for fast searching, such as hash tables, that can be searched more efficiently than binary search. However, binary search can be used to solve a wider range of problems, such as finding the next-smallest or next-largest element in the array relative to the target even if it is absent from the array.

There are numerous variations of binary search. In particular, fractional cascading speeds up binary searches for the same value in multiple arrays. Fractional cascading efficiently solves a number of search problems in computational geometry and in numerous other fields. Exponential search extends binary search to unbounded lists. The binary search tree and B-tree data structures are based on binary search.

Algorithm

Binary search works on sorted arrays. Binary search begins by comparing an element in the middle of the array with the target value. If the target value matches the element, its position in the array is returned. If the target value is less than the element, the search continues in the lower half of the array. If the target value is greater than the element, the search continues in the upper half of the array. By doing this, the algorithm eliminates the half in which the target value cannot lie in each iteration.[7]

Procedure

Given an array $A$ of $n$ elements with values or records $A_0, A_1, A_2, \ldots, A_{n-1}$ sorted such that $A_0 \le A_1 \le A_2 \le \cdots \le A_{n-1}$, and target value $T$, the following subroutine uses binary search to find the index of $T$ in $A$.[7]

1. Set $L$ to $0$ and $R$ to $n - 1$.

2. If $L > R$, the search terminates as unsuccessful.

3. Set $m$ (the position of the middle element) to $L$ plus the floor of $\frac{R - L}{2}$, which is the greatest integer less than or equal to $\frac{R - L}{2}$.

4. If $A_m < T$, set $L$ to $m + 1$ and go to step 2.

5. If $A_m > T$, set $R$ to $m - 1$ and go to step 2.

6. Now $A_m = T$, the search is done; return $m$.

This iterative procedure keeps track of the search boundaries with the two variables $L$ and $R$. The procedure may be expressed in pseudocode as follows, where the variable names and types remain the same as above, floor is the floor function, and unsuccessful refers to a specific value that conveys the failure of the search.[7]

```
function binary_search(A, n, T) is
    L := 0
    R := n - 1
    while L ≤ R do
        m := L + floor((R - L) / 2)
        if A[m] < T then
            L := m + 1
        else if A[m] > T then
            R := m - 1
        else
            return m
    return unsuccessful
```

Alternatively, the algorithm may take the ceiling of $\frac{R - L}{2}$. This may change the result if the target value appears more than once in the array.
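For readers who want to run the procedure, the pseudocode translates almost line for line into Python. This is a minimal sketch, assuming a Python sequence sorted in nondecreasing order; the name `binary_search` is kept from the pseudocode, and `None` stands in for `unsuccessful`.

```python
from typing import Optional, Sequence


def binary_search(a: Sequence[int], t: int) -> Optional[int]:
    """Return an index m with a[m] == t, or None if t is absent.

    Assumes a is sorted in nondecreasing order.
    """
    lo, hi = 0, len(a) - 1          # L and R in the pseudocode
    while lo <= hi:
        mid = lo + (hi - lo) // 2   # L plus the floor of (R - L) / 2
        if a[mid] < t:
            lo = mid + 1            # the target can only lie in the upper half
        elif a[mid] > t:
            hi = mid - 1            # the target can only lie in the lower half
        else:
            return mid
    return None                     # plays the role of "unsuccessful"
```

For example, `binary_search([1, 2, 3, 4, 4, 5, 6, 7], 4)` returns `3`. Python integers do not overflow, so the `lo + (hi - lo) // 2` form is kept only to mirror the pseudocode.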

Alternative procedure

In the above procedure, the algorithm checks whether the middle element ($A_m$) is equal to the target ($T$) in every iteration. Some implementations leave out this check during each iteration. The algorithm would perform this check only when one element is left (when $L = R$). This results in a faster comparison loop, as one comparison is eliminated per iteration, while it requires only one more iteration on average.[8]

Hermann Bottenbruch published the first implementation to leave out this check in 1962.[8][9]

1. Set $L$ to $0$ and $R$ to $n - 1$.

2. While $L \ne R$,
   1. Set $m$ (the position of the middle element) to $L$ plus the ceiling of $\frac{R - L}{2}$, which is the least integer greater than or equal to $\frac{R - L}{2}$.
   2. If $A_m > T$, set $R$ to $m - 1$.
   3. Else, $A_m \le T$; set $L$ to $m$.

3. Now $L = R$, the search is done. If $A_L = T$, return $L$. Otherwise, the search terminates as unsuccessful.

Where ceil is the ceiling function, the pseudocode for this version is:

```
function binary_search_alternative(A, n, T) is
    L := 0
    R := n - 1
    while L != R do
        m := L + ceil((R - L) / 2)
        if A[m] > T then
            R := m - 1
        else
            L := m
    if A[L] = T then
        return L
    return unsuccessful
```
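A Python sketch of the alternative procedure follows, under the same assumptions as the pseudocode (a sorted sequence; `None` for unsuccessful). It adds one guard the pseudocode does not have: for an empty array, the procedure would otherwise access an element that does not exist.

```python
from typing import Optional, Sequence


def binary_search_alternative(a: Sequence[int], t: int) -> Optional[int]:
    """Defer the equality test until one candidate remains.

    Assumes a is sorted; returns the rightmost index holding t, or None.
    """
    if not a:                          # guard for the empty array
        return None
    lo, hi = 0, len(a) - 1
    while lo != hi:
        mid = lo + (hi - lo + 1) // 2  # L plus the ceiling of (R - L) / 2
        if a[mid] > t:
            hi = mid - 1
        else:                          # a[mid] <= t, so mid stays a candidate
            lo = mid
    return lo if a[lo] == t else None  # the single equality test
```

On `[1, 2, 3, 4, 4, 5, 6, 7]` with target 4 it returns 4, the rightmost index of the duplicated value.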

Duplicate elements

The procedure may return any index whose element is equal to the target value, even if there are duplicate elements in the array. For example, if the array to be searched was $[1, 2, 3, 4, 4, 5, 6, 7]$ and the target was $4$, then it would be correct for the algorithm to return either the 4th (index 3) or 5th (index 4) element. The regular procedure would return the 4th element (index 3) in this case. It does not always return the first duplicate (consider $[1, 2, 4, 4, 4, 5, 6, 7]$, which still returns the 4th element). However, it is sometimes necessary to find the leftmost element or the rightmost element for a target value that is duplicated in the array. In the first example above, the 4th element is the leftmost element of the value 4, while the 5th element is the rightmost element of the value 4. The alternative procedure above will always return the index of the rightmost element if such an element exists.[9]

Procedure for finding the leftmost element

To find the leftmost element, the following procedure can be used:[10]

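1. Set $L$ to $0$ and $R$ to $n$.

2. While $L < R$,
   1. Set $m$ (the position of the middle element) to $L$ plus the floor of $\frac{R - L}{2}$, which is the greatest integer less than or equal to $\frac{R - L}{2}$.
   2. If $A_m < T$, set $L$ to $m + 1$.
   3. Else, $A_m \ge T$; set $R$ to $m$.

3. Return $L$.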

If $L < n$ and $A_L = T$, then $A_L$ is the leftmost element that equals $T$. Even if $T$ is not in the array, $L$ is the rank of $T$ in the array, or the number of elements in the array that are less than $T$.

Where floor is the floor function, the pseudocode for this version is:

```
function binary_search_leftmost(A, n, T):
    L := 0
    R := n
    while L < R:
        m := L + floor((R - L) / 2)
        if A[m] < T:
            L := m + 1
        else:
            R := m
    return L
```
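The leftmost procedure in Python, as a sketch under the same sorted-sequence assumption. It returns the rank rather than a success flag, so a caller checks `a[i] == t` when an exact match is needed. Python's standard `bisect.bisect_left` computes the same quantity and is the usual choice in practice.

```python
from typing import Sequence


def binary_search_leftmost(a: Sequence[int], t: int) -> int:
    """Return the number of elements of a that are less than t (the rank of t).

    If the result i satisfies i < len(a) and a[i] == t, i is the leftmost index of t.
    """
    lo, hi = 0, len(a)               # half-open search range [lo, hi)
    while lo < hi:
        mid = lo + (hi - lo) // 2
        if a[mid] < t:
            lo = mid + 1
        else:                        # a[mid] >= t
            hi = mid
    return lo
```

For instance, `binary_search_leftmost([1, 2, 4, 4, 4, 5, 6, 7], 4)` returns 2, the first of the three 4s.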

Procedure for finding the rightmost element

To find the rightmost element, the following procedure can be used:[10]

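1. Set $L$ to $0$ and $R$ to $n$.

2. While $L < R$,
   1. Set $m$ (the position of the middle element) to $L$ plus the floor of $\frac{R - L}{2}$, which is the greatest integer less than or equal to $\frac{R - L}{2}$.
   2. If $A_m > T$, set $R$ to $m$.
   3. Else, $A_m \le T$; set $L$ to $m + 1$.

3. Return $R - 1$.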

If $R > 0$ and $A_{R-1} = T$, then $A_{R-1}$ is the rightmost element that equals $T$. Even if $T$ is not in the array, $n - R$ is the number of elements in the array that are greater than $T$.

Where floor is the floor function, the pseudocode for this version is:

```
function binary_search_rightmost(A, n, T):
    L := 0
    R := n
    while L < R:
        m := L + floor((R - L) / 2)
        if A[m] > T:
            R := m
        else:
            L := m + 1
    return R - 1
```
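The rightmost procedure translates the same way. In this sketch the returned value is $R - 1$, so it is $-1$ when every element exceeds the target; Python's `bisect.bisect_right` returns $R$ itself.

```python
from typing import Sequence


def binary_search_rightmost(a: Sequence[int], t: int) -> int:
    """Return R - 1, where R is the number of elements of a that are <= t.

    If R > 0 and a[R - 1] == t, then R - 1 is the rightmost index of t.
    """
    lo, hi = 0, len(a)               # half-open search range [lo, hi)
    while lo < hi:
        mid = lo + (hi - lo) // 2
        if a[mid] > t:
            hi = mid
        else:                        # a[mid] <= t
            lo = mid + 1
    return hi - 1
```

On `[1, 2, 4, 4, 4, 5, 6, 7]` with target 4 it returns 4, the last of the three 4s.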

Approximate matches

The above procedure only performs exact matches, finding the position of a target value. However, it is trivial to extend binary search to perform approximate matches because binary search operates on sorted arrays. For example, binary search can be used to compute, for a given value, its rank (the number of smaller elements), predecessor (next-smallest element), successor (next-largest element), and nearest neighbor. Range queries seeking the number of elements between two values can be performed with two rank queries.[11]

- Rank queries can be performed with the procedure for finding the leftmost element. The number of elements less than the target value is returned by the procedure.[11]

- Predecessor queries can be performed with rank queries. If the rank of the target value is $r$, its predecessor is the element at index $r - 1$ (that is, $A_{r-1}$), provided that $r > 0$.[12]

- For successor queries, the procedure for finding the rightmost element can be used. If the result of running the procedure for the target value is $r$, then the successor of the target value is the element at index $r + 1$ (that is, $A_{r+1}$), provided that $r + 1 < n$.[12]

- The nearest neighbor of the target value is either its predecessor or successor, whichever is closer.

- Range queries are also straightforward.[12] Once the ranks of the two values are known, the number of elements greater than or equal to the first value and less than the second is the difference of the two ranks. This count can be adjusted up or down by one according to whether the endpoints of the range should be considered to be part of the range and whether the array contains entries matching those endpoints.[13]
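The queries above can be sketched with Python's `bisect` module, whose `bisect_left` returns the result $L$ of the leftmost-element procedure and whose `bisect_right` returns the value $R$ of the rightmost-element procedure. The helper names (`rank`, `predecessor`, `successor`, `nearest`, `count_in_range`) are illustrative, and breaking ties toward the smaller element in `nearest` is an arbitrary choice. `count_in_range` counts values `v` with `lo <= v < hi`; other endpoint conventions adjust it by one, as described above.

```python
from bisect import bisect_left, bisect_right
from typing import Optional, Sequence


def rank(a: Sequence[int], x: int) -> int:
    """Number of elements less than x (the leftmost-element procedure)."""
    return bisect_left(a, x)


def predecessor(a: Sequence[int], x: int) -> Optional[int]:
    """Largest element less than x, or None if there is none."""
    r = bisect_left(a, x)
    return a[r - 1] if r > 0 else None


def successor(a: Sequence[int], x: int) -> Optional[int]:
    """Smallest element greater than x, or None if there is none."""
    r = bisect_right(a, x) - 1          # result of the rightmost-element procedure
    return a[r + 1] if r + 1 < len(a) else None


def nearest(a: Sequence[int], x: int) -> Optional[int]:
    """x itself if present, else the closer of predecessor and successor (ties: smaller)."""
    i = bisect_left(a, x)
    if i < len(a) and a[i] == x:
        return x
    candidates = [c for c in (predecessor(a, x), successor(a, x)) if c is not None]
    return min(candidates, key=lambda c: (abs(c - x), c), default=None)


def count_in_range(a: Sequence[int], lo: int, hi: int) -> int:
    """Number of elements v with lo <= v < hi: the difference of two ranks."""
    return rank(a, hi) - rank(a, lo)
```

With `a = [2, 3, 5, 7, 11, 13]`, `predecessor(a, 6)` is 5, `successor(a, 6)` is 7, and `count_in_range(a, 3, 11)` is 3 (the values 3, 5 and 7).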

Performance

In terms of the number of comparisons, the performance of binary search can be analyzed by viewing the run of the procedure on a binary tree. The root node of the tree is the middle element of the array. The middle element of the lower half is the left child node of the root, and the middle element of the upper half is the right child node of the root. The rest of the tree is built in a similar fashion. Starting from the root node, the left or right subtrees are traversed depending on whether the target value is less or more than the node under consideration.[6][14]

In the worst case, binary search makes $\lfloor \log_2(n) \rfloor + 1$ iterations of the comparison loop, where the $\lfloor \cdot \rfloor$ notation denotes the floor function that yields the greatest integer less than or equal to the argument, and $\log_2$ is the binary logarithm. This is because the worst case is reached when the search reaches the deepest level of the tree, and there are always $\lfloor \log_2(n) \rfloor + 1$ levels in the tree for any binary search.

The worst case may also be reached when the target element is not in the array. If $n$ is one less than a power of two, then this is always the case. Otherwise, the search may perform $\lfloor \log_2(n) \rfloor + 1$ iterations if the search reaches the deepest level of the tree. However, it may make $\lfloor \log_2(n) \rfloor$ iterations, which is one less than the worst case, if the search ends at the second-deepest level of the tree.[15]

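On average, assuming that each element is equally likely to be searched, binary search makes $\lfloor \log_2(n) \rfloor + 1 - \frac{2^{\lfloor \log_2(n) \rfloor + 1} - \lfloor \log_2(n) \rfloor - 2}{n}$ iterations when the target element is in the array. This is approximately equal to $\log_2(n) - 1$ iterations. When the target element is not in the array, binary search makes $\lfloor \log_2(n) \rfloor + 2 - \frac{2^{\lfloor \log_2(n) \rfloor + 1}}{n + 1}$ iterations on average, assuming that the range between and outside elements is equally likely to be searched.[14]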

In the best case, where the target value is the middle element of the array, its position is returned after one iteration.[16]

In terms of iterations, no search algorithm that works only by comparing elements can exhibit better average and worst-case performance than binary search. The comparison tree representing binary search has the fewest levels possible, as every level above the lowest level of the tree is filled completely.[b] Otherwise, the search algorithm can eliminate fewer elements in an iteration, increasing the number of iterations required in the average and worst case. This is the case for other search algorithms based on comparisons: while they may work faster on some target values, their average performance over all elements is worse than that of binary search. By dividing the array in half, binary search ensures that the sizes of both subarrays are as similar as possible.[14]

Space complexity

Binary search requires three pointers to elements, which may be array indices or pointers to memory locations, regardless of the size of the array. Therefore, the space complexity of binary search is $O(1)$ in the word RAM model of computation.

Derivation of average case

The average number of iterations performed by binary search depends on the probability of each element being searched. The average case is different for successful searches and unsuccessful searches. It will be assumed that each element is equally likely to be searched for successful searches. For unsuccessful searches, it will be assumed that the intervals between and outside elements are equally likely to be searched. The average case for successful searches is the number of iterations required to search every element exactly once, divided by $n$, the number of elements. The average case for unsuccessful searches is the number of iterations required to search an element within every interval exactly once, divided by the $n + 1$ intervals.[14]

Successful searches

In the binary tree representation, a successful search can be represented by a path from the root to the target node, called an internal path. The length of a path is the number of edges (connections between nodes) that the path passes through. The number of iterations performed by a search, given that the corresponding path has length $l$, is $l + 1$, counting the initial iteration. The internal path length is the sum of the lengths of all unique internal paths. Since there is only one path from the root to any single node, each internal path represents a search for a specific element. If there are $n$ elements, which is a positive integer, and the internal path length is $I(n)$, then the average number of iterations for a successful search is $T(n) = 1 + \frac{I(n)}{n}$, with the one iteration added to count the initial iteration.[14]

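Since binary search is the optimal algorithm for searching with comparisons, this problem is reduced to calculating the minimum internal path length of all binary trees with $n$ nodes, which is equal to:[17]

$$I(n) = \sum_{k=1}^{n} \left\lfloor \log_2(k) \right\rfloor$$

For example, in a 7-element array, the root requires one iteration, the two elements below the root require two iterations, and the four elements below require three iterations. In this case, the internal path length is:[17]

$$\sum_{k=1}^{7} \left\lfloor \log_2(k) \right\rfloor = 0 + 2(1) + 4(2) = 2 + 8 = 10$$

The average number of iterations would be $1 + \frac{10}{7} = 2\frac{3}{7}$ based on the equation for the average case. The sum for $I(n)$ can be simplified to:[14]

$$I(n) = \sum_{k=1}^{n} \left\lfloor \log_2(k) \right\rfloor = (n + 1)\left\lfloor \log_2(n + 1) \right\rfloor - 2^{\lfloor \log_2(n + 1) \rfloor + 1} + 2$$

Substituting the equation for $I(n)$ into the equation for $T(n)$:[14]

$$T(n) = 1 + \frac{(n + 1)\lfloor \log_2(n + 1) \rfloor - 2^{\lfloor \log_2(n + 1) \rfloor + 1} + 2}{n} = \lfloor \log_2(n) \rfloor + 1 - \frac{2^{\lfloor \log_2(n) \rfloor + 1} - \lfloor \log_2(n) \rfloor - 2}{n}$$

For integer $n$, this is equivalent to the equation for the average case on a successful search specified above.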
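The formulas can be checked numerically. The following Python sketch, offered as a sanity check rather than a proof, runs the standard procedure on the array $[0, 1, \ldots, n-1]$ for every element, averages the iteration counts, and compares the result with $1 + I(n)/n$ and with the closed form, using exact fractions. `k.bit_length() - 1` is used for $\lfloor \log_2 k \rfloor$ on positive integers; the function names are hypothetical.

```python
from fractions import Fraction


def count_iterations(a, t):
    """Loop iterations of the standard procedure (floor midpoint) searching a for t."""
    lo, hi, count = 0, len(a) - 1, 0
    while lo <= hi:
        count += 1
        mid = lo + (hi - lo) // 2
        if a[mid] < t:
            lo = mid + 1
        elif a[mid] > t:
            hi = mid - 1
        else:
            break
    return count


def internal_path_length(n):
    """I(n) as the sum of floor(log2 k) for k = 1..n."""
    return sum(k.bit_length() - 1 for k in range(1, n + 1))


def internal_path_length_closed(n):
    """(n + 1) * floor(log2(n + 1)) - 2^(floor(log2(n + 1)) + 1) + 2."""
    g = (n + 1).bit_length() - 1
    return (n + 1) * g - 2 ** (g + 1) + 2


def average_successful_formula(n):
    """floor(log2 n) + 1 - (2^(floor(log2 n) + 1) - floor(log2 n) - 2) / n, exactly."""
    f = n.bit_length() - 1
    return f + 1 - Fraction(2 ** (f + 1) - f - 2, n)


def average_successful_simulated(n):
    """Search once for every element of [0, 1, ..., n - 1] and average the iteration counts."""
    a = list(range(n))
    return Fraction(sum(count_iterations(a, t) for t in a), n)


for n in range(1, 200):
    simulated = average_successful_simulated(n)
    assert internal_path_length(n) == internal_path_length_closed(n)
    assert simulated == 1 + Fraction(internal_path_length(n), n)
    assert simulated == average_successful_formula(n)
```

For the 7-element example, `average_successful_formula(7)` is `Fraction(17, 7)`, that is, $2\frac{3}{7}$.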

Unsuccessful searches

Unsuccessful searches can be represented by augmenting the tree with external nodes, which forms an extended binary tree. If an internal node, or a node present in the tree, has fewer than two child nodes, then additional child nodes, called external nodes, are added so that each internal node has two children. By doing so, an unsuccessful search can be represented as a path to an external node, whose parent is the single element that remains during the last iteration. An external path is a path from the root to an external node. The external path length is the sum of the lengths of all unique external paths. If there are $n$ elements, which is a positive integer, and the external path length is $E(n)$, then the average number of iterations for an unsuccessful search is $T'(n) = \frac{E(n)}{n + 1}$. No extra iteration needs to be added here: an external path has one more edge than the path to the last element compared, and that extra edge already counts the initial iteration. The external path length is divided by $n + 1$ instead of $n$ because there are $n + 1$ external paths, representing the intervals between and outside the elements of the array.[14]

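This problem can similarly be reduced to determining the minimum external path length of all binary trees with $n$ nodes. For all binary trees, the external path length is equal to the internal path length plus $2n$.[17] Substituting the equation for $I(n)$:[14]

$$E(n) = I(n) + 2n = \left[(n + 1)\lfloor \log_2(n + 1) \rfloor - 2^{\lfloor \log_2(n + 1) \rfloor + 1} + 2\right] + 2n = (n + 1)\left(\lfloor \log_2(n) \rfloor + 2\right) - 2^{\lfloor \log_2(n) \rfloor + 1}$$

Substituting the equation for $E(n)$ into the equation for $T'(n)$, the average case for unsuccessful searches can be determined:[14]

$$T'(n) = \frac{(n + 1)\left(\lfloor \log_2(n) \rfloor + 2\right) - 2^{\lfloor \log_2(n) \rfloor + 1}}{n + 1} = \lfloor \log_2(n) \rfloor + 2 - \frac{2^{\lfloor \log_2(n) \rfloor + 1}}{n + 1}$$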
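A similar numerical check applies to unsuccessful searches. This sketch searches for one absent value in each of the $n + 1$ gaps ($-0.5, 0.5, \ldots, n - 0.5$ in the array $[0, 1, \ldots, n-1]$), sums the iteration counts to obtain $E(n)$, and compares it with $I(n) + 2n$ and with the closed form for $T'(n)$. As before, the names are illustrative, and this is a check over a finite range of $n$, not a proof.

```python
from fractions import Fraction


def count_iterations(a, t):
    """Loop iterations of the standard procedure (floor midpoint) searching a for t."""
    lo, hi, count = 0, len(a) - 1, 0
    while lo <= hi:
        count += 1
        mid = lo + (hi - lo) // 2
        if a[mid] < t:
            lo = mid + 1
        elif a[mid] > t:
            hi = mid - 1
        else:
            break
    return count


def average_unsuccessful_formula(n):
    """floor(log2 n) + 2 - 2^(floor(log2 n) + 1) / (n + 1), exactly."""
    f = n.bit_length() - 1
    return f + 2 - Fraction(2 ** (f + 1), n + 1)


def external_path_length_simulated(n):
    """Sum of iteration counts over one absent target per gap: -0.5, 0.5, ..., n - 0.5."""
    a = list(range(n))
    return sum(count_iterations(a, k - 0.5) for k in range(n + 1))


for n in range(1, 200):
    external = external_path_length_simulated(n)
    internal = sum(k.bit_length() - 1 for k in range(1, n + 1))   # I(n)
    assert external == internal + 2 * n                           # E(n) = I(n) + 2n
    assert Fraction(external, n + 1) == average_unsuccessful_formula(n)
```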

Performance of alternative procedure

Each iteration of the binary search procedure defined above makes one or two comparisons, checking if the middle element is equal to the target in each iteration. Assuming that each element is equally likely to be searched, each iteration makes 1.5 comparisons on average. A variation of the algorithm checks whether the middle element is equal to the target at the end of the search. On average, this eliminates half a comparison from each iteration. This slightly cuts the time taken per iteration on most computers. However, it guarantees that the search takes the maximum number of iterations, on average adding one iteration to the search. Because the comparison loop is performed only $\lfloor \log_2(n) \rfloor + 1$ times in the worst case, the slight increase in efficiency per iteration does not compensate for the extra iteration for all but very large $n$.[c][18][19]

Additional considerations

Cost of comparison

In analyzing the performance of binary search, another consideration is the time required to compare two elements. For integers and strings, the time required increases linearly as the encoding length (usually the number of bits) of the elements increases. For example, comparing a pair of 64-bit unsigned integers would require comparing up to twice as many bits as comparing a pair of 32-bit unsigned integers. The worst case is achieved when the integers are equal. This can be significant when the encoding lengths of the elements are large, such as with large integer types or long strings, which makes comparing elements expensive. Furthermore, comparing floating-point values (the most common digital representation of real numbers) is often more expensive than comparing integers or short strings.

Fast floating-point comparison is possible by comparing the bit patterns of the values as integers. However, this kind of comparison forms a total order, in which every distinct floating-point value compares differently from every other value and the same as itself. This differs from the typical comparison, where -0.0 should compare the same as 0.0 and NaN should not compare the same as any other value, including itself.[20][21]
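Comparing raw bit patterns as signed integers would order negative values backwards, so a common approach, sketched here as an assumption rather than a prescribed method, first maps each IEEE 754 double to an unsigned 64-bit key: non-negative values get their sign bit set, and negative values have all of their bits inverted. The sketch assumes Python floats are IEEE 754 binary64, as they are on common platforms; the function name is hypothetical.

```python
import struct


def float_total_order_key(x: float) -> int:
    """Map a binary64 float to an unsigned 64-bit integer whose ordinary integer
    order sorts values by sign and then by magnitude of the bit pattern."""
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    if bits >> 63:                         # sign bit set: invert every bit
        return bits ^ 0xFFFF_FFFF_FFFF_FFFF
    return bits | (1 << 63)                # non-negative: set the sign bit
```

Under this key, `-0.0` sorts just below `0.0`, and a NaN compares equal to another NaN only if their bit patterns match, which is the total-order behavior described above rather than the usual floating-point comparison.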

Branch prediction

According to Steel Bank Common Lisp contributor Paul Khuong, binary search leads to very few branch mispredictions despite its data-dependent nature. This is in part because most of it can be expressed as conditional moves instead of branches. The same applies to most logarithmic divide-and-conquer search algorithms.[22]
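The conditional-move style is easiest to see in a formulation that keeps a base index and a shrinking length, so that each step only selects between two candidate bases. The sketch below is written in Python for consistency with the other examples; CPython still evaluates the conditional expression with a branch, so it only illustrates the loop structure that a C compiler can turn into a conditional move. The function name is hypothetical, and it returns the same value as the leftmost-element procedure.

```python
from typing import Sequence


def lower_bound_branch_free_style(a: Sequence[int], t: int) -> int:
    """Return the number of elements of a that are less than t.

    Invariant: the answer lies in [base, base + n].
    """
    n = len(a)
    if n == 0:
        return 0
    base = 0
    while n > 1:
        half = n // 2
        # A select rather than a control-flow branch: in C this line is often
        # compiled to a conditional move.
        base = base + half if a[base + half] < t else base
        n -= half
    return base + (a[base] < t)   # the bool adds 0 or 1
```

Note that the loop runs a number of times that depends only on `len(a)`, not on the data; only the selected value of `base` depends on the comparisons.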

Cache usage

On most computer architectures, the processor has a hardware cache separate from RAM. Since they are located within the processor itself, caches are much faster to access but usually store much less data than RAM. Therefore, most processors store memory locations that have been accessed recently, along with memory locations close to them. For example, when an array element is accessed, the element itself may be stored along with the elements that are stored close to it in RAM, making it faster to sequentially access array elements that are close in index to each other (locality of reference). On a sorted array, binary search can jump to distant memory locations if the array is large, unlike algorithms (such as linear search and linear probing in hash tables) which access elements in sequence. This adds slightly to the running time of binary search for large arrays on most systems.[23]

Paul Khuong has noted that binary search on large (≥ 512 KiB) arrays of exactly a power-of-two size tends to cause an additional problem with how CPU caches are implemented. Specifically, the translation lookaside buffer (TLB) is often implemented as a content-addressable memory (CAM), with the "key" usually being the lower bits of a requested address. When searching on an array of exactly a power-of-two size, memory addresses with the same lower bits tend to be accessed, causing collisions ("aliasing") on the "key" used to look up entries in the CAM. The typical TLB is 4-way associative, meaning it can handle at most four addresses hitting the same "key", after which TLB thrashing happens. (Although the other levels of CPU caches also use a similar setup, they manage smaller areas with a higher way count, usually 8 or 16, so they are less affected.) This can be prevented by offsetting the split point of the binary search so it divides at 31⁄64 instead of exactly the middle.[24]
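Offsetting the split point changes only the midpoint computation. This sketch of the leftmost-element search probes at $\lfloor 31(R - L)/64 \rfloor$ past $L$ instead of halfway; since that offset is always less than $R - L$ when $L < R$, the range still shrinks on every iteration and the result is unchanged. It illustrates the index arithmetic only: Python lists do not expose their memory layout, so no TLB effect can be observed from it. The function name is hypothetical.

```python
from typing import Sequence


def lower_bound_offset_split(a: Sequence[int], t: int) -> int:
    """Leftmost-element search that probes at 31/64 of the range instead of 1/2."""
    lo, hi = 0, len(a)
    while lo < hi:
        mid = lo + ((hi - lo) * 31) // 64   # lo <= mid < hi whenever lo < hi
        if a[mid] < t:
            lo = mid + 1
        else:
            hi = mid
    return lo
```

The off-center probe costs a slightly larger expected number of iterations than an exact halving; the trade-off is only worthwhile when the aliasing described above dominates.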

Binary search versus other schemes

Sorted arrays with binary search are a very inefficient solution when insertion and deletion operations are interleaved with retrieval, taking $O(n)$ time for each such operation. In addition, sorted arrays can complicate memory use, especially when elements are often inserted into the array.[25] There are other data structures that support much more efficient insertion and deletion. Binary search can be used to perform exact matching and set membership (determining whether a target value is in a collection of values). There are data structures that support faster exact matching and set membership. However, unlike many other searching schemes, binary search can be used for efficient approximate matching, usually performing such matches in $O(\log n)$ time regardless of the type or structure of the values themselves.[26] In addition, there are some operations, like finding the smallest and largest element, that can be performed efficiently on a sorted array.[11]

Linear search

Linear search is a simple search algorithm that checks every record until it finds the target value. Linear search can be done on a linked list, which allows for faster insertion and deletion than an array. Binary search is faster than linear search for sorted arrays unless the array is short, although the array needs to be sorted beforehand.[d][28] All sorting algorithms based on comparing elements, such as quicksort and merge sort, require $\Omega(n \log n)$ comparisons in the worst case.[29] Unlike linear search, binary search can be used for efficient approximate matching. There are operations such as finding the smallest and largest element that can be done efficiently on a sorted array but not on an unsorted array.[30]

Trees

A binary search tree is a binary tree data structure that works based on the principle of binary search. The records of the tree are arranged in sorted order, and each record in the tree can be searched using an algorithm similar to binary search, taking on average logarithmic time. Insertion and deletion also require on average logarithmic time in binary search trees. This can be faster than the linear time insertion and deletion of sorted arrays, and binary trees retain the ability to perform all the operations possible on a sorted array, including range and approximate queries.[26][31]

However, binary search is usually more efficient for searching, as binary search trees will most likely be imperfectly balanced, resulting in slightly worse performance than binary search. This even applies to balanced binary search trees, binary search trees that balance their own nodes, because they rarely produce the tree with the fewest possible levels. Except for balanced binary search trees, the tree may be severely imbalanced with few internal nodes with two children, resulting in the average and worst-case search time approaching $n$ comparisons.[e] Binary search trees take more space than sorted arrays.[33]

Binary search trees lend themselves to fast searching in external memory stored in hard disks, as binary search trees can be efficiently structured in filesystems. The B-tree generalizes this method of tree organization. B-trees are frequently used to organize long-term storage such as databases and filesystems.[34][35]

Hashing

For implementing associative arrays, hash tables, a data structure that maps keys to records using a hash function, are generally faster than binary search on a sorted array of records.[36] Most hash table implementations require only amortized constant time on average.[f][38] However, hashing is not useful for approximate matches, such as computing the next-smallest, next-largest, and nearest key, as the only information given on a failed search is that the target is not present in any record.[39] Binary search is ideal for such matches, performing them in logarithmic time. Some operations, like finding the smallest and largest element, can be done efficiently on sorted arrays but not on hash tables.[26]

Set membership algorithms

A related problem to search is set membership. Any algorithm that does lookup, like binary search, can also be used for set membership. There are other algorithms that are more specifically suited for set membership. A bit array is the simplest, useful when the range of keys is limited. It compactly stores a collection of bits, with each bit representing a single key within the range of keys. Bit arrays are very fast, requiring only $O(1)$ time.[40] The Judy1 type of Judy array handles 64-bit keys efficiently.[41]

For approximate results, Bloom filters, a probabilistic data structure based on hashing, store a set of keys by encoding the keys using a bit array and multiple hash functions. Bloom filters are much more space-efficient than bit arrays in most cases and not much slower: with $k$ hash functions, membership queries require only $O(k)$ time. However, Bloom filters suffer from false positives.[g][h][43]
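To make the description concrete, here is a minimal Bloom filter sketch. The class name, the use of SHA-256 with a per-function prefix to simulate $k$ independent hash functions, and the parameter values are all illustrative assumptions; practical implementations usually use faster non-cryptographic hashes and size the bit array from the expected number of keys and the acceptable false-positive rate.

```python
import hashlib


class BloomFilter:
    """Hypothetical minimal Bloom filter: a bit array plus k derived hash functions."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)   # all bits start at 0

    def _positions(self, key: str):
        # Simulate k hash functions by prefixing the key with the function index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key: str) -> None:
        for p in self._positions(key):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, key: str) -> bool:
        # True if all k bits are set: the key was added, or this is a false positive.
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(key))
```

`might_contain` never returns `False` for a key that was added, but it may return `True` for a key that was not, which is the false-positive behavior noted above.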

Other data structures

There exist data structures that may improve on binary search in some cases for both searching and other operations available for sorted arrays. For example, searches, approximate matches, and the operations available to sorted arrays can be performed more efficiently than binary search on specialized data structures such as van Emde Boas trees, fusion trees, tries, and bit arrays. These specialized data structures are usually only faster because they take advantage of the properties of keys with a certain attribute (usually keys that are small integers), and thus will be time- or space-consuming for keys that lack that attribute.[26] As long as the keys can be ordered, these operations can always be done efficiently on a sorted array, regardless of the keys. Some structures, such as Judy arrays, use a combination of approaches to mitigate this while retaining efficiency and the ability to perform approximate matching.[41]

Variations

Uniform binary search

Uniform binary search stores, instead of the lower and upper bounds, the difference in the index of the middle element from the current iteration to the next iteration. A lookup table containing the differences is computed beforehand. For example, if the array to be searched is [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11], the middle element ($m$) would be 6. In this case, the middle element of the left subarray ([1, 2, 3, 4, 5]) is 3 and the middle element of the right subarray ([7, 8, 9, 10, 11]) is 9. Uniform binary search would store the value of 3, as the positions of both of these elements differ from the position of 6 by this same amount.[44] To reduce the search space, the algorithm either adds or subtracts this change from the index of the middle element. Uniform binary search may be faster on systems where it is inefficient to calculate the midpoint, such as on decimal computers.[45]
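A sketch of uniform binary search in Python follows. It assumes the precomputed table holds $\delta_j = \lfloor (n + 2^{j-1}) / 2^j \rfloor$ for $j = 1, 2, \ldots$ (one formula that yields the differences described above, taken here as an assumption), uses 1-based positions so that $\delta_1$ is the position of the middle element, and treats position 0 as a sentinel smaller than every key, since for even $n$ the walk can step onto it. Both function names are hypothetical.

```python
from typing import List, Optional, Sequence


def delta_table(n: int) -> List[int]:
    """delta[j - 1] = floor((n + 2**(j - 1)) / 2**j) for j = 1, 2, ...

    The list ends with the first zero, which signals "no more elements".
    """
    table = []
    j = 1
    while True:
        d = (n + (1 << (j - 1))) >> j
        table.append(d)
        if d == 0:
            return table
        j += 1


def uniform_binary_search(a: Sequence[int], delta: List[int], t: int) -> Optional[int]:
    """Search sorted a using only precomputed index changes (no midpoint arithmetic)."""
    if not a:
        return None
    pos = delta[0]                     # 1-based position of the first probe
    for step in delta[1:]:
        # 1-based position 0 is a sentinel treated as smaller than every key.
        if pos > 0 and a[pos - 1] == t:
            return pos - 1             # convert back to a 0-based index
        if step == 0:
            return None
        go_left = pos > 0 and t < a[pos - 1]
        pos = pos - step if go_left else pos + step
    return None
```

For the 11-element example, `delta_table(11)` is `[6, 3, 1, 1, 0]`: the first probe is position 6 (the value 6), and the next probe moves 3 positions to the value 3 or 9, as in the text. The loop never computes a midpoint; it only adds or subtracts table entries.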

Exponential search

Exponential search extends binary search to unbounded lists. It starts by finding the first element whose index is a power of two and whose value is greater than the target value. Afterwards, it sets that index as the upper bound and switches to binary search. A search takes $\lfloor \log_2(x) + 1 \rfloor$ iterations before binary search is started and at most $\lfloor \log_2(x) \rfloor$ iterations of the binary search, where $x$ is the position of the target value. Exponential search works on bounded lists, but becomes an improvement over binary search only if the target value lies near the beginning of the array.[46]
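A Python sketch of exponential search on a bounded, sorted sequence follows. One assumption deviates slightly from the wording above: the doubling phase stops at the first power-of-two index whose element is not less than the target (rather than strictly greater), which lets the second phase return the leftmost occurrence when values repeat. The function name is hypothetical.

```python
from typing import Optional, Sequence


def exponential_search(a: Sequence[int], t: int) -> Optional[int]:
    """Return the leftmost index of t in sorted a, or None."""
    n = len(a)
    # Phase 1: double the bound (1, 2, 4, ...) until a[bound] >= t or the end is passed.
    bound = 1
    while bound < n and a[bound] < t:
        bound *= 2
    # Everything before bound // 2 is < t, and a[bound] (if it exists) is >= t.
    lo, hi = bound // 2, min(bound, n)
    # Phase 2: leftmost-element binary search on the half-open range [lo, hi).
    while lo < hi:
        mid = lo + (hi - lo) // 2
        if a[mid] < t:
            lo = mid + 1
        else:
            hi = mid
    return lo if lo < n and a[lo] == t else None
```

Because the bracket found in the first phase has width proportional to the target's position, the total work grows with the logarithm of that position rather than of the array length.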

Interpolation search

Instead of calculating the midpoint, interpolation search estimates the position of the target value, taking into account the lowest and highest elements in the array as well as the length of the array. It works on the basis that the midpoint is not the best guess in many cases. For example, if the target value is close to the highest element in the array, it is likely to be located near the end of the array.[47]

A common interpolation function is linear interpolation. If $A$ is the array, $L$ and $R$ are the lower and upper bounds respectively, and $T$ is the target, then the target is estimated to be about $\frac{T - A_L}{A_R - A_L}$ of the way between $L$ and $R$. When linear interpolation is used, and the distribution of the array elements is uniform or near uniform, interpolation search makes $O(\log \log n)$ comparisons.[47][48][49]

In practice, interpolation search is slower than binary search for small arrays, as interpolation search requires extra computation. Its time complexity grows more slowly than binary search, but this only compensates for the extra computation for large arrays.[47]
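A minimal interpolation search sketch using the linear-interpolation estimate above, assuming a sorted list of integers. The loop condition keeps the target between $A_L$ and $A_R$, which keeps the estimated position inside the current range, and a range whose end values are equal is handled separately to avoid division by zero. The function name is hypothetical.

```python
from typing import Optional, Sequence


def interpolation_search(a: Sequence[int], t: int) -> Optional[int]:
    """Return an index of t in sorted integer sequence a, or None."""
    lo, hi = 0, len(a) - 1
    while lo <= hi and a[lo] <= t <= a[hi]:
        if a[hi] == a[lo]:
            return lo                   # flat range: a[lo] <= t <= a[hi] forces t == a[lo]
        # Estimate how far t lies between a[lo] and a[hi], then scale to [lo, hi].
        pos = lo + (t - a[lo]) * (hi - lo) // (a[hi] - a[lo])
        if a[pos] < t:
            lo = pos + 1
        elif a[pos] > t:
            hi = pos - 1
        else:
            return pos
    return None
```

On uniformly spaced values, such as `list(range(0, 100, 3))`, the first estimate usually lands on or next to the target, which is where the advantage over halving comes from.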

Fractional cascading

Fractional cascading is a technique that speeds up binary searches for the same element in multiple sorted arrays. Searching each array separately requires $O(k \log n)$ time, where $k$ is the number of arrays. Fractional cascading reduces this to $O(k + \log n)$ by storing specific information in each array about each element and its position in the other arrays.[50][51]

Fractional cascading was originally developed to efficiently solve various computational geometry problems. Fractional cascading has been applied elsewhere, such as in data mining and Internet Protocol routing.[50]

Generalization to graphs

Binary search has been generalized to work on certain types of graphs, where the target value is stored in a vertex instead of an array element. Binary search trees are one such generalization: when a vertex (node) in the tree is queried, the algorithm either learns that the vertex is the target, or otherwise which subtree the target would be located in. However, this can be further generalized as follows: given an undirected, positively weighted graph and a target vertex, the algorithm learns upon querying a vertex that it is equal to the target, or it is given an incident edge that is on the shortest path from the queried vertex to the target. The standard binary search algorithm is simply the case where the graph is a path. Similarly, binary search trees are the case where the edges to the left or right subtrees are given when the queried vertex is unequal to the target. For all undirected, positively weighted graphs, there is an algorithm that finds the target vertex in $O(\log n)$ queries in the worst case.[52]

Noisy binary search

Noisy binary search algorithms solve the case where the algorithm cannot reliably compare elements of the array. For each pair of elements, there is a certain probability $p$ that the algorithm makes the wrong comparison. Noisy binary search can find the correct position of the target with a given probability that controls the reliability of the yielded position. Every noisy binary search procedure must make at least $(1 - \tau)\frac{\log_2(n)}{H(p)} - \frac{10}{H(p)}$ comparisons on average, where $H(p) = -p \log_2(p) - (1 - p) \log_2(1 - p)$ is the binary entropy function and $\tau$ is the probability that the procedure yields the wrong position.[53][54][55] The noisy binary search problem can be considered as a case of the Rényi-Ulam game,[56] a variant of Twenty Questions where the answers may be wrong.[57]

Quantum binary search

Classical computers are bounded to the worst case of exactly $\lfloor \log_2(n) \rfloor + 1$ iterations when performing binary search. Quantum algorithms for binary search are still bounded to a proportion of $\log_2(n)$ queries (representing iterations of the classical procedure), but the constant factor is less than one, providing for a lower time complexity on quantum computers. Any exact quantum binary search procedure (that is, a procedure that always yields the correct result) requires at least $\frac{1}{\pi}(\ln n - 1) \approx 0.22 \log_2(n)$ queries in the worst case, where $\ln$ is the natural logarithm.[58] There is an exact quantum binary search procedure that runs in $4 \log_{605}(n) \approx 0.433 \log_2(n)$ queries in the worst case.[59] In comparison, Grover's algorithm is the optimal quantum algorithm for searching an unordered list of elements, and it requires $O(\sqrt{n})$ queries.[60]

History

The idea of sorting a list of items to allow for faster searching dates back to antiquity. The earliest known example was the Inakibit-Anu tablet from Babylon dating back to c. 200 BCE. The tablet contained about 500 sexagesimal numbers and their reciprocals sorted in lexicographical order, which made searching for a specific entry easier. In addition, several lists of names that were sorted by their first letter were discovered on the Aegean Islands. Catholicon, a Latin dictionary finished in 1286 CE, was the first work to describe rules for sorting words into alphabetical order, as opposed to just the first few letters.[9]

In 1946, John Mauchly made the first mention of binary search as part of the Moore School Lectures, a seminal and foundational college course in computing.[9] In 1957, William Wesley Peterson published the first method for interpolation search.[9][61] Every published binary search algorithm worked only for arrays whose length is one less than a power of two[i] until 1960, when Derrick Henry Lehmer published a binary search algorithm that worked on all arrays.[63] In 1962, Hermann Bottenbruch presented an ALGOL 60 implementation of binary search that placed the comparison for equality at the end, increasing the average number of iterations by one, but reducing to one the number of comparisons per iteration.[8] The uniform binary search was developed by A. K. Chandra of Stanford University in 1971.[9] In 1986, Bernard Chazelle and Leonidas J. Guibas introduced fractional cascading as a method to solve numerous search problems in computational geometry.[50][64][65]

Implementation issues

"Although the basic idea of binary search is comparatively straightforward, the details can be surprisingly tricky." (Donald Knuth)

When Jon Bentley assigned binary search as a problem in a course for professional programmers, he found that ninety percent failed to provide a correct solution after several hours of working on it, mainly because the incorrect implementations failed to run or returned a wrong answer in rare edge cases.[66] A study published in 1988 showed that accurate code for it was found in only five out of twenty textbooks.[67] Furthermore, Bentley's own implementation of binary search, published in his 1986 book Programming Pearls, contained an overflow error that remained undetected for over twenty years. The Java programming language library implementation of binary search had the same overflow bug for more than nine years.[68]

In a practical implementation, the variables used to represent the indices will often be of fixed size (integers), and this can result in an arithmetic overflow for very large arrays. If the midpoint of the span is calculated as $\frac{L+R}{2}$, then the value of $L+R$ may exceed the range of integers of the data type used to store the midpoint, even if $L$ and $R$ are within the range. If $L$ and $R$ are nonnegative, this can be avoided by calculating the midpoint as $L + \frac{R-L}{2}$.[69]
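Python integers never overflow, so the sketch below emulates 32-bit two's-complement arithmetic to show the problem. It uses two illustrative helpers, `wrap_int32` and `trunc_div2`, which are assumptions and not library functions. With them, the naive midpoint goes negative, while the rewritten form stays correct. The index values are chosen only to trigger the overflow.

```python
def wrap_int32(x):
    """Illustrative helper: reduce x to a signed 32-bit value, as two's-complement hardware would."""
    return (x + 2**31) % 2**32 - 2**31

def trunc_div2(x):
    """Divide by 2 rounding toward zero, like integer division in C or Java."""
    return -((-x) // 2) if x < 0 else x // 2

def naive_mid(low, high):
    # (low + high) / 2: the sum itself can exceed 2**31 - 1 and wrap to a negative number.
    return trunc_div2(wrap_int32(low + high))

def safe_mid(low, high):
    # low + (high - low) / 2: for 0 <= low <= high, no intermediate value leaves the 32-bit range.
    return wrap_int32(low + trunc_div2(wrap_int32(high - low)))

low, high = 2_000_000_000, 2_100_000_000   # both valid 32-bit values
print(naive_mid(low, high))   # -97483648: unusable as an array index
print(safe_mid(low, high))    # 2050000000
```

In Java, Bloch's post on the library bug also mentions the unsigned shift `(low + high) >>> 1` as an alternative fix. It works because the wrapped sum is still correct when its bits are read as an unsigned value.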

An infinite loop may occur if the exit conditions for the loop are not defined correctly. Once $L$ exceeds $R$, the search has failed and must convey the failure of the search. In addition, the loop must be exited when the target element is found. In an implementation where this check is moved to the end, checks for whether the search succeeded or failed must be in place after the loop. Bentley found that most of the programmers who incorrectly implemented binary search made an error in defining the exit conditions.[8][70]
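An implementation that defers the equality check to the end, in the spirit of the Bottenbruch variant mentioned in the history above, shows how exact the exit conditions must be. The following is a minimal sketch of that idea, not a transcription of Bottenbruch's ALGOL 60 code. Returning -1 on failure is an assumed convention.

```python
def binary_search_deferred(arr, target):
    """Return an index of target in sorted arr, or -1; equality is tested only once, after the loop."""
    if not arr:
        return -1                      # empty array: report failure without indexing
    low, high = 0, len(arr) - 1
    while low != high:                 # exit as soon as a single candidate remains
        # Round the midpoint up: with high == low + 1, rounding down would give
        # mid == low, and the update low = mid would then never make progress.
        mid = low + (high - low + 1) // 2
        if arr[mid] > target:
            high = mid - 1             # target, if present, lies left of mid
        else:
            low = mid                  # arr[mid] <= target: keep mid as a candidate
    # The loop only narrows the range; success or failure is decided here.
    return low if arr[low] == target else -1

print(binary_search_deferred([1, 3, 5, 7, 9], 7))   # 3
```

Each iteration makes a single comparison, at the price of always running until one candidate remains. When the target occurs more than once, this version returns its last occurrence.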

Library support

Many languages' standard libraries include binary search routines:

- C provides the function bsearch() in its standard library, which is typically implemented via binary search, although the official standard does not require it.[71]

- C++'s standard library provides the functions binary_search(), lower_bound(), upper_bound() and equal_range().[72] Using the C++20 std::ranges library, it can be applied over a range as std::ranges::binary_search().

- D's standard library Phobos, in the std.range module, provides a type SortedRange (returned by the sort() and assumeSorted() functions) with methods contains(), equalRange(), lowerBound() and trisect(), which use binary search techniques by default for ranges that offer random access.[73]

- COBOL provides the SEARCH ALL verb for performing binary searches on COBOL ordered tables.[74]

- Go's sort standard library package contains the functions Search, SearchInts, SearchFloat64s, and SearchStrings, which implement general binary search, as well as specific implementations for searching slices of integers, floating-point numbers, and strings, respectively.[75]

- Java offers a set of overloaded binarySearch() static methods in the classes Arrays and Collections in the standard java.util package for performing binary searches on Java arrays and on Lists, respectively.[76][77]

- Microsoft's .NET Framework 2.0 offers static generic versions of the binary search algorithm in its collection base classes. An example is System.Array's method BinarySearch<T>(T[] array, T value).[78]

- For Objective-C, the Cocoa framework provides the NSArray -indexOfObject:inSortedRange:options:usingComparator: method in Mac OS X 10.6+.[79] Apple's Core Foundation C framework also contains a CFArrayBSearchValues() function.[80]

- Python provides the bisect module, which keeps a list in sorted order without having to sort the list after each insertion.[81]

- Ruby's Array class includes a bsearch method with built-in approximate matching.[82]

- Rust's slice primitive provides binary_search(), binary_search_by(), binary_search_by_key(), and partition_point().[83]
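As an example of how these routines are typically used, here is a short sketch with Python's bisect module. It turns bisect_left into an exact-match lookup and uses insort to insert while keeping the list sorted. The helper name `index_of` and the -1 convention are illustrative assumptions, not part of the module.

```python
import bisect

def index_of(sorted_list, x):
    """Return the index of x in sorted_list, or -1 if absent (illustrative helper)."""
    i = bisect.bisect_left(sorted_list, x)    # first position where x could be inserted
    if i < len(sorted_list) and sorted_list[i] == x:
        return i
    return -1

scores = [12, 25, 31, 47]
bisect.insort(scores, 30)         # binary search for the slot, then insert: stays sorted
print(scores)                     # [12, 25, 30, 31, 47]
print(index_of(scores, 31))       # 3
print(index_of(scores, 26))       # -1
```

The binary search makes finding the insertion point O(log n). The insertion itself still shifts elements in the underlying list, which costs O(n).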

See also

- Bisection method – Algorithm for finding a zero of a function – the same idea used to solve equations in the real numbers

- Multiplicative binary search – Binary search variation with simplified midpoint calculation

Notes and references

This article was submitted to WikiJournal of Science for external academic peer review in 2018 (reviewer reports). The updated content was reintegrated into the Wikipedia page under a CC-BY-SA-3.0 license (2019). The version of record as reviewed is:

Anthony Lin; et al. (2 July 2019). "Binary search algorithm" (PDF). WikiJournal of Science. 2 (1): 5. doi:10.15347/WJS/2019.005. ISSN 2470-6345. Wikidata Q81434400.

Notes

- ^ The $O$ is Big O notation, and $\log$ is the logarithm. In Big O notation, the base of the logarithm does not matter since every logarithm of a given base is a constant factor of another logarithm of another base. That is, $\log_b(n) = \log_k(n) \div \log_k(b)$, where $\log_k(b)$ is a constant.

- ^ Any search algorithm based solely on comparisons can be represented using a binary comparison tree. An internal path is any path from the root to an existing node. Let $I$ be the internal path length, the sum of the lengths of all internal paths. If each element is equally likely to be searched, the average case is $1 + \frac{I}{n}$, or simply one plus the average of all the internal path lengths of the tree. This is because internal paths represent the elements that the search algorithm compares to the target. The lengths of these internal paths represent the number of iterations after the root node. Adding the average of these lengths to the one iteration at the root yields the average case. Therefore, to minimize the average number of comparisons, the internal path length $I$ must be minimized. It turns out that the tree for binary search minimizes the internal path length. Knuth 1998 proved that the external path length $E$ (the path length over all nodes where both children are present for each already-existing node) is minimized when the external nodes (the nodes with no children) lie within two consecutive levels of the tree. This also applies to internal paths, as internal path length $I$ is linearly related to external path length $E$: for any tree of $n$ nodes, $I = E - 2n$. When each subtree has a similar number of nodes, or equivalently the array is divided into halves in each iteration, the external nodes as well as their interior parent nodes lie within two levels. It follows that binary search minimizes the average number of comparisons, as its comparison tree has the lowest possible internal path length.[14]

- ^ Knuth 1998 showed on his MIX computer model, which Knuth designed as a representation of an ordinary computer, that the average running time of this variation for a successful search is $17.5\log_2 n + 17$ units of time compared to $18\log_2 n - 16$ units for regular binary search. The time complexity for this variation grows slightly more slowly, but at the cost of higher initial complexity.[18]

- ^ Knuth 1998 performed a formal time performance analysis of both of these search algorithms. On Knuth's MIX computer, which Knuth designed as a representation of an ordinary computer, binary search takes on average $18\log_2 n - 16$ units of time for a successful search, while linear search with a sentinel node at the end of the list takes $1.75n + 8.5 - \frac{n \bmod 2}{4n}$ units. Linear search has lower initial complexity because it requires minimal computation, but it quickly outgrows binary search in complexity. On the MIX computer, binary search only outperforms linear search with a sentinel if $n > 44$.[14][27]

- ^ Inserting the values in sorted order or in an alternating lowest-highest key pattern will result in a binary search tree that maximizes the average and worst-case search time.[32]

- ^ It is possible to search some hash table implementations in guaranteed constant time.[37]

- ^ This is because simply setting all of the bits which the hash functions point to for a specific key can affect queries for other keys which have a common hash location for one or more of the functions.[42]

- ^ There exist improvements of the Bloom filter which improve on its complexity or support deletion; for example, the cuckoo filter exploits cuckoo hashing to gain these advantages.[42]

- ^ That is, arrays of length 1, 3, 7, 15, 31 ...[62]

Citations

- ^ Williams, Jr., Louis F. (22 April 1976). A modification to the half-interval search (binary search) method. Proceedings of the 14th ACM Southeast Conference. ACM. pp. 95–101. doi:10.1145/503561.503582. Archived from the original on 12 March 2017. Retrieved 29 June 2018.

- ^ a b Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Binary search".

- ^ Butterfield & Ngondi 2016, p. 46.

- ^ Cormen et al. 2009, p. 39.

- ^ Weisstein, Eric W. "Binary search". MathWorld.

- ^ a b Flores, Ivan; Madpis, George (1 September 1971). "Average binary search length for dense ordered lists". Communications of the ACM. 14 (9): 602–603. doi:10.1145/362663.362752. ISSN 0001-0782. S2CID 43325465.

- ^ a b c Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Algorithm B".

- ^ a b c d Bottenbruch, Hermann (1 April 1962). "Structure and use of ALGOL 60". Journal of the ACM. 9 (2): 161–221. doi:10.1145/321119.321120. ISSN 0004-5411. S2CID 13406983. Procedure is described at p. 214 (§43), titled "Program for Binary Search".

- ^ a b c d e f Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "History and bibliography".

- ^ a b Kasahara & Morishita 2006, pp. 8–9.

- ^ a b c Sedgewick & Wayne 2011, §3.1, subsection "Rank and selection".

- ^ a b c Goldman & Goldman 2008, pp. 461–463.

- ^ Sedgewick & Wayne 2011, §3.1, subsection "Range queries".

- ^ a b c d e f g h i j k l Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Further analysis of binary search".

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table"), "Theorem B".

- ^ Chang 2003, p. 169.

- ^ a b c Knuth 1997, §2.3.4.5 ("Path length").

- ^ a b Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Exercise 23".

- ^ Rolfe, Timothy J. (1997). "Analytic derivation of comparisons in binary search". ACM SIGNUM Newsletter. 32 (4): 15–19. doi:10.1145/289251.289255. S2CID 23752485.

- ^ Herf, Michael (December 2001). "radix tricks". stereopsis: graphics.

- ^ "Implement total_cmp for f32, f64 by golddranks · Pull Request #72568 · rust-lang/rust". GitHub. – contains relevant quotations from IEEE 754-2008 and -2019. Contains a type-pun implementation and explanation.

- ^ "Binary search *eliminates* branch mispredictions - Paul Khuong: some Lisp". pvk.ca.

- ^ Khuong, Paul-Virak; Morin, Pat (2017). "Array Layouts for Comparison-Based Searching". Journal of Experimental Algorithmics. 22. Article 1.3. arXiv:1509.05053. doi:10.1145/3053370. S2CID 23752485.

- ^ "Binary search is a pathological case for caches - Paul Khuong: some Lisp". pvk.ca.

- ^ Knuth 1997, §2.2.2 ("Sequential Allocation").

- ^ a b c d Beame, Paul; Fich, Faith E. (2001). "Optimal bounds for the predecessor problem and related problems". Journal of Computer and System Sciences. 65 (1): 38–72. doi:10.1006/jcss.2002.1822.

- ^ Knuth 1998, Answers to Exercises (§6.2.1) for "Exercise 5".

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table").

- ^ Knuth 1998, §5.3.1 ("Minimum-Comparison sorting").

- ^ Sedgewick & Wayne 2011, §3.2 ("Ordered symbol tables").

- ^ Sedgewick & Wayne 2011, §3.2 ("Binary Search Trees"), subsection "Order-based methods and deletion".

- ^ Knuth 1998, §6.2.2 ("Binary tree searching"), subsection "But what about the worst case?".

- ^ Sedgewick & Wayne 2011, §3.5 ("Applications"), "Which symbol-table implementation should I use?".

- ^ Knuth 1998, §5.4.9 ("Disks and Drums").

- ^ Knuth 1998, §6.2.4 ("Multiway trees").

- ^ Knuth 1998, §6.4 ("Hashing").

- ^ Knuth 1998, §6.4 ("Hashing"), subsection "History".

- ^ Dietzfelbinger, Martin; Karlin, Anna; Mehlhorn, Kurt; Meyer auf der Heide, Friedhelm; Rohnert, Hans; Tarjan, Robert E. (August 1994). "Dynamic perfect hashing: upper and lower bounds". SIAM Journal on Computing. 23 (4): 738–761. doi:10.1137/S0097539791194094.

- ^ Morin, Pat. "Hash tables" (PDF). p. 1. Archived (PDF) from the original on 9 October 2022. Retrieved 28 March 2016.

- ^ Knuth 2011, §7.1.3 ("Bitwise Tricks and Techniques").

- ^ a b Silverstein, Alan, Judy IV shop manual (PDF), Hewlett-Packard, pp. 80–81, archived (PDF) from the original on 9 October 2022

- ^ a b Fan, Bin; Andersen, Dave G.; Kaminsky, Michael; Mitzenmacher, Michael D. (2014). Cuckoo filter: practically better than Bloom. Proceedings of the 10th ACM International on Conference on Emerging Networking Experiments and Technologies. pp. 75–88. doi:10.1145/2674005.2674994.

- ^ Bloom, Burton H. (1970). "Space/time trade-offs in hash coding with allowable errors". Communications of the ACM. 13 (7): 422–426. CiteSeerX 10.1.1.641.9096. doi:10.1145/362686.362692. S2CID 7931252.

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "An important variation".

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Algorithm U".

- ^ Moffat & Turpin 2002, p. 33.

- ^ a b c Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Interpolation search".

- ^ Knuth 1998, §6.2.1 ("Searching an ordered table"), subsection "Exercise 22".

- ^ Perl, Yehoshua; Itai, Alon; Avni, Haim (1978). "Interpolation search—a log log n search". Communications of the ACM. 21 (7): 550–553. doi:10.1145/359545.359557. S2CID 11089655.

- ^ a b c Chazelle, Bernard; Liu, Ding (6 July 2001). Lower bounds for intersection searching and fractional cascading in higher dimension. 33rd ACM Symposium on Theory of Computing. ACM. pp. 322–329. doi:10.1145/380752.380818. ISBN 978-1-58113-349-3. Retrieved 30 June 2018.

- ^ Chazelle, Bernard; Liu, Ding (1 March 2004). "Lower bounds for intersection searching and fractional cascading in higher dimension" (PDF). Journal of Computer and System Sciences. 68 (2): 269–284. CiteSeerX 10.1.1.298.7772. doi:10.1016/j.jcss.2003.07.003. ISSN 0022-0000. Archived (PDF) from the original on 9 October 2022. Retrieved 30 June 2018.

- ^ Emamjomeh-Zadeh, Ehsan; Kempe, David; Singhal, Vikrant (2016). Deterministic and probabilistic binary search in graphs. 48th ACM Symposium on Theory of Computing. pp. 519–532. arXiv:1503.00805. doi:10.1145/2897518.2897656.

- ^ Ben-Or, Michael; Hassidim, Avinatan (2008). "The Bayesian learner is optimal for noisy binary search (and pretty good for quantum as well)" (PDF). 49th Symposium on Foundations of Computer Science. pp. 221–230. doi:10.1109/FOCS.2008.58. ISBN 978-0-7695-3436-7. Archived (PDF) from the original on 9 October 2022.

- ^ Pelc, Andrzej (1989). "Searching with known error probability". Theoretical Computer Science. 63 (2): 185–202. doi:10.1016/0304-3975(89)90077-7.

- ^ Rivest, Ronald L.; Meyer, Albert R.; Kleitman, Daniel J.; Winklmann, K. Coping with errors in binary search procedures. 10th ACM Symposium on Theory of Computing. doi:10.1145/800133.804351.

- ^ Pelc, Andrzej (2002). "Searching games with errors—fifty years of coping with liars". Theoretical Computer Science. 270 (1–2): 71–109. doi:10.1016/S0304-3975(01)00303-6.

- ^ Rényi, Alfréd (1961). "On a problem in information theory". Magyar Tudományos Akadémia Matematikai Kutató Intézetének Közleményei (in Hungarian). 6: 505–516. MR 0143666.

- ^ Høyer, Peter; Neerbek, Jan; Shi, Yaoyun (2002). "Quantum complexities of ordered searching, sorting, and element distinctness". Algorithmica. 34 (4): 429–448. arXiv:quant-ph/0102078. doi:10.1007/s00453-002-0976-3. S2CID 13717616.

- ^ Childs, Andrew M.; Landahl, Andrew J.; Parrilo, Pablo A. (2007). "Quantum algorithms for the ordered search problem via semidefinite programming". Physical Review A. 75 (3). 032335. arXiv:quant-ph/0608161. Bibcode:2007PhRvA..75c2335C. doi:10.1103/PhysRevA.75.032335. S2CID 41539957.

- ^ Grover, Lov K. (1996). A fast quantum mechanical algorithm for database search. 28th ACM Symposium on Theory of Computing. Philadelphia, PA. pp. 212–219. arXiv:quant-ph/9605043. doi:10.1145/237814.237866.

- ^ Peterson, William Wesley (1957). "Addressing for random-access storage". IBM Journal of Research and Development. 1 (2): 130–146. doi:10.1147/rd.12.0130.

- ^ "2ⁿ − 1". OEIS A000225 Archived 8 June 2016 at the Wayback Machine. Retrieved 7 May 2016.

- ^ Lehmer, Derrick (1960). "Teaching combinatorial tricks to a computer". Combinatorial Analysis. Proceedings of Symposia in Applied Mathematics. Vol. 10. pp. 180–181. doi:10.1090/psapm/010/0113289. ISBN 9780821813102. MR 0113289.

- ^ Chazelle, Bernard; Guibas, Leonidas J. (1986). "Fractional cascading: I. A data structuring technique" (PDF). Algorithmica. 1 (1–4): 133–162. CiteSeerX 10.1.1.117.8349. doi:10.1007/BF01840440. S2CID 12745042.

- ^ Chazelle, Bernard; Guibas, Leonidas J. (1986), "Fractional cascading: II. Applications" (PDF), Algorithmica, 1 (1–4): 163–191, doi:10.1007/BF01840441, S2CID 11232235

- ^ Bentley 2000, §4.1 ("The Challenge of Binary Search").

- ^ Pattis, Richard E. (1988). "Textbook errors in binary searching". SIGCSE Bulletin. 20: 190–194. doi:10.1145/52965.53012.

- ^ Bloch, Joshua (2 June 2006). "Extra, extra – read all about it: nearly all binary searches and mergesorts are broken". Google Research Blog. Archived from the original on 1 April 2016. Retrieved 21 April 2016.

- ^ Ruggieri, Salvatore (2003). "On computing the semi-sum of two integers" (PDF). Information Processing Letters. 87 (2): 67–71. CiteSeerX 10.1.1.13.5631. doi:10.1016/S0020-0190(03)00263-1. Archived (PDF) from the original on 3 July 2006. Retrieved 19 March 2016.

- ^ Bentley 2000, §4.4 ("Principles").

- ^ "bsearch – binary search a sorted table". The Open Group Base Specifications (7th ed.). The Open Group. 2013. Archived from the original on 21 March 2016. Retrieved 28 March 2016.

- ^ Stroustrup 2013, p. 945.

- ^ "std.range - D Programming Language". dlang.org. Retrieved 29 April 2020.

- ^ Unisys (2012), COBOL ANSI-85 programming reference manual, vol. 1, pp. 598–601

- ^ "Package sort". The Go Programming Language. Archived from the original on 25 April 2016. Retrieved 28 April 2016.

- ^ "java.util.Arrays". Java Platform Standard Edition 8 Documentation. Oracle Corporation. Archived from the original on 29 April 2016. Retrieved 1 May 2016.

- ^ "java.util.Collections". Java Platform Standard Edition 8 Documentation. Oracle Corporation. Archived from the original on 23 April 2016. Retrieved 1 May 2016.

- ^ "List<T>.BinarySearch method (T)". Microsoft Developer Network. Archived from the original on 7 May 2016. Retrieved 10 April 2016.

- ^ "NSArray". Mac Developer Library. Apple Inc. Archived from the original on 17 April 2016. Retrieved 1 May 2016.

- ^ "CFArray". Mac Developer Library. Apple Inc. Archived from the original on 20 April 2016. Retrieved 1 May 2016.

- ^ "8.6. bisect — Array bisection algorithm". The Python Standard Library. Python Software Foundation. Archived from the original on 25 March 2018. Retrieved 26 March 2018.

- ^ Fitzgerald 2015, p. 152.

- ^ "Primitive Type slice". The Rust Standard Library. The Rust Foundation. 2024. Retrieved 25 May 2024.

Sources

- Bentley, Jon (2000). Programming pearls (2nd ed.). Addison-Wesley. ISBN 978-0-201-65788-3.

- Butterfield, Andrew; Ngondi, Gerard E. (2016). A dictionary of computer science (7th ed.). Oxford, UK: Oxford University Press. ISBN 978-0-19-968897-5.

- Chang, Shi-Kuo (2003). Data structures and algorithms. Software Engineering and Knowledge Engineering. Vol. 13. Singapore: World Scientific. ISBN 978-981-238-348-8.

- Cormen, Thomas H.; Leiserson, Charles E.; Rivest, Ronald L.; Stein, Clifford (2009). Introduction to algorithms (3rd ed.). MIT Press and McGraw-Hill. ISBN 978-0-262-03384-8.

- Fitzgerald, Michael (2015). Ruby pocket reference. Sebastopol, California: O'Reilly Media. ISBN 978-1-4919-2601-7.

- Goldman, Sally A.; Goldman, Kenneth J. (2008). A practical guide to data structures and algorithms using Java. Boca Raton, Florida: CRC Press. ISBN 978-1-58488-455-2.

- Kasahara, Masahiro; Morishita, Shinichi (2006). Large-scale genome sequence processing. London, UK: Imperial College Press. ISBN 978-1-86094-635-6.

- Knuth, Donald (1997). Fundamental algorithms. The Art of Computer Programming. Vol. 1 (3rd ed.). Reading, MA: Addison-Wesley Professional. ISBN 978-0-201-89683-1.

- Knuth, Donald (1998). Sorting and searching. The Art of Computer Programming. Vol. 3 (2nd ed.). Reading, MA: Addison-Wesley Professional. ISBN 978-0-201-89685-5.

- Knuth, Donald (2011). Combinatorial algorithms. The Art of Computer Programming. Vol. 4A (1st ed.). Reading, MA: Addison-Wesley Professional. ISBN 978-0-201-03804-0.

- Moffat, Alistair; Turpin, Andrew (2002). Compression and coding algorithms. Hamburg, Germany: Kluwer Academic Publishers. doi:10.1007/978-1-4615-0935-6. ISBN 978-0-7923-7668-2.

- Sedgewick, Robert; Wayne, Kevin (2011). Algorithms (4th ed.). Upper Saddle River, New Jersey: Addison-Wesley Professional. ISBN 978-0-321-57351-3. Condensed web version; book version.

- Stroustrup, Bjarne (2013). The C++ programming language (4th ed.). Upper Saddle River, New Jersey: Addison-Wesley Professional. ISBN 978-0-321-56384-2.

External links

Binary search

Arrays.binarySearch and Python's bisect module for tasks ranging from database indexing to optimizing lookups in sorted datasets.[6]

History

Origins and Invention

The conceptual foundations of binary search trace back to ancient practices of organizing data for efficient retrieval, predating its formal algorithmic development by millennia. A Babylonian clay tablet known as the Inakibit-Anu, dating to around 200 BCE, contains one of the earliest known sorted tables, listing numbers alongside their reciprocals to enable quick lookups without exhaustive scanning. Similarly, the Great Library of Alexandria, circa 300 BCE, used the Pinakes catalog compiled by the scholar Callimachus, which sorted over 120,000 scrolls alphabetically by author and subject, facilitating targeted searches in a vast collection. These examples illustrate an early recognition of sorting's role in streamlining access to ordered information, though they lacked the systematic halving mechanism central to binary search.[5]

Indirect philosophical influences on binary search's divide-and-conquer strategy can be found in ancient Greek thought. A notable example is the method of dichotomy (a logical division of a concept into two mutually exclusive parts), developed by Plato in works like the Sophist and Statesman. This dichotomous approach, later refined by Aristotle in his analytical methods, emphasized breaking down problems into simpler components, laying groundwork for algorithmic decomposition centuries later. In the 9th century, the Persian polymath Muhammad ibn Musa al-Khwarizmi advanced systematic problem-solving through his treatise Al-Kitab al-Mukhtasar fi Hisab al-Jabr wal-Muqabala, which introduced step-by-step algebraic algorithms. While it does not describe search directly, his methodical treatment of equations influenced the structured reasoning underpinning later computational techniques.

The explicit invention of binary search as a discrete algorithm emerged in the mid-20th century amid the dawn of electronic computing.
The first recorded mention of "binary search" occurred in 1946 during the Moore School Lectures at the University of Pennsylvania, delivered by John Mauchly, co-designer of the ENIAC computer. In these lectures, the first comprehensive course on computing, Mauchly described binary search as an efficient technique for locating elements in sorted arrays by repeatedly bisecting the search interval, tailored to the limitations of early machines like ENIAC. John von Neumann, a key consultant to the ENIAC project and participant in the lectures, contributed to the broader context of algorithmic efficiency in stored-program computers, helping formalize such methods for practical implementation in electronic data processing. This marked binary search's transition from conceptual idea to a cornerstone of computational searching in sorted lists.[5][7]

Evolution and Key Milestones

Following its conceptual roots in ancient bisection methods for root-finding, binary search saw practical adoption in computing during the 1950s through early programming texts and hardware manuals. This marked an initial milestone in applying the algorithm to real-world data processing challenges in the post-war computing boom.[8]

In the late 1960s and early 1970s, binary search received rigorous formal analysis from Donald Knuth. He examined its correctness, efficiency, and potential pitfalls, including common off-by-one errors in early implementations, in his seminal work The Art of Computer Programming, Volume 3: Sorting and Searching (first edition, 1973). Knuth noted that while the first binary search was published in 1946, the first bug-free version did not appear until 1962. His treatment elevated binary search to a foundational element of algorithm design and analysis.[9] An earlier key refinement was Derrick Henry Lehmer's 1960 improvement, which extended binary search to arrays of arbitrary length rather than only lengths one less than a power of two, resolving a common limitation in prior versions.[5]

The algorithm's evolution continued with its standardization in programming languages. In the 1970s, binary search was integrated into early C libraries, such as the Seventh Edition UNIX system in 1979, via the bsearch function in <stdlib.h>, facilitating its widespread use in systems programming.[10] By the 1990s, it appeared in object-oriented languages, notably Java's Arrays.binarySearch method. This method was introduced in Java 1.2 (1998) and supports both primitive and object arrays for searching sorted collections.

Parallel to these developments, the 1950s and 1960s saw the emergence of variants tailored to specific data distributions. Interpolation search, proposed by W. Wesley Peterson in 1957, improves on binary search for uniformly distributed keys. Rather than always probing the middle, it estimates the target's position from its value. This gives better average-case performance on the large datasets that growing storage capacities were making common. These advancements underscored binary search's adaptability, influencing subsequent search techniques in theoretical and applied computing.

Fundamentals

Prerequisites: Sorted Data Structures

Binary search operates on data structures that are pre-sorted, typically arrays or lists whose elements are arranged in non-decreasing (or non-increasing) order. In the non-decreasing case, for any index $i$, the element at position $i$ is less than or equal to the element at position $i+1$. This monotonic ordering is fundamental, as it allows the algorithm to eliminate half of the search space at each step by comparing the target value to the middle element. Useful properties of such sorted structures include efficient random access (O(1) time for arrays) and contiguous storage without gaps. By contrast, in unsorted collections elements can appear in arbitrary positions.

Sorting is an essential preprocessing step, because binary search only works on ordered input. Common sorting methods include quicksort, with an average time complexity of O(n log n), and mergesort, which guarantees O(n log n) in the worst case. This sorting cost is a one-time overhead, making binary search particularly advantageous for repeated lookups on large, static datasets. It becomes inefficient if the data changes frequently, since each change may force a re-sort. For instance, a database index is sorted once, so each later lookup costs O(log n) instead of paying the O(n log n) sorting cost again. Sorted lists with duplicates require special handling for precise searches, such as finding the first or last occurrence of a value. This can be achieved by modifying the comparison logic to continue searching in the appropriate half even after a match is found. Edge cases include empty lists (immediate failure), single-element lists (a single comparison), and lists where all elements match the target. In the last case, binary search variants keep narrowing until the boundary is identified, still within the O(log n) bound.
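The duplicate handling described above can be sketched as two small variants. When they find a match, they record it and keep searching to the left (for the first occurrence) or to the right (for the last). This is a minimal sketch, and using -1 to signal absence is an assumed convention.

```python
def first_occurrence(arr, target):
    """Index of the first element equal to target in sorted arr, or -1 if absent."""
    low, high, result = 0, len(arr) - 1, -1
    while low <= high:
        mid = low + (high - low) // 2
        if arr[mid] < target:
            low = mid + 1
        elif arr[mid] > target:
            high = mid - 1
        else:
            result = mid          # remember this match...
            high = mid - 1        # ...then keep looking for an earlier one on the left
    return result

def last_occurrence(arr, target):
    """Index of the last element equal to target in sorted arr, or -1 if absent."""
    low, high, result = 0, len(arr) - 1, -1
    while low <= high:
        mid = low + (high - low) // 2
        if arr[mid] < target:
            low = mid + 1
        elif arr[mid] > target:
            high = mid - 1
        else:
            result = mid          # remember this match...
            low = mid + 1         # ...then keep looking for a later one on the right
    return result

data = [1, 2, 2, 2, 3]
print(first_occurrence(data, 2), last_occurrence(data, 2))   # 1 3
```

The edge cases need no special code. An empty list never enters the loop, and an all-equal list simply drives each search to one end. Both variants still halve the interval on every iteration.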
Binary search assumes a static, sorted input, so insertions, deletions, or updates can disrupt the order and necessitate re-sorting or rebuilding the structure. For dynamic scenarios, alternatives such as self-balancing binary search trees (BSTs) are used instead. These keep their elements ordered by rebalancing on each insertion or deletion, at O(log n) cost per operation. This prerequisite distinguishes binary search from more flexible tree-based searches, emphasizing its suitability for read-heavy, unchanging data.

Divide-and-Conquer Principle

The divide-and-conquer paradigm is a foundational algorithm design strategy in computer science. A problem is recursively broken down into smaller subproblems of the same form, these subproblems are solved independently, and their solutions are combined to solve the original problem. This approach leverages recursion to manage complexity, often resulting in efficient solutions for problems amenable to subdivision, such as sorting, matrix multiplication, and searching.[11] In binary search, the principle manifests as the systematic halving of the search interval in a sorted array or list, with the midpoint serving as the reference point for comparison with the target value. If the target equals the midpoint element, the search stops. If it is less or greater, the algorithm discards the irrelevant half of the interval and recurses on the remaining portion, progressively narrowing the search space until the target is found or confirmed absent. Because each step leaves only one subproblem, there are no partial solutions to combine. This repeated division exploits the ordered nature of the data to eliminate large portions of the possible locations with each step. The conceptual origins of divide-and-conquer trace back to 19th-century mathematical practices, including interval-halving techniques like the bisection method for approximating roots of continuous functions, which similarly reduce uncertainty through successive bisections.
These ideas were rigorously adapted and formalized within computer science during the 1970s, notably by Donald Knuth in his 1973 book The Art of Computer Programming, Volume 3: Sorting and Searching, whose analysis integrated them into systematic algorithmic frameworks for discrete problems.[12] A central insight into binary search's performance under this paradigm is captured by its recurrence relation $T(n) = T(n/2) + O(1)$, with the base case $T(1) = O(1)$. The recurrence resolves to $T(n) = O(\log n)$, reflecting a logarithmic recursion depth that keeps the search fast even for very large inputs.[13]

Algorithm Mechanics
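To connect the recurrence $T(n) = T(n/2) + O(1)$ with concrete code, here is a minimal recursive sketch. Each call does constant work and makes at most one recursive call on half of the interval. As an illustrative extra that is not part of the standard algorithm, it also returns how many calls were made, so the logarithmic depth can be checked directly.

```python
def binary_search_recursive(arr, target, low=0, high=None):
    """Return (index, calls): index of target in sorted arr or -1, plus the number of calls made."""
    if high is None:
        high = len(arr) - 1
    if low > high:                      # base case: empty interval, target absent
        return -1, 1
    mid = low + (high - low) // 2       # O(1) work at this level
    if arr[mid] == target:              # base case: found
        return mid, 1
    if arr[mid] < target:               # one recursive call on the upper half: T(n/2)
        index, calls = binary_search_recursive(arr, target, mid + 1, high)
    else:                               # ...or one on the lower half
        index, calls = binary_search_recursive(arr, target, low, mid - 1)
    return index, calls + 1

data = list(range(0, 2000, 2))          # 1000 sorted even numbers
print(binary_search_recursive(data, 1000))
```

For 1000 elements, the interval size can shrink at most as 1000, 500, 250, 125, 62, 31, 15, 7, 3, 1. So no search makes more than 11 calls: 10 that inspect an element and at most one on an empty interval.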

Step-by-Step Execution

Binary search begins by initializing two pointers: low is set to the first index of the sorted array (typically 0), and high is set to the last index.[14] Together they define the initial search interval, which covers the entire array. The algorithm then loops as long as low is less than or equal to high. In each iteration, the middle index is computed with integer (floor) division, mid = (low + high) / 2, and the target value is compared with array[mid]. If the target equals array[mid], the algorithm returns mid as the target's location.[1]

Otherwise, the interval is narrowed. If the target is less than array[mid], high is set to mid - 1, discarding the upper half of the interval; if the target is greater than array[mid], low is set to mid + 1, discarding the lower half. Each step therefore halves the search space, embodying the divide-and-conquer principle.[15] The loop terminates when low exceeds high, which indicates that the target is not in the array; the algorithm then returns -1 or another failure indicator.[16]

To illustrate, consider searching for the value 7 in the sorted array [1, 3, 5, 7, 9], with indices 0 to 4:

- Iteration 1: low = 0, high = 4, mid = 2, array[2] = 5. Since 7 > 5, set low = 3.

- Iteration 2: low = 3, high = 4, mid = 3, array[3] = 7. Since 7 == 7, return index 3.
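The two iterations of this worked example can be reproduced with a short Python sketch. The function name binary_search_trace is hypothetical; it follows the steps described above and additionally records the tuple (low, high, mid, array[mid]) at each iteration so the trace can be printed.

```python
def binary_search_trace(arr, target):
    """Iterative binary search that also records (low, high, mid, arr[mid]) per iteration."""
    low, high = 0, len(arr) - 1
    steps = []
    while low <= high:
        mid = (low + high) // 2          # floor division, as in the description
        steps.append((low, high, mid, arr[mid]))
        if arr[mid] == target:
            return mid, steps            # match: return the index and the trace
        elif arr[mid] < target:
            low = mid + 1                # discard the lower half
        else:
            high = mid - 1               # discard the upper half
    return -1, steps                     # interval empty: target absent


index, steps = binary_search_trace([1, 3, 5, 7, 9], 7)
for low, high, mid, value in steps:
    print(f"low={low} high={high} mid={mid} array[mid]={value}")
print("result:", index)
```

Running it prints the two iterations listed above (mid = 2, then mid = 3) followed by `result: 3`.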

Pseudocode Representation

Binary search is commonly formalized using iterative pseudocode that operates on a sorted array. The following representation assumes zero-based indexing and an array sorted in ascending order, as described in standard algorithmic texts.

```
function binarySearch(array, target):
    low = 0
    high = array.length - 1
    while low <= high:
        mid = floor((low + high) / 2)
        if array[mid] == target:
            return mid
        else if array[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return -1  // target not found
```


The variable low is initialized to the first index of the array and marks the lower bound of the search interval, while high starts at the last index and marks the upper bound. The variable mid holds the midpoint of the current interval and determines which half is discarded next. The loop continues until the interval is empty (low > high), at which point the target is deemed absent.
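Because each pass through the loop leaves an interval of at most half the previous size, the loop runs at most ⌊log2 n⌋ + 1 times before low exceeds high. The sketch below instruments the same loop with a counter and checks that bound exhaustively for small n. The helper names count_iterations and worst_case_iterations are hypothetical; the check relies on Python's int.bit_length(), which equals ⌊log2 n⌋ + 1 for n ≥ 1.

```python
def count_iterations(arr, target):
    """Run the pseudocode's loop on arr and return how many iterations it performs."""
    low, high = 0, len(arr) - 1
    iterations = 0
    while low <= high:
        iterations += 1
        mid = (low + high) // 2
        if arr[mid] == target:
            break
        elif arr[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return iterations


def worst_case_iterations(n):
    """Maximum iteration count over every present value and every gap between values."""
    arr = [2 * i for i in range(n)]   # even numbers 0, 2, ..., 2n - 2
    targets = range(-1, 2 * n)        # odd targets fall between or outside the elements
    return max(count_iterations(arr, t) for t in targets)


# Exhaustive check of the floor(log2 n) + 1 bound for small arrays.
for n in range(1, 200):
    assert worst_case_iterations(n) == n.bit_length()

print(worst_case_iterations(1000))   # 10, since 2**9 <= 1000 < 2**10
```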

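A key implementation detail is the computation of mid. In fixed-width integer arithmetic, the direct formula (low + high) / 2 can overflow when low + high exceeds the largest representable value (for example, 2^31 - 1 for a signed 32-bit integer), even though low and high are each valid indices. The equivalent form low + floor((high - low) / 2) avoids this, because high - low never exceeds the array length.[18]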
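Python integers have arbitrary precision and never overflow, so this sketch emulates signed 32-bit two's-complement arithmetic, as used by Java's int (in C and C++, signed overflow is undefined behavior instead), with a hypothetical helper wrap_int32. The index values are arbitrary illustrations chosen so that their sum exceeds 2^31 - 1.

```python
def wrap_int32(value):
    """Reduce an integer to the signed 32-bit range, wrapping like two's-complement hardware."""
    return (value + 2**31) % 2**32 - 2**31


def mid_naive(low, high):
    # (low + high) / 2 in 32-bit arithmetic: the sum can wrap before the division.
    return wrap_int32(low + high) // 2


def mid_safe(low, high):
    # low + (high - low) / 2: high - low fits in 32 bits whenever 0 <= low <= high.
    return low + (high - low) // 2


low, high = 2_000_000_000, 2_100_000_000   # both are valid 32-bit values
print(mid_naive(low, high))   # -97483648: the sum wrapped to a negative number
print(mid_safe(low, high))    # 2050000000
```

For small indices the two formulas agree; they differ only once low + high leaves the 32-bit range.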

Several adaptations are common. For 1-based indexing, initialize low = 1 and high = array.length; the rest of the loop is unchanged. A recursive variant replaces the loop with function calls on the remaining subinterval until it reaches a base case: an empty interval (low > high) or a match. The form above assumes ascending order; for an array sorted in descending order, swap the < and > comparisons so that the search moves right when array[mid] is greater than the target.
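Two of these adaptations, the recursive variant and the descending-order variant, can be sketched in Python as follows. The function names are hypothetical, and both use the overflow-safe midpoint form for consistency with other languages, although Python itself does not need it.

```python
def binary_search_recursive(arr, target, low=0, high=None):
    """Recursive binary search on an ascending list; returns an index or -1."""
    if high is None:
        high = len(arr) - 1
    if low > high:                        # base case: empty interval, target absent
        return -1
    mid = low + (high - low) // 2
    if arr[mid] == target:                # base case: match
        return mid
    if arr[mid] < target:                 # recurse on the upper half
        return binary_search_recursive(arr, target, mid + 1, high)
    return binary_search_recursive(arr, target, low, mid - 1)   # lower half


def binary_search_descending(arr, target):
    """Iterative binary search on a list sorted in descending order."""
    low, high = 0, len(arr) - 1
    while low <= high:
        mid = low + (high - low) // 2
        if arr[mid] == target:
            return mid
        elif arr[mid] > target:           # '<' swapped for '>': larger values come first
            low = mid + 1
        else:
            high = mid - 1
    return -1
```

For example, binary_search_recursive([1, 3, 5, 7, 9], 9) returns 4 and binary_search_descending([9, 7, 5, 3, 1], 3) returns 3. The recursive form uses one stack frame per halving step.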

Performance Characteristics

Time and Space Complexity

The time complexity of binary search is $O(\log n)$ in the worst and average cases and $O(1)$ in the best case, where $n$ is the number of elements in the sorted array, because the algorithm halves the search space with each comparison. This logarithmic behavior follows from the divide-and-conquer structure: the number of steps satisfies the recurrence $T(n) = T(n/2) + O(1)$ with base case $T(1) = O(1)$. Solving the recurrence with the recursion tree method gives $T(n) = O(\log n)$, a tight bound for the worst and average cases, while the best case needs only a constant number of operations when the target happens to be at the first midpoint.[19] In the worst case, the number of comparisons is at most $\lfloor \log_2 n \rfloor + 1$, the height of the decision tree that distinguishes among the possible outcomes (each position of the element, or its absence).[20]

For space, the iterative implementation uses $O(1)$ auxiliary space, since it needs only a constant number of index variables (low, high, and mid) and works on the array in place. The recursive version instead uses $O(\log n)$ space for the call stack, whose depth equals the number of recursion levels. These bounds depend primarily on $n$ and assume uniform access time to array elements; real-world factors such as cache hierarchies or non-contiguous memory are typically ignored in theoretical analysis.[21]

Comparison with Linear Search

Linear search, also known as sequential search, examines each element in turn from the beginning of the data structure until the target is found or every element has been checked.[18] Its worst-case time complexity is O(n), where n is the number of elements, since it may have to check every item, and it uses O(1) auxiliary space because it allocates no storage beyond the input.[18] Unlike binary search, linear search does not require the data to be sorted, so it applies to unsorted collections without preprocessing.[18]

By contrast, binary search's O(log n) time lets it outperform linear search dramatically on large sorted datasets: about 20 comparisons suffice in the worst case for 1 million elements, versus up to 1 million for linear search.[22] However, if the data are not already ordered, binary search requires an upfront sorting cost of O(n log n), which can outweigh its advantage for infrequent or one-time queries.[18] For the same reason it is less suitable for datasets that change frequently, since repeatedly re-sorting them accumulates significant expense. Binary search is preferable for frequent searches on large, static datasets, where its logarithmic query time justifies the initial sort, while linear search suits small, unsorted, or frequently modified lists, where simplicity and the absence of preprocessing outweigh the performance gain.[22][18] The break-even point, where sorting once and then performing k binary searches costs the same as performing k linear searches, depends on the number of queries k, the sorting overhead, and hardware factors.

Implementations and Variations

Code Examples in Programming Languages

Binary search implementations in programming languages typically follow an iterative approach to locate an element in a sorted array or list: they initialize low and high pointers at the boundaries and repeatedly narrow the search range until the target is found or the range is exhausted.[23]

Python

Python's built-in lists and integer floor division make binary search straightforward to implement without additional libraries. The following function searches for a target value x in a sorted list arr and returns its index if found, or -1 otherwise.[24]

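```python
def binary_search(arr, x):
    """Return the index of x in the ascending list arr, or -1 if it is absent."""
    low, high = 0, len(arr) - 1
    while low <= high:
        mid = (low + high) // 2   # floor division gives an integer midpoint
        if arr[mid] < x:
            low = mid + 1         # x can only be in the upper half
        elif arr[mid] > x:
            high = mid - 1        # x can only be in the lower half
        else:
            return mid            # found
    return -1
```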


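The function uses Python's // operator (floor division) to compute the midpoint.[24] Because Python integers have arbitrary precision, the sum low + high cannot overflow here.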
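Python's standard library also provides the bisect module, whose bisect_left and bisect_right functions return the lower-bound and upper-bound insertion points of a value in a sorted list. An exact-match search can be built on bisect_left, as in this sketch; the wrapper name index_of is hypothetical and not part of the standard library.

```python
from bisect import bisect_left, bisect_right


def index_of(arr, x):
    """Exact-match search built on bisect_left; returns an index or -1."""
    i = bisect_left(arr, x)              # first position whose element is not less than x
    if i < len(arr) and arr[i] == x:     # that element must equal x for a match
        return i
    return -1


data = [1, 3, 3, 3, 5, 7, 9]
print(index_of(data, 7))                              # 5
print(index_of(data, 4))                              # -1
print(bisect_left(data, 3), bisect_right(data, 3))    # 1 4, so the run of 3s is data[1:4]
```

When duplicates are present, the bisect_left-based wrapper returns the leftmost matching index, whereas the hand-written loop may return any one of them.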

Java

In Java, binary search on arrays requires careful handling of array lengths and bounds to avoid index errors. The iterative version below assumes a sorted integer array arr and searches for x, returning the index or -1 if it is not found. It includes an explicit check for empty arrays.[25]

```java
public static int binarySearch(int[] arr, int x) {
    if (arr.length == 0) return -1;
    int low = 0;
    int high = arr.length - 1;
    while (low <= high) {
        int mid = low + (high - low) / 2;
        if (arr[mid] < x) {
            low = mid + 1;
        } else if (arr[mid] > x) {
            high = mid - 1;
        } else {
            return mid;
        }
    }
    return -1;
}
```


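To prevent integer overflow for large indices, the midpoint is computed as low + (high - low) / 2 rather than (low + high) / 2.[25]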

C++

C++ allows generic implementations using templates, enabling reuse across data types. The template function below operates on a sorted std::vector<T> to find x, returning the index or -1. It includes the <algorithm> header for standard utilities, although the core logic relies only on index arithmetic. To mitigate potential integer overflow in the midpoint computation for large indices, it uses low + (high - low) / 2.[26]

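```cpp
#include <vector>
#include <algorithm> // For standard utilities, though not directly used in search logic

// Returns the index of x in the ascending vector arr, or -1 if x is absent.
// Only operator< is required of T.
template<typename T>
int binarySearch(const std::vector<T>& arr, const T& x) {
    if (arr.empty()) return -1;
    int low = 0;
    int high = static_cast<int>(arr.size()) - 1;  // assumes arr.size() fits in int
    while (low <= high) {
        int mid = low + (high - low) / 2;  // overflow-safe midpoint
        if (arr[mid] < x) {
            low = mid + 1;       // x is in the upper half
        } else if (x < arr[mid]) {
            high = mid - 1;      // x is in the lower half
        } else {
            return mid;          // neither is less than the other, so they are equal
        }
    }
    return -1;
}
```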


Common Variations and Adaptations

Lower and upper bound searches adapt standard binary search to find insertion points in a sorted array rather than exact matches. The lower bound variant returns the index of the first element that is not less than the target, which is the position where the target would be inserted to keep the array sorted. This is particularly useful for multisets or for locating the range of duplicate elements. Similarly, the upper bound returns the index of the first element strictly greater than the target, which marks the end of a run of equal elements. Both variants keep the O(log n) time complexity of binary search while giving more informative results when keys are not unique.[11]

Recursive binary search reformulates the iterative version with function calls, recursing on the appropriate half according to the comparison at the midpoint. It terminates when the search interval is empty (low > high), returning "not found", or when the target is matched. The call stack holds the search state, which gives a clean divide-and-conquer structure but costs O(log n) space for the recursion depth. Because the recursive call is a tail call, compilers that perform tail-call optimization can turn it into a loop with the same performance as the iterative version; this optimization is not guaranteed in every language (CPython, for example, does not perform it).[11]

Interpolation search modifies binary search for uniformly distributed keys: instead of the arithmetic middle, it probes a position proportional to the target's value relative to the values at the interval endpoints. Assuming the keys are linearly distributed, the estimated index is low + (target - arr[low]) * (high - low) / (arr[high] - arr[low]). This yields an average-case time complexity of O(log log n) on uniformly distributed data, better than binary search's O(log n), although the worst case degrades to O(n) when the estimates deviate significantly from the true positions. Experimental evaluations have reported that it requires few additional array accesses in practice.[27]

Handling unbounded arrays extends binary search to infinite or dynamically growing sorted structures whose upper limit is unknown. The strategy first establishes bounds by exponential expansion: it starts with a small interval and doubles its upper end until the target is bracketed (i.e., arr[low] ≤ target ≤ arr[high]), which takes O(log position) steps. Standard binary search is then applied within these bounds, for O(log position) time overall, where position is approximately the target's index; this makes the technique suitable for virtual or lazy-loaded arrays. An almost optimal variant refines the doubling step to minimize the number of probes in the worst case.[28]

Applications and Limitations

Real-World Uses