News aggregator

In computing, a news aggregator, also termed a feed aggregator, content aggregator, feed reader, news reader, or simply an aggregator, is client software or a web application that aggregates digital content such as online newspapers, blogs, podcasts, and video blogs (vlogs) in one location for easy viewing. The updates distributed may include journal tables of contents, podcasts, videos, and news items.[1]

Contemporary news aggregators include MSN, Yahoo! News, Feedly, Inoreader, and Mozilla Thunderbird.

Function

Aggregation technology often consolidates (sometimes syndicated) web content into one page that can show only the new or updated information from many sites. Aggregators reduce the time and effort needed to regularly check websites for updates, creating a unique information space or personal newspaper. Once subscribed to a feed, an aggregator is able to check for new content at user-determined intervals and retrieve the update. The content is sometimes described as being pulled to the subscriber, as opposed to pushed with email or IM. Unlike recipients of some push information, the aggregator user can easily unsubscribe from a feed. The feeds are often in the RSS or Atom formats which use Extensible Markup Language (XML) to structure pieces of information to be aggregated in a feed reader that displays the information in a user-friendly interface.[1] Before subscribing to a feed, users have to install either "feed reader" or "news aggregator" applications in order to read it. The aggregator provides a consolidated view of the content in one browser display or desktop application. "Desktop applications offer the advantages of a potentially richer user interface and of being able to provide some content even when the computer is not connected to the Internet. Web-based feed readers offer the great convenience of allowing users to access up-to-date feeds from any Internet-connected computer."[2] Although some applications will have an automated process to subscribe to a news feed, the basic way to subscribe is by simply clicking on the web feed icon and/or text link.[2] Aggregation features are frequently built into web portal sites, in the web browsers themselves, in email applications, or in application software designed specifically for reading feeds. Aggregators with podcasting capabilities can automatically download media files, such as MP3 recordings. 
In some cases, these can be automatically loaded onto portable media players (like iPods) when they are connected to the end user's computer. By 2011, so-called RSS narrators had appeared, which aggregated text-only news feeds and converted them into audio recordings for offline listening. The syndicated content an aggregator retrieves and interprets is usually supplied in the form of RSS or other XML-formatted data, such as RDF/XML or Atom.
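The pull model described above (fetch an XML feed, then show only what is new since the last check) can be sketched in a few lines. This is a minimal illustration rather than how any particular reader works: `SAMPLE_RSS`, `new_items`, and `last_checked` are hypothetical names, and the feed is an inline string where a real aggregator would download it from the feed URL at the user's chosen interval.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

# Hypothetical RSS 2.0 document standing in for a downloaded feed.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example News</title>
    <item>
      <title>Older story</title>
      <link>https://example.com/older</link>
      <pubDate>Mon, 01 Jan 2024 08:00:00 GMT</pubDate>
    </item>
    <item>
      <title>Newer story</title>
      <link>https://example.com/newer</link>
      <pubDate>Tue, 02 Jan 2024 08:00:00 GMT</pubDate>
    </item>
  </channel>
</rss>"""


def new_items(rss_text, last_checked):
    """Return (title, link, published) for items published after last_checked."""
    root = ET.fromstring(rss_text)
    fresh = []
    for item in root.iter("item"):
        # RSS 2.0 dates use the RFC 822 style, which email.utils can parse.
        published = parsedate_to_datetime(item.findtext("pubDate"))
        if published > last_checked:
            fresh.append((item.findtext("title"), item.findtext("link"), published))
    return fresh


# Assumed time of the previous poll; only later items are reported.
last_checked = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
for title, link, published in new_items(SAMPLE_RSS, last_checked):
    print(published.isoformat(), title, link)
```

An Atom feed would need different element names (and XML namespaces), so a real reader typically normalizes both formats into one internal item structure before display.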

History

RSS began in 1999, when it was introduced by web browser pioneer Netscape.[2] In the beginning, RSS was not user-friendly, and it took some years to spread; the early format used an "...RDF-based data model that people inside Netscape felt was too complicated for end users."[3] The rise of RSS began in the early 2000s when the New York Times implemented RSS: "One of the first, most popular sites that offered users the option to subscribe to RSS feeds was the New York Times, and the company's implementation of the format was revered as the 'tipping point' that cemented RSS's position as a de facto standard."[4] "In 2005, major players in the web browser market started integrating the technology directly into their products, including Microsoft's Internet Explorer, Mozilla's Firefox and Apple's Safari." As of 2015, according to BuiltWith.com, there were 20,516,036 live websites using RSS.[5]

Types

Web aggregators gather material from a variety of sources for display in one location. They may additionally process the information after retrieval for individual clients.[6] For instance, Google News gathers and publishes material independently of customers' needs, while Awasu[7] is designed as an individual RSS tool to control and collect information according to clients' criteria. A variety of software applications and components are available to collect, format, translate, and republish XML feeds, a demonstration of presentation-independent data.

News aggregation websites

A news aggregator provides and updates information from different sources in a systematized way. "Some news aggregator services also provide update services, whereby a user is regularly updated with the latest news on a chosen topic".[6] On websites such as Google News, Yahoo News, Bing News, and NewsNow, aggregation is entirely automatic, using algorithms which carry out contextual analysis and group similar stories together.[8] Websites such as Drudge Report and HuffPost supplement aggregated news headline RSS feeds from a number of reputable mainstream and alternative news outlets, while including their own articles in a separate section of the website.[9]

News aggregation websites began with content selected and entered by humans, while automated selection algorithms were eventually developed to fill the content from a range of either automatically selected or manually added sources. Google News launched in 2002 using automated story selection, but humans could add sources to its search engine, while the older Yahoo News, as of 2005, used a combination of automated news crawlers and human editors.[10][11][12]
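The automatic grouping of similar stories mentioned above can be illustrated with a deliberately simple technique: cosine similarity between word-count vectors of headlines. This is an assumption chosen for illustration, not a description of how Google News or any other named site works; the function names, the sample headlines, and the `threshold` value are all hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Turn a headline into a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def group_stories(headlines, threshold=0.5):
    """Greedily place each headline in the first group whose lead headline is similar."""
    groups = []
    for headline in headlines:
        vec = vectorize(headline)
        for group in groups:
            if cosine(vec, vectorize(group[0])) >= threshold:
                group.append(headline)
                break
        else:
            # No existing group was similar enough, so start a new one.
            groups.append([headline])
    return groups


headlines = [
    "Central bank raises interest rates again",
    "Interest rates raised again by central bank",
    "Local team wins championship final",
]
for group in group_stories(headlines):
    print(group)
```

Production systems would more plausibly weight terms (for example with TF-IDF) and compare article bodies rather than headlines; the greedy single pass here simply keeps the sketch short.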

Web-based feed readers

Web-based feed readers allow users to find a web feed on the internet and add it to their feed reader. These are meant for personal use and are hosted on remote servers. Because the application is available via the web, it can be accessed anywhere by a user with an internet connection. There are also more specialized web-based RSS readers.[13]

More advanced methods of aggregating feeds are provided via Ajax coding techniques and XML components called web widgets. Ranging from full-fledged applications to small fragments of source code that can be integrated into larger programs, they allow users to aggregate OPML files, email services, documents, or feeds into one interface. Many customizable homepage and portal implementations provide such functionality.

In addition to aggregator services mainly for individual use, there are web applications that can be used to aggregate several blogs into one. One such variety, called planet sites, is used by online communities to aggregate community blogs in a centralized location. They are named after the Planet aggregator, a server application designed for this purpose.
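The core of a planet-style site, merging entries from several blogs into one stream, can be sketched as follows. This is an illustrative assumption rather than the Planet aggregator's actual code: the entries are hard-coded tuples where a real site would parse each blog's feed, and `merge_feeds` is a hypothetical helper.

```python
from datetime import datetime
from itertools import chain

# Hypothetical, already-parsed entries: (published, blog name, title).
blog_a = [
    (datetime(2024, 1, 3), "Blog A", "Release notes"),
    (datetime(2024, 1, 1), "Blog A", "Happy new year"),
]
blog_b = [
    (datetime(2024, 1, 2), "Blog B", "Conference recap"),
]


def merge_feeds(*feeds):
    """Combine entries from all feeds into one list, newest first."""
    return sorted(chain.from_iterable(feeds), key=lambda entry: entry[0], reverse=True)


for published, blog, title in merge_feeds(blog_a, blog_b):
    print(published.date(), blog, title)
```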

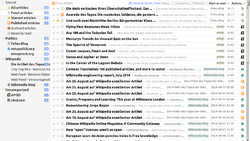

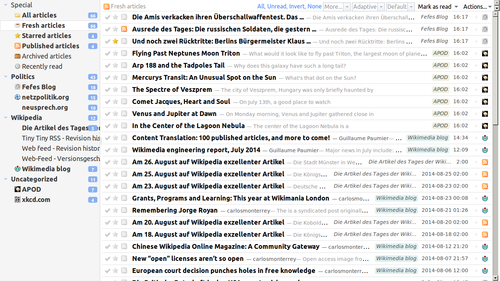

Feed reader applications

Feed aggregation applications are installed on a PC, smartphone or tablet computer and designed to collect news and interest feed subscriptions and group them together using a user-friendly interface. The graphical user interface of such applications often closely resembles that of popular e-mail clients, using a three-panel composition in which subscriptions are grouped in a frame on the left, and individual entries are browsed, selected, and read in frames on the right.

Software aggregators can also take the form of news tickers which scroll feeds like ticker tape, alerters that display updates in windows as they are refreshed, web browser macro tools or as smaller components (sometimes called plugins or extensions), which can integrate feeds into the operating system or software applications such as a web browser.

Social news aggregators

Social news aggregators collect the most popular stories on the Internet, selected, edited, and proposed by a wide range of people. "In these social news aggregators, users submit news items (referred to as "stories"), communicate with peers through direct messages and comments, and collaboratively select and rate submitted stories to get to a real-time compilation of what is currently perceived as "hot" and popular on the Internet."[14] Social news aggregators are based on community engagement: users' responses, engagement level, and contributions to stories create the content and determine what is generated as an RSS feed.

Frame- and media-bias–aware news aggregators

Media bias and framing are concepts that fundamentally explain deliberate or accidental differences in news coverage. A simple example is comparing media coverage of a topic in two countries that are in (armed) conflict with one another: one can easily imagine that news outlets, particularly if state-controlled, will report differently or even contrarily on the same events (for instance, the Russo-Ukrainian War). While media bias and framing have been subject to manual research for a couple of decades in the social sciences, only recently have automated methods and systems been proposed to analyze and show such differences. Such systems make use of text features, e.g., news aggregators that extract key phrases that describe a topic differently, or other features, such as matrix-based news aggregation, which spans a matrix over two dimensions, the first dimension being which country an article was published in, and the second being which country it is reporting on.[15][16]
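The two-dimensional layout of matrix-based news aggregation can be pictured as a table keyed by (country of publication, country reported on). The sketch below only illustrates that data structure under simplifying assumptions; it is not the cited authors' system, and the article records, country codes, and `build_matrix` function are hypothetical.

```python
from collections import defaultdict

# Hypothetical article records with the two dimensions already annotated.
articles = [
    {"title": "Summit ends without agreement", "published_in": "DE", "reports_on": "FR"},
    {"title": "Talks described as constructive", "published_in": "FR", "reports_on": "FR"},
    {"title": "Neighbour's budget under scrutiny", "published_in": "DE", "reports_on": "FR"},
]


def build_matrix(articles):
    """Map each (published_in, reports_on) cell to the titles that fall into it."""
    matrix = defaultdict(list)
    for article in articles:
        cell = (article["published_in"], article["reports_on"])
        matrix[cell].append(article["title"])
    return matrix


matrix = build_matrix(articles)
# Compare how coverage of France differs by publishing country.
for publisher in ("DE", "FR"):
    print(publisher, "on FR:", matrix[(publisher, "FR")])
```

Reading along one column (a fixed reported-on country) puts domestic and foreign coverage of the same country side by side, which is the comparison the matrix is meant to support.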

Media aggregators

Media aggregators are sometimes referred to as podcatchers due to the popularity of the term podcast used to refer to a web feed containing audio or video. Media aggregators are client software or web-based applications which maintain subscriptions to feeds that contain audio or video media enclosures. They can be used to automatically download media, play back the media within the application interface, or synchronize media content with a portable media player. Multimedia aggregators are the current focus. The EU launched the project Reveal This to embed different media platforms in the RSS system: "Integrated infrastructure that will allow the user to capture, store, semantically index, categorize and retrieve multimedia, and multilingual digital content across different sources – TV, radio, music, web, etc. The system will allow the user to personalize the service and will have semantic search, retrieval, summarization."[6]
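In RSS 2.0, media files are attached to items through the `enclosure` element, which carries `url`, `length`, and `type` attributes. A podcatcher's first step, finding the audio or video attached to each item, might look like the sketch below; `PODCAST_RSS` and `media_enclosures` are hypothetical names, and the actual download and device synchronization are left out.

```python
import xml.etree.ElementTree as ET

# Hypothetical podcast feed; one item has a media enclosure, one does not.
PODCAST_RSS = """<rss version="2.0">
  <channel>
    <title>Example Podcast</title>
    <item>
      <title>Episode 1</title>
      <enclosure url="https://example.com/ep1.mp3" length="12345" type="audio/mpeg"/>
    </item>
    <item>
      <title>Show notes only</title>
    </item>
  </channel>
</rss>"""


def media_enclosures(rss_text, prefixes=("audio/", "video/")):
    """Return (item title, enclosure URL) for items carrying audio or video."""
    root = ET.fromstring(rss_text)
    found = []
    for item in root.iter("item"):
        enclosure = item.find("enclosure")
        # Keep only enclosures whose MIME type starts with one of the prefixes.
        if enclosure is not None and enclosure.get("type", "").startswith(prefixes):
            found.append((item.findtext("title"), enclosure.get("url")))
    return found


print(media_enclosures(PODCAST_RSS))
```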

Broadcatching

Broadcatching is a mechanism that automatically downloads BitTorrent files advertised through RSS feeds.[17] Several BitTorrent client software applications such as Azureus and μTorrent have added the ability to broadcatch torrents of distributed multimedia through the aggregation of web feeds.

Feed filtering

One of the problems with news aggregators is that the volume of articles can sometimes be overwhelming, especially when the user has many web feed subscriptions. As a solution, many feed readers allow users to tag each feed with one or more keywords which can be used to sort and filter the available articles into easily navigable categories. Another option is to import the user's Attention Profile to filter items based on their relevance to the user's interests.
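Tag-based filtering of this kind amounts to a lookup from each feed to its user-assigned keywords. The sketch below assumes a simple in-memory model; the feed URLs, tags, and `filter_by_tag` function are hypothetical and do not reflect any specific reader's storage format.

```python
# Hypothetical user-assigned tags per subscribed feed.
feed_tags = {
    "https://example.com/tech.xml": {"technology", "science"},
    "https://example.com/sport.xml": {"sport"},
    "https://example.org/space.xml": {"science"},
}

# Hypothetical fetched articles: (feed URL, article title).
articles = [
    ("https://example.com/tech.xml", "New chip announced"),
    ("https://example.com/sport.xml", "Cup final preview"),
    ("https://example.org/space.xml", "Probe reaches orbit"),
]


def filter_by_tag(articles, feed_tags, tag):
    """Keep only the titles of articles whose feed carries the given tag."""
    return [title for feed, title in articles if tag in feed_tags.get(feed, set())]


print(filter_by_tag(articles, feed_tags, "science"))
```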

RSS and marketing

Some bloggers predicted the death of RSS when Google Reader was shut down.[18][19] Later, however, RSS was considered more of a success as an appealing way to obtain information. "Feedly, likely the most popular RSS reader today, has gone from around 5,000 paid subscribers in 2013 to around 50,000 paid subscribers in early 2015 – that's a 900% increase for Feedly in two years."[20] Customers use RSS to get information more easily while businesses take advantage of being able to spread announcements. "RSS serves as a delivery mechanism for websites to push online content to potential users and as an information aggregator and filter for users."[21] However, it has been pointed out that, in order to push content effectively, RSS should be user-friendly to ensure[22] proactive interaction so that the user can remain engaged without feeling "trapped", well designed so that users are not overwhelmed by stale data, and optimized for both desktop and mobile use. RSS has a positive impact on marketing since it contributes to better search engine rankings, to building and maintaining brand awareness, and to increasing site traffic.[23]

References

1. Miles, Alisha (2009). "RIP RSS: Reviving Innovative Programs through Really Savvy Services". Journal of Hospital Librarianship. 9 (4): 425–432. doi:10.1080/15323260903253753. S2CID 71547323.

2. Doree, Jim (1 January 2007). "RSS: A Brief Introduction". The Journal of Manual & Manipulative Therapy. 15 (1): 57–58. doi:10.1179/106698107791090169. ISSN 1066-9817. PMC 2565593. PMID 19066644.

3. Hammersley, Ben (2005). Developing Feeds with RSS and Atom. Sebastopol: O'Reilly Media, Inc. ISBN 978-0-596-00881-9.

4. "Google Reader is dead but the race to replace the RSS feed is very alive". Digital Trends. July 2013. Retrieved 21 December 2015.

5. "RSS Usage Statistics". trends.builtwith.com. Retrieved 21 December 2015.

6. Chowdhury, Sudatta; Landoni, Monica (2006). "News aggregator services: user expectations and experience". Online Information Review. 30 (2): 100–115. doi:10.1108/14684520610659157.

7. "Welcome to Awasu". www.Awasu.com. Retrieved 27 October 2017.

8. "Google News and newspaper publishers: allies or enemies?". Editorsweblog.org. World Editors Forum. Retrieved 31 March 2009.

9. Luscombe, Belinda (19 March 2009). "Arianna Huffington: The Web's New Oracle". Time. Time Inc. Archived from the original on 21 March 2009. Retrieved 30 March 2009. Quote: "The Huffington Post was to have three basic functions: blog, news aggregator with an attitude and place for premoderated comments."

10. Hansell, Saul (24 September 2002). "All the news Google algorithms say is fit to print". The New York Times. Retrieved 20 January 2014.

11. Hill, Brad (24 October 2005). Google Search & Rescue For Dummies. John Wiley & Sons. p. 85. ISBN 978-0-471-75811-2.

12. LiCalzi O'Connell, Pamela (29 January 2001). "New Economy; Yahoo Charts the Spread of the News by E-Mail, and What It Finds Out Is Itself Becoming News". New York Times.

13. Butler, Declan (25 June 2008). "Scientists get online news aggregator". Nature News. 453 (7199): 1149. doi:10.1038/4531149b. PMID 18580906. S2CID 205037759.

14. Doerr, Christian; Blenn, Norbert; Tang, Siyu; Van Mieghem, Piet (2012). "Are Friends Overrated? A Study for the Social News Aggregator Digg.com". Computer Communications. 35 (7): 796–809. arXiv:1304.2974. doi:10.1016/j.comcom.2012.02.001. ISSN 0140-3664. S2CID 15187700.

15. Hamborg, Felix; Meuschke, Norman; Gipp, Bela (2017). "Matrix-based News Aggregation: Exploring Different News Perspectives". Proceedings of the ACM/IEEE-CS Joint Conference on Digital Libraries (JCDL).

16. Hamborg, Felix; Meuschke, Norman; Gipp, Bela (2018). "Bias-aware News Analysis using Matrix-based News Aggregation". International Journal on Digital Libraries (IJDL).

17. Zhang, Zengbin; Lin, Yuan; Chen, Yang; Xiong, Yongqiang; Shen, Jacky; Liu, Hongqiang; Deng, Beixing; Li, Xing (2009). "Experimental Study of Broadcatching in BitTorrent". 2009 6th IEEE Consumer Communications and Networking Conference. pp. 1–5. CiteSeerX 10.1.1.433.6539. doi:10.1109/CCNC.2009.4784862. ISBN 978-1-4244-2308-8. S2CID 342057.

18. "R.I.P. RSS? Google to shut down Google Reader". www.Gizmag.com. 14 March 2013. Retrieved 27 October 2017.

19. Olanoff, Drew. "Google Reader's Death Is Proof That RSS Always Suffered From Lack Of Consumer Appeal". Techcrunch. Retrieved 27 October 2017.

20. "Is RSS Dead? A Look At The Numbers". MakeUseOf. 25 March 2015. Retrieved 21 December 2015.

21. Ma, Dan (1 December 2012). "Use of RSS feeds to push online content to users". Decision Support Systems. 54 (1): 740–749. doi:10.1016/j.dss.2012.09.002.

22. "Google Reader is dead but the race to replace the RSS feed is very alive". Digital Trends. July 2013. Retrieved 21 December 2015.

23. Hammersley, Ben (2005). Developing Feeds with RSS and Atom. California: O'Reilly Media, Inc. p. 11. ISBN 9780596519001.

Definition and Core Principles

Fundamental Functionality

News aggregators fundamentally collect syndicated content from diverse online sources and compile it into a single, accessible interface, enabling users to monitor updates efficiently without navigating multiple websites individually. This core operation hinges on web syndication protocols, primarily RSS (Really Simple Syndication) and Atom, which structure content as XML files containing elements like headlines, summaries, publication timestamps, and hyperlinks to full articles. Publishers expose these feeds via dedicated URLs, allowing aggregators to subscribe and automate retrieval.[10][11]

The aggregation process begins with periodic polling of subscribed feeds, where software queries the URLs at set intervals (often ranging from minutes for high-volume sources to hours for less frequent updates) to detect new entries. Retrieved XML data is then parsed to extract and normalize information, followed by indexing for storage and display, typically in chronological order or user-defined categories. Basic implementations emphasize unadulterated presentation of source-provided metadata to preserve original context, while avoiding substantive rewriting to minimize distortion.[12][13]

Deduplication mechanisms, such as comparing titles, URLs, or content hashes, prevent redundant listings of identical stories across sources, enhancing usability. Where syndication feeds are absent, some aggregators employ web scraping to crawl and parse HTML pages for equivalent data, though this method depends on site structure stability and is subject to robots.txt directives and legal restrictions on automated access. Overall, this functionality democratizes access to information flows, countering silos formed by proprietary platforms, but relies on source diversity to mitigate inherent biases in upstream publishing.[14][12]

Key Technologies and Mechanisms

News aggregators acquire content through standardized syndication protocols, primarily RSS (Really Simple Syndication) and Atom, which enable publishers to distribute structured updates in XML format without requiring direct website access. RSS, introduced in 1999, structures feeds with elements like titles, descriptions, publication dates, and links, allowing aggregators to poll sources periodically for new items.[15] Aggregators use feed parsers to process these XML documents, extracting metadata and content for indexing, while handling enclosures for multimedia. This mechanism supports efficient, low-bandwidth ingestion from compliant sites, though adoption has declined since the mid-2010s due to platform shifts toward social distribution.[16]

For sources lacking feeds, aggregators employ APIs from news providers, which deliver JSON-formatted data via RESTful endpoints, including endpoints for querying by keywords, dates, or categories. Services like NewsAPI aggregate from over 150,000 sources, providing real-time headlines, articles, and sentiment analysis, with rate limits and authentication via API keys to manage access.[17] This approach ensures structured, verifiable data retrieval, reducing parsing errors compared to unstructured web content, and facilitates integration in scalable systems using languages like Python or JavaScript frameworks.[18]

Where APIs or feeds are unavailable, web scraping extracts content by simulating browser requests or using headless crawlers to parse HTML via libraries such as BeautifulSoup or Scrapy. Scrapers target elements like article divs or meta tags, but face challenges including anti-bot measures like CAPTCHAs and dynamic JavaScript rendering, necessitating tools like Selenium or Puppeteer for full-page emulation.[19] Legal considerations arise under terms of service and robots.txt protocols, with some publishers explicitly prohibiting automated extraction.[20]

Post-ingestion, aggregators apply deduplication algorithms to identify and merge near-identical stories across sources using techniques like cosine similarity on TF-IDF vectors or fuzzy hashing. Content is then categorized via keyword matching, NLP for entity recognition, or topic models like LDA.[21]

Personalization relies on machine learning recommenders, employing collaborative filtering to infer preferences from user interactions or content-based methods matching article vectors to user profiles via embeddings from models like BERT. Deep learning architectures, including neural collaborative filtering, enhance accuracy by capturing sequential reading patterns, though they risk filter bubbles by over-emphasizing past behavior.[22] Real-time systems use vector databases for fast similarity searches, enabling dynamic feeds.[23]

Historical Development

Pre-Digital and Early Digital Foundations (Pre-2000)

The concept of news aggregation predates digital technologies, originating with cooperative news-gathering organizations in the mid-19th century that centralized collection and distribution of information to reduce costs and improve efficiency for multiple outlets. The Associated Press (AP), established in 1846 by five New York City newspapers, exemplified this model by pooling resources to hire correspondents and relay dispatches initially via pony express and later telegraph, enabling simultaneous access to shared news content across subscribing publications.[24] Similarly, Reuters, founded in 1851 by Paul Julius Reuter in London, began by disseminating stock prices and commercial intelligence using carrier pigeons and submarine cables before expanding to general news syndication, serving as a model for international aggregation through leased telegraph lines.[25] These wire services functioned as centralized hubs, aggregating reports from global correspondents and distributing them in real-time to newspapers, broadcasters, and other media, thereby laying the groundwork for scalable news dissemination without individual outlets duplicating efforts.[24]

Complementing wire services, press clipping bureaus emerged in the late 19th century as manual aggregation tools tailored for businesses, public figures, and organizations seeking targeted monitoring of media mentions. Henry Romeike established the first such service in London around 1881, systematically scanning newspapers for client-specified keywords and mailing physical clippings, a practice that spread to the United States by 1887 with Romeike's New York branch.[26] Frank Burrelle launched a competing U.S. bureau in 1888, employing readers to comb through hundreds of dailies and deliver customized bundles, which by the early 20th century served thousands of clients and processed millions of clippings annually.[27] These services represented an early form of curated aggregation, prioritizing relevance over volume and prefiguring modern algorithmic filtering by relying on human labor to synthesize dispersed print sources into actionable intelligence.[28]

The shift to early digital foundations began in the mid-20th century with the electrification and computerization of wire services, transitioning aggregation from telegraphic Morse code to automated transmission. By the 1960s, the AP and United Press International (UPI) adopted computerized phototypesetting and electronic data interchange, allowing news wires to be fed directly into printing systems and early databases, which reduced latency and enabled broader syndication to emerging television and radio networks.[24] In the 1980s, proprietary online platforms introduced rudimentary digital aggregation to consumers; Knight-Ridder's Viewtron, launched in 1983, delivered syndicated news headlines and summaries via dial-up videotex to subscribers' terminals, marking one of the first experiments in electronic news bundling from multiple wire sources.[29] Services like Prodigy, starting in 1988, aggregated updates from AP and Reuters for direct computer access, pushing content upon user login and foreshadowing pull-based feeds.[29]

By the 1990s, the World Wide Web accelerated early digital aggregation through portal sites and nascent syndication protocols. Yahoo!, founded in 1994, curated news links from diverse sources into categorized directories, manually aggregating headlines and abstracts to create unified feeds for users navigating the fragmented early internet.[30] Moreover Technologies, emerging in the late 1990s, developed automated web crawlers to index and republish real-time news from thousands of sites, powering enterprise aggregation tools that parsed HTML for headlines and snippets before RSS standardization.[31] Dave Winer's UserLand Software advanced scripting tools around 1997, enabling XML-based "channels" for website content distribution via email or HTTP, serving as a direct precursor to structured feeds by allowing publishers to syndicate updates programmatically.[32] These innovations bridged analog wire models with web-scale automation, emphasizing metadata for discovery and transfer, though limited by dial-up speeds and lack of universal standards until RSS's debut in 1999.[32]

Expansion and Mainstream Adoption (2000-2010)

The expansion of news aggregators in the 2000s was propelled by the maturation of RSS technology, which enabled automated syndication of headlines and content summaries from diverse sources. RSS 2.0, finalized in 2002, standardized feed formats and spurred integration across websites, allowing users to pull updates from multiple publishers without manual navigation.[33] This shift addressed the fragmentation of early web news, where static pages required repeated visits, by enabling real-time aggregation through simple XML-based protocols. By mid-decade, RSS feeds proliferated as blogs and traditional media adopted the format to distribute content efficiently, fostering an ecosystem where aggregators could compile feeds into unified interfaces.

Google News, launched in September 2002, exemplified early mainstream aggregation by algorithmically indexing stories from over 4,000 sources initially, prioritizing relevance via clustering similar reports and user personalization.[34] Complementing this, web-based feed readers like Bloglines, introduced in 2003, allowed users to subscribe to RSS feeds via browser-accessible dashboards, democratizing access beyond desktop software.[35] These tools gained traction amid the blogosphere's boom, with aggregators handling thousands of feeds and reducing information overload through subscription models that emphasized user-curated streams over editorial gatekeeping.

Mainstream adoption accelerated in 2005 with the release of Google Reader on October 7, which offered seamless feed management, sharing, and search within a free web platform, attracting millions of users by simplifying syndication for non-technical audiences.[36] Concurrently, major publishers like The New York Times began providing RSS feeds for headlines in 2004, signaling institutional embrace and expanding aggregator utility to include mainstream journalism.[32] Browser integrations, such as Firefox's RSS support in 2004 and Safari's in 2005, further embedded aggregation into daily browsing, while the RSS feed icon's standardization around 2005-2006 enhanced visibility and usability.[4] This era's growth reflected causal drivers like broadband proliferation and Web 2.0 interactivity, though reliance on voluntary publisher opt-in limited universal coverage compared to later algorithmic scraping.

Contemporary Evolution and Market Growth (2011-Present)

The proliferation of smartphones in the early 2010s catalyzed a shift in news aggregation toward mobile-centric platforms, with apps like Flipboard (launched in 2010 but scaling significantly thereafter) and Google News adapting to touch interfaces and push notifications for real-time content delivery.[30] By 2012, smartphone news users were nearly evenly divided between using their devices as primary platforms alongside laptops, reflecting a 46% reliance on laptops but rapid mobile uptake driven by improved connectivity and app ecosystems.[37] This era saw aggregators evolve from RSS-based feed readers to algorithmically curated feeds incorporating user behavior, social signals, and trending topics, as exemplified by platforms like SmartNews (launched 2012 in Japan, expanding globally) and Toutiao (2012 in China), which prioritized personalized digests over chronological listings.[38]

Market expansion accelerated amid rising digital ad revenues, which rose from 17% of total news industry advertising in 2011 to 29% by 2016, though this failed to fully offset print declines and highlighted aggregators' role in funneling traffic to publishers.[39] Google News, a dominant player, drove over 1.6 billion weekly visits to news publisher sites by January 2018, up more than 25% since early 2017, underscoring its influence on consumption patterns despite debates over revenue sharing with content creators.[40] Platforms such as Yahoo News and Huffington Post outperformed many traditional outlets in audience reach during this period, with aggregators comprising four of the top 25 U.S. online news destinations by 2011 traffic metrics from sources like Hitwise.[41][42]

Into the 2020s, advancements in machine learning enabled deeper personalization and content verification, with aggregators integrating AI for topic clustering, bias detection, and combating misinformation, including tools for deepfake identification to maintain feed integrity.[43] Usage statistics reflect sustained growth, particularly in emerging markets, though referral traffic shares from aggregators dipped to 18-20% of total social/search referrals by the early 2020s compared to prior peaks, prompting publishers to value them for discovery amid platform algorithm changes.[44] Global market valuations for news aggregators were estimated at USD 14.83 billion in 2025, projected to reach USD 29.77 billion by 2033 at a compound annual growth rate reflecting demand for efficient, algorithm-driven information synthesis.[45] This trajectory aligns with broader digital news consumption trends, where aggregators like Feedly and Inoreader sustained niche appeal for customizable feeds, while mainstream apps emphasized scalability and user retention through data-driven curation.[46]

Classifications and Variants

Web-Based News Aggregators

Web-based news aggregators are online platforms accessible primarily through web browsers that collect, organize, and present news content from multiple sources in a centralized interface, often leveraging RSS feeds, APIs, or algorithmic scraping for content ingestion. These services enable users to browse headlines, summaries, and full articles without needing dedicated software installations, distinguishing them from native mobile or desktop applications by prioritizing universal browser compatibility and reduced dependency on device-specific ecosystems. Early implementations drew from portal sites like Yahoo, which began aggregating news links in the 1990s, but modern variants emphasize personalization via machine learning to tailor feeds based on user behavior, location, and explicit preferences.[1][47] Prominent examples include Google News, which processes over 1 billion articles daily from thousands of sources using AI-driven topic clustering and full-text indexing, launched in 2006 after an initial beta in 2002. Feedly, a RSS-focused aggregator, supports web access for subscribing to over 1 million feeds as of 2025, allowing users to organize content into boards and integrate with tools like Zapier for automation. Other notable platforms are AllTop, which categorizes aggregated links from top blogs and sites without algorithmic filtering, and Ground News, which displays news alongside bias ratings from independent evaluators to highlight coverage blind spots across political spectra. 
Self-hosted options like Tiny Tiny RSS enable users to run private instances on personal servers, aggregating feeds via OPML imports and offering features such as keyboard shortcuts and mobile syncing without third-party data sharing.[48][47][49]

Key features of web-based aggregators include search functionalities for querying across sources, customizable topic sections (e.g., Google News' "For You" and "Headlines" tabs), and integration with browser extensions for enhanced clipping or sharing. They often generate revenue through advertising or premium subscriptions, with Google News reporting billions of monthly users driving referral traffic to publishers via outbound links, though this model has faced scrutiny for reducing direct publisher visits by up to 20% in some cases due to in-platform summaries. Unlike app-centric counterparts, web versions typically lack push notifications but excel in SEO discoverability and desktop usability, supporting broader analytics via web traffic tools. Challenges include dependency on source APIs for real-time updates and vulnerability to algorithmic opacity, where curation decisions (such as source prioritization) can inadvertently amplify echo chambers, as evidenced by user studies showing 15-30% variance in story exposure based on initial feed priming.[50][51][49]

Feed Reader Applications

Feed reader applications, commonly referred to as RSS readers, are software tools designed to subscribe to and aggregate content from web feeds in formats like RSS (Really Simple Syndication) and Atom. These applications fetch updates from user-selected sources (such as news sites, blogs, and podcasts) and display them in a centralized, typically chronological feed, enabling efficient consumption of syndicated content without visiting individual websites.[52] In the realm of news aggregation, they provide a direct, algorithm-free alternative to platform-curated timelines, relying on publisher-provided feeds for headlines, summaries, and sometimes full articles.[53]

The foundational RSS specification emerged in March 1999, developed by Netscape as RDF Site Summary to syndicate website metadata and content updates. Early desktop feed readers appeared in the early 2000s, with FeedDemon offering Windows users features like OPML import/export for subscription management and podcast support.[54] The format evolved through versions, including RSS 2.0 in 2002, which standardized broader syndication. Google's Reader service, launched in 2005, popularized web-based feed reading but was discontinued on July 1, 2013, citing insufficient usage amid the shift to social media feeds; this event catalyzed the development of independent alternatives.[32]

Contemporary feed readers vary by platform and hosting model.
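The subscribe, fetch, and display cycle described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not how any particular reader works: the two embedded RSS 2.0 documents are hypothetical stand-ins for feeds fetched over HTTP, the names parse_items and merge_feeds are illustrative, and every item is assumed to carry a well-formed pubDate.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Two small RSS 2.0 documents standing in for fetched feeds (hypothetical data).
FEED_A = """<rss version="2.0"><channel>
  <title>Example Daily</title>
  <link>https://example.com/</link>
  <description>Sample feed A</description>
  <item>
    <title>Budget vote delayed</title>
    <link>https://example.com/budget</link>
    <guid>https://example.com/budget</guid>
    <pubDate>Mon, 06 Jan 2025 09:00:00 GMT</pubDate>
  </item>
  <item>
    <title>Transit fares rise</title>
    <link>https://example.com/fares</link>
    <guid>https://example.com/fares</guid>
    <pubDate>Tue, 07 Jan 2025 08:30:00 GMT</pubDate>
  </item>
</channel></rss>"""

FEED_B = """<rss version="2.0"><channel>
  <title>Example Weather</title>
  <link>https://example.org/</link>
  <description>Sample feed B</description>
  <item>
    <title>Storm warning issued</title>
    <link>https://example.org/storm</link>
    <guid>https://example.org/storm</guid>
    <pubDate>Mon, 06 Jan 2025 18:15:00 GMT</pubDate>
  </item>
</channel></rss>"""


def parse_items(xml_text):
    """Return one dict per <item> in an RSS 2.0 document."""
    channel = ET.fromstring(xml_text).find("channel")
    source = channel.findtext("title", default="")
    items = []
    for item in channel.findall("item"):
        link = item.findtext("link", default="")
        items.append({
            "source": source,
            "title": item.findtext("title", default=""),
            "link": link,
            # Fall back to the link when a feed omits <guid>.
            "id": item.findtext("guid") or link,
            # Assumes <pubDate> is present and in RFC 822 style.
            "published": parsedate_to_datetime(item.findtext("pubDate")),
        })
    return items


def merge_feeds(xml_documents, seen_ids=None):
    """Merge unseen items from several feeds, newest first."""
    seen = set() if seen_ids is None else seen_ids
    merged = []
    for document in xml_documents:
        for entry in parse_items(document):
            if entry["id"] in seen:
                continue  # already shown to the user on an earlier poll
            seen.add(entry["id"])
            merged.append(entry)
    merged.sort(key=lambda entry: entry["published"], reverse=True)
    return merged


if __name__ == "__main__":
    for entry in merge_feeds([FEED_A, FEED_B]):
        print(entry["published"].isoformat(), entry["source"], "-", entry["title"])
```

Passing the same seen_ids set on each poll means a later call returns only items that have not been shown before. A production reader would also need HTTP fetching, Atom support, and tolerance for malformed feeds, none of which this sketch attempts.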
Web-based options like Feedly, which pivoted to RSS aggregation around 2010, support cross-device syncing and integrate AI for topic discovery in paid plans, serving millions of users focused on news and research.[55] Inoreader emphasizes automation, advanced search, and archiving, positioning itself as a robust tool for power users tracking news timelines via feeds.[56] NewsBlur provides filtering to prioritize or exclude content, with partial open-source code enabling self-hosting extensions.[53]

Self-hosted and open-source applications, such as Tiny Tiny RSS (initially released in 2005), allow users to run servers for private aggregation, supporting features like mobile apps and keyboard shortcuts while avoiding third-party data dependencies.[57] Mobile-centric readers include Feeder for Android, an open-source app handling feeds offline, and NetNewsWire for iOS and macOS, which integrates Safari extensions for seamless subscription.[58] These tools mitigate issues like ad overload and tracking prevalent in direct web browsing, though adoption remains niche due to inconsistent feed availability from publishers favoring proprietary apps.[59]

Key advantages for news consumption include user-defined curation, reducing exposure to sensationalism amplified by engagement algorithms on social platforms, and support for full-text extraction where feeds lack it.[53] However, challenges persist, including feed parsing errors from malformed publisher data and the labor of curating subscriptions manually. As of 2025, interest in feed readers persists among privacy advocates and professionals seeking unbiased aggregation, evidenced by ongoing development in open-source communities.[60][61]

Mobile and App-Centric Aggregators

Mobile and app-centric news aggregators are native applications optimized for smartphones and tablets, harnessing device-specific capabilities such as touch gestures, push notifications, geolocation services, and battery-efficient background syncing to deliver aggregated content. These platforms diverged from desktop-focused tools by prioritizing on-the-go consumption, with early innovations like swipeable card interfaces and algorithmic feeds emerging alongside the iPhone (2007) and Android (2008) ecosystems. By 2010, app stores facilitated rapid proliferation, enabling developers to integrate directly with hardware sensors for contextual personalization, such as surfacing local stories based on user proximity.[62]

Prominent examples include Flipboard, which debuted in July 2010 as a "social magazine" app, curating RSS feeds, social media updates, and publisher content into user-flipped digital magazines with over 100 million downloads by the mid-2010s. The app's core mechanism involves machine learning to match user interests with topics, though it has evolved to emphasize human-curated topics amid concerns over algorithmic amplification of unverified sources. Apple News was announced on June 8, 2015, and shipped with iOS 9 that September, aggregating articles from thousands of publishers through proprietary deals and featuring editorially selected "Top Stories" alongside user-customized channels; its Apple News+ tier, introduced in March 2019, bundles premium magazine access for $9.99 monthly, reaching an estimated 100 million monthly users by 2023 through its exclusivity to Apple platforms.[63][64]

Google News, available as a standalone mobile app since 2009 but refined for modern devices, employs AI-driven personalization to organize global headlines into "For You" feeds, topic follows, and "Full Coverage" clusters drawing from over 50,000 sources, with features like audio briefings and offline downloads enhancing accessibility.
RSS-focused apps like Feedly, with iOS and Android clients since around 2010, enable subscription to raw feeds for ad-free, uncluttered reading, supporting up to 100 sources in free tiers and integrating AI for trend detection in premium versions priced at $6 monthly. SmartNews, founded in 2012 in Japan and expanded to the U.S. in 2013, uses a neutral algorithm to prioritize "stories that matter" from 7,000+ outlets, emphasizing speed and local coverage via channels like government and traffic alerts, amassing over 20 million downloads by 2020.[65][55][66]

These apps have driven market expansion, with the global mobile news apps sector valued at $15.51 billion in 2025 and a projected 9.5% CAGR through 2029, driven by freemium models, in-app ads, and subscriptions. However, reliance on opaque algorithms raises verification challenges, as empirical studies indicate potential for echo chambers when source diversity is limited by popularity metrics rather than factual rigor. Push notifications (Flipboard's real-time alerts, for example) boost engagement but increase dependency on app ecosystems, potentially sidelining independent verification in favor of convenience.[67]

Social and User-Driven Aggregators

Social and user-driven news aggregators facilitate content curation through user submissions, voting mechanisms, and community discussions, where prominence is determined by collective upvotes rather than centralized editorial decisions or automated feeds. This model emerged in the mid-2000s as an alternative to traditional syndication, enabling crowdsourced prioritization of stories based on perceived relevance or interest. Platforms of this type often incorporate comment threads to foster debate, though empirical studies indicate that popularity frequently correlates weakly with objective quality, favoring sensational or timely items over substantive analysis.[68][69]

Digg, launched in December 2004 by Kevin Rose and Jay Adelson, exemplified early user-driven aggregation by allowing submissions of links "dug" upward via community votes, reaching over 180,000 registered users by early 2006.[70][71] Its 2010 algorithm overhaul, which reduced user influence in favor of editorial curation, triggered a user revolt and migration to rivals, highlighting tensions between democratic ideals and platform control.[70]

Reddit, founded in June 2005 by Steve Huffman and Alexis Ohanian with initial Y Combinator funding, refined the approach via topic-specific subreddits such as r/news, which by the 2020s served as hubs for user-voted headlines and threaded discourse.[72] With over 500 million monthly active users as of 2024, Reddit's scale has amplified its role in news dissemination, yet analyses reveal systemic left-leaning biases in promoted content, stemming from moderator discretion and user demographics that undervalue conservative perspectives.[73]

Hacker News, initiated in February 2007 by Paul Graham of Y Combinator, targets technology and entrepreneurship audiences, employing a simple upvote system to elevate submissions from a self-selecting community of programmers and founders.[74] Launch traffic hovered at 1,600 daily unique visitors, expanding to around 22,000
by later years through organic growth rather than aggressive marketing.[74]

Other variants, like Slashdot, emphasize geek culture with moderated discussions appended to user-submitted stories. These sites democratize access but risk "mob rule," where transient trends eclipse verified reporting, as evidenced by correlations between vote counts and shareability over factual rigor in comparative studies of Reddit and Hacker News.[68] Recent evolutions, such as Digg's 2025 AI-assisted relaunch under Rose and Ohanian, aim to blend user input with algorithmic aids for moderation, though core reliance on human judgment persists.[75]

Bias-Aware and Specialized Aggregators

Bias-aware news aggregators incorporate mechanisms to highlight or mitigate ideological skews in source selection and presentation, addressing empirical patterns of left-leaning bias in mainstream media outlets and algorithms that amplify such distortions.[8] These tools often rate outlets on a left-to-right spectrum, display comparative coverage of the same events, or flag underreported stories, enabling users to cross-verify claims against diverse perspectives rather than relying on homogenized feeds.[76]

Ground News, founded in 2014 and publicly launched as an app on January 15, 2020, aggregates articles from thousands of sources worldwide and assigns each a bias rating based on editorial stance, allowing users to filter by political lean, view "blind spots" in coverage gaps, and track personal news consumption biases via tools like "My News Bias."[77][78][79] As of 2025, it reports covering over 50,000 news outlets and emphasizes factuality alongside bias to reduce misinformation effects.[50]

AllSides, operational since 2012, complements aggregation with media bias ratings derived from blind surveys, expert reviews, and community feedback, presenting side-by-side headlines from left-, center-, and right-leaning sources in features like Balanced News Briefings.[80][81] Its July 2025 Media Bias Chart update rates aggregators themselves, such as noting Lean Left tendencies in platforms like SmartNews due to disproportionate sourcing from center-left outlets.[82][83] This approach has been credited with fostering causal awareness of how source selection influences narrative framing, though ratings remain subjective and evolve with new data.[82]

Specialized aggregators prioritize depth in targeted domains, curating feeds from domain-specific sources to minimize irrelevant general coverage and enhance signal-to-noise ratios for expert users.
Techmeme, launched in 2005, focuses exclusively on technology news, employing algorithmic ranking combined with human oversight to surface real-time headlines from hundreds of tech outlets, with leaderboards tracking influential authors and publications as of September 2025.[84][85] It processes thousands of daily inputs into a concise, archivable format, serving as a primary tool for industry leaders seeking unfiltered tech developments without broader political noise.[86] In finance, Seeking Alpha aggregates user-generated analysis and news from investment-focused sources, emphasizing stock-specific insights and earnings data since its 2004 inception, with over 20 million monthly users as of 2025 relying on its quantitative ratings and crowd-sourced due diligence to inform decisions.[87] These niche tools often integrate verification layers, such as peer-reviewed signals in science aggregators or market data APIs, but can inherit domain-specific biases if source pools remain undiversified.[47]

Technical Underpinnings

Syndication Protocols and Data Ingestion

Syndication protocols standardize the distribution of news content from publishers to aggregators via structured data formats, primarily XML-based web feeds. RSS (Really Simple Syndication), originating in 1999 from Netscape's efforts to summarize site content using RDF, evolved through versions including RSS 0.91 (simplified XML without RDF), RSS 1.0 (RDF-based), and RSS 2.0 (introduced in 2002 by Dave Winer), which remains the most widely supported for its channel-item structure containing elements like title, link, description, publication date, and globally unique identifiers (GUIDs) for items.[88] RSS 2.0 files must conform to XML 1.0 and contain a single channel element with the required title, link, and description; the channel may hold any number of items, each of which needs at least a title or a description. Aggregators detect updates by comparing item timestamps or GUIDs against those already seen.[88]

Atom, developed in 2003 by a working group including Tim Bray to address RSS fragmentation and ambiguities, was standardized as RFC 4287 by the IETF in 2005 as the Atom Syndication Format. Unlike RSS's version-specific dialects, Atom uses XML namespaces for extensibility, mandating elements like feed (analogous to RSS channel), entry (for items), author, and updated timestamps in RFC 3339 format (a profile of ISO 8601), which improves interoperability and supports threading or categories more robustly.

Both protocols facilitate pull-based syndication, where publishers expose feed URLs (often ending in .xml or .rss) that aggregators poll via HTTP GET requests.

Data ingestion in news aggregators involves fetching these feeds at configurable intervals (e.g., every 15-60 minutes to balance freshness and server load), parsing the XML payload, and extracting metadata for storage or rendering. Parsing libraries like Python's feedparser handle RSS/Atom variants by normalizing fields, mapping RSS's to Atom's