Jitter

In electronics and telecommunications, jitter is the deviation from true periodicity of a presumably periodic signal, often in relation to a reference clock signal. In clock recovery applications it is called timing jitter.[1] Jitter is a significant, and usually undesired, factor in the design of almost all communications links.

Jitter can be quantified in the same terms as all time-varying signals, e.g., root mean square (RMS), or peak-to-peak displacement. Also, like other time-varying signals, jitter can be expressed in terms of spectral density.

Jitter period is the interval between two times of maximum effect (or minimum effect) of a signal characteristic that varies regularly with time. Jitter frequency, the more commonly quoted figure, is its inverse. ITU-T G.810 classifies timing variations at frequencies below 10 Hz as wander and those at or above 10 Hz as jitter.[2]

Jitter may be caused by electromagnetic interference and crosstalk with carriers of other signals. Jitter can cause a display monitor to flicker, affect the performance of processors in personal computers, introduce clicks or other undesired effects in audio signals, and cause loss of transmitted data between network devices. The amount of tolerable jitter depends on the affected application.

Metrics

For clock jitter, there are four commonly used metrics:

- Absolute jitter: the absolute difference in the position of a clock's edge from where it would ideally be.

- Maximum time interval error (MTIE): the maximum error committed by a clock under test in measuring a time interval for a given period of time.

- Period jitter (a.k.a. cycle jitter): the difference between any one clock period and the ideal or average clock period. Period jitter tends to be important in synchronous circuitry such as digital state machines, where the error-free operation of the circuitry is limited by the shortest possible clock period (average period less maximum cycle jitter), and the performance of the circuitry is set by the average clock period. Hence, synchronous circuitry benefits from minimizing period jitter, so that the shortest clock period approaches the average clock period.

- Cycle-to-cycle jitter: the difference in duration of any two adjacent clock periods. It can be important for some types of clock generation circuitry used in microprocessors and RAM interfaces.
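As an illustration of the definitions above, the following minimal sketch derives absolute, period, and cycle-to-cycle jitter from a list of clock-edge timestamps. The function name, the choice of the first edge as the reference for the ideal clock, and the sample timestamps (in picoseconds) are assumptions made for the example; MTIE is omitted because it needs a sliding observation window.

```python
import math


def clock_jitter_metrics(edges, nominal_period):
    """Compute simple jitter metrics from clock-edge timestamps.

    edges          -- edge times, all in the same unit (e.g. picoseconds)
    nominal_period -- ideal clock period in that unit
    """
    # Absolute jitter: each edge versus its ideal position, with the ideal
    # clock assumed to start at the first observed edge.
    t0 = edges[0]
    absolute = [t - (t0 + k * nominal_period) for k, t in enumerate(edges)]

    # Period jitter: each measured period versus the ideal period.
    periods = [b - a for a, b in zip(edges, edges[1:])]
    period_jitter = [p - nominal_period for p in periods]

    # Cycle-to-cycle jitter: difference between adjacent periods.
    cycle_to_cycle = [b - a for a, b in zip(periods, periods[1:])]

    return {
        "absolute_peak": max(abs(x) for x in absolute),
        "period_rms": math.sqrt(sum(x * x for x in period_jitter) / len(period_jitter)),
        "period_peak_to_peak": max(period_jitter) - min(period_jitter),
        "shortest_period": min(periods),
        "cycle_to_cycle_peak": max(abs(x) for x in cycle_to_cycle),
    }


# Hypothetical edge times in picoseconds for a nominal 1000 ps clock.
metrics = clock_jitter_metrics([0, 1020, 1990, 3010, 4000], 1000)
print(metrics)
```

The `shortest_period` entry is the figure that bounds error-free operation of synchronous logic, as described for period jitter.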

In telecommunications, the unit used for the above types of jitter is usually the unit interval (UI) which quantifies the jitter in terms of a fraction of the transmission unit period. This unit is useful because it scales with clock frequency and thus allows relatively slow interconnects such as T1 to be compared to higher-speed internet backbone links such as OC-192. Absolute units such as picoseconds are more common in microprocessor applications. Units of degrees and radians are also used.
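The unit conversions can be sketched as follows. The helper name is hypothetical, and the line rates used (1.544 Mbit/s for T1, 9.95328 Gbit/s for OC-192) are the commonly quoted nominal rates, supplied here as assumptions because the text does not state them.

```python
import math


def jitter_in_units(jitter_seconds, bit_rate_hz):
    """Express an absolute jitter value relative to one unit interval (UI)."""
    unit_interval = 1.0 / bit_rate_hz          # duration of one bit period
    ui = jitter_seconds / unit_interval        # jitter as a fraction of a UI
    return {
        "ui": ui,
        "degrees": ui * 360.0,                 # one UI corresponds to 360 degrees
        "radians": ui * 2.0 * math.pi,         # or to 2*pi radians
    }


# Assumed nominal line rates (not given in the text above).
T1_RATE = 1.544e6
OC192_RATE = 9.95328e9

# The same 10 ps of jitter is negligible on T1 but about a tenth of a UI on OC-192.
print(jitter_in_units(10e-12, T1_RATE))
print(jitter_in_units(10e-12, OC192_RATE))
```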

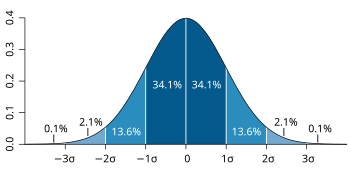

If jitter has a Gaussian distribution, it is usually quantified using the standard deviation of this distribution. This translates to an RMS measurement for a zero-mean distribution. Often, jitter distribution is significantly non-Gaussian. This can occur if the jitter is caused by external sources such as power supply noise. In these cases, peak-to-peak measurements may be more useful. Many efforts have been made to meaningfully quantify distributions that are neither Gaussian nor have a meaningful peak level. All have shortcomings but most tend to be good enough for the purposes of engineering work.

In computer networking, jitter can refer to packet delay variation, the variation (statistical dispersion) in the delay of the packets.

Types

One of the main differences between random and deterministic jitter is that deterministic jitter is bounded and random jitter is unbounded.[3][4]

Random jitter

Random jitter, also called Gaussian jitter, is unpredictable electronic timing noise. Random jitter typically follows a normal distribution[5][6] because it is caused by thermal noise in an electrical circuit.

Deterministic jitter

Deterministic jitter is a type of clock or data signal jitter that is predictable and reproducible. The peak-to-peak value of this jitter is bounded, and the bounds can easily be observed and predicted. Deterministic jitter has a known non-normal distribution. Deterministic jitter can either be correlated to the data stream (data-dependent jitter) or uncorrelated to the data stream (bounded uncorrelated jitter). Examples of data-dependent jitter are duty-cycle dependent jitter (also known as duty-cycle distortion) and intersymbol interference.

Total jitter

| n | BER |
|---|---|
| 6.4 | $10^{-10}$ |
| 6.7 | $10^{-11}$ |
| 7 | $10^{-12}$ |
| 7.3 | $10^{-13}$ |
| 7.6 | $10^{-14}$ |

Total jitter (T) is the combination of random jitter (R) and deterministic jitter (D), computed in the context of the bit error rate (BER) required of the system:[7]

$T = D_{\text{peak-to-peak}} + 2 n R_{\text{rms}}$,

in which the value of n is based on the BER required of the link.

A common BER used in communication standards such as Ethernet is $10^{-12}$.
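A minimal sketch of this calculation is shown below. The function and dictionary names are hypothetical; the values of n are copied from the table in this section, keyed by the exponent of the required BER, and the example jitter figures are invented for illustration.

```python
# Values of n from the table above, keyed by the exponent of the required BER.
N_FOR_BER_EXPONENT = {-10: 6.4, -11: 6.7, -12: 7.0, -13: 7.3, -14: 7.6}


def total_jitter(dj_peak_to_peak, rj_rms, ber_exponent=-12):
    """Total jitter T = D_peak-to-peak + 2 * n * R_rms for the required BER.

    Both jitter inputs must use the same unit (UI, picoseconds, ...);
    the result is in that unit.
    """
    n = N_FOR_BER_EXPONENT[ber_exponent]
    return dj_peak_to_peak + 2.0 * n * rj_rms


# Hypothetical link budget: 0.20 UI deterministic and 0.01 UI RMS random jitter.
print(total_jitter(0.20, 0.01))        # evaluated at a BER of 1e-12
print(total_jitter(0.20, 0.01, -14))   # a stricter BER increases the total
```

Because random jitter is unbounded, its contribution grows as the required BER becomes stricter, while the deterministic term stays fixed.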

Examples

Sampling jitter

In analog-to-digital and digital-to-analog conversion of signals, the sampling is normally assumed to be periodic with a fixed period: the time between every two successive samples is the same. If there is jitter on the clock signal to the analog-to-digital converter or digital-to-analog converter, the time between samples varies and an instantaneous signal error arises. The error is proportional to the slew rate of the desired signal and the absolute value of the clock error. The effect of jitter on the signal depends on the nature of the jitter. Random jitter tends to add broadband noise, while periodic jitter tends to add errant spectral components ("birdies"). In some conditions, less than a nanosecond of jitter can reduce the effective bit resolution of a converter with a Nyquist frequency of 22 kHz to 14 bits.[8]
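The order of magnitude quoted above can be checked with a widely used textbook approximation, which is not taken from the cited source: for a full-scale sine wave of frequency f sampled with RMS clock jitter σ, and assuming jitter is the only noise source, the signal-to-noise ratio is about −20·log10(2π·f·σ) dB, and the effective number of bits is about (SNR − 1.76) / 6.02. The function names below are hypothetical.

```python
import math


def jitter_limited_snr_db(signal_hz, jitter_rms_s):
    """SNR limit (dB) set by sampling-clock jitter for a full-scale sine wave.

    Assumes jitter is the only noise source (textbook approximation).
    """
    return -20.0 * math.log10(2.0 * math.pi * signal_hz * jitter_rms_s)


def effective_bits(snr_db):
    """Effective number of bits corresponding to a given SNR in dB."""
    return (snr_db - 1.76) / 6.02


# A 22 kHz tone sampled with an assumed 0.35 ns of RMS clock jitter.
snr = jitter_limited_snr_db(22e3, 0.35e-9)
print(round(snr, 1), "dB,", round(effective_bits(snr), 1), "bits")
```

Under these assumptions, a few tenths of a nanosecond of jitter at 22 kHz gives roughly 14 effective bits, in line with the figure stated above.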

Sampling jitter is an important consideration in high-frequency signal conversion, or where the clock signal is especially prone to interference.

In digital antenna arrays, ADC and DAC jitter are important factors determining the accuracy of direction-of-arrival estimation[9] and the depth of jammer suppression.[10]

Packet jitter in computer networks

In the context of computer networks, packet jitter or packet delay variation (PDV) is the variation in latency, measured as the variability over time of the end-to-end delay across a network. A network with constant delay has no packet jitter.[11] Packet jitter is expressed as an average of the deviation from the network mean delay.[12] PDV is an important quality of service factor in the assessment of network performance.
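The "average of the deviation from the network mean delay" can be sketched as a mean absolute deviation over measured one-way delays. The function names and the sample delays (in milliseconds) are assumptions for illustration; the second helper lists differences between consecutive packet delays, another common way of looking at delay variation.

```python
def mean_delay_deviation(delays):
    """Average absolute deviation of packet delays from their mean."""
    mean = sum(delays) / len(delays)
    return sum(abs(d - mean) for d in delays) / len(delays)


def consecutive_delay_variation(delays):
    """Delay difference between each packet and the one before it."""
    return [b - a for a, b in zip(delays, delays[1:])]


# Hypothetical one-way delays in milliseconds.
delays_ms = [20, 22, 19, 25, 24]
print(mean_delay_deviation(delays_ms))
print(consecutive_delay_variation(delays_ms))

# A network with constant delay has no packet jitter.
print(mean_delay_deviation([30, 30, 30, 30]))
```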

Transmitting a burst of traffic at a high rate followed by an interval or period of lower or zero rate transmission may also be seen as a form of jitter, as it represents a deviation from the average transmission rate. However, unlike the jitter caused by variation in latency, transmitting in bursts may be seen as a desirable feature,[citation needed] e.g. in variable bitrate transmissions.

Video and image jitter

Video or image jitter occurs when the horizontal lines of video image frames are randomly displaced due to the corruption of synchronization signals or electromagnetic interference during video transmission. Model-based dejittering has been studied within the framework of digital image and video restoration.[13]

Testing

Jitter in serial bus architectures is measured by means of eye patterns. There are standards for jitter measurement in serial bus architectures. The standards cover jitter tolerance, jitter transfer function and jitter generation, with the required values for these attributes varying among different applications. Where applicable, compliant systems are required to conform to these standards.

Testing for jitter and its measurement is of growing importance to electronics engineers because of increased clock frequencies in digital electronic circuitry to achieve higher device performance. Higher clock frequencies have commensurately smaller eye openings, and thus impose tighter tolerances on jitter. For example, modern computer motherboards have serial bus architectures with eye openings of 160 picoseconds or less. This is extremely small compared to parallel bus architectures with equivalent performance, which may have eye openings on the order of 1000 picoseconds.

Jitter is measured and evaluated in various ways depending on the type of circuit under test.[14] In all cases, the goal of jitter measurement is to verify that the jitter will not disrupt normal operation of the circuit.

Testing of device performance for jitter tolerance may involve injection of jitter into electronic components with specialized test equipment.

A less direct approach—in which analog waveforms are digitized and the resulting data stream analyzed—is employed when measuring pixel jitter in frame grabbers.[15]

Mitigation

Anti-jitter circuits

Anti-jitter circuits (AJCs) are a class of electronic circuits designed to reduce the level of jitter in a clock signal. AJCs operate by re-timing the output pulses so they align more closely to an idealized clock. They are widely used in clock and data recovery circuits in digital communications, as well as in data sampling systems such as analog-to-digital and digital-to-analog converters. Examples of anti-jitter circuits include the phase-locked loop and the delay-locked loop.
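The re-timing idea can be illustrated with a toy software model, offered as a sketch only and not as a circuit design: a first-order tracking loop that predicts each edge one nominal period after the previous prediction and corrects itself by a small fraction of the observed phase error, which low-pass filters the jitter much as a phase-locked loop does. The function names, loop gain, jitter level, and random seed are all assumptions.

```python
import math
import random


def retime_edges(observed_edges, nominal_period, gain=0.1):
    """Re-time jittery clock edges with a first-order tracking loop."""
    predicted = observed_edges[0]
    retimed = []
    for edge in observed_edges:
        retimed.append(predicted)
        # Phase detector: distance of the observed edge from the prediction.
        phase_error = edge - predicted
        # Advance one nominal period, corrected by a fraction of the error.
        predicted += nominal_period + gain * phase_error
    return retimed


def rms_jitter(edges, nominal_period):
    """RMS deviation of edges from an ideal clock, ignoring constant offset."""
    errors = [t - k * nominal_period for k, t in enumerate(edges)]
    mean = sum(errors) / len(errors)
    return math.sqrt(sum((e - mean) ** 2 for e in errors) / len(errors))


# Hypothetical clock with a period of 1.0 and Gaussian jitter of 0.05 RMS.
rng = random.Random(0)
period = 1.0
noisy = [k * period + rng.gauss(0.0, 0.05) for k in range(2000)]
clean = retime_edges(noisy, period)

print("input RMS jitter: ", rms_jitter(noisy, period))
print("output RMS jitter:", rms_jitter(clean, period))
```

A smaller gain filters more jitter but makes the loop slower to follow genuine changes in the incoming clock; this model also assumes the nominal period is known exactly, so it does not track frequency offsets.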

Jitter buffers

Jitter buffers or de-jitter buffers are buffers used to counter jitter introduced by queuing in packet-switched networks to ensure continuous playout of an audio or video media stream transmitted over the network.[16] The maximum jitter that can be countered by a de-jitter buffer is equal to the buffering delay introduced before starting the play-out of the media stream. In the context of packet-switched networks, the term packet delay variation is often preferred over jitter.
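The relationship between buffering delay and tolerable jitter can be sketched with a fixed de-jitter buffer that starts play-out a set time after the first packet arrives and then follows the sender's original spacing. The function name and the sample timings (in milliseconds) are assumptions for illustration.

```python
def playout_schedule(send_times, delays, buffering_delay):
    """Return (playout_time, arrived_in_time) for each packet.

    send_times      -- when each packet left the sender
    delays          -- network delay experienced by each packet
    buffering_delay -- wait between first arrival and start of play-out
    """
    arrivals = [s + d for s, d in zip(send_times, delays)]
    # Play-out begins buffering_delay after the first packet arrives.
    start = arrivals[0] + buffering_delay
    schedule = []
    for send, arrival in zip(send_times, arrivals):
        # Each packet is played at the sender's original spacing.
        playout = start + (send - send_times[0])
        schedule.append((playout, arrival <= playout))
    return schedule


# Hypothetical stream: one packet every 20 ms, with varying network delay.
send_ms = [0, 20, 40, 60, 80]
delay_ms = [50, 55, 48, 75, 52]

# With 20 ms of buffering, the packet delayed by 75 ms misses its slot.
print(playout_schedule(send_ms, delay_ms, 20))
# With 30 ms of buffering, every packet arrives in time.
print(playout_schedule(send_ms, delay_ms, 30))
```

In this model a packet is late whenever its delay exceeds the first packet's delay by more than the buffering delay, which is the bound described above.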

Some systems use sophisticated delay-optimal de-jitter buffers that are capable of adapting the buffering delay to changing network characteristics. The adaptation logic is based on the jitter estimates computed from the arrival characteristics of the media packets. Adjustments associated with adaptive de-jittering involve introducing discontinuities in the media play-out, which may be noticeable to the listener or viewer. Adaptive de-jittering is usually carried out for audio play-outs that include voice activity detection, which allows the lengths of the silence periods to be adjusted, thus minimizing the perceptual impact of the adaptation.

Dejitterizer

A dejitterizer is a device that reduces jitter in a digital signal.[17] A dejitterizer usually consists of an elastic buffer in which the signal is temporarily stored and then retransmitted at a rate based on the average rate of the incoming signal. A dejitterizer may not be effective in removing low-frequency jitter (wander).

Filtering and decomposition

A filter can be designed to minimize the effect of sampling jitter.[18]

A jitter signal can be decomposed into intrinsic mode functions (IMFs), which can be further applied for filtering or dejittering.[citation needed]

References

1. Wolaver, Dan H. (1991). Phase-Locked Loop Circuit Design. Prentice Hall. p. 211. ISBN 978-0-13-662743-2.

2. "FTB-8080 Sync Analyzer: Resolving Synchronization Problems in Telecom Networks" (PDF). EXFO. Application note 119. Archived from the original (PDF) on 2012-02-07. Retrieved 2012-08-05.

3. Hagedorn, Julian; Alicke, Falk; Verma, Ankur (August 2017). "How to Measure Total Jitter" (PDF). Texas Instruments. SCAA120B. Retrieved 2018-07-17.

4. "Understanding Jitter Calculations". Teledyne Technologies. July 9, 2014. Retrieved 2018-07-17.

5. Hagedorn, Julian; Alicke, Falk; Verma, Ankur (August 2017). "How to Measure Total Jitter" (PDF). Texas Instruments. SCAA120B. Retrieved 2018-07-17.

6. "Understanding Jitter Calculations". Teledyne Technologies. July 9, 2014. Retrieved 2018-07-17.

7. Stephens, Ransom. "The Meaning of Total Jitter" (PDF). Tektronix. Archived (PDF) from the original on 2022-10-09. Retrieved 2018-07-17.

8. Puente León, Fernando (2015). Messtechnik. Springer. p. 332f. ISBN 978-3-662-44820-5.

9. Bondarenko, M.; Slyusar, V. I. (6 September 2011). "Influence of jitter in ADC on precision of direction-finding by digital antenna arrays" (PDF). Radioelectronics and Communications Systems. 54 (8): 436–445. doi:10.3103/S0735272711080061. S2CID 110506568. Archived (PDF) from the original on 2022-10-09.

10. Bondarenko, M. V.; Slyusar, V. I. "Limiting depth of jammer's suppression in a digital antenna array in conditions of ADC jitter" (PDF). 5th International Scientific Conference on Defensive Technologies, OTEH 2012. Belgrade, Serbia. 18–19 September 2012. pp. 495–497. Archived (PDF) from the original on 2022-10-09.

11. Comer, Douglas E. (2008). Computer Networks and Internets. Prentice Hall. p. 476. ISBN 978-0-13-606127-4.

12. Demichelis, C. (November 2002). IP Packet Delay Variation Metric for IP Performance Metrics (IPPM). IETF. doi:10.17487/RFC3393. RFC 3393.

13. Kang, Sung-Ha; Shen, Jianhong (Jackie) (2006). "Video Dejittering by Bake and Shake". Image and Vision Computing. 24 (2): 143–152. doi:10.1016/j.imavis.2005.09.022.

14. Bondarenko, M.; Slyusar, V. I. (2011). "Methods for estimating the ADC jitter in noncoherent systems" (PDF). Radioelectronics and Communications Systems. 54 (10): 536–545. doi:10.3103/S0735272711100037. Archived (PDF) from the original on 2022-10-09.

15. Khvilivitzky, Alexander (2008). "Pixel Jitter in Frame Grabbers". Retrieved 2015-03-09.

16. "Jitter Buffer". Archived from the original on 2006-05-07. Retrieved 2006-04-03.

17. This article incorporates public domain material from "dejitterizer". Federal Standard 1037C. General Services Administration. Archived from the original on 2022-01-22.

18. Ahmed, Salman; Chen, Tongwen (April 2011). "Minimizing the Effect of Sampling Jitters in Wireless Sensor Networks". IEEE Signal Processing Letters. 18 (4): 219–222. Bibcode:2011ISPL...18..219A. doi:10.1109/LSP.2011.2109711.

Further reading

- Li, Mike P. Jitter and Signal Integrity Verification for Synchronous and Asynchronous I/Os at Multiple to 10 GHz/Gbps. Presented at International Test Conference 2008.

- Li, Mike P. A New Jitter Classification Method Based on Statistical, Physical, and Spectroscopic Mechanisms. Presented at DesignCon 2009.

- Liu, Hui, Hong Shi, Xiaohong Jiang, and Zhe Li. Pre-Driver PDN SSN, OPD, Data Encoding, and Their Impact on SSJ. Presented at Electronics Components and Technology Conference 2009.

- Trischitta, Patrick R.; Varma, Eve L. (1989). Jitter in Digital Transmission Systems. Artech. ISBN 978-0-89006-248-7.

- Zamek, Iliya. SOC-System Jitter Resonance and Its Impact on Common Approach to the PDN Impedance. Presented at International Test Conference 2008.

External links

- Beating the jitter bug at the Wayback Machine (archived 2016-11-30)

- Jitter in VoIP - Causes, solutions and recommended values at the Wayback Machine (archived 2007-10-07)

- An Introduction to Jitter in Communications Systems at the Wayback Machine (archived 2009-01-13)

- Specifications Made Easy at the Wayback Machine (archived 2009-11-17), a Heuristic Discussion of Fibre Channel and Gigabit Ethernet Methods

- Jitter in Packet Voice Networks