State-space representation

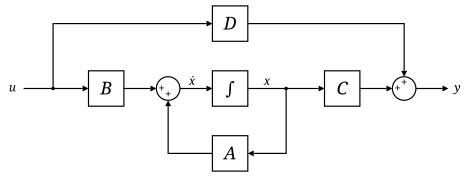

In control engineering and system identification, a state-space representation is a mathematical model of a physical system that uses state variables to track how inputs shape system behavior over time through first-order differential equations or difference equations. These state variables change based on their current values and inputs, while outputs depend on the states and sometimes the inputs too. The state space (also called time-domain approach and equivalent to phase space in certain dynamical systems) is a geometric space where the axes are these state variables, and the system’s state is represented by a state vector.

For linear, time-invariant, and finite-dimensional systems, the equations can be written in matrix form,[1][2] offering a compact alternative to the frequency domain’s Laplace transforms for multiple-input and multiple-output (MIMO) systems. Unlike the frequency domain approach, it works for systems beyond just linear ones with zero initial conditions. This approach turns systems theory into an algebraic framework, making it possible to use Kronecker structures for efficient analysis.

State-space models are applied in fields such as economics,[3] statistics,[4] computer science, electrical engineering,[5] and neuroscience.[6] In econometrics, for example, state-space models can be used to decompose a time series into trend and cycle, compose individual indicators into a composite index,[7] identify turning points of the business cycle, and estimate GDP using latent and unobserved time series.[8][9] Many applications rely on the Kalman Filter or a state observer to produce estimates of the current unknown state variables using their previous observations.[10][11]

State variables

The internal state variables are the smallest possible subset of system variables that can represent the entire state of the system at any given time.[12] The minimum number of state variables required to represent a given system, $n$, is usually equal to the order of the system's defining differential equation, but not necessarily. If the system is represented in transfer function form, the minimum number of state variables is equal to the order of the transfer function's denominator after it has been reduced to a proper fraction. Converting a state-space realization to a transfer function form may lose some internal information about the system, and may provide a description of a system which is stable when the state-space realization is unstable at certain points. In electric circuits, the number of state variables is often, though not always, the same as the number of energy storage elements in the circuit such as capacitors and inductors. The state variables defined must be linearly independent, i.e., no state variable can be written as a linear combination of the other state variables.

Linear systems

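The most general state-space representation of a linear system with $p$ inputs, $q$ outputs and $n$ state variables is written in the following form:[13]

$$\dot{\mathbf{x}}(t) = \mathbf{A}(t)\mathbf{x}(t) + \mathbf{B}(t)\mathbf{u}(t)$$

$$\mathbf{y}(t) = \mathbf{C}(t)\mathbf{x}(t) + \mathbf{D}(t)\mathbf{u}(t)$$

where:

- $\mathbf{x}(\cdot)$ is called the "state vector", $\mathbf{x}(t) \in \mathbb{R}^{n}$;

- $\mathbf{y}(\cdot)$ is called the "output vector", $\mathbf{y}(t) \in \mathbb{R}^{q}$;

- $\mathbf{u}(\cdot)$ is called the "input (or control) vector", $\mathbf{u}(t) \in \mathbb{R}^{p}$;

- $\mathbf{A}(\cdot)$ is the "state (or system) matrix", $\dim[\mathbf{A}(\cdot)] = n \times n$,

- $\mathbf{B}(\cdot)$ is the "input matrix", $\dim[\mathbf{B}(\cdot)] = n \times p$,

- $\mathbf{C}(\cdot)$ is the "output matrix", $\dim[\mathbf{C}(\cdot)] = q \times n$,

- $\mathbf{D}(\cdot)$ is the "feedthrough (or feedforward) matrix" (in cases where the system model does not have a direct feedthrough, $\mathbf{D}(\cdot)$ is the zero matrix), $\dim[\mathbf{D}(\cdot)] = q \times p$,

- $\dot{\mathbf{x}}(t) := \frac{d}{dt}\mathbf{x}(t)$.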

In this general formulation, all matrices are allowed to be time-variant (i.e. their elements can depend on time); however, in the common LTI case, matrices will be time invariant. The time variable can be continuous (e.g. $t \in \mathbb{R}$) or discrete (e.g. $t \in \mathbb{Z}$). In the latter case, the time variable $k$ is usually used instead of $t$. Hybrid systems allow for time domains that have both continuous and discrete parts. Depending on the assumptions made, the state-space model representation can assume the following forms:

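| System type | State-space model |
|---|---|
| Continuous time-invariant | $\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) + \mathbf{B}\mathbf{u}(t)$, $\mathbf{y}(t) = \mathbf{C}\mathbf{x}(t) + \mathbf{D}\mathbf{u}(t)$ |
| Continuous time-variant | $\dot{\mathbf{x}}(t) = \mathbf{A}(t)\mathbf{x}(t) + \mathbf{B}(t)\mathbf{u}(t)$, $\mathbf{y}(t) = \mathbf{C}(t)\mathbf{x}(t) + \mathbf{D}(t)\mathbf{u}(t)$ |
| Explicit discrete time-invariant | $\mathbf{x}(k+1) = \mathbf{A}\mathbf{x}(k) + \mathbf{B}\mathbf{u}(k)$, $\mathbf{y}(k) = \mathbf{C}\mathbf{x}(k) + \mathbf{D}\mathbf{u}(k)$ |
| Explicit discrete time-variant | $\mathbf{x}(k+1) = \mathbf{A}(k)\mathbf{x}(k) + \mathbf{B}(k)\mathbf{u}(k)$, $\mathbf{y}(k) = \mathbf{C}(k)\mathbf{x}(k) + \mathbf{D}(k)\mathbf{u}(k)$ |
| Laplace domain of continuous time-invariant | $s\mathbf{X}(s) - \mathbf{x}(0) = \mathbf{A}\mathbf{X}(s) + \mathbf{B}\mathbf{U}(s)$, $\mathbf{Y}(s) = \mathbf{C}\mathbf{X}(s) + \mathbf{D}\mathbf{U}(s)$ |
| Z-domain of discrete time-invariant | $z\mathbf{X}(z) - z\mathbf{x}(0) = \mathbf{A}\mathbf{X}(z) + \mathbf{B}\mathbf{U}(z)$, $\mathbf{Y}(z) = \mathbf{C}\mathbf{X}(z) + \mathbf{D}\mathbf{U}(z)$ |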
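An explicit discrete time-invariant model of the usual form $\mathbf{x}(k+1) = \mathbf{A}\mathbf{x}(k) + \mathbf{B}\mathbf{u}(k)$, $\mathbf{y}(k) = \mathbf{C}\mathbf{x}(k) + \mathbf{D}\mathbf{u}(k)$ can be simulated by iterating the two equations directly. The following is a minimal sketch, not a reference implementation: the function names and the accumulator example are assumptions made for illustration, and plain Python lists are used to keep it dependency-free.

```python
def mat_vec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def vec_add(a, b):
    """Element-wise sum of two vectors."""
    return [x + y for x, y in zip(a, b)]


def simulate_discrete_lti(A, B, C, D, x0, inputs):
    """Iterate x(k+1) = A x(k) + B u(k), y(k) = C x(k) + D u(k).

    Returns the list of outputs y(0), y(1), ... and the final state.
    """
    x = list(x0)
    outputs = []
    for u in inputs:
        # The output is computed from the current state before it is advanced.
        outputs.append(vec_add(mat_vec(C, x), mat_vec(D, u)))
        x = vec_add(mat_vec(A, x), mat_vec(B, u))
    return outputs, x


# Hypothetical example: an accumulator, x(k+1) = x(k) + u(k), y(k) = x(k).
A = [[1.0]]
B = [[1.0]]
C = [[1.0]]
D = [[0.0]]
ys, x_final = simulate_discrete_lti(A, B, C, D, x0=[0.0], inputs=[[1.0]] * 4)
```

With a constant unit input the output simply counts the elapsed steps, which makes the one-step delay between input and state easy to see.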

Example: continuous-time LTI case

Stability and natural response characteristics of a continuous-time LTI system (i.e., linear with matrices that are constant with respect to time) can be studied from the eigenvalues of the matrix $\mathbf{A}$. The stability of a time-invariant state-space model can be determined by looking at the system's transfer function in factored form. It will then look something like this:

$$\mathbf{G}(s) = k\,\frac{(s - z_{1})(s - z_{2})(s - z_{3})}{(s - p_{1})(s - p_{2})(s - p_{3})(s - p_{4})}.$$

The denominator of the transfer function is equal to the characteristic polynomial found by taking the determinant of $s\mathbf{I} - \mathbf{A}$, $\lambda(s) = \left|s\mathbf{I} - \mathbf{A}\right|$. The roots of this polynomial (the eigenvalues) are the system transfer function's poles (i.e., the singularities where the transfer function's magnitude is unbounded). These poles can be used to analyze whether the system is asymptotically stable or marginally stable. An alternative approach to determining stability, which does not involve calculating eigenvalues, is to analyze the system's Lyapunov stability.

The zeros found in the numerator of $\mathbf{G}(s)$ can similarly be used to determine whether the system is minimum phase.

The system may still be input–output stable (see BIBO stable) even though it is not internally stable. This may be the case if unstable poles are canceled out by zeros (i.e., if those singularities in the transfer function are removable).
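For a two-state system the eigenvalue test can be carried out by hand, because the characteristic polynomial is the quadratic $s^{2} - \operatorname{tr}(\mathbf{A})\,s + \det(\mathbf{A})$. The sketch below assumes a $2 \times 2$ state matrix and checks asymptotic stability (all eigenvalues strictly in the left half-plane); the two matrices are hypothetical examples.

```python
import cmath


def eigenvalues_2x2(A):
    """Roots of the characteristic polynomial s^2 - tr(A) s + det(A)."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0


def is_asymptotically_stable(A):
    """True when every eigenvalue has a strictly negative real part."""
    return all(ev.real < 0.0 for ev in eigenvalues_2x2(A))


# Hypothetical matrices chosen for illustration.
A_stable = [[0.0, 1.0], [-2.0, -3.0]]   # characteristic polynomial (s + 1)(s + 2)
A_unstable = [[0.0, 1.0], [2.0, 1.0]]   # characteristic polynomial (s - 2)(s + 1)
```

For larger systems a numerical eigenvalue routine from a linear-algebra library would normally replace the closed-form quadratic.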

Controllability

The state controllability condition implies that it is possible, by admissible inputs, to steer the states from any initial value to any final value within some finite time window. A continuous time-invariant linear state-space model is controllable if and only if

$$\operatorname{rank}\begin{bmatrix}\mathbf{B} & \mathbf{A}\mathbf{B} & \mathbf{A}^{2}\mathbf{B} & \cdots & \mathbf{A}^{n-1}\mathbf{B}\end{bmatrix} = n,$$

where rank is the number of linearly independent rows in a matrix, and where $n$ is the number of state variables.

Observability

Observability is a measure for how well internal states of a system can be inferred by knowledge of its external outputs. The observability and controllability of a system are mathematical duals (i.e., as controllability provides that an input is available that brings any initial state to any desired final state, observability provides that knowing an output trajectory provides enough information to predict the initial state of the system).

A continuous time-invariant linear state-space model is observable if and only if

$$\operatorname{rank}\begin{bmatrix}\mathbf{C} \\ \mathbf{C}\mathbf{A} \\ \vdots \\ \mathbf{C}\mathbf{A}^{n-1}\end{bmatrix} = n.$$

Transfer function

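The "transfer function" of a continuous time-invariant linear state-space model can be derived in the following way:

First, taking the Laplace transform of

$$\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) + \mathbf{B}\mathbf{u}(t)$$

yields

$$s\mathbf{X}(s) - \mathbf{x}(0) = \mathbf{A}\mathbf{X}(s) + \mathbf{B}\mathbf{U}(s).$$

Next, we simplify for $\mathbf{X}(s)$, giving

$$(s\mathbf{I} - \mathbf{A})\mathbf{X}(s) = \mathbf{x}(0) + \mathbf{B}\mathbf{U}(s)$$

and thus

$$\mathbf{X}(s) = (s\mathbf{I} - \mathbf{A})^{-1}\mathbf{x}(0) + (s\mathbf{I} - \mathbf{A})^{-1}\mathbf{B}\mathbf{U}(s).$$

Substituting for $\mathbf{X}(s)$ in the output equation

$$\mathbf{Y}(s) = \mathbf{C}\mathbf{X}(s) + \mathbf{D}\mathbf{U}(s),$$

giving

$$\mathbf{Y}(s) = \mathbf{C}\left((s\mathbf{I} - \mathbf{A})^{-1}\mathbf{x}(0) + (s\mathbf{I} - \mathbf{A})^{-1}\mathbf{B}\mathbf{U}(s)\right) + \mathbf{D}\mathbf{U}(s).$$

Assuming zero initial conditions $\mathbf{x}(0) = \mathbf{0}$ and a single-input single-output (SISO) system, the transfer function is defined as the ratio of output and input, $G(s) = Y(s)/U(s)$. For a multiple-input multiple-output (MIMO) system, however, this ratio is not defined. Therefore, assuming zero initial conditions, the transfer function matrix is derived from

$$\mathbf{Y}(s) = \mathbf{G}(s)\mathbf{U}(s)$$

using the method of equating the coefficients which yields

$$\mathbf{G}(s) = \mathbf{C}(s\mathbf{I} - \mathbf{A})^{-1}\mathbf{B} + \mathbf{D}.$$

Consequently, $\mathbf{G}(s)$ is a matrix with the dimension $q \times p$ which contains transfer functions for each input output combination. Due to the simplicity of this matrix notation, the state-space representation is commonly used for multiple-input, multiple-output systems. The Rosenbrock system matrix provides a bridge between the state-space representation and its transfer function.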
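The standard result $\mathbf{G}(s) = \mathbf{C}(s\mathbf{I} - \mathbf{A})^{-1}\mathbf{B} + \mathbf{D}$ can be checked numerically by evaluating both sides at a test frequency. The sketch below assumes a two-state SISO system and uses a hypothetical second-order model, $m\ddot{y} + b\dot{y} + ky = u$ with states (position, velocity), whose transfer function is known to be $1/(ms^{2} + bs + k)$; the parameter values are arbitrary.

```python
def transfer_function_2x2(A, B, C, D, s):
    """Evaluate G(s) = C (sI - A)^{-1} B + D for a two-state SISO system.

    A is 2x2 (list of rows), B and C are length-2 lists, D is a scalar.
    """
    (a11, a12), (a21, a22) = A
    # M = sI - A; its determinant is the characteristic polynomial at s.
    m11, m12 = s - a11, -a12
    m21, m22 = -a21, s - a22
    det = m11 * m22 - m12 * m21
    # Inverse of a 2x2 matrix via its adjugate.
    inv = [[m22 / det, -m12 / det], [-m21 / det, m11 / det]]
    # v = (sI - A)^{-1} B, then G = C v + D.
    v = [inv[0][0] * B[0] + inv[0][1] * B[1],
         inv[1][0] * B[0] + inv[1][1] * B[1]]
    return C[0] * v[0] + C[1] * v[1] + D


# Hypothetical parameter values.
m, b, k = 2.0, 3.0, 5.0
A = [[0.0, 1.0], [-k / m, -b / m]]
B = [0.0, 1.0 / m]
C = [1.0, 0.0]
D = 0.0

s = 1.0 + 2.0j                             # arbitrary test point, not a pole
G_state_space = transfer_function_2x2(A, B, C, D, s)
G_direct = 1.0 / (m * s**2 + b * s + k)    # known closed-form transfer function
```

The two values agree to rounding error, which is a quick sanity check that a realization represents the intended transfer function.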

Canonical realizations

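Any given transfer function which is strictly proper can easily be transferred into state-space by the following approach (this example is for a 4-dimensional, single-input, single-output system):

Given a transfer function, expand it to reveal all coefficients in both the numerator and denominator. This should result in the following form:

$$\mathbf{G}(s) = \frac{n_{1}s^{3} + n_{2}s^{2} + n_{3}s + n_{4}}{s^{4} + d_{1}s^{3} + d_{2}s^{2} + d_{3}s + d_{4}}.$$

The coefficients can now be inserted directly into the state-space model by the following approach:

$$\dot{\mathbf{x}}(t) = \begin{bmatrix} 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \\ -d_{4} & -d_{3} & -d_{2} & -d_{1} \end{bmatrix}\mathbf{x}(t) + \begin{bmatrix} 0 \\ 0 \\ 0 \\ 1 \end{bmatrix}\mathbf{u}(t)$$

$$\mathbf{y}(t) = \begin{bmatrix} n_{4} & n_{3} & n_{2} & n_{1} \end{bmatrix}\mathbf{x}(t).$$

This state-space realization is called controllable canonical form because the resulting model is guaranteed to be controllable (i.e., because the control enters a chain of integrators, it has the ability to move every state).

The transfer function coefficients can also be used to construct another type of canonical form

$$\dot{\mathbf{x}}(t) = \begin{bmatrix} 0 & 0 & 0 & -d_{4} \\ 1 & 0 & 0 & -d_{3} \\ 0 & 1 & 0 & -d_{2} \\ 0 & 0 & 1 & -d_{1} \end{bmatrix}\mathbf{x}(t) + \begin{bmatrix} n_{4} \\ n_{3} \\ n_{2} \\ n_{1} \end{bmatrix}\mathbf{u}(t)$$

$$\mathbf{y}(t) = \begin{bmatrix} 0 & 0 & 0 & 1 \end{bmatrix}\mathbf{x}(t).$$

This state-space realization is called observable canonical form because the resulting model is guaranteed to be observable (i.e., because the output exits from a chain of integrators, every state has an effect on the output).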
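Both canonical forms can be assembled mechanically from the coefficient lists. The sketch below assumes one common layout of the controllable canonical form (ones on the superdiagonal of $\mathbf{A}$, negated denominator coefficients in the last row, input entering the last state) and obtains the observable form as its dual; the function names and the numeric coefficients are illustrative assumptions.

```python
def controllable_canonical_form(num, den):
    """Build (A, B, C) for a strictly proper SISO transfer function.

    num = [n_1, ..., n_N] and den = [d_1, ..., d_N] describe
    G(s) = (n_1 s^(N-1) + ... + n_N) / (s^N + d_1 s^(N-1) + ... + d_N);
    the leading 1 of the denominator is omitted.
    """
    n = len(den)
    if len(num) != n:
        raise ValueError("pad the numerator with leading zeros to match den")
    # Chain of integrators: ones on the superdiagonal.
    A = [[1.0 if j == i + 1 else 0.0 for j in range(n)] for i in range(n - 1)]
    # Last row: negated denominator coefficients, lowest order first.
    A.append([-d for d in reversed(den)])
    B = [0.0] * (n - 1) + [1.0]
    C = [float(c) for c in reversed(num)]
    return A, B, C


def observable_canonical_form(num, den):
    """Dual realization: transpose A and swap the roles of B and C."""
    A, B, C = controllable_canonical_form(num, den)
    A_t = [list(col) for col in zip(*A)]
    return A_t, C, B


# Hypothetical fourth-order coefficients.
num = [6.0, 7.0, 8.0, 9.0]   # n_1 .. n_4
den = [2.0, 3.0, 4.0, 5.0]   # d_1 .. d_4
A_c, B_c, C_c = controllable_canonical_form(num, den)
A_o, B_o, C_o = observable_canonical_form(num, den)
```

Building the observable form by transposition makes the duality between the two realizations explicit and avoids duplicating the indexing logic.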

Proper transfer functions

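Transfer functions which are only proper (and not strictly proper) can also be realised quite easily. The trick here is to separate the transfer function into two parts: a strictly proper part and a constant.

$$\mathbf{G}(s) = \mathbf{G}_{\mathrm{SP}}(s) + \mathbf{G}(\infty).$$

The strictly proper transfer function can then be transformed into a canonical state-space realization using techniques shown above. The state-space realization of the constant is trivially $\mathbf{y}(t) = \mathbf{G}(\infty)\mathbf{u}(t)$. Together we then get a state-space realization with matrices A, B and C determined by the strictly proper part, and matrix D determined by the constant.

Here is an example to clear things up a bit:

$$\mathbf{G}(s) = \frac{s^{2} + 3s + 3}{s^{2} + 2s + 1} = \frac{s + 2}{s^{2} + 2s + 1} + 1$$

which yields the following controllable realization

$$\dot{\mathbf{x}}(t) = \begin{bmatrix} 0 & 1 \\ -1 & -2 \end{bmatrix}\mathbf{x}(t) + \begin{bmatrix} 0 \\ 1 \end{bmatrix}\mathbf{u}(t)$$

$$\mathbf{y}(t) = \begin{bmatrix} 2 & 1 \end{bmatrix}\mathbf{x}(t) + \begin{bmatrix} 1 \end{bmatrix}\mathbf{u}(t).$$

Notice how the output also depends directly on the input. This is due to the constant $\mathbf{G}(\infty)$ in the transfer function.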
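The split into a constant and a strictly proper part is a single step of polynomial division. The following sketch assumes numerator and denominator are given as equal-length coefficient lists, highest power first; the function name is hypothetical, and the example reuses the transfer function $(s^{2} + 3s + 3)/(s^{2} + 2s + 1)$.

```python
def split_proper(num, den):
    """Split a proper G(s) = num/den into (G(infinity), strictly proper numerator).

    num and den are coefficient lists of equal length, highest power first.
    The denominator of the strictly proper part is unchanged.
    """
    if len(num) != len(den) or den[0] == 0:
        raise ValueError("expected deg(num) == deg(den) with a nonzero leading term")
    g_inf = num[0] / den[0]   # value of G(s) as s -> infinity; this becomes D
    # Subtract g_inf * den from num; the leading term cancels (up to rounding).
    remainder = [n - g_inf * d for n, d in zip(num, den)]
    return g_inf, remainder[1:]


g_inf, sp_num = split_proper([1.0, 3.0, 3.0], [1.0, 2.0, 1.0])
```

Here `g_inf` is the feedthrough term and `sp_num` holds the coefficients of $s + 2$, the numerator that is then realized with one of the canonical forms.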

Feedback

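A common method for feedback is to multiply the output by a matrix K and set this as the input to the system: $\mathbf{u}(t) = \mathbf{K}\mathbf{y}(t)$. Since the values of K are unrestricted, they can easily be negated for negative feedback. The presence of a negative sign (the common notation) is merely notational, and its absence has no impact on the end results.

$$\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) + \mathbf{B}\mathbf{u}(t)$$

$$\mathbf{y}(t) = \mathbf{C}\mathbf{x}(t) + \mathbf{D}\mathbf{u}(t)$$

becomes

$$\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) + \mathbf{B}\mathbf{K}\mathbf{y}(t)$$

$$\mathbf{y}(t) = \mathbf{C}\mathbf{x}(t) + \mathbf{D}\mathbf{K}\mathbf{y}(t)$$

solving the output equation for $\mathbf{y}(t)$ and substituting in the state equation results in

$$\dot{\mathbf{x}}(t) = \left(\mathbf{A} + \mathbf{B}\mathbf{K}(\mathbf{I} - \mathbf{D}\mathbf{K})^{-1}\mathbf{C}\right)\mathbf{x}(t)$$

$$\mathbf{y}(t) = (\mathbf{I} - \mathbf{D}\mathbf{K})^{-1}\mathbf{C}\mathbf{x}(t)$$

The advantage of this is that the eigenvalues of A can be controlled by setting K appropriately through eigendecomposition of $\left(\mathbf{A} + \mathbf{B}\mathbf{K}(\mathbf{I} - \mathbf{D}\mathbf{K})^{-1}\mathbf{C}\right)$. This assumes that the closed-loop system is controllable or that the unstable eigenvalues of A can be made stable through appropriate choice of K.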

Example

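For a strictly proper system D equals zero. Another fairly common situation is when all states are outputs, i.e. y = x, which yields C = I, the identity matrix. This would then result in the simpler equations

$$\dot{\mathbf{x}}(t) = (\mathbf{A} + \mathbf{B}\mathbf{K})\mathbf{x}(t)$$

$$\mathbf{y}(t) = \mathbf{x}(t)$$

This reduces the necessary eigendecomposition to just $\mathbf{A} + \mathbf{B}\mathbf{K}$.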
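As a small illustration of full-state feedback with $\mathbf{C} = \mathbf{I}$ and $\mathbf{D} = \mathbf{0}$, the sketch below forms $\mathbf{A} + \mathbf{B}\mathbf{K}$ for a hypothetical double integrator and checks where the closed-loop eigenvalues land. The plant and the hand-picked gains are assumptions chosen so that the closed-loop characteristic polynomial is $(s+1)(s+2)$; the sign convention $\mathbf{u} = \mathbf{K}\mathbf{y}$ follows the text.

```python
import cmath


def closed_loop_matrix(A, B, K):
    """Return A + B K for a single-input system (B a column, K a row)."""
    n = len(A)
    return [[A[i][j] + B[i] * K[j] for j in range(n)] for i in range(n)]


def eigenvalues_2x2(M):
    """Roots of s^2 - tr(M) s + det(M)."""
    (a, b), (c, d) = M
    trace, det = a + d, a * d - b * c
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0


# Hypothetical plant: a double integrator (both open-loop eigenvalues at 0).
A = [[0.0, 1.0], [0.0, 0.0]]
B = [0.0, 1.0]

# Gains picked by hand so that det(sI - (A + BK)) = s^2 + 3 s + 2.
K = [-2.0, -3.0]

A_cl = closed_loop_matrix(A, B, K)
poles = eigenvalues_2x2(A_cl)
```

Because the plant is already in a chain-of-integrators form, the entries of K appear directly as coefficients of the closed-loop characteristic polynomial, which is why the gains can be read off without a general pole-placement algorithm.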

Feedback with setpoint (reference) input

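In addition to feedback, an input, $\mathbf{r}(t)$, can be added such that $\mathbf{u}(t) = -\mathbf{K}\mathbf{y}(t) + \mathbf{r}(t)$.

$$\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) + \mathbf{B}\mathbf{u}(t)$$

$$\mathbf{y}(t) = \mathbf{C}\mathbf{x}(t) + \mathbf{D}\mathbf{u}(t)$$

becomes

$$\dot{\mathbf{x}}(t) = \mathbf{A}\mathbf{x}(t) - \mathbf{B}\mathbf{K}\mathbf{y}(t) + \mathbf{B}\mathbf{r}(t)$$

$$\mathbf{y}(t) = \mathbf{C}\mathbf{x}(t) - \mathbf{D}\mathbf{K}\mathbf{y}(t) + \mathbf{D}\mathbf{r}(t)$$

solving the output equation for $\mathbf{y}(t)$ and substituting in the state equation results in

$$\dot{\mathbf{x}}(t) = \left(\mathbf{A} - \mathbf{B}\mathbf{K}(\mathbf{I} + \mathbf{D}\mathbf{K})^{-1}\mathbf{C}\right)\mathbf{x}(t) + \mathbf{B}\left(\mathbf{I} - \mathbf{K}(\mathbf{I} + \mathbf{D}\mathbf{K})^{-1}\mathbf{D}\right)\mathbf{r}(t)$$

$$\mathbf{y}(t) = (\mathbf{I} + \mathbf{D}\mathbf{K})^{-1}\mathbf{C}\mathbf{x}(t) + (\mathbf{I} + \mathbf{D}\mathbf{K})^{-1}\mathbf{D}\mathbf{r}(t)$$

One fairly common simplification to this system is removing D, which reduces the equations to

$$\dot{\mathbf{x}}(t) = (\mathbf{A} - \mathbf{B}\mathbf{K}\mathbf{C})\mathbf{x}(t) + \mathbf{B}\mathbf{r}(t)$$

$$\mathbf{y}(t) = \mathbf{C}\mathbf{x}(t)$$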

Moving object example

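A classical linear system is that of one-dimensional movement of an object (e.g., a cart). Newton's laws of motion for an object moving horizontally on a plane and attached to a wall with a spring give:

$$m\ddot{y}(t) = u(t) - b\dot{y}(t) - ky(t)$$

where

- $y(t)$ is position; $\dot{y}(t)$ is velocity; $\ddot{y}(t)$ is acceleration

- $u(t)$ is an applied force

- $b$ is the viscous friction coefficient

- $k$ is the spring constant

- $m$ is the mass of the object

The state equation would then become

$$\begin{bmatrix} \dot{x}_{1}(t) \\ \dot{x}_{2}(t) \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ -\frac{k}{m} & -\frac{b}{m} \end{bmatrix}\begin{bmatrix} x_{1}(t) \\ x_{2}(t) \end{bmatrix} + \begin{bmatrix} 0 \\ \frac{1}{m} \end{bmatrix}u(t)$$

$$y(t) = \begin{bmatrix} 1 & 0 \end{bmatrix}\begin{bmatrix} x_{1}(t) \\ x_{2}(t) \end{bmatrix}$$

where

- $x_{1}(t)$ represents the position of the object

- $x_{2}(t) = \dot{x}_{1}(t)$ is the velocity of the object

- $\dot{x}_{2}(t) = \ddot{x}_{1}(t)$ is the acceleration of the object

- the output $y(t)$ is the position of the object

The controllability test is then

$$\begin{bmatrix} \mathbf{B} & \mathbf{A}\mathbf{B} \end{bmatrix} = \begin{bmatrix} 0 & \frac{1}{m} \\ \frac{1}{m} & -\frac{b}{m^{2}} \end{bmatrix}$$

which has full rank for all $b$ and $m$. This means that if the initial state of the system is known ($y(t)$, $\dot{y}(t)$, $\ddot{y}(t)$), and if $b$ and $m$ are constants, then there is a force $u$ that could move the cart into any other position in the system.

The observability test is then

$$\begin{bmatrix} \mathbf{C} \\ \mathbf{C}\mathbf{A} \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$$

which also has full rank. Therefore, this system is both controllable and observable.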
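The two rank tests can be reproduced numerically. The sketch below assumes the usual mass-spring-damper state matrices with states (position, velocity), builds the controllability matrix $[\mathbf{B}\ \mathbf{A}\mathbf{B}]$ and the observability matrix $[\mathbf{C};\ \mathbf{C}\mathbf{A}]$, and computes their ranks by Gaussian elimination. The helper names and the values of `m`, `b` and `k` are assumptions for illustration.

```python
def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def rank(M, tol=1e-12):
    """Rank by Gaussian elimination with partial pivoting."""
    M = [list(row) for row in M]
    r = 0
    for col in range(len(M[0])):
        pivot = max(range(r, len(M)), key=lambda i: abs(M[i][col]), default=None)
        if pivot is None or abs(M[pivot][col]) <= tol:
            continue  # no usable pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, len(M)):
            factor = M[i][col] / M[r][col]
            M[i] = [a - factor * b for a, b in zip(M[i], M[r])]
        r += 1
    return r


def controllability_matrix(A, B):
    """[B, AB, ..., A^(n-1) B], with B given as an n x p matrix."""
    blocks, current = [], B
    for _ in range(len(A)):
        blocks.append(current)
        current = matmul(A, current)
    # Concatenate the blocks side by side, row by row.
    return [sum((blk[i] for blk in blocks), []) for i in range(len(A))]


def observability_matrix(A, C):
    """[C; CA; ...; C A^(n-1)], with C given as a q x n matrix."""
    rows, current = [], C
    for _ in range(len(A)):
        rows.extend(current)
        current = matmul(current, A)
    return rows


m, b, k = 2.0, 3.0, 5.0   # hypothetical mass, friction and spring values
A = [[0.0, 1.0], [-k / m, -b / m]]
B = [[0.0], [1.0 / m]]
C = [[1.0, 0.0]]

ctrb = controllability_matrix(A, B)
obsv = observability_matrix(A, C)
is_controllable = rank(ctrb) == len(A)
is_observable = rank(obsv) == len(A)
```

A tolerance-based elimination is adequate for a well-scaled two-state example; for larger or poorly conditioned systems a singular-value-based rank estimate is usually preferred.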

Nonlinear systems

The more general form of a state-space model can be written as two functions.

$$\dot{\mathbf{x}}(t) = \mathbf{f}(t, \mathbf{x}(t), \mathbf{u}(t))$$

$$\mathbf{y}(t) = \mathbf{h}(t, \mathbf{x}(t), \mathbf{u}(t))$$

The first is the state equation and the latter is the output equation. If the function $\mathbf{f}(\cdot, \cdot, \cdot)$ is a linear combination of states and inputs then the equations can be written in matrix notation like above. The $\mathbf{u}(t)$ argument to the functions can be dropped if the system is unforced (i.e., it has no inputs).

Pendulum example

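A classic nonlinear system is a simple unforced pendulum

$$m\ell^{2}\ddot{\theta}(t) = -m\ell g\sin\theta(t) - k\ell\dot{\theta}(t)$$

where

- $\theta(t)$ is the angle of the pendulum with respect to the direction of gravity

- $m$ is the mass of the pendulum (pendulum rod's mass is assumed to be zero)

- $g$ is the gravitational acceleration

- $k$ is the coefficient of friction at the pivot point

- $\ell$ is the radius of the pendulum (to the center of gravity of the mass $m$)

The state equations are then

$$\dot{x}_{1}(t) = x_{2}(t)$$

$$\dot{x}_{2}(t) = -\frac{g}{\ell}\sin x_{1}(t) - \frac{k}{m\ell}x_{2}(t)$$

where

- $x_{1}(t) = \theta(t)$ is the angle of the pendulum

- $x_{2}(t) = \dot{x}_{1}(t)$ is the rotational velocity of the pendulum

- $\dot{x}_{2}(t) = \ddot{x}_{1}(t)$ is the rotational acceleration of the pendulum

Instead, the state equation can be written in the general form

$$\dot{\mathbf{x}}(t) = \begin{bmatrix} \dot{x}_{1}(t) \\ \dot{x}_{2}(t) \end{bmatrix} = \mathbf{f}(t, \mathbf{x}(t)) = \begin{bmatrix} x_{2}(t) \\ -\frac{g}{\ell}\sin x_{1}(t) - \frac{k}{m\ell}x_{2}(t) \end{bmatrix}.$$

The equilibrium/stationary points of a system are when $\dot{\mathbf{x}} = \mathbf{0}$ and so the equilibrium points of a pendulum are those that satisfy

$$\begin{bmatrix} x_{1} \\ x_{2} \end{bmatrix} = \begin{bmatrix} n\pi \\ 0 \end{bmatrix}$$

for integers n.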
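The pendulum model is convenient for experimenting with a nonlinear state equation $\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x})$. The sketch below assumes the standard damped-pendulum right-hand side with states (angle, angular velocity), integrates it with a classical fourth-order Runge-Kutta step, and evaluates $\mathbf{f}$ at two equilibria. The parameter values, step size and function names are hypothetical.

```python
import math


def pendulum_f(x, g=9.81, length=1.0, mass=1.0, k=0.5):
    """Right-hand side f(x) of the unforced pendulum; x = (angle, angular velocity)."""
    x1, x2 = x
    return (x2, -(g / length) * math.sin(x1) - (k / (mass * length)) * x2)


def rk4_step(f, x, dt):
    """One classical fourth-order Runge-Kutta step for dx/dt = f(x)."""
    k1 = f(x)
    k2 = f(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k1)))
    k3 = f(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k2)))
    k4 = f(tuple(xi + dt * ki for xi, ki in zip(x, k3)))
    return tuple(xi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                 for xi, a, b, c, d in zip(x, k1, k2, k3, k4))


def simulate(x0, dt=0.01, steps=6000):
    """Integrate the pendulum from x0 for steps * dt seconds; return the final state."""
    x = tuple(x0)
    for _ in range(steps):
        x = rk4_step(pendulum_f, x, dt)
    return x


# Released from 1 rad at rest, the damped pendulum settles near the
# downward equilibrium (0, 0).
x_end = simulate((1.0, 0.0))

# f vanishes (to rounding) at the equilibria (n*pi, 0); n = 0 and n = 1 shown.
f_down = pendulum_f((0.0, 0.0))
f_up = pendulum_f((math.pi, 0.0))
```

Both equilibria make $\mathbf{f}$ vanish, but only the downward one attracts nearby trajectories; a simulation started close to $(\pi, 0)$ would move away from it.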

See also

- Control engineering

- Control theory

- State observer

- Observability

- Controllability

- Discretization of state-space models

- Phase space for information about phase state (like state space) in physics and mathematics.

- State space for information about state space with discrete states in computer science.

- Kalman filter for a statistical application.

References

1. Katalin M. Hangos; R. Lakner & M. Gerzson (2001). Intelligent Control Systems: An Introduction with Examples. Springer. p. 254. ISBN 978-1-4020-0134-5.

2. Katalin M. Hangos; József Bokor & Gábor Szederkényi (2004). Analysis and Control of Nonlinear Process Systems. Springer. p. 25. ISBN 978-1-85233-600-4.

3. Stock, J.H.; Watson, M.W. (2016), "Dynamic Factor Models, Factor-Augmented Vector Autoregressions, and Structural Vector Autoregressions in Macroeconomics", Handbook of Macroeconomics, vol. 2, Elsevier, pp. 415–525, doi:10.1016/bs.hesmac.2016.04.002, ISBN 978-0-444-59487-7

4. Durbin, James; Koopman, Siem Jan (2012). Time series analysis by state space methods. Oxford University Press. ISBN 978-0-19-964117-8. OCLC 794591362.

5. Roesser, R. (1975). "A discrete state-space model for linear image processing". IEEE Transactions on Automatic Control. 20 (1): 1–10. doi:10.1109/tac.1975.1100844. ISSN 0018-9286.

6. Smith, Anne C.; Brown, Emery N. (2003). "Estimating a State-Space Model from Point Process Observations". Neural Computation. 15 (5): 965–991. doi:10.1162/089976603765202622. ISSN 0899-7667. PMID 12803953. S2CID 10020032.

7. James H. Stock & Mark W. Watson, 1989. "New Indexes of Coincident and Leading Economic Indicators," NBER Chapters, in: NBER Macroeconomics Annual 1989, Volume 4, pages 351-409, National Bureau of Economic Research, Inc.

8. Bańbura, Marta; Modugno, Michele (2012-11-12). "Maximum Likelihood Estimation of Factor Models on Datasets with Arbitrary Pattern of Missing Data". Journal of Applied Econometrics. 29 (1): 133–160. doi:10.1002/jae.2306. hdl:10419/153623. ISSN 0883-7252. S2CID 14231301.

9. "State-Space Models with Markov Switching and Gibbs-Sampling", State-Space Models with Regime Switching, The MIT Press, pp. 237–274, 2017, doi:10.7551/mitpress/6444.003.0013, ISBN 978-0-262-27711-2

10. Kalman, R. E. (1960-03-01). "A New Approach to Linear Filtering and Prediction Problems". Journal of Basic Engineering. 82 (1): 35–45. doi:10.1115/1.3662552. ISSN 0021-9223. S2CID 259115248.

11. Harvey, Andrew C. (1990). Forecasting, Structural Time Series Models and the Kalman Filter. Cambridge: Cambridge University Press. doi:10.1017/CBO9781107049994

12. Nise, Norman S. (2010). Control Systems Engineering (6th ed.). John Wiley & Sons, Inc. ISBN 978-0-470-54756-4.

13. Brogan, William L. (1974). Modern Control Theory (1st ed.). Quantum Publishers, Inc. p. 172.

Further reading

- Antsaklis, P. J.; Michel, A. N. (2007). A Linear Systems Primer. Birkhauser. ISBN 978-0-8176-4460-4.

- Chen, Chi-Tsong (1999). Linear System Theory and Design (3rd ed.). Oxford University Press. ISBN 0-19-511777-8.

- Khalil, Hassan K. (2001). Nonlinear Systems (3rd ed.). Prentice Hall. ISBN 0-13-067389-7.

- Hinrichsen, Diederich; Pritchard, Anthony J. (2005). Mathematical Systems Theory I, Modelling, State Space Analysis, Stability and Robustness. Springer. ISBN 978-3-540-44125-0.

- Sontag, Eduardo D. (1999). Mathematical Control Theory: Deterministic Finite Dimensional Systems (PDF) (2nd ed.). Springer. ISBN 0-387-98489-5. Retrieved June 28, 2012.

- Friedland, Bernard (2005). Control System Design: An Introduction to State-Space Methods. Dover. ISBN 0-486-44278-0.

- Zadeh, Lotfi A.; Desoer, Charles A. (1979). Linear System Theory. Krieger Pub Co. ISBN 978-0-88275-809-1.

- On the applications of state-space models in econometrics

- Durbin, J.; Koopman, S. (2001). Time series analysis by state space methods. Oxford, UK: Oxford University Press. ISBN 978-0-19-852354-3.

External links

- Wolfram language functions for linear state-space models, affine state-space models, and nonlinear state-space models.
