VJing

VJing (pronounced: VEE-JAY-ing) is a broad designation for realtime visual performance. Characteristics of VJing are the creation or manipulation of imagery in realtime through technological mediation and for an audience, in synchronization to music.[1] VJing often takes place at events such as concerts, nightclubs, music festivals and sometimes in combination with other performative arts. This results in a live multimedia performance that can include music, actors and dancers. The term VJing became popular in its association with MTV's Video Jockey but its origins date back to the New York club scene of the 1970s.[2][3] In both situations VJing is the manipulation or selection of visuals, the same way DJing is a selection and manipulation of audio.

One of the key elements in the practice of VJing is the realtime mix of content from a "library of media", on storage media such as VHS tapes or DVDs, video and still image files on computer hard drives, live camera input, or from computer generated visuals.[4] In addition to the selection of media, VJing mostly implies realtime processing of the visual material. The term is also used to describe the performative use of generative software, although the word "becomes dubious ... since no video is being mixed".[5]

History

Antecedents

Historically, VJing draws its references from art forms that deal with the synesthetic experience of vision and sound. These historical references are shared with other live audiovisual art forms, such as Live Cinema, and include the camera obscura, the panorama and diorama, the magic lantern, the color organ, and liquid light shows.

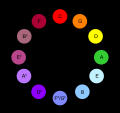

The color organ is a mechanism that makes colors correspond to sound through mechanical and electromechanical means. Bainbridge Bishop, who contributed to the development of the color organ, was "dominated with the idea of painting music". In a book from 1893 that documents his work, Bishop states: "I procured an organ, and experimented by building an attachment to the keys, which would play with different colored lights to correspond with the music of the instrument."[6]

Between 1919 and 1927, Mary Hallock-Greenewalt, a piano soloist, created a new technological art form called Nourathar, which means "essence of light" in Arabic. Her light music consisted of environmental color fields that produced a scale of light intensities and color. "In place of a keyboard, the Sarabet had a console with graduated sliders and other controls, more like a modern mixing board. Lights could be adjusted directly via the sliders, through the use of a pedal, and with toggle switches that worked like individual keys."[7]

In clubs and private events in the 1960s "people used liquid-slides, disco balls and light projections on smoke to give the audience new sensations. Some of these experiments were linked to the music, but most of the time they functioned as decorations."[8] These came to be known as liquid light shows. From 1965 to 1966 in San Francisco, the visual shows by artist collectives such as The Joshua Light Show and the Brotherhood of Light accompanied The Grateful Dead concerts, which were inspired by the Beat generation—in particular the Merry Pranksters—and fueled by the "expansion of consciousness" from the Acid Tests.

The Exploding Plastic Inevitable, organized by Andy Warhol between 1966 and 1967, contributed to the fusion of music and visuals in a party context. "The Exploding Party project examined the history of the party as an experimental artistic format, focusing in particular on music visualization - also in live contexts."[9]

1970s

Important events

During the late 1970s video and music performance became more tightly integrated. At concerts, a few bands started to use film or video regularly alongside their music. Experimental film maker Tony Potts was considered an unofficial member of The Monochrome Set for his work on lighting design and film making for projections for live shows. Test Department initially worked with "Bert" Turnball as their resident visual artist, creating slideshows and film for live performances.[10] The organization Ministry of Power included collaborations with performance groups, traditional choirs and various political activists. Industrial bands would perform in art contexts, as well as in concert halls, and often with video projections. Groups like Cabaret Voltaire started to use low-cost video editing equipment to create their own time-based collages for their sound works. In their words, "before [the use of video], you had to do collages on paper, but now you present them in rhythm—living time—in video."[citation needed] The film collages made by and for groups such as Test Dept, Throbbing Gristle and San Francisco's Tuxedomoon became part of their live shows.

An example of mixing film with live performance is the Public Image Ltd. show at the Ritz in 1981, known as the Ritz Riot. This club, located on East 9th St in New York, had a state-of-the-art video projection system. It was used to show a combination of prerecorded and live video on the club's screen. PiL played behind this screen, with lights rear-projecting their shadows onto it. Expecting a more traditional rock show, the audience reacted by pelting the projection screen with beer bottles and eventually pulling it down.[11]

Technological developments

An artist retreat in Owego, New York, called the Experimental Television Center, founded in 1971, contributed to the development of many artists by gathering the experimental hardware created by video art pioneers Nam June Paik, Steve Rutt and Bill Etra, and making the equipment available to artists in an inviting setting for free experimentation. Many of the outcomes debuted at the nightclub Hurrah, which quickly became a new alternative for video artists who could not get their avant-garde productions aired on regular broadcast outlets. Similarly, music video development was happening in other major cities around the world, providing an alternative to mainstream television.

A notable image processor is the Sandin Image Processor (1971), notable primarily because its distribution model resembles what is now commonly referred to as open source.

The Dan Sandin Image Processor, or "IP," is an analog video processor with video signals sent through processing modules that route to an output color encoder. The IP's most unique attribute is its non-commercial philosophy, emphasizing a public access to processing methods and the machines that assist in generating the images. The IP was Sandin's electronic expression for a culture that would "learn to use High-Tech machines for personal, aesthetic, religious, intuitive, comprehensive, and exploratory growth." This educational goal was supplemented with a "distribution religion" that enabled video artists, and not-for-profit groups, to "roll-your-own" video synthesizer for only the cost of parts and the sweat and labor it took to build it. It was the "Heathkit" of video art tools, with a full building plan spelled out, including electronic schematics and mechanical assembly information. Tips on soldering, procuring electronic parts and Printed Circuit boards, were also included in the documentation, increasing the chances of successfully building a working version of the video synthesizer.

1980s

Important events

In May 1980, multimedia artist and filmmaker Merrill Aldighieri was invited to screen a film at the nightclub Hurrah. At this time, music video clips did not exist in large quantity and the video installation was used to present an occasional film. To bring the role of visuals to an equal level with the DJ's music, Merrill made a large body of ambient visuals that could be combined in real time to interpret the music. Working alongside the DJ, she mixed this collection of raw visuals in real time to create a non-stop visual interpretation of the music. Merrill became the world's first full-time VJ. MTV founders came to this club and Merrill introduced them to the term and the role of "VJ", inspiring them to have VJ hosts on their channel the following year. Merrill collaborated with many musicians at the club, notably with electronic musician Richard Bone to make the first ambient music video album, titled "Emerging Video". Thanks to a grant from the Experimental Television Center, her blend of video and 16 mm film bore the influential mark of the unique Rutt Etra and Paik synthesizers. This film was offered on VHS through "High Times Magazine" and was featured in the club programming. Her next foray into the home video audience was in collaboration with the newly formed arm of Sony, Sony HOME VIDEO, where she introduced the concept of "breaking music on video" with her series DANSPAK. With a few exceptions like the Jim Carroll Band with Lou Reed and Man Parrish, this series featured unknown bands, many of them unsigned.

The rise of electronic music (especially in house and techno genres) and DJ club culture provided more opportunities for artists to create live visuals at events. The popularity of MTV led to greater and better production of music videos for both broadcast and VHS, and many clubs began to show music videos as part of entertainment and atmosphere.

Joe Shanahan (owner of Metro in 1989–1990) was paying artists for video content on VHS. For part of the evening the club would play MTV music videos, and for part of the evening it would run mixes from local artists Shanahan had commissioned.[13]

Medusa's (an all-ages bar in Chicago) incorporated visuals as part of their nightly art performances throughout the early to mid 80s (1983–85).[14] Also in Chicago during the mid-80s was Smart Bar, where Metro held "Video Metro" every Saturday night.[15]

Technological developments

In the 1980s the development of relatively cheap transistor and integrated circuit technology allowed the development of digital video effects hardware at a price within reach of individual VJs and nightclub owners.

One of the first commercially distributed video synthesizers, available in 1981, was the CEL Electronics Chromascope, sold for use in the developing nightclub scene.[16] The Fairlight Computer Video Instrument (CVI), first produced in 1983, was revolutionary in this area, allowing complex digital effects to be applied in real time to video sources. The CVI became popular amongst television and music video producers and features in a number of music videos from the period. The Commodore Amiga, introduced in 1985, was a breakthrough in accessibility for home computers, and the first computer animation programs for 2D and 3D animation that could produce broadcast results on a desktop computer were developed for it.

1990s

Important events

A number of recorded works began to be published in the 1990s to further distribute the work of VJs, such as the Xmix compilations (beginning in 1993), Future Sound of London's "Lifeforms" (VHS, 1994), Emergency Broadcast Network's "Telecommunication Breakdown" (VHS, 1995), Coldcut and Hexstatic's "Timber" (VHS, 1997, and then later on CD-ROM including a copy of VJamm VJ software), the "Mego Videos" compilation of works from 1996 to 1998 (VHS/PAL, 1999) and Addictive TV's 1998 television series "Transambient" for the UK's Channel 4 (and DVD release).

In the United States, the emergence of the rave scene is perhaps to be credited for the shift of the VJ scene from nightclubs into underground parties. From around 1991 until 1994, Mark Zero would do film loops at Chicago raves and house parties.[17] One of the earliest large-scale[18] Chicago raves was "Massive New Years Eve Revolution" in 1993, produced by Milwaukee's Drop Bass Network. It was a notable event as it featured the Optique Vid Tek (OVT) VJs on the bill. This event was followed by Psychosis, held on 3 April 1993 and headlined by Psychic TV, with visuals by OVT Visuals. In San Francisco, Dimension 7 were a VJ collective working the early West Coast rave scene beginning in 1993. Between 1996 and 1998, Dimension 7 took projectors and lasers to the Burning Man festival, creating immersive video installations in the Black Rock Desert.

In the UK, groups such as The Light Surgeons and Eikon were transforming clubs and rave events by combining the old techniques of liquid lightshows with layers of slide, film and video projections. In Bristol, Children of Technology emerged, pioneering interactive immersive environments stemming from co-founder Mike Godfrey's architectural thesis whilst at university during the 1980s. Children of Technology integrated their homegrown CGI animation and video texture library with output from the interactive Virtual Light Machine (VLM), the brainchild of Jeff Minter and Dave Japp, displayed on over 500 sq m of layered screens using high-power video and laser projection within a dedicated lightshow. Their "Ambient Theatre Lightshow" first emerged at Glastonbury 93, and they also provided VJ visuals at the festival for the Shamen, who had just released their No. 1 hit "Ebeneezer Goode". Invited musicians jammed in the Ambient Theatre Lightshow, using the VLM, within a prototype immersive environment. Children of Technology took interactive video concepts into a wide range of projects including show production for "Obsession" raves between 1993 and 1995, theatre, clubs, advertising, major stage shows and TV events. This included pioneering projects with 3D video / sound recording and performance, and major architectural projects in the late 1990s, where many media technology ideas were now taking hold. Another collective, Hex, worked across a wide range of media, from computer games to art exhibitions, and pioneered many new media hybrids, including live audiovisual jamming, computer-generated audio performances, and interactive collaborative instruments. This was the start of a trend which continues today, with many VJs working beyond the club and dance party scene in areas such as installation art.

The Japanese book VJ2000 (Daizaburo Harada, 1999) marked one of the earliest publications dedicated to discussing the practices of VJs.

Technological developments

The combination of the emerging rave scene and slightly more affordable video technology for home-entertainment systems led to consumer products becoming more widely used in artistic production. However, costs for these new types of video equipment were still high enough to be prohibitive for many artists.

Three main factors led to the proliferation of the VJ scene in the 2000s:

- affordable and faster laptops;

- a drop in prices of video projectors (especially after the dot-com bust, when companies were offloading their goods on Craigslist);[19]

- the emergence of strong rave scenes and the growth of club culture internationally.

As a result of these, the VJ scene saw an explosion of new artists and styles. These conditions also facilitated a sudden emergence of a less visible (but nonetheless strong) movement of artists who were creating algorithmic, generative visuals.

This decade saw video technology shift from being strictly for professional film and television studios to being accessible for the prosumer market (e.g. the wedding industry, church presentations, low-budget films, and community television productions). Prosumer video mixers were quickly adopted by VJs as the core component of their performance setups. This is similar to the release of the Technics 1200 turntables, which were marketed towards homeowners desiring a more advanced home entertainment system, but were then appropriated by musicians and music enthusiasts for experimentation. Initially, video mixers were used to mix pre-prepared video material from VHS players and live camera sources, and later to add the new computer software outputs into the mix. The 90s saw the development of a number of digital video mixers such as Panasonic's WJ-MX50 and WJ-MX12, and the Videonics MX-1.

Early desktop editing systems such as the NewTek Video Toaster for the Amiga computer were quickly put to use by VJs seeking to create visuals for the emerging rave scene, whilst software developers began to develop systems specifically designed for live visuals such as O'Wonder's "Bitbopper".[20]

The first known software for VJs was Vujak, created in 1992 and written for the Mac by artist Brian Kane for use by the video art group he was part of, Emergency Broadcast Network, though it was not used in live performances. EBN used the EBN VideoSampler v2.3, developed by Mark Marinello and Greg Deocampo. In the UK, Bristol's Children of Technology developed a dedicated immersive video lightshow using the Virtual Light Machine (VLM), called AVLS or Audio-Visual-Live-System, during 1992 and 1993. The VLM was a custom-built PC made by video engineer Dave Japp using very rare transputer chips and modified motherboards, and programmed by Jeff Minter (Llamasoft & Virtual Light Co.). The VLM was developed after Minter's earlier Llamasoft Light Synthesiser programme. With the VLM, DIs from live musicians or DJs activated Minter's algorithmic real-time video patterns, which were mixed in real time, using Panasonic video mixers, with a custom CGI animation and VHS texture library and live camera video feedback. Children of Technology developed their own "Video Light" system, using high-power and low-power video projection to generate real-time 3D beam effects, simultaneous with enormous surface and mapped projection. The VLM was used by the Shamen, The Orb, Primal Scream, Obsession, Peter Gabriel, Prince and many others between 1993 and 1996. A software version of the VLM was integrated into Atari's Jaguar console, in response to growing VJ interest. In the mid-90s, audio-reactive pure synthesis (as opposed to clip-based) software such as Cthugha and Bomb was influential. By the late 90s several PC-based VJing programs were available, including generative visuals programs such as MooNSTER, Aestesis, and Advanced Visualization Studio, as well as video clip players such as FLxER, created by Gianluca Del Gobbo, and VJamm.

Programming environments such as Max/MSP, Macromedia Director and later Quartz Composer started to be used on their own and also to create VJing programs like VDMX or pixmix. These new software products and the dramatic increases in computer processing power over the decade meant that VJs were now regularly taking computers to gigs.

2000s

Important events

The new century brought new dynamics to the practice of visual performance. To be a VJ had previously largely meant a process of self-invention in isolation from others: the term was not widely known. Then, through the rise of internet adoption, having access to other practitioners became the norm, and virtual communities quickly formed. The sense of collective then translated from the virtual world onto physical spaces. This became apparent through the numerous festivals with a strong focus on VJing that emerged all over Europe.

VJ events in Europe

The VideA festival in Barcelona ran from 2000 to 2005.[21] AVIT, clear in its inception as the online community of VJCentral.com self-organising a physical presence, had its first festival in Leeds (2002),[22] followed by Chicago (2003), Brighton (2003), San Francisco (2004), and Birmingham (2005). Other events of the period included 320x240 in Croatia (2003) and Contact Europe in Berlin (2003). The Cimatics festival in Brussels should also be credited as a pioneering event, with a first festival edition in 2002 completely dedicated to VJing. In 2003, the Finnish media arts festival PixelAche was dedicated to the topic of VJing, while in the same year Berlin's Chaos Computer Club started a collaboration with AVIT organisers that featured VJ Camps and Congress strands. LPM - Live Performers Meeting was born in Rome in 2004, with the aim of offering a real meeting space for artists who often work individually: a place to meet fellow VJ artists, spin off new projects, and share all VJing-related experiences, software, questions and insights. LPM has since become one of the leading international meetings dedicated to artists, professionals and enthusiasts of VJing, visual and live video performance, reaching its 20th edition in 2019. Also around this time (in 2005 and 2007), UK artists Addictive TV teamed up with the British Film Institute to produce Optronica, a crossover event showcasing audiovisual performances at the London IMAX cinema and BFI Southbank.

Two festivals entirely dedicated to VJing, Mapping Festival in Geneva and Vision'R in Paris, held their first edition in 2005. As festivals emerged that prominently featured VJs as headline acts (or as the entire focus of the festival), the rave festival scene also began to regularly include VJs in their main stage lineups with varying degrees of prominence.

VJ events beyond Europe

The MUTEK festival (2000–present) in Montréal regularly featured VJs alongside experimental sound art performances, and later the Elektra Festival (2008–present) also emerged in Montréal and featured many VJ performances. In Perth, Australia, the Byte Me! festival (2007) showed the work of many VJs from the Pacific Rim area alongside new media theorists and design practitioners.

With less funding[citation needed], the US scene has been host to more workshops and salons than festivals. Between 2000 and 2006, Grant Davis (VJ Culture) and Jon Schwark of Dimension 7 produced "Video Salon", a regular monthly gathering significant in helping establish and educate a strong VJ community in San Francisco, and attended by VJs from across California and the United States.[23] In addition, they produced an annual "Video RIOT!" (2003–2005): as a political statement following the R.A.V.E. Act (Reducing Americans' Vulnerability to Ecstasy Act) of 2003; as a display of dissatisfaction with the re-election of George W. Bush in 2004; and in defiance of a San Francisco city ordinance limiting public gatherings in 2005.

Several VJ battles and competitions began to emerge during this time period, such as Video Salon's "SIGGRAPH VJ Battle" in San Diego (2003), Videocake's "AV Deathmatch" series in Toronto (2006) and the "VJ Contests" at the Mapping Festival in Geneva (2009). These worked much like a traditional DJ battle where VJs would be given a set amount of time to show off their best mixes and were judged according to several criteria by a panel of judges.

Publications

Databases of visual content and promotional documentation became available on DVD formats and online through personal websites and through large databases, such as the "Prelinger Archives" on Archive.org. Many VJs began releasing digital video loop sets on various websites under Public Domain or Creative Commons licensing for other VJs to use in their mixes, such as Tom Bassford's "Design of Signage" collection (2006), Analog Recycling's "79 VJ Loops" (2006), VJzoo's "Vintage Fairlight Clips" (2007) and Mo Selle's "57 V.2" (2007).

Promotional and content-based DVDs began to emerge, such as the works from the UK's ITV1 television series Mixmasters (2000–2005) produced by Addictive TV, Lightrhythm Visuals (2003), Visomat Inc. (2002), and Pixdisc, all of which focused on the visual creators, VJ styles and techniques. These were then later followed by NOTV, Atmospherix, and other labels. Mia Makela curated a DVD collection for Mediateca of Caixa Forum called "LIVE CINEMA" in 2007, focusing on the emerging sister practice of "live cinema". Individual VJs and collectives also published DVDs and CD-ROMs of their work, including Eclectic Method's bootleg video mix (2002) and Eclectic Method's "We're Not VJs" (2005), as well as eyewash's "DVD2" (2004) and their "DVD3" (2008).

Books reflecting on the history, technical aspects, and theoretical issues began to appear, such as "The VJ Book: Inspirations and Practical Advice for Live Visuals Performance" (Paul Spinrad, 2005), "VJ: Audio-Visual Art and VJ Culture" (Michael Faulkner and D-Fuse, 2006), "vE-jA: Art + Technology of Live Audio-Video" (Xárene Eskandar [ed], 2006), and "VJ: Live Cinema Unraveled" (Tim Jaeger, 2006). VJ-DJ collaboration also started to attract interest from academic researchers in the field of human–computer interaction (HCI).

Video Scratching

In 2004, Pioneer released the DVJ-X1 DVD player, which allowed DJs to scratch and manipulate videos like vinyl. In 2006 Pioneer released a successor unit, the DVJ-1000, with similar functionality. These video players inspired DJ software makers to add video plugins to their software. The first example of this was Numark Virtual Vinyl, released in 2007, which had this option available. In 2008, Serato Scratch Live received the same option, called Video-SL. This allowed turntable manipulation of videos, and added more options to the Rane TTM-57SL mixer.

Technological developments

The availability and affordability of new consumer-level technology allowed many more people to get involved in VJing. The dramatic increase in available computer processing power facilitated more compact, yet often more complex, setups, sometimes allowing VJs to bypass a video mixer and use powerful computers running VJ software to control their mixing instead. However, many VJs continue to use video mixers with multiple sources, which allows flexibility for a wide range of input devices and a level of security against computer crashes or slowdowns in video playback caused by overloading the CPU with the demands of realtime video processing.

Today's VJs have a wide choice of off-the-shelf hardware products, covering every aspect of visuals performance, including video sample playback (Korg Kaptivator), real-time video effects (Korg Entrancer) and 3D visual generation.

The widespread use of DVDs prompted the development of scratchable DVD players.

Many new models of MIDI controllers became available during the 2000s, which allow VJs to use controllers based on physical knobs, dials, and sliders, rather than interact primarily with the mouse/keyboard computer interface.
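As a rough illustration of how a physical knob or slider can drive a visual parameter, the sketch below scales a 7-bit MIDI control-change value (0 to 127) into a parameter range. The controller numbers, the parameter names and the `KNOB_MAP` layout are hypothetical and not taken from any particular VJ product.

```python
from typing import Dict, Tuple

# Hypothetical controller layout: CC number -> (parameter name, minimum, maximum).
KNOB_MAP: Dict[int, Tuple[str, float, float]] = {
    1: ("layer_opacity", 0.0, 1.0),
    2: ("playback_speed", 0.25, 4.0),
}


def cc_to_param(cc_value: int, lo: float = 0.0, hi: float = 1.0) -> float:
    """Scale a 7-bit MIDI control-change value (0..127) linearly into [lo, hi]."""
    if not 0 <= cc_value <= 127:
        raise ValueError("MIDI control-change values are 7-bit: 0..127")
    return lo + (hi - lo) * (cc_value / 127)


def apply_cc(state: Dict[str, float], controller: int, cc_value: int) -> Dict[str, float]:
    """Return a copy of the parameter state updated by one control-change message.

    Messages from controllers that are not in KNOB_MAP leave the state unchanged.
    """
    updated = dict(state)
    if controller in KNOB_MAP:
        name, lo, hi = KNOB_MAP[controller]
        updated[name] = cc_to_param(cc_value, lo, hi)
    return updated
```

Returning a new dictionary rather than mutating the old one keeps each incoming message easy to reason about; a real setup would read the messages from a MIDI library or from the VJ software's own mapping layer.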

There are also many VJs taking experimental approaches to working with live video. Open source graphical programming environments (such as Pure Data) are often used to create custom software interfaces for performances, or to connect experimental devices to their computer for processing live data (for example, the IBVA EEG-reading brainwave unit, the Arduino microcontroller, or circuit-bent children's toys).

The second half of this decade also saw a dramatic increase in display configurations being deployed, including widescreen canvases, multiple projections and video mapped onto the architectural form. This shift has been underlined by the transition from broadcast based technology - fixed until this decade firmly in the 4x3 aspect ratio specifications NTSC and PAL - to computer industry technology, where the varied needs of office presentation, immersive gaming and corporate video presentation have led to diversity and abundance in methods of output. Compared to the ~640x480i fixed format of NTSC/PAL, a contemporary laptop using DVI can output a great variety of resolutions up to ~2500px wide, and in conjunction with the Matrox TripleHead2Go can feed three different displays with an image coordinated across them all.
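The idea of one image coordinated across several displays can be sketched as simple arithmetic: a single wide canvas is cut into equal side-by-side viewports, one per output. The function name and the 2400x600 example are illustrative assumptions, not the behaviour of any specific device.

```python
from typing import List, Tuple

# A viewport is (x offset, y offset, width, height) in canvas pixels.
Viewport = Tuple[int, int, int, int]


def split_canvas(width: int, height: int, outputs: int = 3) -> List[Viewport]:
    """Cut a wide canvas into equal side-by-side viewports, left to right."""
    if outputs < 1:
        raise ValueError("at least one output is required")
    if width % outputs != 0:
        raise ValueError("canvas width must divide evenly between the outputs")
    part = width // outputs
    return [(i * part, 0, part, height) for i in range(outputs)]


# Example (assumed sizes): a 2400x600 canvas feeding three 800x600 displays.
viewports = split_canvas(2400, 600)
```

Each display then shows only its own slice, so content authored for the full canvas lines up across all three screens.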

Common technical setups

A significant aspect of VJing is the use of technology, be it the re-appropriation of existing technologies meant for other fields, or the creation of new and specific ones for the purpose of live performance. The advent of video is a defining moment for the formation of the VJ (video jockey).

Often using a video mixer, VJs blend and superimpose various video sources into a live motion composition. In recent years, electronic musical instrument makers have begun to make specialty equipment for VJing.
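Blending and superimposing two sources can be illustrated with per-pixel arithmetic. The following is a minimal sketch, assuming frames are equally sized grids of 8-bit RGB tuples; it is not how any particular mixer is implemented, and the function names are illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, ...]   # one (R, G, B) triple, each channel 0..255
Frame = List[List[Pixel]]  # rows of pixels; both frames are assumed to be the same size


def crossfade(frame_a: Frame, frame_b: Frame, t: float) -> Frame:
    """Linear mix of two frames: t=0.0 shows only frame_a, t=1.0 only frame_b."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0.0 and 1.0")
    return [
        [
            tuple(round((1 - t) * a + t * b) for a, b in zip(pixel_a, pixel_b))
            for pixel_a, pixel_b in zip(row_a, row_b)
        ]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def add_blend(frame_a: Frame, frame_b: Frame) -> Frame:
    """Superimpose two frames by adding channels and clamping at 255."""
    return [
        [
            tuple(min(255, a + b) for a, b in zip(pixel_a, pixel_b))
            for pixel_a, pixel_b in zip(row_a, row_b)
        ]
        for row_a, row_b in zip(frame_a, frame_b)
    ]
```

The crossfade corresponds to moving a mixer's fader between two channels, while the additive blend brightens wherever either source has content, which suits light-on-black material.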

VJing developed initially by performers using video hardware such as videocameras, video decks and monitors to transmit improvised performances with live input from cameras and even broadcast TV mixed with pre-recorded elements. This tradition lives on with many VJs using a wide range of hardware and software available commercially or custom made for and by the VJs.

VJ hardware can be split into the following categories:

- Source hardware generates a video picture which can be manipulated by the VJ, e.g. video cameras and video synthesizers.

- Playback hardware plays back an existing video stream from disk or tape-based storage media, e.g. VHS tape players and DVD players.

- Mixing hardware allows the combining of multiple streams of video, e.g. a video mixer or a computer utilizing VJ software.

- Effects hardware allows the adding of special effects to the video stream, e.g. colour correction units.

- Output hardware is for displaying the video signal, e.g. a video projector, LED display, or plasma screen.

A VJ may use many types of software in their work. Traditional NLE production tools such as Adobe Premiere, After Effects, and Apple's Final Cut Pro are used to create content for VJ shows. Specialist performance software is used by VJs to play back and manipulate video in real time.

VJ performance software is highly diverse, and includes software which allows a computer to replace the role of an analog video mixer and output video across extended canvases composed of multiple screens or projectors. Small companies producing dedicated VJ software such as Modul8 and Magic give VJs a sophisticated interface for real-time processing of multiple layers of video clips combined with live camera inputs, giving VJs a complete off-the-shelf solution so they can simply load in the content and perform. Popular titles which emerged during the 2000s include Resolume and NuVJ.

Some VJs prefer to develop software themselves specifically to suit their own performance style. Graphical programming environments such as Max/MSP/Jitter, Isadora, and Pure Data have developed to facilitate rapid development of such custom software without needing years of coding experience.

Sample workflows

There are many types of configurations of hardware and software that a VJ may use to perform.

Research and reflective thinking

Several research projects have been dedicated to the documentation and study of VJing from the reflective and theoretical point of view. Round tables, talks, presentations and discussions are part of festivals and conferences related to new media art, such as ISEA and Ars Electronica, as well as those specifically related to VJing, as is the case of the Mapping Festival. Exchange of ideas through dialogue contributed to the shift of the discussion from issues related to the practicalities of production to more complex ideas and to the process and the concept. Subjects related to VJing include, but are not limited to: identity and persona (individual and collective), the moment as art, audience participation, authorship, networks, collaboration and narrative. Through collaborative projects, visual live performance shifts to a field of interdisciplinary practices.

Periodical publications, online and printed, launched special issues on VJing. This is the case of AMinima printed magazine, with a special issue on Live Cinema[24] (which features works by VJs), and Vague Terrain (an online new media journal), with the issue The Rise of the VJ.[25]

See also

- Culture jamming

- Datamoshing

- Found footage

- Glitch art

- Live event visual amplification

- List of music software

- Mashup (video)

- Music visualization

- New media art

- New Media art festivals

- Remix culture

- Scratch Video

- Video art

- Video scratching

- Video synthesizer

- Vidding

- VJ (media personality)

- VJ Hypnotica

- TouchDesigner

Notes

- ^ "VJ: an artist who creates and mixes video live and in synchronization to music". - Eskandar, p.1.

- ^ Dekker, Anette (May 2006) [2003], "Synaesthetic Performance in the Club Scene", Cosign 2003: Computational Semiotics, archived from the original on 21 July 2011, retrieved 7 May 2010

- ^ "The term VJ was first used at the end of the 1970s in the New York club Peppermint Lounge" - Crevits, Bram (2006), "The roots of VJing - A historical overview", VJ: Audio-visual Art + VJ Culture, London: Laurence King: 14, ISBN 9781856694902, retrieved 11 May 2010

- ^ Davis, Grant (2006), "VJ 101:: Hardware/Software Basics", VE-jA: Art + Technology of Live Audio-Video, San Francisco: h4SF: 12

- ^ Watz, Marius (2006), "More Points on the Chicken: and new Directions in Improvised Visual Performance", VE-jA: Art + Technology of Live Audio-Video, San Francisco: h4SF: 7

- ^ Bainbridge, Bishop (1893), A Souvenir of the Color Organ (PDF), archived from the original (PDF) on 15 July 2011, retrieved 7 May 2010

- ^ Betancourt, Michael (May 2008). "Pushing the Performance Envelope". MAKE. 14 (1556–2336): 47.

- ^ Dekker (2006)

- ^ Lund (2009)

- ^ credited on Beating The Retreat, Some Bizzare Records, 1984

- ^ Caraballo, Ed (1977), There's A Riot Goin' On: The Infamous Public Image Ltd. Riot Show, The Ritz- 1981, retrieved 11 May 2010

- ^ "VSynths: SANDIN IMAGE PROCESSOR". audiovisualizers.com. Archived from the original on 7 May 2017. Retrieved 20 April 2017.

- ^ Interview with Brien Rullman aka VJ Tek of OVT. 10 May 2010

- ^ "Medusa Chicago". Archived from the original on 2010-05-03. Retrieved 2010-05-12.

- ^ "History - Metro Chicago". metrochicago.com.

- ^ "Chromascope Video Synthesizer - eyetrap.net VJ network". www.eyetrap.net.

- ^ Interview with Brien Rullman of OVT.

- ^ Interview with OVT.

- ^ Interview with Grant Davis aka VJ Culture specifically about the San Francisco scene.

- ^ "History". owonder.com. Archived from the original on September 5, 2008.

- ^ "VideA was the first festival in Spain and perhaps to Europe to dedicate itself to the VJ's art." - Makela, Mia (2006), "VJ Scene Spain", VE-jA: Art + Technology of Live Audio-Video, San Francisco: h4SF: 98

- ^ Eskandar, p.181

- ^ Eskandar, pp. 174–175

- ^ Makela, Mia, ed. (December 2009), "Live Cinema", AMinima Magazine (22), Barcelona

- ^ Gates, Carrie, ed. (March 2008). "The Rise of the VJ". Vague Terrain (9). Archived from the original on 12 June 2010. Retrieved 12 May 2010.

References

- Amerika, Mark (2007). Meta/Data: A Digital Poetics. Cambridge: MIT Press. ISBN 978-0-262-01233-1

- Bargsten, Joey (2011). "Hybrid Forms and Syncretic Horizons". Saarbrücken: Lambert Academic Publishing. ISBN 978-3-8465-0355-3

- Dekker, Anette (May 2006), "Synaesthetic Performance in the Club Scene", Cosign 2003: Computational Semiotics, archived from the original on 21 July 2011, retrieved 7 May 2010

- Eskandar, Xárene (ed) (2006). vE-jA: Art + Technology of Live Audio-Video. San Francisco: h4SF. ISBN 978-0-9765060-5-8

- Faulkner, Michael / D-Fuse (ed), 2006. VJ: Audio-Visual Art and VJ Culture. London: Laurence King. ISBN 978-1-85669-490-2

- Harada, Daizaburo (1999), VJ2000 (in Japanese), August

- Jaeger, Timothy (2006), VJ: Live Cinema Unraveled. Handbook for Live Visual Performance (PDF), retrieved 12 May 2010[permanent dead link]

- Lehrer, Jonah (2008). Proust was a neuroscientist. New York:Mariner. ISBN 978-0-618-62010-4

- Lund, Cornelia & Lund, Holger (eds), 2009. Audio.Visual- On Visual Music and Related Media. Estugarda: Arnoldsche Art publishers. ISBN 978-3-89790-293-0

- Manu; Ideacritik & Velez, Paula, et al. (2009) " Aether9 Communications: Proceedings". TK: Greyscale Editions. Vol3, Issue 1. ISSN 1663-7658

- Momus, VJ Culture: Design Takes Center Stage, Adobe Design Center, retrieved 29 August 2010

- Spinrad, Paul (2005). The VJ Book: Inspirations and practical Advice for Live Visuals Performance. Los Angeles: Feral House. ISBN 1-932595-09-0

- Ustarroz, César (2010), Teoría del VJing - Realización y Representación Audiovisual en Tiempo Real (in Spanish), Ediciones Libertarias, ISBN 978-84-7954-703-5

- VJ Theory (ed) (2008). "VJam Theory: Collective Writings on Realtime Visual Performance". Falmouth:realtime Books. ISBN 978-0-9558982-0-4

External links

- Visual Music Archive by Prof. Dr. Heike Sperling

- VJ booking - Free VJ Portfolio Network

- VJ Union – VJ Union VJ Network

- VJs Mag – Audio Visual Performers Source

- VJs TV Archived 2023-03-23 at the Wayback Machine by VJs Magazine – Audio Visual Performers Network

- Visualist:Those who See Beyond Documentary Film about VJ Cultures

- A.Visualist A Road movie on VJing around the world

VJing

History

Antecedents and Early Influences

The conceptual foundations of VJing trace back to early attempts to synchronize light and color with music, rooted in synesthetic ideas. In 1725, French Jesuit Louis Bertrand Castel proposed the color harpsichord, an instrument projecting colored lights through glass panels corresponding to musical notes on a keyboard.[4] This device aimed to visually represent harmonic intervals, influencing later audiovisual experiments. In the late 19th century, American inventor Bainbridge Bishop attached a light projector to an organ so that its keys triggered corresponding color projections, work he documented in a book published in 1893.[5]

In the early 20th century, Russian composer Alexander Scriabin integrated these principles into composition with Prometheus: The Poem of Fire (Op. 60, 1910), specifying a "clavier à lumières" or color organ part to project hues matching the score's keys based on his systematic color-to-pitch associations derived from the circle of fifths.[4] The work was first performed with colored lighting on March 20, 1915, in New York, using an electromechanical approximation that projected colored lights onto a screen during the orchestral performance, though technical limitations kept it from fully realizing Scriabin's vision.[4] Shortly afterward, from 1919 to 1927, American pianist and inventor Mary Hallock-Greenewalt developed the Sarabet, the instrument for a light art she called Nourathar: a console with sliders, pedals, and rheostats allowing manual control of light intensity and color filters to create expressive "light music" accompanying piano performances.[5] These innovations established precedents for real-time visual modulation responsive to auditory cues, prefiguring VJing's core dynamic of live audiovisual synthesis.

The 1960s psychedelic era brought practical precursors through liquid light shows, which deployed overhead projectors with dyed oils, inks, and solvents to generate fluid, abstract visuals projected onto screens or walls during rock concerts.
Emerging in San Francisco around 1965–1966 amid the Acid Tests and counterculture scene, collectives like the Joshua Light Show and Brotherhood of Light provided improvisational projections for performances by bands including the Grateful Dead, syncing evolving patterns to musical rhythms and improvisations.[5][6] Andy Warhol's Exploding Plastic Inevitable (1966–1967), featuring The Velvet Underground, amplified this by combining multiple film projectors, strobe lights, and mirrored environments to create immersive, reactive multimedia spectacles.[6] These analog techniques demonstrated the performative potential of visuals as a collaborative, ephemeral art form intertwined with live music, directly informing VJing's emphasis on immediacy, audience engagement, and technological mediation of perception.[6]

1970s Disco and Light Shows

The 1970s disco era marked a commercialization and adaptation of 1960s psychedelic light shows, shifting from countercultural rock concerts to high-energy dance clubs where visuals emphasized rhythmic synchronization with four-on-the-floor beats. Operators employed oil-wheel projectors, liquid dyes manipulated in shallow trays, and overhead projectors to create flowing, amorphous patterns projected onto walls and ceilings, often combined with fog machines for diffusion effects.[7] These techniques, refined for brighter, more club-friendly outputs, drew from earlier liquid light innovations but prioritized durability and spectacle over abstract psychedelia, with setups costing up to $10,000 for professional rigs including multiple projectors and color gels.[8] Electronic control systems, emerging around 1968 with transistor-based dimmers and thyristor switches, enabled precise timing of lights to music cues, supplanting manual faders and laying groundwork for automated visual performance.[9] Iconic elements like the rotating mirrored ball, which scattered light fragments across dance floors, became standard by the mid-1970s, as seen in venues such as New York's Studio 54—opened April 26, 1977—where custom installations by lighting designer Ron Dunas integrated strobes, pin spots, and par cans for dynamic, body-sculpting illumination.[10] Laser light shows, initially rudimentary helium-neon beams modulated for simple patterns, gained traction post-1971 when affordable units like those from Lasersonics entered clubs, adding linear sweeps and bursts synced to bass drops despite early safety concerns and regulatory bans in some areas until the late 1970s.[11][7] In this context, disco light operators functioned as proto-VJs, improvising real-time cues via sound-to-light interfaces that converted audio signals into color shifts and flashes, influencing the New York club scene where, by the late 1970s, crews at spots like the Peppermint Lounge began incorporating film 
loops and early video projections (such as Super 8 footage or closed-circuit TV feeds) alongside lights for more narrative visuals tied to DJ sets.[11] This hybrid approach, distinct from static installations, emphasized performer agency and audience immersion, with events drawing crowds of 1,000–2,000 nightly in peak clubs, though technical limitations like projector bulb failures and heat buildup constrained durations to 4–6 hours per show.[10] By decade's end, these practices bridged analog lighting artistry and emerging video manipulation, amid the backlash against disco, whose influence on club visuals nonetheless endured.[12]

1980s MTV and Broadcast Origins

Music Television (MTV) launched on August 1, 1981, at 12:01 a.m. Eastern Time, marking the debut of a cable network dedicated to continuous music video broadcasts, with the inaugural video being "Video Killed the Radio Star" by The Buggles.[13] The channel's format featured pre-recorded music videos supplied gratis by record labels for promotional purposes, introduced by on-air hosts known as video jockeys (VJs), a term modeled after radio disc jockeys (DJs).[13] These VJs, including Nina Blackwood, Mark Goodman, Alan Hunter, J.J. Jackson, and Martha Quinn, provided brief commentary, transitions, and occasional interviews, establishing a broadcast template for pairing synchronized audiovisual content with popular music.[14] MTV's early programming emphasized this non-stop video rotation, which rapidly expanded from an initial reach of 2.1 million households to influencing global music promotion by the mid-1980s, as artists like Michael Jackson and Madonna leveraged videos for visual storytelling that complemented audio tracks.[15] Unlike contemporaneous live light shows in clubs, MTV VJs did not engage in real-time visual manipulation; their role was curatorial, selecting and sequencing static videos rather than generating or altering imagery dynamically.[4] This broadcast model, however, popularized the "VJ" nomenclature and normalized the idea of visuals as an integral, performative extension of music, fostering audience expectations for immersive, music-driven imagery that later informed live VJing practices.[4] By the late 1980s, MTV began diversifying beyond pure video playlists into shows like Headbangers Ball (launched 1987) and news segments, reflecting maturing infrastructure with over 50 million U.S. 
subscribers by 1989, yet the foundational 1981–1985 era solidified broadcast VJing as a promotional vehicle rather than an artistic improvisation tool.[14] Critics note that while MTV amplified video production, spurring investments from labels, the channel's VJs operated under scripted constraints, prioritizing accessibility over experimental visuals, which contrasted with underground video art but democratized music visualization for mass audiences.[4] This era's emphasis on polished, narrative-driven clips laid causal groundwork for VJing's evolution, as broadcast exposure heightened demand for custom visuals in performance contexts.[15]

1990s Digital Emergence

The 1990s marked the initial shift toward digital technologies in VJing, driven by advancements in affordable hardware that enabled real-time video manipulation beyond analog limitations. The NewTek Video Toaster, released in December 1990 for the Amiga computer at $2,399, integrated a video switcher, frame buffer, and effects generator, allowing users to perform chroma keying, transitions, and compositing in real time on consumer-grade systems.[16] This tool democratized complex video production, previously confined to expensive broadcast equipment, and was adopted by enthusiasts for live performances, including early VJ setups in club environments.[17] Digital video mixers further bridged analog sources with emerging digital processing, providing VJs with built-in effects and synchronization capabilities. The Videonics MX-1, introduced in 1994 for around $1,200, offered 239 transition effects, frame synchronization, and time base correction for up to four inputs, making it accessible for non-professionals to create dynamic live visuals.[18] Similarly, Panasonic's WJ-MX50 professional mixer incorporated digital processing for special effects like wipes and keys, supporting two-channel frame synchronization to handle disparate video sources seamlessly.[19] These devices facilitated the integration of pre-recorded tapes, cameras, and generated signals, essential for the improvisational demands of live VJing. In the context of expanding rave culture, these technologies empowered VJs to synchronize abstract digital visuals with electronic music, evolving from static projections to reactive, computer-generated content. 
Commodore Amiga systems, including models like the Amiga 500, were commonly used by 1990s rave VJs for generating tripped-out effects via software demos and custom hacks, often combined with video feedback and lasers.[20] Pioneering practitioners began writing bespoke software and modifying hardware, such as game controllers, laying groundwork for software-based VJing while computers from Commodore, Atari, and Apple enabled experimentation with digital effects in underground scenes.[4] This era's innovations, though hardware-centric, foreshadowed the software dominance of the 2000s by emphasizing real-time digital creativity over purely mechanical mixing.[21]

2000s Video Scratching and Expansion

In the early 2000s, video scratching emerged as a technique mirroring audio scratching, involving real-time manipulation of video playback—including rapid forward-backward motion, speed variations, and cue point jumps—to create rhythmic visual effects synchronized with music. This practice built on digital video's accessibility, enabling VJs to layer, reverse, and cut footage dynamically during live performances.[22] A key hardware innovation was Pioneer's DVJ-X1 DVD turntable, released in 2004, which allowed DJs to load DVDs and perform scratches, loops, and instant cues on video content akin to vinyl manipulation, with separate audio and video stream control. Priced at approximately $3,299, the device bridged DJ and VJ workflows, popularizing video scratching in club environments by outputting manipulated visuals to projectors or screens.[23][24] Software developments further advanced video scratching and real-time manipulation. VDMX, originating from custom tools developed by Johnny Dekam in the late 1990s for live visuals, evolved into a robust platform by the mid-2000s, supporting effects processing, MIDI control, and glitch-style scratching via keyboard or controller inputs. Resolume 2, launched on August 16, 2004, introduced features like multi-screen output, network video streaming, and clip-based scratching, enabling VJs to handle layered compositions with crossfaders and effects chains. VJamm, among the earliest commercial VJ tools, facilitated loop sampling and beat-synced manipulations, akin to turntablism software.[25][26][27] The decade's expansion of VJing stemmed from falling costs of laptops with sufficient processing power for real-time video decoding and effects—such as Intel's Pentium 4 processors enabling 720p playback—and affordable projectors dropping below $1,000 by mid-decade, democratizing high-resolution displays in clubs and festivals. 
These factors, combined with widespread adoption of FireWire for external video capture and MIDI controllers for precise scrubbing, shifted VJing from analog hardware to software-centric setups, fostering growth in electronic music scenes like IDM and techno, where VJs such as those in the D-Fuse collective integrated scratching into immersive projections. By 2009, events such as the Motion Notion festival showcased hybrid DJ-VJ acts using these tools for audience-responsive visuals.[28][4]

2010s to 2020s Mainstream Integration and Tech Advances

In the 2010s, VJing achieved greater mainstream integration alongside the explosive growth of electronic dance music (EDM) festivals and concerts, where synchronized visuals became a standard enhancement to audio performances. Events such as Electric Daisy Carnival (EDC) and Ultra Music Festival routinely featured VJs delivering real-time projections and LED displays that complemented DJ sets, transforming stages into immersive environments and contributing to EDM's commercial peak.[29][30] This adoption was driven by the genre's shift from underground to global phenomenon, with visuals amplifying audience engagement at multi-genre festivals like Coachella, where electronic acts increasingly incorporated custom VJ content.[31][32]

Technological advances in software facilitated more sophisticated real-time manipulation, with Resolume Arena evolving to support advanced projection mapping, multi-screen outputs, and integration with lighting fixtures like DMX-controlled systems, enabling seamless synchronization across large venues.[26][33] Similarly, VDMX matured as a professional tool, incorporating enhanced scripting, FX processing, and compatibility updates through the 2010s and into the 2020s, allowing VJs to handle complex live remixing on Mac hardware.[25][34]

Hardware innovations shifted toward high-brightness LED walls, which surpassed projectors in modularity, resolution, and daylight visibility for outdoor festivals, reducing setup depth and enabling pixel-perfect scalability for massive installations.[35][36] In the 2020s, these panels integrated with VJ software for real-time content adaptation, supporting hybrid indoor-outdoor events post-pandemic, while projection mapping systems advanced for architectural overlays in live performances.[37] This evolution lowered barriers for VJs, making high-fidelity visuals accessible beyond niche clubs to stadium-scale productions.[38]

Technical Setups

Core Hardware Components

A high-performance computer forms the backbone of most contemporary VJ setups, providing the computational power necessary for real-time video processing, effects rendering, and software execution. These systems typically feature dedicated graphics processing units (GPUs) with at least 8 GB of VRAM, multi-core CPUs, and sufficient RAM (16 GB or more) to handle high-resolution footage without perceptible latency.[39][40]

Video mixers and switchers enable the blending of multiple input sources and the application of transitions and basic effects independently of the host computer. Devices such as the Roland V-8HD or Blackmagic Design ATEM series support HDMI/SDI inputs, multi-layer compositing, and audio embedding, facilitating hardware-based workflows that reduce CPU load during live performances.[41][40]

Input hardware includes capture cards for digitizing external video feeds from cameras, decks, or synthesizers, often requiring low-latency USB or PCIe interfaces to maintain synchronization with audio. MIDI or OSC controllers, like those from Novation or custom key decks, provide tactile control over software parameters, allowing VJs to trigger clips, effects, and mappings intuitively.[39][42]

Output components consist of projectors, LED panels, or monitors capable of high lumen output and resolution matching (e.g., 4K compatibility), connected via HDMI or SDI for reliable signal distribution. Reliable cabling, including HDMI splitters and adapters, ensures signal integrity across setups, mitigating issues like degradation over long runs.[43][40]

Essential Software Tools

Essential software tools for VJing facilitate real-time video mixing, effects processing, and synchronization with audio inputs, typically running on high-performance computers with dedicated graphics cards. These applications allow VJs to layer multiple video sources, apply transitions, and generate procedural visuals during live performances.

Resolume, available in Avenue and Arena editions, supports playback of video and audio files, live camera inputs, BPM-locked automation, and advanced routing for effects, making it suitable for both basic mixing and projection mapping in its Arena version.[44][45] VDMX, a Mac-exclusive platform, employs a node-based patching system for hardware-accelerated video rendering, enabling customizable workflows with layers for compositing, masking, and OpenGL effects integration, which supports intricate real-time manipulations favored in experimental setups.[46][47]

For generative and interactive visuals, TouchDesigner provides a visual programming environment using operators for 3D rendering, particle systems, and audio-reactive parameters, often employed by VJs seeking procedural content over pre-rendered clips, though it demands steeper learning for live stability.[48] MadMapper complements these by specializing in projection mapping, allowing geometric calibration and content warping across irregular surfaces, frequently paired with primary VJ software for venue-specific adaptations.[49]

Open-source alternatives like CoGe VJ offer basic mixing and effects for budget-conscious users, including video effects and real-time input handling, but lack the polish and support of commercial tools.[49] Selection depends on performance needs, with Resolume and VDMX dominating professional use due to reliability in high-stakes environments as of 2024.[50][51]

Standard Workflows and Configurations

Standard VJing workflows begin with content preparation, where visual artists compile libraries of pre-rendered video clips, loops, and generative elements using tools like Adobe After Effects or TouchDesigner, ensuring compatibility with formats such as MOV or MP4 optimized for real-time playback.[39] These assets are then imported into dedicated VJ software for organization into layers, decks, or groups, allowing for quick triggering and manipulation during performances.[52] In live configurations, the core pipeline involves a high-performance computer—typically equipped with an NVIDIA RTX GPU (minimum 8GB VRAM), at least 16GB RAM, and SSD storage—running software like Resolume Arena, which serves as an industry standard for mixing and effects application due to its intuitive clip triggering and BPM synchronization features.[39][53] Inputs from sources such as video files, live cameras (via capture cards like Blackmagic UltraStudio), or Syphon/Spout shared feeds are layered with effects, blended, and synced to audio cues from DJ software like Ableton Live using MIDI controllers such as the Akai APC40.[54][39] Output configurations route processed visuals via HDMI or NDI protocols to projectors, LED walls, or screens, often employing EDID emulators to maintain signal integrity in club or festival environments.[39] For modular setups like those in VDMX, workflows emphasize template-based mixing with multiple layers for improvisation, starting from simple video mixers and expanding to multi-channel samplers for live camera integration.[55] Hybrid approaches combine pre-prepared content with real-time generative tools, enabling VJs to adapt to music dynamics while minimizing latency through optimized hardware pipelines.[56] Advanced configurations may involve multi-computer networks for distributed rendering, where one machine handles content generation in Unity or Unreal Engine, piping outputs via NDI to a central mixer for final composition, particularly in 
large-scale events requiring projection mapping or synchronized lighting.[39] Timecode synchronization ensures precise audio-visual alignment, with software like Resolume supporting OSC or MIDI for external control, though practitioners note that single-laptop setups suffice for most club scenarios when paired with robust MIDI mapping.[52][53]

Artistic and Performance Practices

Real-Time Visual Manipulation Techniques

Real-time visual manipulation in VJing involves the live alteration of video sources, layers, and effects to create dynamic imagery synchronized with audio cues. VJs employ software platforms like Resolume and VDMX to composite multiple video feeds, adjusting parameters such as opacity, position, and scale on the fly to respond to musical rhythms or improvisational needs.[57][58]

Core techniques include layering, where multiple video clips or generated content are stacked and blended using masks, alpha channels, or chroma keying to isolate elements for seamless integration. Effects processing follows, with VJs applying filters like blurs, inversions, distortions, and custom shaders, often chained in sequences, to transform source material; for instance, Resolume enables effects placement on individual clips, layers, or groups for targeted real-time modifications.[59][58]

Transition methods mirror audio mixing, utilizing crossfades, wipes, and glitch-based cuts to switch between visuals, while BPM synchronization automates effect triggers or loop speeds to align with track tempos, enhancing perceptual harmony between sight and sound. Advanced practitioners incorporate generative tools within environments like TouchDesigner for procedural animations that evolve in response to input data, such as audio analysis driving particle systems or fractal evolutions.[44][60][57]

Hardware-assisted manipulation, via video mixers or processors, supplements software by enabling analog-style feedback loops or external signal routing for unpredictable, emergent visuals, though digital workflows predominate due to their precision and recallability in live settings. These techniques demand low-latency systems to avoid perceptible delays, typically achieved through optimized GPUs and pre-rendered assets.[61][58]

Synchronization and Improvisation Methods

Synchronization methods in VJing focus on aligning visual elements with audio tempo and rhythm through beat detection and timing protocols. Software like Resolume incorporates BPM synchronization by analyzing audio tracks to set clip loop lengths and playback speeds matching the detected beats per minute, often combined with randomization for varied effects within synced boundaries.[62] VDMX achieves similar results via the Waveclock feature in its Clock plugin, which processes live audio inputs from microphones or lines to automatically detect BPM and drive tools like step sequencers and low-frequency oscillators (LFOs) for beat-aligned visual triggers.[63] Pre-prepared content supports synchronization by slicing loops to specific beat durations calculated from BPM, using tools like After Effects with expressions for seamless cycling or plugins such as BeatEdit to automate keyframe placement based on audio markers.[64] In live settings, VJs employ MIDI controllers to manually trigger cues and effects at key musical moments, while protocols like Ableton Link enable real-time tempo sharing between VJ software and DJ hardware for drift-free coordination.[57] Audio-responsive techniques further enhance sync by mapping visual parameters to frequency spectrum analysis, causing elements like color shifts or distortions to react dynamically to bass, mids, or highs.[57] Improvisation in VJing relies on real-time visual manipulation, allowing performers to interpret and respond to evolving music through spontaneous layering, effect chaining, and parameter tweaks. 
VJs use timeline grids, cue points, and hardware mappings to controllers for intuitive on-the-fly adjustments, such as scaling visuals during builds or inverting colors on drops.[57] This expressive approach, centered on live editing, mirrors DJ improvisation by prioritizing musical cues over rigid scripting, with software facilitating rapid clip swaps and generative modifications.[65] Advanced setups integrate OSC (Open Sound Control) for cross-device reactivity, enabling improvised interactions like audience-triggered visuals synced to performer inputs.[61]

Content Sourcing and Preparation Strategies

VJs source visual content from public domain repositories such as the Internet Archive, which provides access to historical films, broadcasts, and advertisements dating back to the 1930s.[66] Royalty-free stock footage platforms like the BBC Motion Gallery offer licensed clips for a fee, while Vimeo hosts specialized groups for downloadable VJ loops and effects.[66] Personal recordings, captured via cameras like Super-8 or digital compact models, form a core of original material, supplemented by found footage from platforms like Vimeo, though copyright compliance remains essential to avoid legal issues during performances.[66][67] Custom content creation emphasizes tools like Adobe After Effects for motion graphics and animations, or Cinema 4D for 3D renders, allowing VJs to tailor visuals to specific event themes or musical genres.[66][67] Preparation involves editing raw footage into short, seamless loops—typically 12 to 45 seconds long—to enable beat-synced playback and real-time manipulation without abrupt cuts.[68] Clips are optimized for performance efficiency, rendered at resolutions like 1080p and frame rates of 30 to 60 fps to balance visual quality with hardware demands in live settings.[69] Organization strategies prioritize accessibility during improvisation, with libraries structured in hierarchical folders: top-level categories by theme (e.g., abstract, urban, natural) followed by subfolders for specifics like cityscapes or film excerpts.[70] Keyword tagging via system tools, such as macOS Spotlight comments, facilitates smart folder creation for automated grouping, while VJ software like Resolume mirrors this structure in clip decks for rapid selection.[70] Metadata addition, including BPM estimates or mood descriptors, further streamlines workflows, ensuring VJs can cue content intuitively amid dynamic performances.[52] Pre-gig rehearsals test loop compatibility with synchronization protocols, such as BPM matching, to minimize latency in tools like 
VDMX or Modul8.[71]

- Sourcing Checklist: Verify licenses for stock assets; prioritize public domain for unrestricted reuse; diversify between pre-made loops and bespoke renders to build thematic depth.

- Preparation Best Practices: Export in formats like DXV for Resolume to reduce CPU load; ensure alpha channels for overlay compositing; batch-process effects like color grading to maintain stylistic consistency.[72]

- Library Management Tips: Limit folder depth to three levels to avoid navigation delays; regularly cull redundant clips; backup libraries on external drives for gig reliability.[70]
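
The arithmetic behind beat-synced loop preparation can be sketched as follows. This is an illustrative sketch rather than a feature of any named tool: the function names are hypothetical, 4/4 time is assumed, and restricting candidates to power-of-two bar counts is a convention assumed here, not one stated by the cited sources. The default 12 to 45 second window comes from the loop lengths mentioned above.

```python
from typing import List


def loop_seconds(bpm: float, beats: int) -> float:
    """Duration in seconds of a loop spanning `beats` beats at tempo `bpm`."""
    if bpm <= 0 or beats <= 0:
        raise ValueError("bpm and beats must be positive")
    return beats * 60.0 / bpm


def loop_frames(bpm: float, beats: int, fps: float) -> int:
    """Frame count for that loop, rounded to the nearest whole frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return round(loop_seconds(bpm, beats) * fps)


def bar_counts_in_range(
    bpm: float,
    beats_per_bar: int = 4,    # assumption: 4/4 time
    min_s: float = 12.0,       # lower bound of the loop length range above
    max_s: float = 45.0,       # upper bound of the loop length range above
    max_bars: int = 64,
) -> List[int]:
    """Power-of-two bar counts whose duration falls within [min_s, max_s]."""
    result = []
    bars = 1
    while bars <= max_bars:
        if min_s <= loop_seconds(bpm, bars * beats_per_bar) <= max_s:
            result.append(bars)
        bars *= 2
    return result
```

Under these assumptions, a 120 BPM track gives 2-second bars, so 8-bar and 16-bar loops (16 and 32 seconds) fit the window, and a 32-beat loop at 128 BPM rendered at 60 fps needs 900 frames. Rendering to a whole number of frames per loop helps avoid a visible jump at the loop point.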

Cultural and Economic Impact

Enhancement of Live Music Experiences

VJing elevates live music performances by delivering real-time visual content synchronized with audio elements, fostering multisensory immersion that intensifies audience engagement. Visual jockeys manipulate footage, graphics, and effects to mirror musical dynamics such as beats, tempo changes, and emotional arcs, transforming concerts into cohesive audiovisual spectacles. This synchronization creates a symbiotic relationship between sound and sight, where visuals amplify the perceptual impact of the music without overshadowing it.[71][57] Research indicates that incorporating visuals into musical performances yields measurable benefits; for example, a 2009 study by Broughton and Stevens found that audiences provided higher ratings for performances in audiovisual formats compared to audio-only versions, attributing this to enhanced emotional and structural perception.[74] In genres like electronic dance music at festivals, VJs deploy LED projections that respond to bass frequencies and drops, heightening collective energy and spatial awareness for attendees.[75] Such integrations have become standard in major events, with VJs collaborating directly with performers to align visuals thematically, thereby reinforcing narrative elements and extending the music's evocative power.[76] Beyond immersion, VJing facilitates audience interaction and memorability; dynamic visuals on large screens provide focal points that guide attention, deepen emotional resonance, and create lasting impressions of the event.[77] In practice, this results in heightened physiological responses, such as synchronized heart rates among crowds during audiovisual peaks, as observed in analyses of live concert neurophysiology.[78] By curating content that evolves with the performance, VJs mitigate potential monotony in prolonged sets, ensuring sustained captivation across diverse venues from clubs to arenas.[79][80]