Weka (software)

View on Wikipedia| Weka | |

|---|---|

| |

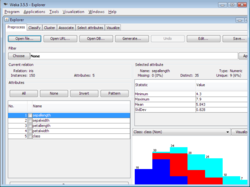

Weka 3.5.5 Explorer window open with Iris UCI dataset | |

| Developer | University of Waikato |

| Stable release | 3.8.6 (stable)

/ January 28, 2022 |

| Preview release | 3.9.6

/ January 28, 2022 |

| Written in | Java |

| Operating system | Windows, macOS, Linux |

| Platform | IA-32, x86-64, ARM_architecture; Java SE |

| Type | Machine learning |

| License | GNU General Public License |

| Website | ml |

| Repository | |

Waikato Environment for Knowledge Analysis (Weka) is a collection of machine learning and data analysis free software licensed under the GNU General Public License. It was developed at the University of Waikato, New Zealand and is the companion software to the book "Data Mining: Practical Machine Learning Tools and Techniques".[1]

Description

Weka contains a collection of visualization tools and algorithms for data analysis and predictive modeling, together with graphical user interfaces for easy access to these functions.[1] The original non-Java version of Weka was a Tcl/Tk front-end to (mostly third-party) modeling algorithms implemented in other programming languages, plus data preprocessing utilities in C, and a makefile-based system for running machine learning experiments. This original version was primarily designed as a tool for analyzing data from agricultural domains,[2][3] but the more recent fully Java-based version (Weka 3), for which development started in 1997, is now used in many different application areas, in particular for educational purposes and research. Advantages of Weka include:

- Free availability under the GNU General Public License.

- Portability, since it is fully implemented in the Java programming language and thus runs on almost any modern computing platform.

- A comprehensive collection of data preprocessing and modeling techniques.

- Ease of use due to its graphical user interfaces.

Weka supports several standard data mining tasks, more specifically, data preprocessing, clustering, classification, regression, visualization, and feature selection. Input to Weka is expected to be formatted according to the Attribute-Relation File Format (ARFF), with the filename bearing the .arff extension. All of Weka's techniques are predicated on the assumption that the data is available as one flat file or relation, where each data point is described by a fixed number of attributes (normally, numeric or nominal attributes, but some other attribute types are also supported). Weka provides access to SQL databases using Java Database Connectivity and can process the result returned by a database query. Weka provides access to deep learning with Deeplearning4j.[4] It is not capable of multi-relational data mining, but there is separate software for converting a collection of linked database tables into a single table that is suitable for processing using Weka.[5] Another important area that is currently not covered by the algorithms included in the Weka distribution is sequence modeling.
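To make the flat-file assumption concrete, the following is a minimal sketch of reading a small ARFF document in Python. It is not Weka's parser: the `parse_arff` function and the toy `weather` dataset are hypothetical, and the sketch only handles the `@relation`, `@attribute`, and `@data` declarations, `%` comments, and `?` for missing values, ignoring quoting, sparse data, and date attributes.

```python
# Minimal ARFF reader sketch (illustrative only, not Weka's implementation).

SAMPLE_ARFF = """\
% Hypothetical toy dataset for illustration
@relation weather

@attribute outlook {sunny, overcast, rainy}
@attribute temperature numeric
@attribute play {yes, no}

@data
sunny,85,no
overcast,83,yes
rainy,?,yes
"""

NUMERIC_TYPES = ("numeric", "real", "integer")


def parse_arff(text):
    """Return (relation_name, attributes, rows) from simple ARFF text."""
    relation = None
    attributes = []  # (name, kind); kind is a type name or a list of nominal labels
    rows = []
    in_data = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("%"):  # skip blank lines and comments
            continue
        lower = line.lower()
        if in_data:
            values = [v.strip() for v in line.split(",")]
            row = []
            for (_, kind), value in zip(attributes, values):
                if value == "?":  # "?" marks a missing value
                    row.append(None)
                elif kind in NUMERIC_TYPES:
                    row.append(float(value))
                else:
                    row.append(value)
            rows.append(row)
        elif lower.startswith("@relation"):
            relation = line.split(None, 1)[1]
        elif lower.startswith("@attribute"):
            _, name, spec = line.split(None, 2)
            if spec.startswith("{"):  # nominal attribute: {label1, label2, ...}
                labels = [s.strip() for s in spec.strip("{}").split(",")]
                attributes.append((name, labels))
            else:
                attributes.append((name, spec.lower()))
        elif lower.startswith("@data"):
            in_data = True
    return relation, attributes, rows


relation, attributes, rows = parse_arff(SAMPLE_ARFF)
print(relation, [name for name, _ in attributes], rows[2])
```

Every row has exactly one value per declared attribute, which is the "one flat file or relation" shape the paragraph above describes.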

Extension packages

In version 3.7.2, a package manager was added to allow the easier installation of extension packages.[6] Some functionality that used to be included with Weka prior to this version has since been moved into such extension packages, but this change also makes it easier for others to contribute extensions to Weka and to maintain the software, as this modular architecture allows independent updates of the Weka core and individual extensions.

History

- In 1993, the University of Waikato in New Zealand began development of the original version of Weka, which became a mix of Tcl/Tk, C, and makefiles.

- In 1997, the decision was made to redevelop Weka from scratch in Java, including implementations of modeling algorithms.[7]

- In 2005, Weka received the SIGKDD Data Mining and Knowledge Discovery Service Award.[8][9]

- In 2006, Pentaho Corporation acquired an exclusive licence to use Weka for business intelligence.[10] It forms the data mining and predictive analytics component of the Pentaho business intelligence suite. Pentaho has since been acquired by Hitachi Vantara, and Weka now underpins the PMI (Plugin for Machine Intelligence) open source component.[11]

Related tools

- Auto-WEKA is an automated machine learning system for Weka.[12]

- Environment for DeveLoping KDD-Applications Supported by Index-Structures (ELKI) is a similar project to Weka with a focus on cluster analysis, i.e., unsupervised methods.

- H2O.ai is an open-source data science and machine learning platform.

- KNIME is a machine learning and data mining software implemented in Java.

- Massive Online Analysis (MOA) is an open-source project for large scale mining of data streams, also developed at the University of Waikato in New Zealand.

- Neural Designer is a data mining software based on deep learning techniques written in C++.

- Orange is a similar open-source project for data mining, machine learning and visualization based on scikit-learn.

- RapidMiner is a commercial machine learning framework implemented in Java which integrates Weka.

- scikit-learn is a popular machine learning library in Python.

References

- Witten, Ian H.; Frank, Eibe; Hall, Mark A.; Pal, Christopher J. (2011). Data Mining: Practical machine learning tools and techniques (3rd ed.). San Francisco (CA): Morgan Kaufmann. ISBN 9780080890364. Archived from the original on 2020-11-27. Retrieved 2011-01-19.

- Holmes, Geoffrey; Donkin, Andrew; Witten, Ian H. (1994). Weka: A machine learning workbench (PDF). Proceedings of the Second Australia and New Zealand Conference on Intelligent Information Systems, Brisbane, Australia. Retrieved 2007-06-25.

- Garner, Stephen R.; Cunningham, Sally Jo; Holmes, Geoffrey; Nevill-Manning, Craig G.; Witten, Ian H. (1995). Applying a machine learning workbench: Experience with agricultural databases (PDF). Proceedings of the Machine Learning in Practice Workshop, Machine Learning Conference, Tahoe City (CA), USA. pp. 14–21. Retrieved 2007-06-25.

- "Weka Package Metadata". 2017. Retrieved 2017-11-11 – via SourceForge.

- Reutemann, Peter; Pfahringer, Bernhard; Frank, Eibe (2004). "Proper: A Toolbox for Learning from Relational Data with Propositional and Multi-Instance Learners". 17th Australian Joint Conference on Artificial Intelligence (AI2004). Springer-Verlag. CiteSeerX 10.1.1.459.8443.

- "weka-wiki - Packages". Retrieved 27 January 2020 – via GitHub.

- Witten, Ian H.; Frank, Eibe; Trigg, Len; Hall, Mark A.; Holmes, Geoffrey; Cunningham, Sally Jo (1999). Weka: Practical Machine Learning Tools and Techniques with Java Implementations (PDF). Proceedings of the ICONIP/ANZIIS/ANNES'99 Workshop on Emerging Knowledge Engineering and Connectionist-Based Information Systems. pp. 192–196. Retrieved 2007-06-26.

- Piatetsky-Shapiro, Gregory I. (2005-06-28). "Winner of SIGKDD Data Mining and Knowledge Discovery Service Award". KDnuggets. Retrieved 2007-06-25.

- "Overview of SIGKDD Service Award winners". ACM. 2005. Retrieved 2007-06-25.

- "Pentaho Acquires Weka Project". Pentaho. Archived from the original on 2018-02-07. Retrieved 2018-02-06.

- "Plugin for Machine Intelligence". Hitachi Vantara.

- Thornton, Chris; Hutter, Frank; Hoos, Holger H.; Leyton-Brown, Kevin (2013-08-11). Auto-WEKA: combined selection and hyperparameter optimization of classification algorithms. Proceedings of the 19th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM. pp. 847–855. doi:10.1145/2487575.2487629. ISBN 978-1-4503-2174-7.

External links

- Official website at University of Waikato in New Zealand

Weka (software)

Overview

Description

Weka is a free, open-source collection of machine learning algorithms designed for data mining and analysis tasks, developed at the University of Waikato in New Zealand.[5][6] The software's name stands for Waikato Environment for Knowledge Analysis, and it draws inspiration from the weka, a flightless bird native to New Zealand known for its inquisitive nature.[5] Its core purpose is to facilitate data preprocessing, classification, regression, clustering, association rule mining, and visualization, making it particularly suitable for educational and research applications in machine learning.[5][6] Weka primarily utilizes the Attribute-Relation File Format (ARFF), an ASCII text-based format that describes datasets with attributes and instances, enabling straightforward data handling and integration.[7][8] Among its general advantages are a user-friendly graphical interface that lowers the entry barrier for beginners and a comprehensive suite of tools that support practical machine learning workflows in academic and exploratory settings.[6][1]

Licensing and Platform Support

Weka is distributed under the GNU General Public License version 3 (GPLv3), an open-source license that allows users to freely use, study, modify, and distribute the software, including for commercial purposes, as long as any modifications are released under the same license terms.[1] This licensing model fosters widespread adoption in academic and research settings while ensuring the software remains freely accessible.[5]

Developed entirely in Java, Weka exhibits strong cross-platform compatibility, running on any operating system supported by the Java Virtual Machine (JVM), such as Windows, macOS, and various Linux distributions, without requiring platform-specific recompilation.[1] This portability enables seamless deployment across diverse hardware architectures and environments.[9]

Installation of Weka requires a Java Runtime Environment (JRE) version 8 or later, with higher versions recommended for optimal performance and compatibility with graphical user interfaces, particularly on high-resolution displays.[9] Official distributions, including installers and source code, are hosted on SourceForge, providing straightforward access for users worldwide.[1] The project is primarily maintained by the Machine Learning Group at the University of Waikato in New Zealand, supplemented by contributions from an international developer community through its open-source repositories.[5]

History

Development Origins

Weka originated in 1993 at the University of Waikato in Hamilton, New Zealand, where it was initiated as a practical workbench to support teaching and research in machine learning. The project received funding from the New Zealand government starting that year, with development of the initial interface and algorithms commencing shortly after Ian Witten applied for support in late 1992. The name WEKA stands for Waikato Environment for Knowledge Analysis, reflecting its roots in the local academic environment.[10]

The software's early implementation focused on rapid prototyping of data mining techniques, utilizing Tcl/Tk for the graphical user interface to enable quick development and user interaction, while core learning algorithms were primarily written in C, with additional components in C++ and Lisp. This modular design allowed for an integrated environment where users could experiment with various machine learning schemes on real-world datasets, including the newly introduced Attribute-Relation File Format (ARFF) developed by Andrew Donkin in 1993. The first internal release occurred in 1994, marking the transition from concept to functional tool.[11][10]

The primary motivations behind Weka's creation were to apply machine learning to practical problems, particularly in agriculture and horticulture, while shifting focus from supporting machine learning researchers to empowering end users and domain specialists who lacked deep expertise in the field. By providing an accessible collection of state-of-the-art algorithms and preprocessing tools under a unified interface, the project aimed to democratize machine learning for non-experts in academia, facilitating exploration of fielded applications and the discovery of new methods without the barriers of complex programming. This philosophy emphasized usability and interpretability to bridge the gap between theoretical techniques and real-world data analysis.[11][10]

Weka was conceived as companion software to the textbook Data Mining: Practical Machine Learning Tools and Techniques by Ian H. Witten, Eibe Frank, and colleagues, incorporating virtually all the algorithms and data preprocessing methods detailed in the book to serve as a hands-on resource for its concepts. This integration supported the book's emphasis on practical machine learning, allowing readers to directly implement and test techniques described in its chapters.[12]

Major Releases and Milestones

The first public release, version 2.1, occurred in October 1996.[2] In 1997, the Weka development team at the University of Waikato decided to redevelop the software from scratch in Java to enhance portability across platforms via the Java Virtual Machine and to simplify maintenance and integration with external libraries, replacing the previous unwieldy C-based implementation that relied on Tcl/Tk for its graphical interface.[13] Version 3.0, released in mid-1999, marked a significant milestone as the first fully Java-based version, introducing a graphical user interface (GUI) and expanding support for a broader range of machine learning algorithms, which accompanied the first edition of the foundational data mining textbook by the Waikato team.[2]

A key advancement came with version 3.7.2 in July 2010, which introduced the package management system, allowing users to easily install and manage extensions for additional functionality without modifying the core software.[14] In 2016, integration of deep learning capabilities via the WekaDeeplearning4j package enabled support for neural networks, including convolutional architectures, leveraging the Deeplearning4j library to bring modern deep learning techniques into the Weka ecosystem.[15]

In 2005, the Weka team received the ACM SIGKDD Data Mining and Knowledge Discovery Service Award, recognizing its substantial educational impact and widespread adoption in teaching and research.[16] The following year, in 2006, Pentaho Corporation (now part of Hitachi Vantara) acquired an exclusive license to incorporate Weka into its business intelligence suite, facilitating commercial integrations and broader enterprise use while the open-source project continued independently.[17]

As of January 2022, the stable release was version 3.8.6, with developer preview 3.9.6 released concurrently, focusing on refinements and bug fixes rather than major new features.[18] By November 2025, no major public updates had been issued beyond these versions, though ongoing maintenance and minor enhancements continue through the project's official Git repository hosted by the University of Waikato.[19]

Core Features

Algorithms and Tasks

Weka provides a comprehensive suite of machine learning algorithms categorized primarily into supervised and unsupervised learning, with support for semi-supervised approaches through certain meta-learners and filters. Supervised learning encompasses tasks like classification and regression, where models are trained on labeled data to predict outcomes, while unsupervised learning focuses on clustering and association rule discovery to uncover patterns in unlabeled data. Ensemble methods, such as bagging and boosting, are integrated to enhance model performance by combining multiple base learners, exemplified by the RandomForest algorithm, which builds an ensemble of decision trees to reduce overfitting and improve generalization.

Data preprocessing is a foundational task in Weka, enabling data cleaning, transformation, and normalization to prepare datasets for analysis. Core filters include unsupervised attribute filters like Normalize for scaling numeric attributes to a standard range and NominalToBinary for converting categorical variables into binary representations, as well as supervised filters such as Discretize, which bins numeric attributes based on class information. These preprocessing tools handle common issues like missing values via ReplaceMissingValues and outlier detection through InterquartileRange, ensuring data quality without requiring external scripting.

For feature selection, Weka implements methods like information gain, which ranks attributes by their ability to reduce entropy in decision-making, and chi-squared testing to evaluate attribute-class independence, allowing users to identify the most predictive features and mitigate dimensionality.

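
The information-gain ranking described above can be sketched in a few lines of Python. This is an illustration of the general technique on a made-up dataset, not Weka's attribute evaluator: the function names and the `outlook`, `windy`, and `play` values are hypothetical, and only nominal attributes are handled.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(values, labels):
    """Class entropy minus the weighted class entropy after splitting on a nominal attribute."""
    total = len(labels)
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(value, []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Hypothetical toy data: two nominal attributes and a class label.
outlook = ["sunny", "sunny", "overcast", "rainy", "rainy", "overcast"]
windy = ["no", "yes", "no", "no", "yes", "yes"]
play = ["no", "no", "yes", "yes", "no", "yes"]

gains = {
    "outlook": information_gain(outlook, play),
    "windy": information_gain(windy, play),
}
# Rank attributes from most to least informative.
ranking = sorted(gains.items(), key=lambda item: item[1], reverse=True)
for name, gain in ranking:
    print(f"{name}: {gain:.3f}")
```

In this toy data `outlook` separates the classes far better than `windy`, so it ranks first; a ranking of this kind is what lets a user keep only the most predictive attributes.
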

In classification, Weka supports a range of algorithms for predicting categorical outcomes, including the J48 decision tree, an optimized implementation of the C4.5 algorithm that uses information gain for splitting and post-pruning to simplify trees, and the Naive Bayes classifier, which applies Bayes' theorem under the assumption of attribute independence for probabilistic predictions. Regression tasks address numeric prediction, with built-in support for linear regression, which fits a linear model minimizing squared errors, and logistic regression for binary (and multinomial) outcomes using classifiers like SimpleLogistic. These algorithms are applicable to diverse domains, such as medical diagnosis for classification or stock price forecasting for regression.

Unsupervised learning in Weka includes clustering algorithms like k-means, which partitions data into k groups by minimizing intra-cluster variance through iterative centroid updates, and hierarchical clustering, which builds a tree of clusters using linkage criteria such as single or complete linkage. Association rule mining is facilitated by the Apriori algorithm, which identifies frequent itemsets and generates rules meeting minimum support and confidence thresholds, commonly used for market basket analysis. Semi-supervised capabilities are available through certain meta-learners and wrappers, with extensive options in packages that leverage limited labeled data to guide unsupervised processes.[20]

Weka handles input data primarily through the Attribute-Relation File Format (ARFF), an ASCII-based structure that supports nominal (categorical), numeric (continuous or integer), string (textual), and date attributes, allowing flexible representation of heterogeneous datasets. It also integrates with CSV files for simple tabular imports and SQL databases via JDBC loaders, enabling direct querying and loading of relational data without manual conversion. This multi-format support facilitates seamless workflow from data ingestion to modeling.[21]

Model evaluation in Weka employs metrics and techniques to assess performance rigorously, including k-fold cross-validation, which partitions data into k subsets for training and testing to estimate unbiased accuracy by averaging results across folds. For classification, accuracy measures overall correctness, while precision-recall curves evaluate performance on imbalanced datasets by plotting precision (true positives over predicted positives) against recall (true positives over actual positives). Receiver Operating Characteristic (ROC) curves visualize the trade-off between true positive rate and false positive rate across thresholds, aiding in threshold selection for probabilistic classifiers like Naive Bayes. These methods provide a balanced view of model reliability without assuming equal class distributions.

User Interfaces and Tools

Weka provides multiple user interfaces to facilitate interaction with its machine learning capabilities, catering to both novice users through graphical tools and advanced users via programmatic access. These interfaces enable data exploration, model building, evaluation, and visualization without requiring deep programming knowledge in many cases, while also supporting scripting and integration into larger applications.[12]

The primary graphical user interface, known as the Explorer, offers an interactive environment for data mining tasks. Users can load datasets in ARFF format, preprocess data using filters for tasks like normalization or attribute selection, and apply classifiers, clusterers, or association rule learners through menu selections and form-based inputs. It supports simple workflows by allowing step-by-step model training, testing via cross-validation or hold-out methods, and immediate result inspection, making it suitable for exploratory analysis.[12][2]

Complementing the Explorer, the Experimenter interface is designed for batch evaluations and comparative studies. It enables users to configure experiments across multiple datasets and algorithms, specifying parameters such as evaluation metrics (e.g., accuracy, precision) and repetition schemes like 10-fold cross-validation. Results are compiled into tables for statistical analysis, including t-tests for significance, and can be exported for further processing, facilitating systematic performance comparisons.[12]

For more complex workflows, the Knowledge Flow serves as a visual programming tool. Users drag and drop components (such as data sources, filters, learners, and evaluators) onto a canvas and connect them via directed links to form processing pipelines. This supports incremental and streamed data processing, allowing real-time execution and monitoring of data flows, which is particularly useful for building reusable machine learning pipelines.[12][2]

Weka also includes a command-line interface for scripting and automation. Accessed via Java commands, it allows direct invocation of algorithms, such as running a classifier on a dataset with options that specify the training file and the number of cross-validation folds (e.g., java weka.classifiers.trees.J48 -t data.arff -x 10), enabling batch processing in non-interactive environments like servers or scripts.[12]
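The -x 10 option in the command above requests 10-fold cross-validation. As a rough illustration of what that partitioning involves, here is a small Python sketch that is independent of Weka: the helper names are hypothetical, the folds are plain shuffled splits with no stratification by class, and a majority-class predictor stands in for a real learner.

```python
import random
from collections import Counter


def k_fold_indices(n_instances, k, seed=1):
    """Yield (train, test) index lists for k shuffled, non-overlapping folds."""
    indices = list(range(n_instances))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # fold sizes differ by at most one
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


def cross_validate(instances, labels, k, train_fn, predict_fn):
    """Return accuracy pooled over all k test folds."""
    correct = 0
    for train, test in k_fold_indices(len(instances), k):
        model = train_fn([instances[i] for i in train], [labels[i] for i in train])
        correct += sum(predict_fn(model, instances[i]) == labels[i] for i in test)
    return correct / len(instances)


# Hypothetical stand-in learner: always predict the majority training class.
def train_majority(xs, ys):
    return Counter(ys).most_common(1)[0][0]


def predict_majority(model, x):
    return model


xs = list(range(20))              # placeholder feature values
ys = ["a"] * 15 + ["b"] * 5       # imbalanced class labels
accuracy = cross_validate(xs, ys, 10, train_majority, predict_majority)
print(f"10-fold accuracy: {accuracy:.2f}")
```

Each instance is used for testing exactly once and for training in the other nine rounds, so the pooled accuracy is computed entirely from predictions on data the model did not see during training.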

Integrated visualization tools enhance model interpretation across these interfaces. The Explorer and Knowledge Flow provide scatter plot matrices for attribute relationships, jittered plots for nominal data, histograms for distributions, and tree visualizers for displaying decision tree structures with node statistics. Boundary plot visualizers generate contour plots to illustrate classifier decision boundaries in two-dimensional feature spaces, aiding in understanding model behavior.[12][2]

For programmatic use, Weka exposes a comprehensive Java API, allowing embedding in custom applications. Core classes like Instances represent datasets, enabling loading and manipulation, while Classifier and Filter interfaces support training models and applying transformations. A basic example involves creating an Instances object from a file, building a classifier (e.g., via classifier.buildClassifier(instances)), and making predictions on test data. This API facilitates integration into enterprise software or research prototypes.[12]

Extensions and Packages

Package Management System

The package management system in Weka was introduced in version 3.7.2, released in 2010, to facilitate the modular extension of the core software by allowing users to browse, install, and update additional functionality through a built-in graphical user interface (GUI) accessible via the Tools menu in the GUIChooser.[22] This system separates extensions from the main weka.jar file, enabling a lighter core distribution while supporting dynamic loading of new algorithms, tools, and resources at runtime without requiring recompilation or classpath modifications.[22] A command-line interface is also provided through the java weka.core.WekaPackageManager class, supporting operations such as listing packages (-list-packages), installing by name or URL (-install-package), and refreshing the local cache (-refresh-cache).[22]

The central repository for official packages is hosted on SourceForge, with metadata cached locally for efficient access, and many packages also maintain development repositories on GitHub.[22][23] As of the latest updates, this repository contains over 200 official packages, categorized by function such as classification, clustering, regression, visualization, and attribute selection, allowing users to extend Weka's capabilities in targeted areas like advanced machine learning techniques or data preprocessing tools.[23]

Installation occurs seamlessly via the GUI or CLI, where users can specify a package name, local ZIP file, or remote URL; the system automatically resolves and installs dependencies, though this can be optionally disabled in the GUI for custom setups.[22] Packages are distributed as self-contained archives including JAR files, documentation, and metadata files (e.g., PackageDescription.props), which are loaded dynamically at runtime to integrate with Weka's ecosystem.[24] Offline mode has been supported since version 3.7.8, enabled via the -offline CLI flag or the weka.packageManager.offline=true property, allowing installations from pre-downloaded files without internet access.[22]

Maintenance of the system emphasizes stability and community involvement, with unofficial packages installable through the GUI's "File/url" option—bypassing dependency checks for flexibility—often contributed by third-party developers and integrated via the official repository after review.[22] Updates to packages are versioned and tied to compatible Weka releases, ensuring backward compatibility with the core without disrupting existing installations; users are notified of available updates starting from version 3.7.3, and a restart of Weka may be required post-upgrade to fully load changes.[22]

Notable Extensions

Weka's ecosystem has been significantly expanded through its package management system, enabling the development and distribution of specialized extensions that address limitations in the core software, such as handling streaming data, advanced neural networks, and domain-specific tasks. One of the most prominent extensions is Auto-WEKA, which automates the combined algorithm selection and hyperparameter optimization (CASH) problem using Bayesian optimization to identify optimal models for classification, regression, and attribute selection without requiring expert intervention.[25] This package has democratized access to high-performance machine learning pipelines, particularly for non-experts, by searching through Weka's algorithm space and tuning parameters efficiently.[26]

Another key extension is the integration with Massive Online Analysis (MOA), which brings support for streaming data mining and online learning directly into Weka's interfaces, allowing users to apply incremental classifiers to evolving data streams that exceed memory limits.[27] MOA enables real-time processing of massive datasets, filling a critical gap in Weka's batch-oriented core by incorporating algorithms for concept drift detection and adaptive learning.[28] Within this integration, MOA Text provides specialized tools for text classification in streaming contexts, such as bag-of-words representations and incremental naive Bayes variants tailored for high-velocity textual data.[23]

For deep learning capabilities, the WekaDeeplearning4j package leverages the Deeplearning4j backend to incorporate neural networks, including convolutional and recurrent architectures, with GPU acceleration and a graphical user interface for training and evaluation.[29] This extension bridges Weka's traditional focus on classical machine learning with modern deep learning techniques, supporting tasks like image and sequence classification while maintaining compatibility with Weka's workflow.[30]

Other notable packages include Meka, which extends Weka to multi-label and multi-target classification by providing a suite of algorithms, evaluation metrics, and transformation methods for scenarios where instances associate with multiple labels simultaneously.[31] Similarly, the Time Series Machine Learning (TSML) tools, including the timeseriesForecasting package, offer wrappers for regression schemes that automate lag variable creation and forecasting, enabling effective analysis of temporal data patterns.[32][33]

By 2025, the Weka community has contributed over 214 packages, collectively enhancing the platform's versatility for big data handling, distributed computing interfaces, and domain-specific applications like bioinformatics, thereby sustaining its relevance in diverse research and practical settings.[23]

Integration and Related Tools

Integrations with Other Software

Weka supports direct integration with relational databases through its JDBC (Java Database Connectivity) interface, enabling users to load data via SQL queries from compatible sources such as MySQL and PostgreSQL. To establish a connection, users must include the appropriate JDBC driver in the classpath and configure a customized DatabaseUtils.props file, which includes predefined settings for databases like MySQL and PostgreSQL (both in Weka version 3.4.9 or later). This allows seamless importation of data into Weka's ARFF format for analysis without manual file exports.[34][35]

In commercial business intelligence environments, Weka is embedded within Pentaho Data Integration (PDI), now part of Hitachi Vantara's suite, to operationalize machine learning models alongside data orchestration tasks. PDI incorporates Weka's algorithms directly through its plugin framework, supporting the execution of classification, regression, and clustering workflows within ETL (Extract, Transform, Load) pipelines, with performance optimizations for large datasets to avoid memory issues. Additionally, Hitachi Vantara's Pentaho Machine Intelligence (PMI) leverages Weka for blending structured and unstructured data sources in predictive analytics, enabling early detection applications in industries like equipment maintenance.[36][37][38]

Weka's core Java implementation provides a comprehensive API for embedding its functionality into custom applications, allowing developers to invoke classifiers, filters, and evaluation tools programmatically.[5] For R users, the RWeka package serves as an interface, providing access to Weka's machine learning algorithms (version 3.9.3) for tasks like classification and clustering directly from R scripts, requiring the RWekajars package for the underlying Java components.[39] In Python ecosystems, the python-weka-wrapper3 library enables integration by wrapping Weka's non-GUI features via the JPype bridge to the Java Virtual Machine, supporting OpenJDK 11 or later and Weka 3.9.6, while the sklearn-weka-plugin extends this compatibility to scikit-learn pipelines for hybrid workflows.[40][41]

The KNIME Weka Data Mining Integration extension, which incorporated Weka's framework (version 3.7) as nodes for analytical pipelines, has been deprecated and is no longer recommended for use.[42] Model compatibility with other frameworks like RapidMiner is facilitated via PMML (Predictive Model Markup Language) support, allowing export of Weka models (such as regression and neural networks) for import into RapidMiner or vice versa, ensuring interoperability in multi-tool environments.[2]
