Moving Picture Experts Group


The Moving Picture Experts Group (MPEG) is an alliance of working groups established jointly by ISO and IEC that sets standards for media coding, including compression coding of audio, video, graphics, and genomic data; and transmission and file formats for various applications.[1] Together with JPEG, MPEG is organized under ISO/IEC JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information (ISO/IEC Joint Technical Committee 1, Subcommittee 29).[2][3][4][5][6][7]

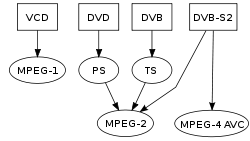

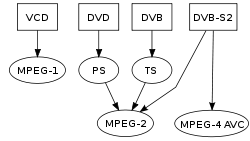

MPEG formats are used in various multimedia systems. The best-known older MPEG media formats typically use MPEG-1, MPEG-2, and MPEG-4 AVC media coding, carried in MPEG-2 Systems transport streams and program streams. Newer systems typically use the MPEG base media file format and Dynamic Adaptive Streaming over HTTP (MPEG-DASH).
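As a concrete illustration of MPEG-2 Systems transport streams, the Python sketch below decodes the fixed 4-byte header at the start of each 188-byte transport stream packet. The bit layout follows ISO/IEC 13818-1 as it is commonly documented and should be verified against the published standard; the names `TsHeader` and `parse_ts_header` are hypothetical, and the sketch deliberately ignores adaptation fields and payload parsing.

```python
from dataclasses import dataclass

TS_PACKET_SIZE = 188  # every transport stream packet is 188 bytes long
SYNC_BYTE = 0x47      # first byte of every packet


@dataclass(frozen=True)
class TsHeader:
    transport_error: bool
    payload_unit_start: bool
    transport_priority: bool
    pid: int                       # 13-bit packet identifier
    scrambling_control: int        # 2 bits
    adaptation_field_control: int  # 2 bits: 1 = payload only, 2 = adaptation only, 3 = both
    continuity_counter: int        # 4 bits, increments per PID


def parse_ts_header(packet: bytes) -> TsHeader:
    """Decode the 4-byte header of one transport stream packet (hypothetical helper)."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError(f"expected {TS_PACKET_SIZE} bytes, got {len(packet)}")
    if packet[0] != SYNC_BYTE:
        raise ValueError("missing 0x47 sync byte")
    b1, b2, b3 = packet[1], packet[2], packet[3]
    return TsHeader(
        transport_error=bool(b1 & 0x80),
        payload_unit_start=bool(b1 & 0x40),
        transport_priority=bool(b1 & 0x20),
        pid=((b1 & 0x1F) << 8) | b2,  # low 5 bits of byte 1 + all of byte 2
        scrambling_control=(b3 >> 6) & 0x03,
        adaptation_field_control=(b3 >> 4) & 0x03,
        continuity_counter=b3 & 0x0F,
    )


# A packet with PID 0 and the payload-unit-start flag set, padded to 188 bytes.
pat_packet = bytes([0x47, 0x40, 0x00, 0x10]) + bytes(184)
print(parse_ts_header(pat_packet))
```

PID 0 is reserved for the Program Association Table, so the sample packet stands in for the start of a PAT section; a real demultiplexer would go on to read the adaptation field and payload according to `adaptation_field_control`.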

History

MPEG was established in 1988 on the initiative of Dr. Hiroshi Yasuda (NTT) and Dr. Leonardo Chiariglione (CSELT).[8] Chiariglione chaired the group (the chair is called the Convenor in ISO/IEC terminology) from its inception until June 6, 2020. The first MPEG meeting was held in May 1988 in Ottawa, Canada.[9][10][11]

Since around the time of the MPEG-4 project in the late 1990s, MPEG has typically drawn approximately 300–500 members per meeting from various industries, universities, and research institutions.

The COVID-19 pandemic caused a general shutdown of physical meetings for many standardization groups, starting in 2020. Following the 129th MPEG meeting, held in January 2020 in Brussels,[12] MPEG moved its meetings online as teleconferences, the first of which was the 130th meeting in April 2020.[13]

On June 6, 2020, the MPEG section of Chiariglione's personal website was updated to inform readers that he had retired as Convenor, and he stated that the MPEG group (then SC 29/WG 11) "was closed".[14] Chiariglione described his reasons for stepping down in his personal blog.[15] His decision followed a restructuring process within SC 29 in which "some of the subgroups of WG 11 (MPEG) [became] distinct MPEG working groups (WGs) and advisory groups (AGs)".[3] Prof. Jörn Ostermann of Leibniz University Hannover was appointed Acting Convenor of SC 29/WG 11 during the restructuring period and was then appointed Convenor of SC 29's Advisory Group 2, which coordinates MPEG's overall technical activities. The 131st meeting, held in July 2020, was chaired by Ostermann as Acting Convenor, and the 132nd meeting, in October 2020, was held under the new structure.[16]

The MPEG structure that replaced the former Working Group 11 comprises three Advisory Groups (AGs) and seven Working Groups (WGs):[2]

- SC 29/AG 2: MPEG Technical Coordination (Convenor: Prof. Jörn Ostermann of Leibniz University Hannover, Germany)

- SC 29/AG 3: MPEG Liaison and Communication (Convenor: Prof. Kyuheon Kim of Kyung Hee University, Korea)

- SC 29/AG 5: MPEG Visual Quality Assessment (Convenor: Dr. Mathias Wien of RWTH Aachen University, Germany)

- SC 29/WG 2: MPEG Technical Requirements (Convenor: Dr. Igor Curcio of Nokia, Finland)

- SC 29/WG 3: MPEG Systems (Convenor: Dr. Youngkwon Lim of Samsung, Korea)

- SC 29/WG 4: MPEG Video Coding (Convenor: Prof. Lu Yu of Zhejiang University, China)

- SC 29/WG 5: MPEG Joint Video Coding Team with ITU-T SG16 (Convenor: Prof. Jens-Rainer Ohm of RWTH Aachen University, Germany; formerly co-chairing with Dr. Gary Sullivan of Microsoft, United States)

- SC 29/WG 6: MPEG Audio Coding (Convenor: Dr. Schuyler Quackenbush of Audio Research Labs, United States, later succeeded by Thomas Sporer after Quackenbush retired)

- SC 29/WG 7: MPEG 3D Graphics Coding (Convenor: Prof. Marius Preda of Institut Mines-Télécom SudParis, France)

- SC 29/WG 8: MPEG Genomic coding (Convenor: Dr. Marco Mattavelli of EPFL, Switzerland)

MPEG meetings continued to be held approximately quarterly as teleconferences until face-to-face meetings resumed with the 140th meeting, held in Mainz in October 2022.[17] Since then, some meetings have been held face-to-face and others online.

Cooperation with other groups

MPEG-2

MPEG-2 development included a joint project between MPEG and ITU-T Study Group 15 (which later became ITU-T SG16), resulting in the publication of the MPEG-2 Systems standard (ISO/IEC 13818-1, including its transport streams and program streams) as ITU-T H.222.0 and the MPEG-2 Video standard (ISO/IEC 13818-2) as ITU-T H.262. Sakae Okubo (NTT) was the ITU-T coordinator and chaired the agreements on its requirements.

Joint Video Team

The Joint Video Team (JVT) was a joint project between ITU-T SG16/Q.6 (Study Group 16 / Question 6, the Video Coding Experts Group, VCEG) and ISO/IEC JTC 1/SC 29/WG 11 (MPEG) to develop a video coding standard published both as an ITU-T Recommendation and as an ISO/IEC International Standard.[4][18] It was formed in 2001, and its main result was H.264/MPEG-4 AVC (MPEG-4 Part 10), which reduces the data rate needed for video of comparable quality by about 50% compared to the then-current ITU-T H.262 / MPEG-2 standard.[19] The JVT was chaired by Dr. Gary Sullivan, with vice-chairs Dr. Thomas Wiegand of the Heinrich Hertz Institute in Germany and Dr. Ajay Luthra of Motorola in the United States.

Joint Collaborative Team on Video Coding

The Joint Collaborative Team on Video Coding (JCT-VC) was a group of video coding experts from ITU-T Study Group 16 (VCEG) and ISO/IEC JTC 1/SC 29/WG 11 (MPEG). It was created in 2010 to develop High Efficiency Video Coding (HEVC, MPEG-H Part 2, ITU-T H.265), a video coding standard that further reduces the data rate required for video coding by about 50% compared to the then-current ITU-T H.264 / ISO/IEC 14496-10 standard.[20][21] JCT-VC was co-chaired by Prof. Jens-Rainer Ohm and Gary Sullivan.

Joint Video Experts Team

The Joint Video Experts Team (JVET) is a joint group of video coding experts from ITU-T Study Group 16 (VCEG) and ISO/IEC JTC 1/SC 29/WG 11 (MPEG), created in 2017 after an exploration phase that began in 2015.[22] JVET developed Versatile Video Coding (VVC, MPEG-I Part 3, ITU-T H.266), completed in July 2020, which further reduces the data rate for video coding by about 50% compared to the then-current ITU-T H.265 / HEVC standard. The JCT-VC was merged into JVET in July 2020. Like JCT-VC, JVET was co-chaired by Jens-Rainer Ohm and Gary Sullivan until July 2021, when Ohm became the sole chair (after Sullivan became the chair of SC 29).
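Each of the three joint teams is credited with a saving of about 50% relative to the previous generation (H.264 versus H.262, HEVC versus H.264, VVC versus HEVC). The Python sketch below compounds those figures for a hypothetical 8 Mbit/s MPEG-2 stream. It assumes the savings multiply cleanly from one generation to the next, which real encoders and content do not guarantee, so the result is only a rough order-of-magnitude estimate, not a measured figure.

```python
# Approximate per-generation savings quoted in the text; None marks the baseline.
GENERATIONS = [
    ("H.262 / MPEG-2 Video", None),
    ("H.264 / MPEG-4 AVC", 0.50),
    ("H.265 / HEVC", 0.50),
    ("H.266 / VVC", 0.50),
]


def estimated_bitrates(mpeg2_bitrate_mbps):
    """Compound the quoted savings, assuming (idealistically) that they multiply."""
    rate = mpeg2_bitrate_mbps
    estimates = []
    for name, saving in GENERATIONS:
        if saving is not None:
            rate *= 1.0 - saving  # each generation needs about half the previous rate
        estimates.append((name, rate))
    return estimates


# Hypothetical starting point: an 8 Mbit/s MPEG-2 stream.
for name, mbps in estimated_bitrates(8.0):
    print(f"{name}: ~{mbps:g} Mbit/s")
```

Under this idealization, VVC would need roughly one eighth of the MPEG-2 bit rate for similar quality; actual gains vary with content, resolution, and encoder settings.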

MPEG Industry Forum

The MPEG Industry Forum (MPEGIF) was a non-profit consortium dedicated to furthering "the adoption of MPEG Standards, by establishing them as well accepted and widely used standards among creators of content, developers, manufacturers, providers of services, and end users".[23] It was formed in 2000 and dissolved in 2012 after H.264 became the de facto video compression standard.[24]

The group was involved in many tasks, including promotion of MPEG standards (particularly MPEG-4, MPEG-4 AVC / H.264, MPEG-7, and MPEG-21); developing MPEG certification for products; organizing educational events; and developing new MPEG standards. In June 2012, the MPEG Industry Forum officially "declared victory" and voted to close its operation and merge its remaining assets with those of the Open IPTV Forum.[24]

Standards

The MPEG standards consist of different Parts, each covering a certain aspect of the whole specification.[25] The standards also specify profiles and levels. Profiles define the set of coding tools that are available, and levels define the range of permitted values for the associated properties, such as picture size and bit rate.[26] Some of the approved MPEG standards were revised by later amendments and/or new editions.
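To make the profile/level distinction concrete, the Python sketch below treats a profile as a set of permitted tools and a level as numeric limits on maximum picture size and maximum macroblock rate. The tool names and the two toy profiles are simplified and hypothetical; the limits for Levels 3.1 and 4 are assumed from the H.264 level table and should be verified against the published specification. Real levels also constrain bit rate, buffer sizes, and other properties that are omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """A profile: the set of coding tools a conforming bitstream may use."""
    name: str
    tools: frozenset


@dataclass(frozen=True)
class Level:
    """A level: numeric limits on properties of a conforming bitstream."""
    name: str
    max_frame_size_mbs: int  # largest picture, in 16x16 macroblocks
    max_mbs_per_second: int  # macroblock processing rate


def macroblock_count(width, height):
    # Round each dimension up to a whole number of 16x16 macroblocks.
    return ((width + 15) // 16) * ((height + 15) // 16)


def conforms(stream_tools, width, height, fps, profile, level):
    """True if the stream uses only the profile's tools and stays within the level."""
    if not set(stream_tools) <= profile.tools:
        return False  # uses a tool the profile does not allow
    frame_mbs = macroblock_count(width, height)
    return (frame_mbs <= level.max_frame_size_mbs
            and frame_mbs * fps <= level.max_mbs_per_second)


# Hypothetical, simplified profiles; limits assumed from the H.264 level table.
BASELINE_LIKE = Profile("Baseline-like", frozenset({"i_slices", "p_slices", "cavlc"}))
MAIN_LIKE = Profile("Main-like", frozenset({"i_slices", "p_slices", "b_slices", "cavlc", "cabac"}))
LEVEL_3_1 = Level("3.1", 3600, 108_000)
LEVEL_4 = Level("4", 8192, 245_760)

print(conforms({"i_slices", "p_slices", "cabac"}, 1280, 720, 30, MAIN_LIKE, LEVEL_3_1))
```

Keeping the two checks separate mirrors the text: a stream can fail a profile (wrong tools) independently of failing a level (picture too large or frame rate too high).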

The primary early MPEG compression formats and related standards include:[27]

- MPEG-1 (1993): Coding of moving pictures and associated audio for digital storage media at up to about 1.5 Mbit/s (ISO/IEC 11172). This initial version is a lossy compression format and the first MPEG compression standard for audio and video. It is commonly limited to about 1.5 Mbit/s, although the specification is capable of much higher bit rates. It was designed to allow moving pictures and sound to be encoded at the bit rate of a compact disc. It is used on Video CD and can be used for low-quality video on DVD-Video. It was used in digital satellite and cable TV services before MPEG-2 became widespread. To meet the low bit-rate requirement, MPEG-1 downsamples the images and uses picture rates of only 24–30 Hz, resulting in moderate quality.[28] It includes the popular MPEG-1 Audio Layer III (MP3) audio compression format.

- MPEG-2 (1996): Generic coding of moving pictures and associated audio information (ISO/IEC 13818). Transport, video, and audio standards for broadcast-quality television. The MPEG-2 standard was considerably broader in scope and appeal, supporting interlacing and high definition. MPEG-2 is considered important because it was chosen as the compression scheme for over-the-air digital television (ATSC, DVB, and ISDB), digital satellite TV services such as Dish Network, digital cable television signals, SVCD, and DVD-Video.[28] It is also used on Blu-ray Discs, although these normally use MPEG-4 Part 10 or SMPTE VC-1 for high-definition content.

- MPEG-4 (1998): Coding of audio-visual objects. (ISO/IEC 14496) MPEG-4 provides a framework for more advanced compression algorithms, potentially resulting in higher compression ratios than MPEG-2 at the cost of higher computational requirements. MPEG-4 also supports Intellectual Property Management and Protection (IPMP), which provides the facility to use proprietary technologies to manage and protect content, such as digital rights management.[29] It also supports MPEG-J, a fully programmatic solution for creating custom interactive multimedia applications (a Java application environment with a Java API), and many other features.[30][31][32] Two new higher-efficiency video coding standards (newer than MPEG-2 Video) are included:

  - MPEG-4 Part 2 (including its Simple and Advanced Simple profiles) and

  - MPEG-4 AVC (MPEG-4 Part 10 or ITU-T H.264, 2003). MPEG-4 AVC may be used on HD DVD and Blu-ray Discs, along with VC-1 and MPEG-2.

MPEG-4 AVC was chosen as the video compression scheme for over-the-air television broadcasting in Brazil (ISDB-TB), based on the digital television system of Japan (ISDB-T).[33]
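The MPEG-1 entry above says the standard targets about 1.5 Mbit/s and downsamples pictures to get there. A short Python calculation shows the scale of the problem: it computes the uncompressed bit rate of 8-bit 4:2:0 video and the compression ratio needed to reach 1.5 Mbit/s. The 352×240 (SIF) and 720×480 frame sizes and the 30 Hz picture rate are assumptions chosen for illustration, and the ratio ignores the share of the budget taken by audio.

```python
def raw_420_bitrate(width, height, fps, bits_per_sample=8):
    """Uncompressed bit rate (bit/s) of 4:2:0 video with the given dimensions."""
    luma = width * height
    # 4:2:0: two chroma planes, each halved horizontally and vertically.
    chroma = 2 * (width // 2) * (height // 2)
    return (luma + chroma) * bits_per_sample * fps


TARGET_BPS = 1_500_000  # "up to about 1.5 Mbit/s", per the MPEG-1 title

# Assumed frame sizes: a downsampled SIF picture vs. a full standard-definition one.
FRAME_SIZES = {"SIF 352x240": (352, 240), "SD 720x480": (720, 480)}

for label, (w, h) in FRAME_SIZES.items():
    raw = raw_420_bitrate(w, h, 30)
    print(f"{label}: {raw / 1e6:.1f} Mbit/s raw, about {raw / TARGET_BPS:.0f}:1 compression needed")
```

With these assumptions the downsampled SIF picture needs roughly a 20:1 reduction, while a full 720×480 picture would need roughly 83:1, which illustrates why MPEG-1 reduces the picture size before coding.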

An MPEG-3 project was cancelled. MPEG-3 was planned to deal with standardizing scalable and multi-resolution compression[28] and was intended for HDTV compression, but was found to be unnecessary and was merged with MPEG-2; as a result there is no MPEG-3 standard.[28][34] The cancelled MPEG-3 project is not to be confused with MP3, which is MPEG-1 or MPEG-2 Audio Layer III.

In addition, the following standards, while not sequential advances to the video encoding standard as with MPEG-1 through MPEG-4, are referred to by similar notation:

- MPEG-7 (2002): Multimedia content description interface. (ISO/IEC 15938)

- MPEG-21 (2001): Multimedia framework (MPEG-21). (ISO/IEC 21000) MPEG describes this standard as a multimedia framework; it provides for intellectual property management and protection.

More recently than the standards above, MPEG has produced the following international standards, each of which contains multiple MPEG technologies for a variety of applications.[35][36][37][38][39] (For example, MPEG-A includes a number of technologies for multimedia application formats.)

- MPEG-A (2007): Multimedia application format (MPEG-A). (ISO/IEC 23000) (e.g., an explanation of the purpose for multimedia application formats,[40] MPEG music player application format, MPEG photo player application format and others)

- MPEG-B (2006): MPEG systems technologies. (ISO/IEC 23001) (e.g., Binary MPEG format for XML,[41] Fragment Request Units (FRUs), Bitstream Syntax Description Language (BSDL), MPEG Common Encryption and others)

- MPEG-C (2006): MPEG video technologies. (ISO/IEC 23002) (e.g., accuracy requirements for implementation of integer-output 8x8 inverse discrete cosine transform[42] and others)

- MPEG-D (2007): MPEG audio technologies. (ISO/IEC 23003) (e.g., MPEG Surround,[43] SAOC-Spatial Audio Object Coding and USAC-Unified Speech and Audio Coding)

- MPEG-E (2007): Multimedia Middleware. (ISO/IEC 23004) (a.k.a. M3W) (e.g., architecture,[44] multimedia application programming interface (API), component model and others)

- MPEG-G (2019): Genomic Information Representation. (ISO/IEC 23092) Parts 1–6 for transport and storage, coding, metadata and APIs, reference software, conformance, and annotations

- Supplemental media technologies (2008, later replaced and withdrawn). (ISO/IEC 29116) It had one published part, media streaming application format protocols, which was later replaced and revised as MPEG-M Part 4, MPEG extensible middleware (MXM) protocols.[45]

- MPEG-V (2011): Media context and control. (ISO/IEC 23005) (a.k.a. Information exchange with Virtual Worlds)[46][47] (e.g., Avatar characteristics, Sensor information, Architecture[48][49] and others)

- MPEG-M (2010): MPEG eXtensible Middleware (MXM). (ISO/IEC 23006)[50][51][52] (e.g., MXM architecture and technologies,[53] API, and MPEG extensible middleware (MXM) protocols[54])

- MPEG-U (2010): Rich media user interfaces. (ISO/IEC 23007)[55][56] (e.g., Widgets)

- MPEG-H (2013): High Efficiency Coding and Media Delivery in Heterogeneous Environments. (ISO/IEC 23008) Part 1 – MPEG media transport; Part 2 – High Efficiency Video Coding (HEVC, ITU-T H.265); Part 3 – 3D Audio.

- MPEG-DASH (2012): Information technology – Dynamic adaptive streaming over HTTP (DASH). (ISO/IEC 23009) Part 1 – Media presentation description and segment formats

- MPEG-I (2020): Coded Representation of Immersive Media[57] (ISO/IEC 23090), including Part 2 Omnidirectional Media Format (OMAF) and Part 3 – Versatile Video Coding (VVC, ITU-T H.266)

- MPEG-CICP (2018): Coding-Independent Code Points (CICP). (ISO/IEC 23091) Parts 1–4 for systems, video, audio, and usage of video code points
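MPEG-DASH Part 1 defines the media presentation description (MPD) and segment formats, but it leaves the choice of which representation to fetch to the client. The Python sketch below parses a minimal, hand-written MPD with the standard library's `xml.etree.ElementTree` and applies a simple throughput rule. The sample manifest, the representation IDs, the helper names, and the 0.8 safety factor are all hypothetical; real players use more elaborate buffer- and throughput-based heuristics.

```python
import xml.etree.ElementTree as ET

MPD_NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Hypothetical minimal manifest: one video adaptation set with three bit rates.
SAMPLE_MPD = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     mediaPresentationDuration="PT10S" minBufferTime="PT2S"
     profiles="urn:mpeg:dash:profile:isoff-on-demand:2011">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <Representation id="360p" bandwidth="800000" width="640" height="360"/>
      <Representation id="720p" bandwidth="3000000" width="1280" height="720"/>
      <Representation id="1080p" bandwidth="6000000" width="1920" height="1080"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def representations(mpd_xml):
    """Return (id, bandwidth in bit/s) pairs, sorted from lowest to highest bandwidth."""
    root = ET.fromstring(mpd_xml)
    reps = [(rep.get("id"), int(rep.get("bandwidth")))
            for rep in root.iterfind(".//mpd:Representation", MPD_NS)]
    return sorted(reps, key=lambda r: r[1])


def choose_representation(reps, throughput_bps, safety=0.8):
    """Pick the highest bandwidth that fits within a fraction of measured throughput."""
    if not reps:
        raise ValueError("no representations to choose from")
    budget = throughput_bps * safety
    chosen = reps[0][0]  # fall back to the lowest bit rate if nothing fits
    for rep_id, bandwidth in reps:
        if bandwidth <= budget:
            chosen = rep_id
    return chosen


reps = representations(SAMPLE_MPD)
print(choose_representation(reps, throughput_bps=5_000_000))
```

Re-running the choice before each segment request, as throughput estimates change, is what lets a DASH client switch bit rates mid-stream.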

| Abbreviation for group of standards | Title | ISO/IEC standard series number | First public release date (first edition) | Description |
|---|---|---|---|---|
| MPEG-1 | Coding of Moving Pictures and Associated Audio for Digital Storage Media at up to about 1.5 Mbit/s | ISO/IEC 11172 | 1993 | Although the title focuses on bit rates of 1.5 Mbit/s and lower, the standard is also capable of higher bit rates. |
| MPEG-2 | Generic Coding of Moving Pictures and Associated Audio Information | ISO/IEC 13818 | 1995 | |
| MPEG-3 | N/A | N/A | N/A | Abandoned as unnecessary; requirements incorporated into MPEG-2 |
| MPEG-4 | Coding of Audio-Visual Objects | ISO/IEC 14496 | 1999 | |
| MPEG-7 | Multimedia Content Description Interface | ISO/IEC 15938 | 2002 | |
| MPEG-21 | Multimedia Framework | ISO/IEC 21000 | 2001 | |
| MPEG-A | Multimedia Application Format | ISO/IEC 23000 | 2007 | |
| MPEG-B | MPEG Systems Technologies | ISO/IEC 23001 | 2006 | |
| MPEG-C | MPEG Video Technologies | ISO/IEC 23002 | 2006 | |
| MPEG-D | MPEG Audio Technologies | ISO/IEC 23003 | 2007 | |
| MPEG-E | Multimedia Middleware | ISO/IEC 23004 | 2007 | |
| MPEG-V | Media Context and Control | ISO/IEC 23005[48] | 2011 | |
| MPEG-M | MPEG eXtensible Middleware (MXM) | ISO/IEC 23006[53] | 2010 | |
| MPEG-U | Rich Media User Interfaces | ISO/IEC 23007[55] | 2010 | |
| MPEG-H | High Efficiency Coding and Media Delivery in Heterogeneous Environments | ISO/IEC 23008[60] | 2013 | |
| MPEG-DASH | Dynamic Adaptive Streaming over HTTP | ISO/IEC 23009 | 2012 | |
| MPEG-I | Coded Representation of Immersive Media | ISO/IEC 23090 | 2020 | |
| MPEG-CICP | Coding-Independent Code Points | ISO/IEC 23091 | 2018 | Originally part of MPEG-B |
| MPEG-G | Genomic Information Representation | ISO/IEC 23092 | 2019 | |
| MPEG-IoMT | Internet of Media Things | ISO/IEC 23093[61] | 2019 | |
| MPEG-5 | General Video Coding | ISO/IEC 23094 | 2020 | Essential Video Coding (EVC) and Low-Complexity Enhancement Video Coding (LCEVC) |
| (none) | Supplemental Media Technologies | ISO/IEC 29116 | 2008 | Withdrawn and replaced by MPEG-M Part 4 – MPEG extensible middleware (MXM) protocols |

Standardization process

A standard published by ISO/IEC is the outcome of the last stage of an approval process that begins with a proposal for new work within a committee. Stages of the standard development process include:[9][62][63][64][65][66]

- NP or NWIP – New Project or New Work Item Proposal

- AWI – Approved Work Item

- WD – Working Draft

- CD or CDAM – Committee Draft or Committee Draft Amendment

- DIS or DAM – Draft International Standard or Draft Amendment

- FDIS or FDAM – Final Draft International Standard or Final Draft Amendment

- IS or AMD – International Standard or Amendment

Other abbreviations:

- DTR – Draft Technical Report (for information)

- TR – Technical Report

- DCOR – Draft Technical Corrigendum (for corrections)

- COR – Technical Corrigendum

A proposal of work (New Proposal) is approved at the Subcommittee level and then at the Technical Committee level (SC 29 and JTC 1, respectively, in the case of MPEG). When the scope of the new work is sufficiently clear, MPEG usually issues open "calls for proposals". For audio and video coding standards, the first document produced is typically called a test model. When sufficient confidence in the stability of the standard under development is reached, a Working Draft (WD) is produced. When a WD is sufficiently solid (typically after several numbered WDs), the next draft is issued as a Committee Draft (CD), usually at the planned time, and is sent to National Bodies (NBs) for comment. When consensus is reached to proceed to the next stage, the draft becomes a Draft International Standard (DIS) and is sent for another ballot. After the NBs have reviewed and commented on the DIS and the working group has resolved their comments, a Final Draft International Standard (FDIS) is typically issued for a final approval ballot. National Bodies vote on the FDIS with no technical changes allowed (a yes/no approval ballot); if it is approved, the document becomes an International Standard (IS). When the text is considered sufficiently mature, the WD, CD, and/or FDIS stages can be skipped. The development of a standard is complete once the FDIS has been issued, since the FDIS stage serves only for final approval; in practice, the FDIS ballot for MPEG standards has always resulted in approval.[9]
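The stage sequence described above can be modelled as an ordered list with optional skips. The Python sketch below encodes the main-track stages from the list (the amendment, technical report, and corrigendum tracks are omitted) together with the rule that the WD, CD, and/or FDIS stages may be skipped. The names `Stage`, `development_path`, and `next_stage` are hypothetical, and ballot procedures and approval thresholds are deliberately not modelled.

```python
from enum import Enum


class Stage(Enum):
    """Main-track stages from the list above, in order (amendment stages omitted)."""
    NP = "New Work Item Proposal"
    AWI = "Approved Work Item"
    WD = "Working Draft"
    CD = "Committee Draft"
    DIS = "Draft International Standard"
    FDIS = "Final Draft International Standard"
    IS = "International Standard"


MAIN_TRACK = list(Stage)  # Enum iteration preserves definition order

# Per the text, a sufficiently mature text may skip the WD, CD, and/or FDIS stages.
SKIPPABLE = frozenset({Stage.WD, Stage.CD, Stage.FDIS})


def development_path(skip=frozenset()):
    """Return the ordered stages a project passes through, omitting skipped ones."""
    invalid = set(skip) - SKIPPABLE
    if invalid:
        names = ", ".join(sorted(s.name for s in invalid))
        raise ValueError(f"stages cannot be skipped: {names}")
    return [stage for stage in MAIN_TRACK if stage not in skip]


def next_stage(current, skip=frozenset()):
    """Stage after `current`, or None once the IS stage is reached.

    Raises ValueError if `current` is itself one of the skipped stages.
    """
    path = development_path(skip)
    i = path.index(current)
    return path[i + 1] if i + 1 < len(path) else None


# A mature text that goes straight from DIS to publication.
print([s.name for s in development_path(skip={Stage.FDIS})])
```

Modelling skips as a filter over a fixed order, rather than as arbitrary jumps, keeps the invariant from the text that stages are never reordered, only omitted.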

See also

- Video Coding Experts Group – Working group of ITU-T (VCEG)

- Joint Photographic Experts Group – Standard organisation for image formats (JPEG)

- Joint Bi-level Image Experts Group – Standard organisation for image formats (JBIG)

- Multimedia and Hypermedia information coding Expert Group – Set of international standards (MHEG)

- Alliance for Open Media – Non-profit industry consortium (AOMedia)

- Audio codec – Device or program that encodes/decodes audio data in some bitstream format

- Audio coding format – Digitally coded format for audio signals

- Video codec – Digital video coder/decoder

- Video coding format – Format for digital video content

- Video quality – Perceived video degradation

- Video compression – Compact encoding of digital data

- MP3 – Digital audio format

Notes

1. John Watkinson, The MPEG Handbook, p. 1
2. "ISO/IEC JTC 1/SC 29: Coding of audio, picture, multimedia and hypermedia information". ISO/IEC JTC 1. Retrieved 2020-11-14.
3. "Future of SC 29 with JPEG and MPEG". ISO/IEC JTC 1. 2020-06-24. Retrieved 2020-11-14.
4. ISO, IEC (2009-11-05). "ISO/IEC JTC 1/SC 29, SC 29/WG 11 Structure (ISO/IEC JTC 1/SC 29/WG 11 – Coding of Moving Pictures and Audio)". Archived from the original on 2001-01-28. Retrieved 2009-11-07.
5. MPEG Committee. "MPEG – Moving Picture Experts Group". Archived from the original on 2008-01-10. Retrieved 2009-11-07.
6. ISO. "MPEG Standards – Coded representation of video and audio". Archived from the original on 2011-05-14. Retrieved 2009-11-07.
7. ISO. "JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information". Retrieved 2009-11-11.
8. Musmann, Hans Georg. Genesis of the MP3 Audio Coding Standard (PDF). Archived from the original (PDF) on 2012-01-17. Retrieved 2011-07-26.
9. "About MPEG". chiariglione.org. Archived from the original on 2010-02-21. Retrieved 2009-12-13.
10. "MPEG Meetings". chiariglione.org. Archived from the original on 2011-07-25. Retrieved 2009-12-13.
11. chiariglione.org (2009-09-06). "Riding the Media Bits, The Faultline". Archived from the original on 2011-07-25. Retrieved 2010-02-09.
12. https://www.mpeg.org/meetings/mpeg-129/
13. https://www.mpeg.org/meetings/mpeg-130/
14. "MPEG | The Moving Picture Experts Group website". mpeg.chiariglione.org. Retrieved 2020-07-01.
15. Chiariglione, Leonardo (2020-06-06). "A future without MPEG". Leonardo Chiariglione Blog. Retrieved 2020-07-01.
16. https://www.mpeg.org/meetings/mpeg-132/
17. https://www.mpeg.org/meetings/mpeg-140/
18. "ITU-T and ISO/IEC to produce next generation video coding standard". 2002-02-08. Retrieved 2010-03-08.
19. ITU-T. "Joint Video Team". Retrieved 2010-03-07.
20. ITU-T (January 2010). "Final joint call for proposals for next-generation video coding standardization". Retrieved 2010-03-07.
21. ITU-T. "Joint Collaborative Team on Video Coding – JCT-VC". Retrieved 2010-03-07.
22. "JVET – Joint Video Experts Team". ITU. Retrieved 2021-09-29.
23. "MPEG-4 Industry Forum". mpegif.org. Retrieved 2025-08-26.
24. McAdams, Deborah D. (2012-06-28). "MPEG Industry Forum Folded". TV Tech. Retrieved 2025-06-30.
25. Understanding MPEG-4, p. 78
26. Wootton, Cliff. A Practical Guide to Video and Audio Compression. p. 665.
27. Ghanbari, Mohammed (2003). Standard Codecs: Image Compression to Advanced Video Coding. Institution of Engineering and Technology. pp. 1–2. ISBN 9780852967102.
28. The MPEG Handbook, p. 4
29. Understanding MPEG-4, p. 83
30. "MPEG-J White Paper". July 2005. Archived from the original on 2018-09-22. Retrieved 2010-04-11.
31. "MPEG-J GFX white paper". July 2005. Archived from the original on 2018-09-22. Retrieved 2010-04-11.
32. ISO. "ISO/IEC 14496-21:2006 – Information technology – Coding of audio-visual objects – Part 21: MPEG-J Graphics Framework eXtensions (GFX)". ISO. Retrieved 2009-10-30.
33. Fórum SBTVD. "O que é o ISDB-TB" ("What is ISDB-TB"). Archived from the original on 2011-08-25. Retrieved 2012-06-02.
34. Salomon, David (2007). "Video Compression". Data compression: the complete reference (4th ed.). Springer. p. 676. ISBN 978-1-84628-602-5.
35. "MPEG – The Moving Picture Experts Group website".
36. MPEG. "About MPEG – Achievements". chiariglione.org. Archived from the original on 2008-07-08. Retrieved 2009-10-31.
37. MPEG. "Terms of Reference". chiariglione.org. Archived from the original on 2010-02-21. Retrieved 2009-10-31.
38. MPEG. "MPEG standards – Full list of standards developed or under development". chiariglione.org. Archived from the original on 2010-04-20. Retrieved 2009-10-31.
39. MPEG. "MPEG technologies". chiariglione.org. Archived from the original on 2010-02-21. Retrieved 2009-10-31.
40. ISO. "ISO/IEC TR 23000-1:2007 – Information technology – Multimedia application format (MPEG-A) – Part 1: Purpose for multimedia application formats". Retrieved 2009-10-31.
41. ISO. "ISO/IEC 23001-1:2006 – Information technology – MPEG systems technologies – Part 1: Binary MPEG format for XML". Retrieved 2009-10-31.
42. ISO. "ISO/IEC 23002-1:2006 – Information technology – MPEG video technologies – Part 1: Accuracy requirements for implementation of integer-output 8x8 inverse discrete cosine transform". Retrieved 2009-10-31.
43. ISO. "ISO/IEC 23003-1:2007 – Information technology – MPEG audio technologies – Part 1: MPEG Surround". Retrieved 2009-10-31.
44. ISO. "ISO/IEC 23004-1:2007 – Information technology – Multimedia Middleware – Part 1: Architecture". Retrieved 2009-10-31.
45. ISO. "ISO/IEC 29116-1:2008 – Information technology – Supplemental media technologies – Part 1: Media streaming application format protocols". Retrieved 2009-11-07.
46. ISO/IEC JTC 1/SC 29 (2009-10-30). "MPEG-V (Media context and control)". Archived from the original on 2013-12-31. Retrieved 2009-11-01.
47. MPEG. "Working documents – MPEG-V (Information Exchange with Virtual Worlds)". chiariglione.org. Archived from the original on 2010-02-21. Retrieved 2009-11-01.
48. ISO. "ISO/IEC FDIS 23005-1 – Information technology – Media context and control – Part 1: Architecture". Retrieved 2011-01-28.
49. Timmerer, Christian; Gelissen, Jean; Waltl, Markus; Hellwagner, Hermann. Interfacing with Virtual Worlds (PDF). Archived from the original (PDF) on 2010-12-15. Retrieved 2009-12-29.
50. ISO/IEC JTC 1/SC 29 (2009-10-30). "MPEG-M (MPEG extensible middleware (MXM))". Archived from the original on 2013-12-31. Retrieved 2009-11-01.
51. MPEG. "MPEG Extensible Middleware (MXM)". Archived from the original on 2009-09-25. Retrieved 2009-11-04.
52. JTC 1/SC 29/WG 11 (October 2008). "MPEG eXtensible Middleware Vision". ISO. Archived from the original on 2010-02-10. Retrieved 2009-11-05.
53. ISO. "ISO/IEC FCD 23006-1 – Information technology – MPEG extensible middleware (MXM) – Part 1: MXM architecture and technologies". Retrieved 2009-10-31.
54. ISO. "ISO/IEC 23006-4 – Information technology – MPEG extensible middleware (MXM) – Part 4: MPEG extensible middleware (MXM) protocols". Retrieved 2011-01-28.
55. ISO. "ISO/IEC 23007-1 – Information technology – Rich media user interfaces – Part 1: Widgets". Retrieved 2011-01-28.
56. JTC 1/SC 29 (2009-10-30). "MPEG-U (Rich media user interfaces)". Archived from the original on 2013-12-31. Retrieved 2009-11-01.
57. "Mpeg-I | Mpeg".
58. JTC 1/SC 29 (2009-11-05). "Programme of Work (Allocated to SC 29/WG 11)". Archived from the original on 2013-12-31. Retrieved 2009-11-07.
59. ISO. "JTC 1/SC 29 – Coding of audio, picture, multimedia and hypermedia information". Retrieved 2009-11-07.
60. "ISO/IEC 23008-2:2013". International Organization for Standardization. 2013-11-25. Retrieved 2013-11-29.
61. Naden, Clare (2019-11-26). "Internet of Media Things to Take Off with New Series of International Standards". ISO. Retrieved 2021-11-11.
62. ISO. "International harmonized stage codes". Retrieved 2009-12-31.
63. ISO. "Stages of the development of International Standards". Retrieved 2009-12-31.
64. "The ISO27k FAQ – ISO/IEC acronyms and committees". IsecT Ltd. Retrieved 2009-12-31.
65. ISO (2007). "ISO/IEC Directives Supplement – Procedures specific to ISO" (PDF). Retrieved 2009-12-31.
66. ISO (2007). "List of abbreviations used throughout ISO Online". Retrieved 2009-12-31.

External links

Moving Picture Experts Group
