Advanced Video Coding

| Advanced Video Coding / H.264 / MPEG-4 Part 10 | |
|---|---|
| Advanced video coding for generic audiovisual services | |
| Status | In force |
| Year started | 2003 |
| First published | 17 August 2004 |
| Latest version | 15.0 (13 August 2024) |
| Organization | ITU-T, ISO, IEC |
| Committee | SG16 (VCEG), MPEG |
| Base standards | H.261, H.262 (aka MPEG-2 Video), H.263, ISO/IEC 14496-2 (aka MPEG-4 Part 2) |
| Related standards | H.265 (aka HEVC), H.266 (aka VVC) |
| Predecessor | H.263 |
| Successor | H.265 |
| Domain | Video compression |
| License | MPEG LA[1] |
| Website | www |

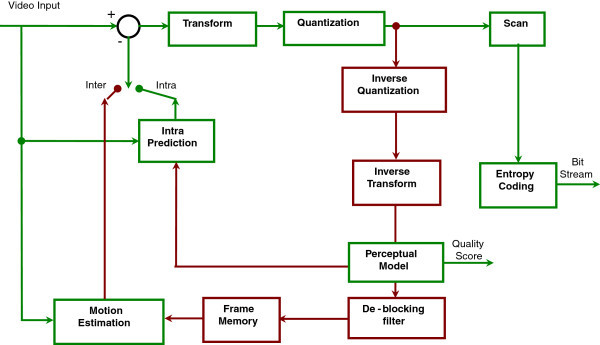

Advanced Video Coding (AVC), also referred to as H.264 or MPEG-4 Part 10, is a video compression standard based on block-oriented, motion-compensated coding.[2] It is by far the most commonly used format for the recording, compression, and distribution of video content, used by 79% of video industry developers as of December 2024.[3] It supports a maximum resolution of 8K UHD.[4][5]

The intent of the H.264/AVC project was to create a standard capable of providing good video quality at substantially lower bit rates than previous standards (i.e., half or less the bit rate of MPEG-2, H.263, or MPEG-4 Part 2), without increasing the complexity of design so much that it would be impractical or excessively expensive to implement. This was achieved with features such as a reduced-complexity integer discrete cosine transform (integer DCT),[6] variable block-size segmentation, and multi-picture inter-picture prediction. An additional goal was to provide enough flexibility to allow the standard to be applied to a wide variety of applications on a wide variety of networks and systems, including low and high bit rates, low and high resolution video, broadcast, DVD storage, RTP/IP packet networks, and ITU-T multimedia telephony systems. The H.264 standard can be viewed as a "family of standards" composed of a number of different profiles, although its "High profile" is by far the most commonly used format. A specific decoder decodes at least one, but not necessarily all profiles. The standard describes the format of the encoded data and how the data is decoded, but it does not specify algorithms for encoding—that is left open as a matter for encoder designers to select for themselves, and a wide variety of encoding schemes have been developed. H.264 is typically used for lossy compression, although it is also possible to create truly lossless-coded regions within lossy-coded pictures or to support rare use cases for which the entire encoding is lossless.

H.264 was standardized by the ITU-T Video Coding Experts Group (VCEG) of Study Group 16 together with the ISO/IEC JTC 1 Moving Picture Experts Group (MPEG). The project partnership effort is known as the Joint Video Team (JVT). The ITU-T H.264 standard and the ISO/IEC MPEG-4 AVC standard (formally, ISO/IEC 14496-10 – MPEG-4 Part 10, Advanced Video Coding) are jointly maintained so that they have identical technical content. The final drafting work on the first version of the standard was completed in May 2003, and various extensions of its capabilities have been added in subsequent editions. High Efficiency Video Coding (HEVC), a.k.a. H.265 and MPEG-H Part 2, is a successor to H.264/MPEG-4 AVC developed by the same organizations, while earlier standards are still in common use.

H.264 is perhaps best known as being the most commonly used video encoding format on Blu-ray Discs. It is also widely used by streaming Internet sources, such as videos from Netflix, Hulu, Amazon Prime Video, Vimeo, YouTube (more recently transitioning to VP9 and AV1), and the iTunes Store; by Web software such as the Adobe Flash Player and Microsoft Silverlight; and by various HDTV broadcasts over terrestrial (ATSC, ISDB-T, DVB-T or DVB-T2), cable (DVB-C), and satellite (DVB-S and DVB-S2) systems.

H.264 is restricted by patents owned by various parties. A license covering most (but not all) patents essential to H.264 is offered through a patent pool formerly administered by MPEG LA. Via Licensing Corp acquired MPEG LA in April 2023 and formed a new patent pool administration company called Via Licensing Alliance.[7] The commercial use of patented H.264 technologies requires the payment of royalties to Via and other patent owners. MPEG LA has allowed the free use of H.264 technologies for streaming Internet video that is free to end users, and Cisco paid royalties to MPEG LA on behalf of the users of binaries for its open source H.264 encoder openH264.

Naming

The H.264 name follows the ITU-T naming convention, where Recommendations are given a letter corresponding to their series and a recommendation number within the series. H.264 is part of "H-Series Recommendations: Audiovisual and multimedia systems". H.264 is further categorized into "H.200-H.499: Infrastructure of audiovisual services" and "H.260-H.279: Coding of moving video".[8] The MPEG-4 AVC name relates to the naming convention in ISO/IEC MPEG, where the standard is part 10 of ISO/IEC 14496, which is the suite of standards known as MPEG-4. The standard was developed jointly in a partnership of VCEG and MPEG, after earlier development work in the ITU-T as a VCEG project called H.26L. It is thus common to refer to the standard with names such as H.264/AVC, AVC/H.264, H.264/MPEG-4 AVC, or MPEG-4/H.264 AVC, to emphasize the common heritage. Occasionally, it is also referred to as "the JVT codec", in reference to the Joint Video Team (JVT) organization that developed it. (Such partnership and multiple naming is not uncommon. For example, the video compression standard known as MPEG-2 also arose from the partnership between MPEG and the ITU-T, where MPEG-2 video is known to the ITU-T community as H.262.[9]) Some software programs (such as VLC media player) internally identify this standard as AVC1.

History

Overall history

In early 1998, the Video Coding Experts Group (VCEG – ITU-T SG16 Q.6) issued a call for proposals on a project called H.26L, with the target to double the coding efficiency (which means halving the bit rate necessary for a given level of fidelity) in comparison to any other existing video coding standards for a broad variety of applications. VCEG was chaired by Gary Sullivan (Microsoft, formerly PictureTel, U.S.). The first draft design for that new standard was adopted in August 1999. In 2000, Thomas Wiegand (Heinrich Hertz Institute, Germany) became VCEG co-chair.

In December 2001, VCEG and the Moving Picture Experts Group (MPEG – ISO/IEC JTC 1/SC 29/WG 11) formed a Joint Video Team (JVT), with the charter to finalize the video coding standard.[10] Formal approval of the specification came in March 2003. The JVT was chaired by Gary Sullivan, Thomas Wiegand, and Ajay Luthra (Motorola, U.S.; later Arris, U.S.). In July 2004, the Fidelity Range Extensions (FRExt) project was finalized. From January 2005 to November 2007, the JVT worked on an extension of H.264/AVC towards scalability, specified in an annex (Annex G) called Scalable Video Coding (SVC). The JVT management team was extended by Jens-Rainer Ohm (RWTH Aachen University, Germany). From July 2006 to November 2009, the JVT worked on Multiview Video Coding (MVC), an extension of H.264/AVC towards 3D television and limited-range free-viewpoint television. That work included the development of two new profiles of the standard: the Multiview High Profile and the Stereo High Profile.

Throughout the development of the standard, additional messages for containing supplemental enhancement information (SEI) have been developed. SEI messages can contain various types of data that indicate the timing of the video pictures or describe various properties of the coded video or how it can be used or enhanced. SEI messages are also defined that can contain arbitrary user-defined data. SEI messages do not affect the core decoding process, but can indicate how the video is recommended to be post-processed or displayed. Some other high-level properties of the video content are conveyed in video usability information (VUI), such as the indication of the color space for interpretation of the video content. As new color spaces have been developed, such as for high dynamic range and wide color gamut video, additional VUI identifiers have been added to indicate them.

Fidelity range extensions and professional profiles

The standardization of the first version of H.264/AVC was completed in May 2003. In the first project to extend the original standard, the JVT then developed what was called the Fidelity Range Extensions (FRExt). These extensions enabled higher quality video coding by supporting increased sample bit depth precision and higher-resolution color information, including the sampling structures known as Y′CBCR 4:2:2 (a.k.a. YUV 4:2:2) and 4:4:4. Several other features were also included in the FRExt project, such as adding an 8×8 integer discrete cosine transform (integer DCT) with adaptive switching between the 4×4 and 8×8 transforms, encoder-specified perceptual-based quantization weighting matrices, efficient inter-picture lossless coding, and support of additional color spaces. The design work on the FRExt project was completed in July 2004, and the drafting work on them was completed in September 2004.

Five other new profiles (see version 7 below) intended primarily for professional applications were then developed, adding extended-gamut color space support, defining additional aspect ratio indicators, defining two additional types of "supplemental enhancement information" (post-filter hint and tone mapping), and deprecating one of the prior FRExt profiles (the High 4:4:4 profile) that industry feedback indicated should have been designed differently.

Scalable video coding

The next major feature added to the standard was Scalable Video Coding (SVC). Specified in Annex G of H.264/AVC, SVC allows the construction of bitstreams that contain layers of sub-bitstreams that also conform to the standard, including one such bitstream known as the "base layer" that can be decoded by an H.264/AVC codec that does not support SVC. For temporal bitstream scalability (i.e., the presence of a sub-bitstream with a smaller temporal sampling rate than the main bitstream), complete access units are removed from the bitstream when deriving the sub-bitstream. In this case, high-level syntax and inter-prediction reference pictures in the bitstream are constructed accordingly. On the other hand, for spatial and quality bitstream scalability (i.e., the presence of a sub-bitstream with lower spatial resolution/quality than the main bitstream), NAL (Network Abstraction Layer) units are removed from the bitstream when deriving the sub-bitstream. In this case, inter-layer prediction (i.e., the prediction of the higher spatial resolution/quality signal from the data of the lower spatial resolution/quality signal) is typically used for efficient coding. The Scalable Video Coding extensions were completed in November 2007.
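The temporal case described above can be illustrated with a minimal sketch. It assumes each access unit has already been tagged with a temporal layer number; the `AccessUnit` class, its `temporal_id` field, and the dyadic layer assignment are hypothetical illustrations, not structures taken from the standard. A real extractor would read the layer identifiers from the bitstream itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessUnit:
    """Hypothetical stand-in for one coded picture (access unit)."""
    index: int        # position in the stream
    temporal_id: int  # 0 = base temporal layer, higher = finer frame rate


def extract_temporal_sub_bitstream(access_units, max_temporal_id):
    """Drop whole access units above the requested temporal layer."""
    return [au for au in access_units if au.temporal_id <= max_temporal_id]


def dyadic_temporal_id(i):
    """Assumed layering: every 4th picture in layer 0, every 2nd in layer 1."""
    if i % 4 == 0:
        return 0
    if i % 2 == 0:
        return 1
    return 2


stream = [AccessUnit(i, dyadic_temporal_id(i)) for i in range(8)]
half_rate = extract_temporal_sub_bitstream(stream, 1)
quarter_rate = extract_temporal_sub_bitstream(stream, 0)
```

The sketch only works if pictures in a lower layer never reference pictures in a higher layer, which is why the text notes that the reference pictures must be "constructed accordingly".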

Multiview video coding

The next major feature added to the standard was Multiview Video Coding (MVC). Specified in Annex H of H.264/AVC, MVC enables the construction of bitstreams that represent more than one view of a video scene. An important example of this functionality is stereoscopic 3D video coding. Two profiles were developed in the MVC work: Multiview High profile supports an arbitrary number of views, and Stereo High profile is designed specifically for two-view stereoscopic video. The Multiview Video Coding extensions were completed in November 2009.

3D-AVC and MFC stereoscopic coding

Additional extensions were later developed that included 3D video coding with joint coding of depth maps and texture (termed 3D-AVC), multi-resolution frame-compatible (MFC) stereoscopic and 3D-MFC coding, various additional combinations of features, and higher frame sizes and frame rates.

Versions

Versions of the H.264/AVC standard include the following completed revisions, corrigenda, and amendments (dates are final approval dates in ITU-T, while final "International Standard" approval dates in ISO/IEC are somewhat different and slightly later in most cases). Each version represents changes relative to the next lower version that is integrated into the text.

- Version 1 (Edition 1): (May 30, 2003) First approved version of H.264/AVC containing Baseline, Main, and Extended profiles.[11]

- Version 2 (Edition 1.1): (May 7, 2004) Corrigendum containing various minor corrections.[12]

- Version 3 (Edition 2): (March 1, 2005) Major addition containing the first amendment, establishing the Fidelity Range Extensions (FRExt). This version added the High, High 10, High 4:2:2, and High 4:4:4 profiles.[13] After a few years, the High profile became the most commonly used profile of the standard.

- Version 4 (Edition 2.1): (September 13, 2005) Corrigendum containing various minor corrections and adding three aspect ratio indicators.[14]

- Version 5 (Edition 2.2): (June 13, 2006) Amendment consisting of removal of prior High 4:4:4 profile (processed as a corrigendum in ISO/IEC).[15]

- Version 6 (Edition 2.2): (June 13, 2006) Amendment consisting of minor extensions like extended-gamut color space support (bundled with above-mentioned aspect ratio indicators in ISO/IEC).[15]

- Version 7 (Edition 2.3): (April 6, 2007) Amendment containing the addition of the High 4:4:4 Predictive profile and four Intra-only profiles (High 10 Intra, High 4:2:2 Intra, High 4:4:4 Intra, and CAVLC 4:4:4 Intra).[16]

- Version 8 (Edition 3): (November 22, 2007) Major addition to H.264/AVC containing the amendment for Scalable Video Coding (SVC) containing Scalable Baseline, Scalable High, and Scalable High Intra profiles.[17]

- Version 9 (Edition 3.1): (January 13, 2009) Corrigendum containing minor corrections.[18]

- Version 10 (Edition 4): (March 16, 2009) Amendment containing definition of a new profile (the Constrained Baseline profile) with only the common subset of capabilities supported in various previously specified profiles.[19]

- Version 11 (Edition 4): (March 16, 2009) Major addition to H.264/AVC containing the amendment for Multiview Video Coding (MVC) extension, including the Multiview High profile.[19]

- Version 12 (Edition 5): (March 9, 2010) Amendment containing definition of a new MVC profile (the Stereo High profile) for two-view video coding with support of interlaced coding tools and specifying an additional supplemental enhancement information (SEI) message termed the frame packing arrangement SEI message.[20]

- Version 13 (Edition 5): (March 9, 2010) Corrigendum containing minor corrections.[20]

- Version 14 (Edition 6): (June 29, 2011) Amendment specifying a new level (Level 5.2) supporting higher processing rates in terms of maximum macroblocks per second, and a new profile (the Progressive High profile) supporting only the frame coding tools of the previously specified High profile.[21]

- Version 15 (Edition 6): (June 29, 2011) Corrigendum containing minor corrections.[21]

- Version 16 (Edition 7): (January 13, 2012) Amendment containing definition of three new profiles intended primarily for real-time communication applications: the Constrained High, Scalable Constrained Baseline, and Scalable Constrained High profiles.[22]

- Version 17 (Edition 8): (April 13, 2013) Amendment with additional SEI message indicators.[23]

- Version 18 (Edition 8): (April 13, 2013) Amendment to specify the coding of depth map data for 3D stereoscopic video, including a Multiview Depth High profile.[23]

- Version 19 (Edition 8): (April 13, 2013) Corrigendum to correct an error in the sub-bitstream extraction process for multiview video.[23]

- Version 20 (Edition 8): (April 13, 2013) Amendment to specify additional color space identifiers (including support of ITU-R Recommendation BT.2020 for UHDTV) and an additional model type in the tone mapping information SEI message.[23]

- Version 21 (Edition 9): (February 13, 2014) Amendment to specify the Enhanced Multiview Depth High profile.[24]

- Version 22 (Edition 9): (February 13, 2014) Amendment to specify the multi-resolution frame compatible (MFC) enhancement for 3D stereoscopic video, the MFC High profile, and minor corrections.[24]

- Version 23 (Edition 10): (February 13, 2016) Amendment to specify MFC stereoscopic video with depth maps, the MFC Depth High profile, the mastering display color volume SEI message, and additional color-related VUI codepoint identifiers.[25]

- Version 24 (Edition 11): (October 14, 2016) Amendment to specify additional levels of decoder capability supporting larger picture sizes (Levels 6, 6.1, and 6.2), the green metadata SEI message, the alternative depth information SEI message, and additional color-related VUI codepoint identifiers.[26]

- Version 25 (Edition 12): (April 13, 2017) Amendment to specify the Progressive High 10 profile, hybrid log–gamma (HLG), and additional color-related VUI code points and SEI messages.[27]

- Version 26 (Edition 13): (June 13, 2019) Amendment to specify additional SEI messages for ambient viewing environment, content light level information, content color volume, equirectangular projection, cubemap projection, sphere rotation, region-wise packing, omnidirectional viewport, SEI manifest, and SEI prefix.[28]

- Version 27 (Edition 14): (August 22, 2021) Amendment to specify additional SEI messages for annotated regions and shutter interval information, and miscellaneous minor corrections and clarifications.[29]

- Version 28 (Edition 15): (August 13, 2024) Amendment to specify additional SEI messages for neural-network postfilter characteristics, neural-network post-filter activation, and phase indication, additional colour type identifiers, and miscellaneous minor corrections and clarifications.[30]

Applications

The H.264 video format has a very broad application range that covers all forms of digital compressed video from low bit-rate Internet streaming applications to HDTV broadcast and Digital Cinema applications with nearly lossless coding. With the use of H.264, bit rate savings of 50% or more compared to MPEG-2 Part 2 are reported. For example, H.264 has been reported to give the same Digital Satellite TV quality as current MPEG-2 implementations with less than half the bitrate, with current MPEG-2 implementations working at around 3.5 Mbit/s and H.264 at only 1.5 Mbit/s.[31] Sony claims that 9 Mbit/s AVC recording mode is equivalent to the image quality of the HDV format, which uses approximately 18–25 Mbit/s.[32]
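As a quick check of the figures quoted above, the relative saving can be computed directly from the two bit rates. This is only arithmetic on the reported numbers, not a measurement, and the function name is chosen here for illustration.

```python
def bitrate_saving(reference_mbps, h264_mbps):
    """Fraction of the reference bit rate saved by the H.264 stream."""
    return 1.0 - h264_mbps / reference_mbps


# Satellite TV example: MPEG-2 at about 3.5 Mbit/s versus H.264 at 1.5 Mbit/s.
satellite = bitrate_saving(3.5, 1.5)

# Sony's claim: 9 Mbit/s AVC versus HDV at roughly 18 to 25 Mbit/s.
hdv_low = bitrate_saving(18.0, 9.0)
hdv_high = bitrate_saving(25.0, 9.0)

print(f"satellite: {satellite:.1%}, HDV: {hdv_low:.0%} to {hdv_high:.0%}")
```

The satellite example works out to a saving of roughly 57%, and the HDV comparison to between 50% and 64%, consistent with the "50% or more" statement.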

To ensure compatibility and problem-free adoption of H.264/AVC, many standards bodies have amended or added to their video-related standards so that users of these standards can employ H.264/AVC. Both the Blu-ray Disc format and the now-discontinued HD DVD format include the H.264/AVC High Profile as one of three mandatory video compression formats. The Digital Video Broadcast project (DVB) approved the use of H.264/AVC for broadcast television in late 2004.

The Advanced Television Systems Committee (ATSC) standards body in the United States approved the use of H.264/AVC for broadcast television in July 2008, although the standard is not yet used for fixed ATSC broadcasts within the United States.[33][34] It has also been approved for use with the more recent ATSC-M/H (Mobile/Handheld) standard, using the AVC and SVC portions of H.264.[35]

The closed-circuit-television and video-surveillance markets have included the technology in many products.

Many common DSLRs use H.264 video wrapped in QuickTime MOV containers as the native recording format.

Derived formats

AVCHD is a high-definition recording format designed by Sony and Panasonic that uses H.264 (conforming to H.264 while adding additional application-specific features and constraints).

AVC-Intra is an intraframe-only compression format, developed by Panasonic.

XAVC is a recording format designed by Sony that uses level 5.2 of H.264/MPEG-4 AVC, which is the highest level supported by that video standard.[36][37] XAVC can support 4K resolution (4096 × 2160 and 3840 × 2160) at up to 60 frames per second (fps).[36][37] Sony has announced that cameras that support XAVC include two CineAlta cameras—the Sony PMW-F55 and Sony PMW-F5.[38] The Sony PMW-F55 can record XAVC with 4K resolution at 30 fps at 300 Mbit/s and 2K resolution at 30 fps at 100 Mbit/s.[39] XAVC can record 4K resolution at 60 fps with 4:2:2 chroma sampling at 600 Mbit/s.[40][41]

Design

Features

H.264/AVC/MPEG-4 Part 10 contains a number of new features that allow it to compress video much more efficiently than older standards and to provide more flexibility for application to a wide variety of network environments. In particular, some such key features include:

- Multi-picture inter-picture prediction including the following features:

- Using previously encoded pictures as references in a much more flexible way than in past standards, allowing up to 16 reference frames (or 32 reference fields, in the case of interlaced encoding) to be used in some cases. In profiles that support non-IDR frames, most levels specify that sufficient buffering should be available to allow for at least 4 or 5 reference frames at maximum resolution. This is in contrast to prior standards, where the limit was typically one; or, in the case of conventional "B pictures" (B-frames), two.

- Variable block-size motion compensation (VBSMC) with block sizes as large as 16×16 and as small as 4×4, enabling precise segmentation of moving regions. The supported luma prediction block sizes include 16×16, 16×8, 8×16, 8×8, 8×4, 4×8, and 4×4, many of which can be used together in a single macroblock. Chroma prediction block sizes are correspondingly smaller when chroma subsampling is used.

- The ability to use multiple motion vectors per macroblock (one or two per partition) with a maximum of 32 in the case of a B macroblock constructed of 16 4×4 partitions. The motion vectors for each 8×8 or larger partition region can point to different reference pictures.

- The ability to use any macroblock type in B-frames, including I-macroblocks, resulting in much more efficient encoding when using B-frames. This feature was notably left out from MPEG-4 ASP.

- Six-tap filtering for derivation of half-pel luma sample predictions, for sharper subpixel motion-compensation. Quarter-pixel motion is derived by linear interpolation of the half-pixel values, to save processing power.

- Quarter-pixel precision for motion compensation, enabling precise description of the displacements of moving areas. For chroma, the resolution is typically halved both vertically and horizontally (see 4:2:0), so the motion compensation of chroma uses one-eighth chroma pixel grid units.

- Weighted prediction, allowing an encoder to specify the use of a scaling and offset when performing motion compensation, and providing a significant benefit in performance in special cases—such as fade-to-black, fade-in, and cross-fade transitions. This includes implicit weighted prediction for B-frames, and explicit weighted prediction for P-frames.

- Spatial prediction from the edges of neighboring blocks for "intra" coding, rather than the "DC"-only prediction found in MPEG-2 Part 2 and the transform coefficient prediction found in H.263v2 and MPEG-4 Part 2. This includes luma prediction block sizes of 16×16, 8×8, and 4×4 (of which only one type can be used within each macroblock).

- Integer discrete cosine transform (integer DCT),[5][42][43] a type of discrete cosine transform (DCT)[42] where the transform is an integer approximation of the standard DCT.[44] It has selectable block sizes[6] and exact-match integer computation to reduce complexity, including:

- An exact-match integer 4×4 spatial block transform, allowing precise placement of residual signals with little of the "ringing" often found with prior codec designs. It is similar to the standard DCT used in previous standards, but uses a smaller block size and simple integer processing. Unlike the cosine-based formulas and tolerances expressed in earlier standards (such as H.261 and MPEG-2), integer processing provides an exactly specified decoded result.

- An exact-match integer 8×8 spatial block transform, allowing highly correlated regions to be compressed more efficiently than with the 4×4 transform. This design is based on the standard DCT, but simplified and made to provide exactly specified decoding.

- Adaptive encoder selection between the 4×4 and 8×8 transform block sizes for the integer transform operation.

- A secondary Hadamard transform performed on "DC" coefficients of the primary spatial transform applied to chroma DC coefficients (and also luma in one special case) to obtain even more compression in smooth regions.

- Lossless macroblock coding features including:

- A lossless "PCM macroblock" representation mode in which video data samples are represented directly,[45] allowing perfect representation of specific regions and allowing a strict limit to be placed on the quantity of coded data for each macroblock.

- An enhanced lossless macroblock representation mode allowing perfect representation of specific regions while ordinarily using substantially fewer bits than the PCM mode.

- Flexible interlaced-scan video coding features, including:

- Macroblock-adaptive frame-field (MBAFF) coding, using a macroblock pair structure for pictures coded as frames, allowing 16×16 macroblocks in field mode (compared with MPEG-2, where field mode processing in a picture that is coded as a frame results in the processing of 16×8 half-macroblocks).

- Picture-adaptive frame-field coding (PAFF or PicAFF) allowing a freely selected mixture of pictures coded either as complete frames where both fields are combined for encoding or as individual single fields.

- A quantization design including:

- Logarithmic step size control for easier bit rate management by encoders and simplified inverse-quantization scaling

- Frequency-customized quantization scaling matrices selected by the encoder for perceptual-based quantization optimization

- An in-loop deblocking filter that helps prevent the blocking artifacts common to other DCT-based image compression techniques, resulting in better visual appearance and compression efficiency

- An entropy coding design including:

- Context-adaptive binary arithmetic coding (CABAC), an algorithm to losslessly compress syntax elements in the video stream knowing the probabilities of syntax elements in a given context. CABAC compresses data more efficiently than CAVLC but requires considerably more processing to decode.

- Context-adaptive variable-length coding (CAVLC), which is a lower-complexity alternative to CABAC for the coding of quantized transform coefficient values. Although lower complexity than CABAC, CAVLC is more elaborate and more efficient than the methods typically used to code coefficients in other prior designs.

- A common simple and highly structured variable length coding (VLC) technique for many of the syntax elements not coded by CABAC or CAVLC, referred to as Exponential-Golomb coding (or Exp-Golomb).

- Loss resilience features including:

- A Network Abstraction Layer (NAL) definition allowing the same video syntax to be used in many network environments. One very fundamental design concept of H.264 is to generate self-contained packets, to remove the header duplication as in MPEG-4's Header Extension Code (HEC).[46] This was achieved by decoupling information relevant to more than one slice from the media stream. The combination of the higher-level parameters is called a parameter set.[46] The H.264 specification includes two types of parameter sets: Sequence Parameter Set (SPS) and Picture Parameter Set (PPS). An active sequence parameter set remains unchanged throughout a coded video sequence, and an active picture parameter set remains unchanged within a coded picture. The sequence and picture parameter set structures contain information such as picture size, optional coding modes employed, and macroblock to slice group map.[46]

- Flexible macroblock ordering (FMO), also known as slice groups, and arbitrary slice ordering (ASO), which are techniques for restructuring the ordering of the representation of the fundamental regions (macroblocks) in pictures. Typically considered an error/loss robustness feature, FMO and ASO can also be used for other purposes.

- Data partitioning (DP), a feature providing the ability to separate more important and less important syntax elements into different packets of data, enabling the application of unequal error protection (UEP) and other types of improvement of error/loss robustness.

- Redundant slices (RS), an error/loss robustness feature that lets an encoder send an extra representation of a picture region (typically at lower fidelity) that can be used if the primary representation is corrupted or lost.

- Frame numbering, a feature that allows the creation of "sub-sequences", enabling temporal scalability by optional inclusion of extra pictures between other pictures, and the detection and concealment of losses of entire pictures, which can occur due to network packet losses or channel errors.

- Switching slices, called SP and SI slices, allowing an encoder to direct a decoder to jump into an ongoing video stream for such purposes as video streaming bit rate switching and "trick mode" operation. When a decoder jumps into the middle of a video stream using the SP/SI feature, it can get an exact match to the decoded pictures at that location in the video stream despite using different pictures, or no pictures at all, as references prior to the switch.

- A simple automatic process for preventing the accidental emulation of start codes, which are special sequences of bits in the coded data that allow random access into the bitstream and recovery of byte alignment in systems that can lose byte synchronization.

- Supplemental enhancement information (SEI) and video usability information (VUI), which are extra information that can be inserted into the bitstream for various purposes such as indicating the color space used by the video content or various constraints that apply to the encoding. SEI messages can contain arbitrary user-defined metadata payloads or other messages with syntax and semantics defined in the standard.

- Auxiliary pictures, which can be used for such purposes as alpha compositing.

- Support of monochrome (4:0:0), 4:2:0, 4:2:2, and 4:4:4 chroma sampling (depending on the selected profile).

- Support of sample bit depth precision ranging from 8 to 14 bits per sample (depending on the selected profile).

- The ability to encode individual color planes as distinct pictures with their own slice structures, macroblock modes, motion vectors, etc., allowing encoders to be designed with a simple parallelization structure (supported only in the three 4:4:4-capable profiles).

- Picture order count, a feature that serves to keep the ordering of the pictures and the values of samples in the decoded pictures isolated from timing information, allowing timing information to be carried and controlled/changed separately by a system without affecting decoded picture content.
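The six-tap and quarter-pixel items in the list above can be illustrated with a one-dimensional sketch for 8-bit luma samples. The tap weights (1, -5, 20, 20, -5, 1)/32 and the rounded average used for the quarter-sample position are taken from the standard's luma interpolation rather than from the list itself. The function names and the edge clamping are simplifying assumptions, and only the horizontal case is shown.

```python
def clip_8bit(value):
    """Clamp an intermediate result to the 8-bit sample range."""
    return max(0, min(255, value))


def half_pel(row, x):
    """Half-sample value between row[x] and row[x + 1].

    Applies the six-tap filter to samples x-2 .. x+3; indices outside the
    row are clamped to the nearest edge sample (a simplification).
    """
    taps = (1, -5, 20, 20, -5, 1)
    acc = 0
    for k, tap in enumerate(taps):
        idx = min(max(x - 2 + k, 0), len(row) - 1)
        acc += tap * row[idx]
    return clip_8bit((acc + 16) >> 5)  # round, divide by 32, clip


def quarter_pel(row, x):
    """Quarter-sample value: rounded average of row[x] and the half sample."""
    return (row[x] + half_pel(row, x) + 1) >> 1


edge = [0, 0, 0, 0, 255, 255, 255, 255]
mid_edge = half_pel(edge, 3)       # straddles the step edge
quarter_edge = quarter_pel(edge, 3)
```

The negative taps cause some overshoot next to the edge (positions 2 and 4 in this example), which is why the result is clipped back to the valid sample range.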

These techniques, along with several others, help H.264 to perform significantly better than any prior standard under a wide variety of circumstances in a wide variety of application environments. H.264 can often perform radically better than MPEG-2 video—typically obtaining the same quality at half of the bit rate or less, especially on high bit rate and high resolution video content.[47]
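Two of the listed techniques, the exact-match 4×4 integer transform and the logarithmic quantizer step size, are compact enough to sketch. The matrix below is the commonly published forward core transform; the standard itself normatively specifies only the decoder-side inverse, and the scaling that is folded into quantization is omitted here. The step-size table assumes the 8-bit QP range of 0 to 51, and the function names are illustrative.

```python
# Forward 4x4 core transform matrix (integer approximation of the DCT).
CF = ((1, 1, 1, 1),
      (2, 1, -1, -2),
      (1, -1, -1, 1),
      (1, -2, 2, -1))


def matmul(a, b):
    """Multiply two 4x4 matrices using integer arithmetic only."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transpose(m):
    return [list(col) for col in zip(*m)]


def forward_core_transform(block):
    """Compute CF * block * CF^T for a 4x4 block of residual samples."""
    return matmul(matmul(CF, block), transpose(CF))


# Step sizes for QP 0..5; each further increase of 6 in QP doubles the step.
QSTEP_BASE = (0.625, 0.6875, 0.8125, 0.875, 1.0, 1.125)


def qstep(qp):
    """Quantizer step size for a QP in the assumed 8-bit range 0..51."""
    if not 0 <= qp <= 51:
        raise ValueError("QP out of range")
    return QSTEP_BASE[qp % 6] * (1 << (qp // 6))


flat = [[10] * 4 for _ in range(4)]
coeffs = forward_core_transform(flat)  # only the DC coefficient is non-zero
```

Because every operation is on integers, any two conforming implementations produce bit-identical results, which is the "exactly specified decoded result" property mentioned in the feature list. The doubling of the step every 6 QP values is what makes the control logarithmic.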

Like other ISO/IEC MPEG video standards, H.264/AVC has a reference software implementation that can be freely downloaded.[48] Its main purpose is to give examples of H.264/AVC features, rather than being a useful application per se. Some reference hardware design work has also been conducted in the Moving Picture Experts Group. The above-mentioned aspects include features in all profiles of H.264. A profile for a codec is a set of features of that codec identified to meet a certain set of specifications of intended applications. This means that many of the features listed are not supported in some profiles. The various profiles of H.264/AVC are discussed in the next section.

Profiles

The standard defines several sets of capabilities, which are referred to as profiles, targeting specific classes of applications. These are declared using a profile code (profile_idc) and sometimes a set of additional constraints applied in the encoder. The profile code and indicated constraints allow a decoder to recognize the requirements for decoding that specific bitstream. (And in many system environments, only one or two profiles are allowed to be used, so decoders in those environments do not need to be concerned with recognizing the less commonly used profiles.) By far the most commonly used profile is the High Profile.

Profiles for non-scalable 2D video applications include the following:

- Constrained Baseline Profile (CBP, 66 with constraint set 1)

- Primarily for low-cost applications, this profile is most typically used in videoconferencing and mobile applications. It corresponds to the subset of features that are in common between the Baseline, Main, and High Profiles.

- Baseline Profile (BP, 66)

- Primarily for low-cost applications that require additional data loss robustness, this profile is used in some videoconferencing and mobile applications. This profile includes all features that are supported in the Constrained Baseline Profile, plus three additional features that can be used for loss robustness (or for other purposes such as low-delay multi-point video stream compositing). The importance of this profile has faded somewhat since the definition of the Constrained Baseline Profile in 2009. All Constrained Baseline Profile bitstreams are also considered to be Baseline Profile bitstreams, as these two profiles share the same profile identifier code value.

- Extended Profile (XP, 88)

- Intended as the streaming video profile, this profile has relatively high compression capability and some extra tricks for robustness to data losses and server stream switching.

- Main Profile (MP, 77)

- This profile is used for standard-definition digital TV broadcasts that use the MPEG-4 format as defined in the DVB standard.[49] It is not, however, used for high-definition television broadcasts, as the importance of this profile faded when the High Profile was developed in 2004 for that application.

- High Profile (HiP, 100)

- The primary profile for broadcast and disc storage applications, particularly for high-definition television applications (for example, this is the profile adopted by the Blu-ray Disc storage format and the DVB HDTV broadcast service).

- Progressive High Profile (PHiP, 100 with constraint set 4)

- Similar to the High profile, but without support of field coding features.

- Constrained High Profile (100 with constraint set 4 and 5)

- Similar to the Progressive High profile, but without support of B (bi-predictive) slices.

- High 10 Profile (Hi10P, 110)

- Going beyond typical mainstream consumer product capabilities, this profile builds on top of the High Profile, adding support for up to 10 bits per sample of decoded picture precision.

- High 4:2:2 Profile (Hi422P, 122)

- Primarily targeting professional applications that use interlaced video, this profile builds on top of the High 10 Profile, adding support for the 4:2:2 chroma sampling format while using up to 10 bits per sample of decoded picture precision.

- High 4:4:4 Predictive Profile (Hi444PP, 244)

- This profile builds on top of the High 4:2:2 Profile, supporting up to 4:4:4 chroma sampling, up to 14 bits per sample, and additionally supporting efficient lossless region coding and the coding of each picture as three separate color planes.

For camcorders, editing, and professional applications, the standard contains four additional Intra-frame-only profiles, which are defined as simple subsets of other corresponding profiles. These are mostly for professional (e.g., camera and editing system) applications:

- High 10 Intra Profile (110 with constraint set 3)

- The High 10 Profile constrained to all-Intra use.

- High 4:2:2 Intra Profile (122 with constraint set 3)

- The High 4:2:2 Profile constrained to all-Intra use.

- High 4:4:4 Intra Profile (244 with constraint set 3)

- The High 4:4:4 Predictive Profile constrained to all-Intra use.

- CAVLC 4:4:4 Intra Profile (44)

- The High 4:4:4 Predictive Profile constrained to all-Intra use and to CAVLC entropy coding (i.e., not supporting CABAC).
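As a rough illustration of how a decoder might use the profile code and constraint sets listed above, the following sketch maps a `profile_idc` value plus a set of constraint-set numbers to a profile name. It covers only the non-scalable profiles in the lists above. The function name and the representation of constraint sets as a Python set of integers are assumptions made for this example, not part of the standard.

```python
def profile_name(profile_idc: int, constraint_sets=frozenset()) -> str:
    """Return the profile name for the codes listed above (non-scalable profiles only)."""
    cs = set(constraint_sets)
    if profile_idc == 66:
        return "Constrained Baseline Profile" if 1 in cs else "Baseline Profile"
    if profile_idc == 88:
        return "Extended Profile"
    if profile_idc == 77:
        return "Main Profile"
    if profile_idc == 100:
        if 4 in cs and 5 in cs:
            return "Constrained High Profile"
        if 4 in cs:
            return "Progressive High Profile"
        return "High Profile"
    if profile_idc == 44:
        return "CAVLC 4:4:4 Intra Profile"
    # Profiles that have an all-Intra variant signalled by constraint set 3.
    intra_capable = {
        110: "High 10",
        122: "High 4:2:2",
        244: "High 4:4:4",
    }
    if profile_idc in intra_capable:
        base = intra_capable[profile_idc]
        if 3 in cs:
            return f"{base} Intra Profile"
        if profile_idc == 244:
            return "High 4:4:4 Predictive Profile"
        return f"{base} Profile"
    raise ValueError(f"profile_idc {profile_idc} is not covered by this sketch")
```

For example, `profile_name(100, {4, 5})` yields the Constrained High Profile, while `profile_name(66)` yields the Baseline Profile, reflecting that both Baseline variants share the identifier 66.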

As a result of the Scalable Video Coding (SVC) extension, the standard contains five additional scalable profiles, which are defined as a combination of a H.264/AVC profile for the base layer (identified by the second word in the scalable profile name) and tools that achieve the scalable extension:

- Scalable Baseline Profile (83)

- Primarily targeting video conferencing, mobile, and surveillance applications, this profile builds on top of the Constrained Baseline profile to which the base layer (a subset of the bitstream) must conform. For the scalability tools, a subset of the available tools is enabled.

- Scalable Constrained Baseline Profile (83 with constraint set 5)

- A subset of the Scalable Baseline Profile intended primarily for real-time communication applications.

- Scalable High Profile (86)

- Primarily targeting broadcast and streaming applications, this profile builds on top of the H.264/AVC High Profile to which the base layer must conform.

- Scalable Constrained High Profile (86 with constraint set 5)

- A subset of the Scalable High Profile intended primarily for real-time communication applications.

- Scalable High Intra Profile (86 with constraint set 3)

- Primarily targeting production applications, this profile is the Scalable High Profile constrained to all-Intra use.

As a result of the Multiview Video Coding (MVC) extension, the standard contains two multiview profiles:

- Stereo High Profile (128)

- This profile targets two-view stereoscopic 3D video and combines the tools of the High profile with the inter-view prediction capabilities of the MVC extension.

- Multiview High Profile (118)

- This profile supports two or more views using both inter-picture (temporal) and MVC inter-view prediction, but does not support field pictures and macroblock-adaptive frame-field coding.

The Multi-resolution Frame-Compatible (MFC) extension added two more profiles:

- MFC High Profile (134)

- A profile for stereoscopic coding with two-layer resolution enhancement.

- MFC Depth High Profile (135)

The 3D-AVC extension added two more profiles:

- Multiview Depth High Profile (138)

- This profile supports joint coding of depth map and video texture information for improved compression of 3D video content.

- Enhanced Multiview Depth High Profile (139)

- An enhanced profile for combined multiview coding with depth information.

Feature support in particular profiles

| Feature | CBP | BP | XP | MP | ProHiP | HiP | Hi10P | Hi422P | Hi444PP |
|---|---|---|---|---|---|---|---|---|---|
| Bit depth (per sample) | 8 | 8 | 8 | 8 | 8 | 8 | 8 to 10 | 8 to 10 | 8 to 14 |
| Chroma formats | 4:2:0 | 4:2:0 | 4:2:0 | 4:2:0 | 4:2:0 | 4:2:0 | 4:2:0 | 4:2:0/4:2:2 | 4:2:0/4:2:2/4:4:4 |
| Flexible macroblock ordering (FMO) | No | Yes | Yes | No | No | No | No | No | No |
| Arbitrary slice ordering (ASO) | No | Yes | Yes | No | No | No | No | No | No |
| Redundant slices (RS) | No | Yes | Yes | No | No | No | No | No | No |
| Data Partitioning | No | No | Yes | No | No | No | No | No | No |
| SI and SP slices | No | No | Yes | No | No | No | No | No | No |
| Interlaced coding (PicAFF, MBAFF) | No | No | Yes | Yes | No | Yes | Yes | Yes | Yes |
| B slices | No | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes |
| CABAC entropy coding | No | No | No | Yes | Yes | Yes | Yes | Yes | Yes |
| 4:0:0 (Greyscale) | No | No | No | No | Yes | Yes | Yes | Yes | Yes |
| 8×8 vs. 4×4 transform adaptivity | No | No | No | No | Yes | Yes | Yes | Yes | Yes |
| Quantization scaling matrices | No | No | No | No | Yes | Yes | Yes | Yes | Yes |
| Separate Cb and Cr QP control | No | No | No | No | Yes | Yes | Yes | Yes | Yes |
| Separate color plane coding | No | No | No | No | No | No | No | No | Yes |
| Predictive lossless coding | No | No | No | No | No | No | No | No | Yes |

Levels

As the term is used in the standard, a "level" is a specified set of constraints that indicate a degree of required decoder performance for a profile. For example, a level of support within a profile specifies the maximum picture resolution, frame rate, and bit rate that a decoder may use. A decoder that conforms to a given level must be able to decode all bitstreams encoded for that level and all lower levels.

| Level | Maximum decoding speed (macroblocks/s) | Maximum frame size (macroblocks) | Maximum video bit rate for video coding layer (VCL) (Constrained Baseline, Baseline, Extended and Main Profiles) (kbits/s) | Examples for high resolution @ highest frame rate (maximum stored frames) |
|---|---|---|---|---|
| 1 | 1,485 | 99 | 64 | 128×96@30.9 (8); 176×144@15.0 (4) |
| 1b | 1,485 | 99 | 128 | 128×96@30.9 (8); 176×144@15.0 (4) |
| 1.1 | 3,000 | 396 | 192 | 176×144@30.3 (9); 352×288@7.5 (2); 320×240@10.0 (3) |
| 1.2 | 6,000 | 396 | 384 | 320×240@20.0 (7); 352×288@15.2 (6) |
| 1.3 | 11,880 | 396 | 768 | 320×240@36.0 (7); 352×288@30.0 (6) |
| 2 | 11,880 | 396 | 2,000 | 320×240@36.0 (7); 352×288@30.0 (6) |
| 2.1 | 19,800 | 792 | 4,000 | 352×480@30.0 (7); 352×576@25.0 (6) |
| 2.2 | 20,250 | 1,620 | 4,000 | 352×480@30.7 (12); 720×576@12.5 (5); 352×576@25.6 (10); 720×480@15.0 (6) |
| 3 | 40,500 | 1,620 | 10,000 | 352×480@61.4 (12); 720×576@25.0 (5); 352×576@51.1 (10); 720×480@30.0 (6) |
| 3.1 | 108,000 | 3,600 | 14,000 | 720×480@80.0 (13); 1,280×720@30.0 (5); 720×576@66.7 (11) |
| 3.2 | 216,000 | 5,120 | 20,000 | 1,280×720@60.0 (5); 1,280×1,024@42.2 (4) |
| 4 | 245,760 | 8,192 | 20,000 | 1,280×720@68.3 (9); 2,048×1,024@30.0 (4); 1,920×1,080@30.1 (4) |
| 4.1 | 245,760 | 8,192 | 50,000 | 1,280×720@68.3 (9); 2,048×1,024@30.0 (4); 1,920×1,080@30.1 (4) |
| 4.2 | 522,240 | 8,704 | 50,000 | 1,280×720@145.1 (9); 2,048×1,080@60.0 (4); 1,920×1,080@64.0 (4) |
| 5 | 589,824 | 22,080 | 135,000 | 1,920×1,080@72.3 (13); 3,672×1,536@26.7 (5); 2,048×1,024@72.0 (13); 2,048×1,080@67.8 (12); 2,560×1,920@30.7 (5) |
| 5.1 | 983,040 | 36,864 | 240,000 | 1,920×1,080@120.5 (16); 4,096×2,304@26.7 (5); 2,560×1,920@51.2 (9); 3,840×2,160@31.7 (5); 4,096×2,048@30.0 (5); 4,096×2,160@28.5 (5) |
| 5.2 | 2,073,600 | 36,864 | 240,000 | 1,920×1,080@172.0 (16); 4,096×2,304@56.3 (5); 2,560×1,920@108.0 (9); 3,840×2,160@66.8 (5); 4,096×2,048@63.3 (5); 4,096×2,160@60.0 (5) |
| 6 | 4,177,920 | 139,264 | 240,000 | 3,840×2,160@128.9 (16); 8,192×4,320@30.2 (5); 7,680×4,320@32.2 (5) |
| 6.1 | 8,355,840 | 139,264 | 480,000 | 3,840×2,160@257.9 (16); 8,192×4,320@60.4 (5); 7,680×4,320@64.5 (5) |
| 6.2 | 16,711,680 | 139,264 | 800,000 | 3,840×2,160@300.0 (16); 8,192×4,320@120.9 (5); 7,680×4,320@128.9 (5) |

The maximum bit rate for the High Profile is 1.25 times that of the Constrained Baseline, Baseline, Extended and Main Profiles; 3 times for Hi10P, and 4 times for Hi422P/Hi444PP.

The number of luma samples is 16×16=256 times the number of macroblocks (and the number of luma samples per second is 256 times the number of macroblocks per second).
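The relationship between these level limits and the example frame rates can be sketched numerically. The snippet below copies three rows from the level table above into a small dictionary and derives a frame-rate bound by dividing the macroblock throughput by the frame size in macroblocks. It also applies the profile bit-rate multipliers just described. All names are hypothetical. The standard imposes further limits that this sketch ignores, so the simple division does not reproduce every entry in the table.

```python
import math

# level -> (max macroblocks/s, max frame size in macroblocks,
#           max VCL bit rate in kbit/s for CBP/BP/XP/MP), copied from the table above
LEVELS = {
    "3.1": (108_000, 3_600, 14_000),
    "4": (245_760, 8_192, 20_000),
    "4.1": (245_760, 8_192, 50_000),
}

# Bit-rate multipliers relative to the Baseline/Extended/Main column
BITRATE_FACTOR = {"MP": 1.0, "HiP": 1.25, "Hi10P": 3.0, "Hi422P": 4.0, "Hi444PP": 4.0}


def frame_size_in_mbs(width: int, height: int) -> int:
    """Frame size in 16x16 macroblocks, rounding each dimension up."""
    return math.ceil(width / 16) * math.ceil(height / 16)


def max_frame_rate(level: str, width: int, height: int):
    """Frame-rate bound from macroblock throughput, or None if the frame is too large."""
    max_mbps, max_fs, _ = LEVELS[level]
    size = frame_size_in_mbs(width, height)
    if size > max_fs:
        return None
    return max_mbps / size


def max_bit_rate_kbps(level: str, profile: str) -> float:
    """Maximum VCL bit rate for a profile at a level, in kbit/s."""
    return LEVELS[level][2] * BITRATE_FACTOR[profile]
```

For 1,920×1,080 at Level 4 this gives 8,160 macroblocks per frame and a bound of about 30.1 frames per second, matching the table, and a High Profile bit rate limit of 25,000 kbit/s.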

Decoded picture buffering

Previously encoded pictures are used by H.264/AVC encoders to provide predictions of the values of samples in other pictures. This allows the encoder to make efficient decisions on the best way to encode a given picture. At the decoder, such pictures are stored in a virtual decoded picture buffer (DPB). The maximum capacity of the DPB, in units of frames (or pairs of fields), as shown in parentheses in the right column of the table above, can be computed as follows:

- DpbCapacity = min(floor(MaxDpbMbs / (PicWidthInMbs * FrameHeightInMbs)), 16)

Where MaxDpbMbs is a constant value provided in the table below as a function of level number, and PicWidthInMbs and FrameHeightInMbs are the picture width and frame height for the coded video data, expressed in units of macroblocks (rounded up to integer values and accounting for cropping and macroblock pairing when applicable). This formula is specified in sections A.3.1.h and A.3.2.f of the 2017 edition of the standard.[27]

| Level | 1 | 1b | 1.1 | 1.2 | 1.3 | 2 | 2.1 | 2.2 | 3 | 3.1 | 3.2 | 4 | 4.1 | 4.2 | 5 | 5.1 | 5.2 | 6 | 6.1 | 6.2 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MaxDpbMbs | 396 | 396 | 900 | 2,376 | 2,376 | 2,376 | 4,752 | 8,100 | 8,100 | 18,000 | 20,480 | 32,768 | 32,768 | 34,816 | 110,400 | 184,320 | 184,320 | 696,320 | 696,320 | 696,320 |

For example, for an HDTV picture that is 1,920 samples wide (PicWidthInMbs = 120) and 1,080 samples high (FrameHeightInMbs = 68), a Level 4 decoder has a maximum DPB storage capacity of floor(32768/(120*68)) = 4 frames (or 8 fields). Thus, the value 4 is shown in parentheses in the table above in the right column of the row for Level 4 with the frame size 1920×1080.

The current picture being decoded is not included in the computation of DPB fullness (unless the encoder has indicated for it to be stored for use as a reference for decoding other pictures or for delayed output timing). Thus, a decoder needs to actually have sufficient memory to handle (at least) one frame more than the maximum capacity of the DPB as calculated above.
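The DpbCapacity formula above translates directly into code. The sketch below uses a few MaxDpbMbs values copied from the table above and simplifies the macroblock dimensions to a plain round-up of the sample dimensions, so it ignores the cropping and macroblock-pairing adjustments mentioned earlier. The function and dictionary names are hypothetical.

```python
import math

# MaxDpbMbs per level, copied from the table above (subset only)
MAX_DPB_MBS = {"3.1": 18_000, "4": 32_768, "5.1": 184_320}


def dpb_capacity(level: str, width: int, height: int) -> int:
    """DPB capacity in frames: min(floor(MaxDpbMbs / (W_mbs * H_mbs)), 16)."""
    pic_width_in_mbs = math.ceil(width / 16)
    frame_height_in_mbs = math.ceil(height / 16)
    return min(MAX_DPB_MBS[level] // (pic_width_in_mbs * frame_height_in_mbs), 16)


def decoder_frame_memory(level: str, width: int, height: int) -> int:
    """Frames of memory a decoder needs: the DPB plus the picture being decoded."""
    return dpb_capacity(level, width, height) + 1
```

For 1,920×1,080 at Level 4, `dpb_capacity` returns 4, as in the worked example, so a decoder would need room for at least 5 frames. At Level 5.1 the same picture size hits the cap of 16.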

Implementations

In 2009, the HTML5 working group was split between supporters of Ogg Theora, a free video format which is thought to be unencumbered by patents, and H.264, which contains patented technology. As late as July 2009, Google and Apple were said to support H.264, while Mozilla and Opera supported Ogg Theora (Google, Mozilla and Opera have all since added support for Theora and WebM with VP8).[50] Microsoft, with the release of Internet Explorer 9, added support for HTML5 video encoded using H.264. At the Gartner Symposium/ITXpo in November 2010, Microsoft CEO Steve Ballmer answered the question "HTML 5 or Silverlight?" by saying "If you want to do something that is universal, there is no question the world is going HTML5."[51] In January 2011, Google announced that they were pulling support for H.264 from their Chrome browser and supporting both Theora and WebM/VP8 to use only open formats.[52]

On March 18, 2012, Mozilla announced support for H.264 in Firefox on mobile devices, due to the prevalence of H.264-encoded video and the increased power efficiency of using dedicated H.264 decoder hardware common on such devices.[53] On February 20, 2013, Mozilla implemented support in Firefox for decoding H.264 on Windows 7 and above. This feature relies on Windows' built-in decoding libraries.[54] Firefox 35.0, released on January 13, 2015, supports H.264 on OS X 10.6 and higher.[55]

On October 30, 2013, Rowan Trollope from Cisco Systems announced that Cisco would release both binaries and source code of an H.264 video codec called OpenH264 under the Simplified BSD license, and pay all royalties for its use to MPEG LA for any software projects that use Cisco's precompiled binaries, thus making Cisco's OpenH264 binaries free to use. However, any software projects that use Cisco's source code instead of its binaries would be legally responsible for paying all royalties to MPEG LA. Target CPU architectures include x86 and ARM, and target operating systems include Linux, Windows XP and later, Mac OS X, and Android; iOS was notably absent from this list, because it does not allow applications to fetch and install binary modules from the Internet.[56][57][58] Also on October 30, 2013, Brendan Eich from Mozilla wrote that it would use Cisco's binaries in future versions of Firefox to add support for H.264 to Firefox where platform codecs are not available.[59] Cisco published the source code to OpenH264 on December 9, 2013.[60]

Although iOS was not supported by the 2013 Cisco software release, Apple updated its Video Toolbox Framework with iOS 8 (released in September 2014) to provide direct access to hardware-based H.264/AVC video encoding and decoding.[57]

Software encoders

| Feature | QuickTime | Nero | OpenH264 | x264 | MainConcept | Elecard | TSE | ProCoder | Avivo | Elemental | IPP |
|---|---|---|---|---|---|---|---|---|---|---|---|
| B slices | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes |
| Multiple reference frames | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes |
| Interlaced coding (PicAFF, MBAFF) | No | MBAFF | MBAFF | MBAFF | Yes | Yes | No | Yes | MBAFF | Yes | No |
| CABAC entropy coding | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes |
| 8×8 vs. 4×4 transform adaptivity | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes |
| Quantization scaling matrices | No | No | Yes | Yes | Yes | No | No | No | No | No | No |
| Separate Cb and Cr QP control | No | No | Yes | Yes | Yes | Yes | No | No | No | No | No |
| Extended chroma formats | No | No | No | 4:0:0[61] 4:2:0 4:2:2[62] 4:4:4[63] | 4:2:2 | 4:2:2 | 4:2:2 | No | No | 4:2:0 4:2:2 | No |
| Largest sample depth (bit) | 8 | 8 | 8 | 10[64] | 10 | 8 | 8 | 8 | 8 | 10 | 12 |
| Predictive lossless coding | No | No | No | Yes[65] | No | No | No | No | No | No | No |

Hardware

Because H.264 encoding and decoding requires significant computing power in specific types of arithmetic operations, software implementations that run on general-purpose CPUs are typically less power efficient. However, the latest[when?] quad-core general-purpose x86 CPUs have sufficient computation power to perform real-time SD and HD encoding. Compression efficiency depends on the video algorithms implemented, not on whether a hardware or software implementation is used. Therefore, the difference between hardware-based and software-based implementations lies mainly in power efficiency, flexibility, and cost. To improve power efficiency and reduce hardware form factor, special-purpose hardware may be employed, either for the complete encoding or decoding process, or for acceleration assistance within a CPU-controlled environment.

CPU-based solutions are known to be much more flexible, particularly when encoding must be done concurrently in multiple formats, multiple bit rates and resolutions (multi-screen video), and possibly with additional features for container format support, advanced integrated advertising, etc. CPU-based software solutions generally make it much easier to load balance multiple concurrent encoding sessions within the same CPU.

The 2nd generation Intel "Sandy Bridge" Core i3/i5/i7 processors introduced at the January 2011 CES (Consumer Electronics Show) offer an on-chip hardware full HD H.264 encoder, known as Intel Quick Sync Video.[66][67]

A hardware H.264 encoder can be an ASIC or an FPGA.

ASIC encoders with H.264 encoder functionality are available from many different semiconductor companies, but the core design used in the ASIC is typically licensed from one of a few companies such as Chips&Media, Allegro DVT, On2 (formerly Hantro, acquired by Google), Imagination Technologies, and NGCodec. Some companies have both FPGA and ASIC product offerings.[68]

Texas Instruments manufactures a line of ARM + DSP cores that perform H.264 BP encoding of 1080p video at 30 fps on the DSP.[69] This permits flexibility with respect to codecs (which are implemented as highly optimized DSP code) while being more efficient than software on a generic CPU.

Licensing

In countries where patents on software algorithms are upheld, vendors and commercial users of products that use H.264/AVC are expected to pay patent licensing royalties for the patented technology that their products use.[70] This applies to the Baseline Profile as well.[71]

A private organization known as MPEG LA, which is not affiliated in any way with the MPEG standardization organization, administers the licenses for patents applying to this standard, as well as other patent pools, such as for MPEG-4 Part 2 Video, HEVC and MPEG-DASH. The patent holders include Fujitsu, Panasonic, Sony, Mitsubishi, Apple, Columbia University, KAIST, Dolby, Google, JVC Kenwood, LG Electronics, Microsoft, NTT Docomo, Philips, Samsung, Sharp, Toshiba and ZTE,[72] although the majority of patents in the pool are held by Panasonic (1,197 patents), Godo Kaisha IP Bridge (1,130 patents) and LG Electronics (990 patents).[73]

On August 26, 2010, MPEG LA announced that royalties would not be charged for H.264 encoded Internet video that is free to end users.[74] All other royalties remain in place, such as royalties for products that decode and encode H.264 video and royalties charged to operators of free television and subscription channels.[75] The license terms are updated in 5-year blocks.[76]

Since the first version of the standard was completed in May 2003 (22 years ago) and the most commonly used profile (the High profile) was completed in June 2004[citation needed] (21 years ago), some of the relevant patents are expired by now,[73] while others are still in force in jurisdictions around the world and one of the US patents in the MPEG LA H.264 pool (granted in 2016, priority from 2001) lasts at least until November 2030.[77]

In 2005, Qualcomm sued Broadcom in US District Court, alleging that Broadcom infringed on two of its patents by making products that were compliant with the H.264 video compression standard.[78] In 2007, the District Court found that the patents were unenforceable because Qualcomm had failed to disclose them to the JVT prior to the release of the H.264 standard in May 2003.[78] In December 2008, the US Court of Appeals for the Federal Circuit affirmed the District Court's order that the patents be unenforceable but remanded to the District Court with instructions to limit the scope of unenforceability to H.264 compliant products.[78]

In October 2023, Nokia sued HP and Amazon for H.264/H.265 patent infringement in the US, the UK, and other locations.[79]

See also

- VC-1, a standard designed by Microsoft and approved as a SMPTE standard in 2006

- Dirac (video compression format), a video coding design by BBC Research & Development, released in 2008

- VP8, a video coding design by On2 Technologies (later purchased by Google), released in 2008

- VP9, a video coding design by Google, released in 2013

- High Efficiency Video Coding (ITU-T H.265 or ISO/IEC 23008-2), an ITU/ISO/IEC standard, released in 2013

- AV1, a video coding design by the Alliance for Open Media, released in 2018

- Versatile Video Coding (ITU-T H.266 or ISO/IEC 23090-3), an ITU/ISO/IEC standard, released in 2020

- Internet Protocol television

- Group of pictures

- Intra-frame coding

- Inter frame

References

- ^ MPEG-4, Advanced Video Coding (Part 10) (H.264) (Full draft). Sustainability of Digital Formats. Washington, D.C.: Library of Congress. December 5, 2011. Retrieved December 1, 2021.

- ^ "H.264 : Advanced video coding for generic audiovisual services". www.itu.int. Archived from the original on October 31, 2019. Retrieved November 22, 2019.

- ^ "Video Developer Report 2024/2025" (PDF). Bitmovin. December 2024.

- ^ "Delivering 8K using AVC/H.264". Mystery Box. Archived from the original on March 25, 2021. Retrieved August 23, 2017.

- ^ a b Wang, Hanli; Kwong, S.; Kok, C. (2006). "Efficient prediction algorithm of integer DCT coefficients for H.264/AVC optimization". IEEE Transactions on Circuits and Systems for Video Technology. 16 (4): 547–552. Bibcode:2006ITCSV..16..547W. doi:10.1109/TCSVT.2006.871390. S2CID 2060937.

- ^ a b Thomson, Gavin; Shah, Athar (2017). "Introducing HEIF and HEVC" (PDF). Apple Inc. Retrieved August 5, 2019.

- ^ Ozer, Jan (May 8, 2023), Via LA's Heath Hoglund Talks MPEG LA/Via Licensing Patent Pool Merger, StreamingMedia.com

- ^ "ITU-T Recommendations". ITU. Retrieved November 1, 2022.

- ^ "H.262 : Information technology — Generic coding of moving pictures and associated audio information: Video". Retrieved April 15, 2007.

- ^ Joint Video Team, ITU-T Web site.

- ^ "ITU-T Recommendation H.264 (05/2003)". ITU. May 30, 2003. Retrieved April 18, 2013.

- ^ "ITU-T Recommendation H.264 (05/2003) Cor. 1 (05/2004)". ITU. May 7, 2004. Retrieved April 18, 2013.

- ^ "ITU-T Recommendation H.264 (03/2005)". ITU. March 1, 2005. Retrieved April 18, 2013.

- ^ "ITU-T Recommendation H.264 (2005) Cor. 1 (09/2005)". ITU. September 13, 2005. Retrieved April 18, 2013.

- ^ a b "ITU-T Recommendation H.264 (2005) Amd. 1 (06/2006)". ITU. June 13, 2006. Retrieved April 18, 2013.

- ^ "ITU-T Recommendation H.264 (2005) Amd. 2 (04/2007)". ITU. April 6, 2007. Retrieved April 18, 2013.

- ^ "ITU-T Recommendation H.264 (11/2007)". ITU. November 22, 2007. Retrieved April 18, 2013.

- ^ "ITU-T Recommendation H.264 (2007) Cor. 1 (01/2009)". ITU. January 13, 2009. Retrieved April 18, 2013.

- ^ a b "ITU-T Recommendation H.264 (03/2009)". ITU. March 16, 2009. Retrieved April 18, 2013.

- ^ a b "ITU-T Recommendation H.264 (03/2010)". ITU. March 9, 2010. Retrieved April 18, 2013.

- ^ a b "ITU-T Recommendation H.264 (06/2011)". ITU. June 29, 2011. Retrieved April 18, 2013.

- ^ "ITU-T Recommendation H.264 (01/2012)". ITU. January 13, 2012. Retrieved April 18, 2013.

- ^ a b c d "ITU-T Recommendation H.264 (04/2013)". ITU. June 12, 2013. Retrieved June 16, 2013.

- ^ a b "ITU-T Recommendation H.264 (02/2014)". ITU. November 28, 2014. Retrieved February 28, 2016.

- ^ "ITU-T Recommendation H.264 (02/2016)". ITU. February 13, 2016. Retrieved June 14, 2017.

- ^ "ITU-T Recommendation H.264 (10/2016)". ITU. October 14, 2016. Retrieved June 14, 2017.

- ^ a b c "ITU-T Recommendation H.264 (04/2017)". ITU. April 13, 2017. See Tables A-1, A-6 and A-7 for the tabulated level-dependent capabilities. Retrieved June 14, 2017.

- ^ "H.264: Advanced video coding for generic audiovisual services - Version 26 (Edition 13)". www.itu.int. June 13, 2019. Archived from the original on November 4, 2021. Retrieved November 3, 2021.

- ^ "H.264: Advanced video coding for generic audiovisual services - Version 27 (Edition 14)". www.itu.int. August 22, 2021. Archived from the original on November 4, 2021. Retrieved November 3, 2021.

- ^ "H.264: Advanced video coding for generic audiovisual services - Version 28 (Edition 15)". www.itu.int. August 13, 2024. Retrieved February 12, 2025.

- ^ Wenger; et al. (February 2005). "RFC 3984 : RTP Payload Format for H.264 Video". Ietf Datatracker: 2. doi:10.17487/RFC3984.

- ^ "Which recording mode is equivalent to the image quality of the High Definition Video (HDV) format?". Sony eSupport. Archived from the original on November 9, 2017. Retrieved December 8, 2018.

- ^ "ATSC Standard A/72 Part 1: Video System Characteristics of AVC in the ATSC Digital Television System" (PDF). Archived from the original (PDF) on August 7, 2011. Retrieved July 30, 2011.

- ^ "ATSC Standard A/72 Part 2: AVC Video Transport Subsystem Characteristics" (PDF). Archived from the original (PDF) on August 7, 2011. Retrieved July 30, 2011.

- ^ "ATSC Standard A/153 Part 7: AVC and SVC Video System Characteristics" (PDF). Archived from the original (PDF) on July 26, 2011. Retrieved July 30, 2011.

- ^ a b "Sony introduces new XAVC recording format to accelerate 4K development in the professional and consumer markets". Sony. October 30, 2012. Retrieved November 1, 2012.

- ^ a b "Sony introduces new XAVC recording format to accelerate 4K development in the professional and consumer markets" (PDF). Sony. October 30, 2012. Archived from the original (PDF) on March 23, 2023. Retrieved November 1, 2012.

- ^ Steve Dent (October 30, 2012). "Sony goes Red-hunting with PMW-F55 and PMW-F5 pro CineAlta 4K Super 35mm sensor camcorders". Engadget. Retrieved November 5, 2012.

- ^ "F55 CineAlta 4K the future, ahead of schedule" (PDF). Sony. October 30, 2012. Archived from the original (PDF) on November 19, 2012. Retrieved November 1, 2012.

- ^ "Ultra-fast "SxS PRO+" memory cards transform 4K video capture". Sony. Archived from the original on March 8, 2013. Retrieved November 5, 2012.

- ^ "Ultra-fast "SxS PRO+" memory cards transform 4K video capture" (PDF). Sony. Archived from the original (PDF) on April 2, 2015. Retrieved November 5, 2012.

- ^ a b Stanković, Radomir S.; Astola, Jaakko T. (2012). "Reminiscences of the Early Work in DCT: Interview with K.R. Rao" (PDF). Reprints from the Early Days of Information Sciences. 60: 17. Retrieved October 13, 2019.

- ^ Kwon, Soon-young; Lee, Joo-kyong; Chung, Ki-dong (2005). "Half-Pixel Correction for MPEG-2/H.264 Transcoding". Image Analysis and Processing – ICIAP 2005. Lecture Notes in Computer Science. Vol. 3617. Springer Berlin Heidelberg. pp. 576–583. doi:10.1007/11553595_71. ISBN 978-3-540-28869-5.

- ^ Britanak, Vladimir; Yip, Patrick C.; Rao, K. R. (2010). Discrete Cosine and Sine Transforms: General Properties, Fast Algorithms and Integer Approximations. Elsevier. pp. ix, xiii, 1, 141–304. ISBN 9780080464640.

- ^ "The H.264/AVC Advanced Video Coding Standard: Overview and Introduction to the Fidelity Range Extensions" (PDF). Retrieved July 30, 2011.

- ^ a b c RFC 3984, p.3

- ^ Apple Inc. (March 26, 1999). "H.264 FAQ". Apple. Archived from the original on March 7, 2010. Retrieved May 17, 2010.

- ^ Karsten Suehring. "H.264/AVC JM Reference Software Download". Iphome.hhi.de. Retrieved May 17, 2010.

- ^ "TS 101 154 – V1.9.1 – Digital Video Broadcasting (DVB); Specification for the use of Video and Audio Coding in Broadcasting Applications based on the MPEG-2 Transport Stream" (PDF). Retrieved May 17, 2010.

- ^ "Decoding the HTML 5 video codec debate". Ars Technica. July 6, 2009. Retrieved January 12, 2011.

- ^ "Steve Ballmer, CEO Microsoft, interviewed at Gartner Symposium/ITxpo Orlando 2010". Gartnervideo. November 2010. Archived from the original on October 30, 2021. Retrieved January 12, 2011.

- ^ "HTML Video Codec Support in Chrome". January 11, 2011. Retrieved January 12, 2011.

- ^ "Video, Mobile, and the Open Web". March 18, 2012. Retrieved March 20, 2012.

- ^ "WebRTC enabled, H.264/MP3 support in Win 7 on by default, Metro UI for Windows 8 + more – Firefox Development Highlights". hacks.mozilla.org. mozilla. February 20, 2013. Retrieved March 15, 2013.

- ^ "Firefox — Notes (35.0)". Mozilla.

- ^ "Open-Sourced H.264 Removes Barriers to WebRTC". October 30, 2013. Archived from the original on July 6, 2015. Retrieved November 1, 2013.

- ^ a b "Cisco OpenH264 project FAQ". Retrieved September 26, 2021.

- ^ "OpenH264 Simplified BSD License". GitHub. October 27, 2013. Retrieved November 21, 2013.

- ^ "Video Interoperability on the Web Gets a Boost From Cisco's H.264 Codec". October 30, 2013. Retrieved November 1, 2013.

- ^ "Updated README · cisco/openh264@59dae50". GitHub.

- ^ "x264 4:0:0 (monochrome) encoding support", Retrieved 2019-06-05.

- ^ "x264 4:2:2 encoding support", Retrieved 2019-06-05.

- ^ "x264 4:4:4 encoding support", Retrieved 2019-06-05.

- ^ "x264 support for 9 and 10-bit encoding", Retrieved 2011-06-22.

- ^ "x264 replace High 4:4:4 profile lossless with High 4:4:4 Predictive", Retrieved 2011-06-22.

- ^ "Quick Reference Guide to generation Intel Core Processor Built-in Visuals". Intel Software Network. October 1, 2010. Retrieved January 19, 2011.

- ^ "Intel Quick Sync Video". www.intel.com. October 1, 2010. Retrieved January 19, 2011.

- ^ "Design-reuse.com". Design-reuse.com. January 1, 1990. Retrieved May 17, 2010.

- ^ "Category:DM6467 - Texas Instruments Embedded Processors Wiki". Processors.wiki.ti.com. July 12, 2011. Archived from the original on July 17, 2011. Retrieved July 30, 2011.

- ^ "Briefing portfolio" (PDF). www.mpegla.com. Archived from the original (PDF) on November 28, 2016. Retrieved December 1, 2016.

- ^ "OMS Video, A Project of Sun's Open Media Commons Initiative". Archived from the original on May 11, 2010. Retrieved August 26, 2008.

- ^ "Licensors Included in the AVC/H.264 Patent Portfolio License". MPEG LA. Archived from the original on October 11, 2021. Retrieved June 18, 2019.

- ^ a b "AVC/H.264 – Patent List". Via Licensing Alliance. Retrieved April 28, 2024.

- ^ "MPEG LA's AVC License Will Not Charge Royalties for Internet Video that is Free to End Users through Life of License" (PDF). MPEG LA. August 26, 2010. Archived from the original (PDF) on November 7, 2013. Retrieved August 26, 2010.

- ^ Hachman, Mark (August 26, 2010). "MPEG LA Cuts Royalties from Free Web Video, Forever". pcmag.com. Retrieved August 26, 2010.

- ^ "AVC FAQ". MPEG LA. August 1, 2002. Archived from the original on May 7, 2010. Retrieved May 17, 2010.

- ^ "United States Patent 9,356,620 Baese, et al". Retrieved August 1, 2022. with an earliest priority date of September 14, 2001 has a 2,998 day term extension.

- ^ a b c See Qualcomm Inc. v. Broadcom Corp., No. 2007-1545, 2008-1162 (Fed. Cir. December 1, 2008). For articles in the popular press, see signonsandiego.com, "Qualcomm loses its patent-rights case" and "Qualcomm's patent case goes to jury"; and bloomberg.com "Broadcom Wins First Trial in Qualcomm Patent Dispute"

- ^ "nokia h264".

Further reading

- Wiegand, Thomas; Sullivan, Gary J.; Bjøntegaard, Gisle; Luthra, Ajay (July 2003). "Overview of the H.264/AVC Video Coding Standard" (PDF). IEEE Transactions on Circuits and Systems for Video Technology. 13 (7): 560–576. Bibcode:2003ITCSV..13..560W. doi:10.1109/TCSVT.2003.815165. Archived from the original (PDF) on April 29, 2011. Retrieved January 31, 2011.

- Topiwala, Pankaj; Sullivan, Gary J.; Luthra, Ajay (August 2004). Tescher, Andrew G (ed.). "The H.264/AVC Advanced Video Coding Standard: Overview and Introduction to the Fidelity Range Extensions" (PDF). SPIE Applications of Digital Image Processing XXVII. Applications of Digital Image Processing XXVII. 5558: 454. Bibcode:2004SPIE.5558..454S. doi:10.1117/12.564457. S2CID 2308860. Retrieved January 31, 2011.

- Ostermann, J.; Bormans, J.; List, P.; Marpe, D.; Narroschke, M.; Pereira, F.; Stockhammer, T.; Wedi, T. (2004). "Video coding with H.264/AVC: Tools, Performance, and Complexity" (PDF). IEEE Circuits and Systems Magazine (FTP). pp. 7–28. doi:10.1109/MCAS.2004.1286980. S2CID 11105089. Retrieved January 31, 2011.[dead ftp link]

- Puri, Atul; Chen, Xuemin; Luthra, Ajay (October 2004). "Video coding using the H.264/MPEG-4 AVC compression standard" (PDF). Signal Processing: Image Communication. 19 (9): 793–849. doi:10.1016/j.image.2004.06.003. Retrieved March 30, 2011.

- Sullivan, Gary J.; Wiegand, Thomas (January 2005). "Video Compression—From Concepts to the H.264/AVC Standard" (PDF). Proceedings of the IEEE. 93 (1): 18–31. Bibcode:2005IEEEP..93...18S. doi:10.1109/jproc.2004.839617. S2CID 1362034. Retrieved January 31, 2011.

- Richardson, Iain E. G. (January 2011). "Learn about video compression and H.264". VCODEX. Vcodex Limited. Archived from the original on January 28, 2011. Retrieved January 31, 2011.

External links

- ITU-T publication page: H.264: Advanced video coding for generic audiovisual services

- MPEG-4 AVC/H.264 Information Doom9's Forum

- H.264/MPEG-4 Part 10 Tutorials (Richardson)

- "Part 10: Advanced Video Coding". ISO publication page: ISO/IEC 14496-10:2010 – Information technology — Coding of audio-visual objects.

- "H.264/AVC JM Reference Software". IP Homepage. Retrieved April 15, 2007.

- "JVT document archive site". Archived from the original on August 8, 2010. Retrieved May 6, 2007.

- "Publications". Thomas Wiegand. Retrieved June 23, 2007.

- "Publications". Detlev Marpe. Retrieved April 15, 2007.

- "Fourth Annual H.264 video codecs comparison". Moscow State University. (dated December 2007)

- "Discussion on H.264 with respect to IP cameras in use within the security and surveillance industries". April 3, 2009. (dated April 2009)

- "Sixth Annual H.264 video codecs comparison". Moscow State University. (dated May 2010)

- "SMPTE QuickGuide". ETC. May 14, 2018.

Advanced Video Coding

Introduction

Overview

Advanced Video Coding (AVC), also known as H.264 or MPEG-4 Part 10, is a block-oriented, motion-compensated video compression standard developed jointly by the ITU-T Video Coding Experts Group (VCEG) and the ISO/IEC Moving Picture Experts Group (MPEG).[4][5] It achieves high compression efficiency for digital video storage, transmission, and playback by reducing redundancy in video data while maintaining quality.[4] The standard supports a wide range of resolutions, from low-definition formats such as QCIF (176×144 pixels) to ultra-high-definition video of up to 8192×4320 pixels at its highest level (Level 6.2).[4][5]

At its core, AVC employs an integer-based 4×4 discrete cosine transform (DCT) for frequency-domain representation of residual data, intra-frame and inter-frame prediction to exploit spatial and temporal correlations, and entropy coding methods, including context-adaptive variable-length coding (CAVLC) and context-adaptive binary arithmetic coding (CABAC), for efficient bitstream representation.[5] These elements enable the codec to handle diverse applications, from video telephony to broadcast and streaming services.[4]

Released in May 2003, AVC became the most widely deployed video codec by the 2010s, powering Blu-ray discs, digital television, and online streaming platforms owing to its compression performance.[4][6] Compared to its predecessor MPEG-2, AVC provides up to 50% better compression efficiency at similar quality levels, allowing for higher-resolution video at lower bit rates.[5]

Naming Conventions

Advanced Video Coding (AVC) is known by several designations stemming from its joint development by the ITU-T Video Coding Experts Group (VCEG) and the ISO/IEC Moving Picture Experts Group (MPEG), resulting in primary names such as H.264 for the ITU-T recommendation and MPEG-4 Part 10 for the ISO/IEC standard.[7] The H.264 name follows the ITU-T's conventional numbering for video coding recommendations in the H.26x series, where it was officially titled "Advanced video coding for generic audiovisual services" upon its initial publication in May 2003.[8] Similarly, MPEG-4 Part 10, formalized as ISO/IEC 14496-10, integrates AVC into the broader MPEG-4 family of standards for coding audio-visual objects, emphasizing its role in multimedia applications beyond basic video compression.[9][10]

The multiplicity of names arises from this collaborative effort, with "Advanced Video Coding" (AVC) serving as a neutral shorthand that highlights improvements over prior codecs like H.263, such as enhanced compression efficiency for low-bitrate applications.[7] During development, the project was initially termed H.26L by VCEG starting in 1998, evolving through the Joint Video Team (JVT) formed in 2001, which produced a unified specification adopted by both organizations.[7] The "MPEG-4 AVC" variant underscores its alignment with the MPEG-4 ecosystem, while the full "MPEG-4 Part 10" avoids conflation with other parts, such as Part 2 (Visual), which employs simpler coding methods.[9] In technical literature and industry usage, AVC has become the predominant common name, unifying references to the standard across contexts despite its multiple aliases, including the developmental JVT label. This convergence reflects the standard's rapid adoption following its 2003 release.

AVC is sometimes confused with its successor, High Efficiency Video Coding (HEVC or H.265), which builds upon but is distinct from H.264, or with the earlier H.263 baseline for lower-complexity video telephony.[8]

History

Development Timeline

The development of Advanced Video Coding (AVC), also known as H.264 or MPEG-4 Part 10, began as a joint effort between the ITU-T Video Coding Experts Group (VCEG) and the ISO/IEC Moving Picture Experts Group (MPEG). In 1998, VCEG initiated the H.26L project as a long-term standardization effort to create a successor to earlier video coding standards like H.263, with the first test model (TML-1) released in August 1999.[2] In 2001, following MPEG's open call for technology in July, the two organizations formalized their collaboration by forming the Joint Video Team (JVT) in December, aiming to develop a unified standard for advanced video compression.[11] This partnership was driven by the need for a versatile codec capable of supporting emerging applications in telecommunications and multimedia.[5]

The collaborative process involved rigorous evaluation through core experiments conducted in 2001, where numerous proposals from global contributors were tested to identify optimal technologies. These experiments led to consensus on key elements, including variable block sizes for motion compensation, multiple prediction modes for intra and inter coding, and an integer-based transform for efficient residual representation.[2] Building on this foundation, the JVT produced the first committee draft in July 2002, followed by a final committee draft ballot in December 2002 that achieved technical freeze.[7] The standard reached final approval by ITU-T in May 2003 as Recommendation H.264 and by ISO/IEC in July 2003 as 14496-10, marking the completion of the initial version.
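
To make the integer-based transform mentioned above more concrete, the following is a minimal Python sketch of a 4×4 forward core transform using the integer matrix commonly given in descriptions of H.264/AVC. It is illustrative only: the names `CF`, `matmul`, `transpose`, and `forward_core_transform` are hypothetical helpers introduced here, and the scaling that real codecs fold into quantization is omitted.

```python
# Integer matrix commonly described as the H.264/AVC 4x4 forward core transform.
# Assumption: normalization is left to the quantizer and is not modeled here.
CF = [
    [1,  1,  1,  1],
    [2,  1, -1, -2],
    [1, -1, -1,  1],
    [1, -2,  2, -1],
]


def matmul(a, b):
    """Multiply two 4x4 matrices given as lists of lists of ints."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def transpose(m):
    """Return the transpose of a 4x4 matrix."""
    return [list(row) for row in zip(*m)]


def forward_core_transform(block):
    """Compute CF * block * CF^T using only integer arithmetic."""
    return matmul(matmul(CF, block), transpose(CF))


# A flat residual block: every sample has the same value.
flat_block = [[10] * 4 for _ in range(4)]
coeffs = forward_core_transform(flat_block)
```

For a flat block like the one above, all of the energy ends up in the top-left (DC) coefficient and every other coefficient is zero, which is the property that makes transformed residuals cheap to represent. Because only integer additions and multiplications by small constants are involved, an encoder and decoder built this way can stay bit-exact with each other.
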

Early adoption of AVC was propelled by its superior compression efficiency, offering up to 50% bit rate reduction compared to predecessors like H.263 and MPEG-2 while maintaining equivalent video quality, making it ideal for bandwidth-constrained environments.[5] Targeted applications included broadband internet streaming, DVD storage, and high-definition television (HDTV) broadcast, where its enhanced robustness and flexibility addressed limitations in prior standards.[2] Following the 2003 release, the first corrigendum was issued in May 2004 to make minor corrections and clarifications. By 2005, amendments had introduced features for improved error resilience in challenging transmission scenarios and high-fidelity profiles via the Fidelity Range Extensions (FRExt), expanding applicability to professional workflows.[2]

Key Extensions and Profiles