System call

In computing, a system call (syscall) is the programmatic way in which a computer program requests a service from the operating system[a] on which it is executed. This may include hardware-related services (for example, accessing a hard disk drive or accessing the device's camera), creation and execution of new processes, and communication with integral kernel services such as process scheduling. System calls provide an essential interface between a process and the operating system.

In most systems, system calls can only be made from userspace processes, while in some systems, OS/360 and successors for example, privileged system code also issues system calls.[1]

For embedded systems, system calls typically do not change the privilege mode of the CPU.

Privileges

The architecture of most modern processors, with the exception of some embedded systems, involves a security model. For example, the protection ring model specifies multiple privilege levels under which software may be executed: a program is usually limited to its own address space so that it cannot access or modify other running programs or the operating system itself, and is usually prevented from directly manipulating hardware devices (e.g. the frame buffer or network devices).

However, many applications need access to these components, so system calls are made available by the operating system to provide well-defined, safe implementations for such operations. The operating system executes at the highest level of privilege, and allows applications to request services via system calls, which are often initiated via interrupts. An interrupt automatically puts the CPU into some elevated privilege level and then passes control to the kernel, which determines whether the calling program should be granted the requested service. If the service is granted, the kernel executes a specific set of instructions over which the calling program has no direct control, returns the privilege level to that of the calling program, and then returns control to the calling program.

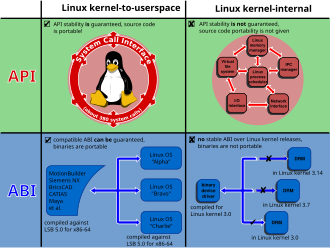

The library as an intermediary

Generally, systems provide a library or API that sits between normal programs and the operating system. On Unix-like systems, that API is usually part of an implementation of the C library (libc), such as glibc, that provides wrapper functions for the system calls, often named the same as the system calls they invoke. On Windows NT, that API is part of the Native API, in the ntdll.dll library; this is an undocumented API used by implementations of the regular Windows API and directly used by some system programs on Windows. The library's wrapper functions expose an ordinary function calling convention (a subroutine call on the assembly level) for using the system call, as well as making the system call more modular. Here, the primary function of the wrapper is to place all the arguments to be passed to the system call in the appropriate processor registers (and maybe on the call stack as well), and to set a unique system call number for the kernel to call. In this way the library, which exists between the OS and the application, increases portability.
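The following Python sketch makes the wrapper's setup step concrete. A wrapper records a call number, places the arguments in registers, and spills any surplus onto the stack. Nothing here enters the kernel. The register names arg0, arg1, and so on, and the helpers build_trap_frame and toy_write, are hypothetical. Only the number 1 (write on x86-64 Linux) comes from a real system call table.

```python
from typing import Dict, Sequence, Union

Frame = Dict[str, Union[int, list]]

def build_trap_frame(nr: int, args: Sequence[int], n_arg_regs: int = 6) -> Frame:
    """Toy model of a wrapper's setup step (no real kernel entry happens here).

    The call number goes in 'nr', the first n_arg_regs arguments go in the
    hypothetical registers arg0..arg{n-1}, and any remainder is spilled,
    in order, to a 'stack' list.
    """
    frame: Frame = {"nr": nr}
    for i, value in enumerate(args[:n_arg_regs]):
        frame[f"arg{i}"] = value
    frame["stack"] = list(args[n_arg_regs:])
    return frame

def toy_write(fd: int, buf_addr: int, count: int) -> Frame:
    """Hypothetical wrapper named after the call it wraps; 1 is write() on x86-64 Linux."""
    return build_trap_frame(1, [fd, buf_addr, count])

if __name__ == "__main__":
    print(toy_write(1, 0x1000, 5))
```

The default of six register arguments matches the limit on x86-64 Linux. Passing n_arg_regs=8 mimics the IA-64 convention, in which the first eight arguments travel in registers.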

The call to the library function itself does not cause a switch to kernel mode and is usually a normal subroutine call (using, for example, a "CALL" assembly instruction in some instruction set architectures (ISAs)). The actual system call does transfer control to the kernel (and is more implementation-dependent and platform-dependent than the library call abstracting it). For example, in Unix-like systems, fork and execve are C library functions that in turn execute instructions that invoke the fork and exec system calls. Making the system call directly in the application code is more complicated and may require embedded assembly code to be used (in C and C++), as well as requiring knowledge of the low-level binary interface for the system call operation, which may be subject to change over time and thus not be part of the application binary interface; the library functions are meant to abstract this away.
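On Linux, Python's ctypes module shows the difference between calling a wrapper and making a call "by number". This sketch assumes glibc or musl, whose generic syscall() helper takes the call number as its first argument. The number table is deliberately tiny: getpid is 39 on x86-64 and 172 in the generic table used by arm64 and 64-bit RISC-V. On any other platform the raw path returns None rather than guessing a number.

```python
import ctypes
import os
import platform
from typing import Optional

# getpid's number in the Linux tables for a few architectures; others are unsupported here.
_SYS_GETPID = {"x86_64": 39, "aarch64": 172, "riscv64": 172}

_libc = ctypes.CDLL(None, use_errno=True)  # C library symbols already loaded into the interpreter
_libc.syscall.restype = ctypes.c_long

def getpid_via_wrapper() -> int:
    """Ordinary function call into the C library's getpid() wrapper."""
    return _libc.getpid()

def getpid_via_raw_syscall() -> Optional[int]:
    """Invoke getpid by number through libc's generic syscall() helper."""
    nr = _SYS_GETPID.get(platform.machine())
    if platform.system() != "Linux" or nr is None:
        return None  # unknown platform: refuse rather than guess a number
    return _libc.syscall(ctypes.c_long(nr))

if __name__ == "__main__":
    print(os.getpid(), getpid_via_wrapper(), getpid_via_raw_syscall())
```

The raw path has to carry an architecture-specific table. The wrapper hides that table, which is the portability argument made above.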

On exokernel-based systems, the library is especially important as an intermediary. On exokernels, libraries shield user applications from the very low-level kernel API, and provide abstractions and resource management.

IBM's OS/360, DOS/360 and TSS/360 implement most system calls through a library of assembly language macros,[b] although there are a few services with a call linkage. This reflects their origin at a time when programming in assembly language was more common than high-level language usage. IBM system calls were therefore not directly executable by high-level language programs, but required a callable assembly language wrapper subroutine. Since then, IBM has added many services that can be called from high-level languages in, e.g., z/OS and z/VSE. In more recent releases of MVS/SP and in all later MVS versions, some system call macros generate Program Call (PC) instructions.

Examples and tools

On Unix, Unix-like and other POSIX-compliant operating systems, popular system calls are open, read, write, close, wait, exec, fork, exit, and kill. Many modern operating systems have hundreds of system calls. For example, Linux and OpenBSD each have over 300 different calls,[2][3] NetBSD has close to 500,[4] FreeBSD has over 500,[5] Windows has close to 2000, divided between win32k (graphical) and ntdll (core) system calls[6] while Plan 9 has 54.[7]
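Python's os module exposes thin wrappers that map closely onto several of these POSIX calls, so the file-related ones can be exercised directly. The following is a minimal sketch that assumes a POSIX system. The helper name roundtrip and the file name demo.bin are made up for illustration.

```python
import os
import tempfile

def roundtrip(data: bytes) -> bytes:
    """Create a file, write data, seek back, read it, then clean up: one wrapper per call."""
    tmpdir = tempfile.mkdtemp()
    path = os.path.join(tmpdir, "demo.bin")
    fd = os.open(path, os.O_CREAT | os.O_RDWR | os.O_TRUNC, 0o600)  # open(2)
    try:
        view = memoryview(data)
        while view:                         # write(2) may write fewer bytes than asked
            written = os.write(fd, view)
            view = view[written:]
        os.lseek(fd, 0, os.SEEK_SET)        # lseek(2): reposition to the start
        chunks = []
        while chunk := os.read(fd, 4096):   # read(2) returns b"" at end of file
            chunks.append(chunk)
        return b"".join(chunks)
    finally:
        os.close(fd)                        # close(2)
        os.unlink(path)                     # unlink(2)
        os.rmdir(tmpdir)                    # rmdir(2)

if __name__ == "__main__":
    print(roundtrip(b"hello, kernel\n"))
```

Tracing this script with a tool such as strace should show roughly one system call per commented line, plus the interpreter's own start-up calls.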

Tools such as strace, ftrace and truss can run a process from the start and report every system call it invokes. They can also attach to an already running process and intercept any system call it makes, provided the operation does not violate the user's permissions. This capability is itself usually implemented with system calls such as ptrace, or with system calls on files in procfs.

Typical implementations

Implementing system calls requires a transfer of control from user space to kernel space, which involves some sort of architecture-specific feature. A typical way to implement this is to use a software interrupt or trap. Interrupts transfer control to the operating system kernel, so software simply needs to load a register with the number of the desired system call and execute the software interrupt.
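The kernel side of this arrangement can be modeled in a few lines. In this toy Python sketch a dict stands in for the register file. The caller puts the call number in eax and arguments in ebx, ecx and edx, following the Linux i386 convention, and a table maps numbers to handlers. The handlers and the fake PID 4242 are hypothetical. Two details do follow Linux on i386: the numbers 4 (write) and 20 (getpid), and the practice of returning a negated errno value (such as -ENOSYS for an unknown number).

```python
import errno
from typing import Callable, Dict

Regs = Dict[str, int]

def sys_getpid(regs: Regs) -> int:
    return 4242  # hypothetical PID of the calling toy process

def sys_write(regs: Regs) -> int:
    # ebx = fd, ecx = buffer address, edx = count; this toy only reports the count
    if regs["ebx"] not in (1, 2):
        return -errno.EBADF
    return regs["edx"]

SYSCALL_TABLE: Dict[int, Callable[[Regs], int]] = {
    4: sys_write,    # __NR_write on Linux i386
    20: sys_getpid,  # __NR_getpid on Linux i386
}

def software_interrupt(regs: Regs) -> Regs:
    """Model of the kernel side of `int 0x80`: dispatch on eax, return the result in eax."""
    saved = dict(regs)                        # entry: copy the caller's registers
    handler = SYSCALL_TABLE.get(saved["eax"])
    saved["eax"] = handler(saved) if handler else -errno.ENOSYS
    return saved                              # exit: caller resumes with eax updated

if __name__ == "__main__":
    print(software_interrupt({"eax": 20, "ebx": 0, "ecx": 0, "edx": 0}))
```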

This is the only technique provided for many RISC processors, but CISC architectures such as x86 support additional techniques. For example, the x86 instruction set contains the instructions SYSCALL/SYSRET and SYSENTER/SYSEXIT (these two mechanisms were independently created by AMD and Intel, respectively, but in essence they do the same thing). These are "fast" control transfer instructions that are designed to quickly transfer control to the kernel for a system call without the overhead of an interrupt.[8] Linux 2.5 began using this on the x86, where available; formerly it used the INT instruction, where the system call number was placed in the EAX register before interrupt 0x80 was executed.[9][10]
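The effect of SYSCALL and SYSRET on processor state can be sketched as pure functions. On x86-64, SYSCALL does four things:

- stores the address of the following instruction in RCX;
- stores RFLAGS in R11;
- clears the RFLAGS bits selected by the IA32_FMASK MSR;
- jumps to the address held in IA32_LSTAR at privilege level 0.

SYSRET reverses this. In the model below, the LSTAR entry address and the FMASK value (which clears only the interrupt flag) are hypothetical. Segment and stack handling are omitted: SYSCALL does not switch stacks, and the kernel's entry code does that.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class CpuState:
    rip: int     # address of the instruction about to execute
    rflags: int
    rcx: int
    r11: int
    cpl: int     # current privilege level: 3 = user, 0 = kernel

LSTAR_ENTRY = 0xFFFF_FFFF_8100_0000  # hypothetical value the OS wrote to IA32_LSTAR
FMASK_VALUE = 0x200                  # hypothetical IA32_FMASK: clear IF (bit 9) on entry
SYSCALL_LEN = 2                      # SYSCALL encodes as 0F 05

def syscall(state: CpuState) -> CpuState:
    """SYSCALL executed at state.rip: save return RIP in RCX, RFLAGS in R11, enter CPL 0."""
    return replace(state,
                   rcx=state.rip + SYSCALL_LEN,
                   r11=state.rflags,
                   rflags=state.rflags & ~FMASK_VALUE,
                   rip=LSTAR_ENTRY,
                   cpl=0)

def sysret(state: CpuState) -> CpuState:
    """SYSRET: resume at RCX with RFLAGS from R11 (real hardware also sanitizes some bits)."""
    return replace(state, rip=state.rcx, rflags=state.r11, cpl=3)
```

Because SYSCALL overwrites RCX and R11, the x86-64 Linux convention passes the fourth system call argument in R10 instead of RCX.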

An older mechanism is the call gate, originally used in Multics and later available on other architectures such as the Intel x86. It allows a program to call a kernel function directly using a safe control transfer mechanism that the operating system sets up in advance. This approach has been unpopular on x86, presumably for two reasons. First, it requires a far call (a call to a procedure located in a different segment than the current code segment[11]), which relies on x86 memory segmentation and is therefore not portable. Second, the faster instructions mentioned above exist.

On the IA-64 architecture, the EPC (Enter Privileged Code) instruction is used. The first eight system call arguments are passed in registers, and the rest are passed on the stack.
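For comparison, the Linux register conventions on several other architectures fit in a small lookup table. The entries below summarize the tables in the syscall(2) manual page, covering the entry instruction and the registers that hold the call number, the arguments and the return value. The describe() helper is hypothetical.

```python
from typing import Dict, NamedTuple, Tuple

class SyscallABI(NamedTuple):
    instruction: str           # entry instruction
    number_reg: str            # register holding the system call number
    arg_regs: Tuple[str, ...]  # argument registers, in order
    return_reg: str            # register holding the result on return

LINUX_SYSCALL_ABI: Dict[str, SyscallABI] = {
    "i386": SyscallABI("int $0x80", "eax", ("ebx", "ecx", "edx", "esi", "edi", "ebp"), "eax"),
    "x86_64": SyscallABI("syscall", "rax", ("rdi", "rsi", "rdx", "r10", "r8", "r9"), "rax"),
    "arm/EABI": SyscallABI("swi 0x0", "r7", ("r0", "r1", "r2", "r3", "r4", "r5", "r6"), "r0"),
    "aarch64": SyscallABI("svc #0", "x8", ("x0", "x1", "x2", "x3", "x4", "x5"), "x0"),
    "riscv64": SyscallABI("ecall", "a7", ("a0", "a1", "a2", "a3", "a4", "a5"), "a0"),
}

def describe(arch: str, nr: int, nargs: int) -> str:
    """Spell out how a call with nargs register arguments would be issued on arch."""
    abi = LINUX_SYSCALL_ABI[arch]
    if nargs > len(abi.arg_regs):
        raise ValueError(f"{arch} passes at most {len(abi.arg_regs)} arguments in registers")
    regs = ", ".join(abi.arg_regs[:nargs]) or "(none)"
    return f"args in {regs}; {nr} in {abi.number_reg}; execute {abi.instruction}"

if __name__ == "__main__":
    print(describe("x86_64", 1, 3))
```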

In the IBM System/360 mainframe family, and its successors, a Supervisor Call instruction (SVC), with the number in the instruction rather than in a register, implements a system call for legacy facilities in most of[c] IBM's own operating systems, and for all system calls in Linux. In later versions of MVS, IBM uses the Program Call (PC) instruction for many newer facilities. In particular, PC is used when the caller might be in Service Request Block (SRB) mode.

The PDP-11 minicomputer used the EMT, TRAP and IOT instructions, which, similar to the IBM System/360 SVC and x86 INT, put the code in the instruction; they generate interrupts to specific addresses, transferring control to the operating system. The VAX 32-bit successor to the PDP-11 series used the CHMK, CHME, and CHMS instructions to make system calls to privileged code at various levels; the code is an argument to the instruction.

Categories of system calls

System calls can be grouped roughly into six major categories:[12]

- Process control
  - create process (for example, fork on Unix-like systems, or NtCreateProcess in the Windows NT Native API)
  - terminate process
  - load, execute
  - get/set process attributes
  - wait for time, wait event, signal event
  - allocate and free memory
- File management
  - create file, delete file
  - open, close
  - read, write, reposition
  - get/set file attributes
- Device management
  - request device, release device
  - read, write, reposition
  - get/set device attributes
  - logically attach or detach devices
- Information maintenance
  - get/set total system information (including time, date, computer name, enterprise etc.)
  - get/set process, file, or device metadata (including author, opener, creation time and date, etc.)
- Communication
  - create, delete communication connection
  - send, receive messages
  - transfer status information
  - attach or detach remote devices
- Protection
  - get/set file permissions
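One way to make this grouping operational is a lookup table from call names to categories. The assignment below is an illustrative sketch, not a standard classification. The example calls are familiar POSIX and Linux names chosen for each category. Calls such as read and write deliberately appear under both file and device management, as in the list above.

```python
from typing import Dict, FrozenSet, List

# Illustrative assignment of familiar POSIX/Linux call names to the six categories.
CATEGORIES: Dict[str, FrozenSet[str]] = {
    "Process control": frozenset({"fork", "execve", "exit", "waitpid", "kill", "mmap", "munmap", "nanosleep"}),
    "File management": frozenset({"open", "close", "read", "write", "lseek", "unlink", "stat"}),
    "Device management": frozenset({"ioctl", "read", "write"}),
    "Information maintenance": frozenset({"getpid", "time", "uname", "gettimeofday"}),
    "Communication": frozenset({"pipe", "socket", "connect", "sendmsg", "recvmsg", "shmget"}),
    "Protection": frozenset({"chmod", "fchmod", "chown", "umask"}),
}

def categorize(name: str) -> List[str]:
    """Return every category whose example set contains the call name, in table order."""
    return [cat for cat, calls in CATEGORIES.items() if name in calls]

if __name__ == "__main__":
    print(categorize("read"))
```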

Processor mode and context switching

System calls in most Unix-like systems are processed in kernel mode, which is accomplished by changing the processor execution mode to a more privileged one, but no process context switch is necessary – although a privilege context switch does occur. The hardware sees the world in terms of the execution mode according to the processor status register, and processes are an abstraction provided by the operating system. A system call does not generally require a context switch to another process; instead, it is processed in the context of whichever process invoked it.[13][14]
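A system call runs in the context of the process that issues it, so the same getpid() call returns different answers in a parent and in the child it forks. The following is a minimal POSIX-only Python sketch of this. The helper name pid_seen_by_child is hypothetical. Along the way it exercises pipe, fork, write, read, exit and waitpid.

```python
import os

def pid_seen_by_child():
    """Fork a child that reports its own getpid() result back through a pipe."""
    r, w = os.pipe()                       # pipe(2)
    pid = os.fork()                        # fork(2): returns 0 in the child
    if pid == 0:                           # child process
        try:
            os.close(r)
            os.write(w, str(os.getpid()).encode())  # getpid(2) runs in the child's context
        finally:
            os._exit(0)                    # exit(2) immediately, skipping Python cleanup
    os.close(w)
    data = b""
    while chunk := os.read(r, 64):         # read(2) until the child's end is closed
        data += chunk
    os.close(r)
    _, status = os.waitpid(pid, 0)         # reap the child
    return pid, int(data.decode()), os.waitstatus_to_exitcode(status)

if __name__ == "__main__":
    print(os.getpid(), pid_seen_by_child())
```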

In a multithreaded process, system calls can be made from multiple threads. The handling of such calls is dependent on the design of the specific operating system kernel and the application runtime environment. The following list shows typical models followed by operating systems:[15][16]

- Many-to-one model: All system calls from any user thread in a process are handled by a single kernel-level thread. This model has a serious drawback – any blocking system call (like awaiting input from the user) can freeze all the other threads. Also, since only one thread can access the kernel at a time, this model cannot make use of multiple processor cores.

- One-to-one model: Every user thread gets attached to a distinct kernel-level thread during a system call. This model solves the above problem of blocking system calls. It is found in all major Linux distributions, macOS, iOS, recent Windows and Solaris versions.

- Many-to-many model: In this model, a pool of user threads is mapped to a pool of kernel threads. All system calls from a user thread pool are handled by the threads in their corresponding kernel thread pool.

- Hybrid model: This model implements both many-to-many and one-to-one models depending upon the choice made by the kernel. This is found in old versions of IRIX, HP-UX and Solaris.
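On Linux and macOS, CPython's threading module uses native threads, one kernel thread per Python thread, and os.read releases the interpreter lock while it waits. That makes the one-to-one behaviour easy to observe. In the sketch below, a reader thread blocks inside read(2) on an empty pipe while the main thread keeps working and then supplies the data. Under a pure many-to-one model, the reader's blocking read would stall the whole process, so the main thread would never reach its write and the program would deadlock. The function name is hypothetical.

```python
import os
import threading

def blocked_reader_does_not_stall_main(work_items: int = 1000):
    """Return (work done by the main thread, bytes the blocked reader finally received)."""
    r, w = os.pipe()
    received = []
    ready = threading.Event()

    def reader() -> None:
        ready.set()
        received.append(os.read(r, 100))  # blocks in read(2) until data arrives

    t = threading.Thread(target=reader)
    t.start()
    ready.wait()
    done = 0
    for _ in range(work_items):   # main thread keeps running while the reader waits
        done += 1
    os.write(w, b"ping")          # only now can the reader's read(2) return
    t.join(timeout=5)
    os.close(r)
    os.close(w)
    return done, received[0] if received else None

if __name__ == "__main__":
    print(blocked_reader_does_not_stall_main())
```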

References

- ^ IBM (March 1967). "Writing SVC Routines". IBM System/360 Operating System System Programmer's Guide (PDF). Third Edition. pp. 32–36. C28-6550-2.

- ^ "syscalls(2) - Linux manual page".

- ^ OpenBSD (14 September 2013). "System call names (kern/syscalls.c)". BSD Cross Reference.

- ^ NetBSD (17 October 2013). "System call names (kern/syscalls.c)". BSD Cross Reference.

- ^ "FreeBSD syscalls.c, the list of syscall names and IDs".

- ^ Mateusz "j00ru" Jurczyk (5 November 2017). "Windows WIN32K.SYS System Call Table (NT/2000/XP/2003/Vista/2008/7/8/10)".

- ^ "sys.h". Plan 9 from Bell Labs. Archived from the original on 8 September 2023, the list of syscall names and IDs.

- ^ "SYSENTER". OSDev wiki. Archived from the original on 8 November 2023.

- ^ Anonymous (19 December 2002). "Linux 2.5 gets vsyscalls, sysenter support". KernelTrap. Retrieved 1 January 2008.

- ^ Manu Garg (2006). "Sysenter Based System Call Mechanism in Linux 2.6".

- ^ "Liberation: x86 Instruction Set Reference". renejeschke.de. Retrieved 4 July 2015.

- ^ Silberschatz, Abraham (2018). Operating System Concepts. Peter B Galvin; Greg Gagne (10th ed.). Hoboken, NJ: Wiley. p. 67. ISBN 9781119320913. OCLC 1004849022.

- ^ Bach, Maurice J. (1986), The Design of the UNIX Operating System, Prentice Hall, pp. 15–16.

- ^ Elliot, John (2011). "Discussion of system call implementation at ProgClub including quote from Bach 1986". Archived from the original on 24 July 2012. Retrieved 1 October 2011.

- ^ "Threads".

- ^ "Threading Models" (PDF).

External links

- A list of modern Unix-like system calls

- Interactive Linux kernel map with main API functions and structures, PDF version

- Linux system calls – system calls for Linux kernel 2.2, with IA-32 calling conventions

- How System Calls Work on Linux/i86 (1996, based on the 1993 0.99.2 kernel)

- Sysenter Based System Call Mechanism in Linux 2.6 (2006)

- "Kernel command using Linux system calls". IBM developerWorks. 10 February 2010. Archived from the original on 11 February 2017.

- Choudhary, Amit; HOWTO for Implementing a System Call on Linux 2.6

- Jorrit N. Herder, Herbert Bos, Ben Gras, Philip Homburg, and Andrew S. Tanenbaum, Modular system programming on Minix 3, ;login: 31, no. 2 (April 2006); 19–28, accessed 5 March 2018

- A simple open Unix Shell in C language – examples on System Calls under Unix

- Inside the Native API – Windows NT Native API, including system calls

- Gulbrandsen, John; System Call Optimization with the SYSENTER Instruction, CodeGuru.com, 8 October 2004

- osdev wiki

System call

A program invokes a system call by executing a dedicated instruction, such as ecall in RISC-V or syscall in x86-64, that triggers a controlled transition from user mode to kernel mode.[2][3] The kernel then validates the request, executes the operation using arguments passed through CPU registers, and returns control to the user program with the results, restoring the saved state.[1] This process abstracts complex kernel functionality into a standardized API, with each operating system defining its own set of available calls, such as the POSIX-compliant ones in Unix-like systems.[4]

Common examples include fork() for creating child processes, exec() for loading new programs, read() and write() for file I/O, and waitpid() for process synchronization, all of which are essential for application development and system resource management.[1] By encapsulating hardware interactions, system calls enable portability across architectures while enforcing isolation between user code and the kernel, preventing unauthorized access or crashes from propagating.[2]

Fundamentals

Definition and Role

A system call is a programmatic mechanism by which a computer program running in user space requests a service from the operating system's kernel, such as hardware abstraction, resource allocation, or process management.[5] These requests allow applications to access kernel-managed resources without needing to understand underlying hardware details, providing a standardized interface for system interactions.[6] The core role of system calls is to enable the safe and controlled execution of privileged operations, such as input/output (I/O) handling and memory management, while preventing user programs from gaining direct access to the kernel or hardware, which could compromise system stability.[7] This mediation contrasts with bare-metal environments without an operating system, where programs interact directly with hardware, often at the risk of errors or conflicts.

System calls originated to enforce security boundaries and abstraction layers in operating systems, ensuring that only authorized operations can affect critical resources.[8] In modern multitasking operating systems such as Unix, Linux, and Windows, system calls are essential for maintaining the distinction between user space (where applications run with limited privileges) and kernel space (a protected mode for system-wide operations), thereby supporting secure resource sharing among multiple processes.[9] For instance, the Linux kernel 6.17 supports 356 system calls on the x86_64 architecture as of October 2025. That figure illustrates the scale of services available, although the number varies across operating systems and versions.[10]

Historical Evolution

The concept of system calls emerged in the 1960s with early mainframe operating systems, where they served as a mechanism to transition from user mode to privileged supervisor mode for accessing kernel services. IBM's OS/360, released in 1966, used the System/360 architecture's Supervisor Call (SVC) instruction as a dedicated hardware mechanism to invoke these privileged operations. This enabled controlled access to system resources like I/O and memory management while maintaining protection boundaries.[11] A parallel line of work on protected procedure calls appeared in the Multics operating system, developed from 1965 onward, which used ring-based protection to enforce secure transitions during procedure invocations, laying groundwork for modular and secure system interfaces.[12]

In the 1970s, Unix on Digital Equipment Corporation's PDP-11 minicomputers standardized system call interfaces through trap instructions, simplifying the invocation of kernel services from user programs. The PDP-11's TRAP instruction triggered a software interrupt to enter the kernel. The kernel then dispatched operations such as file access and process creation through a numbered table of services, which promoted a clean separation between user and kernel code.[13] This approach carried over to Unix ports on VAX systems, where similar trap mechanisms ensured compatibility and efficiency in multi-user environments, influencing the evolution toward portable and standardized OS designs. The transition from low-level assembly macros, used initially to wrap these traps, to support in high-level languages like C further democratized system call usage, allowing developers to abstract hardware details while retaining direct kernel access.
The 1980s saw the formalization of system call portability through POSIX standards. The IEEE's P1003.1 effort started in 1984 and culminated in the 1988 standard, which defined a core set of system calls for process, file, and signal management to enable application compatibility across Unix-like systems.[14] Concurrently, Microsoft's MS-DOS relied on software interrupts like INT 21h to dispatch a wide range of services, from disk I/O to console output, providing a simple but non-portable interface for early personal computing. By the 1990s, Windows NT had shifted to a more robust Native API. In it, functions prefixed Nt or Zw in ntdll.dll serve as the entry points for system calls into the kernel, supporting protected subsystems and enhancing stability over DOS's interrupt-based model.[15]

Post-2000 developments in Windows emphasized security mitigations around system calls. Kernel patch protection, first shipped in 2005 in x64 editions of Windows XP and Windows Server 2003 and continued in 64-bit Windows Vista, prevents unauthorized modification of kernel structures such as the system service dispatch table. Address space layout randomization, introduced in Windows Vista (2007), makes the locations of system code harder for exploits to predict. In parallel, Linux expanded its syscall table to address performance and security needs. For instance, io_uring was introduced in kernel 5.1 (May 2019) as an asynchronous I/O interface using shared ring buffers to reduce syscall overhead for high-throughput applications.[16] eBPF-related enhancements build on the bpf() syscall, available since kernel 3.18 and significantly extended in the 2020s. They enabled programmable kernel hooks for networking and tracing without module loading.[17]

Virtualization also shaped the interface in the 2010s. Hypervisors such as KVM and Xen rely on hypercalls, which are analogous to system calls but carry guest-to-hypervisor communication, to manage virtual resources efficiently. Innovations like hyperupcalls reverse the flow for event notifications, improving scalability in cloud environments.[20] Post-2020, confidential computing integrations added kernel support, including ioctls and modules, for trusted execution environments, such as those supporting attestation and memory encryption in x86 virtualization under technologies like Intel TDX and AMD SEV-SNP.[18][19] These milestones collectively shifted system calls from hardware-specific traps to abstracted, secure, and performant interfaces supporting modern distributed systems.

Mechanism of Operation

Privilege Levels and Modes

System calls depend on hardware-enforced privilege levels to isolate user-mode processes from kernel operations, preventing direct manipulation of critical system resources and ensuring kernel integrity. In the x86 architecture, privilege levels are organized into four rings, with ring 0 reserved for kernel-mode execution—granting full access to hardware instructions and memory—and ring 3 for user-mode applications, which are restricted from privileged operations to avoid unauthorized access or system compromise.[21] This ring-based model enforces protection by checking the current privilege level before allowing access to sensitive instructions, such as those modifying page tables or I/O ports.[21] Comparable structures appear in other instruction set architectures to achieve similar isolation. ARM processors utilize exception levels, where EL0 executes unprivileged user-mode code for applications, while EL1 runs the kernel with elevated privileges to manage system resources securely.[22] In RISC-V, user mode (U-mode) confines application execution to limited capabilities, supervisor mode (S-mode) supports operating system tasks like virtual memory management, and machine mode (M-mode) provides the highest privilege for low-level hardware control, all designed to block unauthorized resource access through mode-specific register and instruction restrictions.[23] The transition to kernel mode during a system call temporarily elevates privileges, enabling controlled access to hardware while user mode remains barred from direct interactions, such as memory-mapped I/O or interrupt handling. This elevation occurs via architecture-specific mechanisms, like the SYSCALL instruction in x86 or exception raising in ARM, which switch the processor state without exposing kernel internals to user code.[21][22] Privilege levels integrate with broader security models, including capability-based and access control list (ACL) approaches, where hardware modes underpin enforcement. 
Capability-based systems, pioneered by Dennis and Van Horn, rely on tamper-proof tokens that confer specific rights, with privilege rings ensuring only kernel-mode code can validate or revoke them.[24] In contrast, ACL systems, as detailed in Saltzer's analysis of Multics, attach permission lists to objects, enforced by hardware privileges that restrict user-mode alterations to these lists.[12] Core enforcement tools include page tables, which validate memory accesses against the current privilege level to prevent user-mode violations, and segment descriptors in x86, which specify access rights and boundaries per memory segment.[21] Historical precedents include supervisor mode in IBM's System/360 architecture, which separated privileged kernel operations from unprivileged problem-state user programs, establishing early hardware-based protection for mainframe environments.[25] Modern enhancements bolster these modes against evolving threats. Intel's Control-flow Enforcement Technology (CET), deployed in processors from the early 2020s, introduces shadow stacks to safeguard return addresses during privilege transitions in system calls, countering control-flow hijacking attacks without altering core ring semantics.[26] Complementing this, Linux's seccomp framework filters system calls using BPF programs, allowing kernel-mode enforcement of user-defined restrictions to limit privilege elevations and reduce attack surfaces in contemporary deployments.[27]

Context Switching and Kernel Entry

When a user program issues a system call, it executes a special instruction that triggers a trap or software interrupt. The processor switches to kernel mode and preserves a minimal amount of user state: at least the address to return to and, depending on the mechanism, the flags register and user stack pointer.[28] The kernel's entry routine then saves the remaining user registers on the per-process kernel stack. It also records the system call number and parameters passed in registers, validates them to reject invalid or malicious requests, and dispatches the appropriate kernel handler.[29] This process ensures that the kernel operates with elevated privileges while maintaining isolation from user space. A full context switch during thread or process scheduling saves the entire process state and loads another. A system call entry instead reuses the invoking process's address space, page tables, and thread context, avoiding the higher overhead of scheduler involvement.[30] Upon completion of the kernel work, control returns to user mode: the saved registers are restored and execution resumes at the point following the system call instruction. This step typically uses specialized return mechanisms that efficiently handle the mode transition.[31] The latency of this kernel entry and exit is a key performance factor, typically ranging from 50 to 700 CPU cycles on modern x86 processors for simple calls. It is influenced by factors like register save/restore operations and branch prediction.[32][33] Optimizations, such as maintaining separate user and kernel stacks per process to minimize stack pointer adjustments and caching frequent validation paths, help reduce this overhead in production kernels.
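On the way back to user space, Linux also encodes failure in the return value itself. A C library wrapper then translates that value into the familiar -1 plus errno, which the Python sketch below mirrors.

- The first function is a pure model of the translation. The range [-4095, -1] is the one glibc uses to recognize error returns. The function name is hypothetical.
- The second function checks the real C library through ctypes, assuming Linux with glibc or musl. There, close(-1) returns -1 and leaves EBADF in errno.

```python
import ctypes
import errno
from typing import Tuple

def libc_style_return(raw: int) -> Tuple[int, int]:
    """Translate a raw Linux kernel return value into (return value, errno) like a C wrapper."""
    if -4095 <= raw <= -1:   # negated error code, e.g. -13 for EACCES
        return -1, -raw
    return raw, 0            # success: the value passes through, errno is left alone

_libc = ctypes.CDLL(None, use_errno=True)

def close_bad_fd() -> Tuple[int, int]:
    """Ask the real C library to close an invalid descriptor and report (result, errno)."""
    ctypes.set_errno(0)
    rc = _libc.close(-1)
    return rc, ctypes.get_errno()

if __name__ == "__main__":
    print(libc_style_return(-13), close_bad_fd())
```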
On Linux, a failing system call returns a negative value representing the negated errno code (e.g., -13 for EACCES) directly to its user-space caller. That caller is typically a C library wrapper, which stores the positive code in the thread-local errno variable and returns -1 to the application. In cases of interruption or unrecoverable issues, the kernel may instead deliver a signal to the process. Contemporary containerized cloud environments of the 2020s add a further layer. In environments that employ gVisor for enhanced isolation, system calls from containerized workloads are intercepted by a user-space kernel (gVisor's Sentry). The Sentry services most calls itself and issues only a restricted set of calls to the host kernel, changing the entry flow for security without altering the interface the workload sees.[34]

Implementation Approaches

Interrupt-Based Methods

Interrupt-based methods for system calls rely on software-generated exceptions to transition from user mode to kernel mode, allowing user programs to request operating system services securely. In these approaches, a dedicated instruction triggers an interrupt, which the processor handles by saving the current context, switching privilege levels, and invoking a kernel-resident handler. The handler then examines a system call number passed via a register or immediate value to dispatch the appropriate kernel routine, restoring user context upon completion. This mechanism ensures isolation while providing a uniform entry point for kernel services across various architectures.[35]

On x86 architectures, the INT n instruction serves this purpose, where n specifies the interrupt vector. For system calls, INT 0x80 was commonly used in early implementations: the call number is loaded into the EAX register, and the interrupt descriptor table (IDT) routes control to the kernel's handler for dispatch. Similarly, ARM processors employ the Supervisor Call (SVC) instruction, often encoded as SVC #0 with the system call number in a general-purpose register like R7 (in AArch32) or X8 (in AArch64). The instruction prompts the exception vector table to route control to the SVC handler for parameter validation and execution. These instructions emulate hardware interrupts but are explicitly invoked by software, incurring the full overhead of exception processing, including stack frame setup and privilege level changes.[35][36]

Historically, interrupt-based methods trace back to mainframe systems such as the IBM System/360. There, the SVC instruction (introduced in 1964) allowed problem-state programs to request supervisor services by specifying a function code in the instruction's 8-bit immediate field. The operating system used it for tasks like I/O initiation and memory allocation.
In the PDP-11 minicomputer family, developed by Digital Equipment Corporation in the late 1960s, DEC's own operating systems such as RT-11 and RSX-11 used the EMT (emulator trap) instruction for system calls. UNIX used the TRAP instruction (written sys in UNIX assembly). Both vector to kernel routines through fixed memory locations for service dispatch. In the 1980s, MS-DOS used INT 21h as its primary interface, where subfunction codes in the AH register selected among more than 100 services, from file operations to console I/O, making it a cornerstone of PC software development. In Linux's initial x86 ports during the 1990s, INT 0x80 served as the standard entry point for ia32 binaries, with the kernel's ia32_syscall handler providing compatibility on 64-bit kernels. Because of its performance limitations, it was largely superseded by faster alternatives during the 2000s, although it remains supported.[37][38][39]

The simplicity of interrupt-based methods offers advantages in portability and ease of implementation. They use existing hardware exception infrastructure without requiring specialized instructions, making them suitable for early or resource-constrained systems. However, full interrupt handling, including mandatory context saves, IDT lookups, and mode switches, makes them slower than modern optimized paths. Benchmarks show INT 0x80 can be several times costlier than fast syscall alternatives on contemporary hardware. This latency stems from the general-purpose nature of interrupts, designed for asynchronous events rather than frequent, synchronous kernel requests.[35][40] Despite their decline in general-purpose computing, interrupt-based methods persist in legacy systems, real-time operating systems (RTOS), and embedded environments as of 2025, particularly in IoT devices using microcontrollers like ARM Cortex-M.
For instance, FreeRTOS employs the SVC instruction to initiate privileged operations and start the scheduler, ensuring deterministic behavior in resource-limited settings where simplicity outweighs raw speed, while avoiding the complexity of dedicated syscall instructions. These uses maintain compatibility with older hardware and prioritize reliability in safety-critical applications over throughput.

Fast Syscall Instructions

Fast syscall instructions are hardware-optimized mechanisms for invoking system calls. They are designed to minimize the overhead of the transition from user mode to kernel mode compared with traditional interrupt-based approaches. They enable direct, low-latency entry into the operating system kernel by leveraging specialized processor features, such as model-specific registers (MSRs), to preconfigure the kernel entry point and avoid the full interrupt processing pipeline. Introduced primarily in the late 1990s and early 2000s, they address performance bottlenecks in high-frequency system call scenarios, such as server workloads or real-time applications, by reducing mode-switch times.[41][42][43]

In the x86 architecture, the SYSENTER and SYSCALL instructions provide the core of fast syscall implementations. SYSENTER, introduced by Intel in the Pentium II processor in 1997, pairs with SYSEXIT for rapid kernel entry and return. It uses MSRs like IA32_SYSENTER_CS, IA32_SYSENTER_ESP, and IA32_SYSENTER_EIP to store segment, stack pointer, and instruction pointer values for immediate setup. SYSCALL was originally specified by AMD in 1997 and integrated into the x86-64 extensions that shipped in 2003. It operates similarly, but in 64-bit mode it loads the kernel entry point directly from the IA32_LSTAR MSR. It saves the return address in RCX and RFLAGS in R11 so that SYSRET can restore both on exit. These instructions bypass the interrupt descriptor table (IDT) lookup and vectoring overhead, enabling faster privilege level changes without emulating a full trap.[41][44][42] Across other architectures, equivalent instructions follow a similar model of direct exception generation.
On ARM processors, the analogous Supervisor Call (SVC) instruction raises a synchronous exception that switches directly to the kernel's exception level (EL1 on 64-bit ARM, corresponding to Supervisor mode on 32-bit ARM); the Vector Base Address Register (VBAR) holds the base address of the exception vector table that routes execution to the kernel handler. In RISC-V, the ECALL (Environment Call) instruction traps from user mode (U-mode) to supervisor mode (S-mode), where the handler address is configured in the stvec CSR as described in the privileged architecture specification; under the Linux ABI, arguments are passed in registers a0–a5 and the syscall number in a7. These mechanisms differ in detail but share the same low-overhead design.[45][46][47]

Fast syscall instructions were widely adopted by major operating systems during the 2000s. From version 2.6 (released in December 2003), Linux used SYSENTER or SYSCALL on supporting 32-bit x86 hardware through the vsyscall page mechanism (later the vDSO), and 64-bit x86 kernels have always entered through SYSCALL. In the 6.x series (2022 onward), these instructions are the standard entry path on x86, while the ARM and RISC-V ports use SVC and ECALL; the x86 entry code (e.g., entry_SYSCALL_64 in arch/x86/entry/entry_64.S) saves user registers and dispatches to the system call table. Microsoft Windows replaced the older INT 2Eh interrupt with SYSENTER in 32-bit Windows XP, and its x64 editions use SYSCALL in the Native API stubs for improved efficiency. Benchmarks on modern hardware show that fast syscalls significantly reduce latency compared with interrupt-based methods, though exact figures vary by processor generation, workload, and enabled mitigations.[48][49][50] Recent enhancements have focused on security without sacrificing speed.
Post-2020 AMD processors, such as those in the Zen 3 and Zen 4 families, incorporate mitigations like enhanced SYSRET handling to address speculative execution vulnerabilities (e.g., improved branch prediction for return paths), ensuring secure indirect branches during syscall exits. Apple Silicon (M-series chips, introduced in 2020) leverages ARM's SVC instruction with customized vector table setups in the XNU kernel, optimized for the integrated SoC architecture. These developments, including cross-architecture support in Linux 6.x for RISC-V ECALL and ARM SVC, highlight ongoing refinements for performance-critical and secure computing environments.[51][52]

Abstraction Layers

System Libraries

System libraries act as intermediaries between user-space applications and the operating system kernel, wrapping low-level system calls in higher-level functions that simplify development and hide kernel-specific details. Prominent examples include glibc on Linux, which provides wrappers for most system calls; the libc of the BSD variants, which offers similar POSIX-compliant interfaces; and ntdll.dll on Windows, which contains the Native API stubs that pass control from user-mode code to kernel services.[53][54] These wrappers provide consistent error handling, converting kernel return values into portable error codes (on Linux, a raw result between -4095 and -1 becomes a return value of -1 with errno set to the corresponding positive code); they can check some parameters before the kernel is entered; and they preserve binary compatibility, so programs keep working across kernel updates without modification.[55][56] By standardizing these aspects, libraries make applications more robust and portable.

At their core, wrapper functions place the system call arguments in the registers or stack locations the kernel expects and then trigger the kernel transition with an architecture-specific instruction or macro. In glibc, for example, there are three main approaches: assembly stubs generated automatically from lists of system calls for straightforward cases; C macros such as INLINE_SYSCALL_CALL for inline invocation, with SYSCALL_CANCEL used where thread-cancellation support is required; and hand-written code for calls that need special semantics, such as legacy compatibility.[53] These mechanisms keep transitions efficient while integrating features such as thread cancellation.
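The error-mapping step of a wrapper can be sketched in a few lines of C. This is a hypothetical helper, not glibc's actual code, assuming the Linux convention that a raw result between -4095 and -1 encodes a negated errno value. Values outside that range are passed through unchanged because some calls legitimately return results that look negative when read as signed integers (for example, addresses returned by mmap() on 32-bit systems).

```c
#include <errno.h>

/* Hypothetical helper mirroring the Linux wrapper convention: convert a
 * raw kernel result into the POSIX style of "-1 and errno" on failure. */
static long wrap_syscall_result(long raw)
{
    if (raw < 0 && raw >= -4095) {   /* range reserved for -errno values */
        errno = (int)-raw;           /* e.g. -9 becomes errno = 9 (EBADF) */
        return -1;
    }
    return raw;                      /* success: pass the value through */
}
```

A wrapper for close(), for instance, would execute the system call and then return wrap_syscall_result() applied to the raw value.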
The development of such libraries traces back to the early 1970s, when C libraries emerged alongside Unix as its kernel was rewritten in C, with foundational components such as portable I/O routines enabling deployment across hardware such as the PDP-11. This foundation evolved into more specialized forms, including language-specific bindings such as the Java Native Interface (JNI), which lets Java code call native C or C++ routines that make system calls, and user-space components that extend the minimal services of microkernels, fostering modularity.[57][58] Not every library function corresponds to a system call: buffered I/O routines such as fprintf() collect data from many calls in a user-space buffer and issue a single write() when the buffer fills or is flushed, so most of those calls never enter the kernel.[56] This selective layering reflects the libraries' role in balancing efficiency and abstraction.

API Wrappers and Portability

API wrappers abstract low-level system calls into higher-level interfaces, facilitating easier development and cross-operating system compatibility. In Unix-like systems, the POSIX API defines standardized functions such as read() and write(), which map directly to underlying system calls provided by the kernel, ensuring consistent behavior for file I/O operations across compliant implementations.[59] Similarly, on Windows, the Win32 API acts as an abstraction layer over the NT Native API, where functions like ReadFile() invoke kernel-mode system services that ultimately execute NT system calls for resource access.[15]

Portability is enhanced through standards and emulation tools that unify syscall-like interfaces. The Single UNIX Specification (SUS), aligned with IEEE Std 1003.1, mandates a common programming environment across Unix variants, promoting source code portability by specifying interfaces that mimic system call semantics for processes, files, and signals.[60] For non-Unix platforms, Cygwin provides a POSIX-compliant layer on Windows through its core DLL (cygwin1.dll), which translates POSIX calls into Windows API calls, allowing Unix applications to run after recompilation without full OS emulation.[61]

Despite these abstractions, inconsistencies between system call implementations remain, such as differing syscall numbers: the open() syscall is number 2 on x86_64 Linux but 5 on x86_64 FreeBSD, so a binary that issues system calls directly cannot run on both.[62] Dynamic linking mitigates this: applications call library functions such as open() by name and are bound at run time to a shared C library such as glibc, which encodes the host kernel's syscall interface, so the application does not have to be recompiled for those specifics.[63]
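When code must issue a raw system call, it can still avoid hard-coding a number such as 2. A minimal sketch, assuming Linux with glibc; the helper name open_by_syscall is hypothetical. It uses the SYS_ constants from <sys/syscall.h>, which the compiler resolves for the target architecture, and calls openat because newer Linux ports such as 64-bit ARM and RISC-V do not provide the older open call at all. This keeps the source portable across Linux architectures; the compiled binary is still tied to one kernel ABI.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <fcntl.h>         /* AT_FDCWD, O_* flags */
#include <sys/syscall.h>   /* SYS_openat for the build target */
#include <unistd.h>        /* syscall(), close() */

/* Hypothetical helper: open `path` relative to the current directory
 * through a raw system call. Returns a descriptor, or -1 with errno set. */
static int open_by_syscall(const char *path, int flags)
{
    /* SYS_openat expands to this architecture's number for openat. */
    long fd = syscall(SYS_openat, AT_FDCWD, path, flags, 0);
    return (int)fd;   /* glibc's syscall() already maps errors to -1/errno */
}
```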

In contemporary computing, container runtimes extend portability by managing syscall access in isolated environments. For instance, Docker can run containers under gVisor, a user-space kernel that intercepts application system calls (with mechanisms such as seccomp-BPF), implements most of them itself, and forwards only a restricted set to the host, improving security and compatibility.[34] The Windows Subsystem for Linux (WSL) further bridges ecosystems: WSL2 runs a genuine Linux kernel in a lightweight virtual machine, so Linux system calls execute directly in that kernel, and 2025 updates included open-sourcing WSL to enhance interop and translation layers for hybrid workloads.[64]
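Sandboxes such as gVisor and Docker's default profile use seccomp-BPF filter programs, but the kernel's simpler seccomp strict mode shows the same principle in a few lines. A minimal sketch, assuming Linux and glibc; the helper name seccomp_strict_blocks is hypothetical. In strict mode the kernel permits only read(), write(), _exit(), and sigreturn(), and kills the thread with SIGKILL on any other system call.

```c
#define _GNU_SOURCE
#include <linux/seccomp.h>   /* SECCOMP_MODE_STRICT */
#include <signal.h>          /* SIGKILL */
#include <sys/prctl.h>       /* prctl(), PR_SET_SECCOMP */
#include <sys/syscall.h>     /* SYS_* numbers */
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: a child enters seccomp strict mode and then tries
 * system call `nr`. Returns 1 if the kernel killed the child with SIGKILL,
 * 0 if the call was permitted, -1 if the experiment could not run. */
static int seccomp_strict_blocks(long nr)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        if (prctl(PR_SET_SECCOMP, SECCOMP_MODE_STRICT) != 0)
            _exit(2);                 /* seccomp unavailable; not in strict mode */
        /* Arguments chosen so that write(-1, NULL, 0) fails harmlessly (EBADF). */
        syscall(nr, -1L, 0L, 0L);
        /* Plain exit is allowed; glibc's _exit() uses exit_group(), which is not. */
        syscall(SYS_exit, 0);
        _exit(3);                     /* not reached */
    }
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    if (WIFSIGNALED(status) && WTERMSIG(status) == SIGKILL)
        return 1;
    if (WIFEXITED(status) && WEXITSTATUS(status) == 0)
        return 0;
    return -1;
}
```

Running the experiment in a child keeps the caller unrestricted, since a seccomp mode cannot be left once entered.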

Optimizations such as the virtual dynamic shared object (vDSO) make wrappers more efficient by mapping kernel-provided code into user space, so that time-related calls such as gettimeofday() can usually complete without entering the kernel at all, reducing overhead in portable applications.[65]
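Whether a vDSO is present can be checked from user space. A minimal sketch, assuming Linux with glibc 2.16 or later (for getauxval()); the helper names are hypothetical. getauxval(AT_SYSINFO_EHDR) returns the address at which the kernel mapped the vDSO, and clock_gettime() is one of the calls glibc routes through it on common architectures, so running such a program under strace typically shows no clock_gettime entries.

```c
#define _GNU_SOURCE
#include <sys/auxv.h>   /* getauxval(), AT_SYSINFO_EHDR */
#include <time.h>       /* clock_gettime(), CLOCK_MONOTONIC */

/* Hypothetical helper: base address of the vDSO mapping, or 0 if the
 * kernel did not provide one. */
static unsigned long vdso_base(void)
{
    return getauxval(AT_SYSINFO_EHDR);
}

/* Hypothetical helper: monotonic time in nanoseconds, or -1 on error.
 * With a vDSO available, this normally runs entirely in user space. */
static long long monotonic_ns(void)
{
    struct timespec ts;
    if (clock_gettime(CLOCK_MONOTONIC, &ts) != 0)
        return -1;
    return (long long)ts.tv_sec * 1000000000LL + ts.tv_nsec;
}
```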

Categories of System Calls

Process Management

System calls for process management enable the creation, termination, monitoring, and control of processes and threads, forming the foundation of multitasking operating systems. In Unix-like systems, process creation typically involves the fork() system call, which duplicates the calling process to produce a child with an identical copy of the parent's memory (usually implemented with copy-on-write) and duplicates of its open file descriptors, but with a distinct process ID (PID) assigned by the kernel for unique identification and resource allocation.[66] The child then often calls one of the exec() family of functions, such as execve(), to replace its program image with a new one while keeping its PID and open file descriptors (except those marked close-on-exec). This two-step model separates duplication from image replacement, allowing flexible initialization, such as redirecting file descriptors, before the new program runs. In contrast, Windows uses the CreateProcess() API, which combines process creation and image loading in a single call, launching a new process and its primary thread in the caller's security context, with the kernel allocating a PID and handling resource inheritance such as environment variables.[67]

For process termination, the exit() call in Unix-like systems ends the calling process, releasing its resources and passing an exit status to the parent, while the wait() or waitpid() calls let the parent suspend execution until a child terminates, retrieving its status so that the child is cleaned up instead of remaining a zombie process.[68] These calls ensure orderly lifecycle management, with the kernel reclaiming memory, file descriptors, and other resources on exit. getpid() returns the caller's PID, which is useful for logging, synchronization, and naming resources, while setuid() changes the user ID to enforce security controls such as dropping privileges; an unprivileged process may only switch to its real or saved set-user-ID, whereas a privileged process may set any user ID.[69]
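The fork-exec-wait sequence can be sketched as a small helper, assuming a POSIX system with /bin/sh; run_shell is a hypothetical name. The child replaces its image with execl(), a library front end to execve(), and the parent's waitpid() both collects the exit status and reaps the child.

```c
#define _GNU_SOURCE
#include <stdio.h>      /* perror() */
#include <sys/types.h>
#include <sys/wait.h>   /* waitpid(), WIFEXITED(), WEXITSTATUS() */
#include <unistd.h>     /* fork(), execl(), _exit() */

/* Hypothetical helper: run `command` with /bin/sh in a child process and
 * return its exit status, or -1 on failure. */
static int run_shell(const char *command)
{
    pid_t pid = fork();                   /* duplicate the calling process */
    if (pid < 0) {
        perror("fork");
        return -1;
    }
    if (pid == 0) {
        /* Child: replace the program image; the PID stays the same. */
        execl("/bin/sh", "sh", "-c", command, (char *)NULL);
        _exit(127);                       /* reached only if exec failed */
    }
    int status;
    /* Parent: wait for the child, which also reaps it (no zombie remains). */
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

For example, run_shell("exit 3") returns 3. The child calls _exit() rather than exit() after a failed exec so that it does not flush stdio buffers it inherited from the parent.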

Thread management builds on process creation primitives, particularly in Linux, where the clone() system call creates threads or processes with fine-grained control over shared resources such as memory and file descriptors via flags (e.g., CLONE_VM for a shared address space).[70] The POSIX pthread_create() function serves as a portable wrapper, invoking clone() under the Native POSIX Thread Library (NPTL) to implement one-to-one threading, in which each user thread maps to a kernel thread for efficient multi-core use.[71] Scheduling cooperation is supported by sched_yield(), which voluntarily gives up the CPU to other threads of the same priority, moving the caller to the end of the run queue without blocking, which can help avoid starving peers in contended scenarios.[72]
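Under the one-to-one model, every POSIX thread is a separate kernel task that shares its process's PID but has its own thread ID. A minimal sketch, assuming Linux with glibc (thread IDs read with syscall(SYS_gettid)); the function and variable names are hypothetical, and glibc versions before 2.34 need linking with -pthread.

```c
#define _GNU_SOURCE
#include <pthread.h>
#include <sched.h>         /* sched_yield() */
#include <sys/syscall.h>   /* SYS_gettid */
#include <sys/types.h>
#include <unistd.h>        /* getpid(), syscall() */

/* Filled in by the worker thread (hypothetical illustration). */
static pid_t worker_pid, worker_tid;

static void *record_ids(void *arg)
{
    (void)arg;
    worker_pid = getpid();                    /* process ID, shared by all threads */
    worker_tid = (pid_t)syscall(SYS_gettid);  /* kernel thread ID, unique per thread */
    sched_yield();                            /* voluntarily give up the CPU; harmless here */
    return NULL;
}

/* Returns 1 if a new thread shares the caller's PID but has its own
 * kernel thread ID, 0 if not, -1 if the thread could not be run. */
static int thread_is_one_to_one(void)
{
    pthread_t t;
    if (pthread_create(&t, NULL, record_ids, NULL) != 0)  /* clone() underneath with NPTL */
        return -1;
    if (pthread_join(t, NULL) != 0)  /* joining also makes the worker's writes visible */
        return -1;
    pid_t main_tid = (pid_t)syscall(SYS_gettid);
    return worker_pid == getpid() && worker_tid != main_tid;
}
```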

Threading models influence how these calls operate. In the many-to-one model, many user-level threads map onto a single kernel thread: creating threads is cheap, but the threads cannot run in parallel on multiple cores, and a blocking call made by one of them stalls the entire process.[73] The one-to-one model, adopted in modern Unix-like systems such as Linux with NPTL, assigns a kernel thread to each user thread, enabling true concurrency across cores at the cost of kernel involvement in thread creation and context switches.[71] Designs vary across systems; for instance, Windows CreateProcess() always creates a new process together with its primary thread, rather than separating duplication from image loading as Unix's fork-exec model does. Recent enhancements, such as Linux's clone3() introduced in kernel 5.3 (2019), extend clone() with a struct-based interface for advanced features such as namespace isolation and explicit stack sizing, improving support for containerized and multi-threaded workloads on multi-core hardware.[70] Post-2020 developments, including the Earliest Eligible Virtual Deadline First (EEVDF) scheduler adopted in Linux 6.6, further refine how threads are scheduled for better multi-core responsiveness without altering the core system call interfaces.

File and Device Operations

System calls for file and device operations are the core mechanism by which user programs interact with persistent storage and peripherals, providing a uniform, kernel-mediated abstraction over diverse hardware. In Unix-like systems that follow POSIX, the primary file operations are the open(), read(), write(), and close() system calls, which manage file descriptors: small integers that serve as handles to kernel resources. The open() call initializes access to a file or device by path, specifying an access mode (e.g., read-only, or writing with O_APPEND) and checking permissions against the process's effective user and group IDs; it returns a non-negative file descriptor on success or -1 on failure. Subsequent read() and write() calls transfer data between a user-provided buffer and the associated resource, with the kernel handling any device-specific mapping. These calls perform no user-space buffering, which gives programs direct control; standard C libraries such as libc implement buffering on top of them to amortize the per-call overhead. Finally, close() releases the file descriptor and, once no other descriptor refers to the same open file, frees the associated kernel resources; it does not guarantee that cached data has reached storage, which requires fsync(). Additional operations extend file manipulation: lseek() repositions the file offset for non-sequential access, relative to the start, the current position, or the end of the file, which is essential for random I/O on files and seekable devices.[74] Permission management uses chmod(), which changes the file mode bits (read, write, and execute for owner, group, and others) stored in the inode; the kernel enforces these discretionary access controls when the file is subsequently opened. The buffering distinction matters: the kernel caches file data in its page cache, while user-space libraries batch small reads and writes into fewer system calls without changing the kernel's unbuffered system call interface.
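The descriptor-based calls can be exercised end to end in a short sketch, assuming a POSIX system with a writable /tmp; file_roundtrip is a hypothetical name. It obtains a descriptor from mkstemp(), a library function that creates and opens a uniquely named file, then writes, rewinds with lseek(), reads back, and changes the mode with chmod().

```c
#define _GNU_SOURCE
#include <fcntl.h>
#include <stdlib.h>      /* mkstemp() */
#include <string.h>
#include <sys/stat.h>    /* chmod(), fstat(), S_IRUSR */
#include <sys/types.h>
#include <unistd.h>      /* write(), read(), lseek(), close(), unlink() */

/* Hypothetical helper: returns 1 if the data read back matches `msg` and
 * the new mode is 0400, 0 otherwise, -1 on error. */
static int file_roundtrip(const char *msg)
{
    char path[] = "/tmp/syscall-demo-XXXXXX";
    int fd = mkstemp(path);               /* opened with O_RDWR | O_CREAT | O_EXCL */
    if (fd < 0)
        return -1;

    size_t len = strlen(msg);
    char buf[256];
    struct stat st;
    int result = -1;
    if (len <= sizeof buf &&
        write(fd, msg, len) == (ssize_t)len &&    /* offset advances to len */
        lseek(fd, 0, SEEK_SET) == 0 &&            /* rewind to the start */
        read(fd, buf, len) == (ssize_t)len &&     /* read the bytes back */
        chmod(path, S_IRUSR) == 0 &&              /* mode 0400: owner read-only */
        fstat(fd, &st) == 0) {
        result = memcmp(buf, msg, len) == 0 && (st.st_mode & 0777) == 0400;
    }
    close(fd);      /* releases the descriptor; does not force data to disk */
    unlink(path);   /* remove the temporary file */
    return result;
}
```

No stdio functions are involved, so every read or write here corresponds to exactly one system call.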
For devices, Unix-like kernels distinguish between character devices, which provide sequential byte-stream access (e.g., terminals via /dev/tty), and block devices, which operate on fixed-size blocks (typically 512 bytes or more) with random access (e.g., disks via /dev/sda). The mount() system call attaches the filesystem on a block device to the directory hierarchy, enabling transparent access through the VFS layer. Device-specific control uses ioctl(), a general-purpose system call for non-standard operations such as setting the baud rate of a serial port or querying hardware status, which passes command codes and arguments defined by the device driver.[75] Memory mapping via mmap() gives user space direct access to device memory or file contents, bypassing read() and write() in high-performance scenarios such as graphics buffers or shared-memory devices. The Virtual File System (VFS) in Unix-like kernels such as Linux is an abstraction layer that receives these system calls and routes them to the appropriate filesystem or device driver while keeping a consistent interface across types such as ext4 and NFS; it handles path resolution, caching, and permission enforcement uniformly.[76] For modern asynchronous I/O, Linux introduced io_uring in kernel version 5.1 (May 2019); it uses shared ring buffers for submission and completion queues to batch operations and reduce the number of system calls, significantly improving throughput for high-IOPS workloads on files and block devices compared with the older AIO interface.

In Windows, analogous functionality is provided by Win32 API functions that operate on handles rather than file descriptors: CreateFile() opens or creates files and devices with specified access rights (e.g., GENERIC_READ), security attributes, and sharing modes, returning a handle after performing access checks.
ReadFile() and WriteFile() transfer data synchronously or asynchronously to and from the handle, supporting overlapped I/O for devices via completion ports, with the kernel managing buffering in its cache.[77] CloseHandle() releases the handle, much like close(). Security in these operations centers on descriptor (or handle) ownership. On Unix-like systems, the main permission check happens when the file is opened, and the granted access mode is recorded with the open file: later read() and write() calls are checked against that mode (a write() on a descriptor opened read-only fails with EBADF), so a descriptor keeps its access even when it is inherited by another process or the file's permissions change afterwards, although mandatory access control modules such as SELinux can recheck individual operations. Windows similarly records the granted access rights in the handle and checks each operation against them. Recent advancements address storage performance gaps; in Linux kernel 6.17 (released September 2025), the fallocate() syscall gained NVMe-specific optimizations, leveraging Write Zeroes commands to efficiently zero-range allocate on SSDs, reducing latency for large-scale file operations.[78]

Communication and Protection

System calls for interprocess communication (IPC) let processes exchange data and coordinate their actions. In Unix-like systems, the pipe() system call creates a unidirectional byte stream between related processes, such as a parent and its child: data written to the write end by one process can be read from the read end by another, allowing simple data transfer without shared memory. System V IPC mechanisms provide more advanced options, such as message queues controlled by msgctl(), which can, for example, remove a queue with the IPC_RMID command to free its resources after communication. Shared memory segments are allocated with shmget(), whose shmflg argument combines flags such as IPC_CREAT (create a new segment) with permission bits that control access to the segment.
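A parent-child pipe can be sketched as follows, assuming a POSIX system; pipe_roundtrip is a hypothetical name. Each side closes the end it does not use; in particular, the parent must close its copy of the write end, or read() would never report end of file.

```c
#define _GNU_SOURCE
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: the child writes `msg` into a pipe and the parent
 * reads it into `out` (capacity `cap`). Returns bytes received or -1. */
static ssize_t pipe_roundtrip(const char *msg, char *out, size_t cap)
{
    int fds[2];                          /* fds[0]: read end, fds[1]: write end */
    if (pipe(fds) != 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(fds[0]);
        close(fds[1]);
        return -1;
    }
    if (pid == 0) {                      /* child: producer */
        close(fds[0]);
        size_t len = strlen(msg);
        _exit(write(fds[1], msg, len) == (ssize_t)len ? 0 : 1);
    }

    close(fds[1]);   /* parent keeps only the read end so it can see EOF */
    ssize_t total = 0;
    while ((size_t)total < cap) {
        ssize_t n = read(fds[0], out + total, cap - (size_t)total);
        if (n <= 0)                      /* 0: every write end closed; <0: error */
            break;
        total += n;
    }
    close(fds[0]);
    waitpid(pid, NULL, 0);               /* reap the child */
    return total;
}
```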

In Windows, named pipes support bidirectional IPC between related or unrelated processes through the CreateNamedPipe API, which establishes a server endpoint for client connections, often used for client-server architectures within the system.[79] Mailslots offer a lightweight, one-way broadcast mechanism for IPC, created with CreateMailslot and allowing messages to be sent to multiple recipients via a hierarchical name structure, suitable for simple notifications.[80] Network-based IPC is handled by socket system calls; in Unix, socket() creates a socket descriptor, bind() associates it with a local address, and connect() establishes a connection to a remote endpoint, enabling communication over protocols like TCP. Windows implements similar functionality through the Winsock API, where socket() allocates a descriptor bound to a transport provider for subsequent bind and connect operations.[81]
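The socket(), bind(), and connect() sequence can be sketched with TCP over the loopback interface, assuming a POSIX system where 127.0.0.1 is available; tcp_loopback_echo is a hypothetical name. For brevity, one process plays both roles: connect() completes because the kernel queues the connection on the listening socket until accept() retrieves it.

```c
#define _GNU_SOURCE
#include <arpa/inet.h>     /* htonl() */
#include <netinet/in.h>    /* struct sockaddr_in, INADDR_LOOPBACK */
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>        /* close() */

/* Hypothetical helper: connect a client to a loopback server socket, send
 * `msg`, and receive it on the accepted connection. Returns the number of
 * bytes received, or -1 on error. */
static ssize_t tcp_loopback_echo(const char *msg, char *out, size_t cap)
{
    ssize_t got = -1;
    int srv = -1, cli = -1, conn = -1;
    size_t len = strlen(msg);
    struct sockaddr_in addr;
    socklen_t alen = sizeof addr;

    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);   /* 127.0.0.1 */
    addr.sin_port = 0;                               /* let the kernel choose a port */

    srv = socket(AF_INET, SOCK_STREAM, 0);           /* new socket descriptor */
    if (srv < 0 ||
        bind(srv, (struct sockaddr *)&addr, sizeof addr) != 0 ||   /* local address */
        listen(srv, 1) != 0 ||
        getsockname(srv, (struct sockaddr *)&addr, &alen) != 0)    /* learn the port */
        goto out;

    cli = socket(AF_INET, SOCK_STREAM, 0);
    if (cli < 0 ||
        connect(cli, (struct sockaddr *)&addr, sizeof addr) != 0)  /* remote endpoint */
        goto out;
    conn = accept(srv, NULL, NULL);                  /* server side of the connection */
    if (conn < 0 || send(cli, msg, len, 0) != (ssize_t)len)
        goto out;
    shutdown(cli, SHUT_WR);                          /* no more data: receiver sees EOF */

    got = 0;
    while ((size_t)got < cap) {
        ssize_t n = recv(conn, out + got, cap - (size_t)got, 0);
        if (n < 0) { got = -1; break; }
        if (n == 0) break;                           /* sender finished */
        got += n;
    }
out:
    if (conn >= 0) close(conn);
    if (cli >= 0) close(cli);
    if (srv >= 0) close(srv);
    return got;
}
```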

Synchronization system calls ensure orderly access to shared resources in IPC scenarios. The semop() call performs atomic operations on System V semaphores, such as wait (decrement) or signal (increment), to coordinate processes that access shared memory or other IPC objects. POSIX mutexes such as pthread_mutex_lock() provide mutual exclusion for critical sections without entering the kernel when the lock is uncontended; on Linux they fall back to the futex (fast user-space mutex) system call only to sleep or to wake waiting threads. Signals for interprocess notification are sent with kill(), which delivers a specified signal to a target process or process group and serves as a lightweight mechanism for announcing events such as termination or resource availability.
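Signal-based notification can be sketched as follows, assuming a POSIX system; signal_child is a hypothetical name. The parent uses kill() to deliver a signal whose default action terminates the child, and waitpid() reports which signal ended it.

```c
#define _GNU_SOURCE
#include <signal.h>     /* kill(), SIGTERM, SIGUSR1 */
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>     /* fork(), pause() */

/* Hypothetical helper: start a child that sleeps until a signal arrives,
 * send it `sig`, and return the signal number that terminated it
 * (or -1 if it ended some other way). */
static int signal_child(int sig)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        for (;;)
            pause();              /* sleep until a signal is delivered */
    }
    if (kill(pid, sig) != 0)      /* deliver the notification */
        return -1;
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    return WIFSIGNALED(status) ? WTERMSIG(status) : -1;
}
```

It does not matter whether the signal arrives before or after the child reaches pause(): the default action applies either way, provided the caller has not blocked or ignored that signal (the child inherits both settings).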

Protection mechanisms in system calls enforce security and resource isolation during communication. The setrlimit() call sets, and getrlimit() retrieves, a process's resource limits, such as maximum file size, CPU time, or number of open file descriptors, preventing abuse in IPC contexts by capping resource usage. Identity-based protection relies on calls such as getuid(), which returns the real user ID of the calling process and enables checks against the access permissions of IPC objects such as pipes or shared memory. For auditing, Linux's audit subsystem, introduced around kernel version 2.6.6 (2004), records security-relevant events, including selected system calls, for monitoring IPC and protection events; user-space programs submit their own audit records to it over a netlink socket rather than through a dedicated system call.
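Resource limits can be demonstrated by lowering the open-file limit in a child process, which leaves the caller's own limits untouched. A minimal sketch, assuming a POSIX system with /dev/null; fd_limit_enforced is a hypothetical name. With the soft RLIMIT_NOFILE limit set to 0, the kernel refuses any new descriptor with EMFILE.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <fcntl.h>
#include <sys/resource.h>   /* getrlimit(), setrlimit(), RLIMIT_NOFILE */
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if open() fails with EMFILE after the soft
 * limit is lowered to 0, 0 if the open succeeds, -1 on error. */
static int fd_limit_enforced(void)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        struct rlimit rl;
        if (getrlimit(RLIMIT_NOFILE, &rl) != 0)   /* read the current limits */
            _exit(2);
        rl.rlim_cur = 0;                          /* soft limit; hard limit unchanged */
        if (setrlimit(RLIMIT_NOFILE, &rl) != 0)
            _exit(2);
        int fd = open("/dev/null", O_RDONLY);
        _exit(fd < 0 && errno == EMFILE ? 1 : 0);
    }
    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
        return -1;
    int code = WEXITSTATUS(status);
    return code == 2 ? -1 : code;
}
```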

Modern enhancements address evolving protection needs in IPC. Extended Berkeley Packet Filter (eBPF) programs, available in Linux since the bpf() system call was added in version 3.18 (2014) and attachable to kprobes since version 4.1 (2015), with significant expansions in the 2020s, allow system calls to be hooked dynamically for runtime monitoring and enforcement, such as filtering unauthorized IPC access, without modifying kernel code. Confidential computing technologies address a gap in traditional protection, which assumes that the privileged software is trustworthy; for instance, Intel Software Guard Extensions (SGX) creates hardware-isolated enclaves whose contents are protected even from the operating system, and Linux exposes enclave creation and management through ioctl() calls on devices such as /dev/sgx_enclave, enabling secure IPC within trusted execution environments.

Key concepts in these system calls include ownership models for IPC resources, where System V objects like shared memory segments have owner and group IDs with permission bits (read, write, alter) enforced at allocation, similar to file permissions. POSIX standards emphasize access control through these ownership attributes, while extensions like POSIX Access Control Lists (ACLs) allow finer-grained permissions on shared memory objects, managed via library calls that invoke underlying system interfaces for secure multi-user access.
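The ownership attributes of a System V object can be read back with shmctl() and IPC_STAT. A minimal sketch, assuming a system that supports System V shared memory; shm_owner_matches is a hypothetical name. The segment is created with IPC_PRIVATE, so no key collisions can occur, and is removed with IPC_RMID before the function returns. Because IPC_STAT requires read permission, the modes passed in should include owner read access.

```c
#define _GNU_SOURCE
#include <sys/ipc.h>     /* IPC_PRIVATE, IPC_CREAT, IPC_STAT, IPC_RMID */
#include <sys/shm.h>     /* shmget(), shmctl(), struct shmid_ds */
#include <sys/types.h>
#include <unistd.h>      /* geteuid() */

/* Hypothetical helper: create a segment with permission bits `mode`, read
 * back its ownership, and remove it. Returns 1 if the owner is the caller's
 * effective UID and the stored mode matches, 0 if not, -1 on error. */
static int shm_owner_matches(int mode)
{
    int id = shmget(IPC_PRIVATE, 4096, IPC_CREAT | (mode & 0777));
    if (id < 0)
        return -1;
    struct shmid_ds ds;
    int rc = shmctl(id, IPC_STAT, &ds);   /* fills ds.shm_perm */
    shmctl(id, IPC_RMID, NULL);           /* mark the segment for removal */
    if (rc != 0)
        return -1;
    return ds.shm_perm.uid == geteuid() &&
           (ds.shm_perm.mode & 0777) == (mode & 0777);
}
```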

Examples and Tools

Unix-like Systems

In Unix-like systems, system calls provide the primary interface for user-space programs to request services from the kernel, such as process creation and file operations. A canonical example is the fork() system call, which creates a new child process by duplicating the calling process; on success it returns the child's process ID to the parent and zero to the child. Another fundamental call is open(), which opens a file and returns a file descriptor; for instance, open("/path/to/file", O_RDONLY) attempts to open the file in read-only mode, returning a non-negative integer descriptor on success or -1 on failure.

System call numbers uniquely identify each call within the kernel's interface table. In x86_64 Linux, they are defined in files such as arch/x86/entry/syscalls/syscall_64.tbl, with examples including __NR_read as 0 for reading from a file descriptor, __NR_open as 2 for opening files, __NR_clone as 56 for creating processes or threads depending on flags such as CLONE_THREAD, and __NR_fork as 57 for process creation.[82] The clone() call, in particular, enables thread creation by sharing the parent's memory space when the CLONE_VM flag is set, forming the basis for POSIX threads in Linux.[83] In FreeBSD, syscall numbers differ but follow a similar structure, with over 600 calls available across architectures as of FreeBSD 14.2 (December 2024).[84][85]

Invocation typically occurs through library wrappers, but direct kernel entry uses architecture-specific instructions. In x86_64 assembly on Linux, the syscall number is loaded into %rax and up to six arguments into %rdi, %rsi, %rdx, %r10, %r8, and %r9, followed by the syscall instruction to enter the kernel; the return value appears in %rax, with negative values (the negated errno code) indicating errors, and the instruction overwrites %rcx and %r11.[86] For example, a complete program that invokes write(1, "hello", 5) and then exits:

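```asm
# build: as hello.s -o hello.o && ld hello.o -o hello
        .section .rodata
msg:    .ascii "hello"          # 5 bytes; write() needs no terminator
        .text
        .globl _start
_start:
        mov  $1, %rax           # syscall number for write
        mov  $1, %rdi           # file descriptor 1 (stdout)
        lea  msg(%rip), %rsi    # buffer address
        mov  $5, %rdx           # length in bytes
        syscall                 # bytes written (or -errno) returned in %rax
        mov  $60, %rax          # syscall number for exit
        xor  %edi, %edi         # exit status 0
        syscall
```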


On failure, C library wrappers return -1 and store the error code in the errno variable; for instance, open() fails with EACCES (errno 13) if the process lacks search permission on a component of the path or the requested access to the file. Linux provides approximately 340 system calls in recent kernels such as 6.12 (November 2024), covering process management, file I/O, and more, while FreeBSD exceeds 600 as of version 14.2.[88][10][85]

Modern additions illustrate evolving capabilities; for example, Linux added fanotify_init() in kernel 2.6.36 (2010), with the interface enabled from 2.6.37, for filesystem event monitoring: applications can receive notifications of file accesses, which supports tools such as antivirus scanners, and the call has syscall number 300 on x86_64.[89][90] In C, a simple fork() usage appears as:

```c
#include <stdio.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

int main(void) {
    pid_t pid = fork();
    if (pid == -1) {
        perror("fork failed");    /* typically EAGAIN or ENOMEM */
        return 1;
    } else if (pid == 0) {
        printf("Child process\n");
        return 0;
    } else {
        printf("Parent: child PID %d\n", (int)pid);
        waitpid(pid, NULL, 0);    /* reap the child so it does not linger as a zombie */
    }
    return 0;
}
```


Library wrappers such as fork() resolve to the underlying syscall. Fuchsia, a modern capability-based OS with some Unix-like elements, integrates Rust for user-space components that interface with its Zircon kernel syscalls, such as zx_process_create for task management, enhancing safety.[91][92]

Windows Systems

In Windows NT-based operating systems, system calls are implemented through the Native API, a set of low-level functions exported primarily by ntdll.dll that serve as the interface between user-mode applications and the kernel. Unlike the standardized and publicly documented system calls of Unix-like systems, Windows system calls are largely proprietary and undocumented, and Microsoft officially supports only the higher-level Win32 API for applications. This opacity reflects the NT kernel's design philosophy, which favors stability and abstraction over direct exposure of kernel interfaces: a thick Win32 subsystem layer translates application requests into Native API calls before they reach the kernel. Native API functions carry the prefix Nt or Zw, as in NtCreateFile and ZwCreateFile. In user mode both names refer to the same stub in ntdll.dll; the distinction matters in kernel mode, where calling a Zw routine sets the previous processor mode to kernel mode so that its parameters are trusted, whereas an Nt routine keeps the caller's previous mode, so parameters originating from user mode are still probed and validated. These functions enter the kernel through the system service dispatch table (SSDT), in which each call is identified by a system service number (SSN), such as 0x00B3 for NtCreateUserProcess in some recent builds; SSNs change between Windows releases. System calls are initiated with fast syscall instructions, SYSENTER on 32-bit x86 or SYSCALL on x86-64, which switch from user to kernel mode without the INT 0x2E software interrupt used by older implementations. For example, NtCreateFile, one of the comparatively few Native API functions that Microsoft documents, creates or opens files and devices; it is one of approximately 500 such calls in Windows 11 (2021–2025), only a subset of which is officially documented.
A related example is NtReadFile (ZwReadFile), which reads from a file handle synchronously or asynchronously, passing parameters such as I/O buffers and event objects to the kernel for processing. These calls return an NTSTATUS code, a 32-bit value composed of severity, facility, and code fields, to indicate success (e.g., STATUS_SUCCESS, 0x00000000) or failure (e.g., STATUS_ACCESS_DENIED, 0xC0000022), providing more granular error reporting than the traditional errno mechanism in Unix. Asynchronous operations such as overlapped I/O can complete through user-mode asynchronous procedure calls (APCs): the kernel queues a callback routine to the target thread's APC queue, and the routine runs when the thread enters an alertable wait, for example through SleepEx() or WaitForSingleObjectEx() with the alertable flag set. In Windows 11, new system calls have been added to support features such as virtualization-based security (VBS), which uses hardware virtualization to isolate sensitive components such as Credential Guard and Device Guard, introducing calls for hypervisor management and secure memory allocation. Because many Native API functions remain undocumented, community projects reverse-engineer and publish Windows 11 syscall tables, cataloging SSNs for functions such as NtCreatePartition across builds so that developers and researchers can use these interfaces reliably. This contrasts sharply with Unix-like systems, where syscall interfaces are openly specified in standards such as POSIX, and makes the Windows approach more challenging for portability and direct kernel interaction.

Tracing Tools

Tracing tools let developers and system administrators monitor and debug the system calls made by running processes, capturing details such as entry and exit points, arguments, return values, and timings without changing the program's logic. These tools are essential for diagnosing problems such as unexpected resource access or inefficient kernel interactions, and they work by intercepting calls at the user-kernel boundary or within the kernel itself.[93][94] On Linux, strace is a widely used command-line utility that traces the system calls and signals of a specified process or command, logging each call's name, arguments, and return status to standard error or a file. For instance, running strace -e trace=openat ls limits tracing to file-open operations (current C libraries implement open() with the openat system call), revealing paths and error codes when debugging file-access issues.[95] Similarly, Solaris provides truss, which traces system calls and signals with timing information to identify hangs or performance anomalies in processes.[96] On Windows, Event Tracing for Windows (ETW) provides kernel-level tracing of system calls through providers such as the NT Kernel Logger, capturing events such as process creation and I/O operations; tools such as logman can start sessions that log these events to ETL files for later analysis with the Microsoft.Windows.EventTracing.Syscalls library.[97][98]
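strace is built on the ptrace() system call, and its core loop can be sketched as a hypothetical helper, count_syscall_stops, assuming Linux with glibc and a tracee binary at /bin/true. The child requests tracing with PTRACE_TRACEME and execs the program; the parent repeatedly resumes it with PTRACE_SYSCALL and counts the stops at each system-call entry and exit. A real tracer would also decode the registers at each stop to print call names and arguments.

```c
#define _GNU_SOURCE
#include <signal.h>        /* kill(), SIGKILL, SIGTRAP */
#include <sys/ptrace.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: run `path` under ptrace and count system-call
 * stops. Returns the number of stops, or -1 on error. */
static long count_syscall_stops(const char *path)
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        ptrace(PTRACE_TRACEME, 0, NULL, NULL);  /* let the parent trace this process */
        execl(path, path, (char *)NULL);        /* a successful exec stops with SIGTRAP */
        _exit(127);
    }

    long stops = 0;
    int status;
    if (waitpid(pid, &status, 0) != pid || !WIFSTOPPED(status))
        goto fail;
    /* Syscall stops will then report SIGTRAP | 0x80, unlike ordinary SIGTRAPs. */
    if (ptrace(PTRACE_SETOPTIONS, pid, NULL, (void *)(long)PTRACE_O_TRACESYSGOOD) != 0)
        goto fail;

    for (;;) {
        /* Resume until the next system-call entry or exit, or termination. */
        if (ptrace(PTRACE_SYSCALL, pid, NULL, NULL) != 0 ||
            waitpid(pid, &status, 0) != pid)
            goto fail;
        if (WIFEXITED(status) || WIFSIGNALED(status))
            return stops;                       /* tracee finished and was reaped */
        if (WIFSTOPPED(status) && WSTOPSIG(status) == (SIGTRAP | 0x80))
            stops++;                            /* one entry or exit stop */
    }

fail:
    kill(pid, SIGKILL);                         /* do not leave a stopped tracee behind */
    waitpid(pid, NULL, 0);
    return -1;
}
```

Most system calls produce two stops (entry and exit), while the final exit_group() produces only an entry stop. Environments that forbid ptrace(), for example through a restrictive seccomp profile or Yama's ptrace_scope setting, make the helper return -1.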

Kernel-integrated tracing in Linux, such as ftrace and kprobes, offers lower-level observation by hooking kernel functions, including system call entry points, to record execution flows inside the kernel without stopping the traced process or switching to a user-space tracer for each event. Ftrace, part of the kernel's tracing infrastructure, supports function graphing and event filtering through the tracefs filesystem, while kprobes allow dynamic breakpoints on almost any kernel instruction for custom data collection.[94][99] These mechanisms support the analysis of performance bottlenecks, such as excessive system call frequency, and of security problems, such as unauthorized file accesses, by generating statistics on call counts and durations.[100]

In the 2020s, extended Berkeley Packet Filter (eBPF)-based tools have advanced system call tracing with programmable, efficient kernel probes that minimize overhead compared to traditional methods. bpftrace, a high-level scripting language built on eBPF, enables concise scripts to trace specific system calls system-wide, such as monitoring execve for process spawning, and aggregates metrics like latency histograms.[101] For containerized environments, sysdig addresses visibility gaps by capturing system calls and events directly from the kernel, providing container-aware filtering to troubleshoot microservices without host-level noise.[102]

Despite their utility, tracing tools introduce performance overhead, typically a 5% to 20% slowdown for moderate workloads but potentially more than 100x in syscall-intensive scenarios, owing to interception and logging costs. When used for tracing they observe calls rather than change them, although ptrace-based tools such as strace can also inject faults or alter return values when explicitly asked to.[103][104]