Recent from talks

Nothing was collected or created yet.

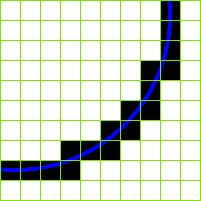

Sub-pixel resolution


In digital image processing, sub-pixel resolution can be obtained in images constructed from sources whose information exceeds the nominal pixel resolution of those images.[citation needed]

Example


For example, if the image of a ship 50 metres (160 ft) long, viewed side-on, is 500 pixels long, the nominal resolution (pixel size) on the side of the ship facing the camera is 0.1 metres (3.9 in). With sub-pixel techniques, well-resolved features can then be used to measure ship movements an order of magnitude (10×) smaller than the pixel size, that is, about 1 cm. Movement is specifically mentioned here because measuring absolute positions requires an accurate lens model and known reference points within the image to achieve sub-pixel position accuracy. Small movements, however, can be measured (down to 1 cm) with simple calibration procedures.[citation needed] Fitting functions often have a systematic bias that depends on where the feature lies relative to the pixel boundaries, so that estimated positions cluster toward particular sub-pixel values, typically integer pixel positions. Users should therefore take care to avoid these "pixel locking" (or "peak locking") effects.[1]
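A minimal sketch of sub-pixel feature localization and of pixel locking, assuming a noise-free feature with a Gaussian intensity profile sampled at pixel centers. The Gaussian profile, the function names, and the choice of estimators are illustrative assumptions, not the method of the cited reference. A three-point Gaussian fit (a parabola through the logarithms of the intensities) recovers the position exactly for such a profile, whereas a parabola through the raw intensities is pulled toward the nearest integer pixel, one form of the bias described above. The 0.1 m pixel size comes from the ship example.

```python
import math

PIXEL_SIZE_M = 50.0 / 500  # ship example: 50 m imaged onto 500 pixels -> 0.1 m per pixel


def sample_feature(center, n_pixels=21, sigma=1.5):
    """Noise-free feature with an assumed Gaussian profile, sampled at integer pixel positions."""
    return [math.exp(-((x - center) ** 2) / (2 * sigma ** 2)) for x in range(n_pixels)]


def _peak_index(profile):
    # Brightest interior pixel, so that both neighbours exist.
    return max(range(1, len(profile) - 1), key=lambda i: profile[i])


def _vertex_offset(left, mid, right):
    # Offset of the vertex of the parabola through (-1, left), (0, mid), (1, right).
    return 0.5 * (left - right) / (left - 2 * mid + right)


def peak_parabolic(profile):
    """Parabola through the raw intensities: simple, but biased toward integer pixels."""
    i = _peak_index(profile)
    return i + _vertex_offset(profile[i - 1], profile[i], profile[i + 1])


def peak_gaussian(profile):
    """Parabola through the log intensities: exact for a noise-free Gaussian profile."""
    i = _peak_index(profile)
    left, mid, right = (math.log(v) for v in profile[i - 1:i + 2])
    return i + _vertex_offset(left, mid, right)


# Two frames of the same feature, moved by 0.1 pixel between them.
frame_1 = sample_feature(10.0)
frame_2 = sample_feature(10.1)
shift_px = peak_gaussian(frame_2) - peak_gaussian(frame_1)
print(f"shift: {shift_px:.3f} px = {shift_px * PIXEL_SIZE_M * 100:.1f} cm")

# Pixel locking: for a true position of 10.3 px the raw-intensity parabola
# returns about 10.279 px, pulled toward the integer position 10.
print(f"gaussian fit:  {peak_gaussian(sample_feature(10.3)):.3f} px")
print(f"parabolic fit: {peak_parabolic(sample_feature(10.3)):.3f} px")
```

With real images, noise and a non-Gaussian point spread function make every estimator somewhat biased; a histogram of the fractional parts of many estimated positions that clusters near zero is one simple sign of pixel locking.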

Determining feasibility

Whether features in a digital image are sharp enough to achieve sub-pixel resolution can be quantified by measuring the point spread function (PSF) of an isolated point in the image. If the image contains no isolated points, similar methods can be applied to edges in the image. When attempting sub-pixel resolution it is also important to keep image noise to a minimum; for a stationary scene, the noise can be measured from a time series of images. Appropriate pixel averaging, both through time (for stationary scenes) and across space (for uniform regions of the image), is often used to prepare the image for sub-pixel resolution measurements.[citation needed]
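The noise measurement and temporal averaging described above can be sketched in pure Python. This is a minimal illustration under stated assumptions: a synthetic stationary 1-D scene (a linear ramp) with independent Gaussian noise stands in for a real time series of images, and the helper names (`synthetic_stack`, `temporal_noise`, `temporal_average`) are hypothetical. The per-pixel standard deviation over time estimates the noise, and the per-pixel temporal mean is the averaged image.

```python
import random
import statistics


def synthetic_stack(n_frames, width, noise_sigma, seed=0):
    """Hypothetical stationary 1-D scene (a ramp) plus independent Gaussian noise per frame."""
    rng = random.Random(seed)
    scene = [float(x) for x in range(width)]
    frames = [[v + rng.gauss(0.0, noise_sigma) for v in scene] for _ in range(n_frames)]
    return scene, frames


def temporal_noise(frames):
    """Noise estimate: per-pixel standard deviation over time, averaged over all pixels."""
    return statistics.fmean([statistics.stdev(column) for column in zip(*frames)])


def temporal_average(frames):
    """Average each pixel over time (only meaningful for a stationary scene)."""
    return [statistics.fmean(column) for column in zip(*frames)]


def rms(values):
    return (sum(v * v for v in values) / len(values)) ** 0.5


scene, frames = synthetic_stack(n_frames=100, width=200, noise_sigma=2.0)
averaged = temporal_average(frames)

single_frame_error = rms([f - s for f, s in zip(frames[0], scene)])
averaged_error = rms([a - s for a, s in zip(averaged, scene)])

print(f"estimated noise per frame: {temporal_noise(frames):.2f}")
print(f"single-frame error: {single_frame_error:.2f}, averaged error: {averaged_error:.2f}")
```

For uncorrelated noise, averaging N frames reduces the noise by roughly √N, so with 100 frames the averaged error should be about one tenth of the single-frame error.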


References

- [1] Feng, Y.; Goree, J.; Liu, B. (2007). "Accurate particle position measurement from images". Review of Scientific Instruments. 78: 053704. Also selected for Virtual Journal of Biological Physics Research, 13 (2007).

Sources

- Shimizu, M.; Okutomi, M. (2003). "Significance and attributes of subpixel estimation on area-based matching". Systems and Computers in Japan. 34 (12): 1–111. doi:10.1002/scj.10506. ISSN 1520-684X. S2CID 41202105.

- Nehab, D.; Rusinkiewicz, S.; Davis, J. (2005). "Improved sub-pixel stereo correspondences through symmetric refinement". Tenth IEEE International Conference on Computer Vision (ICCV'05) Volume 1. pp. 557–563. doi:10.1109/ICCV.2005.119. ISBN 0-7695-2334-X. ISSN 1550-5499. S2CID 14172959.

- Psarakis, E. Z.; Evangelidis, G. D. (2005). "An enhanced correlation-based method for stereo correspondence with subpixel accuracy". Tenth IEEE International Conference on Computer Vision (ICCV'05) Volume 1. pp. 907–912. doi:10.1109/ICCV.2005.33. ISBN 0-7695-2334-X. ISSN 1550-5499. S2CID 2723727.


Fundamentals

Definition and Basic Principles

Sub-pixel resolution refers to techniques that exploit the spatial arrangement and color components of sub-pixels within a pixel to infer or render details at a scale smaller than one full pixel. In digital imaging systems, this approach leverages the fact that a pixel, the smallest addressable unit in an image or display, often consists of sub-pixel elements that ordinary pixel data does not address individually, such as the red, green, and blue (RGB) components in liquid crystal displays (LCDs), to achieve higher effective detail without increasing the physical pixel count.[7][8]

The basic principles of sub-pixel resolution build on the Nyquist-Shannon sampling theorem, which states that a band-limited continuous signal can be perfectly reconstructed from its samples if the sampling frequency is greater than twice the highest frequency component of the signal. By introducing sampling at sub-pixel offsets, through shifts, color separation, or multiple frames, systems can effectively increase the sampling rate beyond that of the pixel grid, allowing reconstruction of higher-frequency spatial details that would otherwise be aliased or lost.[9][10]

The concept of sub-pixel resolution first emerged in the 1980s amid early advances in digital imaging, with seminal work on multi-frame restoration techniques that used sub-pixel displacements to overcome sensor limitations, evolving from foundational anti-aliasing methods developed in computer graphics during the same era.[10][11] These principles laid the groundwork for later applications and distinguish sub-pixel approaches from simple pixel-level processing by emphasizing the exploitation of sub-pixel structure for better perceptual or analytical outcomes.[12]

Sub-pixel Structure in Imaging Systems

In color imaging systems, such as liquid crystal displays (LCDs) and light-emitting diode (LED) panels, a pixel is typically composed of three primary sub-pixels (red, green, and blue, or RGB) arranged to form full-color representations. These sub-pixels are physically distinct elements, each capable of independent luminance control, and are often organized in a vertical stripe pattern in which red, green, and blue elements appear in sequence along each horizontal pixel row. This structure lets the human eye perceive a blended color at the pixel level while enabling finer spatial detail through sub-pixel-level addressing.[13] In monochrome imaging sensors, sub-pixels refer to conceptual fractional sampling areas within the pixel grid, where light-sensitive elements capture intensity data at sub-pixel offsets to support interpolation and reduce aliasing in high-resolution reconstruction.[14]

Sub-pixel layouts vary across display and sensor technologies to balance factors such as manufacturing efficiency and color fidelity. In traditional LCD and LED displays, the RGB stripe layout predominates, with sub-pixels forming continuous vertical columns across the screen for straightforward alignment and high color accuracy. In contrast, PenTile arrangements, commonly used in organic light-emitting diode (OLED) displays, employ an RGBG (red, green, blue, green) pattern in which every pixel has its own green sub-pixel while the red and blue sub-pixels are shared between adjacent pixels. This uses two sub-pixels per pixel instead of three, about one-third fewer elements than a full RGB stripe, while largely maintaining perceived resolution thanks to the eye's higher sensitivity to green. This diamond-shaped or staggered layout allows higher pixel densities in compact devices.
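The point that capturing intensity at sub-pixel offsets reduces aliasing, together with the sampling-theorem argument under Definition and Basic Principles, can be illustrated with a small, idealized experiment. This sketch assumes a noise-free sinusoidal scene, ideal point sampling (each pixel records the scene at a single point rather than integrating over its area), and a second frame whose half-pixel shift is known exactly; practical multi-frame methods must estimate the shifts and contend with pixel integration and noise. The names `scene`, `capture`, and `dominant_frequency` are illustrative.

```python
import cmath
import math

F_SIGNAL = 0.7  # cycles per pixel: above the 0.5 cycles/pixel Nyquist limit of the pixel grid


def scene(x):
    """Continuous scene intensity at horizontal position x (in pixels)."""
    return math.sin(2 * math.pi * F_SIGNAL * x)


def capture(n_pixels, offset):
    """Assumed ideal point-sampling sensor: one sample per pixel, shifted by `offset` pixels."""
    return [scene(n + offset) for n in range(n_pixels)]


def dominant_frequency(samples, spacing):
    """Strongest non-negative frequency (cycles/pixel) found by a naive DFT."""
    n = len(samples)

    def magnitude(k):
        return abs(sum(s * cmath.exp(-2j * math.pi * k * m / n) for m, s in enumerate(samples)))

    best_k = max(range(n // 2 + 1), key=magnitude)
    return best_k / (n * spacing)


frame_a = capture(20, 0.0)
frame_b = capture(20, 0.5)  # second frame shifted by half a pixel
# Interleave the frames: samples at 0, 0.5, 1, 1.5, ... pixels.
interleaved = [v for pair in zip(frame_a, frame_b) for v in pair]

print(dominant_frequency(frame_a, 1.0))      # 0.3: the 0.7 cycles/pixel pattern is aliased
print(dominant_frequency(interleaved, 0.5))  # 0.7: recovered after interleaving
```

Interleaving the two frames halves the sample spacing to 0.5 pixel, which raises the Nyquist limit to 1 cycle per pixel, so the 0.7 cycles/pixel pattern is no longer aliased.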
In CMOS and CCD image sensors, the Bayer filter pattern overlays color filters on the sensor array so that each photosite (pixel) captures only one color channel: typically half of the photosites are green, one quarter red, and one quarter blue, in a repeating 2×2 mosaic. Each filter-covered photosite thus acts as a single-channel sub-pixel sample until demosaicing reconstructs full color.[15][16]

The sub-pixel structure contributes to resolution beyond the nominal pixel grid through the spatial offsets between elements. For instance, in an RGB stripe layout the red, green, and blue sub-pixels each occupy one-third of the pixel width, so their centers are offset horizontally by about 1/3 of the pixel spacing; in principle this triples the number of horizontal sampling points compared with treating the full pixel as a single unit. This enhances perceived sharpness, particularly for edges and fine lines, as the eye integrates the offset luminances. The layout can be represented as a repeating horizontal sequence per row, [R | G | B | R | G | B], with identical alignment in subsequent rows. Spatial frequency analysis of this arrangement shows an extended modulation transfer function (MTF) in the horizontal direction, so higher horizontal frequencies can be reproduced before severe aliasing sets in. In RGBG PenTile configurations, the offsets between the two green sub-pixels and the red and blue sub-pixels of each group provide a comparable boost, with shared sampling approximating full RGB density.[13][7]

The evolution of sub-pixel structures reflects advances in display hardware from early cathode ray tube (CRT) systems to modern flat panels. CRTs employed phosphor triads, triangular clusters of red, green, and blue dots excited by electron beams, arranged in a dense mosaic to form pixels; their design prioritized shadow-mask precision for color purity over individual addressing.
As flat-panel technologies emerged, LCDs shifted to linear RGB stripe arrangements for easier fabrication and uniform backlighting, improving scalability for larger screens. Many contemporary OLED displays have moved further, to PenTile RGBG layouts, which use fewer sub-pixels in a non-rectangular grid to achieve higher densities and better energy efficiency; because the elements are self-emissive, they do not need uniform triads and can be patterned flexibly.[17]

Techniques

Sub-pixel Rendering in Displays

Sub-pixel rendering in displays is a software technique that enhances the perceived sharpness of text and graphics on color LCD screens by exploiting the sub-pixel structure of pixels, treating the red, green, and blue (RGB) components as independent luminance sources rather than as a single unit. This reduces aliasing artifacts, particularly in the horizontal direction, by allowing finer control over edge transitions. Popularized by Microsoft's ClearType, announced in 1998, the method was designed to improve readability on LCD panels, which were becoming prevalent in laptops and monitors at the time.[3][18]

The core process involves three key steps. First, font hinting adjusts glyph outlines at small sizes to align optimally with the sub-pixel grid, so that strokes fit the display's resolution. Second, anti-aliasing applies sub-pixel sampling to smooth edges by modulating the intensity of individual sub-pixels. Third, gamma correction is performed per color channel to account for the non-linear response of LCDs, preventing color imbalances while preserving contrast. Together, these steps let the renderer position glyph features with sub-pixel precision, effectively tripling the horizontal resolution on RGB stripe layouts, where the sub-pixels are arranged in a line.[18][19]

In LCD-optimized algorithms, the renderer samples at 1/3-pixel intervals horizontally, mapping the font outline to a virtual grid three times denser than the pixel grid. A basic sub-pixel anti-aliasing implementation can be outlined as follows:

    For each horizontal scanline of the glyph:
        For each sub-pixel position (R, G, B) along the line:
            Compute coverage = fraction of the sub-pixel area covered by the glyph
            If coverage > threshold:
                Set sub-pixel intensity = coverage * gamma-corrected value
            Else:
                Set sub-pixel intensity = 0
        Apply channel-specific filtering to blend adjacent sub-pixels and reduce fringing
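A minimal, runnable sketch of the outlined steps for one scanline of a vertical stem, assuming an RGB stripe layout, black text on a white background, and a display gamma of 2.2. The five-tap [1, 2, 3, 2, 1]/9 filter stands in for the "channel-specific filtering" step and is an illustrative choice, not ClearType's actual filter. Hinting is omitted, and unlike the outline, this sketch filters the linear coverage before gamma encoding. All function names are hypothetical.

```python
# Illustrative five-tap low-pass filter applied across neighbouring sub-pixels (assumption).
FILTER = (1 / 9, 2 / 9, 3 / 9, 2 / 9, 1 / 9)


def stem_coverage(x0, x1, n_pixels):
    """Coverage of each sub-pixel (1/3 pixel wide) by the span [x0, x1), in pixel units."""
    coverage = []
    for s in range(3 * n_pixels):
        left, right = s / 3, (s + 1) / 3
        overlap = max(0.0, min(right, x1) - max(left, x0))
        coverage.append(overlap * 3)  # covered fraction of the sub-pixel's width
    return coverage


def lcd_filter(values):
    """Spread each sub-pixel's value over its neighbours to soften color fringing."""
    half = len(FILTER) // 2
    out = []
    for i in range(len(values)):
        acc = 0.0
        for k, weight in enumerate(FILTER):
            j = i + k - half
            if 0 <= j < len(values):
                acc += weight * values[j]
        out.append(acc)
    return out


def render_scanline(x0, x1, n_pixels, gamma=2.2, threshold=0.0):
    """Return one (R, G, B) tuple per pixel, values in [0, 1], for black text on white."""
    coverage = [c if c > threshold else 0.0 for c in stem_coverage(x0, x1, n_pixels)]
    ink = lcd_filter(coverage)
    # Linear light is 1 - ink on a white background; clamp, then encode with the assumed gamma.
    encoded = [(1.0 - min(v, 1.0)) ** (1 / gamma) for v in ink]
    return [tuple(encoded[3 * p:3 * p + 3]) for p in range(n_pixels)]


# A stem 1/3 pixel wide that covers only the red sub-pixel of pixel 1.
for pixel in render_scanline(1.0, 4 / 3, n_pixels=3):
    print(tuple(round(v, 3) for v in pixel))
```

Because the stem's edges fall on a grid three times finer than the pixels, moving the stem by 1/3 pixel shifts the filtered output by exactly one sub-pixel; the unequal R, G, and B values in the printed rows are the colored fringe that the filtering step is meant to soften.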