
Lookup table


In computer science, a lookup table (LUT) is an array that replaces runtime computation of a mathematical function with a simpler array indexing operation, in a process termed direct addressing. The savings in processing time can be significant, because retrieving a value from memory is often faster than carrying out an "expensive" computation or input/output operation.[1] The tables may be precalculated and stored in static program storage, calculated (or "pre-fetched") as part of a program's initialization phase (memoization), or even stored in hardware in application-specific platforms. Lookup tables are also used extensively to validate input values by matching against a list of valid (or invalid) items in an array and, in some programming languages, may include function pointers (or offsets to labels) to process the matching input. FPGAs also make extensive use of reconfigurable, hardware-implemented lookup tables to provide programmable hardware functionality. LUTs differ from hash tables in that, to retrieve a value $v$ with key $k$, a hash table would store the value $v$ in the slot $h(k)$, where $h$ is a hash function (that is, $k$ is used to compute the slot), while in the case of a LUT, the value $v$ is stored in slot $k$ and is thus directly addressable.[2]: 466

History

[edit]

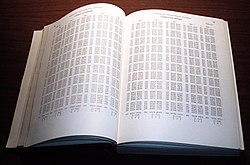

Before the advent of computers, lookup tables of values were used to speed up hand calculations of complex functions, such as in trigonometry, logarithms, and statistical density functions.[3]

In India in 499 AD, Aryabhata created one of the first sine tables, which he encoded in a Sanskrit-letter-based number system. In 493 AD, Victorius of Aquitaine wrote a 98-column multiplication table which gave (in Roman numerals) the product of every number from 2 to 50 times each number in its rows, which were "a list of numbers starting with one thousand, descending by hundreds to one hundred, then descending by tens to ten, then by ones to one, and then the fractions down to 1/144".[4] Modern schoolchildren are often taught to memorize "times tables" to avoid calculations of the most commonly used numbers (up to 9 × 9 or 12 × 12).

Early in the history of computers, input/output operations were particularly slow, even in comparison to processor speeds of the time. It made sense to reduce expensive read operations by a form of manual caching: creating either static lookup tables (embedded in the program) or dynamic prefetched arrays containing only the most commonly occurring data items. Despite the introduction of systemwide caching that now automates this process, application-level lookup tables can still improve performance for data items that rarely, if ever, change.

Lookup tables were one of the earliest functionalities implemented in computer spreadsheets, with the initial version of VisiCalc (1979) including a LOOKUP function among its original 20 functions.[5] Subsequent spreadsheets, such as Microsoft Excel, followed suit and added the specialized VLOOKUP and HLOOKUP functions to simplify lookup in a vertical or horizontal table. In Microsoft Excel, the XLOOKUP function, which can search either a column or a row and return the matching item from a separate range, began rolling out on 28 August 2019.[citation needed]

Limitations

Although a LUT guarantees O(1) performance for a lookup operation, no two entities or values can have the same key $k$. When the size of the universe $U$ from which the keys are drawn is large, it might be impractical or impossible to store the table in memory. Hence, in this case, a hash table would be a preferable alternative.[2]: 468

Examples

Trivial hash function

For a trivial hash function lookup, the unsigned raw data value is used directly as an index to a one-dimensional table to extract a result. For small ranges, this can be amongst the fastest lookup, even exceeding binary search speed with zero branches and executing in constant time.[6]
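The following C sketch illustrates a trivial hash function lookup. It is not from the cited source: the names hex_value, init_hex_table, and hex_digit_value are hypothetical, and an ASCII-compatible character set is assumed. The raw byte is used directly as the index into a 256-entry table that maps each hexadecimal digit to its value and every other byte to -1:

```c
#include <assert.h>
#include <stdint.h>

/* hex_value[c] is the value of hexadecimal digit c, or -1 if c is not a
   hex digit. Hypothetical table; assumes an ASCII-compatible charset. */
static int8_t hex_value[256];

/* Fill the table once, e.g. during program initialization. */
void init_hex_table(void) {
    for (int c = 0; c < 256; c++)
        hex_value[c] = -1;
    for (int d = 0; d < 10; d++)
        hex_value['0' + d] = (int8_t)d;
    for (int d = 0; d < 6; d++) {
        hex_value['a' + d] = (int8_t)(10 + d);
        hex_value['A' + d] = (int8_t)(10 + d);
    }
}

/* The raw byte is the index (a trivial hash): one load, no comparisons. */
int hex_digit_value(unsigned char c) {
    return hex_value[c];
}
```

Since every possible byte value has an entry, the lookup needs no range check and no comparisons; the price is 256 bytes of table storage.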

Counting bits in a series of bytes

One discrete problem that is expensive to solve on many computers is that of counting the number of bits that are set to 1 in a (binary) number, sometimes called the population function. For example, the decimal number "37" is "00100101" in binary, so it contains three bits that are set to binary "1".[7]: 282

A simple example of C code, designed to count the 1 bits in an unsigned int, might look like this:[7]: 283

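```c
/* Count the 1 bits in x. Each iteration clears the lowest set bit
   (x & (x - 1)), so the loop runs once per 1 bit. */
int count_ones(unsigned int x) {
    int result = 0;
    while (x != 0) {
        x = x & (x - 1);
        result++;
    }
    return result;
}
```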

In the worst case (all 32 bits set), the loop above runs 32 times for a 32-bit value, and the conditional branch in every iteration can cost several clock cycles. The loop can be "unrolled" into a lookup table, which in turn uses a trivial hash function for better performance.[7]: 282-283

The array bits_set, with 256 entries, is constructed by giving the number of one bits set in each possible byte value (e.g. 0x00 = 0, 0x01 = 1, 0x02 = 1, and so on). Although a runtime algorithm could generate the bits_set array, doing so spends clock cycles that a precomputed table avoids, given the table's small size, so a precomputed table is used; alternatively, a compile-time script could generate the table and append it to the source file. The number of ones in each byte of the integer is then found by a trivial hash function lookup on that byte, which avoids branches and considerably improves performance.[7]: 284

```c
#include <stdint.h>

int count_ones(uint32_t input_value) {
    /* View the 32-bit value as four bytes. unsigned char keeps every byte
       in 0..255, so it is always a valid index into bits_set. */
    union four_bytes {
        uint32_t big_int;
        unsigned char each_byte[4];
    } operand = { .big_int = input_value };

    /* bits_set[b] is the number of 1 bits in the byte value b. */
    static const int bits_set[256] = {
        0, 1, 1, 2, 1, 2, 2, 3, 1, 2, 2, 3, 2, 3, 3, 4, 1, 2, 2, 3, 2, 3, 3, 4,
        2, 3, 3, 4, 3, 4, 4, 5, 1, 2, 2, 3, 2, 3, 3, 4, 2, 3, 3, 4, 3, 4, 4, 5,
        2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6, 1, 2, 2, 3, 2, 3, 3, 4,
        2, 3, 3, 4, 3, 4, 4, 5, 2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6,
        2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6, 3, 4, 4, 5, 4, 5, 5, 6,
        4, 5, 5, 6, 5, 6, 6, 7, 1, 2, 2, 3, 2, 3, 3, 4, 2, 3, 3, 4, 3, 4, 4, 5,
        2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6, 2, 3, 3, 4, 3, 4, 4, 5,
        3, 4, 4, 5, 4, 5, 5, 6, 3, 4, 4, 5, 4, 5, 5, 6, 4, 5, 5, 6, 5, 6, 6, 7,
        2, 3, 3, 4, 3, 4, 4, 5, 3, 4, 4, 5, 4, 5, 5, 6, 3, 4, 4, 5, 4, 5, 5, 6,
        4, 5, 5, 6, 5, 6, 6, 7, 3, 4, 4, 5, 4, 5, 5, 6, 4, 5, 5, 6, 5, 6, 6, 7,
        4, 5, 5, 6, 5, 6, 6, 7, 5, 6, 6, 7, 6, 7, 7, 8};

    /* One trivial-hash lookup per byte; no branches. */
    return (bits_set[operand.each_byte[0]] + bits_set[operand.each_byte[1]] +
            bits_set[operand.each_byte[2]] + bits_set[operand.each_byte[3]]);
}
```
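The bits_set table need not be typed in by hand. As a sketch of the runtime alternative mentioned above (the names are illustrative, and a fixed-width uint32_t input is assumed), the table can be filled once at start-up using the recurrence that the number of ones in i equals the number in i >> 1 plus the lowest bit of i:

```c
#include <assert.h>
#include <stdint.h>

static uint8_t bits_set[256];

/* Build the 256-entry population-count table once. Each entry reuses an
   earlier one: count(i) = count(i >> 1) + (lowest bit of i). */
void init_bits_set(void) {
    bits_set[0] = 0;
    for (int i = 1; i < 256; i++)
        bits_set[i] = (uint8_t)(bits_set[i >> 1] + (i & 1));
    assert(bits_set[255] == 8);   /* sanity check: all eight bits set */
}

/* Count the 1 bits of a 32-bit value with four table lookups, one per byte. */
int count_ones_table(uint32_t x) {
    return bits_set[x & 0xFF] + bits_set[(x >> 8) & 0xFF] +
           bits_set[(x >> 16) & 0xFF] + bits_set[x >> 24];
}
```

The 255-iteration initialization runs once, after which each count costs four lookups, as in the precomputed version.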

Lookup tables in image processing


"Lookup tables (LUTs) are an excellent technique for optimizing the evaluation of functions that are expensive to compute and inexpensive to cache. ... For data requests that fall between the table's samples, an interpolation algorithm can generate reasonable approximations by averaging nearby samples."[8]

In data analysis applications, such as image processing, a lookup table (LUT) can be used to transform the input data into a more desirable output format. For example, a grayscale picture of the planet Saturn could be transformed into a color image to emphasize the differences in its rings.

In image processing, lookup tables are often called LUTs (or 3D LUTs), and give an output value for each of a range of index values. One common LUT, called the colormap or palette, is used to determine the colors and intensity values with which a particular image will be displayed. In computed tomography, "windowing" refers to a related concept for determining how to display the intensity of measured radiation.
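As a minimal sketch of an intensity LUT in the spirit of windowing (the function names and the 8-bit grayscale format are assumptions for illustration), the code below builds a 256-entry table that maps values at or below a lower bound to black, values at or above an upper bound to white, and stretches the range in between, then applies it to every pixel with a single array access:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Build a hypothetical 8-bit "window" LUT: v <= lo -> 0, v >= hi -> 255,
   values in between are stretched linearly. Requires lo < hi. */
void build_window_lut(uint8_t lut[256], int lo, int hi) {
    assert(0 <= lo && lo < hi && hi <= 255);
    for (int v = 0; v < 256; v++) {
        if (v <= lo)
            lut[v] = 0;
        else if (v >= hi)
            lut[v] = 255;
        else
            lut[v] = (uint8_t)((v - lo) * 255 / (hi - lo));
    }
}

/* Apply the LUT in place: one array access per pixel, no arithmetic. */
void apply_lut(uint8_t *pixels, size_t count, const uint8_t lut[256]) {
    for (size_t i = 0; i < count; i++)
        pixels[i] = lut[pixels[i]];
}
```

Building the table costs 256 evaluations regardless of image size, so for images with many more than 256 pixels the per-pixel arithmetic effectively disappears.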

Discussion


A classic example of reducing run-time computations using lookup tables is to obtain the result of a trigonometry calculation, such as the sine of a value.[9] Calculating trigonometric functions can substantially slow a computing application. The same application can finish much sooner when it first precalculates the sine of a number of values, for example for each whole number of degrees (the table can be defined as static variables at compile time, reducing repeated run-time costs). When the program requires the sine of a value, it can use the lookup table to retrieve the closest sine value from a memory address, and may also interpolate to the sine of the desired value, instead of calculating it by mathematical formula. Lookup tables can thus be used by mathematics coprocessors in computer systems. An error in a lookup table was responsible for Intel's infamous Pentium floating-point division (FDIV) bug.

Functions of a single variable (such as sine and cosine) may be implemented by a simple array. Functions involving two or more variables require multidimensional array indexing techniques. For example, a two-dimensional array power[x][y] can replace a function that calculates $x^y$ for a limited range of x and y values. Functions that have more than one result may be implemented with lookup tables that are arrays of structures.
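A two-dimensional table of this kind might look like the following C sketch. The array name power comes from the text; the limited ranges (bases 0 to 15, exponents 0 to 7) and the helper names are assumptions chosen so that every entry fits in 64 bits:

```c
#include <assert.h>
#include <stdint.h>

#define MAX_BASE 16   /* assumed range: x in 0..15 */
#define MAX_EXP  8    /* assumed range: y in 0..7  */

/* power[x][y] == x^y for the limited range above (15^7 fits in 64 bits). */
static uint64_t power[MAX_BASE][MAX_EXP];

/* Fill each row by repeated multiplication, once at start-up. */
void init_power_table(void) {
    for (int x = 0; x < MAX_BASE; x++) {
        power[x][0] = 1;                 /* x^0 = 1, taking 0^0 = 1 */
        for (int y = 1; y < MAX_EXP; y++)
            power[x][y] = power[x][y - 1] * (uint64_t)x;
    }
}

/* Two-dimensional indexing replaces the exponentiation. */
uint64_t lookup_power(int x, int y) {
    assert(0 <= x && x < MAX_BASE && 0 <= y && y < MAX_EXP);
    return power[x][y];
}
```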

As mentioned, there are intermediate solutions that use tables in combination with a small amount of computation, often using interpolation. Pre-calculation combined with interpolation can produce higher accuracy for values that fall between two precomputed values. This technique requires slightly more time to be performed but can greatly enhance accuracy in applications that require it. Depending on the values being precomputed, precomputation with interpolation can also be used to shrink the lookup table size while maintaining accuracy.

While often effective, employing a lookup table may nevertheless result in a severe penalty if the computation that the LUT replaces is relatively simple. Memory retrieval time and the complexity of memory requirements can increase application operation time and system complexity relative to what straight formula computation would require. Polluting the cache may also become a problem: accesses to a large table are likely to cause cache misses, an issue that grows as processor speeds outpace memory speeds. A similar issue appears in rematerialization, a compiler optimization. In some environments, such as the Java programming language, table lookups can be even more expensive because of mandatory bounds checking, which adds a comparison and branch to each lookup.

There are two fundamental limitations on when it is possible to construct a lookup table for a required operation. One is the amount of memory that is available: one cannot construct a lookup table larger than the space available for the table, although it is possible to construct disk-based lookup tables at the expense of lookup time. The other is the time required to compute the table values in the first instance; although this usually needs to be done only once, if it takes a prohibitively long time, it may make the use of a lookup table an inappropriate solution. As previously stated, however, tables can be statically defined in many cases.

Computing sines

Most computers only perform basic arithmetic operations and cannot directly calculate the sine of a given value. Instead, they use the CORDIC algorithm or a complex formula such as the following Taylor series to compute the value of sine to a high degree of precision:[10]: 5

$$\sin(x) \approx x - \frac{x^3}{6} + \frac{x^5}{120} - \frac{x^7}{5040} \quad \text{(for } x \text{ close to 0)}$$

However, this can be expensive to compute, especially on slow processors, and there are many applications, particularly in traditional computer graphics, that need to compute many thousands of sine values every second. A common solution is to initially compute the sine of many evenly distributed values, and then to find the sine of x by choosing the sine of the value closest to x through an array indexing operation. This will be close to the correct value because sine is a continuous function with a bounded rate of change.[10]: 6 For example:[11]: 545–548

```
real array sine_table[-1000..1000]
for x from -1000 to 1000
    sine_table[x] = sine(pi * x / 1000)

function lookup_sine(x)
    return sine_table[round(1000 * x / pi)]
```

Unfortunately, the table requires quite a bit of space: if IEEE double-precision floating-point numbers are used, its 2,001 entries occupy over 16,000 bytes. Fewer samples can be used, but then precision significantly worsens. One good solution is linear interpolation, which draws a line between the two points in the table on either side of the value and locates the answer on that line. This is still quick to compute, and much more accurate for smooth functions such as the sine function. Here is an example using linear interpolation:

```
function lookup_sine(x)
    x1 = floor(x * 1000 / pi)
    y1 = sine_table[x1]
    y2 = sine_table[x1 + 1]
    return y1 + (y2 - y1) * (x * 1000 / pi - x1)
```

Linear interpolation provides for an interpolated function that is continuous, but will not, in general, have continuous derivatives. For smoother interpolation of table lookup that is continuous and has continuous first derivative, one should use the cubic Hermite spline.

When using interpolation, the size of the lookup table can be reduced by using nonuniform sampling, which means that where the function is close to straight, we use few sample points, while where it changes value quickly we use more sample points to keep the approximation close to the real curve. For more information, see interpolation.

Other usages of lookup tables

Caches

Storage caches (including disk caches for files, or processor caches for either code or data) also work like a lookup table. The table is built with very fast memory instead of being stored on slower external memory, and it maintains two pieces of data for a sub-range of bits composing an external memory (or disk) address (notably the lowest bits of any possible external address):

- one piece (the tag) contains the value of the remaining bits of the address; if these bits match with those from the memory address to read or write, then the other piece contains the cached value for this address.

- the other piece maintains the data associated with that address.

A single (fast) lookup is performed to read the tag in the lookup table at the index specified by the lowest bits of the desired external storage address, and to determine if the memory address is hit by the cache. When a hit is found, no access to external memory is needed (except for write operations, where the cached value may need to be updated asynchronously to the slower memory after some time, or if the position in the cache must be replaced to cache another address).
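The lookup described above can be sketched in C for a hypothetical direct-mapped cache with 256 lines. For simplicity this sketch assumes word addresses (no byte-offset bits) and caches one 32-bit word per line; all names are illustrative:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define INDEX_BITS 8                          /* 256 cache lines (assumed) */
#define NUM_LINES  (1u << INDEX_BITS)

/* One cache line: the tag (remaining high address bits) and the data. */
struct cache_line {
    bool     valid;
    uint32_t tag;
    uint32_t data;
};

static struct cache_line cache[NUM_LINES];

/* The low address bits index the table directly; the stored tag is then
   compared with the remaining high bits to decide hit or miss. */
bool cache_lookup(uint32_t addr, uint32_t *out) {
    uint32_t index = addr & (NUM_LINES - 1);
    uint32_t tag   = addr >> INDEX_BITS;
    if (cache[index].valid && cache[index].tag == tag) {
        *out = cache[index].data;
        return true;                          /* hit: no external access */
    }
    return false;                             /* miss: read slower memory */
}

/* After a miss, fill (or replace) the line that addr maps to. */
void cache_fill(uint32_t addr, uint32_t value) {
    uint32_t index = addr & (NUM_LINES - 1);
    cache[index] = (struct cache_line){ true, addr >> INDEX_BITS, value };
}
```

Two addresses with the same low bits, such as 0x1234 and 0x5534, compete for the same line, so filling one evicts the other.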

Hardware LUTs

In digital logic, a lookup table can be implemented with a multiplexer whose select lines are driven by the address signal and whose inputs are the values of the elements contained in the array. These values can either be hard-wired, as in an ASIC whose purpose is specific to a function, or provided by D latches, which allow for configurable values. Lookup table contents can also be stored in memory such as ROM, EPROM, EEPROM, or RAM.

An n-bit LUT can encode any n-input Boolean function by storing the truth table of the function in the LUT. This is an efficient way of encoding Boolean logic functions, and LUTs with 4 to 6 inputs are in fact the key component of modern field-programmable gate arrays (FPGAs), which provide reconfigurable hardware logic capabilities.
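The idea can be modelled in software. The following C sketch (all names are hypothetical) stores a 4-input LUT's truth table in a 16-bit configuration word, programs it from an arbitrary Boolean function, and evaluates it by using the input bits as the address, much as the LUT's multiplexer does in hardware:

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical 4-input LUT: bit i of the configuration word is the
   output for the input combination whose bits spell i. */
typedef uint16_t lut4;

/* Evaluate: the four inputs form a 4-bit address into the truth table. */
int lut4_eval(lut4 config, int a, int b, int c, int d) {
    unsigned addr = (unsigned)((a & 1) | ((b & 1) << 1) |
                               ((c & 1) << 2) | ((d & 1) << 3));
    return (config >> addr) & 1;
}

/* Program: evaluate any 4-input Boolean function once per combination. */
lut4 lut4_program(int (*f)(int, int, int, int)) {
    lut4 config = 0;
    for (unsigned i = 0; i < 16; i++)
        if (f(i & 1, (i >> 1) & 1, (i >> 2) & 1, (i >> 3) & 1))
            config |= (lut4)(1u << i);
    return config;
}

/* Example function to program into the LUT: 4-input odd parity. */
int parity4(int a, int b, int c, int d) {
    return a ^ b ^ c ^ d;
}
```

For example, 4-input odd parity programs to the configuration word 0x6996, and a 4-input AND to 0x8000 (only the all-ones address yields 1).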

Data acquisition and control systems

In data acquisition and control systems, lookup tables are commonly used for the following operations:

- Applying calibration data, so as to correct uncalibrated measurement or setpoint values;

- Converting measurement units; and

- Performing generic user-defined computations.

In some systems, polynomials may also be defined in place of lookup tables for these calculations.
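As an illustrative sketch of applying calibration data (the calibration points below are invented for the example, not measured values), a table sampled at uniform raw steps can be indexed directly and interpolated linearly between neighboring points:

```c
#include <assert.h>
#include <stddef.h>

/* Corrected values at raw readings 0, 100, 200, 300. These numbers are
   invented for illustration; real data would come from calibration. */
#define CAL_STEP 100.0
static const double cal_table[] = { -0.5, 99.0, 201.5, 302.0 };
#define CAL_LEN (sizeof cal_table / sizeof cal_table[0])

/* Index the sample at or below raw directly, then interpolate linearly
   to the next sample; readings outside the table are clamped to its ends. */
double apply_calibration(double raw) {
    if (raw <= 0.0)
        return cal_table[0];
    double pos = raw / CAL_STEP;
    if (pos >= (double)(CAL_LEN - 1))
        return cal_table[CAL_LEN - 1];
    size_t i = (size_t)pos;                   /* truncation == floor here */
    double t = pos - (double)i;               /* fraction between i and i+1 */
    return cal_table[i] + t * (cal_table[i + 1] - cal_table[i]);
}
```

A polynomial fit, as mentioned above, would replace the table and the interpolation with a single formula evaluated per reading.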

See also

- Associative array

- Branch table

- Gal's accurate tables

- Memoization

- Memory-bound function

- Nearest-neighbor interpolation

- Shift register lookup table

- Palette, a.k.a. color lookup table or CLUT – for the usage in computer graphics

- 3D lookup table – usage in film industry

References

- [1] McNamee, Paul (21 August 1998). "Automated Memoization in C++". Archived from the original on 16 April 2019.

- [2] Kwok, W.; Haghighi, K.; Kang, E. (1995). "An efficient data structure for the advancing-front triangular mesh generation technique". Communications in Numerical Methods in Engineering. 11 (5). Wiley & Sons: 465–473. doi:10.1002/cnm.1640110511.

- [3] Campbell-Kelly, Martin; Croarken, Mary; Robson, Eleanor, eds. (2003). The History of Mathematical Tables: From Sumer to Spreadsheets. Oxford University Press.

- [4] Maher, David W. J.; Makowski, John F. (2001). "Literary Evidence for Roman Arithmetic With Fractions". Classical Philology. 96 (4): 376–399. (See p. 383.)

- [5] Jelen, Bill (31 March 2012). "From 1979 – VisiCalc and LOOKUP!". MrExcel East.

- [6] Cormen, Thomas H. (2009). Introduction to Algorithms (3rd ed.). Cambridge, Mass.: MIT Press. pp. 253–255. ISBN 9780262033848. Retrieved 26 November 2015.

- [7] Jungck, P.; Duncan, R.; Mulcahy, D. (2011). "Developing for Performance". In: packetC Programming. Apress. doi:10.1007/978-1-4302-4159-1_26. ISBN 978-1-4302-4159-1.

- [8] NVIDIA. "Using Lookup Tables to Accelerate Color Transformations". GPU Gems 2.

- [9] Sasao, T.; Butler, J. T.; Riedel, M. D. "Application of LUT Cascades to Numerical Function Generators". Defense Technical Information Center. Naval Postgraduate School, Monterey, CA, Department of Electrical and Computer Engineering. Retrieved 17 May 2024.

- [10] Sharif, Haidar (2014). "High-performance mathematical functions for single-core architectures". Journal of Circuits, Systems and Computers. 23 (4). World Scientific. doi:10.1142/S0218126614500510.

- [11] Hyde, Randall (1 March 2010). The Art of Assembly Language (2nd ed.) (PDF). No Starch Press. ISBN 978-1593272074. Via University of Campinas Institute of Computing.

External links

- Fast table lookup using input character as index for branch table

- Art of Assembly: Calculation via Table Lookups

- "Bit Twiddling Hacks" (includes lookup tables) By Sean Eron Anderson of Stanford University

- Memoization in C++ by Paul McNamee, Johns Hopkins University showing savings

- "The Quest for an Accelerated Population Count" by Henry S. Warren Jr.

Lookup table

Fundamentals

Definition

A lookup table (LUT) is a data structure in computer science consisting of an array or table that maps input values, serving as keys, to corresponding output values, thereby replacing potentially complex runtime computations with efficient direct indexing operations.[6] This approach speeds up data retrieval by pre-storing results that would otherwise require algorithmic calculation.[7]

Key characteristics of a lookup table include its fixed-size array structure, which accommodates a predefined range of inputs, and its reliance on precomputed values stored at specific indices.[2] Inputs are used directly as indices to access outputs, assuming a dense and consecutive key space, which enables constant-time O(1) retrieval without additional processing steps.[8][2]

Lookup tables differ from general-purpose arrays, which provide versatile sequential storage without an inherent mapping intent, in that they are optimized specifically for key-to-value translation via precomputation.[2] In contrast to hash tables, which use hash functions to map arbitrary keys to indices and handle collisions through mechanisms such as chaining, lookup tables use direct indexing for predefined, integer-based keys, avoiding hashing overhead and dynamic adjustments.[2] The concept traces back to manual mathematical tables that predate digital computation.[9]

Basic Implementation

A basic implementation of a lookup table employs a one-dimensional array to store precomputed values, where each array index directly corresponds to a possible input value within a defined range.[10] This approach assumes discrete inputs that map straightforwardly to integer indices, enabling rapid retrieval without on-the-fly computation.[11] To construct the table, first identify the input range (e.g., integers from 0 to max_input) and allocate an array of size max_input + 1. Then iterate over each index and populate the array with the precomputed output for that input using the target function. The following pseudocode illustrates this initialization process:

```
function initialize_lookup_table(max_input):
    table = new array[max_input + 1]
    for i = 0 to max_input:
        table[i] = compute_function(i)  // Precompute the desired output
    return table
```


Retrieval is then a single indexing operation, output = table[input].[10] For inputs that may fall outside the valid range or require mapping from a continuous domain, normalization techniques such as scaling (e.g., index = floor(input * (table_size - 1) / max_possible_input)) or a modulo operation (e.g., index = input % table_size) keep the index within bounds.[11] The lookup operation itself is then simply:

```
function lookup(table, input):
    normalized_index = normalize(input)  // Apply scaling or modulo as needed
    if 0 <= normalized_index < table.length:
        return table[normalized_index]
    else:
        // Handle out-of-range error
```
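A minimal C rendering of the two pseudocode routines might look as follows. compute_function, normalize, MAX_INPUT, and the sample function n² mod 97 are placeholders assumed for illustration; normalization uses the modulo option, and an out-of-range result is reported to the caller instead of being read:

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_INPUT  255                 /* hypothetical input range 0..255 */
#define TABLE_SIZE (MAX_INPUT + 1)

/* Placeholder for the expensive function being tabulated (assumed). */
static int compute_function(int n) {
    return (n * n) % 97;
}

static int table[TABLE_SIZE];

/* Precompute every output once, e.g. at program start-up. */
void initialize_lookup_table(void) {
    for (int i = 0; i <= MAX_INPUT; i++)
        table[i] = compute_function(i);
}

/* Hypothetical normalization by modulo. In C, % yields a negative result
   for a negative input, so the bounds check below is still needed. */
static int normalize(int input) {
    return input % TABLE_SIZE;
}

/* Store the entry in *out and return true, or return false when the
   normalized index is out of range (the caller handles the error). */
bool lookup(int input, int *out) {
    int index = normalize(input);
    if (0 <= index && index < TABLE_SIZE) {
        *out = table[index];
        return true;
    }
    return false;
}
```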


Historical Development

Early Uses

The concept of lookup tables predates digital computing, originating in ancient mathematical practices where precomputed values facilitated rapid calculations. In ancient Mesopotamia, particularly among the Babylonians around 2000 BCE, multiplication tables inscribed on clay tablets were used to expedite arithmetic in a base-60 system, allowing users to reference products of numbers instead of performing repeated additions.[13] These tables represented an early form of tabular data lookup, essential for administrative and astronomical computations in Babylonian society.[14]

By the early 17th century, lookup tables had evolved into more sophisticated tools for complex computations. Scottish mathematician John Napier introduced logarithm tables in his 1614 publication Mirifici Logarithmorum Canonis Descriptio, providing precalculated values that simplify multiplication and division by converting them into additions and subtractions of logarithms.[15] Napier's tables, based on a geometric construction of proportional scales, marked a significant advancement in tabular methods and influenced scientific calculation until electronic aids became available.[15]

In the late 19th century, mechanical devices began incorporating lookup principles for large-scale data processing. Herman Hollerith's tabulating machine, developed for the 1890 U.S. Census, used punched cards where the position of holes represented data attributes, enabling electrical circuits to "look up" and tally demographic information efficiently.[16] This electromechanical system processed over 62 million cards in months, reducing census tabulation time from years to weeks and demonstrating the utility of lookup in automated data retrieval.[16]

The transition to electronic computing in the mid-20th century integrated lookup tables directly into machine operations. The ENIAC, completed in 1945 by John Mauchly and J. Presper Eckert at the University of Pennsylvania, employed function tables (large arrays of switches and plugs storing precomputed values) to generate ballistic firing tables for the U.S. Army.[17] These tables allowed the machine to reference arbitrary functions, such as resistance-velocity relationships, speeding up trajectory calculations from hours to seconds.[17]

John von Neumann played a pivotal role in formalizing lookup mechanisms within stored-program architectures during the 1940s. In his 1945 "First Draft of a Report on the EDVAC," von Neumann outlined a design in which instructions and data shared the same memory, so that program code could be fetched from memory as numerical values and executed dynamically.[18] This concept, developed amid World War II efforts, shifted computing from fixed wiring to flexible memory-based lookups, laying the groundwork for general-purpose electronic computers.[19]

Modern Evolution

In the mid-20th century, lookup tables moved from manual precursors to integral components of early digital computing systems. During the 1950s and 1960s, they were incorporated into programming languages and small computers to simplify computations and reduce hardware complexity. FORTRAN, developed by IBM and first released in 1957, supported array-based tables through features like the EQUIVALENCE statement introduced in FORTRAN II in 1958, which enabled shared storage for efficient data access akin to lookup operations. Similarly, the IBM 1620, announced in 1959, relied on memory-resident lookup tables for core arithmetic functions such as addition and multiplication, storing precomputed results in fixed core locations so that it could perform these operations without dedicated adder hardware.[20] By the 1970s, these software and memory-based approaches had become standard in minicomputer environments for scientific and data processing tasks.

The 1980s marked a shift toward hardware integration with the advent of very-large-scale integration (VLSI) and programmable logic devices. Xilinx, founded in 1984, introduced the XC2064 in 1985, the first commercially viable field-programmable gate array (FPGA), which used lookup tables (LUTs) as configurable logic blocks to implement arbitrary Boolean functions through RAM-based storage of truth tables.[21] This innovation enabled rapid prototyping and customization in digital design, turning LUTs from software constructs into reconfigurable hardware primitives and paving the way for their widespread adoption in embedded and signal processing applications.

From the 2000s onward, lookup tables were optimized for embedded systems and graphics processing units (GPUs) to meet real-time processing demands. In embedded contexts, automated tools like Mesa, developed in the mid-2000s, facilitated LUT generation and error-bounded approximations for resource-constrained devices, improving performance in applications such as fixed-point arithmetic.[22] On GPUs, LUTs accelerated parallel computations, as seen in real-time subdivision kernels that used texture-based tables for graphics rendering as early as 2005, and later in hashing and name lookup engines leveraging GPU parallelism for high-throughput data access.[23] In machine learning, embedding lookup tables emerged as a key technique in neural networks; TensorFlow, released in 2015, incorporated tf.nn.embedding_lookup to map categorical inputs to dense vectors via partitioned tables, enabling scalable models for recommendation systems and natural language processing.[24]

This progression from software arrays to hardware-accelerated implementations extended to processor-level support in Intel's x86 architecture. The BMI2 extension, introduced with the Haswell microarchitecture in 2013, added PDEP (parallel bits deposit) and PEXT (parallel bits extract), which accelerate the bit scattering and gathering operations that complement sparse lookup table access in algorithms like indexing and permutation.[25]

In the 2020s, lookup tables advanced further in emerging technologies. For instance, configurable lookup tables (CLUTs) were integrated into quantum computing implementations, enabling dynamic oracle switching in Grover's algorithm with 22-qubit operations reported as scalable to 32 qubits as of October 2025. Additionally, image-adaptive 3D lookup tables gained traction for real-time image enhancement and restoration, supporting efficient inference in computer vision applications, as demonstrated in research from 2024.[26][27]

Principles of Operation

Advantages

Lookup tables offer significant performance advantages through their constant-time O(1) access mechanism, which relies on direct array indexing rather than iterative or algorithmic computation. This approach replaces complex function evaluations, such as those involving loops, multiplications, or conditional branches, with simple memory lookups, enabling substantial speedups in repeated operations. For instance, in scientific computing applications, automated lookup table transformations have demonstrated performance improvements ranging from 1.4× to 6.9× over the original code, primarily because runtime calculations are replaced by precomputed values stored in memory.[28]

The simplicity of lookup tables further enhances their utility: results are precomputed during initialization, avoiding potential runtime errors associated with repetitive or intricate calculations. By storing exact or approximated function outputs in advance, developers can sidestep issues like accumulated floating-point error or overflow in dynamic evaluations, leading to more reliable execution without extensive debugging of computational logic. This precomputation strategy not only streamlines implementation but also integrates well with optimization tools, boosting programmer productivity while maintaining accuracy in function approximations.[28]

In terms of energy efficiency, lookup tables can reduce CPU cycles and power consumption, particularly in embedded and real-time systems where in-memory processing minimizes data movement overheads. By replacing logic-based computations with direct lookups, these structures can achieve significant reductions in energy use and latency in tasks like data encryption, as integrating computation and storage avoids frequent transfers between processing units and memory. This makes lookup tables especially beneficial in resource-constrained environments, such as processing-in-memory architectures.[29]

Lookup tables can also provide predictable execution times, which is important for timing-critical applications like real-time signal processing or control systems. When the table is resident in cache, a lookup costs a single memory access regardless of the input value, avoiding the variability of data-dependent computations and branch mispredictions. For small input domains, such as a 256-entry table for byte-value operations, this often results in faster access than equivalent loop-based or conditional methods, and in cache-friendly scenarios it can be competitive with intrinsic functions.[28][9] In hardware implementations, such as field-programmable gate arrays (FPGAs), lookup tables function as configurable memory elements that implement logic functions, offering flexibility at the cost of increased area compared to dedicated gates.[4]

Limitations

Lookup tables, while efficient for discrete and bounded input domains, face significant memory consumption challenges, particularly in multi-dimensional or high-precision scenarios. The size of a lookup table grows exponentially with the number of dimensions or required accurate digits, as the number of parameters scales as , leading to prohibitive memory demands for even moderately large (e.g., requires 4,096 entries).[30][31] For instance, approximating functions like the Bessel function to high precision can demand thousands of entries per table segment, with plain tiling approaches using up to 6,792 entries compared to optimized methods with 282, resulting in memory footprints that dominate computational resources and slow access times.[31] Scalability issues further limit lookup tables for large or continuous input spaces, where direct tabulation becomes infeasible without approximations or interpolation. In continuous domains, such as real-number inputs, lookup tables must discretize the space, often requiring interpolation schemes like multilinear ( operations) or simplex ( ), but these still falter in high dimensions due to exponential storage needs and computational overhead for sparse or unbounded regions.[30] This renders lookup tables impractical for problems with vast input ranges, as expanding the table to cover continuous spaces exponentially increases both size and preprocessing time without guaranteeing uniform accuracy.[30] Maintenance overhead poses another constraint, especially when underlying algorithms, data distributions, or mappings change, necessitating recomputation and redistribution of precomputed values. 
In dynamic environments, frequent updates can outweigh performance gains, because rebuilding tables requires significant CPU and time resources and keeping systems consistent adds synchronization costs.[32] In distributed setups, inserts, deletes, or modifications demand coordinated propagation, often via broadcasts or transactions, which introduces latency and error risks in large-scale deployments.[5]

In cryptographic applications, lookup tables introduce security risks because secret-dependent indexing patterns are vulnerable to side-channel attacks such as cache-timing exploits. Table-based AES implementations (typically four 1 KB T-tables, 4 KB in total) leak key information through cache hit/miss patterns. For example, access-driven attacks on libraries like mbed TLS can recover up to 69 bits of key material from a 128KB cache.[33] Trace-based attacks go further, extracting over 200 bits across multiple AES rounds by analyzing access sequences.[33]

In modern big data contexts, lookup tables encounter amplified storage costs and scalability barriers in cloud environments. Massive datasets demand terabytes of RAM even for sparse mappings (e.g., 10 bytes per tuple plus overhead, for trillions of entries). Compression can mitigate this (up to a 250× reduction), but low-density key spaces favor alternatives like hash maps, and cloud billing for persistent storage and updates further escalates expenses in distributed systems.[5]

Examples in Computing

Hash Functions

Lookup tables are commonly used in hash functions to accelerate computations that would otherwise require iterative operations. A prominent example is the Cyclic Redundancy Check (CRC), a hash-like checksum used for error detection in data transmission and storage. CRC-32 computes a 32-bit polynomial remainder over a message, and a direct implementation performs a shift and a conditional XOR for every bit of the input. To optimize this, a 256-entry lookup table is precomputed for the byte values 0-255. Each entry stores the 32-bit CRC remainder obtained by processing that byte from a starting remainder of 0.

This table-driven approach, known as the "table method," processes the input one byte at a time. Each input byte is XORed with the high byte of the current remainder to form the table index. The selected table entry is then XORed with the remaining low 24 bits of the remainder, shifted left by 8. Bit-reflected variants, such as the CRC-32 used by Ethernet and zlib, mirror this by indexing with the low byte and shifting right. For a 1 KB message, this requires 1,024 table lookups and XORs instead of roughly 8,000 bit-level steps in the naive method, yielding a 4-8x speedup on typical hardware.[34] This technique traces to the 1980s Ethernet standard and remains standard in libraries like zlib.

Another application is tabulation hashing, a method for constructing fast, low-collision hash functions from several small lookup tables. In a basic form for 64-bit keys, the key is split into four 16-bit or eight 8-bit parts. Each part indexes its own table (2^16 or 256 entries) filled with random values as wide as the hash output, and the looked-up values are combined with XOR. The resulting hash function is 3-independent, and therefore universal. It runs in O(1) time per hash using only direct array accesses, avoiding multiplications or modulo operations. 
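Both table-driven hashes described above can be sketched in a few lines of Python. The language, the names `make_crc32_table`, `crc32`, and `TabulationHash`, and the parameter choices are assumptions for illustration, not a library API. The CRC part implements the bit-reflected CRC-32 used by Ethernet and zlib (polynomial 0xEDB88320, initial value and final XOR 0xFFFFFFFF). Unlike the MSB-first form described above, it therefore indexes with the low byte and shifts right. The tabulation part assumes eight 8-bit chunks and 64-bit random table entries.

```python
import random

CRC32_POLY_REFLECTED = 0xEDB88320  # bit-reversed form of the CRC-32 polynomial 0x04C11DB7


def make_crc32_table():
    """Entry b holds the CRC remainder of the single byte b, starting from remainder 0."""
    table = []
    for b in range(256):
        crc = b
        for _ in range(8):  # the slow bit-at-a-time loop, run once per table entry
            crc = (crc >> 1) ^ CRC32_POLY_REFLECTED if crc & 1 else crc >> 1
        table.append(crc)
    return table


CRC32_TABLE = make_crc32_table()


def crc32(data: bytes) -> int:
    """Table-driven CRC-32: one lookup, one shift, and one XOR per input byte."""
    crc = 0xFFFFFFFF  # standard initial value
    for byte in data:
        crc = (crc >> 8) ^ CRC32_TABLE[(crc ^ byte) & 0xFF]
    return crc ^ 0xFFFFFFFF  # standard final XOR


class TabulationHash:
    """Simple tabulation hashing for 64-bit keys: eight 8-bit chunks, each
    indexing its own 256-entry table of random 64-bit values."""

    def __init__(self, seed=0):
        rng = random.Random(seed)  # seeded only so the sketch is reproducible
        self.tables = [[rng.getrandbits(64) for _ in range(256)] for _ in range(8)]

    def __call__(self, key: int) -> int:
        h = 0
        for i in range(8):
            chunk = (key >> (8 * i)) & 0xFF  # i-th byte of the key
            h ^= self.tables[i][chunk]       # one lookup per chunk, combined by XOR
        return h
```

For example, `crc32(b"123456789")` should return 0xCBF43926, the standard CRC-32 check value, which matches Python's `zlib.crc32` on the same input. A production tabulation hash would draw its tables from a proper random source at startup. The fixed seed here only keeps runs repeatable.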
The idea behind tabulation hashing dates back to Zobrist hashing (1970), and it drew renewed attention from 2004 onward. It offers practical advantages in cache performance for hash tables in databases and search engines, and its implementation is simple. For any two distinct keys, its collision probability equals that of a truly random hash function.[35][36]

Population count (popcount), which counts the set bits in a binary integer, also employs lookup tables. It relates to hashing in contexts such as locality-sensitive hashing (LSH) for similarity search, where the Hamming distance (the popcount of an XOR) measures how close two hash codes are. A 256-entry table stores the popcounts of the byte values 0-255. For a 64-bit word, the eight bytes are extracted with shifts and masks, looked up, and summed (8 lookups and 7 additions), replacing about 64 single-bit checks.[37][38]

Vectorized variants using AVX2 (introduced in 2013) perform these lookups in parallel. Their 256-bit registers process 32 bytes at a time, using byte shuffles that act as in-register 16-entry nibble LUTs. This achieves about 0.69 cycles per 64-bit word on Haswell processors, roughly 1.5x faster than the scalar POPCNT instruction for bulk data.[38] However, AVX-512 hardware with the VPOPCNTDQ extension (available from 2017) computes eight 64-bit popcounts per 512-bit vector in about one cycle. As of 2024, these dedicated instructions often outperform LUT methods by 2-4x in throughput on large datasets. LUTs remain useful for processors without these instructions, or when memory access latency is low.[39]

Trigonometric Computations

Lookup tables for trigonometric computations store precomputed values of functions such as sine over a discrete set of input angles. This enables rapid evaluation without series expansions or iterative algorithms at runtime. For instance, a sine lookup table can be built by calculating sin(θ) for angles θ from 0° to 360° in increments of 0.1°. This gives 3,601 entries (including both endpoints), typically stored as fixed-point integers or single-precision floating-point numbers to balance precision and memory usage.[40] The approach exploits the periodicity and symmetry of trigonometric functions. Tables are often limited to one quadrant (0° to 90°), with other values derived from identities such as sin(180° − θ) = sin(θ), sin(θ + 180°) = −sin(θ), and cos(θ) = sin(90° − θ), to reduce storage requirements.[41]

To handle inputs that do not fall exactly on table indices, linear interpolation is commonly applied between adjacent entries. Let $x$ be the input angle, $\Delta$ the step size (e.g., 0.1° or $\pi/1800$ radians), and $i$ the largest integer such that $i\Delta \le x < (i+1)\Delta$. The approximation is then:

$$\sin(x) \approx \sin(i\Delta) + \frac{\sin((i+1)\Delta) - \sin(i\Delta)}{\Delta}\,(x - i\Delta)$$

This formula is the linear polynomial passing through the points $(i\Delta, \sin(i\Delta))$ and $((i+1)\Delta, \sin((i+1)\Delta))$, giving a first-order approximation of the function at $x$. It follows from the general linear interpolation formula for a function $f$ at points $x_0$ and $x_1$:

$$f(x) \approx f(x_0) + \frac{f(x_1) - f(x_0)}{x_1 - x_0}\,(x - x_0),$$

by substituting $f = \sin$, $x_0 = i\Delta$, and $x_1 = (i+1)\Delta$ (so that $x_1 - x_0 = \Delta$). For small $\Delta$ the approximation closely follows the function's local linearity. The error term from the Taylor expansion involves the second derivative and shrinks with the square of the step size.[42][43] The primary trade-off in lookup table design is therefore between table size and approximation error: larger tables with finer granularity reduce interpolation error but increase the memory footprint. 
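The size/error trade-off can be made concrete with a small Python sketch. The language, the function names, and the sampling density are assumptions for illustration, not taken from the cited sources. It builds a full-period table rather than a quarter-wave one, for simplicity. It interpolates exactly as in the linear interpolation formula above, then measures the worst-case error for two table sizes against the Δ²/8 bound for sine.

```python
import math


def build_sine_table(n):
    """Sample sin at n equal steps over [0, 2*pi]; n + 1 entries so the last
    interval also has a right-hand endpoint."""
    step = 2 * math.pi / n
    return [math.sin(k * step) for k in range(n + 1)], step


def lut_sin(x, table, step):
    """Approximate sin(x) by linear interpolation between adjacent table entries."""
    x = x % (2 * math.pi)                    # periodicity: reduce to [0, 2*pi)
    i = min(int(x / step), len(table) - 2)   # index of the left endpoint i*step
    frac = (x - i * step) / step             # (x - i*Delta) / Delta, in [0, 1]
    return table[i] + (table[i + 1] - table[i]) * frac


def max_error(n, samples=20_000):
    """Largest |lut_sin - math.sin| over evenly spaced points in [0, 2*pi)."""
    table, step = build_sine_table(n)
    return max(abs(lut_sin(2 * math.pi * j / samples, table, step)
                   - math.sin(2 * math.pi * j / samples))
               for j in range(samples))


if __name__ == "__main__":
    for n in (256, 512):
        bound = (2 * math.pi / n) ** 2 / 8   # Delta^2 / 8, since |sin''| <= 1
        print(f"{n} entries: measured {max_error(n):.2e}, bound {bound:.2e}")
```

The measured error should sit just below the bound. Doubling the table size should cut it roughly fourfold, reflecting the quadratic dependence on Δ.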
The linear interpolation error for sine is theoretically bounded by $\frac{\Delta^2}{8} \max_\theta |-\sin(\theta)| = \frac{\Delta^2}{8}$, since the magnitude of the second derivative peaks at 1. For a 256-entry table over 0 to 2π (Δ ≈ 0.0245 radians), this yields a maximum absolute error of approximately $7.5 \times 10^{-5}$, or about 0.0075% relative error near unity values. In practice, a 512-entry table (roughly 2 KB of single-precision floats) achieves a maximum error of $1.8 \times 10^{-5}$ for sine, which is sufficient for most embedded and real-time applications.[40][44] Such techniques trace back to early electronic calculators and video games, where computational resources were limited and lookup tables enabled fast rendering of rotations and transformations. For example, the 1993 game Doom used precomputed fixed-point trigonometric tables to speed up wall projection and other rendering calculations instead of computing trigonometric functions on the fly.[45][46]

Image Processing

In image processing, lookup tables (LUTs) enable rapid pixel value transformations by precomputing the adjustment for each discrete intensity level, typically 0 to 255 in 8-bit images, thus avoiding repetitive calculations during rendering. This approach is particularly valuable for operations like brightness and contrast adjustment, where each pixel's value is mapped directly to a transformed output by table indexing.

Gamma correction exemplifies this application. It compensates for the nonlinear intensity response of display devices such as CRTs by applying a power-law transformation to linear-light data. For RGB images, a separate 256-entry LUT is generated for each channel, with entries computed as $\mathrm{LUT}[i] = \mathrm{round}\left(255\,(i/255)^{1/\gamma}\right)$, where $\gamma$ is typically around 2.2 for sRGB encoding. During processing, each pixel's intensity is replaced by its LUT counterpart to achieve perceptual uniformity and prevent banding artifacts.[47] The approach extends to color space conversions, such as RGB to HSV, using multidimensional LUTs (often 3D, for correlated channels). These map input triples to outputs via trilinear interpolation, enabling complex nonlinear shifts in hue, saturation, or luminance.[48]

Histogram equalization provides a concrete example of LUT-driven contrast enhancement, transforming an image's intensity distribution to span the full dynamic range. 
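Gamma correction and histogram equalization (whose steps are spelled out next) both reduce to building a 256-entry table and then applying it in a single pass. The following is a minimal Python sketch of that pattern. It assumes one 8-bit channel stored as a flat list of intensities and round-half-up rounding. The function names are illustrative, and variants of equalization that rescale by the minimum nonzero CDF value are not shown.

```python
def build_gamma_lut(gamma=2.2):
    """256-entry encoding LUT: LUT[i] = round(255 * (i / 255) ** (1 / gamma))."""
    return [int(255 * (i / 255) ** (1 / gamma) + 0.5) for i in range(256)]


def build_equalization_lut(pixels):
    """256-entry LUT from the cumulative histogram of 8-bit pixel values."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1                      # n_j: count of pixels with intensity j
    n = len(pixels)                       # N: total number of pixels
    lut, cumulative = [], 0
    for count in hist:
        cumulative += count
        lut.append(int(255 * cumulative / n + 0.5))  # T(k) = round(255 * CDF(k))
    return lut


def apply_lut(pixels, lut):
    """Single pass over the image: each pixel becomes one table lookup."""
    return [lut[p] for p in pixels]
```

For an RGB image, the same `apply_lut` call would simply run once per channel. Array libraries such as OpenCV provide vectorized equivalents (for example `cv2.LUT`) that apply the same precomputed table far faster than a Python loop.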
The histogram is first computed and normalized to [0, 1], and its cumulative distribution function then serves as the 256-entry LUT. Each entry $T(k) = \mathrm{round}\left(255 \sum_{j=0}^{k} p(j)\right)$ defines the mapping, where $p(j) = n_j / N$ is the normalized histogram, $n_j$ is the number of pixels with intensity $j$, and $N$ is the total number of pixels. Pixels are then remapped in one pass as $I'(x, y) = T(I(x, y))$, yielding an approximately uniform histogram that reveals details in shadowed or washed-out areas.[49] These LUT methods process entire images efficiently in a single traversal, minimizing latency. This makes them standard in software like Adobe Photoshop, for tonal adjustments via the Levels and Curves tools, and in real-time video pipelines, where adaptive LUTs handle enhancement on resource-constrained devices.[50][51] On GPUs, OpenGL textures can serve as LUTs. 3D textures have been available since OpenGL 1.2 (1998), and programmable fragment shaders, available since the early 2000s, can sample 1D or 3D tables for accelerated transformations. Filtering modes like linear interpolation keep the results smooth in high-throughput rendering.[52]

Applications

Caches and Memory Systems

Lookup tables form a foundational element in caching mechanisms within computer memory systems, enabling rapid access to frequently used data and address translations. The Translation Lookaside Buffer (TLB) is a prime example. It is a small, high-speed lookup table that caches recent mappings from virtual page numbers to physical frame numbers, accelerating virtual-to-physical address translation in memory management units (MMUs).[53] A TLB typically holds 16 to 128 entries, each containing a virtual page identifier and its corresponding physical address. This allows the processor to bypass slower page table walks in main memory for common translations.[54] By indexing the TLB with bits from the virtual address, the hardware performs parallel comparisons to retrieve the physical address on a hit, significantly reducing the overhead of memory virtualization.[55]

In broader cache architectures, lookup tables underpin the organization of data storage through tag and value arrays. Direct-mapped caches use address bits directly as indices into a table of cache lines. Each line includes a tag field for matching the higher-order address bits and a data field for the stored value, and a tag match yields immediate retrieval.[56] Set-associative caches extend this by using a subset of address bits to index into sets of multiple lines (e.g., 2-way or 4-way). Parallel tag comparisons across the set identify the matching entry, balancing lookup speed against conflict reduction.[57] These structures treat the cache as a specialized lookup table: the index selects candidate entries and the tags validate the address, allowing the cache to exploit spatial and temporal locality.[58] Caches handle hits and misses through fixed procedures that maintain data consistency and performance. 
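The index/tag lookup just described, together with the hit, miss, and LRU handling detailed next, can be sketched as a toy Python simulator. The default parameters (4 sets, 2 ways, 64-byte lines) and the class name are hypothetical. The model tracks tags only, with no data. It implements exact LRU with an `OrderedDict` per set, whereas hardware typically uses cheaper approximations. Setting `ways=1` turns it into a direct-mapped cache.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy set-associative cache: tracks tags only, with LRU replacement per set."""

    def __init__(self, num_sets=4, ways=2, line_size=64):
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        # One OrderedDict per set; keys are tags, ordered from least to most recently used.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, address):
        """Return True on a hit, False on a miss (the missing line is then filled)."""
        line = address // self.line_size  # drop the byte-offset bits
        index = line % self.num_sets      # index bits select one set
        tag = line // self.num_sets       # remaining high-order bits form the tag
        s = self.sets[index]
        if tag in s:                      # tag comparison within the selected set
            s.move_to_end(tag)            # mark as most recently used
            return True
        if len(s) >= self.ways:           # set is full: evict the least recently used line
            s.popitem(last=False)
        s[tag] = None                     # fill the line "fetched" from lower-level memory
        return False
```

With the defaults, addresses 0, 256, and 512 all map to set 0. Accessing 0, 256, 0, 512 evicts the line for 256, since it was the least recently used. In a direct-mapped configuration, alternating between 0 and 256 misses every time, which illustrates the conflict misses that associativity reduces.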
On a hit, the processor retrieves the data directly from the indexed cache line without further memory access.[59] A miss triggers fetching the required block from lower-level memory (e.g., the L2 cache or DRAM) and inserting it into the cache. If the target set is full, an eviction policy such as Least Recently Used (LRU) selects the victim by tracking access recency via counters or stacks, replacing the least recently accessed line to preserve locality.[60] This ensures that subsequent accesses to the same or nearby data benefit from the updated lookup table.[61]

The performance benefits of these lookup-based caches stem from their drastically lower access latencies compared with main memory. An L1 cache lookup typically completes in 1-4 clock cycles, providing near-register speeds for hits, while DRAM accesses take 100 or more cycles due to signaling and refresh overheads.[62] This disparity underscores the value of multi-level hierarchies. L1 offers the fastest but smallest lookup (e.g., 32 KB per core), L2 provides moderate capacity with 10-20 cycle latency, and L3 provides larger shared pools (several MB) at 30-50 cycles.[63] Such designs minimize average access time by resolving most requests in the upper levels.

The use of on-chip caches as lookup tables in multi-level hierarchies traces back to the Intel 80486 microprocessor, introduced in 1989. It first embedded an 8 KB on-chip unified L1 cache, accelerating instruction and data access compared with the external caches used by the prior 80386.[64] Later processors split L1 into separate instruction and data caches. The Pentium Pro (1995) added an L2 cache in the same package, and shared L3 caches became standard in multi-core designs like Nehalem (2008), reflecting increasing core counts and memory bandwidth demands.[65] This progression has sustained cache hit rates above 90% in typical workloads, which is critical for modern processor efficiency.[66]

Hardware Implementations

In field-programmable gate arrays (FPGAs), lookup tables (LUTs) serve as the fundamental building blocks for implementing digital logic, realizing arbitrary Boolean functions through configurable memory elements. A typical k-input LUT works as a small static random-access memory (SRAM) that holds the truth table of the desired logic operation. The inputs act as address lines that select the corresponding output bit. For instance, a 4-input LUT covers 16 possible input combinations, storing a 16-bit truth table that can represent any 4-variable Boolean function. Modern commercial FPGAs often use 6-input LUTs (LUT6) with 64 entries for greater versatility.[4][67]

In SRAM-based FPGAs, LUTs are configured when the device is programmed: the truth table values are loaded into the SRAM cells from a bitstream held in external memory, so the logic can be reconfigured without altering the hardware. Compared with fixed logic gates, this SRAM implementation has brought flexibility to very-large-scale integration (VLSI) design since the 1980s, as FPGAs evolved to support rapid prototyping and field updates. In application-specific integrated circuits (ASICs), LUTs are often realized as read-only memories (ROMs) or hardwired combinational arrays for fixed functions, though these lack the reconfigurability of their FPGA counterparts.[68][69]

LUTs implement combinational circuits by mapping logic functions directly into truth tables. Larger circuits are formed by cascading multiple LUTs, interconnected via multiplexers or carry chains. Examples include multiplexers, where a LUT selects among inputs based on control signals, and adders. A 4-bit ripple-carry adder, for instance, can be decomposed into per-bit sum and carry LUTs to minimize propagation delays. 
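The truth-table view of a LUT and the per-bit sum/carry decomposition of a ripple-carry adder can be modeled in a short Python sketch. Each LUT is a packed integer whose bit `addr` is the output for that input pattern, and evaluation is a single indexed bit read. The helper names are hypothetical, and the 3-input LUTs are a simplification: on LUT4 or LUT6 devices each function would fit in one physical LUT, and real fabrics use dedicated carry chains.

```python
def make_lut(func, k):
    """Precompute the 2**k-bit truth table of a k-input Boolean function,
    packed into an int: bit `addr` holds func's output for that input pattern."""
    table = 0
    for addr in range(2 ** k):
        bits = [(addr >> i) & 1 for i in range(k)]  # input i = address bit i
        if func(*bits):
            table |= 1 << addr
    return table


def lut_eval(table, *inputs):
    """Evaluate a LUT: the inputs form the address, the stored bit is the output."""
    addr = 0
    for i, bit in enumerate(inputs):
        addr |= (bit & 1) << i
    return (table >> addr) & 1


# Two 3-input LUTs per bit position: sum = a XOR b XOR cin, carry = majority(a, b, cin).
SUM_LUT = make_lut(lambda a, b, c: a ^ b ^ c, 3)
CARRY_LUT = make_lut(lambda a, b, c: (a & b) | (a & c) | (b & c), 3)


def ripple_carry_add(x, y, width=4):
    """Add two `width`-bit numbers by chaining per-bit sum and carry LUTs."""
    carry, result = 0, 0
    for i in range(width):
        a, b = (x >> i) & 1, (y >> i) & 1
        result |= lut_eval(SUM_LUT, a, b, carry) << i
        carry = lut_eval(CARRY_LUT, a, b, carry)  # carry ripples to the next bit
    return result, carry
```

Here `SUM_LUT` packs to 0x96 and `CARRY_LUT` to 0xE8, the 8-bit truth tables of 3-input XOR and majority. `ripple_carry_add(9, 8)` returns `(1, 1)`: the low four bits of 17 plus a carry-out.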
Building circuits from LUTs in this way exploits their inherent parallelism. Multiple LUTs within a configurable logic block (CLB) evaluate functions simultaneously without intermediate routing, reducing overall path delays compared with traditional gate-level routing in VLSI fabrics.[70][71] Recent advances extend hardware LUT implementations to AI accelerators, where large lookup tables speed up sparse operations such as embedding lookups in recommendation models. Google's Tensor Processing Units (TPUs) were first introduced in 2016, and later generations include dedicated SparseCore units that accelerate embedding lookups. These units achieve 5x–7x performance gains with minimal area overhead by sharding large tables across cores for parallel access in deep learning workloads. Such embedding tables handle high-dimensional categorical features efficiently, marking a shift toward specialized LUT hardware in post-Moore's Law AI accelerators.[72]

Control Systems

In control systems, lookup tables (LUTs) facilitate efficient sensor-actuator mapping by precomputing the relationship between inputs, such as sensor readings, and outputs, such as control signals, enabling rapid response in real-time environments.[73] This approach is particularly valuable in data acquisition and dynamic control loops, where computational efficiency is paramount for maintaining system stability and performance.[74]

Lookup tables are integral to gain-scheduled proportional-integral-derivative (PID) controllers. Controller gains are calculated offline and stored in tables indexed by operating conditions, such as temperature-versus-response curves, so that the controller can adapt to nonlinear system behavior.[75] For instance, in temperature control applications, these tables adjust PID gains to compensate for process variations, achieving response accuracies within ±25% across operating points.[76] Because gains are selected from the table during operation based on measured variables such as process temperature, the method can maintain critically damped responses without oscillations.[77]

In data acquisition systems, LUTs support calibration by mapping raw analog-to-digital converter (ADC) outputs to corrected physical values, mitigating the nonlinearity errors inherent in sensor measurements.[74] One Bayesian calibration technique populates the LUT with probabilistically estimated correction factors. It combines prior models with measurement data to improve precision in the high-resolution ADCs used for real-time monitoring.[78] This enables accurate conversion of voltages to engineering units, such as pressure or flow rates, directly within the acquisition hardware.[79]

The real-time benefits of LUTs are pronounced in embedded controllers such as automotive engine control units (ECUs). There, tables approximate nonlinear functions with minimal computational overhead and support fast interpolation for tasks like fuel injection timing.[73] By storing operating-point-dependent parameters in multi-dimensional tables, ECUs achieve deterministic execution cycles under tight timing constraints, reducing latency in closed-loop control compared with on-the-fly calculations.[80] A notable application is anti-lock braking systems (ABS), where LUTs map wheel slip ratios to friction coefficients, optimizing brake pressure modulation to prevent skidding on varying surfaces. Such systems, pioneered in the 1970s, have relied on these tables for empirical friction models derived from road tests.[81][82]

Lookup tables are also used with programmable logic controllers (PLCs) in industrial automation, storing parameter sets such as recipes or calibration curves for sequential control in manufacturing processes. Allen-Bradley introduced its PLCs in 1970 and was acquired by Rockwell International in 1985 (the business now operates as Rockwell Automation). Its PLCs support such tables in data files for efficient runtime access.[83] This integration enhances scalability in factory settings, allowing quick adjustments without reprogramming core logic.[84]

References

- https://en.wikichip.org/wiki/population_count

- https://doomwiki.org/wiki/Inaccurate_trigonometry_table