A wiki (/ˈwɪki/ WICK-ee) is a form of hypertext publication on the internet that is collaboratively edited and managed by its audience directly through a web browser. A typical wiki contains multiple pages that can either be edited by the public or limited to use within an organization for maintaining its internal knowledge base. Its name derives from WikiWikiWeb, the first user-editable website; wiki (pronounced [wiki][note 1]) is a Hawaiian word meaning 'quick'.[1][2][3][4]

Wikis are powered by wiki software, also known as wiki engines. Wiki engines are a form of content management system, but they differ from other web-based systems such as blog software or static site generators in that content is created without any defined owner or leader. Wikis have little inherent structure, allowing one to emerge according to the needs of the users.[5] Wiki engines usually allow content to be written using a lightweight markup language and sometimes edited with the help of a rich-text editor.[6] There are dozens of different wiki engines in use, both standalone and as part of other software, such as bug tracking systems. Some wiki engines are free and open-source, whereas others are proprietary. Some permit control over different functions (levels of access); for example, editing rights may permit changing, adding, or removing material. Others may permit access without enforcing access control. Further rules may be imposed to organize content. In addition to hosting user-authored content, wikis allow those users to interact, hold discussions, and collaborate.[7]

There are hundreds of thousands of wikis in use, both public and private, including wikis functioning as knowledge management resources, note-taking tools, community websites, and intranets. Ward Cunningham, the developer of the first wiki software, WikiWikiWeb, originally described wiki as "the simplest online database that could possibly work".[8]

The online encyclopedia project Wikipedia is the most popular wiki-based website, as well as being one of the internet's most popular websites, having been ranked consistently as such since at least 2007.[9] Wikipedia is not a single wiki but rather a collection of hundreds of wikis, each pertaining to a specific language, which together make it the largest reference work of all time.[10] The English-language Wikipedia has the largest collection of articles, standing at 7,080,491 as of October 2025.[11]

Characteristics

In their 2001 book The Wiki Way: Quick Collaboration on the Web, Ward Cunningham and co-author Bo Leuf described the essence of the wiki concept:[12][13]

- "A wiki invites all users—not just experts—to edit any page or to create new pages within the wiki website, using only a standard 'plain-vanilla' Web browser without any extra add-ons."

- "Wiki promotes meaningful topic associations between different pages by making page link creation intuitively easy and showing whether an intended target page exists or not."

- "A wiki is not a carefully crafted site created by experts and professional writers and designed for casual visitors. Instead, it seeks to involve the typical visitor/user in an ongoing process of creation and collaboration that constantly changes the website landscape."

Editing

Source editing

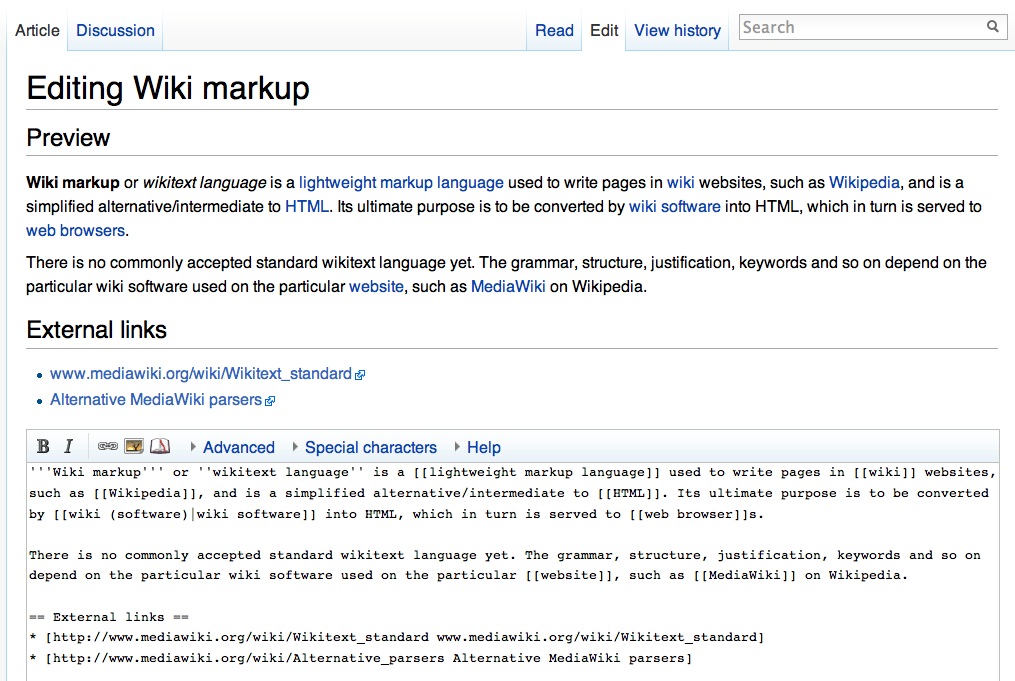

Some wikis will present users with an edit button or link directly on the page being viewed. This will open an interface for writing, formatting, and structuring page content. The interface may be a source editor, which is text-based and employs a lightweight markup language (also known as wikitext, wiki markup, or wikicode), or a visual editor. For example, in a source editor, starting lines of text with asterisks could create a bulleted list.
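As a rough illustration of how source-editor markup becomes a rendered page, the sketch below converts lines beginning with an asterisk into an HTML bulleted list. It is a minimal Python sketch, not any engine's actual parser. The function name `render_bullets` is hypothetical, it handles only a single list level, and it escapes all other text into plain paragraphs, as an engine that disallows raw HTML might.

```python
import html


def render_bullets(source):
    """Render lines starting with '*' as one <ul>; other lines become <p>."""
    out = []
    in_list = False
    for line in source.splitlines():
        if line.startswith("*"):
            if not in_list:
                out.append("<ul>")
                in_list = True
            # Drop the asterisk marker and escape the item text.
            out.append(f"<li>{html.escape(line[1:].strip())}</li>")
        else:
            if in_list:
                out.append("</ul>")
                in_list = False
            if line.strip():
                out.append(f"<p>{html.escape(line.strip())}</p>")
    if in_list:
        out.append("</ul>")
    return "\n".join(out)


print(render_bullets("Ingredients:\n* flour\n* eggs\n* milk"))
```

Nested lists (for example `**` for a second level) would need a stack of open lists. This sketch deliberately leaves them out.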

The syntax and features of wiki markup languages for denoting style and structure can vary greatly among implementations. Some allow the use of HTML and CSS,[14] while others prevent the use of these to foster uniformity in appearance.

Example of syntax

A short section of the 1865 novel Alice's Adventures in Wonderland rendered in wiki markup:

Visual editing

While wiki engines have traditionally offered source editing to users, in recent years some implementations have added a rich-text editing mode. This is usually implemented in JavaScript as an interface that translates formatting instructions chosen from a toolbar into the corresponding wiki markup or HTML. The markup is generated and submitted to the server transparently, shielding users from the technical detail of markup editing and making it easier for them to change the content of pages. An example of such an interface is the VisualEditor in MediaWiki, the wiki engine used by Wikipedia. These WYSIWYG ("what you see is what you get") editors may not provide all the features available in wiki markup, and some users prefer not to use them, so a source editor is often available alongside them.
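The translation step described above can be sketched as a function that wraps the user's selection in markup. The `TOOLBAR_MARKUP` table and `apply_format` function are hypothetical. The delimiters are MediaWiki-style wikitext (`'''bold'''`, `''italic''`, `[[link]]`). A real visual editor handles far more cases, such as nested or overlapping formatting.

```python
# Hypothetical toolbar actions mapped to MediaWiki-style wikitext delimiters.
TOOLBAR_MARKUP = {
    "bold": ("'''", "'''"),
    "italic": ("''", "''"),
    "link": ("[[", "]]"),
}


def apply_format(text, start, end, action):
    """Wrap the selected span text[start:end] in the markup for a toolbar action."""
    if not 0 <= start <= end <= len(text):
        raise ValueError("selection is outside the text")
    opening, closing = TOOLBAR_MARKUP[action]
    return text[:start] + opening + text[start:end] + closing + text[end:]


source = "Alice was beginning to get very tired"
source = apply_format(source, 0, 5, "bold")
print(source)  # '''Alice''' was beginning to get very tired
```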

Version history

Some wiki implementations keep a record of changes made to wiki pages, and may store every version of the page permanently. This allows authors to revert a page to an older version to rectify a mistake, or counteract a malicious or inappropriate edit to its content.[15]

The stored versions are typically presented for each page in a list, called a "log" or "edit history", available from the page via a link in the interface. The list displays metadata for each revision, such as the date and time it was stored and the name of the person who created it, alongside a link to view that specific revision. A diff (short for "difference") feature may be available, which highlights the changes between any two revisions.
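The following is a minimal sketch of an append-only version history. It assumes each revision is kept in memory as a record of its text, author, timestamp and an optional summary. The `Page` and `Revision` classes are hypothetical. The diff uses Python's standard `difflib.unified_diff`. A revert is modelled as a new revision that copies old content, so the history itself is never rewritten.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Revision:
    text: str
    author: str
    summary: str
    timestamp: datetime


@dataclass
class Page:
    title: str
    revisions: list = field(default_factory=list)  # oldest first

    def save(self, text, author, summary=""):
        # Append-only: earlier revisions are never modified or discarded.
        self.revisions.append(
            Revision(text, author, summary, datetime.now(timezone.utc))
        )

    @property
    def current(self):
        return self.revisions[-1].text if self.revisions else ""

    def diff(self, old, new):
        """Unified diff between two revisions, identified by list index."""
        a = self.revisions[old].text.splitlines()
        b = self.revisions[new].text.splitlines()
        return "\n".join(
            difflib.unified_diff(a, b, f"r{old}", f"r{new}", lineterm="")
        )

    def revert(self, index, author):
        # A revert is itself a new revision that restores older content.
        self.save(self.revisions[index].text, author, f"Reverted to revision {index}")


page = Page("Wiki")
page.save("A wiki is quick.", "Alice", "Created page")
page.save("A wiki is slow.", "Mallory")
print(page.diff(0, 1))
page.revert(0, "Bob")
print(page.current)  # A wiki is quick.
```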

Edit summaries

The edit history view in many wiki implementations includes edit summaries written by users when submitting changes to a page. Similar to a log message in a revision control system, an edit summary is a short piece of text that summarizes and perhaps explains the change, for example "Corrected grammar" or "Fixed table formatting to not extend past page width". It is not inserted into the article's main text.

Navigation

Traditionally, wikis offer free navigation between their pages via hypertext links in page text, rather than requiring users to follow a formal or structured navigation scheme. Users may also create indexes or table-of-contents pages, hierarchical categorization via a taxonomy, or other forms of ad hoc content organization. Wiki implementations may provide features that support the maintenance of such index pages, such as categories or tags, or a backlink feature that displays all pages linking to a given page. Adding categories or tags to a page makes it easier for other users to find it.
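A backlink feature amounts to inverting the link graph. The sketch below assumes pages are stored as a mapping from title to wikitext. It also assumes links use the double-bracket syntax described under "Linking to and naming pages", including an optional `|label` part as in MediaWiki's piped links. The function name is hypothetical.

```python
import re
from collections import defaultdict

# Matches [[Target]] and [[Target|label]]; captures only the target title.
LINK = re.compile(r"\[\[([^\[\]|]+)(?:\|[^\[\]]*)?\]\]")


def backlinks(pages):
    """Map each link target to the set of page titles that link to it.

    pages: dict of title -> wikitext.
    """
    index = defaultdict(set)
    for title, text in pages.items():
        for target in LINK.findall(text):
            index[target.strip()].add(title)
    return dict(index)


pages = {
    "Paris": "Paris is the capital of [[France]].",
    "Kingdom of France": "A predecessor of modern [[France|the French Republic]].",
    "France": "A country in [[Europe]].",
}
index = backlinks(pages)
print(sorted(index.get("France", set())))  # ['Kingdom of France', 'Paris']
```

Recomputing the whole index on every request would be slow on a large wiki. An engine would more plausibly update the stored link table incrementally each time a page is saved.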

Most wikis allow users to search page titles, and some offer full-text search of all stored content.
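Full-text search is commonly built on an inverted index, which maps each word to the pages containing it. The following is a minimal in-memory sketch. It assumes simple lowercase word tokenization and requires every query word to match. The function names are hypothetical, and larger deployments often hand this job to a database or a dedicated search server.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase word tokens; punctuation is ignored."""
    return re.findall(r"\w+", text.lower())


def build_index(pages):
    """Inverted index: word -> set of titles whose title or body contains it."""
    index = defaultdict(set)
    for title, body in pages.items():
        for word in tokenize(title + " " + body):
            index[word].add(title)
    return dict(index)


def search(index, query):
    """Titles containing every word of the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


pages = {
    "Wiki": "A wiki is a collaboratively edited website.",
    "Blog": "A blog is a website with a single owner.",
}
index = build_index(pages)
print(sorted(search(index, "website")))  # ['Blog', 'Wiki']
```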

Navigation between wikis

Some wiki communities have established navigational networks between each other using a system called WikiNodes. A WikiNode is a page on a wiki which describes and links to other, related wikis. Some wikis operate a structure of neighbors and delegates, wherein a neighbor wiki is one which discusses similar content or is otherwise of interest, and a delegate wiki is one which has agreed to have certain content delegated to it.[16] WikiNode networks act as webrings which may be navigated from one node to another to find a wiki which addresses a specific subject.

Linking to and naming pages

The syntax used to create internal hyperlinks varies between wiki implementations. Beginning with the WikiWikiWeb in 1995, most wikis used camel case to name pages,[17] a convention in which each word of a phrase is capitalized and the spaces between the words are removed. In this system, the phrase "camel case" would be rendered as "CamelCase". In early wiki engines, when a page was displayed, any camel-case phrase was transformed into a link to the page named with the same phrase.

While this system made it easy to link to pages, it had the downside of requiring pages to be named in a form deviating from standard spelling, and titles of a single word required abnormally capitalizing one of the letters (e.g. "WiKi" instead of "Wiki"). Some wiki implementations attempt to improve the display of camel case page titles and links by reinserting spaces and possibly also reverting to lower case, but this simplistic method is not able to correctly present titles of mixed capitalization. For example, "Kingdom of France" as a page title would be written as "KingdomOfFrance", and displayed as "Kingdom Of France".
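The camel-case convention can be approximated with a regular expression that matches two or more capitalized runs of letters written together. This is a minimal sketch rather than WikiWikiWeb's actual code. The pattern, the `/Title` link format and the function names are assumptions. The second function applies the naive display fix described above and reproduces its weakness with "KingdomOfFrance".

```python
import re

# Two or more runs of one capital followed by lowercase letters, e.g. "CamelCase", "WiKi".
CAMEL = re.compile(r"\b(?:[A-Z][a-z]+){2,}\b")


def link_camel_case(text):
    """Turn every camel-case word into a link to the page of that name."""
    return CAMEL.sub(lambda m: f'<a href="/{m.group(0)}">{m.group(0)}</a>', text)


def naive_display(title):
    """Insert a space before each capital that follows a lowercase letter."""
    return re.sub(r"(?<=[a-z])(?=[A-Z])", " ", title)


print(link_camel_case("See WiKi and CamelCase, but not Wiki."))
print(naive_display("KingdomOfFrance"))  # Kingdom Of France
```

The single-word title "Wiki" is not linked, which is why such pages had to be spelled "WiKi" under this scheme.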

To avoid this problem, wiki markup gained free links, in which a term in natural language is wrapped in special characters to turn it into a link without modifying it. The concept received its name in its first implementation, in UseModWiki in February 2001.[18] In that implementation, link terms were wrapped in double square brackets, for example [[Kingdom of France]]. This syntax was adopted by a number of later wiki engines.

Users of a wiki can typically create links to pages that do not yet exist, as a way to invite the creation of those pages. Such links are usually distinguished visually, for example by being colored red instead of the default blue, or by appearing with a question mark next to the linked words, as was the case in the original WikiWikiWeb.
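Free links and the rendering of links to missing pages can be sketched together. This sketch assumes MediaWiki-like conventions, chosen for illustration only. Titles map to `/wiki/` URLs with spaces replaced by underscores. Links to pages that do not exist get a `new` CSS class that a stylesheet could color red. The function name is hypothetical.

```python
import html
import re

# [[Title]]: any run of characters other than square brackets.
FREE_LINK = re.compile(r"\[\[([^\[\]]+)\]\]")


def render_free_links(text, existing_titles):
    """Replace [[Title]] with an HTML link, marking links to missing pages."""

    def to_anchor(match):
        title = match.group(1).strip()
        href = "/wiki/" + title.replace(" ", "_")
        css = "" if title in existing_titles else ' class="new"'
        return f'<a href="{html.escape(href)}"{css}>{html.escape(title)}</a>'

    return FREE_LINK.sub(to_anchor, text)


print(render_free_links("See [[Kingdom of France]] and [[Atlantis]].",
                        {"Kingdom of France"}))
```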

History

WikiWikiWeb was the first wiki.[19] Ward Cunningham started developing it in 1994, and installed it on the Internet domain c2.com on March 25, 1995. Cunningham gave it the name after remembering a Honolulu International Airport counter employee telling him to take the "Wiki Wiki Shuttle" bus that runs between the airport's terminals, later observing that "I chose wiki-wiki as an alliterative substitute for 'quick' and thereby avoided naming this stuff quick-web."[20][21]

Cunningham's system was inspired by his having used Apple's hypertext software HyperCard, which allowed users to create interlinked "stacks" of virtual cards.[22] HyperCard, however, was single-user, and Cunningham was inspired to build upon the ideas of Vannevar Bush, the inventor of hypertext, by allowing users to "comment on and change one another's text".[6][23] Cunningham says his goals were to link together people's experiences to create a new literature to document programming patterns, and to harness people's natural desire to talk and tell stories with a technology that would feel comfortable to those not used to "authoring".[22]

Wikipedia, launched in January 2001, became the most famous wiki site[clarification needed] and entered the top ten most popular websites in 2007. In the early 2000s, wikis were increasingly adopted in enterprise as collaborative software. Common uses included project communication, intranets, and documentation, initially for technical users. Some companies use wikis as their collaborative software and as a replacement for static intranets, and some schools and universities use wikis to enhance group learning. On March 15, 2007, the word wiki was listed in the online Oxford English Dictionary.[24]

Alternative definitions

In the late 1990s and early 2000s, the word "wiki" was used to refer to both user-editable websites and the software that powers them, and the latter definition is still occasionally in use.[5]

By 2014, Ward Cunningham's thinking on the nature of wikis had evolved, leading him to write[25] that the word "wiki" should not be used to refer to a single website, but rather to a mass of user-editable pages or sites so that a single website is not "a wiki" but "an instance of wiki". In this concept of wiki federation, in which the same content can be hosted and edited in more than one location in a manner similar to distributed version control, the idea of a single discrete "wiki" no longer made sense.[26]

Implementations

The software which powers a wiki may be implemented as a series of scripts which operate an existing web server, a standalone application server that runs on one or more web servers, or in the case of personal wikis, run as a standalone application on a single computer. Some wikis use flat file databases to store page content, while others use a relational database,[27] as indexed database access is faster on large wikis, particularly for searching.
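To illustrate the relational option, the sketch below stores revisions in an SQLite table with an index on the page title. With that index, fetching a page's latest revision is an index lookup rather than a scan of every row. The schema and function names are hypothetical and do not reflect any particular engine's database layout. The sketch uses only Python's standard `sqlite3` module.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE revision (
        id       INTEGER PRIMARY KEY,   -- increases with each save
        title    TEXT NOT NULL,
        body     TEXT NOT NULL,
        saved_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
    );
    -- Lets lookups by title (newest first) use the index instead of a full scan.
    CREATE INDEX revision_title_id ON revision (title, id);
    """
)


def save(title, body):
    with conn:  # commits the insert, or rolls back on error
        conn.execute("INSERT INTO revision (title, body) VALUES (?, ?)", (title, body))


def latest(title):
    row = conn.execute(
        "SELECT body FROM revision WHERE title = ? ORDER BY id DESC LIMIT 1",
        (title,),
    ).fetchone()
    return row[0] if row else None


save("Wiki", "A wiki is quick.")
save("Wiki", "A wiki is a quick, user-editable website.")
print(latest("Wiki"))  # A wiki is a quick, user-editable website.
```

A flat-file design would instead keep one file per page (and perhaps per revision). That is simpler to deploy but leaves searching and history queries to the application code.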

Hosting

Wikis can also be created on wiki hosting services (also known as wiki farms), where the server-side software is run by the wiki farm's owner. The owner may host wikis at no charge in exchange for displaying advertisements on their pages, and free wiki farms generally show advertising on every page. Some hosting services offer private, password-protected wikis that require authentication to access.

Trust and security

Access control

The four basic types of users who participate in wikis are readers, authors, wiki administrators, and system administrators. System administrators are responsible for installing and maintaining the wiki engine and the web server that hosts it. Wiki administrators maintain content and hold elevated privileges that grant them additional functions (for example, preventing edits to pages, deleting pages, changing users' access rights, or blocking users from editing).[28]
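The user types above can be modelled as roles mapped to permission sets. This is a minimal sketch with hypothetical action names. Many engines make groups and rights configurable rather than fixed. How much in-wiki power a system administrator holds varies by deployment, so the sketch simply gives that role an extra server-level right.

```python
from enum import Enum


class Role(Enum):
    READER = "reader"
    AUTHOR = "author"
    WIKI_ADMIN = "wiki administrator"
    SYSTEM_ADMIN = "system administrator"


# Hypothetical rights; each tier extends the one below it.
READ = frozenset({"read"})
WRITE = READ | {"edit", "create"}
MODERATE = WRITE | {"protect_page", "delete_page", "change_rights", "block_user"}

PERMISSIONS = {
    Role.READER: READ,
    Role.AUTHOR: WRITE,
    Role.WIKI_ADMIN: MODERATE,
    # Assumption: system administrators also maintain the engine and server.
    Role.SYSTEM_ADMIN: MODERATE | {"configure_server"},
}


def can(role, action):
    return action in PERMISSIONS[role]


print(can(Role.AUTHOR, "edit"), can(Role.AUTHOR, "delete_page"))  # True False
```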

Controlling changes

Wikis are generally designed with a soft security philosophy, in which it is easy to correct mistakes or harmful changes, rather than attempting to prevent them from happening in the first place. This allows them to be very open while providing a means to verify the validity of recent additions to the body of pages. Most wikis offer a recent changes page that shows recent edits, or a list of edits made within a given time frame.[29] Some wikis can filter the list to hide automated edits and edits that users have flagged as "minor".[30] The version history feature allows harmful changes to be reverted quickly and easily.[15]
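A recent changes page amounts to a time-windowed, newest-first view of the edit log with optional filters. The following is a minimal sketch. It assumes each change record carries `minor` and `bot` flags. The record layout and the default one-day window are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Change:
    title: str
    user: str
    timestamp: datetime
    minor: bool = False  # flagged as minor by the editor
    bot: bool = False    # made by an automated account


def recent_changes(changes, now, window=timedelta(days=1),
                   hide_minor=False, hide_bots=False):
    """Changes within `window` of `now`, newest first, with optional filters."""
    cutoff = now - window
    shown = [
        c for c in changes
        if c.timestamp >= cutoff
        and not (hide_minor and c.minor)
        and not (hide_bots and c.bot)
    ]
    return sorted(shown, key=lambda c: c.timestamp, reverse=True)


now = datetime(2025, 1, 2, 12, 0)
log = [
    Change("Wiki", "carol", now - timedelta(hours=3), minor=True),
    Change("Wiki", "alice", now - timedelta(hours=1)),
    Change("Blog", "linkbot", now - timedelta(hours=2), bot=True),
    Change("Paris", "dave", now - timedelta(days=3)),
]
print([c.user for c in recent_changes(log, now)])  # ['alice', 'linkbot', 'carol']
```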

Some wiki engines provide additional content control, allowing remote monitoring and management of a page or set of pages to maintain quality. A user who chooses to maintain pages is alerted to modifications to them, allowing them to verify the validity of new edits quickly.[31] Such a feature is often called a watchlist.
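A watchlist can be sketched as a mapping from page titles to the users watching them, consulted whenever a page is saved. The class and message format are hypothetical. A real engine might deliver these notifications by e-mail or on a dedicated page rather than returning them.

```python
from collections import defaultdict


class Watchlists:
    def __init__(self):
        self._watchers = defaultdict(set)  # page title -> users watching it

    def watch(self, user, title):
        self._watchers[title].add(user)

    def unwatch(self, user, title):
        self._watchers[title].discard(user)

    def on_edit(self, title, editor):
        """Notifications for every watcher of the page except the editor."""
        return [
            (user, f"{editor} edited {title}")
            for user in sorted(self._watchers.get(title, ()))
            if user != editor
        ]


lists = Watchlists()
lists.watch("alice", "Wiki")
lists.watch("bob", "Wiki")
print(lists.on_edit("Wiki", "bob"))  # [('alice', 'bob edited Wiki')]
```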

Some wikis also implement patrolled revisions, in which editors with the requisite credentials can mark edits as being legitimate. A flagged revisions system can prevent edits from going live until they have been reviewed.[32]
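Under a flagged revisions scheme, the version shown to readers is the newest one a reviewer has approved, while later edits wait for review. The following is a minimal sketch with hypothetical names. What to show when no revision has been reviewed yet is a policy choice, and here the sketch returns `None`.

```python
from dataclasses import dataclass


@dataclass
class Rev:
    text: str
    reviewed: bool = False


def mark_reviewed(revisions, index, reviewer_is_trusted):
    """Only users with the requisite credentials may approve a revision."""
    if not reviewer_is_trusted:
        raise PermissionError("reviewer lacks review rights")
    revisions[index].reviewed = True


def displayed_text(revisions):
    """Text of the newest reviewed revision, or None if none is reviewed yet."""
    for rev in reversed(revisions):
        if rev.reviewed:
            return rev.text
    return None


history = [Rev("Stable text", reviewed=True), Rev("Pending edit")]
print(displayed_text(history))  # Stable text
mark_reviewed(history, 1, reviewer_is_trusted=True)
print(displayed_text(history))  # Pending edit
```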

Wikis may allow any person on the web to edit their content without having to register an account on the site first (anonymous editing), or require registration as a condition of participation.[33] On implementations where an administrator is able to restrict editing of a page or group of pages to a specific group of users, they may have the option to prevent anonymous editing while allowing it for registered users.[34]
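The editing restrictions described above can be expressed as a per-page protection level checked on every save. The three levels and the `may_edit` function are a hypothetical sketch. Engines differ in which levels they offer and what they call them.

```python
from enum import Enum


class Protection(Enum):
    OPEN = "open"                # anyone may edit, including anonymous visitors
    REGISTERED = "registered"    # anonymous editing disabled
    ADMINS_ONLY = "admins_only"  # only administrators may edit


def may_edit(protection, logged_in, is_admin=False):
    """Decide whether a user may save an edit to a page with this protection."""
    if protection is Protection.ADMINS_ONLY:
        return logged_in and is_admin
    if protection is Protection.REGISTERED:
        return logged_in
    return True


print(may_edit(Protection.OPEN, logged_in=False))        # True
print(may_edit(Protection.REGISTERED, logged_in=False))  # False
```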

Trustworthiness and reliability of content

Critics of publicly editable wikis argue that they could be easily tampered with by malicious individuals, or even by well-meaning but unskilled users who introduce errors into the content. Proponents maintain that these issues will be caught and rectified by a wiki's community of users.[6][19] High editorial standards in medicine and health sciences articles, in which users typically use peer-reviewed journals or university textbooks as sources, have led to the idea of expert-moderated wikis.[35] The ability of wiki implementations to retain specific versions of articles, and to provide access to them, has been useful to the scientific community. It allows expert peer reviewers to link to trusted versions of articles they have analyzed.[36]

Security

Trolling and cybervandalism on wikis, where content is changed to something deliberately incorrect or a hoax, offensive material or nonsense is added, or content is maliciously removed, can be a major problem. On larger wiki sites it is possible for such changes to go unnoticed for a long period.

In addition to using the approach of soft security for protecting themselves, larger wikis may employ sophisticated methods, such as bots that automatically identify and revert vandalism. For example, on Wikipedia, the bot ClueBot NG uses machine learning to identify likely harmful changes, and reverts these changes within minutes or even seconds.[37]

Disagreements between users over the content or appearance of pages may cause edit wars, where competing users repetitively change a page back to a version that they favor. Some wiki software allows administrators to prevent pages from being editable until a decision has been made on what version of the page would be most appropriate.[7]
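Edit wars show up in a page's history as users repeatedly restoring earlier versions. One way to spot this is to hash each revision's full text and count edits whose hash matches an earlier revision (an "identity revert"). The sketch below does this under assumed names and a simplified history format. SHA-1 is used only as a content fingerprint, not for security.

```python
import hashlib
from collections import Counter


def count_identity_reverts(history):
    """Count, per user, edits that restore an exact earlier version of a page.

    history: chronological list of (user, full_page_text) pairs.
    An edit whose text matches the immediately preceding revision is a
    no-op, not a revert, so it is not counted.
    """
    seen = set()
    previous = None
    reverts = Counter()
    for user, text in history:
        digest = hashlib.sha1(text.encode("utf-8")).hexdigest()
        if digest != previous and digest in seen:
            reverts[user] += 1
        seen.add(digest)
        previous = digest
    return reverts


history = [
    ("alice", "The park opens at 9."),
    ("bob", "The park opens at 10."),
    ("alice", "The park opens at 9."),
    ("bob", "The park opens at 10."),
    ("alice", "The park opens at 9."),
]
print(count_identity_reverts(history))
```

A hypothetical policy might alert administrators when any user's count passes a threshold within a short period, at which point they could protect the page as described above.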

Some wikis may be subject to external structures of governance which address the behavior of persons with access to the system, for example in academic contexts.[27]

Harmful external links

As most wikis allow the creation of hyperlinks to other sites and services, the addition of malicious hyperlinks, such as links to sites infected with malware, can also be a problem. For example, in 2006 a German Wikipedia article about the Blaster Worm was edited to include a hyperlink to a malicious website, and users of vulnerable Microsoft Windows systems who followed the link had their systems infected with the worm.[7] Some wiki engines offer a blacklist feature that prevents users from adding hyperlinks to sites that the wiki's administrators have placed on the list.
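A link blacklist can be sketched as a check, run before an edit is saved, that extracts URLs from the new text. It rejects any URL whose host is a listed domain or one of its subdomains. The URL pattern, the domain-based list format and the function name are assumptions. Other list formats, such as regular expressions, are equally possible.

```python
import re
from urllib.parse import urlsplit

# Rough URL matcher for illustration: stops at whitespace, brackets and quotes.
URL = re.compile(r"""https?://[^\s\[\]<>"']+""")


def blocked_links(text, blacklist):
    """URLs in text whose host is a blacklisted domain or a subdomain of one."""
    hits = []
    for url in URL.findall(text):
        host = (urlsplit(url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in blacklist):
            hits.append(url)
    return hits


blacklist = {"malware.example"}
edit = ("Patch: [https://malware.example/fix.exe download] "
        "or see https://docs.example.org/page and http://cdn.malware.example/x")
print(blocked_links(edit, blacklist))
# An engine using this check would refuse to save the edit while the list is non-empty.
```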

Communities

Applications

The English Wikipedia has the largest user base among wikis on the World Wide Web[38] and ranks in the top 10 among all Web sites in terms of traffic.[39] Other large wikis include the WikiWikiWeb, Memory Alpha, Wikivoyage, and previously Susning.nu, a Swedish-language knowledge base. Medical and health-related wiki examples include Ganfyd, an online collaborative medical reference that is edited by medical professionals and invited non-medical experts.[40] Many wiki communities are private, particularly within enterprises. They are often used as internal documentation for in-house systems and applications. Some companies use wikis to allow customers to help produce software documentation.[41] A study of corporate wiki users found that they could be divided into "synthesizers" and "adders" of content. Synthesizers' frequency of contribution was affected more by their impact on other wiki users, while adders' contribution frequency was affected more by being able to accomplish their immediate work.[42] From a study of thousands of wiki deployments, Jonathan Grudin concluded that careful stakeholder analysis and education are crucial to successful wiki deployment.[43]

In 2005, the Gartner Group, noting the increasing popularity of wikis, estimated that they would become mainstream collaboration tools in at least 50% of companies by 2009.[44][needs update] Wikis can be used for project management.[45][46][unreliable source] Wikis have also been used in the academic community for sharing and dissemination of information across institutional and international boundaries.[47] In those settings, they have been found useful for collaboration on grant writing, strategic planning, departmental documentation, and committee work.[48] In the mid-2000s, the growing trend toward collaboration across industries put greater pressure on educators to make students proficient in collaborative work, inspiring even greater interest in using wikis in the classroom.[7]

Wikis have found some use within the legal profession and within government. Examples include the Central Intelligence Agency's Intellipedia, designed to share and collect intelligence assessments; DKosopedia, which was used by the American Civil Liberties Union to assist with review of documents about the internment of detainees in Guantánamo Bay;[49] and the wiki of the United States Court of Appeals for the Seventh Circuit, used to post court rules and allow practitioners to comment and ask questions. The United States Patent and Trademark Office operates Peer-to-Patent, a wiki to allow the public to collaborate on finding prior art relevant to the examination of pending patent applications. Queens, New York has used a wiki to allow citizens to collaborate on the design and planning of a local park. Cornell Law School founded a wiki-based legal dictionary called Wex, whose growth has been hampered by restrictions on who can edit.[34]

In academic contexts, wikis have also been used as project collaboration and research support systems.[50][51]

City wikis

A city wiki or local wiki is a wiki used as a knowledge base and social network for a specific geographical locale.[52][53][54] The term city wiki is sometimes also used for wikis that cover not just a city, but a small town or an entire region. Such a wiki contains information about specific instances of things, ideas, people and places. This highly localized information suits a wiki targeted at local readers and could include:

- Details of public establishments such as public houses, bars, accommodation or social centers

- Owner name, opening hours and statistics for a specific shop

- Statistical information about a specific road in a city

- Flavors of ice cream served at a local ice cream parlor

- A biography of a local mayor and other persons

Growth factors

A 2008 study of several hundred wikis found four things. A relatively high number of administrators for a given content size is likely to reduce growth.[55] Access controls restricting editing to registered users tend to reduce growth. A lack of such access controls tends to fuel new user registration. A higher ratio of administrators to regular users has no significant effect on content or population growth.[56]

Legal environment

Joint authorship of articles, in which different users participate in correcting, editing, and compiling the finished product, can also cause editors to become tenants in common of the copyright. That makes it impossible to republish without the permission of all co-owners, some of whose identities may be unknown due to pseudonymous or anonymous editing.[7] Some copyright issues can be alleviated through the use of an open content license. Version 1.3 of the GNU Free Documentation License includes a specific provision for wiki relicensing, and Creative Commons licenses are also popular. When no license is specified, an implied license to read and add content to a wiki may be deemed to exist on the grounds of business necessity and the inherent nature of a wiki.

Wikis and their users can be held liable for certain activities that occur on the wiki. If a wiki owner displays indifference and forgoes controls (such as banning copyright infringers) that they could have exercised to stop copyright infringement, they may be deemed to have authorized infringement, especially if the wiki is primarily used to infringe copyrights or obtains a direct financial benefit, such as advertising revenue, from infringing activities.[7] In the United States, wikis may benefit from Section 230 of the Communications Decency Act, which protects sites that engage in "Good Samaritan" policing of harmful material, with no requirement on the quality or quantity of such self-policing.[57] It has also been argued that a wiki's enforcement of certain rules, such as anti-bias, verifiability, reliable sourcing, and no-original-research policies, could pose legal risks.[58] When defamation occurs on a wiki, theoretically, all users of the wiki can be held liable, because any of them had the ability to remove or amend the defamatory material from the "publication". It remains to be seen whether wikis will be regarded as more akin to an internet service provider, which is generally not held liable due to its lack of control over publications' contents, than a publisher.[7] It has been recommended that trademark owners monitor what information is presented about their trademarks on wikis, since courts may use such content as evidence pertaining to public perceptions, and they can edit entries to rectify misinformation.[59]

Conferences

Active conferences and meetings about wiki-related topics include:

- Atlassian Summit, an annual conference for users of Atlassian software, including Confluence.[60]

- OpenSym (called WikiSym until 2014), an academic conference dedicated to research about wikis and open collaboration.

- SMWCon, a bi-annual conference for users and developers of Semantic MediaWiki.[61]

- TikiFest, a frequently held meeting for users and developers of Tiki Wiki CMS Groupware.[62]

- Wikimania, an annual conference dedicated to the research and practice of Wikimedia Foundation projects like Wikipedia.

Former wiki-related events include:

- RecentChangesCamp (2006–2012), an unconference on wiki-related topics.

- RegioWikiCamp (2009–2013), a semi-annual unconference on "regiowikis", or wikis on cities and other geographic areas.[63]

See also

- Comparison of wiki software – Software to run a collaborative wiki compared

- Content management system – Software for managing digital content

- CURIE – Form of abbreviated URI

- Dispersed knowledge – Information that is spread throughout the market and not in the hands of a single agent

- Fork and pull model – A common alternate paradigm

- List of wikis

- Mass collaboration – Many people working on a single project

- Universal Edit Button – Browser extension indicating a website is editable

- Wikis and education

Notes

- ^ The realization of the Hawaiian /w/ phoneme varies between [w] and [v], and the realization of the /k/ phoneme varies between [k] and [t], among other realizations. Thus, the pronunciation of the Hawaiian word wiki varies between [ˈwiki], [ˈwiti], [ˈviki], and [ˈviti]. See Hawaiian phonology for more details.

References

- ^ "Hawaiian Words; Hawaiian to English". mauimapp.com. Archived from the original on September 14, 2008. Retrieved September 19, 2008.

- ^ "Wiki | Definition & Facts". Encyclopædia Britannica. Retrieved May 19, 2025.

- ^ Hasan, Heather (2012), Wikipedia, 3.5 million articles and counting, New York: Rosen Central, p. 11, ISBN 9781448855575, archived from the original on October 26, 2019, retrieved August 6, 2019

- ^ Andrews, Lorrin (1865), A dictionary of the Hawaiian language to which is appended an English-Hawaiian vocabulary and a chronological table of remarkable events, Henry M. Whitney, p. 514, archived from the original on August 15, 2014, retrieved June 1, 2014

- ^ a b Mitchell, Scott (July 2008), Easy Wiki Hosting, Scott Hanselman's blog, and Snagging Screens, MSDN Magazine, archived from the original on March 16, 2010, retrieved March 9, 2010

- ^ a b c "wiki", Encyclopædia Britannica, vol. 1, London: Encyclopædia Britannica, Inc., 2007, archived from the original on April 24, 2008, retrieved April 10, 2008

- ^ a b c d e f g Black, Peter; Delaney, Hayden; Fitzgerald, Brian (2007), Legal Issues for Wikis: The Challenge of User-generated and Peer-produced Knowledge, Content and Culture (PDF), vol. 14, eLaw J., archived from the original (PDF) on December 22, 2012

- ^ Cunningham, Ward (June 27, 2002). "What is a Wiki". WikiWikiWeb. Archived from the original on April 16, 2008. Retrieved April 10, 2008.

- ^ "Alexa Top Sites". Archived from the original on March 2, 2015. Retrieved December 1, 2016.

- ^ [1]

- ^ "Wikipedia:Size of Wikipedia". Wikipedia. Wikimedia Foundation. Retrieved January 14, 2024.

- ^ Leuf, Bo; Cunningham, Ward (2001). The Wiki Way: Quick Collaboration on the Web. Addison-Wesley. p. 16. ISBN 978-0-201-71499-9.

- ^ "Wiki Design Principles". Archived from the original on April 30, 2002. Retrieved April 30, 2002.

- ^ Dohrn, Hannes; Riehle, Dirk (2011). "Design and implementation of the Sweble Wikitext parser: Unlocking the structured data of Wikipedia". Proceedings of the 7th International Symposium on Wikis and Open Collaboration. ACM. pp. 72–81. doi:10.1145/2038558.2038571. ISBN 978-1-4503-0909-7.

- ^ a b Ebersbach 2008, p. 178

- ^ "Frequently Asked Questions". WikiNodes. Archived from the original on August 10, 2007.

- ^ Bäckström, A., & Wändin, L. (2005). Spatial Hypertext Editing Tools for Wiki Web Systems.

- ^ Adams, Clifford (April 26, 2001). "UseModWiki/OldVersions". Retrieved July 25, 2024.

- ^ a b Ebersbach 2008, p. 10.

- ^ Cunningham, Ward (November 1, 2003). "Correspondence on the Etymology of Wiki". WikiWikiWeb. Archived from the original on March 17, 2007. Retrieved March 9, 2007.

- ^ Cunningham, Ward (February 25, 2008). "Wiki History". WikiWikiWeb. Archived from the original on June 21, 2002. Retrieved March 9, 2007.

- ^ a b Bill Venners (October 20, 2003). "Exploring with Wiki: A Conversation with Ward Cunningham, Part I". artima developer. Archived from the original on February 5, 2015. Retrieved December 12, 2014.

- ^ Cunningham, Ward (July 26, 2007). "Wiki Wiki Hyper Card". WikiWikiWeb. Archived from the original on April 6, 2007. Retrieved March 9, 2007.

- ^ Diamond, Graeme (March 1, 2007). "March 2007 update". Oxford English Dictionary. Archived from the original on January 7, 2011. Retrieved March 16, 2007.

- ^ Ward Cunningham [@WardCunningham] (November 8, 2014). "The plural of wiki is wiki. See https://forage.ward.fed.wiki.org/an-install-of-wiki.html" (Tweet). Retrieved March 18, 2019 – via Twitter.

- ^ "Smallest Federated Wiki". wiki.org. Archived from the original on September 28, 2015. Retrieved September 28, 2015.

- ^ a b Augar, Naomi; Raitman, Ruth; Zhou, Wanlei (2004). "Teaching and learning online with wikis". Proceedings of Beyond the Comfort Zone: 21st ASCILITE Conference: 95–104. CiteSeerX 10.1.1.133.1456.

- ^ Cubric, Marija (2007). "Analysis of the use of Wiki-based collaborations in enhancing student learning". UH Business School Working Paper. University of Hertfordshire. Archived from the original on May 15, 2011. Retrieved April 25, 2011.

- ^ Ebersbach 2008, p. 20

- ^ Ebersbach 2008, p. 54

- ^ Ebersbach 2008, p. 109

- ^ Goldman, Eric, "Wikipedia's Labor Squeeze and its Consequences", Journal on Telecommunications and High Technology Law, 8

- ^ Ebersbach 2008, p. 108

- ^ a b Noveck, Beth Simone (March 2007), "Wikipedia and the Future of Legal Education", Journal of Legal Education, 57 (1), archived from the original on July 3, 2014 (subscription required)

- ^ Barsky, Eugene; Giustini, Dean (December 2007). "Introducing Web 2.0: wikis for health librarians" (PDF). Journal of the Canadian Health Libraries Association. 28 (4): 147–150. doi:10.5596/c07-036. ISSN 1708-6892. Archived (PDF) from the original on April 30, 2012. Retrieved November 7, 2011.

- ^ Yager, Kevin (March 16, 2006). "Wiki ware could harness the Internet for science". Nature. 440 (7082): 278. Bibcode:2006Natur.440..278Y. doi:10.1038/440278a. ISSN 0028-0836. PMID 16541049.

- ^ Hicks, Jesse (February 18, 2014). "This machine kills trolls". The Verge. Archived from the original on August 27, 2014. Retrieved September 7, 2014.

- ^ "List of largest (Media)wikis". S23-Wiki. April 3, 2008. Archived from the original on August 25, 2014. Retrieved December 12, 2014.

- ^ "Alexa Top 500 Global Sites". Alexa Internet. Archived from the original on March 2, 2015. Retrieved April 26, 2015.

- ^ Boulos, M. N. K.; Maramba, I.; Wheeler, S. (2006), "Wikis, blogs and podcasts: a new generation of Web-based tools for virtual collaborative clinical practice and education", BMC Medical Education, 6: 41, doi:10.1186/1472-6920-6-41, PMC 1564136, PMID 16911779

- ^ Müller, C.; Birn, L. (September 6–8, 2006). "Wikis for Collaborative Software Documentation" (PDF). i-know.tugraz.at. Proceedings of I-KNOW '06. Archived from the original (PDF) on July 6, 2011.

- ^ Majchrzak, A.; Wagner, C.; Yates, D. (2006), "Corporate wiki users: results of a survey", Proceedings of the 2006 international symposium on Wikis, Symposium on Wikis, pp. 99–104, doi:10.1145/1149453.1149472, ISBN 978-1-59593-413-0, S2CID 13206858

- ^ Grudin, Jonathan; Poole, Erika Shehan (2015). "Wikis at work: Success factors and challenges for sustainability of enterprise wikis". Microsoft Research. Archived from the original on September 4, 2015. Retrieved June 16, 2015.

- ^ Conlin, Michelle (November 28, 2005), "E-Mail Is So Five Minutes Ago", Bloomberg BusinessWeek, archived from the original on October 17, 2012

- ^ "HomePage". Project Management Wiki.org. Archived from the original on August 16, 2014. Retrieved May 8, 2012.

- ^ "Ways to Wiki: Project Management". EditMe. January 4, 2010. Archived from the original on May 8, 2012.

- ^ Wanderley, M. M.; Birnbaum, D.; Malloch, J. (2006). "SensorWiki.org: a collaborative resource for researchers and interface designers". NIME '06 Proceedings of the 2006 Conference on New Interfaces for Musical Expression. IRCAM – Centre Pompidou: 180–183. ISBN 978-2-84426-314-8.

- ^ Lombardo, Nancy T. (June 2008). "Putting Wikis to Work in Libraries". Medical Reference Services Quarterly. 27 (2): 129–145. doi:10.1080/02763860802114223. PMID 18844087. S2CID 11552140.

- ^ Noveck, Beth Simone (2007). "Wikipedia and the Future of Legal Education". Journal of Legal Education. 57: 3.

- ^ Au, C. H. (December 2017). "Wiki as a research support system – A trial in information systems research". 2017 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM). pp. 2271–2275. doi:10.1109/IEEM.2017.8290296. ISBN 978-1-5386-0948-4. S2CID 44029462.

- ^ Au, Cheuk-hang. "Using Wiki for Project Collaboration – with Comparison on Facebook" (PDF). Archived (PDF) from the original on April 12, 2019.

- ^ Andersen, Michael (November 6, 2009). "Welcome to Davis, Calif.: Six lessons from the world's best local wiki". Nieman Lab. Archived from the original on August 8, 2013. Retrieved January 6, 2023.

- ^ McGann, Laura (June 18, 2010). "Knight News Challenge: Is a wiki site coming to your city? Local Wiki will build software to make it simple". Nieman Lab. Archived from the original on June 25, 2013. Retrieved January 6, 2023.

- ^ Makice, Kevin (July 15, 2009). "Hey, Kid: Support Your Local Wiki". Wired. Archived from the original on April 27, 2015.

- ^ Roth, C.; Taraborelli, D.; Gilbert, N. (2008). "Measuring wiki viability. An empirical assessment of the social dynamics of a large sample of wikis" (PDF). nitens.org. The Centre for Research in Social Simulation: 3. Archived (PDF) from the original on October 11, 2017. "Figure 4 shows that having a relatively high number of administrators for a given content size is likely to reduce growth."

- ^ Roth, C.; Taraborelli, D.; Gilbert, N. (2008). "Measuring wiki viability. An empirical assessment of the social dynamics of a large sample of wikis" (PDF). Surrey Research Insight Open Access. The Centre for Research in Social Simulation. Archived from the original (PDF) on June 16, 2012. Retrieved November 9, 2018.

- ^ Walsh, Kathleen M.; Oh, Sarah (February 23, 2010). "Self-Regulation: How Wikipedia Leverages User-Generated Quality Control Under Section 230". Archived from the original on January 6, 2014.

- ^ Myers, Ken S. (2008), "Wikimmunity: Fitting the Communications Decency Act to Wikipedia", Harvard Journal of Law and Technology, 20, The Berkman Center for Internet and Society: 163, SSRN 916529, archived from the original on January 24, 2024

- ^ Jarvis, Joshua (May 2008), "Police your marks in a wiki world", Managing Intellectual Property, 179 (179): 101–103, archived from the original on March 4, 2016

- ^ "Atlassian". Summit.atlassian.com. Archived from the original on June 13, 2011. Retrieved June 20, 2011.

- ^ "SMWCon". Semantic-mediawiki.org. Archived from the original on July 14, 2011. Retrieved June 20, 2011.

- ^ "TikiFest". Tiki.org. Archived from the original on June 30, 2011. Retrieved June 20, 2011.

- ^ "Regiowiki Main Page". Wiki.regiowiki.eu. Archived from the original on August 13, 2009. Retrieved June 20, 2011.

Sources

- Ebersbach, Anja (2008), Wiki: Web Collaboration, Springer Science+Business Media, ISBN 978-3-540-35150-4

Further reading

- Mader, Stewart (2007), Wikipatterns, John Wiley & Sons, ISBN 978-0-470-22362-8

- Tapscott, Don (2008), Wikinomics: How Mass Collaboration Changes Everything, Portfolio Hardcover, ISBN 978-1-59184-193-7

External links

- Exploring with Wiki, an interview with Ward Cunningham

- Murphy, Paula (April 2006). Topsy-turvy World of Wiki. University of California.

- Ward Cunningham's correspondence with etymologists

- WikiIndex and WikiApiary, directories of wikis

- WikiMatrix, a website for comparing wiki software and hosts

- wikiteam on GitHub

Definition and Core Concepts

Defining a Wiki

A wiki is a website or database that enables community members to collaboratively create, edit, and organize content using a simplified markup language, typically through a web browser without requiring advanced technical skills.[2][9] This structure supports the development of interconnected pages that form a dynamic knowledge base, where contributions from multiple users build upon one another in real time. Key attributes of a wiki include its ease of editing, which allows even novice users to make changes quickly; its inherently collaborative nature, fostering collective authorship; a hyperlinked structure that facilitates navigation between related topics; and the absence of formal gatekeepers, enabling low-barrier participation from the community.[10][11]

These features distinguish wikis from other online tools by prioritizing open, iterative content evolution over centralized control. Unlike static websites, which feature fixed content updated only by administrators; blogs, which primarily allow one-way publishing by designated authors; or forums, which focus on threaded discussions rather than editable documents, wikis emphasize real-time, permissionless (or low-barrier) multi-user editing to construct shared resources.[12][13][14]

Fundamental Principles

Wikis are underpinned by principles that promote openness and collaboration, enabling diverse contributors to build shared knowledge bases without centralized authority. A key operational principle is collaborative authorship, which emphasizes emergent content creation through distributed input. In wikis, pages are not authored by individuals but co-developed by communities, with edits building upon prior versions to refine accuracy and completeness over time. This approach leverages collective intelligence, allowing knowledge to aggregate rapidly as contributors add, revise, and link information without needing permission or hierarchy. The result is a dynamic repository where authority derives from consensus and repeated validation rather than from individual expertise or institutional endorsement.[15]

The inventor of the wiki, Ward Cunningham, outlined several design principles that form the foundation of wiki systems: openness, where any reader can edit pages; incremental development, where pages can link to pages not yet written, prompting their creation; organic growth, as the structure evolves with community needs; and observability, making editing activity visible for review. Other principles include tolerance for imperfect input, convergence toward better content, and unified tools for writing and organizing. Together, these principles enable wikis to function as flexible, community-driven platforms.[15]

Open licensing in wikis, such as the Creative Commons Attribution-ShareAlike (CC BY-SA) license, allows content to be copied and modified, including forking (creating independent versions of pages or sites) to address disagreements or specific needs without disrupting the original. This feature promotes adaptability and long-term content freedom.[16]

These principles yield significant trade-offs in wiki functionality. On the positive side, openness facilitates rapid knowledge aggregation, harnessing diverse perspectives to quickly compile and update comprehensive resources that outpace traditional publishing.[17]

Technical Characteristics

Editing Processes

Editing in wikis primarily occurs through two interfaces: source editing, which relies on a lightweight markup language known as wikitext, and visual editing, which provides a what-you-see-is-what-you-get (WYSIWYG) experience.[18][19]

Source editing involves users directly authoring content in wikitext, a simple syntax designed for formatting without requiring HTML knowledge. For instance, internal links are created with double square brackets, such as [[Wiki]] to link to a page titled "Wiki"; headings are denoted by equals signs, like ==Heading== for a level-two heading; and text emphasis uses apostrophes for bold ('''bold text''') or italics (''italic text''). The wiki engine parses this markup to render the final page view, allowing precise control over structure and enabling collaborative refinement.[18]

Visual editing, in contrast, abstracts the underlying markup through an interactive interface, permitting users to manipulate content directly on a preview of the rendered page. Introduced in MediaWiki as the VisualEditor, it supports inline editing of text, insertion of elements via toolbars, and real-time adjustments without exposing code, making it accessible to non-technical contributors.[19]

Both editing modes incorporate features like edit previews, which display a rendered version of changes before saving so that errors can be caught, and undo capabilities that revert recent modifications via the version history. Multimedia integration allows embedding images with syntax like [[File:Example.jpg|thumb|Caption]] in source mode or by drag-and-drop in visual mode. Tables can be built with visual tools for adding rows and cells, or with pipe-based markup in which {| class="wikitable" opens the table, |+ adds a caption, |- starts a new row, ! marks header cells, | marks data cells (doubled marks such as !! or || separate cells on one line), and |} closes the table.[20][21][22]

To facilitate collaboration, wikis provide user aids such as edit summaries, brief notes (e.g., "Fixed grammar and added reference") entered when saving to explain changes, and mechanisms for resolving conflicts when simultaneous edits occur. In such cases, the system prompts users to merge conflicting sections manually, preserving both sets of revisions where possible.[23][24]

Navigation and Linking

In wikis, internal linking forms the backbone of content connectivity, allowing users to navigate seamlessly between related pages through simple syntax. In early wikis like the original WikiWikiWeb, hyperlinks were created automatically via CamelCase conventions, where words joined with capitalized letters (e.g., ThisPage) formed links. In many modern wikis, such as those using MediaWiki, the standard method for creating hyperlinks, known as wikilinks, uses double square brackets enclosing the target page name, such as [[Page Name]], which generates a clickable link to that page if it exists or a red link prompting creation if it does not.[25][1] This syntax supports redirects, where a page begins with #REDIRECT [[Target Page]] to automatically forward users to another page, facilitating maintenance by consolidating content under preferred titles.[25] Disambiguation pages resolve ambiguity for terms with multiple meanings by listing links to specific articles, often using piped syntax like [[Page Name|Specific Context]], which displays custom text while directing to the intended target.[25] More generally, piped links of the form [[Target Page|Display Text]] show user-friendly phrasing, such as hiding technical names behind descriptive labels, enhancing readability without altering the underlying structure.[25]
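The markup and linking rules described above can be illustrated with a minimal Python sketch. It is not MediaWiki's parser: it handles only single-line headings, bold, italics, internal links (including piped links and red links for missing pages), and #REDIRECT detection. The `EXISTING_PAGES` set, the `/wiki/` URL prefix, and the `class="new"` marker used for red links are illustrative assumptions.

```python
import html
import re
from typing import Optional

# Hypothetical set of existing titles; a real engine would query its page table.
EXISTING_PAGES = {"Wiki", "Main Page"}

LINK_RE = re.compile(r"\[\[([^\[\]]+)\]\]")
HEADING_RE = re.compile(r"(={1,6})\s*(.+?)\s*\1")
REDIRECT_RE = re.compile(r"\s*#REDIRECT\s*\[\[([^\[\]|]+)", re.IGNORECASE)


def page_url(title: str) -> str:
    """Build a /wiki/ URL, converting spaces to underscores."""
    return "/wiki/" + title.replace(" ", "_")


def render_link(match: re.Match) -> str:
    """Render [[Target]] or [[Target|Display text]]; missing targets become red links."""
    target, _, label = match.group(1).partition("|")
    target = target.strip()
    label = (label or target).strip()
    css = "" if target in EXISTING_PAGES else ' class="new"'
    return f'<a href="{page_url(target)}"{css}>{label}</a>'


def render_line(line: str) -> str:
    """Convert one line of a small wikitext subset to HTML."""
    line = html.escape(line, quote=False)  # escape &, <, > but keep apostrophes
    heading = HEADING_RE.fullmatch(line)
    if heading:
        level = len(heading.group(1))
        return f"<h{level}>{heading.group(2)}</h{level}>"
    line = re.sub(r"'''(.+?)'''", r"<b>\1</b>", line)  # bold before italics
    line = re.sub(r"''(.+?)''", r"<i>\1</i>", line)
    return LINK_RE.sub(render_link, line)


def redirect_target(wikitext: str) -> Optional[str]:
    """Return the target of a '#REDIRECT [[Target]]' page, or None."""
    match = REDIRECT_RE.match(wikitext)
    return match.group(1).strip() if match else None
```

Handling bold before italics matters because ''' contains ''; processing italics first would split bold markers apart.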

Page naming conventions ensure consistent organization and accessibility across wiki content. In MediaWiki's default configuration, the first letter of a title is automatically uppercased, but titles remain case-sensitive beyond that initial character: "example" and "Example" refer to the same page, whereas "Example page" and "Example Page" are distinct.[26] Invalid characters, including # < > [ ] | { }, are prohibited to avoid syntax conflicts, while spaces convert to underscores in URLs, resulting in structures like /wiki/Page_Name for direct access.[26] Namespaces prefix pages to denote purpose, such as Talk: for discussion threads paired with main content, User: for personal pages and subpages, or Help: for documentation, allowing links like [[User:Example]] to target specific areas without cluttering the main namespace.[27] These prefixes integrate into URLs as /wiki/Namespace:Page_Name, enabling logical separation while maintaining unified searchability.[27]
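A hypothetical normalization routine shows how these naming rules interact. The sketch below assumes MediaWiki-style defaults (first-letter capitalization, underscores treated as spaces, a small fixed set of namespace names); real engines make these configurable and apply further checks, such as length limits, that are omitted here.

```python
from typing import Tuple

# Characters rejected in titles, per the naming rules above.
INVALID_CHARS = set("#<>[]|{}")
# Hypothetical subset of namespace names; real wikis configure their own.
NAMESPACES = {"Talk", "User", "Help", "Category", "File"}


def normalize_title(raw: str) -> Tuple[str, str]:
    """Return (namespace, title) using first-letter capitalization rules."""
    text = " ".join(raw.replace("_", " ").split())  # underscores count as spaces
    if any(ch in INVALID_CHARS for ch in text):
        raise ValueError(f"invalid character in title: {raw!r}")
    namespace = ""
    prefix, sep, rest = text.partition(":")
    if sep and prefix.strip().capitalize() in NAMESPACES:
        namespace, text = prefix.strip().capitalize(), rest.strip()
    if not text:
        raise ValueError(f"empty title: {raw!r}")
    # Only the first character is uppercased; the rest stays case-sensitive.
    return namespace, text[:1].upper() + text[1:]


def title_to_url(namespace: str, title: str) -> str:
    """Build the /wiki/Namespace:Page_Name path used in page URLs."""
    full = f"{namespace}:{title}" if namespace else title
    return "/wiki/" + full.replace(" ", "_")
```

With these rules, normalize_title("example") and normalize_title("Example") return the same key, while "Example page" and "Example Page" stay distinct.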

Navigation tools aid user orientation by providing structured pathways through wiki content. The sidebar, a persistent left-hand panel in standard interfaces, lists key sections like navigation menus, toolbox utilities, and community portals, configurable via a dedicated system message to include internal links, interwiki shortcuts, and external references for quick access.[28] Search functions enable full-text queries across pages, matching whole words or phrases (e.g., using quotes for exact matches or asterisks for prefixes like "book*"), with results filtered by namespace to jump directly to relevant titles or content.[29] Categories organize pages hierarchically by appending [[Category:Topic]] tags, generating dynamic lists at page bottoms that serve as browsable indexes, often forming trees for deeper exploration via tools like category trees.[30] Breadcrumbs, showing a trail of parent pages (e.g., Home > Category > Subtopic), enhance hierarchical navigation, typically implemented through subpage structures or extensions for wikis with nested content.[31]
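Because category membership is declared in the page text itself, a category index can be rebuilt by scanning pages for [[Category:...]] tags. The following Python sketch assumes pages are held in a plain dictionary mapping titles to wikitext; it ignores any sort key after the pipe and does not handle categories added indirectly through templates.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

# Matches [[Category:Name]] and [[Category:Name|sort key]]; the sort key is ignored.
CATEGORY_RE = re.compile(
    r"\[\[\s*Category\s*:\s*([^\]|]+?)\s*(?:\|[^\]]*)?\]\]", re.IGNORECASE
)


def categories_of(wikitext: str) -> List[str]:
    """List the categories a page declares, with the first letter uppercased."""
    return [name[:1].upper() + name[1:] for name in CATEGORY_RE.findall(wikitext)]


def build_category_index(pages: Dict[str, str]) -> Dict[str, List[str]]:
    """Map each category to the sorted titles of its member pages."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for title, text in pages.items():
        for category in categories_of(text):
            index[category].add(title)
    return {category: sorted(titles) for category, titles in sorted(index.items())}


if __name__ == "__main__":
    pages = {
        "Wiki": "A wiki is... [[Category:Software]] [[Category:collaboration|Wiki]]",
        "MediaWiki": "[[Category:Software]]",
    }
    print(build_category_index(pages))
    # {'Collaboration': ['Wiki'], 'Software': ['MediaWiki', 'Wiki']}
```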

Inter-wiki navigation extends connectivity beyond a single site, fostering collaboration across wiki networks. Interwiki links use prefixes like w: for Wikipedia or commons: for shared media repositories, formatted as [[w:Main Page]] to link externally while mimicking internal syntax, with prefixes defined in a central table for global consistency.[32] This allows seamless referencing, such as directing to [[commons:File:Example.jpg]] for images hosted elsewhere. Transclusion, the inclusion of content from another page by reference (e.g., {{:Other Wiki:Page}}), supports inter-wiki embedding in configured setups, updating dynamically when source material changes, though it requires administrative setup for cross-site functionality.[33][32]
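Interwiki resolution amounts to a lookup in a prefix table followed by substitution of the page name into a URL template. In the sketch below, the `INTERWIKI` dictionary and its two entries are illustrative assumptions that follow MediaWiki's convention of marking the page name with `$1`; percent-encoding of special characters is omitted for brevity.

```python
from typing import Dict, Optional

# Hypothetical interwiki table; "$1" marks where the page name is inserted.
INTERWIKI: Dict[str, str] = {
    "w": "https://en.wikipedia.org/wiki/$1",
    "commons": "https://commons.wikimedia.org/wiki/$1",
}


def resolve_interwiki(link: str) -> Optional[str]:
    """Turn 'prefix:Page name' into an external URL, or None for a local link."""
    prefix, sep, page = link.partition(":")
    template = INTERWIKI.get(prefix.strip().lower()) if sep else None
    if template is None:
        return None  # unknown or missing prefix: treat as an ordinary local title
    return template.replace("$1", page.strip().replace(" ", "_"))
```

Only the first colon is treated as the prefix separator, so a link such as commons:File:Example.jpg keeps File: as part of the remote page name.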

Version Management

Version management in wikis refers to the mechanisms that track changes to content over time, enabling users to review, compare, and restore previous states of pages. Central to this is the revision history, a chronological log that records every edit, including timestamps, the user or IP address responsible, and the nature of the change. This log allows for diff comparisons, which highlight additions, deletions, and modifications between revisions, facilitating transparency and accountability in collaborative editing.[34] In prominent implementations like MediaWiki, the revision system stores metadata such as edit timestamps and actor identifiers in a database table, with each revision linked to its parent for efficient diff generation. The original WikiWikiWeb by Ward Cunningham implemented a custom version control system in Perl, storing pages and revisions as plain text files to track changes without overwriting prior content.[35][1] Edit summaries, optional brief descriptions provided by editors, often accompany revisions to contextualize changes, though they are not part of the core version data itself.

Reversion tools enable recovery from undesired edits by restoring pages to earlier versions. Common methods include rollback, which automatically reverts a series of recent edits by a single user to the state before their contributions began, and undo, which allows selective reversal of specific revisions while preserving intervening changes. Protection levels further support version integrity by restricting edits on sensitive pages, with changes to these levels recorded as dummy revisions (special entries that log the action without altering content) to maintain a complete historical record.[36][37]

Advanced wiki engines incorporate branching and merging capabilities inspired by distributed version control systems like Git, allowing parallel development of content streams.
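The revision log, diff comparison, and rollback behavior described above can be modeled in a few lines of Python. This is a simplified in-memory sketch, not MediaWiki's schema: the `Revision` and `PageHistory` names are hypothetical, revisions are numbered from 1, every save appends rather than overwrites, and rollback restores the last revision made by someone other than the user being reverted. Selective undo, which requires a three-way merge, is not shown.

```python
import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class Revision:
    rev_id: int
    parent_id: Optional[int]  # link to the previous revision, used for diffs
    user: str
    text: str
    summary: str = ""  # optional edit summary; not part of the content
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class PageHistory:
    """Append-only revision log for a single page (hypothetical, in memory)."""

    def __init__(self) -> None:
        self.revisions: List[Revision] = []

    def save(self, user: str, text: str, summary: str = "") -> Revision:
        parent = self.revisions[-1].rev_id if self.revisions else None
        rev = Revision(len(self.revisions) + 1, parent, user, text, summary)
        self.revisions.append(rev)  # earlier revisions are never overwritten
        return rev

    def current(self) -> Revision:
        return self.revisions[-1]

    def diff(self, old_id: int, new_id: int) -> List[str]:
        """Unified diff between two revisions (ids start at 1)."""
        old = self.revisions[old_id - 1].text.splitlines()
        new = self.revisions[new_id - 1].text.splitlines()
        return list(difflib.unified_diff(old, new, f"r{old_id}", f"r{new_id}", lineterm=""))

    def rollback(self, user: str, reverted_user: str) -> Optional[Revision]:
        """Revert the latest run of edits by reverted_user as a new revision."""
        for rev in reversed(self.revisions):
            if rev.user != reverted_user:
                if rev is self.current():
                    return None  # latest edit is someone else's: nothing to roll back
                summary = f"Reverted edits by {reverted_user} to revision {rev.rev_id}"
                return self.save(user, rev.text, summary)
        return None  # every revision is by reverted_user; no earlier state to restore
```

Note that the rollback is itself recorded as a new revision, so the reverted edits remain visible in the history.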
The ikiwiki engine builds directly on Git repositories, enabling users to create branches for experimental edits and merge them back into the main history, reducing conflicts in large-scale or distributed collaborations. Similarly, Gollum operates as a Git-powered wiki, where page revisions are Git commits, supporting full branching, merging, and conflict resolution through the underlying version control system.[38]

To preserve version integrity, wikis also address edit conflicts, which arise when multiple users modify the same page simultaneously. MediaWiki detects these at save time by comparing the revision the editor started from against the page's current revision; when the changes cannot be merged automatically, it presents a conflict resolution interface for manual merging. Cache purging complements this by invalidating stored rendered versions of pages, ensuring that users view the latest revision rather than an outdated cached copy; purges can be triggered through URL parameters like ?action=purge or through administrative tools.[39][40]

Historical Evolution

Origins and Invention

The wiki concept was invented in 1994 by Ward Cunningham, a software engineer, as part of the Portland Pattern Repository, an online knowledge base intended to capture and share design patterns in software development.[1] Cunningham developed this system to create a collaborative environment where programmers could document evolving ideas without the constraints of traditional documentation tools.[41]

The first practical implementation, known as WikiWikiWeb, was launched on March 25, 1995, hosted on Cunningham's company's server at c2.com.[1] This site served as an automated supplement to the Portland Pattern Repository, specifically designed for sharing software patterns among a community of developers.[42] The name "WikiWikiWeb" derived from the Hawaiian word "wiki wiki," meaning "quick," reflecting the system's emphasis on rapid editing and access.[1]

Cunningham's invention drew influences from earlier hypertext systems, including Ted Nelson's Project Xanadu, Vannevar Bush's Memex concept from 1945, and tools like ZOG (1972), NoteCards, and HyperCard, which explored associative linking and user-editable content.[1] It was also shaped by the nascent World Wide Web technologies, such as CGI scripts and HTML forms, enabling dynamic web interactions in the mid-1990s.[41]

The initial goals of WikiWikiWeb centered on facilitating quick collaboration among programmers, allowing them to contribute and revise content directly on the web to avoid cumbersome email chains and version-tracked documents.[1] As Cunningham described in an early announcement, the plan was "to have interested parties write web pages about the People, Projects and Patterns that have changed the way they program," using simple forms-based authoring that required no HTML knowledge.[41] This approach aimed to build a living repository of practical knowledge through incremental, community-driven edits.[1]

Major Developments and Milestones

The launch of Wikipedia in 2001 by Jimmy Wales and Larry Sanger represented a pivotal shift in wiki applications, transforming the technology from a niche collaborative tool into a platform for encyclopedic knowledge creation. Initially conceived as a feeder project for the expert-reviewed Nupedia, Wikipedia's open-editing model rapidly expanded its scope, attracting millions of contributors and establishing wikis as viable for large-scale, public information repositories.[43][44]

In 2002, the development and deployment of MediaWiki addressed Wikipedia's growing scalability challenges, replacing earlier software like UseModWiki with a more robust PHP-based system using MySQL for database management. Magnus Manske's Phase II script was deployed to the English Wikipedia in January 2002, improving performance by shifting from flat files to a relational database, while Lee Daniel Crocker's Phase III enhancements in July 2002 added features like file uploads and efficient diffs to handle increasing traffic and edits.[45]

The 2000s saw widespread proliferation of wikis beyond public encyclopedias, particularly in enterprise settings. TWiki, founded by Peter Thoeny in 1998, gained traction for corporate intranets and enabled structured collaboration in organizations such as CERN and Disney.[46] This era also featured integration of wikis with emerging social media functionality, as enterprise social software platforms combined wikis with blogs, status updates, and microblogging to foster internal knowledge sharing and real-time communication.[47] Examples include Socialtext, launched in 2002, which pioneered proprietary wiki applications tailored for business workflows.[48]

During the 2010s, wiki technologies advanced with a focus on mobile optimization, semantic enhancements, and open-source diversification to meet evolving user needs. Wikimedia's mobile site improvements, such as efficient article downloads and responsive designs implemented around 2016, reduced data usage and improved accessibility for mobile readers, aligning wikis with the rise of smartphone browsing.[49] Semantic wikis gained prominence through extensions like Semantic MediaWiki, which saw iterative releases throughout the decade enabling data annotation, querying, and ontology integration for more structured knowledge representation.[50] Open-source forks, such as Foswiki's 2008 split from TWiki, continued to evolve in the 2010s with community-driven updates emphasizing modularity and extensibility for diverse deployments.[51]

By the 2020s, trends in wiki development included AI-assisted editing tools to support human contributors without replacing them, as outlined in the Wikimedia Foundation's 2025 strategy. These tools automate repetitive tasks like vandalism detection and content translation, enhancing efficiency while preserving editorial integrity, with features like WikiVault providing AI-powered drafting assistance for Wikipedia edits.[52][53][54] In November 2025, amid concerns over a 23% decline in Wikipedia traffic from 2022 to 2025 attributed to AI-generated summaries, the Foundation announced a strategic plan urging AI companies to access content via its paid Wikimedia Enterprise API rather than scraping, to ensure sustainable funding and maintain the platform's value as a human-curated knowledge source in the AI era.[55]

Software Implementations

Wiki Engine Types

Wiki engines, the software powering wiki systems, can be broadly categorized into traditional, lightweight, and NoSQL-based types, each suited to different scales and use cases. Traditional wiki engines typically rely on a LAMP stack architecture, comprising Linux, Apache, MySQL, and PHP, to manage relational databases for storing pages, revisions, and metadata.[56] This approach supports large-scale deployments by enabling efficient querying and scalability through database optimization. For instance, engines in this category handle complex version histories and user permissions via structured SQL storage.[57]

Lightweight wiki engines, in contrast, operate without a database, using file-based storage for simplicity and reduced overhead. These systems store content in plain text files, often in a markup format like Markdown or Creole, making them ideal for small teams or environments with limited server resources.[58] Such designs minimize dependencies, allowing quick setup on basic web servers and avoiding the performance bottlenecks of database connections.[59]

NoSQL-based wiki engines emphasize flexible, non-relational data models, often storing information in key-value or document formats for easier handling of unstructured content like revisions or attachments. Representative examples include single-file implementations that embed all data in JSON structures within a self-contained HTML document, facilitating portability and offline use.[60] These engines leverage schema-less storage to accommodate evolving content without rigid table definitions.
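A database-free engine of the lightweight kind described above can be reduced to a directory of plain text files. The layout below, one directory per page with numbered revision files, is a hypothetical example rather than the format of any particular engine. It assumes titles have already been validated, since an unchecked title containing / or .. could escape the storage directory.

```python
from pathlib import Path
from typing import List


class FileWikiStore:
    """Database-free page store: <root>/<Page_Title>/<n>.txt, one file per revision."""

    def __init__(self, root: Path) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def _page_dir(self, title: str) -> Path:
        # Assumes the title was validated elsewhere; spaces become underscores.
        return self.root / title.replace(" ", "_")

    def revisions(self, title: str) -> List[Path]:
        """Revision files for a page, oldest first."""
        page_dir = self._page_dir(title)
        if not page_dir.is_dir():
            return []
        return sorted(page_dir.glob("*.txt"), key=lambda p: int(p.stem))

    def save(self, title: str, text: str) -> int:
        """Write a new revision file and return its 1-based number."""
        page_dir = self._page_dir(title)
        page_dir.mkdir(exist_ok=True)
        number = len(self.revisions(title)) + 1
        (page_dir / f"{number}.txt").write_text(text, encoding="utf-8")
        return number

    def load(self, title: str, revision: int = 0) -> str:
        """Return the given revision (1-based), or the latest when revision is 0."""
        revs = self.revisions(title)
        if not revs:
            raise KeyError(title)
        path = revs[-1] if revision == 0 else revs[revision - 1]
        return path.read_text(encoding="utf-8")
```

Because every revision is an ordinary file, such a store can be backed up with standard file tools or placed under an external version control system.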
In terms of architectures, most wiki engines employ server-side rendering, where the server processes edits, generates HTML, and manages persistence, ensuring consistency across users.[56] Client-side rendering, however, shifts computation to the browser using JavaScript, enabling real-time interactions without server round-trips, though it requires careful synchronization for multi-user scenarios.[60] Extensibility is a core feature across types, commonly achieved through plugins or modules that allow customization of syntax, authentication, and integrations without altering core code.[59] For example, plugin systems in both server-side and client-side engines support adding features like search enhancements or media embedding.

The majority of wiki engines follow open-source models, distributed under free software licenses that promote community contributions and transparency. Common licenses include the GNU General Public License (GPL) version 2, which requires derivative works to remain open, and the MIT License, offering permissive terms for broader reuse.[58] The Affero GPL (AGPL) is also prevalent, ensuring modifications in networked environments stay open.[61] Proprietary models, while less common, provide closed-source alternatives with vendor support, often bundling advanced enterprise features like integrated analytics.[62]

Wiki engine evolution traces from early Perl scripts, which powered the first wikis through simple CGI-based processing of text files. The shift to PHP in the early 2000s enabled database integration for larger sites, as seen in the transition from Perl prototypes to robust LAMP implementations.[57] Modern frameworks have diversified the landscape: Ruby on Rails supports rapid development of feature-rich engines with built-in ORM for data handling, while Node.js facilitates asynchronous, real-time collaboration in JavaScript-centric systems.[63] This progression reflects growing demands for performance, modularity, and cross-platform compatibility.[64]

Notable Examples

MediaWiki is one of the most widely adopted wiki engines, powering the Wikimedia Foundation's projects including Wikipedia, where it enables collaborative editing of encyclopedic content through its extensible architecture. Developed in PHP and optimized for large-scale deployments, MediaWiki supports features like revision history, templates, and extensions that enhance functionality, such as Semantic MediaWiki, which adds structured data storage and querying capabilities to wiki pages, allowing for semantic annotations and database-like operations within the content.[65] As of November 2025, the English Wikipedia, built on MediaWiki, hosts over 7 million articles, demonstrating its capacity to manage vast, community-driven knowledge bases.[66]

Confluence, developed by Atlassian, serves as a proprietary enterprise wiki platform designed for team collaboration and knowledge sharing in professional environments.[67] It offers features like real-time editing, integration with tools such as Jira for project tracking, and customizable templates for documentation, making it suitable for internal wikis in large organizations where access controls and scalability are priorities.[68]

Fandom provides a hosted wiki platform tailored for fan communities, enabling users to create and maintain wikis on topics like video games, movies, and TV series with social features including discussions and multimedia integration.[69] Its centralized hosting model supports thousands of community-driven sites, fostering niche content creation around entertainment and pop culture.

Git-based wiki implementations, such as Gollum, leverage version control systems to create simple, repository-backed wikis where pages are stored as Markdown or other text files directly in a Git repository.[38] This approach integrates seamlessly with development workflows, allowing changes to be tracked, branched, and merged like code, which is particularly useful for software documentation and open-source projects.

For personal use, Zim functions as a desktop wiki application that organizes notes and knowledge in a hierarchical, linkable structure stored locally as plain text files.[70] It supports wiki syntax for formatting, attachments, and plugins for tasks like calendar integration, serving as a lightweight personal knowledge base without requiring server setup.

HackMD offers a collaborative, real-time Markdown editor that operates like a web-based wiki for teams, supporting shared notebooks with features for commenting, versioning, and exporting to various formats.[71] It emphasizes ease of use for developers and open communities, enabling quick setup of shared documentation spaces.

Deployment and Hosting

Hosting Options

Self-hosting allows users to install and manage wiki software on their own servers, providing full control over the environment and data. For popular engines like MediaWiki, this typically requires a web server such as Apache or Nginx, PHP 8.1.0 or later (up to 8.3.x as of 2025), and a database like MySQL 5.7 or later or MariaDB 10.3.0 or later (up to 11.8.x as of 2025), with a minimum of 256 MB RAM recommended for basic operation.[72][73] Installation involves downloading the software, extracting files to the server, configuring the database, and running a setup script via a web browser.[74] This approach suits users with technical expertise who prioritize customization and privacy, though it demands ongoing maintenance for updates, backups, and security.

Hosted services, often called wiki farms, enable users to create and run wikis without managing infrastructure, as the provider handles server setup, scaling, and maintenance. Examples include Fandom, which specializes in community-driven wikis with features like ad-supported free tiers and premium options for custom domains; Miraheze, a non-profit wiki farm offering free ad-free hosting with the latest MediaWiki versions and extension support on request; and PBworks, focused on collaborative workspaces with tools for file sharing and project management across multiple wikis.[75][76][77] These services typically offer one-click wiki creation, extension support, and varying storage limits, with free plans often including ads and paid upgrades for enhanced features like unlimited users or no branding.[75]

Cloud options integrate wiki deployments with platforms like Amazon Web Services (AWS) or Google Cloud Platform (GCP), leveraging virtual machines, containers, or managed services for flexible scaling. On AWS, pre-configured images such as the Bitnami package for MediaWiki simplify deployment on EC2 instances, and extensions can store uploaded files in Amazon S3. Advantages include high availability across global regions and pay-as-you-go pricing; drawbacks include potentially higher data transfer costs and a steeper learning curve for network setup.[78][79] Similarly, GCP offers Bitnami MediaWiki on Compute Engine, benefiting from integration with Google Workspace for collaboration and from its AI/ML tools for content analysis, though it may involve vendor lock-in and variable pricing based on sustained use discounts.[80] These setups address scalability needs by auto-scaling resources during traffic spikes, as detailed in the following section on performance.

Migration paths between hosting types generally involve exporting wiki content and data from the source environment and importing it into the target. For MediaWiki, this includes using the Special:Export tool to generate XML dumps of pages and revisions, backing up the database via mysqldump, and transferring files like images, followed by import scripts on the new host.[81] Many hosted and cloud providers, such as those listed on MediaWiki's hosting services page, offer assisted migrations, including free transfers for compatible setups to minimize downtime.[75] This process ensures continuity but requires verifying extension compatibility and testing in a staging environment beforehand.

Scalability and Performance

As wikis grow in content volume and user traffic, scalability becomes essential to maintain responsiveness without proportional increases in infrastructure costs. Common techniques include database replication to distribute read operations across multiple servers, caching layers to store frequently accessed data, and load balancing to spread requests evenly among application servers. For instance, MediaWiki installations often use primary-replica (formerly called master-slave) database replication, in which read queries are offloaded to replicas while writes go to the primary server, improving read scalability for high-traffic sites. Varnish, acting as a caching reverse proxy, keeps rendered pages in memory, which significantly reduces backend load and speeds delivery of cached content. Load balancing, typically handled by software such as HAProxy or built into caching proxies, ensures that no single server becomes a bottleneck during traffic surges.[82][83]

Performance in wiki systems is evaluated through metrics such as page load time, edit latency, and the ability to handle high-traffic events. Median page load times on platforms like Wikipedia are targeted to stay under 2–3 seconds for most users, measured through real-user monitoring (RUM) that collects browser timing data, such as first paint and the loadEventEnd timestamp from the Navigation Timing API.
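As a rough illustration of how a median page-load figure is derived from RUM data, the Python sketch below computes the median of per-view load times and compares it with a target. The beacon format (dictionaries with `startTime` and `loadEventEnd` in milliseconds, named after PerformanceNavigationTiming attributes) and the 2.5-second threshold are assumptions for illustration, not Wikimedia's actual pipeline.

```python
from statistics import median

# Hypothetical RUM beacon: one dict per page view, with timestamps in
# milliseconds named after PerformanceNavigationTiming attributes.
def page_load_ms(sample: dict) -> float:
    """Total load time for one page view: loadEventEnd minus startTime."""
    return sample["loadEventEnd"] - sample["startTime"]


def median_load_seconds(samples: list) -> float:
    """Median page load time across all sampled page views, in seconds."""
    return median(page_load_ms(s) for s in samples) / 1000.0


def meets_target(samples: list, target_s: float = 2.5) -> bool:
    """True if the median load time is below the target (2.5 s is an assumed value)."""
    return median_load_seconds(samples) < target_s


if __name__ == "__main__":
    beacons = [
        {"startTime": 0, "loadEventEnd": 1800},
        {"startTime": 0, "loadEventEnd": 2100},
        {"startTime": 0, "loadEventEnd": 5400},  # one slow outlier
    ]
    print(median_load_seconds(beacons))  # 2.1
    print(meets_target(beacons))         # True
```

The median is used rather than the mean because a few very slow page views would otherwise dominate: in this example the mean is 3.1 seconds, while the median is 2.1 seconds.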
Edit latency, the time between submitting an edit and receiving confirmation that the page has been saved, is critical for collaborative editing. On Wikipedia, the median save time fell from about 7.5 seconds to 2.5 seconds after the HHVM runtime was adopted in 2014, and later backend work, including the move to PHP 7 and, in current MediaWiki versions, PHP 8.1 or later, has carried these optimizations forward.[84][85][86][87][73] During high-traffic events, such as spikes driven by major news, systems must sustain tens of thousands of requests per second. For example, Wikipedia's infrastructure handled 90,000 requests per second in 2011 with a 99.82% cache hit ratio, so only about 0.18% of requests, roughly 162 per second, missed the cache; rapid cache invalidation and traffic routing prevented overload.[87]

A prominent case study is the evolution of Wikipedia's infrastructure, which combines multiple data centers with a content delivery network (CDN) for scalability. Initially reliant on a single data center in Florida from 2004, the Wikimedia Foundation added a secondary site in Dallas by 2014 and achieved full multi-datacenter operation in 2022, providing geographic redundancy and reducing latency, particularly for users in regions such as East Asia, where round-trip times for read requests dropped by approximately 15 milliseconds. The setup uses Varnish for in-memory caching across data centers, complemented by on-disk caching with Apache Traffic Server (ATS) in the CDN, which routes traffic dynamically and maintains high cache efficiency during global events. The transition addressed challenges such as cache consistency and job queue synchronization, using tools such as Kafka to carry invalidation signals, and ultimately improved reliability and performance under varying loads.[88][89][90]

Looking ahead, as of 2025, scalability considerations for wiki platforms include edge computing, which pushes content closer to users to minimize latency in distributed networks, and AI-driven query optimization to improve database performance as data volumes grow.
Edge computing platforms are projected to accelerate AI workloads at the network periphery and could integrate with CDNs for real-time content delivery in wikis. Meanwhile, AI techniques for query optimization, such as learned indexes, could automate scaling decisions in replicated databases, though their adoption in open-source wiki engines remains exploratory and is discussed mainly in annual technology roadmaps.[91]

Community Dynamics

Community Formation

Wiki communities typically form through an initial seeding phase in which a small group of founders or early adopters establishes the core content and structure, taking advantage of the low barriers to entry built into wiki software, such as allowing anonymous edits without registration. This accessibility enables rapid initial content accumulation, as seen in Wikipedia's early years, when volunteers could participate immediately in building articles. Recruitment follows through targeted outreach, such as community events, hackathons, and partnerships with external organizations, which attract new members by promoting the platform's collaborative ethos.[92][93]

Retention is fostered through established community norms, including guidelines for constructive editing and dispute resolution, which help integrate newcomers and encourage long-term involvement; however, retention rates remain low, with only 3–7% of new editors still active after 60 days in many language editions. Growth is driven by the same low entry barriers, which democratize participation, alongside social features such as talk pages that support discussion, coordination, and relationship-building among contributors. External promotion, such as Wikimedia Foundation campaigns and academic integrations, further sustains expansion by drawing in diverse participants.[92][94][95]

Within these communities, distinct roles emerge to maintain operations: administrators handle tasks such as blocking users and enforcing policy, patrollers monitor recent changes to revert vandalism, and bots automate repetitive maintenance such as spam detection and formatting updates.
These roles, often self-selected based on editing patterns and community trust, help ensure content quality and scalability, with bots enforcing rules to reduce human workload.[96][97] Despite these mechanisms, wiki communities face challenges, including editor burnout caused by heavy workloads and interpersonal conflict, which contributes to dropout, as well as inclusivity problems stemming from a predominantly male demographic and unwelcoming interactions that deter underrepresented groups. Efforts such as the Wiki Education program have helped bring in 19% of new active editors on English Wikipedia, and editor activity increased during the COVID-19 pandemic, though overall participation remains a challenge.[98][99] Metrics highlight these strains: Wikipedia's active editors (those making at least five edits per month) peaked at over 51,000 in 2007, declined to around 31,000 by 2013, and numbered approximately 39,000 as of December 2024, reflecting broader stagnation in participation.[100][101][102][103]

Applications and Use Cases

Wikis have found extensive application in knowledge management within corporate settings, where they facilitate the creation, organization, and sharing of internal documentation. Organizations deploy wiki-based intranets to centralize information such as policies, procedures, and best practices, enabling employees to collaborate in real time without relying on email chains or scattered files. Atlassian Confluence exemplifies this use, functioning as a versatile platform for teams to build interconnected pages for project documentation, onboarding guides, and knowledge repositories, thereby improving efficiency in large enterprises.[104][105]

Beyond general corporate tools, specialized wikis cater to niche communities by aggregating targeted information. Academic wikis like Scholarpedia provide peer-reviewed, expert-curated entries on scientific topics, offering in-depth, reliable content maintained by scholars worldwide to complement broader encyclopedias.[106] Fan wikis on platforms such as Fandom let enthusiasts document details about media franchises, including character backstories, episode summaries, and lore, fostering user-driven communities around entertainment properties.[107] City wikis, often powered by tools like LocalWiki, serve as grassroots repositories for local knowledge, covering neighborhood histories, event calendars, public services, and community resources to give residents accessible, hyper-local information.[108]

Emerging applications of wikis extend to project management, particularly in open-source software development, where they support collaborative tracking of tasks, code documentation, and version histories.
Tools like TWiki are employed to create structured project spaces that integrate with development workflows, allowing distributed teams to maintain living documentation alongside code repositories.[109] In education, wikis promote interactive learning by enabling students to co-author content on course topics, group projects, or research compilations, which builds skills in collaboration and critical evaluation while creating reusable knowledge bases for instructors and peers.[110] These uses underscore wikis' adaptability to dynamic environments requiring ongoing updates and collective input. As of 2025, the proliferation of wiki platforms illustrates their broad adoption, with services like Fandom hosting over 385,000 open wikis that span diverse topics and user bases.[111]

Trust, Security, and Reliability

Access Controls

Access controls in wikis, particularly those powered by MediaWiki, manage user permissions to view, edit, and administer content through a tiered system of groups and rights. Anonymous users, represented by the '*' group, have limited permissions such as reading pages, creating accounts, and basic editing, but are barred from actions such as moving pages or uploading files to prevent abuse.[112] Registered users in the 'user' group gain expanded rights upon logging in, including editing established pages, moving pages, uploading files, and sending email, which enables broader participation while keeping contributions accountable to an account.[112] Autoconfirmed users are promoted automatically once their account meets age and edit-count thresholds set by $wgAutoConfirmAge and $wgAutoConfirmCount (both 0 in MediaWiki's default configuration; English Wikipedia uses four days and 10 edits); they gain additional privileges such as editing semi-protected pages, which helps curb vandalism from new accounts without overly restricting experienced contributors.[112] Sysops, or administrators in the 'sysop' group, hold elevated rights including blocking users, protecting pages, deleting content, and importing data, and are assigned manually by bureaucrats to ensure trusted oversight.[112] These groups are configurable through the $wgGroupPermissions array in LocalSettings.php, allowing site administrators to tailor rights to their needs.[112]
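The group model above can be sketched in Python as a set of group-to-rights mappings whose grants are combined. This is a simplified model, not MediaWiki's code: the right names follow MediaWiki's conventions, but the helper functions are hypothetical, and the autoconfirm thresholds are assumed to match English Wikipedia (four days and 10 edits).

```python
from datetime import datetime, timedelta, timezone

# Simplified, hypothetical mirror of a few $wgGroupPermissions entries.
# Real installations define many more groups and rights.
GROUP_PERMISSIONS = {
    "*": {"read": True, "edit": True, "createaccount": True},
    "user": {"move": True, "upload": True, "sendemail": True},
    "autoconfirmed": {"editsemiprotected": True},
    "sysop": {"block": True, "protect": True, "delete": True, "import": True},
}

# Assumed thresholds matching English Wikipedia; MediaWiki's own defaults
# ($wgAutoConfirmAge, $wgAutoConfirmCount) are both 0.
AUTOCONFIRM_AGE = timedelta(days=4)
AUTOCONFIRM_COUNT = 10


def implicit_groups(registered_at, edit_count, now):
    """Groups a user belongs to automatically; pass registered_at=None for anonymous users."""
    groups = {"*"}
    if registered_at is None:
        return groups
    groups.add("user")
    if now - registered_at >= AUTOCONFIRM_AGE and edit_count >= AUTOCONFIRM_COUNT:
        groups.add("autoconfirmed")
    return groups


def effective_rights(groups):
    """Union of every right granted (set to True) by any of the user's groups."""
    rights = set()
    for group in groups:
        for right, granted in GROUP_PERMISSIONS.get(group, {}).items():
            if granted:
                rights.add(right)
    return rights


if __name__ == "__main__":
    now = datetime(2025, 1, 10, tzinfo=timezone.utc)
    newcomer = implicit_groups(datetime(2025, 1, 8, tzinfo=timezone.utc), 25, now)
    print(sorted(newcomer))                                  # ['*', 'user']
    veteran = implicit_groups(datetime(2024, 6, 1, tzinfo=timezone.utc), 500, now)
    print("editsemiprotected" in effective_rights(veteran))  # True
    # Sysop membership is assigned explicitly (by a bureaucrat), not implicitly.
    print("block" in effective_rights(veteran | {"sysop"}))  # True
```

Because rights are combined as a union, setting a right to false for one group does not remove it if another group grants it; MediaWiki provides a separate $wgRevokePermissions setting for explicit revocation.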
Page protection allows sysops to lock pages against editing: semi-protection prevents anonymous and new users from modifying content, permitting only autoconfirmed users to edit and thereby reducing low-effort vandalism on high-traffic articles, while full protection limits editing to sysops and is applied during edit wars or sensitive updates to maintain stability.[113] IP blocks, applied by sysops through Special:Block, target specific IP addresses or ranges (in CIDR notation, limited to /16 or narrower for IPv4 by default) to prevent editing, account creation, and other actions from disruptive sources; partial blocks can instead restrict access to specific namespaces or pages.[114] Rate limiting complements these measures by capping actions such as edits or uploads per user group and time period, configurable through $wgRateLimits; for instance, new users may be limited to 8 edits per 60 seconds.

Authentication mechanisms strengthen security in enterprise deployments by integrating external systems. MediaWiki supports LDAP authentication through extensions such as LDAPAuthentication2, which connects to directory services for single sign-on and maps group memberships to wiki rights for corporate access control.[115] The OAuth extension enables secure delegation of access to third-party applications, supporting both OAuth 1.0a and OAuth 2.0 for controlled API interactions without sharing credentials.[116] Two-factor authentication, implemented by the OATHAuth extension, requires a time-based one-time password in addition to the regular password and can be made optional or mandatory to protect accounts from unauthorized logins.[117]

Wikis can be configured as open or closed, balancing accessibility with control.
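The range-block limit and per-user rate limit described above can be modeled with Python's standard `ipaddress` module and a sliding-window counter. The constants mirror the /16 and 8-edits-per-60-seconds figures from the text; the function and class names are hypothetical, and the sliding-window log used here is a common rate-limiting technique that may count differently from MediaWiki's own limiter.

```python
import ipaddress
import time
from collections import defaultdict, deque
from typing import Optional

# Assumed values taken from the text: IPv4 range blocks no wider than /16,
# and new accounts limited to 8 edits per 60 seconds.
IPV4_CIDR_LIMIT = 16
NEWBIE_EDIT_LIMIT = (8, 60)  # (max actions, window in seconds)


def validate_range_block(cidr: str):
    """Parse a range block, rejecting IPv4 ranges wider than the prefix limit."""
    net = ipaddress.ip_network(cidr, strict=False)
    if net.version == 4 and net.prefixlen < IPV4_CIDR_LIMIT:
        raise ValueError(f"{cidr} is wider than /{IPV4_CIDR_LIMIT}")
    return net


def is_blocked(ip: str, blocks) -> bool:
    """True if the address falls inside any blocked network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in blocks)


class SlidingWindowLimiter:
    """Allow at most `limit` actions per `window` seconds for each key."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self.events = defaultdict(deque)  # key -> timestamps of allowed actions

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        recent = self.events[key]
        # Discard timestamps that have aged out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False  # over the limit; rejected actions are not recorded
        recent.append(now)
        return True


if __name__ == "__main__":
    blocks = [validate_range_block("203.0.113.0/24")]
    print(is_blocked("203.0.113.45", blocks))  # True
    print(is_blocked("198.51.100.1", blocks))  # False

    limiter = SlidingWindowLimiter(*NEWBIE_EDIT_LIMIT)
    burst = [limiter.allow("NewUser", now=t) for t in range(10)]
    print(burst.count(True))                   # 8
    print(limiter.allow("NewUser", now=61))    # True
```

With these settings, a burst of ten edits in ten seconds has its last two rejected, and capacity returns gradually as earlier edits age out of the 60-second window.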
Open wikis permit anonymous reading and editing by default, fostering broad collaboration and knowledge growth but exposing content to vandalism and spam, as seen in public installations like Wikipedia.[118] Closed wikis, achieved by disabling anonymous rights (e.g., $wgGroupPermissions['*']['read'] = false;), restrict access to registered users, enhancing privacy and quality control for internal or proprietary use but potentially reducing external contributions and requiring more administrative effort for user management.[118] The trade-off favors open models for community-driven projects emphasizing inclusivity, while closed setups suit organizations prioritizing security over scale.[118]

Content Moderation and Security