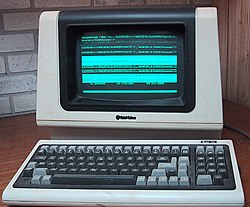

Computer terminal

A computer terminal is an electronic or electromechanical hardware device that can be used for entering data into, and transcribing data from, a computer or a computing system. Most early computers only had a front panel to input or display bits and had to be connected to a terminal to print or input text through a keyboard. Teleprinters were used as early-day hard-copy terminals[1][2] and predated the use of a computer screen[1] by decades. The computer would typically transmit a line of data which would be printed on paper, and accept a line of data from a keyboard over a serial or other interface. Starting in the mid-1970s with microcomputers such as the Sphere 1, Sol-20, and Apple I, display circuitry and keyboards began to be integrated into personal and workstation computer systems, with the computer handling character generation and outputting to a CRT display such as a computer monitor or, sometimes, a consumer TV. Most larger computers, however, continued to require terminals.

Early terminals were inexpensive devices but very slow compared to punched cards or paper tape for input; with the advent of time-sharing systems, terminals slowly pushed these older forms of interaction from the industry. Related developments were the improvement of terminal technology and the introduction of inexpensive video displays. Early Teletypes printed at a communications speed of only 75 baud, or 10 5-bit characters per second; by the 1970s speeds of video terminals had improved to 2400 bit/s or 9600 bit/s. Similarly, the speed of remote batch terminals had improved to 4800 bit/s at the beginning of the decade and 19.6 kbps by the end of the decade, with higher speeds possible on more expensive terminals.
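The relationship between 75 baud and 10 characters per second follows from the framing of each character on an asynchronous line. The sketch below is illustrative only: it assumes one start bit and 1.5 stop bits around each 5-bit character (a framing commonly used by teleprinters, but not stated in this article), and the function name `chars_per_second` is made up for the example.

```python
def chars_per_second(baud: float, data_bits: int,
                     start_bits: float = 1.0, stop_bits: float = 1.5) -> float:
    """Character rate of an asynchronous serial line.

    Assumes one signalling element per bit, so baud equals bit times per second.
    The default framing (1 start bit, 1.5 stop bits) is an assumption.
    """
    bits_per_char = start_bits + data_bits + stop_bits
    return baud / bits_per_char


# 75 baud with a 5-bit code: 1 + 5 + 1.5 = 7.5 bit times per character.
print(chars_per_second(75, 5))

# A video terminal at 9600 bit/s with 8 data bits, 1 start and 1 stop bit.
print(chars_per_second(9600, 8, stop_bits=1.0))
```

With a different framing the character rate changes accordingly, which is why line speed in baud and throughput in characters per second are not interchangeable figures.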

The function of a terminal is typically confined to transcription and input of data; a device with significant local, programmable data-processing capability may be called a "smart terminal" or fat client. A terminal that depends on the host computer for its processing power is called a "dumb terminal"[3] or a thin client.[4][5] In the era of serial (RS-232) terminals there was a conflicting usage of the term "smart terminal" as a dumb terminal with no user-accessible local computing power but a particularly rich set of control codes for manipulating the display; this conflict was not resolved before hardware serial terminals became obsolete.

The use of terminals decreased over time as computing shifted from command line interface (CLI) to graphical user interface (GUI) and from time-sharing on large computers to personal computers and handheld devices. Today, users generally interact with a server over high-speed networks using a Web browser and other network-enabled GUI applications, while a terminal emulator application provides the capabilities of a physical terminal, allowing interaction with the operating system shell and other CLI applications.

History

The console of Konrad Zuse's Z3 had a keyboard in 1941, as did the Z4 in 1942–1945. However, these consoles could only be used to enter numeric inputs and were thus analogous to those of calculating machines; programs, commands, and other data were entered via paper tape. Both machines had a row of display lamps for results.

In 1956, the Whirlwind Mark I computer became the first computer equipped with a keyboard-printer combination with which to support direct input[2] of data and commands and output of results. That device was a Friden Flexowriter, which would continue to serve this purpose on many other early computers well into the 1960s.

Categories

Hard-copy terminals

Early user terminals connected to computers were, like the Flexowriter, electromechanical teleprinters/teletypewriters (TeleTYpewriter, TTY), such as the Teletype Model 33, originally used for telegraphy; early Teletypes were typically configured as Keyboard Send-Receive (KSR) or Automatic Send-Receive (ASR). Some terminals, such as the ASR Teletype models, included a paper tape reader and punch which could record output such as a program listing. The data on the tape could be re-entered into the computer using the tape reader on the teletype, or printed to paper. Teletypes used the current loop interface that was already used in telegraphy. A less expensive Read Only (RO) configuration was available for the Teletype.

Custom-designed keyboard/printer terminals that came later included the IBM 2741 (1965)[6] and the DECwriter (1970).[7] Respective top speeds of teletypes, the IBM 2741 and the LA30 (an early DECwriter) were 10, 15 and 30 characters per second. Although at that time "paper was king",[7][8] the speed of interaction was relatively limited.

The DECwriter was the last major printing-terminal product. It faded away after 1980 under pressure from video display units (VDUs), with the last revision (the DECwriter IV of 1982) abandoning the classic teletypewriter form for one more resembling a desktop printer.

Printing terminals required that the print mechanism be away from the paper after a pause in the print flow, to allow an interactively typing user to see what they had just typed and make corrections, or to read a prompt string. As a dot-matrix printer, the DECwriter family would move the print head sideways after each pause, returning to the last print position when the next character came from the remote computer (or local echo).

Video display unit

A video display unit (VDU) displays information on a screen rather than printing text to paper and typically uses a cathode-ray tube (CRT). VDUs in the 1950s were typically designed for displaying graphical data rather than text and were used in, e.g., experimental computers at institutions such as MIT; computers used in academia, government and business, sold under brand names such as DEC, ERA, IBM and UNIVAC; and military computers supporting specific defence applications such as ballistic missile warning systems and radar/air defence coordination systems such as BUIC and SAGE.

Two early landmarks in the development of the VDU were the Univac Uniscope[9][10][11] and the IBM 2260,[12] both in 1964. These were block-mode terminals designed to display a page at a time, using proprietary protocols; in contrast to character-mode devices, they enter data from the keyboard into a display buffer rather than transmitting them immediately. In contrast to later character-mode devices, the Uniscope used synchronous serial communication over an EIA RS-232 interface to communicate between the multiplexer and the host, while the 2260 used either a channel connection or asynchronous serial communication between the 2848 and the host. The 2265, related to the 2260, also used asynchronous serial communication.

The Datapoint 3300 from Computer Terminal Corporation, announced in 1967 and shipped in 1969, was a character-mode device that emulated a Model 33 Teletype. This reflects the fact that early character-mode terminals were often deployed to replace teletype machines as a way to reduce operating costs.

The next generation of VDUs went beyond teletype emulation with an addressable cursor that gave them the ability to paint two-dimensional displays on the screen. Very early VDUs with cursor addressability included the VT05 and the Hazeltine 2000 operating in character mode, both from 1970. Despite this capability, early devices of this type were often called "Glass TTYs".[13] Later, the term "glass TTY" tended to be retrospectively narrowed to devices without full cursor addressability.

The classic era of the VDU began in the early 1970s and was closely intertwined with the rise of time sharing computers. Important early products were the ADM-3A, VT52, and VT100. These devices used no complicated CPU, instead relying on individual logic gates, LSI chips, or microprocessors such as the Intel 8080. This made them inexpensive and they quickly became extremely popular input-output devices on many types of computer system, often replacing earlier and more expensive printing terminals.

After 1970 several suppliers gravitated to a set of common standards:

- ASCII character set (rather than, say, EBCDIC or anything specific to one company), but early/economy models often supported only capital letters (such as the original ADM-3, the Data General model 6052 – which could be upgraded to a 6053 with a lower-case character ROM – and the Heathkit H9)

- RS-232 serial ports (25-pin, ready to connect to a modem, yet some manufacturer-specific pin usage extended the standard, e.g. for use with 20-mA current loops)

- 24 lines (or possibly 25 – sometimes a special status line) of 72 or 80 characters of text (80 was the same as IBM punched cards). Later models sometimes had two character-width settings.

- Some type of cursor that can be positioned (with arrow keys or "home" and other direct cursor address setting codes).

- Implementation of at least 3 control codes: Carriage Return (Ctrl-M), Line-Feed (Ctrl-J), and Bell (Ctrl-G), but usually many more, such as escape sequences to provide underlining, dim or reverse-video character highlighting, and especially to clear the display and position the cursor.
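The Ctrl-letter names of the three control codes in the last item map directly onto ASCII values: holding Ctrl clears the upper bits of the letter's code. A minimal sketch (the helper name `ctrl` is made up for this example):

```python
def ctrl(letter: str) -> int:
    """Return the ASCII code generated by Ctrl plus the given letter.

    Ctrl keeps only the low five bits of the letter's ASCII code.
    """
    return ord(letter.upper()) & 0x1F


CONTROL_CODES = {
    "Carriage Return": ctrl("M"),
    "Line-Feed": ctrl("J"),
    "Bell": ctrl("G"),
}

for name, code in CONTROL_CODES.items():
    print(f"{name}: decimal {code}, hex 0x{code:02X}")
```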

The experimental era of serial VDUs culminated with the VT100 in 1978. By the early 1980s, there were dozens of manufacturers of terminals, including Lear-Siegler, ADDS, Data General, DEC, Hazeltine Corporation, Heath/Zenith, Hewlett-Packard, IBM, TeleVideo, Volker-Craig, and Wyse, many of which had incompatible command sequences (although many used the early ADM-3 as a starting point).

The great variations in the control codes between makers gave rise to software that identified and grouped terminal types so the system software would correctly display input forms using the appropriate control codes. In Unix-like systems the termcap or terminfo files, the stty utility, and the TERM environment variable would be used. In Data General's Business BASIC software, for example, a sequence of codes was sent to the terminal at login time to try to read the cursor's position or the 25th line's contents, using the control code sequences of different manufacturers in turn; the terminal-generated response would determine a single-digit number (such as 6 for Data General Dasher terminals, 4 for ADM 3A/5/11/12 terminals, 0 or 2 for TTYs with no special features) that would be available to programs to say which set of codes to use.
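The idea of selecting control codes by terminal type can be sketched with a small hand-written table. This is not how termcap or terminfo are actually stored or queried; the table, its keys, and the function names are hypothetical, and only three well-known terminals are covered. The lookup falls back to a "dumb" entry when the TERM environment variable is unset or names an unknown type.

```python
import os

ESC = "\x1b"

# Hypothetical capability table; real systems read termcap or terminfo instead.
# Rows and columns passed to the "cursor" functions are zero-based.
CAPABILITIES = {
    "vt100": {
        "clear": ESC + "[H" + ESC + "[2J",
        "cursor": lambda row, col: f"{ESC}[{row + 1};{col + 1}H",
    },
    "vt52": {
        "clear": ESC + "H" + ESC + "J",
        "cursor": lambda row, col: ESC + "Y" + chr(32 + row) + chr(32 + col),
    },
    "adm3a": {
        "clear": "\x1a",  # Ctrl-Z
        "cursor": lambda row, col: ESC + "=" + chr(32 + row) + chr(32 + col),
    },
    "dumb": {"clear": "", "cursor": None},
}


def capabilities_for(term=None):
    """Look up a terminal type, defaulting to $TERM and then to 'dumb'."""
    name = term if term is not None else os.environ.get("TERM", "dumb")
    return CAPABILITIES.get(name, CAPABILITIES["dumb"])


def move_cursor(term, row, col):
    """Return the byte sequence that positions the cursor on this terminal type."""
    cursor = capabilities_for(term)["cursor"]
    if cursor is None:
        raise ValueError(f"terminal type {term!r} has no addressable cursor")
    return cursor(row, col)


for term in ("vt100", "vt52", "adm3a"):
    print(term, repr(move_cursor(term, 4, 9)))
```

The same logical operation produces three different byte sequences, which is the incompatibility that terminal description databases were created to hide from application programs.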

The great majority of terminals were monochrome, with manufacturers variously offering green, white or amber and sometimes blue screen phosphors. (Amber was claimed to reduce eye strain.) Terminals with modest color capability were also available but not widely used; for example, a color version of the popular Wyse WY50, the WY350, offered 64 shades on each character cell.

VDUs were eventually displaced from most applications by networked personal computers, at first slowly after 1985 and with increasing speed in the 1990s. However, they had a lasting influence on PCs. The keyboard layout of the VT220 terminal strongly influenced the Model M shipped on IBM PCs from 1985, and through it all later computer keyboards.

Although flat-panel displays were available since the 1950s, cathode-ray tubes continued to dominate the market until the personal computer had made serious inroads into the display terminal market. By the time cathode-ray tubes on PCs were replaced by flatscreens after the year 2000, the hardware computer terminal was nearly obsolete.

Character-oriented terminals

A character-oriented terminal is a type of computer terminal that communicates with its host one character at a time, as opposed to a block-oriented terminal that communicates in blocks of data. It is the most common type of data terminal, because it is easy to implement and program. Connection to the mainframe computer or terminal server is achieved via RS-232 serial links, Ethernet, or proprietary protocols.

Character-oriented terminals can be "dumb" or "smart". Dumb terminals[3] are those that can interpret a limited number of control codes (CR, LF, etc.) but do not have the ability to process special escape sequences that perform functions such as clearing a line, clearing the screen, or controlling cursor position. In this context dumb terminals are sometimes dubbed glass Teletypes, for they essentially have the same limited functionality as does a mechanical Teletype. This type of dumb terminal is still supported on modern Unix-like systems by setting the environment variable TERM to dumb. Smart or intelligent terminals are those that also have the ability to process escape sequences, in particular the VT52, VT100 or ANSI escape sequences.

Text terminals

A text terminal, or often just terminal (sometimes text console) is a serial computer interface for text entry and display. Information is presented as an array of pre-selected formed characters. When such devices use a video display such as a cathode-ray tube, they are called a "video display unit" or "visual display unit" (VDU) or "video display terminal" (VDT).

The system console is often[14] a text terminal used to operate a computer. Modern computers have a built-in keyboard and display for the console. Some Unix-like operating systems such as Linux and FreeBSD have virtual consoles to provide several text terminals on a single computer.

The fundamental type of application running on a text terminal is a command-line interpreter or shell, which prompts for commands from the user and executes each command after a press of Return.[15] This includes Unix shells and some interactive programming environments. In a shell, most of the commands are small applications themselves.

Another important application type is that of the text editor. A text editor typically occupies the full area of display, displays one or more text documents, and allows the user to edit the documents. The text editor has, for many uses, been replaced by the word processor, which usually provides rich formatting features that the text editor lacks. The first word processors used text to communicate the structure of the document, but later word processors operate in a graphical environment and provide a WYSIWYG simulation of the formatted output. However, text editors are still used for documents containing markup such as DocBook or LaTeX.

Programs such as Telix and Minicom control a modem and the local terminal to let the user interact with remote servers. On the Internet, telnet and ssh work similarly.

In the simplest form, a text terminal is like a file. Writing to the file displays the text and reading from the file produces what the user enters. In Unix-like operating systems, there are several character special files that correspond to available text terminals. For other operations, there are special escape sequences, control characters and termios functions that a program can use, most easily via a library such as ncurses. For more complex operations, the programs can use terminal-specific ioctl system calls.

For an application, the simplest way to use a terminal is to write and read text strings to and from it sequentially. The output text is scrolled, so that only the last several lines (typically 24) are visible. Unix systems typically buffer the input text until the Enter key is pressed, so the application receives a ready string of text. In this mode, the application need not know much about the terminal. For many interactive applications this is not sufficient. One of the common enhancements is command-line editing (assisted by libraries such as readline), which may also give access to command history. This is very helpful for various interactive command-line interpreters.
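The line buffering described above can be illustrated with a toy model. This is a simplified sketch, not the actual terminal driver of any Unix system: the class name `LineBuffer` and its methods are invented for the example, and only Enter and a single erase character are handled.

```python
class LineBuffer:
    """Toy model of line-buffered terminal input.

    Typed characters accumulate until Enter arrives; an erase character
    removes the last pending character. The application only ever sees
    completed lines.
    """

    ERASE = {"\x08", "\x7f"}  # backspace and delete

    def __init__(self):
        self._pending = []  # characters typed since the last Enter
        self._ready = []    # completed lines waiting to be read

    def feed(self, chars: str) -> None:
        """Accept characters as if they had been typed on the keyboard."""
        for ch in chars:
            if ch in self.ERASE:
                if self._pending:
                    self._pending.pop()
            elif ch in ("\r", "\n"):  # Enter may arrive as CR or LF
                self._ready.append("".join(self._pending) + "\n")
                self._pending.clear()
            else:
                self._pending.append(ch)

    def readline(self):
        """Return the next completed line, or None if Enter was not pressed yet."""
        return self._ready.pop(0) if self._ready else None


tty = LineBuffer()
tty.feed("lz\x7fs -l")        # the user types "lz", erases the "z", continues
print(tty.readline())         # no complete line is available yet
tty.feed("\n")
print(repr(tty.readline()))   # the corrected line is delivered in one piece
```

Because the correction happens before the line is delivered, the reading program never sees the mistyped character, which is why simple programs need to know so little about the terminal in this mode.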

Even more advanced interactivity is provided with full-screen applications. Those applications completely control the screen layout; also they respond to key-pressing immediately. This mode is very useful for text editors, file managers and web browsers. In addition, such programs control the color and brightness of text on the screen, and decorate it with underline, blinking and special characters (e.g. box-drawing characters). To achieve all this, the application must deal not only with plain text strings, but also with control characters and escape sequences, which allow moving the cursor to an arbitrary position, clearing portions of the screen, changing colors and displaying special characters, and also responding to function keys. The great problem here is that there are many different terminals and terminal emulators, each with its own set of escape sequences. In order to overcome this, special libraries (such as curses) have been created, together with terminal description databases, such as Termcap and Terminfo.
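As an illustration of the escape sequences a full-screen application has to emit, the sketch below builds a few ANSI/VT100-style sequences by hand. It assumes an ANSI-compatible terminal or emulator; the helper names are invented for the example, and a real program would normally obtain these sequences through curses and the terminal description database rather than hard-coding them.

```python
ESC = "\x1b"
CSI = ESC + "["  # Control Sequence Introducer used by ANSI-style terminals

# A few Select Graphic Rendition attribute codes.
UNDERLINE, BLINK, REVERSE = 4, 5, 7


def clear_screen() -> str:
    """Sequence that erases the whole display."""
    return CSI + "2J"


def move_to(row: int, col: int) -> str:
    """Sequence that moves the cursor; rows and columns are 1-based."""
    return f"{CSI}{row};{col}H"


def styled(text: str, *codes: int) -> str:
    """Wrap text in attribute codes and reset the attributes afterwards."""
    attrs = ";".join(str(code) for code in codes)
    return f"{CSI}{attrs}m{text}{CSI}0m"


def banner(title: str, row: int = 1, col: int = 1) -> str:
    """Clear the screen and draw a reverse-video title at the given position."""
    return clear_screen() + move_to(row, col) + styled(title, REVERSE)


# repr() shows the raw bytes instead of letting the terminal interpret them.
print(repr(banner("File Manager", row=1, col=30)))
```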

Block-oriented terminals

A block-oriented terminal or block mode terminal is a type of computer terminal that communicates with its host in blocks of data, as opposed to a character-oriented terminal that communicates with its host one character at a time. A block-oriented terminal may be card-oriented, display-oriented, keyboard-display, keyboard-printer, printer or some combination.

The IBM 3270 is perhaps the most familiar implementation of a block-oriented display terminal,[16] but most mainframe computer manufacturers and several other companies produced them. The description below is in terms of the 3270, but similar considerations apply to other types.

Block-oriented terminals typically incorporate a buffer which stores one screen or more of data, and also stores data attributes, not only indicating appearance (color, brightness, blinking, etc.) but also marking the data as being enterable by the terminal operator vs. protected against entry, as allowing the entry of only numeric information vs. allowing any characters, etc. In a typical application the host sends the terminal a preformatted panel containing both static data and fields into which data may be entered. The terminal operator keys data, such as updates in a database entry, into the appropriate fields. When entry is complete (or ENTER or PF key pressed on 3270s), a block of data, usually just the data entered by the operator (modified data), is sent to the host in one transmission. The 3270 terminal buffer (at the device) could be updated on a single character basis, if necessary, because of the existence of a "set buffer address order" (SBA), that usually preceded any data to be written/overwritten within the buffer. A complete buffer could also be read or replaced using the READ BUFFER command or WRITE command (unformatted or formatted in the case of the 3270).
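The interaction described above (a formatted panel with protected and enterable fields, local entry, and transmission of only the modified data) can be modelled in a few lines. This is a conceptual sketch only: it does not implement the 3270 data stream, and the `Field` and `BlockScreen` names, attributes, and methods are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Field:
    name: str
    value: str = ""
    protected: bool = False  # static text the operator may not change
    numeric: bool = False    # only digits are accepted
    modified: bool = False   # set when the operator keys data into the field


class BlockScreen:
    """Toy model of a block-mode terminal buffer holding one formatted panel."""

    def __init__(self, fields):
        self.fields = {field.name: field for field in fields}

    def key_in(self, name: str, text: str) -> None:
        """Handle operator entry locally, without contacting the host."""
        field = self.fields[name]
        if field.protected:
            raise PermissionError(f"field {name!r} is protected")
        if field.numeric and not text.isdigit():
            raise ValueError(f"field {name!r} accepts only digits")
        field.value = text
        field.modified = True

    def press_enter(self) -> dict:
        """Return the single block sent to the host: modified fields only."""
        block = {f.name: f.value for f in self.fields.values() if f.modified}
        for field in self.fields.values():
            field.modified = False
        return block


screen = BlockScreen([
    Field("label", "Quantity:", protected=True),
    Field("qty", numeric=True),
    Field("note"),
])
screen.key_in("qty", "42")
print(screen.press_enter())
```

In this model the host is involved only when `press_enter` is called, which mirrors why block-mode operation generates one transmission per completed panel rather than one per keystroke.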

Block-oriented terminals cause less system load on the host and less network traffic than character-oriented terminals. They also appear more responsive to the user, especially over slow connections, since editing within a field is done locally rather than depending on echoing from the host system.

Early terminals had limited editing capabilities – 3270 terminals, for example, could only check entries as valid numerics.[17] Subsequent "smart" or "intelligent" terminals incorporated microprocessors and supported more local processing.

Programmers of block-oriented terminals often used the technique of storing context information for the transaction in progress on the screen, possibly in a hidden field, rather than depending on a running program to keep track of status. This was the precursor of the HTML technique of storing context in the URL as data to be passed as arguments to a CGI program.

Unlike a character-oriented terminal, where typing a character into the last position of the screen usually causes the terminal to scroll down one line, entering data into the last screen position on a block-oriented terminal usually causes the cursor to wrap, that is, to move to the start of the first enterable field. Programmers might "protect" the last screen position to prevent inadvertent wrap. Likewise a protected field following an enterable field might lock the keyboard and sound an audible alarm if the operator attempted to enter more data into the field than allowed.

Common block-oriented terminals

- Hard-copy

- Remote job entry

- Display

- IBM 2260

- IBM 3270

- IBM 5250

- Burroughs Corporation TD-830

- AT&T Dataspeed 40 (3270 clone manufactured by Teletype Corporation)

- TeleVideo 912/920/925/950[18]

- Tandem Computers VT6530

- Hewlett-Packard VT2640[19]

- UNIVAC Uniscope series

- Digital Equipment Corporation VT61, VT62

- Lear Siegler ADM31[20] (optional)

- Honeywell VIP 7700/7760

- ITT Corporation Courier line

- Bull Questar

- ICL 7500 series

Graphical terminals

A graphical terminal can display images as well as text. Graphical terminals[21] are divided into vector-mode and raster-mode terminals.

A vector-mode display directly draws lines on the face of a cathode-ray tube under control of the host computer system. The lines are continuously formed, but since the speed of electronics is limited, the number of concurrent lines that can be displayed at one time is limited. Vector-mode displays were historically important but are no longer used. Practically all modern graphic displays are raster-mode, descended from the picture scanning techniques used for television, in which the visual elements are a rectangular array of pixels. Since the raster image is only perceptible to the human eye as a whole for a very short time, the raster must be refreshed many times per second to give the appearance of a persistent display. The electronic demands of refreshing display memory meant that graphic terminals were developed much later than text terminals, and initially cost much more.[22][23]

Most terminals today are graphical; that is, they can show images on the screen. The modern term for graphical terminal is "thin client".[citation needed] A thin client typically uses a protocol such as X11 for Unix terminals, or RDP for Microsoft Windows. The bandwidth needed depends on the protocol used, the resolution, and the color depth.

Modern graphic terminals allow display of images in color, and of text in varying sizes, colors, and fonts (type faces).

In the early 1990s, an industry consortium attempted to define a standard, AlphaWindows, that would allow a single CRT screen to implement multiple windows, each of which was to behave as a distinct terminal. Unfortunately, like I2O, this suffered from being run as a closed standard: non-members were unable to obtain even minimal information and there was no realistic way a small company or independent developer could join the consortium.[citation needed]

Intelligent terminals

An intelligent terminal[24] does its own processing, which usually implies that a microprocessor is built in. Not all terminals with microprocessors did any real processing of input, however: in those cases the main computer to which the terminal was attached had to respond quickly to each keystroke. The term "intelligent" in this context dates from 1969.[25]

Notable examples include the IBM 2250, predecessor to the IBM 3250 and IBM 5080, and IBM 2260,[26] predecessor to the IBM 3270, introduced with System/360 in 1964.

Most terminals were connected to minicomputers or mainframe computers and often had a green or amber screen. Typically terminals communicate with the computer through a serial port and a null modem cable, often using an EIA RS-232, RS-422, RS-423 or current loop serial interface. IBM systems typically communicated over a Bus and Tag channel, a coaxial cable using a proprietary protocol, or a communications link using Binary Synchronous Communications or IBM's SNA protocol, but for many DEC, Data General and NCR (and so on) computers there were many visual display suppliers competing against the computer manufacturer for terminals to expand the systems. In fact, the instruction design for the Intel 8008 was originally conceived at Computer Terminal Corporation as the processor for the Datapoint 2200.

From the introduction of the IBM 3270, and the DEC VT100 (1978), the user and programmer could notice significant advantages in VDU technology improvements, yet not all programmers used the features of the new terminals (backward compatibility in the VT100 and later TeleVideo terminals, for example, with "dumb terminals" allowed programmers to continue to use older software).

Some dumb terminals had been able to respond to a few escape sequences without needing microprocessors: they used multiple printed circuit boards with many integrated circuits. The single factor that classed a terminal as "intelligent" was its ability to process user input within the terminal, without interrupting the main computer at each keystroke, and to send a block of data at a time (for example, when the user has finished a whole field or form). Most terminals in the early 1980s, such as the ADM-3A, TVI912, Data General D2 and DEC VT52, were essentially "dumb" terminals despite the introduction of ANSI terminals in 1978, although some of them (such as the later ADM and TVI models) did have a primitive block-send capability. Common early uses of local processing power had little to do with off-loading data processing from the host computer but added useful features such as printing to a local printer, buffered serial data transmission and serial handshaking (to accommodate higher serial transfer speeds), more sophisticated character attributes for the display, and the ability to switch emulation modes to mimic competitors' models. These became increasingly important selling features during the 1980s, when buyers could mix and match different suppliers' equipment to a greater extent than before.

The advance in microprocessors and lower memory costs made it possible for the terminal to handle editing operations, such as inserting characters within a field, that may previously have required a full screen of characters to be re-sent from the computer, possibly over a slow modem line. Around the mid-1980s most intelligent terminals, costing less than most dumb terminals would have a few years earlier, could provide enough user-friendly local editing of data and send the completed form to the main computer. Providing even more processing possibilities, workstations such as the TeleVideo TS-800 could run CP/M-86, blurring the distinction between terminal and personal computer.

Another of the motivations for development of the microprocessor was to simplify and reduce the electronics required in a terminal. That also made it practicable to load several "personalities" into a single terminal, so a Qume QVT-102 could emulate many popular terminals of the day, and so be sold into organizations that did not wish to make any software changes. Frequently emulated terminal types included:

- Lear Siegler ADM-3A and later models

- TeleVideo 910 to 950 (these models copied ADM3 codes and added several of their own, eventually being copied by Qume and others)

- Digital Equipment Corporation VT52 and VT100

- Data General D1 to D3 and especially D200 and D210

- Hazeltine Corporation H1500

- Tektronix 4014

- Wyse W50, W60 and W99

The ANSI X3.64 escape code standard produced uniformity to some extent, but significant differences remained. For example, the VT100, Heathkit H19 in ANSI mode, Televideo 970, Data General D460, and Qume QVT-108 terminals all followed the ANSI standard, yet differences might exist in codes from function keys, what character attributes were available, block-sending of fields within forms, "foreign" character facilities, and handling of printers connected to the back of the screen.

In the 21st century, the term Intelligent Terminal can now refer to a retail Point of Sale computer.[27]

Contemporary

Even though the early IBM PC looked somewhat like a terminal with a green monochrome monitor, it is not classified as a terminal since it provides local computing instead of interacting with a server at a character level. With terminal emulator software, a PC can, however, provide the function of a terminal to interact with a mainframe or minicomputer. Eventually, personal computers greatly reduced market demand for conventional terminals.

In and around the 1990s, thin client and X terminal technology combined the relatively economical local processing power with central, shared computer facilities to leverage advantages of terminals over personal computers.

In a GUI environment, such as the X Window System, the display can show multiple programs – each in its own window – rather than a single stream of text associated with a single program. As a terminal emulator runs in a GUI environment to provide command-line access, it alleviates the need for a physical terminal and allows for multiple windows running separate emulators.

System console

One meaning of system console, computer console, root console, operator's console, or simply console is the text entry and display device for system administration messages, particularly those from the BIOS or boot loader, the kernel, from the init system and from the system logger. It is a physical device consisting of a keyboard and a printer or screen, and traditionally is a text terminal, but may also be a graphical terminal.

Another, older, meaning of system console, computer console, hardware console, operator's console or simply console is a hardware component used by an operator to control the hardware, typically some combination of front panel, keyboard/printer and keyboard/display.

History

Prior to the development of alphanumeric CRT system consoles, some computers such as the IBM 1620 had console typewriters and front panels while the very first electronic stored-program computer, the Manchester Baby, used a combination of electromechanical switches and a CRT to provide console functions—the CRT displaying memory contents in binary by mirroring the machine's Williams-Kilburn tube CRT-based RAM.

Some early operating systems supported either a single keyboard/printer or keyboard/display device for controlling the OS. Some also supported a single alternate console, and some supported a hardcopy console for retaining a record of commands, responses and other console messages. However, in the late 1960s it became common for operating systems to support many more than three consoles, and operating systems began appearing in which the console was simply any terminal with a privileged user logged on.

On early minicomputers, the console was a serial console, an RS-232 serial link to a terminal such as an ASR-33 or, later, a terminal from Digital Equipment Corporation (DEC), e.g., DECwriter, VT100. This terminal was usually kept in a secured room since it could be used for certain privileged functions such as halting the system or selecting which media to boot from. Large midrange systems, e.g. those from Sun Microsystems, Hewlett-Packard and IBM,[citation needed] still use serial consoles. In larger installations, the console ports are attached to multiplexers or network-connected multiport serial servers that let an operator connect a terminal to any of the attached servers. Today, serial consoles are often used for accessing headless systems, usually with a terminal emulator running on a laptop. Also, routers, enterprise network switches and other telecommunication equipment have RS-232 serial console ports.

On PCs and workstations, the computer's attached keyboard and monitor have the equivalent function. Since the monitor cable carries video signals, it cannot be extended very far. Often, installations with many servers therefore use keyboard/video multiplexers (KVM switches) and possibly video amplifiers to centralize console access. In recent years, KVM/IP devices have become available that allow a remote computer to view the video output and send keyboard input via any TCP/IP network and therefore the Internet.

Some PC BIOSes, especially in servers, also support serial consoles, giving access to the BIOS through a serial port so that the simpler and cheaper serial console infrastructure can be used. Even where BIOS support is lacking, some operating systems, e.g. FreeBSD and Linux, can be configured for serial console operation either during bootup, or after startup.

Starting with the IBM 9672, IBM large systems have used a Hardware Management Console (HMC), consisting of a PC and a specialized application, instead of a 3270 or serial link. Other IBM product lines also use an HMC, e.g., System p.

It is usually possible to log in from the console. Depending on configuration, the operating system may treat a login session from the console as being more trustworthy than a login session from other sources.

Emulation

A terminal emulator is a piece of software that emulates a text terminal. In the past, before the widespread use of local area networks and broadband internet access, many computers would use a serial access program to communicate with other computers via telephone line or serial device.

When the first Macintosh was released, a program called MacTerminal[28] was used to communicate with many computers, including the IBM PC.

The Win32 console on Windows does not emulate a physical terminal that supports escape sequences[29][dubious – discuss] so SSH and Telnet programs (for logging in textually to remote computers) for Windows, including the Telnet program bundled with some versions of Windows, often incorporate their own code to process escape sequences.

The terminal emulators on most Unix-like systems—such as, for example, gnome-terminal, Konsole, QTerminal, xterm, and Terminal.app—do emulate physical terminals including support for escape sequences; e.g., xterm can emulate the VT220 and Tektronix 4010 hardware terminals.
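
As a rough illustration of what "code to process escape sequences" involves, the sketch below splits an incoming character stream into plain text and CSI control sequences. It assumes the ECMA-48 / ANSI X3.64 byte layout for CSI sequences; the names `CSI_PATTERN` and `split_terminal_stream` are hypothetical and not taken from any particular emulator, and a real emulator handles many more sequence types than this.

```python
import re

# CSI sequence layout (ECMA-48 / ANSI X3.64): ESC [ , then parameter bytes
# 0x30-0x3F, then intermediate bytes 0x20-0x2F, then one final byte 0x40-0x7E.
CSI_PATTERN = re.compile(r"\x1b\[([0-?]*)([ -/]*)([@-~])")


def split_terminal_stream(data):
    """Split a string into ('text', s) and ('csi', params, final) tokens."""
    tokens = []
    position = 0
    for match in CSI_PATTERN.finditer(data):
        # Anything between two control sequences is ordinary printable text.
        if match.start() > position:
            tokens.append(("text", data[position:match.start()]))
        params = [p for p in match.group(1).split(";") if p]
        tokens.append(("csi", params, match.group(3)))
        position = match.end()
    if position < len(data):
        tokens.append(("text", data[position:]))
    return tokens
```

An emulator built this way would then dispatch on the final byte (for example `J` to erase the display or `m` to change character attributes) and draw the text tokens at the current cursor position.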

Modes

Terminals can operate in various modes, relating to when they send input typed by the user on the keyboard to the receiving system (whatever that may be):

- Character mode (a.k.a. character-at-a-time mode): In this mode, typed input is unbuffered and sent immediately to the receiving system.[30]

- Line mode (a.k.a. line-at-a-time mode): In this mode, the terminal is buffered, provides a local line editing function, and sends an entire input line, after it has been locally edited, when the user presses a key such as ↵ Enter or EOB.[30] A so-called "line mode terminal" operates solely in this mode.[31]

- Block mode (a.k.a. screen-at-a-time mode): In this mode (also called block-oriented), the terminal is buffered and provides a local full-screen data function. The user can enter input into multiple fields in a form on the screen (defined to the terminal by the receiving system), moving the cursor around the screen using keys such as Tab ↹ and the arrow keys and performing editing functions locally using insert, delete, ← Backspace and so forth. The terminal sends only the completed form, consisting of all the data entered on the screen, to the receiving system when the user presses an ↵ Enter key.[32][33][30]
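
The difference between the three modes comes down to what the terminal transmits and when. The following is a minimal simulation, not a model of any real terminal: the function names, the key codes used for Enter, Tab and Backspace, and the representation of a form as a dictionary of named fields are all assumptions made for illustration.

```python
def character_mode(keys):
    """Unbuffered: every keystroke is its own transmission."""
    return list(keys)


def line_mode(keys, enter="\r", backspace="\b"):
    """Buffer and edit locally; transmit one whole line per Enter."""
    sent, line = [], []
    for key in keys:
        if key == enter:
            sent.append("".join(line))
            line = []
        elif key == backspace:
            if line:
                line.pop()          # local editing, invisible to the host
        else:
            line.append(key)
    return sent


def block_mode(keys, field_names, enter="\r", tab="\t", backspace="\b"):
    """Fill a multi-field form locally; transmit the whole form on Enter."""
    fields = {name: [] for name in field_names}
    current = 0
    sent = []
    for key in keys:
        name = field_names[current]
        if key == enter:
            sent.append({n: "".join(chars) for n, chars in fields.items()})
            fields = {n: [] for n in field_names}
            current = 0
        elif key == tab:
            current = (current + 1) % len(field_names)  # move to next field
        elif key == backspace:
            if fields[name]:
                fields[name].pop()
        else:
            fields[name].append(key)
    return sent
```

Typing `l`, `x`, Backspace, `s`, Enter produces five transmissions in character mode but a single transmission, `"ls"`, in line mode; in block mode the host sees nothing until the completed form is sent.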

There is a distinction between the return and the ↵ Enter keys. In some multiple-mode terminals that can switch between modes, pressing the ↵ Enter key when not in block mode does not do the same thing as pressing the return key. Whilst the return key will cause an input line to be sent to the host in line-at-a-time mode, the ↵ Enter key will rather cause the terminal to transmit the contents of the character row where the cursor is currently positioned to the host, host-issued prompts and all.[32] Some block-mode terminals have both an ↵ Enter key and local cursor-moving keys such as Return and New Line.

Different computer operating systems require different degrees of mode support when terminals are used as computer terminals. The POSIX terminal interface, as provided by Unix and POSIX-compliant operating systems, does not accommodate block-mode terminals at all, and only rarely requires the terminal itself to be in line-at-a-time mode, since the operating system is required to provide canonical input mode, where the terminal device driver in the operating system emulates local echo in the terminal, and performs line editing functions at the host end. Most usually, and especially so that the host system can support non-canonical input mode, terminals for POSIX-compliant systems are always in character-at-a-time mode. In contrast, IBM 3270 terminals connected to MVS systems are always required to be in block mode.[34][35][36][37]
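
On a POSIX system the switch between canonical and non-canonical input is a matter of flags in the terminal attributes. The sketch below uses Python's standard `termios` module (Unix only); the helper name `to_noncanonical` is hypothetical, and the choice to clear `ECHO` as well as `ICANON` is an assumption about what a typical full-screen program would want.

```python
import termios


def to_noncanonical(attrs):
    """Return a copy of tcgetattr()-style attributes with canonical mode off.

    attrs is the list returned by termios.tcgetattr():
    [iflag, oflag, cflag, lflag, ispeed, ospeed, cc].
    """
    new_attrs = list(attrs)
    new_attrs[6] = list(attrs[6])      # copy the control-character array
    # Index 3 holds the local modes: turn off line buffering and host echo.
    new_attrs[3] &= ~(termios.ICANON | termios.ECHO)
    new_attrs[6][termios.VMIN] = 1     # read() returns after one byte
    new_attrs[6][termios.VTIME] = 0    # no inter-byte timeout
    return new_attrs
```

A program would typically save the result of `termios.tcgetattr(fd)`, apply the modified attributes with `termios.tcsetattr(fd, termios.TCSADRAIN, ...)`, and restore the saved attributes on exit so that the shell gets canonical line editing back.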

Notes

- ^ a b "The Teletype Story" (PDF).

- ^ a b "Direct keyboard input to computers". Archived from the original on July 17, 2017. Retrieved January 11, 2024.

- ^ a b "What is dumb terminal? definition and meaning". BusinessDictionary.com. Archived from the original on August 13, 2020. Retrieved March 13, 2019.

- ^ Thin clients came later than dumb terminals

- ^ The term "thin client" was coined in 1993. Waters, Richard (June 2, 2009). "Is this, finally, the thin client from Oracle?". Archived from the original on December 10, 2022.

- ^ "DPD chronology". IBM. January 23, 2003.

1965 ... IBM 2741 ... July 8.

- ^ a b Goldstein, Phil (March 17, 2017). "The DEC LA36 Dot Matrix Printer Made Business Printing Faster and more efficient".

Digital Equipment Corporation .. debuted the DECwriter LA30 in 1970.

- ^ "Paper was used for everything - letters, proposals ..."

- ^ "Uniscope brochure" (PDF). Retrieved May 23, 2021.

- ^ "5. Functional Description" (PDF). Uniscope 100 - Display Terminal - General Description (PDF). Rev. 2. Sperry Rand Corporation. 1973. pp. 24–27. UP-7701. Retrieved December 3, 2023.

- ^ "5. Operation" (PDF). Uniscope 300 General Description - Visual Communications Terminal (PDF). Sperry Rand Corporation. 1968. pp. 5-1 – 5-5. UP-7619. Retrieved December 3, 2023.

- ^ IBM System/360 Component Description: - IBM 2260 Display Station - IBM 2848 Display Control (PDF). Systems Reference Library (Fifth ed.). IBM. January 1969. A27-2700-4. Retrieved December 3, 2023.

- ^ "glass tty".

has a display screen ... behaves like a teletype

- ^ Some computers have consoles containing only buttons, dials, lights and switches.

- ^ As opposed to the ↵ Enter key used on buffered text terminals and PCs.

- ^ Kelly, B. (1998). TN3270 Enhancements. RFC 2355.

3270 .. block oriented

- ^ IBM Corporation (1972). IBM 3270 Information Display System Component Description (PDF).

- ^ "Already over 80,000 winners out there! (advertisement)". Computerworld. January 18, 1982. Retrieved November 27, 2012.

- ^ "HP 3000s, IBM CPUs Get On-Line Link". Computerworld. March 24, 1980. Retrieved November 27, 2012.

- ^ Lear Siegler Inc. "The ADM-31. A terminal far too smart to be considered Dumb" (PDF). Retrieved November 27, 2012.

- ^ Kaya, E. M. (1985). "New Trends in Graphic Display System Architecture". Frontiers in Computer Graphics. pp. 310–320. doi:10.1007/978-4-431-68025-3_23. ISBN 978-4-431-68027-7.

- ^ Raymond, J.; Banerji, D.K. (1976). "Using a Microprocessor in an Intelligent Graphics Terminal". Computer. 9 (4): 18–25. doi:10.1109/C-M.1976.218555. S2CID 6693597.

However, a major problem with the use of a graphic terminal is the cost

- ^ Pardee, S. (1971). "G101—A Remote Time Share Terminal with Graphic Output Capabilities". IEEE Transactions on Computers. C-20 (8): 878–881. doi:10.1109/T-C.1971.223364. S2CID 27102280.

Terminal cost is currently about $10,000

- ^ "intelligent terminal Definition from PC Magazine Encyclopedia".

- ^ Twentieth Century Words; by John Ayto; Oxford University Press; page 413

- ^ "What is 3270 (Information Display System)".

3270 .. over its predecessor, the 2260

- ^ "Epson TM-T88V-DT Intelligent Terminal, 16GB SSD, LE, Linux, ..."

Retailers can .. reduce costs with .. Epson TM-T88V-DT ... a unique integrated terminal

- ^ "MacTerminal Definition from PC Magazine Encyclopedia".

as an IBM 3278 Model 2

- ^ "How to make win32 console recognize ANSI/VT100 escape sequences?". Stack Overflow.

- ^ a b c Bolthouse 1996, p. 18.

- ^ Bangia 2010, p. 324.

- ^ a b Diercks 2002, p. 2.

- ^ Gofton 1991, p. 73.

- ^ Raymond 2004, p. 72.

- ^ Burgess 1988, p. 127.

- ^ Topham 1990, p. 77.

- ^ Rodgers 1990, p. 88–90.

References

- Bangia, Ramesh (2010). "line mode terminal". Dictionary of Information Technology. Laxmi Publications, Ltd. ISBN 978-93-8029-815-3.

- Bolthouse, David (1996). Exploring IBM client/server computing. Business Perspective Series. Maximum Press. ISBN 978-1-885068-04-0.

- Burgess, Ross (1988). UNIX systems for microcomputers. Professional and industrial computing series. BSP Professional Books. ISBN 978-0-632-02036-2.

- Diercks, Jon (2002). MPE/iX system administration handbook. Hewlett-Packard professional books. Prentice Hall PTR. ISBN 978-0-13-030540-4.

- Gofton, Peter W. (1991). Mastering UNIX serial communications. Sybex. ISBN 978-0-89588-708-5.

- Raymond, Eric S. (2004). The art of Unix programming. Addison-Wesley professional computing series. Addison-Wesley. ISBN 978-0-13-142901-7.

- Rodgers, Ulka (1990). UNIX database management systems. Yourdon Press computing series. Yourdon Press. ISBN 978-0-13-945593-3.

- Topham, Douglas W. (1990). A system V guide to UNIX and XENIX. Springer-Verlag. ISBN 978-0-387-97021-9.

External links

- The Terminals Wiki, an encyclopedia of computer terminals.

- Text Terminal HOWTO from tldp.org

- The TTY demystified from linussakesson.net

- Video Terminal Information at the Wayback Machine (archived May 23, 2010)

- Directive 1999/5/EC of the European Parliament and of the Council of 9 March 1999 on radio equipment and telecommunications terminal equipment and the mutual recognition of their conformity (R&TTE Directive)

- List of Computer Terminals from epocalc.net

- VTTEST – VT100/VT220/XTerm test utility – A terminal test utility by Thomas E. Dickey

Computer terminal

History

Early Mechanical and Electromechanical Terminals

The concept of a computer terminal originated as a device facilitating human-machine interaction through input and output mechanisms, predating electronic computers and rooted in 19th-century telecommunication technologies. These early terminals served as intermediaries between operators and mechanical systems, allowing manual entry of data via keys or switches and outputting results through printed or visual indicators, primarily for telegraphy and data processing tasks.

In the mid-19th century, telegraph keys emerged as foundational input devices, enabling operators to transmit Morse code signals electrically over wires, with receivers using mechanical printers to decode and output messages on paper strips. By the 1870s, Émile Baudot's synchronous telegraph system introduced multiplexed printing telegraphs that used a five-bit code to print characters at rates of around 30 words per minute, marking an early electromechanical advancement in automated output for multiple simultaneous transmissions. These Baudot code printers represented a shift from manual decoding to mechanical automation, laying groundwork for standardized data representation in terminals.

Electromechanical terminals evolved further in the late 1800s with stock ticker machines, invented by Edward Calahan in 1867 for the New York Stock Exchange, which received telegraph signals and printed stock prices on continuous paper tape using electromagnets to drive typewheels. These devices adapted telegraph technology for real-time financial data dissemination, operating at speeds of about 40-60 characters per minute and demonstrating reliable electromechanical printing for distributed information systems. Their design influenced subsequent data transmission tools by integrating electrical input with mechanical output relays.

The transition to computing applications began in the 1890s with Herman Hollerith's tabulating machines for the U.S. Census, which employed punched cards as input media read by electrical-mechanical readers, outputting sorted data via printed summaries or electromagnetic counters. These systems, whose cards initially held 24 columns of data (the 80-column format arrived with IBM in 1928), exemplified early terminal-like interfaces for batch data entry and verification in mechanical calculators, bridging telegraphy principles to statistical computing. However, such electromechanical terminals were hampered by slow operational speeds (typically 10-60 characters or cards per minute) and heavy dependence on paper media and mechanical relays, which limited scalability and introduced frequent jams or wear. This mechanical foundation paved the way for later teletypewriter integrations in the 20th century.

Teletypewriter and Punch Card Era

The teletypewriter and punch card era marked a pivotal transition in computer terminals during the mid-20th century, adapting electromechanical printing devices and punched media for direct interaction with early electronic computers. Building on mechanical precursors such as stock tickers used in telegraphy, this period emphasized reliable, hard-copy interfaces for batch processing and limited real-time input in post-World War II computing environments.[9]

Early demonstrations of remote computing access included George Stibitz's 1940 setup, which connected a Teletype terminal at Dartmouth College to the Bell Labs Complex Number Calculator in New York City over telephone lines, marking the first use of a terminal for remote computation.[3] By 1956, experiments at MIT with the Friden Flexowriter electric typewriter enabled direct keyboard input to computers, advancing toward interactive interfaces.[4]

Teletypewriters, or TTYs, emerged as a primary input/output mechanism for early computers, providing keyboard entry and printed output on paper rolls or tape. The Teletype Model 33 Automatic Send-Receive (ASR), introduced in 1963, became a standard device for minicomputers at a cost of approximately $700 to manufacturers, featuring integrated paper tape punching and reading capabilities for data storage and transfer. This model facilitated both operator console functions and remote communication, enabling users to type commands and receive printed responses from the system. Its electromechanical design, including a QWERTY keyboard and a cylindrical typewheel print mechanism, supported speeds of around 10 characters per second, making it a versatile yet rudimentary terminal for systems like early minicomputers from Digital Equipment Corporation.[9]

Punch card systems complemented teletypewriters by enabling offline data preparation and high-volume batch input, a staple of 1950s and 1960s computing workflows. The IBM 026 Printing Keypunch, introduced in July 1949, allowed operators to encode data onto 80-column cards using a keyboard that punched holes representing binary-coded decimal (BCD) characters, while simultaneously printing the data along the card's top edge for verification.[10] Skilled operators could process up to 200 cards per hour with programmed automation features like tabbing and duplication. For reading these cards into computers, devices such as the IBM 2501 Card Reader, deployed in the 1960s for System/360 mainframes, achieved speeds of up to 1,000 cards per minute in its Model A2 variant, using photoelectric sensors to detect hole patterns and transmit data serially to the CPU.[11] This throughput supported efficient job submission in batch-oriented environments, where decks of cards represented programs or datasets.[11]

Key events highlighted the integration of these technologies with landmark computers. The ENIAC, completed in 1945, adapted IBM punch card readers for input and punches for output, allowing numerical data and initial setup instructions to be fed via hole patterns rather than manual switches alone, thus streamlining artillery trajectory calculations.[12] Similarly, the UNIVAC I, delivered in 1951, incorporated typewriter-based input/output units (functionally akin to early teletypewriters) for real-time operator interaction, alongside punched cards and magnetic tape for bulk data handling, as demonstrated in its use for the 1952 U.S. presidential election predictions.[13] These adaptations shifted computing from purely manual configuration to semi-automated, media-driven terminals.[12]

Punch card and teletypewriter systems offered advantages in reliable offline batch processing, where data could be prepared independently of the computer to minimize downtime and enable error checking before submission.[10] However, they suffered from disadvantages such as noisy mechanical operation (teletypewriters produced clacking sounds exceeding 70 decibels during use) and significant paper waste from continuous printing and discarded cards, contributing to logistical challenges in data centers.[9]

Communication protocols for these terminals relied on standardized codes for character transmission. The 5-bit Baudot code, prevalent in early teletypewriters, encoded 32 characters (letters, figures, and controls) using five binary impulses per character, plus start and stop signals, supporting speeds of 60 to 100 words per minute over serial lines.[14] By 1963, the industry adopted the 7-bit American Standard Code for Information Interchange (ASCII) for teletypewriters like the Model 33, expanding to 128 characters and enabling broader compatibility with emerging computer systems through asynchronous serial transmission.[14]

Video and Intelligent Terminal Development

The transition to video terminals marked a significant advancement in computer interaction during the late 1960s and 1970s, replacing electromechanical printouts with real-time visual displays using cathode-ray tube (CRT) technology. These devices allowed users to view and edit data on-screen, facilitating interactive computing over batch processing. The Digital Equipment Corporation (DEC) introduced the VT05 in 1970 as its first raster-scan video terminal, featuring a 20-by-72 character display in uppercase ASCII only.[15][16] This primitive unit operated at standard CRT refresh rates of around 60 Hz to maintain a flicker-free image, employing text-mode rendering rather than full bitmap graphics.[15]

By the mid-1970s, video terminals had evolved to support larger displays and broader adoption. The Lear Siegler ADM-3A, launched in 1976, became a popular low-cost option with a 12-inch CRT screen displaying 24 lines of 80 characters in a 7x7 dot matrix, using P4 green phosphor for medium persistence to balance visibility and reduce flicker at 50-60 Hz refresh rates.[17] Unlike earlier teletypes, these terminals enabled cursor positioning and partial screen updates, minimizing data transmission needs in networked environments. Early models like the VT05 and ADM-3A primarily used character-oriented text modes, with bitmap capabilities emerging later for graphical applications.

The development of intelligent terminals incorporated local processing power via microprocessors, allowing offline editing and reduced host dependency. Hewlett-Packard's HP 2640A, introduced in November 1974, was among the first such devices, powered by an Intel 8008 microprocessor with 8K bytes of ROM and up to 8K bytes of RAM.[18] It supported block-mode operation, where users could edit fields on-screen (inserting or deleting characters or lines) before transmitting data, using protected formats and attributes like reverse video for enhanced usability. This local intelligence contrasted with "dumb" terminals, offloading simple tasks from the mainframe.

Key milestones underscored the role of video terminals in expanding computing access. The ARPANET, operational from 1969, initially relied on basic terminals for remote logins, paving the way for video integration in subsequent years to support interactive sessions across nodes.[19] The minicomputer boom, exemplified by DEC's PDP-11 series launched in 1970, proliferated in the 1970s, pairing affordably with video terminals to enable time-shared UNIX environments for offices and labs.[20] Over 600,000 PDP-11 units were sold by 1990, driving terminal demand for real-time data handling.[20]

Technically, these terminals operated at refresh rates of 30-60 Hz, with phosphor persistence, typically medium for P4 types, ensuring images lingered briefly without excessive blur or flicker during scans.[21] Text modes dominated early designs for efficiency, rendering fixed character grids via vector or raster methods, while bitmap modes allowed pixel-level control but required more bandwidth.

This era's innovations profoundly impacted time-sharing systems, such as Multics, which achieved multi-user access by October 1969 using remote dial-up terminals for interactive input.[22] Video displays reduced reliance on hard-copy outputs like teletypes, enabling on-screen editing and immediate feedback, which boosted productivity in shared computing environments.[23] By the late 1960s, such terminals were replacing electromechanical devices, supporting up to dozens of simultaneous users on systems like Multics.[23]

Post-1980s Evolution and Decline

In the 1980s, the personal computer revolution began shifting the landscape for computer terminals, with devices like the Wyse 60, introduced in 1985, serving as popular dumb terminals that connected to UNIX systems through RS-232 serial interfaces for remote access and data entry.[24] These terminals facilitated integration with multi-user systems, allowing multiple users to interact with central hosts via simple text-based interfaces, but the rise of affordable PCs started eroding the need for dedicated hardware by enabling local processing.[25]

By the 1990s, the widespread adoption of graphical user interfaces (GUIs) marked a significant decline in the use of traditional terminals, as systems like Microsoft Windows 3.0 (1990) and the X Window System (X11, maturing in the early 1990s) prioritized visual, mouse-driven interactions over command-line terminals.[26] This transition reduced reliance on serial-connected terminals for everyday computing, favoring integrated desktop environments that handled both local and networked tasks without separate hardware.[27]

A modern resurgence of terminal concepts emerged in the late 1990s and 2000s through software-based solutions for networked computing, exemplified by Secure Shell (SSH) clients developed starting in 1995 by Tatu Ylönen to provide encrypted remote access over insecure networks, replacing vulnerable protocols like Telnet.[28] In the 2010s, web-based terminals like xterm.js, a JavaScript library for browser-embedded terminal emulation, enabled cloud access to remote shells without native installations, supporting collaborative development in distributed environments.

Into the 2020s, terminals evolved further through integration with Internet of Things (IoT) devices and virtual desktops, where browser-based emulators facilitate real-time management of edge computing resources and cloud-hosted workspaces.[29] For instance, AWS Cloud9, launched in 2016, offers a fully browser-based integrated development environment with an embedded terminal for coding and debugging in virtual environments, streamlining access to scalable cloud infrastructure.[30]

The cultural legacy of terminals persists in contemporary DevOps practices, with tools like tmux (released in 2007 by Nicholas Marriott) enabling session multiplexing to manage multiple terminal windows within a single interface, enhancing productivity in server administration and continuous integration workflows.

Types

Hard-copy Terminals

Hard-copy terminals are computer peripherals that generate permanent physical output on paper or similar media, serving as the primary means of producing tangible records in early computing systems. These devices, which emerged in the mid-20th century, relied on mechanical or electromechanical printing mechanisms to create printed text or data, often functioning as both input and output interfaces in batch-oriented environments. Unlike later display-based systems, hard-copy terminals emphasized durability and verifiability through physical artifacts, making them essential for non-interactive operations where visual confirmation was secondary to archival needs.[31]

The core mechanisms of hard-copy terminals involved impact printing technologies, where characters were formed by striking an inked ribbon against paper. Teleprinters, adapted from telegraph equipment, used typewriter-like keyboards and printing heads to produce output on continuous roll paper. Earlier models used the 5-level Baudot code, while the Teletype ASR-33 (introduced in 1963) operated at 10 characters per second using 7-bit ASCII, enabling serial communication over current loops for computer interaction.[32]

In mainframe computing, hard-copy terminals were predominantly used for batch job logging and generating audit trails, where datasets from payroll, inventory, or financial processing were output as printed reports to verify transactions and maintain compliance records. For example, the Teletype ASR-33 supported printing of reports on systems like early minicomputers, facilitating the review of batch results without real-time interaction.[33] These terminals ensured a verifiable paper trail for error detection in non-interactive workflows, such as end-of-day processing on early IBM mainframes.[33]

Technical aspects of hard-copy terminals included specialized paper handling to accommodate continuous operation: teleprinters typically used roll-fed paper for sequential printing, while tractor feeds with perforated paper edges enabled rapid, jam-resistant advancement.[34] Ink and ribbon systems varied by design; early models utilized a fabric ribbon providing thousands of impressions before replacement. Error handling often involved integrated paper tape mechanisms, as on the ASR-33. Some teleprinters also supported chadless tape punching, in which holes were only partially cut so that no loose debris (chad) was produced, allowing clean, printable surfaces for data storage and reducing read errors from particulate contamination during tape reader operations.[34]

The primary advantages of hard-copy terminals lay in their archival permanence, offering tamper-evident physical records that persisted without power or software dependencies, ideal for legal and auditing purposes in mainframe environments.[31] However, they incurred high operational costs due to consumables like ribbons and paper, required substantial space for equipment and storage, and generated significant noise from mechanical impacts, limiting their suitability for interactive or office settings.[32]

Character-oriented Terminals

Character-oriented terminals facilitate stream-based input/output operations, where each keystroke from the user is transmitted immediately to the host computer, and the host echoes the character back to the terminal for display, enabling real-time interaction without buffering entire lines or screens. This mode of operation emulated the behavior of earlier teletypewriters but used cathode-ray tube (CRT) displays for faster, non-mechanical visual feedback. Unlike hard-copy terminals that relied on printed output, character-oriented terminals emphasized interactive text streaming on a screen.[35]

Prominent examples include the Digital Equipment Corporation (DEC) VT52, introduced in September 1975, which featured a 24-line by 80-character display and supported asynchronous serial transmission up to 9600 baud, serving as an input/output device for host processors in time-sharing systems. Another key variant was the glass teletype (GT), or "glass tty," a CRT-based terminal designed in the early 1970s to mimic mechanical teletypewriters by displaying scrolling text streams, often with minimal local processing to maintain compatibility with existing TTY interfaces. These devices represented a transition from electromechanical printing to electronic display while preserving character-by-character communication.[15][36]

Control and formatting in character-oriented terminals relied on escape sequences introduced in the 1970s, with the ECMA-48 standard (published in 1976 and later adopted as ANSI X3.64 in 1979) defining sequences for cursor positioning, screen erasure, and character attributes like bolding or blinking, prefixed by the escape character (ASCII 27). These protocols allowed the host to manipulate the display remotely, such as moving the cursor without full screen refreshes, though early implementations like the VT52 used proprietary DEC escape codes before standardization.
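
To make the host's side of this concrete, the sketch below builds a few such sequences as strings that a host program could write to the terminal. The function names are hypothetical; the byte sequences are the standard CUP, ED and SGR controls from ECMA-48, plus the VT52's own direct cursor addressing for contrast.

```python
ESC = "\x1b"          # the escape character, ASCII 27
CSI = ESC + "["       # Control Sequence Introducer used by ECMA-48 sequences


def cursor_position(row, column):
    """CUP: move the cursor to a 1-based row and column."""
    return f"{CSI}{row};{column}H"


def erase_display():
    """ED with parameter 2: erase the entire screen."""
    return f"{CSI}2J"


def set_attributes(*codes):
    """SGR: select graphic rendition, e.g. 1 = bold, 5 = blink, 0 = reset."""
    return f"{CSI}{';'.join(str(c) for c in codes)}m"


def vt52_cursor_position(row, column):
    """VT52 direct cursor addressing: ESC Y, then row and column offset by 31."""
    return ESC + "Y" + chr(31 + row) + chr(31 + column)
```

Writing `erase_display() + cursor_position(5, 10) + set_attributes(1) + "READY" + set_attributes(0)` to a compatible terminal would clear the screen and print a bold word at row 5, column 10, all without the terminal doing any processing beyond interpreting the stream.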
In applications such as early Unix shells and command-line interfaces, character-oriented terminals integrated seamlessly with the TTY subsystem, where the kernel's line discipline processed raw character streams for echoing, editing, and signal handling in multi-user environments.[37][15][38]

A primary limitation of character-oriented terminals was the absence of local editing features, as all text insertion, deletion, or cursor movements had to be managed by the host, leading to higher latency and dependency on reliable connections. They were also vulnerable to transmission errors in asynchronous serial links, where single-bit flips could corrupt characters; this was partially addressed by parity bits, an extra bit added to each transmitted character so that even or odd parity checks could detect (but not correct) errors affecting an odd number of bits. These constraints made them suitable for low-bandwidth, real-time text applications but less ideal for complex data entry compared to later block-oriented designs.[39][40]

Block-oriented Terminals

Block-oriented terminals, also known as block mode terminals, operate by dividing the display screen into predefined fields where users enter data, with transmission occurring only when a transmit key, such as Enter, is pressed, allowing for local buffering and editing before sending complete blocks to the host system.[41] This approach contrasts with character-oriented terminals, which stream data immediately upon keystroke, by enabling users to fill forms or update screens without constant host interaction.[7] The core mechanism involves the host sending a formatted screen layout to the terminal, which displays protected and unprotected fields (protected areas prevent modification, while unprotected ones accept input), followed by the terminal returning the entire modified block upon transmission.[42]

A seminal example is the IBM 3270 family, introduced in 1971 as a replacement for earlier character-based displays like the IBM 2260, designed specifically for mainframe environments under systems such as OS/360.[43] The 3270 uses the EBCDIC encoding standard for data representation and employs a data stream protocol that structures screens into logical blocks, supporting features like field highlighting and cursor positioning for efficient data entry.[44] Navigation and control are facilitated by up to 24 programmable function keys (PF1 through PF24), which trigger specific actions such as field advancement, screen clearing, or request cancellation without transmitting partial data.[41] Another representative model is the Wyse 50, released in 1983, which extended block-mode capabilities to ASCII-based systems with support for protected and unprotected fields, enabling compatibility with various minicomputer and Unix hosts while maintaining low-cost operation.[45][46]

These terminals found primary application in transaction processing environments, such as banking systems for account inquiries and updates, and inventory management for order entry and stock tracking, where the block transmission model supported high-volume, form-based interactions on mainframes running software like IBM's CICS.[47] In such use cases, operators could validate entries locally against basic rules (such as field length or format) before transmission, reducing error rates and host processing overhead.[42]

The efficiency of block-oriented terminals stems from their ability to minimize network traffic and system interrupts compared to character mode, as entire screens are updated or queried in single data blocks rather than per-keystroke exchanges, which proved advantageous in bandwidth-limited environments of the 1970s and 1980s.[41] For instance, the 3270 protocol compresses repetitive elements in the data stream, further optimizing transmission rates over lines up to 7,200 bps.[48] This design not only lowered communication costs but also enhanced perceived responsiveness, as users could edit freely without latency from remote acknowledgments.[43]

Graphical Terminals

Graphical terminals represent a significant advancement in computer interface technology, enabling the display of vector or bitmap graphics in conjunction with text to support more sophisticated user interactions. These devices emerged as an extension of earlier character- and block-oriented terminals, incorporating visual elements for enhanced data representation. Unlike purely textual systems, graphical terminals facilitated direct manipulation of visual information, paving the way for interactive computing environments.

The evolution of graphical terminals began in the late 1960s with vector-based plotters and progressed to raster displays by the 1980s. A pivotal early example was the Tektronix 4010, introduced in 1972, which utilized direct-view storage tube (DVST) technology to render vector graphics at a viewable resolution of 1024×780 without requiring constant screen refresh.[49] Priced at $4,250, the 4010 made high-resolution plotting accessible for timesharing systems, drawing lines and curves that persisted on the phosphor-coated screen until erased.[49] By the early 1980s, raster-based systems gained prominence, exemplified by the Tektronix 4112, introduced in 1981, which employed a 15-inch monochrome raster-scan display for pixel-level control and smoother animations.[50] This shift from vector to raster allowed for filled areas and complex shading, though it demanded more computational resources for image generation.
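To make the vector model concrete, the following Python sketch encodes a polyline in the style of the Tektronix 4010's graph mode as it is commonly documented: a GS control character enters graph mode, each point is sent as four bytes carrying two 10-bit coordinates, and US returns to text mode. The function names are hypothetical, and the byte layout is an assumption that should be checked against the terminal's manual before relying on it.

```python
GS = 0x1D  # enter graph mode; the first point that follows is a move, not a draw
US = 0x1F  # leave graph mode and return to alphanumeric mode


def encode_point(x: int, y: int) -> bytes:
    """Pack one point into four bytes: high Y, low Y, high X, low X.

    Assumes 10-bit addressing (0..1023), with each byte carrying five
    coordinate bits plus a two-bit tag that identifies its role.
    """
    if not (0 <= x <= 1023 and 0 <= y <= 1023):
        raise ValueError("coordinates must fit in 10 bits")
    return bytes([
        0x20 | (y >> 5),    # high Y
        0x60 | (y & 0x1F),  # low Y
        0x20 | (x >> 5),    # high X
        0x40 | (x & 0x1F),  # low X
    ])


def encode_polyline(points) -> bytes:
    """Build the byte stream for a connected series of line segments."""
    out = bytearray([GS])
    for x, y in points:
        out += encode_point(x, y)
    out.append(US)
    return bytes(out)


# A triangle: move to the first corner, then draw three edges.
triangle = encode_polyline([(100, 100), (500, 100), (300, 400), (100, 100)])
```

Each point costs four bytes regardless of how long the line is, which is part of why vector drawing suited slow serial links: the terminal, not the host, fills in the pixels along each segment.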

Key technologies underpinning graphical terminals included storage tubes, which provided image persistence by storing charge patterns on the tube's surface, eliminating flicker in static displays but limiting dynamic updates to full-screen erasures.[51] Early software interfaces, such as the Graphical Kernel System (GKS), originated from proposals by the Graphics Standards Planning Committee in 1977 and were formalized by the Deutsches Institut für Normung in 1978, offering a standardized API for 2D vector primitives like lines, curves, and text across diverse hardware.[52] These tools enabled portability in graphical applications, bridging hardware variations in terminals from different manufacturers. Such terminals integrated seamlessly with mainframe or minicomputer systems, often via serial protocols, to offload graphics rendering while maintaining compatibility with block-mode text input for structured data entry.

Graphical terminals found primary applications in computer-aided design (CAD), where they enabled engineers to interactively draft and modify schematics, as seen in systems from vendors like Tektronix that dominated the market in the 1970s and early 1980s.[53] In scientific visualization, they facilitated the plotting of complex datasets, such as aerodynamic flows or structural analyses, allowing researchers to explore multidimensional data through overlaid graphs and contours.[54] Early graphical user interfaces (GUIs) also leveraged these displays for icon-based navigation and windowing, influencing workstation designs that combined text and visuals for productivity tasks.
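Because these terminals were usually attached over serial lines, the amount of data needed to describe a picture mattered a great deal. The Python sketch below compares the time to send an uncompressed bitmap with the time to send an equivalent vector list. Every figure in it (a 640×480 one-bit bitmap, 500 vectors at 4 bytes each, a 9600 bit/s line with 10 transmitted bits per byte) is an illustrative assumption, not a measurement of any particular terminal.

```python
def transfer_seconds(payload_bytes: int, bits_per_second: int,
                     bits_per_byte: int = 10) -> float:
    """Time to send a payload over an asynchronous serial line.

    The default of 10 bits per byte assumes one start bit, eight data
    bits, and one stop bit.
    """
    return payload_bytes * bits_per_byte / bits_per_second


def raster_bytes(width: int, height: int, bits_per_pixel: int = 1) -> int:
    """Size of an uncompressed bitmap, rounded up to whole bytes."""
    return (width * height * bits_per_pixel + 7) // 8


def vector_bytes(num_vectors: int, bytes_per_vector: int = 4) -> int:
    """Size of a display list in which each vector endpoint costs a fixed number of bytes."""
    return num_vectors * bytes_per_vector


LINK_BPS = 9600  # assumed line speed

raster_size = raster_bytes(640, 480)   # monochrome bitmap
vector_size = vector_bytes(500)        # a moderately detailed line drawing

raster_time = transfer_seconds(raster_size, LINK_BPS)
vector_time = transfer_seconds(vector_size, LINK_BPS)

print(f"raster: {raster_size} bytes, {raster_time:.1f} s")
print(f"vector: {vector_size} bytes, {vector_time:.1f} s")
```

Under these assumptions the bitmap takes 40 seconds to transmit while the vector list takes about 2 seconds, which illustrates why full-screen raster updates over a serial link were impractical for interactive work.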

Despite their capabilities, graphical terminals faced significant challenges, including high acquisition costs (often $10,000 or more per unit for advanced raster models in the 1980s) and limited bandwidth for transmitting and refreshing graphics over serial links, which could bottleneck interactive performance, particularly as displays moved from vector to raster.[39] These factors restricted widespread adoption to specialized fields until hardware costs declined in the mid-1980s.

Intelligent Terminals

Intelligent terminals represent a significant evolution in computer terminal design, incorporating embedded microprocessors to enable local data processing and reduce reliance on the host computer for routine operations. Unlike simpler "dumb" terminals that merely relayed input and output, these devices could execute firmware-based functions such as screen formatting, cursor control, and basic arithmetic, offloading computational burdens from the central system. This autonomy stemmed from the integration of affordable microprocessors in the late 1970s, allowing terminals to handle tasks independently while maintaining compatibility with mainframe environments through standard interfaces like RS-232.[55]

Key features of intelligent terminals included local editing capabilities, where users could modify data on-screen before transmission to the host, minimizing network traffic and errors. Many models supported limited file storage via onboard RAM for buffering screens or temporary data retention, with capacities ranging from a few kilobytes for basic operations to up to 128 KB in advanced units for more complex buffering. Protocol conversion was another hallmark, enabling adaptation between network standards such as X.25 for packet-switched communications and RS-232 for serial links, which facilitated integration into diverse systems without additional hardware.

For instance, the ADDS Viewpoint, introduced in March 1981 and powered by a Zilog Z80 microprocessor, exemplified these traits with its 24×80 character display, local edit modes, and support for asynchronous transmission up to 19,200 baud.[56][57] The TeleVideo Model 950, launched in December 1980, further illustrated these capabilities with its Z80-based architecture, offering up to 96 lines of display memory for multi-page editing and compatibility with protocols like XON/XOFF flow control over RS-232C interfaces.
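The XON/XOFF flow control mentioned for the Model 950 can be sketched as a small Python simulation. The receiver sends XOFF (DC3, 0x13) when its buffer fills past a high-water mark and XON (DC1, 0x11) once it has drained to a low-water mark. The class name, buffer thresholds, and method names are hypothetical and chosen only for illustration; they do not describe the firmware of any real terminal.

```python
from collections import deque

XON = 0x11   # DC1: "resume sending"
XOFF = 0x13  # DC3: "stop sending"


class TerminalReceiver:
    """Toy model of a terminal's receive buffer with software flow control."""

    def __init__(self, high_water: int = 6, low_water: int = 2):
        self.buffer = deque()
        self.high_water = high_water  # assumed threshold for sending XOFF
        self.low_water = low_water    # assumed threshold for sending XON
        self.paused = False
        self.sent = []                # control bytes sent back to the host

    def receive(self, byte: int) -> None:
        """Accept one byte from the host; ask it to pause if nearly full."""
        self.buffer.append(byte)
        if not self.paused and len(self.buffer) >= self.high_water:
            self.paused = True
            self.sent.append(XOFF)

    def process_one(self) -> None:
        """Consume one buffered byte; ask the host to resume once drained."""
        if self.buffer:
            self.buffer.popleft()
        if self.paused and len(self.buffer) <= self.low_water:
            self.paused = False
            self.sent.append(XON)


rx = TerminalReceiver()
for b in b"HELLO!":      # six bytes arrive faster than they are displayed
    rx.receive(b)
for _ in range(4):       # the terminal catches up
    rx.process_one()
```

After this run the receiver has sent XOFF followed by XON. Using separate high and low thresholds keeps the terminal from toggling flow control on every byte.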
Priced at around $1,195, the Model 950 included features like programmable function keys and optional printer ports, allowing users to perform local tasks such as data validation without constant host intervention. Some later variants in the intelligent terminal lineage supported multi-session operations, enabling simultaneous connections to multiple hosts for enhanced productivity in networked settings. These attributes made intelligent terminals particularly valuable in enterprise environments, where they offloaded host CPU resources (potentially reducing mainframe load by 20-50% in high-volume data entry scenarios) and laid groundwork for modern thin-client architectures by centralizing core processing while distributing interface logic.[58][59][60]

By the early 1990s, the proliferation of personal computers diminished the role of dedicated intelligent terminals, as affordable PCs with superior processing power, graphical interfaces, and local storage rendered them obsolete for most applications. Mainframe users increasingly adopted PC-based emulators or networked workstations, which offered greater flexibility and eliminated the need for specialized hardware.[61]

System Consoles

Definition and Functions

A system console is a specialized terminal that serves as the primary operator interface for direct control, monitoring, and diagnostics of computer systems, particularly mainframes, enabling operators to manage core operations independently of user applications.[62] In this role, it provides essential access for booting the system via Initial Program Load (IPL), halting operations, and issuing low-level commands to intervene in CPU, storage, and I/O activities.[62]

Components of a system console typically include an integrated keyboard, display (such as lights or a CRT), and switches for manual input, as exemplified by the IBM System/360 console introduced in 1964, which featured toggle switches, hexadecimal dials, and status indicators for operator interaction.[62] Key functions encompass configuring switch settings to select I/O devices for IPL or control execution rates, generating core dumps by displaying storage contents for diagnostics, and handling interrupts through dedicated keys that trigger external interruptions or reset conditions.[62]

In modern systems, equivalents like the Intelligent Platform Management Interface (IPMI), standardized in 1998, extend these functions to remote console access for out-of-band management, allowing monitoring and control even when the host OS is unavailable.[63] Due to their privileged access, system consoles incorporate security measures such as restricted operator authorization via access control systems like RACF on IBM mainframes to prevent unauthorized shutdowns or manipulations.[64] For IPMI, best practices include limiting network access and enforcing strong authentication to mitigate risks of remote exploitation.[65]

Historical and Modern Usage

In the 1950s, early mainframe computers like the UNIVAC I relied on front-panel interfaces featuring arrays of indicator lamps, toggle switches, and push buttons for operator interaction and system control.[66] These panels allowed direct manipulation of machine states, such as setting memory addresses or initiating power sequences, with lights displaying binary states of registers and circuits to aid debugging and monitoring.[67] By the 1960s and into the 1970s, this approach began transitioning to cathode-ray tube (CRT) consoles, as seen in systems like the DEC PDP-1, which integrated a CRT display for more dynamic visual feedback and keyboard input, reducing reliance on physical switches.[68]

During the 1980s and 1990s, system consoles evolved with the rise of Unix-based servers, where serial consoles became standard for direct access to the operating system kernel and boot processes. In Unix environments, the /dev/console device file served as the primary interface for system messages, error logs, and operator commands, often connected via RS-232 serial ports to teletypewriters or early video terminals. This setup enabled remote administration over serial lines, supporting multi-user time-sharing systems in enterprise servers and workstations.

From the late 2000s onward, system consoles shifted toward networked and virtualized solutions, exemplified by KVM over IP technologies.
Dell's Integrated Dell Remote Access Controller (iDRAC), first introduced in 2008 with certain PowerEdge servers, provided remote KVM access via IP networks, allowing administrators to view and control server consoles over the internet without physical presence.[69] Similarly, VMware ESXi, first released in 2007 as a bare-metal hypervisor, incorporated virtual consoles for managing guest operating systems and host hardware directly through web-based interfaces.[70]

Contemporary trends emphasize integration of system consoles with Baseboard Management Controllers (BMCs) for out-of-band datacenter management, enabling remote power cycling, firmware updates, and sensor monitoring independent of the host OS. The BMC market has grown significantly, reaching USD 2.01 billion in 2024, driven by demands for secure, AI-enhanced oversight in hyperscale environments.[71]

In cloud infrastructure, consoles play a critical role during outages; for instance, in the June 13, 2023, AWS us-east-1 incident, which affected services like EC2 and Lambda due to elevated error rates, serial console access via tools like EC2 Serial Console was essential for diagnosing and recovering affected instances.[72][73]

In embedded systems, serial consoles remain vital for low-level debugging, as demonstrated by the Raspberry Pi's UART interface, which supports direct serial connections for kernel output and command input in resource-constrained deployments like IoT devices.[74]

Emulation

Software Terminal Emulators