General equilibrium theory

View on Wikipedia| Part of a series on |

| Economics |

|---|

|

|

|

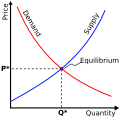

In economics, general equilibrium theory attempts to explain the behavior of supply, demand, and prices in a whole economy with several or many interacting markets, by seeking to prove that the interaction of demand and supply will result in an overall general equilibrium. General equilibrium theory contrasts with the theory of partial equilibrium, which analyzes a specific part of an economy while its other factors are held constant.[1]

General equilibrium theory both studies economies using the model of equilibrium pricing and seeks to determine in which circumstances the assumptions of general equilibrium will hold. The theory dates to the 1870s, particularly the work of French economist Léon Walras in his pioneering 1874 work Elements of Pure Economics.[2] The theory reached its modern form with the work of Lionel W. McKenzie (Walrasian theory), Kenneth Arrow and Gérard Debreu (Hicksian theory) in the 1950s.

Overview

Broadly speaking, general equilibrium tries to give an understanding of the whole economy using a "bottom-up" approach, starting with individual markets and agents. Therefore, general equilibrium theory has traditionally been classified as part of microeconomics. The distinction is not as clear as it used to be, since much of modern macroeconomics has emphasized microeconomic foundations and has constructed general equilibrium models of macroeconomic fluctuations. General equilibrium macroeconomic models usually have a simplified structure that incorporates only a few markets, such as a "goods market" and a "financial market". In contrast, general equilibrium models in the microeconomic tradition typically involve a multitude of different goods markets. They are usually complex and require computers to calculate numerical solutions.

In a market system the prices and production of all goods, including the price of money and interest, are interrelated: a change in the price of one good may affect the price of another good. Calculating the equilibrium price of just one good, in theory, requires an analysis that accounts for all of the millions of different goods that are available. It is often assumed that agents are price takers, and under that assumption two common notions of equilibrium exist: Walrasian, or competitive equilibrium, and its generalization: a price equilibrium with transfers.

Friedrich Hayek's influential essay "The Use of Knowledge in Society" (1945) articulated what scholars have since identified as a fundamental challenge to the informational assumptions underlying general equilibrium theory. Hayek argued that economic knowledge is inherently dispersed across countless individuals and often exists in tacit, context-specific forms that cannot be aggregated or centralized. This posed a problem for models, whether Walrasian equilibrium theory or centralized economic planning, that presume complete information or the possibility of gathering all relevant data in one place.[3][4]

Hayek proposed that market prices serve as decentralized information signals, distilling complex local knowledge about preferences, resources, and opportunities into summary statistics that coordinate economic decisions across society without requiring centralized knowledge or direction.[5][4] While predating the full Arrow-Debreu formalization (1954), Hayek's essay has been interpreted by subsequent economists both as a critique of the informational feasibility of perfect-information equilibrium models and as an explanation of how real-world market processes achieve coordination through price mechanisms despite pervasive ignorance and uncertainty. This perspective emphasizes economic processes and discovery over static equilibrium states.[3]

Walrasian equilibrium

The first attempt in neoclassical economics to model prices for a whole economy was made by Léon Walras. Walras' Elements of Pure Economics provides a succession of models, each taking into account more aspects of a real economy (two commodities, many commodities, production, growth, money). Some think Walras was unsuccessful and that the later models in this series are inconsistent.[6][7]

In particular, Walras's model was a long-run model in which prices of capital goods are the same whether they appear as inputs or outputs and in which the same rate of profits is earned in all lines of industry. This is inconsistent with the quantities of capital goods being taken as data. But when Walras introduced capital goods in his later models, he took their quantities as given, in arbitrary ratios. (In contrast, Kenneth Arrow and Gérard Debreu continued to take the initial quantities of capital goods as given, but adopted a short run model in which the prices of capital goods vary with time and the own rate of interest varies across capital goods.)

Walras was the first to lay down a research program widely followed by 20th-century economists. In particular, the Walrasian agenda included the investigation of when equilibria are unique and stable. Walras' Lesson 7 shows that neither uniqueness, nor stability, nor even existence of an equilibrium is guaranteed. Walras also proposed a dynamic process by which general equilibrium might be reached, that of the tâtonnement or groping process.

The tâtonnement process is a model for investigating stability of equilibria. Prices are announced (perhaps by an "auctioneer"), and agents state how much of each good they would like to offer (supply) or purchase (demand). No transactions and no production take place at disequilibrium prices. Instead, prices are lowered for goods with positive prices and excess supply. Prices are raised for goods with excess demand. The question for the mathematician is under what conditions such a process will terminate in equilibrium where demand equates to supply for goods with positive prices and demand does not exceed supply for goods with a price of zero. Walras was not able to provide a definitive answer to this question (see Unresolved Problems in General Equilibrium below).
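The price-adjustment rule described above can be illustrated with a minimal sketch. It assumes a two-good pure exchange economy with Cobb-Douglas consumers, good 2 as numéraire, and a fixed adjustment step; the function names, the parameter values, and the discrete-time update are illustrative assumptions, not part of Walras's own formulation. In this special case the process does converge, which need not hold for general economies.

```python
def excess_demand_good1(p, shares, endowments):
    """Aggregate excess demand for good 1 at price p (good 2 has price 1).

    Each Cobb-Douglas consumer spends the fraction `a` of wealth on good 1.
    """
    demand = sum(a * (p * e1 + e2) / p for a, (e1, e2) in zip(shares, endowments))
    supply = sum(e1 for e1, _ in endowments)
    return demand - supply


def tatonnement(shares, endowments, p0=1.0, step=0.5, tol=1e-10, max_iter=10_000):
    """Raise the price under excess demand, lower it under excess supply.

    No trade takes place along the way; only the announced price changes.
    """
    p = p0
    for _ in range(max_iter):
        z = excess_demand_good1(p, shares, endowments)
        if abs(z) < tol:
            return p
        p = max(p + step * z, 1e-9)  # keep the announced price positive
    raise RuntimeError("tatonnement did not converge")


# Hypothetical economy: consumer 1 owns all of good 1, consumer 2 all of good 2.
shares = [0.3, 0.6]
endowments = [(1.0, 0.0), (0.0, 1.0)]
p_star = tatonnement(shares, endowments)

# Closed-form equilibrium price for this Cobb-Douglas economy, for comparison.
p_exact = sum(a * e2 for a, (_, e2) in zip(shares, endowments)) / sum(
    (1 - a) * e1 for a, (e1, _) in zip(shares, endowments)
)
print(p_star, p_exact)
```

Only the market for good 1 is tracked because, by Walras's law, the numéraire market clears whenever the other market does.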

Marshall and Sraffa

In partial equilibrium analysis, the determination of the price of a good is simplified by looking at the price of just one good and assuming that the prices of all other goods remain constant. The Marshallian theory of supply and demand is an example of partial equilibrium analysis. Partial equilibrium analysis is adequate when the first-order effects of a shift in the demand curve do not shift the supply curve. Anglo-American economists became more interested in general equilibrium in the late 1920s and 1930s after Piero Sraffa's demonstration that Marshallian economists cannot account for the forces thought to explain the upward slope of the supply curve for a consumer good.

If an industry uses little of a factor of production, a small increase in the output of that industry will not bid the price of that factor up. To a first-order approximation, firms in the industry will experience constant costs, and the industry supply curves will not slope up. If an industry uses an appreciable amount of that factor of production, an increase in the output of that industry will exhibit increasing costs. But such a factor is likely to be used in substitutes for the industry's product, and an increased price of that factor will have effects on the supply of those substitutes. Consequently, Sraffa argued, the first-order effects of a shift in the demand curve of the original industry under these assumptions include a shift in the supply curve of substitutes for that industry's product, and consequent shifts in the original industry's supply curve. General equilibrium is designed to investigate such interactions between markets.

Continental European economists made important advances in the 1930s.[8] Walras' arguments for the existence of general equilibrium often were based on the counting of equations and variables. Such arguments are inadequate for non-linear systems of equations and do not imply that equilibrium prices and quantities cannot be negative, a meaningless solution for his models. The replacement of certain equations by inequalities and the use of more rigorous mathematics improved general equilibrium modeling.[9]

Modern concept of general equilibrium in economics

The modern conception of general equilibrium is provided by the Arrow–Debreu–McKenzie model, developed jointly by Kenneth Arrow, Gérard Debreu, and Lionel W. McKenzie in the 1950s.[10][11] Debreu presents this model in Theory of Value (1959) as an axiomatic model, following the style of mathematics promoted by Nicolas Bourbaki. In such an approach, the interpretation of the terms in the theory (e.g., goods, prices) is not fixed by the axioms.

Three important interpretations of the terms of the theory have been often cited. First, suppose commodities are distinguished by the location where they are delivered. Then the Arrow-Debreu model is a spatial model of, for example, international trade.

Second, suppose commodities are distinguished by when they are delivered. That is, suppose all markets equilibrate at some initial instant of time. Agents in the model purchase and sell contracts, where a contract specifies, for example, a good to be delivered and the date at which it is to be delivered. The Arrow–Debreu model of intertemporal equilibrium contains forward markets for all goods at all dates. No markets exist at any future dates.

Third, suppose contracts specify states of nature which affect whether a commodity is to be delivered: "A contract for the transfer of a commodity now specifies, in addition to its physical properties, its location and its date, an event on the occurrence of which the transfer is conditional. This new definition of a commodity allows one to obtain a theory of [risk] free from any probability concept..."[12]

These interpretations can be combined. So the complete Arrow–Debreu model can be said to apply when goods are identified by when they are to be delivered, where they are to be delivered and under what circumstances they are to be delivered, as well as their intrinsic nature. So there would be a complete set of prices for contracts such as "1 ton of Winter red wheat, delivered on 3rd of January in Minneapolis, if there is a hurricane in Florida during December". A general equilibrium model with complete markets of this sort seems to be a long way from describing the workings of real economies. However, its proponents argue that it is still useful as a simplified guide as to how real economies function.

Some of the recent work in general equilibrium has in fact explored the implications of incomplete markets, which is to say an intertemporal economy with uncertainty, where there do not exist sufficiently detailed contracts that would allow agents to fully allocate their consumption and resources through time. While it has been shown that such economies will generally still have an equilibrium, the outcome may no longer be Pareto optimal. The basic intuition for this result is that if consumers lack adequate means to transfer their wealth from one time period to another and the future is risky, there is nothing to necessarily tie any price ratio down to the relevant marginal rate of substitution, which is the standard requirement for Pareto optimality. Under some conditions the economy may still be constrained Pareto optimal, meaning that a central authority limited to the same type and number of contracts as the individual agents may not be able to improve upon the outcome; what is needed is the introduction of a full set of possible contracts. Hence, one implication of the theory of incomplete markets is that inefficiency may be a result of underdeveloped financial institutions or credit constraints faced by some members of the public. Research still continues in this area.

Properties and characterization of general equilibrium

Basic questions in general equilibrium analysis are concerned with the conditions under which an equilibrium will be efficient, which efficient equilibria can be achieved, when an equilibrium is guaranteed to exist and when the equilibrium will be unique and stable.

First Fundamental Theorem of Welfare Economics

The First Fundamental Welfare Theorem asserts that market equilibria are Pareto efficient. In other words, the allocation of goods in the equilibria is such that there is no reallocation which would leave a consumer better off without leaving another consumer worse off. In a pure exchange economy, a sufficient condition for the first welfare theorem to hold is that preferences be locally nonsatiated. The first welfare theorem also holds for economies with production regardless of the properties of the production function. Implicitly, the theorem assumes complete markets and perfect information. In an economy with externalities, for example, it is possible for equilibria to arise that are not efficient.

The first welfare theorem is informative in the sense that it points to the sources of inefficiency in markets. Under the assumptions above, any market equilibrium is tautologically efficient. Therefore, when equilibria arise that are not efficient, the market system itself is not to blame, but rather some sort of market failure.

Second Fundamental Theorem of Welfare Economics

Even if every equilibrium is efficient, it may not be that every efficient allocation of resources can be part of an equilibrium. However, the second theorem states that every Pareto efficient allocation can be supported as an equilibrium by some set of prices. In other words, all that is required to reach a particular Pareto efficient outcome is a redistribution of initial endowments of the agents after which the market can be left alone to do its work. This suggests that the issues of efficiency and equity can be separated and need not involve a trade-off. The conditions for the second theorem are stronger than those for the first, as consumers' preferences and production sets now need to be convex (convexity roughly corresponds to the idea of diminishing marginal rates of substitution, i.e. "the average of two equally good bundles is better than either of the two bundles").
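As a rough numerical illustration of supporting prices, the sketch below assumes a two-consumer, two-good exchange economy with Cobb-Douglas preferences and a total endowment of one unit of each good. All names and parameter values are hypothetical. It picks a Pareto efficient allocation on the contract curve, sets the price ratio equal to the common marginal rate of substitution, and checks that each consumer, once endowed with the target bundle, demands exactly that bundle at those prices.

```python
def contract_curve_y1(x1, a1, a2):
    """Good-2 holding of consumer 1 that equalizes marginal rates of
    substitution, given consumer 1 holds x1 of good 1 (totals are 1 and 1)."""
    k1, k2 = a1 / (1 - a1), a2 / (1 - a2)
    return k2 * x1 / (k1 * (1 - x1) + k2 * x1)


def cobb_douglas_demand(a, endowment, p_x, p_y=1.0):
    """Utility-maximizing bundle for u = a*ln(x) + (1-a)*ln(y)."""
    wealth = p_x * endowment[0] + p_y * endowment[1]
    return (a * wealth / p_x, (1 - a) * wealth / p_y)


a1, a2 = 0.3, 0.6          # hypothetical preference parameters
x1 = 0.5                   # chosen point on the contract curve
y1 = contract_curve_y1(x1, a1, a2)
target1 = (x1, y1)
target2 = (1 - x1, 1 - y1)

# Supporting price of good 1 (good 2 is numeraire): the common MRS.
p_x = (a1 / (1 - a1)) * y1 / x1

# After redistributing endowments to the target, nobody wants to trade away.
d1 = cobb_douglas_demand(a1, target1, p_x)
d2 = cobb_douglas_demand(a2, target2, p_x)
print(d1, target1)
print(d2, target2)
```

Choosing a different `x1` selects a different efficient allocation, with a different supporting price and a different required redistribution.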

Existence

Even though every equilibrium is efficient, neither of the above two theorems says anything about the equilibrium existing in the first place. To guarantee that an equilibrium exists, it suffices that consumer preferences be strictly convex. With enough consumers, the convexity assumption can be relaxed both for existence and the second welfare theorem. Similarly, but less plausibly, convex feasible production sets suffice for existence; convexity excludes economies of scale.

Proofs of the existence of equilibrium traditionally rely on fixed-point theorems such as the Brouwer fixed-point theorem for functions (or, more generally, the Kakutani fixed-point theorem for set-valued functions). See Competitive equilibrium#Existence of a competitive equilibrium. The proof was first due to Lionel McKenzie,[13] and Kenneth Arrow and Gérard Debreu.[14] In fact, the converse also holds, according to Uzawa's derivation of Brouwer's fixed point theorem from Walras's law.[15] Following Uzawa's theorem, many mathematical economists consider proving existence a deeper result than proving the two Fundamental Theorems.
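A common textbook version of the Brouwer argument can be sketched as follows. The notation is an assumption introduced here for illustration: z denotes an aggregate excess demand function that is taken to be continuous on the whole price simplex Δ and to satisfy Walras's law. Real proofs must also handle boundary behavior, which this sketch ignores.

```latex
\Delta = \Bigl\{ p \in \mathbb{R}^n_{+} : \textstyle\sum_{i=1}^{n} p_i = 1 \Bigr\},
\qquad
p \cdot z(p) = 0 \quad \text{for all } p \in \Delta .

% Price-adjustment map: raise the relative price of goods in excess demand.
T_i(p) = \frac{p_i + \max\{0, z_i(p)\}}{1 + \sum_{j=1}^{n} \max\{0, z_j(p)\}},
\qquad i = 1, \dots, n .

% T maps \Delta continuously into itself, so Brouwer gives p^* = T(p^*), i.e.
p^*_i \sum_{j} \max\{0, z_j(p^*)\} = \max\{0, z_i(p^*)\} .

% Multiply by z_i(p^*), sum over i, and apply Walras's law:
0 = \Bigl(\sum_{j} \max\{0, z_j(p^*)\}\Bigr)\, p^* \cdot z(p^*)
  = \sum_{i} \bigl(\max\{0, z_i(p^*)\}\bigr)^2
\;\Longrightarrow\; z_i(p^*) \le 0 \ \text{ for all } i .
```

The fixed point p* is therefore a price vector at which no good is in excess demand, which is the equilibrium condition used in the tâtonnement discussion above.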

Another method of proof of existence, global analysis, uses Sard's lemma and the Baire category theorem; this method was pioneered by Gérard Debreu and Stephen Smale.

Nonconvexities in large economies

Starr (1969) applied the Shapley–Folkman–Starr theorem to prove that even without convex preferences there exists an approximate equilibrium. The Shapley–Folkman–Starr results bound the distance from an "approximate" economic equilibrium to an equilibrium of a "convexified" economy, when the number of agents exceeds the dimension of the goods.[16] Following Starr's paper, the Shapley–Folkman–Starr results were "much exploited in the theoretical literature", according to Guesnerie,[17]: 112 who wrote the following:

some key results obtained under the convexity assumption remain (approximately) relevant in circumstances where convexity fails. For example, in economies with a large consumption side, nonconvexities in preferences do not destroy the standard results of, say Debreu's theory of value. In the same way, if indivisibilities in the production sector are small with respect to the size of the economy, [ . . . ] then standard results are affected in only a minor way.[17]: 99

To this text, Guesnerie appended the following footnote:

The derivation of these results in general form has been one of the major achievements of postwar economic theory.[17]: 138

In particular, the Shapley-Folkman-Starr results were incorporated in the theory of general economic equilibria[18][19][20] and in the theory of market failures[21] and of public economics.[22]
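For orientation, the underlying Shapley–Folkman lemma can be stated as below. The symbols N, D and Q_n are notation chosen here for illustration, and the statement is the standard lemma only, not Starr's distance bounds.

```latex
% Q_1, \dots, Q_N are nonempty subsets of \mathbb{R}^D, with N > D.
x \in \operatorname{conv}\Bigl(\sum_{n=1}^{N} Q_n\Bigr)
\;\Longrightarrow\;
x = \sum_{n=1}^{N} q_n
\quad \text{with } q_n \in \operatorname{conv}(Q_n) \text{ for all } n
\text{ and } q_n \in Q_n \text{ for at least } N - D \text{ indices } n .
```

Read economically, when there are many more agents (N) than goods (D), all but at most D agents can be assigned points of their original, possibly nonconvex, sets, which is why nonconvexities matter little in large economies.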

Uniqueness

Although generally (assuming convexity) an equilibrium will exist and will be efficient, the conditions under which it will be unique are much stronger.[23] The Sonnenschein–Mantel–Debreu theorem, proven in the 1970s, states that the aggregate excess demand function inherits only certain properties of individuals' demand functions, and that these (continuity, homogeneity of degree zero, Walras' law and boundary behavior when prices are near zero) are the only real restrictions one can expect from an aggregate excess demand function. Any such function can represent the excess demand of an economy populated with rational utility-maximizing individuals.

There has been much research on conditions when the equilibrium will be unique, or which at least will limit the number of equilibria. One result states that under mild assumptions the number of equilibria will be finite (see regular economy) and odd (see index theorem). Furthermore, if an economy as a whole, as characterized by an aggregate excess demand function, has the revealed preference property (which is a much stronger condition than revealed preferences for a single individual) or the gross substitute property then likewise the equilibrium will be unique. All methods of establishing uniqueness can be thought of as establishing that each equilibrium has the same positive local index, in which case by the index theorem there can be but one such equilibrium.

Determinacy

Given that equilibria may not be unique, it is of some interest to ask whether any particular equilibrium is at least locally unique. If so, then comparative statics can be applied as long as the shocks to the system are not too large. As stated above, in a regular economy equilibria will be finite, hence locally unique. One reassuring result, due to Debreu, is that "most" economies are regular.

Work by Michael Mandler (1999) has challenged this claim.[24] The Arrow–Debreu–McKenzie model is neutral between models of production functions as continuously differentiable and as formed from (linear combinations of) fixed coefficient processes. Mandler accepts that, under either model of production, the initial endowments will not be consistent with a continuum of equilibria, except for a set of Lebesgue measure zero. However, endowments change with time in the model and this evolution of endowments is determined by the decisions of agents (e.g., firms) in the model. Agents in the model have an interest in equilibria being indeterminate:

Indeterminacy, moreover, is not just a technical nuisance; it undermines the price-taking assumption of competitive models. Since arbitrary small manipulations of factor supplies can dramatically increase a factor's price, factor owners will not take prices to be parametric.[24]: 17

When technology is modeled by (linear combinations of) fixed coefficient processes, optimizing agents will drive endowments to be such that a continuum of equilibria exists:

The endowments where indeterminacy occurs systematically arise through time and therefore cannot be dismissed; the Arrow-Debreu-McKenzie model is thus fully subject to the dilemmas of factor price theory.[24]: 19

Some have questioned the practical applicability of the general equilibrium approach based on the possibility of non-uniqueness of equilibria.

Stability

In a typical general equilibrium model the prices that prevail "when the dust settles" are simply those that coordinate the demands of various consumers for various goods. But this raises the question of how these prices and allocations have been arrived at, and whether any (temporary) shock to the economy will cause it to converge back to the same outcome that prevailed before the shock. This is the question of stability of the equilibrium, and it can be readily seen that it is related to the question of uniqueness. If there are multiple equilibria, then some of them will be unstable. Then, if an equilibrium is unstable and there is a shock, the economy will wind up at a different set of allocations and prices once the convergence process terminates. However, stability depends not only on the number of equilibria but also on the type of the process that guides price changes (for a specific type of price adjustment process see Walrasian auction). Consequently, some researchers have focused on plausible adjustment processes that guarantee system stability, i.e., that guarantee convergence of prices and allocations to some equilibrium. When more than one stable equilibrium exists, where one ends up will depend on where one begins. The most conclusive stability theorems for a typical general equilibrium model concern local stability.

Unresolved problems in general equilibrium

Research building on the Arrow–Debreu–McKenzie model has revealed some problems with the model. The Sonnenschein–Mantel–Debreu results show that, essentially, any restrictions on the shape of excess demand functions are stringent. Some think this implies that the Arrow–Debreu model lacks empirical content.[25] Therefore, an unsolved problem is:

- Are Arrow–Debreu–McKenzie equilibria stable and unique?

A model organized around the tâtonnement process has been said to be a model of a centrally planned economy, not a decentralized market economy. Some research has tried to develop general equilibrium models with other processes. In particular, some economists have developed models in which agents can trade at out-of-equilibrium prices and such trades can affect the equilibria to which the economy tends. Particularly noteworthy are the Hahn process, the Edgeworth process and the Fisher process.

The data determining Arrow–Debreu equilibria include initial endowments of capital goods. If production and trade occur out of equilibrium, these endowments will be changed, further complicating the picture.

In a real economy, however, trading, as well as production and consumption, goes on out of equilibrium. It follows that, in the course of convergence to equilibrium (assuming that occurs), endowments change. In turn this changes the set of equilibria. Put more succinctly, the set of equilibria is path dependent... [This path dependence] makes the calculation of equilibria corresponding to the initial state of the system essentially irrelevant. What matters is the equilibrium that the economy will reach from given initial endowments, not the equilibrium that it would have been in, given initial endowments, had prices happened to be just right. – (Franklin Fisher).[26]

The Arrow–Debreu model in which all trade occurs in futures contracts at time zero requires a very large number of markets to exist. It is equivalent under complete markets to a sequential equilibrium concept in which spot markets for goods and assets open at each date-state event (they are not equivalent under incomplete markets); market clearing then requires that the entire sequence of prices clears all markets at all times. A generalization of the sequential market arrangement is the temporary equilibrium structure, where market clearing at a point in time is conditional on expectations of future prices which need not be market clearing ones.

Although the Arrow–Debreu–McKenzie model is set out in terms of some arbitrary numéraire, the model does not encompass money. Frank Hahn, for example, has investigated whether general equilibrium models can be developed in which money enters in some essential way. One of the essential questions he introduces, often referred to as Hahn's problem, is: "Can one construct an equilibrium where money has value?" The goal is to find models in which the existence of money can alter the equilibrium solutions, perhaps because the initial position of agents depends on monetary prices.

Some critics of general equilibrium modeling contend that much research in these models constitutes exercises in pure mathematics with no connection to actual economies. In a 1979 article, Nicholas Georgescu-Roegen complains: "There are endeavors that now pass for the most desirable kind of economic contributions although they are just plain mathematical exercises, not only without any economic substance but also without any mathematical value."[27] He cites as an example a paper that assumes more traders in existence than there are points in the set of real numbers.

Although modern models in general equilibrium theory demonstrate that under certain circumstances prices will indeed converge to equilibria, critics hold that the assumptions necessary for these results are extremely strong. As well as stringent restrictions on excess demand functions, the necessary assumptions include perfect rationality of individuals; complete information about all prices both now and in the future; and the conditions necessary for perfect competition. However, some results from experimental economics suggest that even in circumstances where there are few, imperfectly informed agents, the resulting prices and allocations may wind up resembling those of a perfectly competitive market (although certainly not a stable general equilibrium in all markets).[citation needed]

Frank Hahn defends general equilibrium modeling on the grounds that it provides a negative function. General equilibrium models show what the economy would have to be like for an unregulated economy to be Pareto efficient.[citation needed]

Computing general equilibrium

Until the 1970s general equilibrium analysis remained theoretical. With advances in computing power and the development of input–output tables, it became possible to model national economies, or even the world economy, and attempts were made to solve for general equilibrium prices and quantities empirically.

Applied general equilibrium (AGE) models were pioneered by Herbert Scarf in 1967, and offered a method for solving the Arrow–Debreu general equilibrium system numerically. This method was first implemented by John Shoven and John Whalley (students of Scarf at Yale) in 1972 and 1973, and AGE models remained popular through the 1970s.[28][29] In the 1980s, however, AGE models faded from popularity due to their inability to provide a precise solution and their high cost of computation.

Computable general equilibrium (CGE) models surpassed and replaced AGE models in the mid-1980s, as the CGE model was able to provide relatively quick and large computable models for a whole economy, and was the preferred method of governments and the World Bank. CGE models are heavily used today, and while 'AGE' and 'CGE' are used interchangeably in the literature, Scarf-type AGE models have not been constructed since the mid-1980s, and the current CGE literature is not based on Arrow–Debreu and general equilibrium theory as discussed in this article. CGE models, and what are today referred to as AGE models, are based on static, simultaneously solved, macro balancing equations (from the standard Keynesian macro model), giving a precise and explicitly computable result.[30]

Other schools

General equilibrium theory is a central point of contention and influence between the neoclassical school and other schools of economic thought, and different schools have varied views on general equilibrium theory. Some, such as the Keynesian and Post-Keynesian schools, strongly reject general equilibrium theory as "misleading" and "useless". Disequilibrium macroeconomics and different non-equilibrium approaches were developed as alternatives. Other schools, such as new classical macroeconomics, developed from general equilibrium theory.

Keynesian and Post-Keynesian

Keynesian and Post-Keynesian economists, and their underconsumptionist predecessors, criticize general equilibrium theory specifically, and as part of criticisms of neoclassical economics generally. Specifically, they argue that general equilibrium theory is neither accurate nor useful, that economies are not in equilibrium, that equilibrium may be slow and painful to achieve, that modeling by equilibrium is "misleading", and that the resulting theory is not a useful guide, particularly for understanding economic crises.[31][32]

Let us beware of this dangerous theory of equilibrium which is supposed to be automatically established. A certain kind of equilibrium, it is true, is reestablished in the long run, but it is after a frightful amount of suffering.

— Simonde de Sismondi, New Principles of Political Economy, vol. 1, 1819, pp. 20-21.

The long run is a misleading guide to current affairs. In the long run we are all dead. Economists set themselves too easy, too useless a task if in tempestuous seasons they can only tell us that when the storm is past the ocean is flat again.

— John Maynard Keynes, A Tract on Monetary Reform, 1923, ch. 3

It is as absurd to assume that, for any long period of time, the variables in the economic organization, or any part of them, will "stay put," in perfect equilibrium, as to assume that the Atlantic Ocean can ever be without a wave.

— Irving Fisher, The Debt-Deflation Theory of Great Depressions, 1933, p. 339

Robert Clower and others have argued for a reformulation of theory toward disequilibrium analysis to incorporate how monetary exchange fundamentally alters the representation of an economy as though a barter system.[33]

New classical macroeconomics

While general equilibrium theory and neoclassical economics generally were originally microeconomic theories, new classical macroeconomics builds a macroeconomic theory on these bases. In new classical models, the macroeconomy is assumed to be at its unique equilibrium, with full employment and potential output, and this equilibrium is assumed always to have been achieved via price and wage adjustment (market clearing). The best-known such model is real business-cycle theory, in which business cycles are considered to be largely due to changes in the real economy: unemployment is not due to the failure of the market to achieve potential output, but to equilibrium potential output having fallen and equilibrium unemployment having risen.

Socialist economics

Within socialist economics, a sustained critique of general equilibrium theory (and neoclassical economics generally) is given in Anti-Equilibrium,[34] based on the experiences of János Kornai with the failures of Communist central planning, although Michael Albert and Robin Hahnel later based their Parecon model on the same theory.[35]

New structural economics

The structural equilibrium model is a matrix-form computable general equilibrium model in new structural economics.[36][37] This model is an extension of John von Neumann's general equilibrium model (see Computable general equilibrium for details). Its computation can be performed using the R package GE.[38] The structural equilibrium model can be used for intertemporal equilibrium analysis, where time is treated as a label that differentiates between types of commodities and firms, meaning commodities are distinguished by when they are delivered and firms are distinguished by when they produce. The model can include factors such as taxes, money, endogenous production functions, and endogenous institutions. The structural equilibrium model can include excess tax burdens, meaning that the equilibrium in the model may not be Pareto optimal. When production functions and/or economic institutions are treated as endogenous variables, the general equilibrium is referred to as structural equilibrium.

Notes

- ^ McKenzie, Lionel W. (2008). "General Equilibrium". The New Palgrave Dictionary of Economics. pp. 1–27. doi:10.1057/978-1-349-95121-5_933-2. ISBN 978-1-349-95121-5.

- ^ Walras, Léon (1954) [1877]. Elements of Pure Economics. Irwin. ISBN 978-0-678-06028-5.

- ^ a b Hayek, F. A. (1945). "The Use of Knowledge in Society". The American Economic Review. 35 (4): 519–530. ISSN 0002-8282.

- ^ a b "The Use of Knowledge in Society Quotes by Friedrich A. Hayek". www.goodreads.com. Retrieved 2025-10-24.

- ^ "The Use of Knowledge in Society", Wikipedia, 2025-07-29, retrieved 2025-10-24

- ^ Eatwell, John (1987). "Walras's Theory of Capital". In Eatwell, J.; Milgate, M.; Newman, P. (eds.). The New Palgrave: A Dictionary of Economics. London: Macmillan.

- ^ Jaffe, William (1953). "Walras's Theory of Capital Formation in the Framework of his Theory of General Equilibrium". Économie Appliquée. 6: 289–317.

- ^ Düppe, Till; Weintraub, E. Roy. "Losing Equilibrium: On the Existence of Abraham Wald's Fixed-Point Proof of 1935". History of Political Economy. 48: 635–655.

- ^ Weintraub, E. Roy (1985). General Equilibrium Analysis: Studies in Appraisal. Cambridge University Press.

- ^ Arrow, K. J.; Debreu, G. (1954). "The Existence of an Equilibrium for a Competitive Economy". Econometrica. 22 (3): 265–290. doi:10.2307/1907353. JSTOR 1907353.

- ^ McKenzie, Lionel W. (1959). "On the Existence of General Equilibrium for a Competitive Economy". Econometrica. 27 (1): 54–71. doi:10.2307/1907777. JSTOR 1907777.

- ^ Debreu, G. (1959). Theory of Value. New York: Wiley. p. 98.

- ^ McKenzie, Lionel W. (1954). "On Equilibrium in Graham's Model of World Trade and Other Competitive Systems". Econometrica. 22 (2): 147–161. doi:10.2307/1907539. JSTOR 1907539.

- ^ Arrow, K. J.; Debreu, G. (1954). "Existence of an equilibrium for a competitive economy". Econometrica. 22 (3): 265–290. doi:10.2307/1907353. JSTOR 1907353.

- ^ Uzawa, Hirofumi (1962). "Walras' Existence Theorem and Brouwer's Fixed-Point Theorem". Economic Studies Quarterly. 13 (1): 59–62. doi:10.11398/economics1950.13.1_59.

- ^ Starr, Ross M. (1969). "Quasi-equilibria in markets with non-convex preferences" (PDF). Econometrica. 37 (1): 25–38. CiteSeerX 10.1.1.297.8498. doi:10.2307/1909201. JSTOR 1909201. Archived (PDF) from the original on 2017-08-09.

- ^ a b c Guesnerie, Roger (1989). "First-best allocation of resources with nonconvexities in production". In Bernard Cornet and Henry Tulkens (ed.). Contributions to Operations Research and Economics: The twentieth anniversary of CORE (Papers from the symposium held in Louvain-la-Neuve, January 1987). Cambridge, MA: MIT Press. pp. 99–143. ISBN 978-0-262-03149-3. MR 1104662.

- ^ See pages 392–399 for the Shapley-Folkman-Starr results and see p. 188 for applications in Arrow, Kenneth J.; Hahn, Frank H. (1971). "Appendix B: Convex and related sets". General Competitive Analysis. Mathematical economics texts [Advanced textbooks in economics]. San Francisco: Holden-Day [North-Holland]. pp. 375–401. ISBN 978-0-444-85497-1. MR 0439057.

- ^ Pages 52–55 with applications on pages 145–146, 152–153, and 274–275 in Mas-Colell, Andreu (1985). "1.L Averages of sets". The Theory of General Economic Equilibrium: A Differentiable Approach. Econometric Society Monographs. Cambridge University Press. ISBN 978-0-521-26514-0. MR 1113262.

- ^ Hildenbrand, Werner (1974). Core and Equilibria of a Large Economy. Princeton Studies in Mathematical Economics. Princeton, New Jersey: Princeton University Press. pp. viii+251. ISBN 978-0-691-04189-6. MR 0389160.

- ^ See section 7.2 Convexification by numbers in Salanié: Salanié, Bernard (2000). "7 Nonconvexities". Microeconomics of market failures (English translation of the (1998) French Microéconomie: Les défaillances du marché (Economica, Paris) ed.). Cambridge, Massachusetts: MIT Press. pp. 107–125. ISBN 978-0-262-19443-3.

- ^ An "informal" presentation appears in pages 63–65 of Laffont: Laffont, Jean-Jacques (1988). "3 Nonconvexities". Fundamentals of Public Economics. MIT. ISBN 978-0-585-13445-1.

- ^ Toda, Alexis Akira; Walsh, Kieran James (2024). "Recent Advances on Uniqueness of Competitive Equilibrium". Journal of Mathematical Economics. 113 103008. doi:10.1016/j.jmateco.2024.103008.

- ^ a b c Mandler, Michael (1999). Dilemmas in Economic Theory: Persisting Foundational Problems of Microeconomics. Oxford: Oxford University Press. ISBN 978-0-19-510087-7.

- ^ Turab Rizvi, S. Abu (2006). "The Sonnenschein-Mantel-Debreu Results after Thirty Years". History of Political Economy. 38 (Suppl. 1): 228–245. doi:10.1215/00182702-2005-024.

- ^ As quoted by Petri, Fabio (2004). General Equilibrium, Capital, and Macroeconomics: A Key to Recent Controversies in Equilibrium Theory. Cheltenham, UK: Edward Elgar. ISBN 978-1-84376-829-6.

- ^ Georgescu-Roegen, Nicholas (1979). "Methods in Economic Science". Journal of Economic Issues. 13 (2): 317–328. doi:10.1080/00213624.1979.11503640. JSTOR 4224809.

- ^ Shoven, J. B.; Whalley, J. (1972). "A General Equilibrium Calculation of the Effects of Differential Taxation of Income from Capital in the U.S." (PDF). Journal of Public Economics. 1 (3–4): 281–321. doi:10.1016/0047-2727(72)90009-6. Archived (PDF) from the original on 2015-12-18.

- ^ Shoven, J. B.; Whalley, J. (1973). "General Equilibrium with Taxes: A Computational Procedure and an Existence Proof". The Review of Economic Studies. 40 (4): 475–489. doi:10.2307/2296582. JSTOR 2296582.

- ^ Mitra-Kahn, Benjamin H. (2008). "Debunking the Myths of Computable General Equilibrium Models" (PDF). Schwarz Center for Economic Policy Analysis Working Paper 01-2008. Archived (PDF) from the original on 2016-06-16.

- ^ Keen, Steve (May 4, 2009). "Debtwatch No 34: The Confidence Trick".

- ^ Keen, Steve (November 30, 2008). "DebtWatch No 29 December 2008".

- ^ • Robert W. Clower (1965). "The Keynesian Counter-Revolution: A Theoretical Appraisal", in F. H. Hahn and F. P. R. Brechling, eds., The Theory of Interest Rates. Macmillan. Reprinted in Clower (1987), Money and Markets. Cambridge. pp. 34–58.

• _____ (1967). "A Reconsideration of the Microfoundations of Monetary Theory", Western Economic Journal, 6(1), pp. 1–8.

• _____ and Peter W. Howitt (1996). "Taking Markets Seriously: Groundwork for a Post-Walrasian Macroeconomics", in David Colander, ed., Beyond Microfoundations, pp. 21–37.

• Herschel I. Grossman (1971). "Money, Interest, and Prices in Market Disequilibrium", Journal of Political Economy, 79(5), pp. 943–961.

• Jean-Pascal Bénassy (1990). "Non-Walrasian Equilibria, Money, and Macroeconomics", Handbook of Monetary Economics, v. 1, ch. 4, pp. 103–169.

• _____ (1993). "Nonclearing Markets: Microeconomic Concepts and Macroeconomic Applications", Journal of Economic Literature, 31(2), pp. 732–761.

• _____ (2008). "non-clearing markets in general equilibrium", in The New Palgrave Dictionary of Economics, 2nd Edition.

- ^ Kornai, János (1971). Anti-Equilibrium: On Economic Systems Theory and the Tasks of Research. Amsterdam: North-Holland. ISBN 978-0-7204-3055-4.

- ^ Albert, Michael; Hahnel, Robin (1991). The Political Economy of Participatory Economics. Princeton: Princeton University Press. p. 7.

- ^ "New Structural Economics". Institute of New Structural Economics. Retrieved 2024-12-08.

- ^ Li, Wu (2019). General Equilibrium and Structural Dynamics: Perspectives of New Structural Economics (in Chinese). Beijing: Economic Science Press. ISBN 978-7-5218-0422-5.

- ^ "CRAN: Package GE". The Comprehensive R Archive Network. Retrieved 2024-12-08.

Further reading

- Black, Fischer (1995). Exploring General Equilibrium. Cambridge, Massachusetts: MIT Press. ISBN 978-0-262-02382-5.

- Dixon, Peter B.; Parmenter, Brian R.; Powell, Alan A.; Wilcoxen, Peter J.; Pearson, Ken R. (1992). Notes and Problems in Applied General Equilibrium Economics. North-Holland. ISBN 978-0-444-88449-7.

- ——, with Rimmer, Maureen T. (2002). Dynamic General Equilibrium Modelling for Forecasting and Policy: A Practical Guide and Documentation of MONASH. Contributions to economic analysis (256). Amsterdam: Elsevier. ISBN 0-444-51260-8.

- ——, with Jorgenson, Dale W., eds. (2012). Handbook of Computable General Equilibrium Modelling (2 volumes). North-Holland.

- ——, with Jerie, Michael; Rimmer, Maureen T. (2018). Trade Theory in Computable General Equilibrium Models. Advances in Applied General Equilibrium Modeling. Singapore: Springer Nature. ISBN 978-981-10-8323-5.

- Eaton, B. Curtis; Eaton, Diane F.; Allen, Douglas W. (2009). "Competitive General Equilibrium". Microeconomics: Theory with Applications (Seventh ed.). Toronto: Pearson Prentice Hall. ISBN 978-0-13-206424-8.

- Geanakoplos, John (1987). "Arrow-Debreu model of general equilibrium". The New Palgrave: A Dictionary of Economics. Vol. 1. pp. 116–124.

- Grandmont, J. M. (1977). "Temporary General Equilibrium Theory". Econometrica. 45 (3): 535–572. doi:10.2307/1911674. JSTOR 1911674.

- Hicks, John R. (1946) [1939]. Value and Capital (Second ed.). Oxford: Clarendon Press.

- Kubler, Felix (2008). "Computation of general equilibria (new developments)". The New Palgrave Dictionary of Economics (Second ed.).

- Mas-Colell, A.; Whinston, M.; Green, J. (1995). Microeconomic Theory. New York: Oxford University Press. ISBN 978-0-19-507340-9.

- McKenzie, Lionel W. (1981). "The Classical Theorem on Existence of Competitive Equilibrium" (PDF). Econometrica. 49 (4): 819–841. doi:10.2307/1912505. JSTOR 1912505. Archived (PDF) from the original on 2012-03-15.

- _____ (1983). "Turnpike Theory, Discounted Utility, and the von Neumann Facet". Journal of Economic Theory. 30 (2): 330–352. doi:10.1016/0022-0531(83)90111-4.

- _____ (1987). "Turnpike theory". The New Palgrave: A Dictionary of Economics. Vol. 4. pp. 712–720.

- _____ (1999). "Equilibrium, Trade, and Capital Accumulation". The Japanese Economic Review. 50 (4): 371–397. doi:10.1111/1468-5876.00128.

- Samuelson, Paul A. (1941). "The Stability of Equilibrium: Comparative Statics and Dynamics". Econometrica. 9 (2): 97–120. doi:10.2307/1906872. JSTOR 1906872.

- _____ (1983) [1947]. Foundations of Economic Analysis (Enlarged ed.). Harvard University Press. ISBN 978-0-674-31301-9.

- Scarf, Herbert E. (2008). "Computation of general equilibria". The New Palgrave Dictionary of Economics (2nd ed.).

- Schumpeter, Joseph A. (1954). History of Economic Analysis. Oxford University Press.

General equilibrium theory

General equilibrium theory is a mathematical framework in economics that analyzes the simultaneous interactions of supply, demand, and prices across all markets in an economy to determine a state where no agent can improve their welfare by unilateral action, assuming perfect competition, rational agents, and complete markets. Originating with Léon Walras's Éléments d'économie politique pure in 1874, the theory posits that an economy can reach a configuration where aggregate excess demands are zero at some price vector, with agents maximizing utility subject to budget constraints.[1]
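
The idea of a price vector at which aggregate excess demands are zero can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and does not come from the text: a two-good pure exchange economy, Cobb-Douglas utilities, the sample endowments in `ECONOMY`, good 2 as numéraire, and the helper names `excess_demand` and `solve_price`. Bisection is used only because excess demand for good 1 is decreasing in its price in this particular setup.

```python
from typing import List, Tuple

# Each consumer is (alpha, endowment of good 1, endowment of good 2), with
# Cobb-Douglas utility x1**alpha * x2**(1 - alpha). Illustrative values only.
Consumer = Tuple[float, float, float]
ECONOMY: List[Consumer] = [(0.3, 1.0, 0.0), (0.6, 0.0, 1.0)]


def excess_demand(p1: float, consumers: List[Consumer], p2: float = 1.0) -> Tuple[float, float]:
    """Aggregate excess demand (z1, z2) at prices (p1, p2)."""
    z1 = z2 = 0.0
    for alpha, e1, e2 in consumers:
        wealth = p1 * e1 + p2 * e2               # value of the endowment
        z1 += alpha * wealth / p1 - e1           # Cobb-Douglas demand minus endowment
        z2 += (1.0 - alpha) * wealth / p2 - e2
    return z1, z2


def solve_price(consumers: List[Consumer], lo: float = 1e-6, hi: float = 1e6) -> float:
    """Find p1 (with p2 = 1) at which the market for good 1 clears, by bisection."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if excess_demand(mid, consumers)[0] > 0.0:
            lo = mid                             # good 1 is too cheap: raise its price
        else:
            hi = mid                             # good 1 is too expensive: lower it
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    p_star = solve_price(ECONOMY)
    print("equilibrium relative price:", p_star)
    print("excess demands at equilibrium:", excess_demand(p_star, ECONOMY))
```

Only one market is cleared explicitly. Because every consumer spends exactly the value of their endowment, the value of aggregate excess demand is zero at any prices (Walras' law), so the second market clears automatically.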

Key developments include the Arrow-Debreu model of 1954, which extended Walrasian ideas to incorporate production, time, and uncertainty through contingent commodities, proving the existence of competitive equilibria under convexity assumptions via fixed-point theorems like Brouwer's or Kakutani's. This model supports the first fundamental theorem of welfare economics, stating that every competitive equilibrium allocation is Pareto efficient, and the second theorem, asserting that any Pareto efficient allocation can be decentralized as a competitive equilibrium with suitable initial endowments or transfers. These results provide a theoretical justification for markets as mechanisms for efficient resource allocation absent externalities or market failures.[1]
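
The first welfare theorem can be checked numerically in a single example, which is of course not a proof. The sketch below assumes a two-consumer, two-good Cobb-Douglas exchange economy with good 2 as numéraire; the values in `ECONOMY` and the helper names are hypothetical. It uses the closed-form equilibrium price for this special case and verifies that the allocation is feasible and that marginal rates of substitution coincide across consumers, which is the standard first-order condition for an interior Pareto-efficient allocation under smooth convex preferences.

```python
from typing import List, Tuple

# (alpha, endowment of good 1, endowment of good 2); illustrative values only.
Consumer = Tuple[float, float, float]
ECONOMY: List[Consumer] = [(0.3, 1.0, 0.0), (0.6, 0.0, 1.0)]


def equilibrium_price(consumers: List[Consumer]) -> float:
    """Closed-form market-clearing price of good 1 (good 2 is the numéraire)."""
    numerator = sum(alpha * e2 for alpha, _, e2 in consumers)
    denominator = sum((1.0 - alpha) * e1 for alpha, e1, _ in consumers)
    return numerator / denominator


def demand(alpha: float, e1: float, e2: float, p1: float) -> Tuple[float, float]:
    """Cobb-Douglas demand: expenditure shares alpha and 1 - alpha of wealth."""
    wealth = p1 * e1 + e2
    return alpha * wealth / p1, (1.0 - alpha) * wealth


def mrs(alpha: float, x1: float, x2: float) -> float:
    """Marginal rate of substitution of good 1 for good 2."""
    return alpha * x2 / ((1.0 - alpha) * x1)


if __name__ == "__main__":
    p = equilibrium_price(ECONOMY)
    allocation = [demand(a, e1, e2, p) for a, e1, e2 in ECONOMY]
    rates = [mrs(a, x1, x2) for (a, _, _), (x1, x2) in zip(ECONOMY, allocation)]
    print("price:", p)
    print("allocation:", allocation)
    print("marginal rates of substitution:", rates)
```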

Despite its foundational role, general equilibrium theory has notable limitations and controversies, particularly the Sonnenschein-Mantel-Debreu (SMD) theorem from the 1970s. The theorem demonstrates that aggregate excess demand functions need satisfy only weak properties (homogeneity, Walras' law, and continuity), which implies that virtually any such function can arise from optimizing individual behavior. As a result, uniqueness of equilibrium and strong comparative statics predictions cannot be guaranteed in general.[2] This indeterminacy undermines the theory's empirical testability and predictive power, as real economies exhibit path dependence, frictions, and disequilibria not captured by static models.[2] Empirical applications remain challenging, with validations often relying on computable general equilibrium models for policy simulations rather than direct falsification, highlighting a disconnect between the theory's mathematical rigor and observable market dynamics.[3]
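
For reference, the three properties of aggregate excess demand named in connection with the SMD theorem can be written out as follows. The notation is an assumption introduced here, not taken from the text: z(p) denotes the aggregate excess demand vector at a strictly positive price vector p, and p* denotes an equilibrium price vector.

```latex
\begin{align*}
z(\lambda p) &= z(p) \quad \text{for all } \lambda > 0
  && \text{(homogeneity of degree zero)} \\
p \cdot z(p) &= 0
  && \text{(Walras' law)} \\
z &\ \text{is continuous on } \{\, p : p \gg 0 \,\}
  && \text{(continuity)} \\
z(p^{*}) &= 0
  && \text{(equilibrium condition)}
\end{align*}
```

The first three lines restrict the shape of z only weakly. The last line is the condition an equilibrium must satisfy, and nothing in the first three rules out several distinct price vectors satisfying it.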