Science

Science is a systematic discipline that builds and organises knowledge in the form of testable hypotheses and predictions about the universe.[1][2] Modern science is typically divided into two – or three – major branches:[3] the natural sciences, which study the physical world, and the social sciences, which study individuals and societies.[4][5] Although referred to as the formal sciences, logic, mathematics, and theoretical computer science are typically regarded as separate because they rely on deductive reasoning instead of the scientific method as their main methodology.[6][7][8][9] Meanwhile, applied sciences are disciplines that use scientific knowledge for practical purposes, such as engineering and medicine.[10][11][12]

The history of science spans the majority of the historical record, with the earliest identifiable predecessors to modern science dating to the Bronze Age in Egypt and Mesopotamia (c. 3000–1200 BCE). Their contributions to mathematics, astronomy, and medicine entered and shaped the Greek natural philosophy of classical antiquity and later medieval scholarship, whereby formal attempts were made to provide explanations of events in the physical world based on natural causes; while further advancements, including the introduction of the Hindu–Arabic numeral system, were made during the Golden Age of India and Islamic Golden Age.[13]: 12 [14][15][16][13]: 163–192 The recovery and assimilation of Greek works and Islamic inquiries into Western Europe during the Renaissance revived natural philosophy,[13]: 193–224, 225–253 [17] which was later transformed by the Scientific Revolution that began in the 16th century[18] as new ideas and discoveries departed from previous Greek conceptions and traditions.[13]: 357–368 [19] The scientific method soon played a greater role in the acquisition of knowledge, and in the 19th century, many of the institutional and professional features of science began to take shape,[20][21] along with the changing of "natural philosophy" to "natural science".[22]

New knowledge in science is advanced by research from scientists who are motivated by curiosity about the world and a desire to solve problems.[23][24] Contemporary scientific research is highly collaborative and is usually done by teams in academic and research institutions,[25] government agencies,[13]: 163–192 and companies.[26] The practical impact of their work has led to the emergence of science policies that seek to influence the scientific enterprise by prioritising the ethical and moral development of commercial products, armaments, health care, public infrastructure, and environmental protection.

Etymology

The word science has been used in Middle English since the 14th century in the sense of "the state of knowing". The word was borrowed from the Anglo-Norman language as the suffix -cience, which was borrowed from the Latin word scientia, meaning "knowledge, awareness, understanding", a noun derivative of sciens meaning "knowing", itself the present active participle of sciō, "to know".[27]

There are many hypotheses for science's ultimate word origin. According to Michiel de Vaan, Dutch linguist and Indo-Europeanist, sciō may have its origin in the Proto-Italic language as *skije- or *skijo- meaning "to know", which may originate from Proto-Indo-European language as *skh1-ie, *skh1-io meaning "to incise". The Lexikon der indogermanischen Verben proposed sciō is a back-formation of nescīre, meaning "to not know, be unfamiliar with", which may derive from Proto-Indo-European *sekH- in Latin secāre, or *skh2- from *sḱʰeh2(i)- meaning "to cut".[28]

In the past, science was a synonym for "knowledge" or "study", in keeping with its Latin origin. A person who conducted scientific research was called a "natural philosopher" or "man of science".[29] In 1834, William Whewell introduced the term scientist in a review of Mary Somerville's book On the Connexion of the Physical Sciences,[30] crediting it to "some ingenious gentleman" (possibly himself).[31]

History

Early history

Science has no single origin. Rather, scientific thinking emerged gradually over the course of tens of thousands of years,[32][33] taking different forms around the world, and few details are known about the very earliest developments. Women likely played a central role in prehistoric science,[34] as did religious rituals.[35] Some scholars use the term "protoscience" to label activities in the past that resemble modern science in some but not all features;[36][37][38] however, this label has also been criticised as denigrating,[39] or too suggestive of presentism, thinking about those activities only in relation to modern categories.[40]

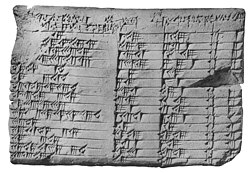

Direct evidence for scientific processes becomes clearer with the advent of writing systems in the Bronze Age civilisations of Ancient Egypt and Mesopotamia (c. 3000–1200 BCE), creating the earliest written records in the history of science.[13]: 12–15 [14] Although the words and concepts of "science" and "nature" were not part of the conceptual landscape at the time, the ancient Egyptians and Mesopotamians made contributions that would later find a place in Greek and medieval science: mathematics, astronomy, and medicine.[41][13]: 12 From the 3rd millennium BCE, the ancient Egyptians developed a non-positional decimal numbering system,[42] solved practical problems using geometry,[43] and developed a calendar.[44] Their healing therapies involved drug treatments and the supernatural, such as prayers, incantations, and rituals.[13]: 9

The ancient Mesopotamians used knowledge about the properties of various natural chemicals for manufacturing pottery, faience, glass, soap, metals, lime plaster, and waterproofing.[45] They studied animal physiology, anatomy, behaviour, and astrology for divinatory purposes.[46] The Mesopotamians had an intense interest in medicine and the earliest medical prescriptions appeared in Sumerian during the Third Dynasty of Ur.[45][47] They seem to have studied scientific subjects which had practical or religious applications and had little interest in satisfying curiosity.[45]

Classical antiquity

In classical antiquity, there is no real ancient analogue of a modern scientist. Instead, well-educated, usually upper-class, and almost universally male individuals performed various investigations into nature whenever they could afford the time.[48] Before the invention or discovery of the concept of phusis or nature by the pre-Socratic philosophers, the same words tend to be used to describe the natural "way" in which a plant grows,[49] and the "way" in which, for example, one tribe worships a particular god. For this reason, it is claimed that these men were the first philosophers in the strict sense and the first to clearly distinguish "nature" and "convention".[50]

The early Greek philosophers of the Milesian school, which was founded by Thales of Miletus and later continued by his successors Anaximander and Anaximenes, were the first to attempt to explain natural phenomena without relying on the supernatural.[51] The Pythagoreans developed a complex number philosophy[52]: 467–468 and contributed significantly to the development of mathematical science.[52]: 465 The theory of atoms was developed by the Greek philosopher Leucippus and his student Democritus.[53][54] Later, Epicurus would develop a full natural cosmology based on atomism, and would adopt a "canon" (ruler, standard) which established physical criteria or standards of scientific truth.[55] The Greek doctor Hippocrates established the tradition of systematic medical science[56][57] and is known as "The Father of Medicine".[58]

A turning point in the history of early philosophical science was Socrates' example of applying philosophy to the study of human matters, including human nature, the nature of political communities, and human knowledge itself. The Socratic method as documented by Plato's dialogues is a dialectic method of hypothesis elimination: better hypotheses are found by steadily identifying and eliminating those that lead to contradictions. The Socratic method searches for general commonly held truths that shape beliefs and scrutinises them for consistency.[59] Socrates criticised the older type of study of physics as too purely speculative and lacking in self-criticism.[60]

In the 4th century BCE, Aristotle created a systematic programme of teleological philosophy.[61] In the 3rd century BCE, Greek astronomer Aristarchus of Samos was the first to propose a heliocentric model of the universe, with the Sun at the centre and all the planets orbiting it.[62] Aristarchus's model was widely rejected because it was believed to violate the laws of physics,[62] while Ptolemy's Almagest, which contains a geocentric description of the Solar System, was accepted through the early Renaissance instead.[63][64] The inventor and mathematician Archimedes of Syracuse made major contributions to the beginnings of calculus.[65] Pliny the Elder was a Roman writer and polymath, who wrote the seminal encyclopaedia Natural History.[66][67][68]

Positional notation for representing numbers likely emerged between the 3rd and 5th centuries CE along Indian trade routes. This numeral system made efficient arithmetic operations more accessible and would eventually become standard for mathematics worldwide.[69]
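As a rough illustration of why positional notation simplifies arithmetic, the sketch below evaluates a digit sequence by repeatedly multiplying by the base and adding the next digit. The function names `from_digits` and `to_digits` are hypothetical and chosen only for this example; it shows the general idea of place value and is not a reconstruction of any historical procedure.

```python
def from_digits(digits, base=10):
    """Evaluate a digit sequence (most significant digit first)."""
    value = 0
    for d in digits:
        if not 0 <= d < base:
            raise ValueError(f"digit {d} is out of range for base {base}")
        # Shift everything one place to the left, then add the new digit.
        value = value * base + d
    return value


def to_digits(value, base=10):
    """Split a non-negative integer into its positional digits."""
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return [0]
    digits = []
    while value > 0:
        value, remainder = divmod(value, base)
        digits.append(remainder)
    return digits[::-1]  # most significant digit first


print(from_digits([4, 0, 7]))  # the zero holds the empty tens place
print(to_digits(407))
```

The same ten symbols are reused in every place, and a zero marks an empty place, so a single short loop handles numbers of any size.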

Middle Ages

Due to the collapse of the Western Roman Empire, the 5th century saw an intellectual decline, with knowledge of classical Greek conceptions of the world deteriorating in Western Europe.[13]: 194 Latin encyclopaedists of the period such as Isidore of Seville preserved the majority of general ancient knowledge.[70] In contrast, because the Byzantine Empire resisted attacks from invaders, they were able to preserve and improve prior learning.[13]: 159 John Philoponus, a Byzantine scholar in the 6th century, started to question Aristotle's teaching of physics, introducing the theory of impetus.[13]: 307, 311, 363, 402 His criticism served as an inspiration to medieval scholars and Galileo Galilei, who extensively cited his works ten centuries later.[13]: 307–308 [71]

During late antiquity and the Early Middle Ages, natural phenomena were mainly examined via the Aristotelian approach. The approach includes Aristotle's four causes: material, formal, moving, and final cause.[72] Many Greek classical texts were preserved by the Byzantine Empire and Arabic translations were made by Christians, mainly Nestorians and Miaphysites. Under the Abbasids, these Arabic translations were later improved and developed by Arabic scientists.[73] By the 6th and 7th centuries, the neighbouring Sasanian Empire established the medical Academy of Gondishapur, which was considered by Greek, Syriac, and Persian physicians as the most important medical hub of the ancient world.[74]

Islamic study of Aristotelianism flourished in the House of Wisdom, established in the Abbasid capital of Baghdad, Iraq,[75][76] until the Mongol invasions in the 13th century. Ibn al-Haytham, better known as Alhazen, used controlled experiments in his optical study.[a][78][79] Avicenna's compilation of The Canon of Medicine, a medical encyclopaedia, is considered to be one of the most important publications in medicine and was used until the 18th century.[80]

By the 11th century most of Europe had become Christian,[13]: 204 and in 1088, the University of Bologna emerged as the first university in Europe.[81] As such, demand for Latin translation of ancient and scientific texts grew,[13]: 204 a major contributor to the Renaissance of the 12th century. Renaissance scholasticism in western Europe flourished, with experiments done by observing, describing, and classifying subjects in nature.[82] In the 13th century, medical teachers and students at Bologna began opening human bodies, leading to the first anatomy textbook based on human dissection by Mondino de Luzzi.[83]

Renaissance

New developments in optics played a role in the inception of the Renaissance, both by challenging long-held metaphysical ideas on perception, as well as by contributing to the improvement and development of technology such as the camera obscura and the telescope. At the start of the Renaissance, Roger Bacon, Vitello, and John Peckham each built up a scholastic ontology upon a causal chain beginning with sensation, perception, and finally apperception of the individual and universal forms of Aristotle.[77]: Book I A model of vision later known as perspectivism was exploited and studied by the artists of the Renaissance. This theory uses only three of Aristotle's four causes: formal, material, and final.[84]

In the 16th century, Nicolaus Copernicus formulated a heliocentric model of the Solar System, stating that the planets revolve around the Sun, instead of the geocentric model where the planets and the Sun revolve around the Earth. This was based on a theorem that the orbital periods of the planets are longer as their orbs are farther from the centre of motion, which he found not to agree with Ptolemy's model.[85]

Johannes Kepler and others challenged the notion that the only function of the eye is perception, and shifted the main focus in optics from the eye to the propagation of light.[84][86] Kepler is best known, however, for improving Copernicus' heliocentric model through the discovery of Kepler's laws of planetary motion. Kepler did not reject Aristotelian metaphysics and described his work as a search for the Harmony of the Spheres.[87] Galileo had made significant contributions to astronomy, physics and engineering. However, he became persecuted after Pope Urban VIII sentenced him for writing about the heliocentric model.[88]

The printing press was widely used to publish scholarly arguments, including some that disagreed widely with contemporary ideas of nature.[89] Francis Bacon and René Descartes published philosophical arguments in favour of a new type of non-Aristotelian science. Bacon emphasised the importance of experiment over contemplation, questioned the Aristotelian concepts of formal and final cause, and promoted the idea that science should study the laws of nature and the improvement of all human life.[90] Descartes emphasised individual thought and argued that mathematics rather than geometry should be used to study nature.[91]

Age of Enlightenment

At the start of the Age of Enlightenment, Isaac Newton formed the foundation of classical mechanics with his Philosophiæ Naturalis Principia Mathematica, greatly influencing future physicists.[92] Gottfried Wilhelm Leibniz incorporated terms from Aristotelian physics, now used in a new non-teleological way. This implied a shift in the view of objects: objects were now considered as having no innate goals. Leibniz assumed that different types of things all work according to the same general laws of nature, with no special formal or final causes.[93]

During this time the declared purpose and value of science became producing wealth and inventions that would improve human lives, in the materialistic sense of having more food, clothing, and other things. In Bacon's words, "the real and legitimate goal of sciences is the endowment of human life with new inventions and riches", and he discouraged scientists from pursuing intangible philosophical or spiritual ideas, which he believed contributed little to human happiness beyond "the fume of subtle, sublime or pleasing [speculation]".[94]

Science during the Enlightenment was dominated by scientific societies and academies,[95] which had largely replaced universities as centres of scientific research and development. Societies and academies were the backbones of the maturation of the scientific profession. Another important development was the popularisation of science among an increasingly literate population.[96] Enlightenment philosophers turned to a few of their scientific predecessors – Galileo, Kepler, Boyle, and Newton principally – as the guides to every physical and social field of the day.[97][98]

The 18th century saw significant advancements in the practice of medicine[99] and physics;[100] the development of biological taxonomy by Carl Linnaeus;[101] a new understanding of magnetism and electricity;[102] and the maturation of chemistry as a discipline.[103] Ideas on human nature, society, and economics evolved during the Enlightenment. Hume and other Scottish Enlightenment thinkers developed A Treatise of Human Nature, which was expressed historically in works by authors including James Burnett, Adam Ferguson, John Millar and William Robertson, all of whom merged a scientific study of how humans behaved in ancient and primitive cultures with a strong awareness of the determining forces of modernity.[104] Modern sociology largely originated from this movement.[105] In 1776, Adam Smith published The Wealth of Nations, which is often considered the first work on modern economics.[106]

19th century

During the 19th century, many distinguishing characteristics of contemporary modern science began to take shape. These included the transformation of the life and physical sciences; the frequent use of precision instruments; the emergence of terms such as "biologist", "physicist", and "scientist"; an increased professionalisation of those studying nature; scientists gaining cultural authority over many dimensions of society; the industrialisation of numerous countries; the thriving of popular science writings; and the emergence of science journals.[107] During the late 19th century, psychology emerged as a separate discipline from philosophy when Wilhelm Wundt founded the first laboratory for psychological research in 1879.[108]

In 1858, Charles Darwin and Alfred Russel Wallace independently proposed the theory of evolution by natural selection, which explained how different plants and animals originated and evolved. Their theory was set out in detail in Darwin's book On the Origin of Species, published in 1859.[109] Separately, Gregor Mendel presented his paper, "Experiments on Plant Hybridisation" in 1865,[110] which outlined the principles of biological inheritance, serving as the basis for modern genetics.[111]

Early in the 19th century John Dalton suggested the modern atomic theory, based on Democritus's original idea of indivisible particles called atoms.[112] The laws of conservation of energy, conservation of momentum and conservation of mass suggested a highly stable universe where there could be little loss of resources. However, with the advent of the steam engine and the Industrial Revolution there was an increased understanding that not all forms of energy have the same energy quality, that is, the same ease of conversion to useful work or to another form of energy.[113] This realisation led to the development of the laws of thermodynamics, in which the free energy of the universe is seen as constantly declining: the entropy of a closed universe increases over time.[b]

The electromagnetic theory was established in the 19th century by the works of Hans Christian Ørsted, André-Marie Ampère, Michael Faraday, James Clerk Maxwell, Oliver Heaviside, and Heinrich Hertz. The new theory raised questions that could not easily be answered using Newton's framework. The discovery of X-rays inspired the discovery of radioactivity by Henri Becquerel and Marie Curie in 1896.[116] Marie Curie then became the first person to win two Nobel Prizes.[117] In the next year came the discovery of the first subatomic particle, the electron.[118]

20th century

In the first half of the century the development of antibiotics and artificial fertilisers improved human living standards globally.[119][120] Harmful environmental issues such as ozone depletion, ocean acidification, eutrophication, and climate change came to the public's attention and caused the onset of environmental studies.[121]

During this period scientific experimentation grew increasingly large in scale and funding.[122] The extensive technological innovation stimulated by World War I, World War II, and the Cold War led to competitions between global powers, such as the Space Race and nuclear arms race.[123][124] Substantial international collaborations were also made, despite armed conflicts.[125]

In the late 20th century active recruitment of women and elimination of sex discrimination greatly increased the number of women scientists, but large gender disparities remained in some fields.[126] The discovery of the cosmic microwave background in 1964[127] led to a rejection of the steady-state model of the universe in favour of the Big Bang theory of Georges Lemaître.[128]

The century saw fundamental changes within science disciplines. Evolution became a unified theory in the early 20th century when the modern synthesis reconciled Darwinian evolution with classical genetics.[129] Albert Einstein's theory of relativity and the development of quantum mechanics complement classical mechanics to describe physics in extreme length, time and gravity.[130][131] Widespread use of integrated circuits in the last quarter of the 20th century combined with communications satellites led to a revolution in information technology and the rise of the global internet and mobile computing, including smartphones. The need for mass systematisation of long, intertwined causal chains and large amounts of data led to the rise of the fields of systems theory and computer-assisted scientific modelling.[132]

21st century

The Human Genome Project was completed in 2003 by identifying and mapping all of the genes of the human genome.[133] The first induced pluripotent human stem cells were made in 2006, allowing adult cells to be transformed into stem cells and turn into any cell type found in the body.[134] With the affirmation of the Higgs boson discovery in 2013, the last particle predicted by the Standard Model of particle physics was found.[135] In 2015, gravitational waves, predicted by general relativity a century before, were first observed.[136][137] In 2019, the international collaboration Event Horizon Telescope presented the first direct image of a black hole's accretion disc.[138]

Branches

Modern science is commonly divided into three major branches: natural science, social science, and formal science.[3] Each of these branches comprises various specialised yet overlapping scientific disciplines that often possess their own nomenclature and expertise.[139] Both natural and social sciences are empirical sciences,[140] as their knowledge is based on empirical observations and is capable of being tested for its validity by other researchers working under the same conditions.[141]

Natural

Natural science is the study of the physical world. It can be divided into two main branches: life science and physical science. These two branches may be further divided into more specialised disciplines. For example, physical science can be subdivided into physics, chemistry, astronomy, and earth science. Modern natural science is the successor to the natural philosophy that began in Ancient Greece. Galileo, Descartes, Bacon, and Newton debated the benefits of using approaches that were more mathematical and more experimental in a methodical way. Still, philosophical perspectives, conjectures, and presuppositions, often overlooked, remain necessary in natural science.[142] Systematic data collection, including discovery science, succeeded natural history, which emerged in the 16th century by describing and classifying plants, animals, minerals, and other biotic beings.[143] Today, "natural history" suggests observational descriptions aimed at popular audiences.[144]

Social

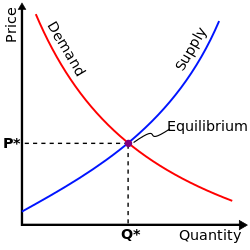

Social science is the study of human behaviour and the functioning of societies.[4][5] It has many disciplines that include, but are not limited to anthropology, economics, history, human geography, political science, psychology, and sociology.[4] In the social sciences, there are many competing theoretical perspectives, many of which are extended through competing research programmes such as the functionalists, conflict theorists, and interactionists in sociology.[4] Due to the limitations of conducting controlled experiments involving large groups of individuals or complex situations, social scientists may adopt other research methods such as the historical method, case studies, and cross-cultural studies. Moreover, if quantitative information is available, social scientists may rely on statistical approaches to better understand social relationships and processes.[4]

Formal

Formal science is an area of study that generates knowledge using formal systems.[145][146][147] A formal system is an abstract structure used for inferring theorems from axioms according to a set of rules.[148] It includes mathematics,[149][150] systems theory, and theoretical computer science. The formal sciences share similarities with the other two branches by relying on objective, careful, and systematic study of an area of knowledge. They are, however, different from the empirical sciences as they rely exclusively on deductive reasoning, without the need for empirical evidence, to verify their abstract concepts.[8][151][141] The formal sciences are therefore a priori disciplines and because of this, there is disagreement on whether they constitute a science.[6][152] Nevertheless, the formal sciences play an important role in the empirical sciences. Calculus, for example, was initially invented to understand motion in physics.[153] Natural and social sciences that rely heavily on mathematical applications include mathematical physics,[154] chemistry,[155] biology,[156] finance,[157] and economics.[158]
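The idea of inferring theorems from axioms according to rules can be sketched in a few lines. The example below is a deliberately tiny toy, not a model of any real logic: statements are plain strings, each rule is a hypothetical `(premises, conclusion)` pair, and the function `derive_theorems` (a name invented for this illustration) keeps applying rules until nothing new can be derived.

```python
def derive_theorems(axioms, rules):
    """Return every statement derivable from the axioms.

    Each rule is a (premises, conclusion) pair: once all premises have
    been derived, the conclusion may be added as a theorem.
    """
    theorems = set(axioms)
    changed = True
    while changed:  # repeat until a full pass adds nothing new
        changed = False
        for premises, conclusion in rules:
            if conclusion not in theorems and all(p in theorems for p in premises):
                theorems.add(conclusion)
                changed = True
    return theorems


# Hypothetical axioms and rules, used only to exercise the function.
axioms = {"A", "B"}
rules = [
    (("A", "B"), "C"),  # from A and B, infer C
    (("C",), "D"),      # from C, infer D
    (("E",), "F"),      # never fires: E is not derivable
]
theorems = derive_theorems(axioms, rules)
print(sorted(theorems))
```

No observation or measurement enters the derivation: whether `D` is a theorem depends only on the axioms and rules, which is the sense in which such reasoning is deductive rather than empirical.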

Applied

Applied science is the use of the scientific method and knowledge to attain practical goals and includes a broad range of disciplines such as engineering and medicine.[159][12] Engineering is the use of scientific principles to invent, design and build machines, structures and technologies.[160] Science may contribute to the development of new technologies.[161] Medicine is the practice of caring for patients by maintaining and restoring health through the prevention, diagnosis, and treatment of injury or disease.[162][163]

Basic

The applied sciences are often contrasted with the basic sciences, which are focused on advancing scientific theories and laws that explain and predict events in the natural world.[164][165]

Blue skies

Computational

Computational science applies computer simulations to science, enabling a better understanding of scientific problems than formal mathematics alone can achieve. The use of machine learning and artificial intelligence is becoming a central feature of computational contributions to science, for example in agent-based computational economics, random forests, topic modeling and various forms of prediction. However, machines alone rarely advance knowledge as they require human guidance and capacity to reason; and they can introduce bias against certain social groups or sometimes underperform against humans.[168][169]
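A minimal sketch of what a computer simulation looks like in practice is a Monte Carlo estimate, shown here for a quantity whose true value is already known so that the result can be checked. The function name `estimate_pi`, the sample count and the seed are all assumptions made for this illustration; real scientific simulations are far more elaborate.

```python
import math
import random


def estimate_pi(samples, seed=0):
    """Estimate pi by sampling random points in the unit square."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    inside = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:  # point falls inside the quarter circle
            inside += 1
    # The quarter circle covers pi/4 of the unit square.
    return 4.0 * inside / samples


estimate = estimate_pi(100_000)
print(f"estimate = {estimate:.4f}, error = {abs(estimate - math.pi):.4f}")
```

The same pattern of random sampling followed by aggregation is used when no closed-form answer is available, although the estimate is only as good as the model and the number of samples behind it.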

Interdisciplinary

Interdisciplinary science involves the combination of two or more disciplines into one,[170] such as bioinformatics, a combination of biology and computer science[171] or cognitive sciences. The concept has existed since the ancient Greek period and it became popular again in the 20th century.[172]

Research

Scientific research can be labelled as either basic or applied research. Basic research is the search for knowledge and applied research is the search for solutions to practical problems using this knowledge. Most understanding comes from basic research, though sometimes applied research targets specific practical problems. This leads to technological advances that were not previously imaginable.[173]

Scientific method

Scientific research involves using the scientific method, which seeks to objectively explain the events of nature in a reproducible way.[174] Scientists usually take for granted a set of basic assumptions that are needed to justify the scientific method: there is an objective reality shared by all rational observers; this objective reality is governed by natural laws; these laws were discovered by means of systematic observation and experimentation.[2] Mathematics is essential in the formation of hypotheses, theories, and laws, because it is used extensively in quantitative modelling, observing, and collecting measurements.[175] Statistics is used to summarise and analyse data, which allows scientists to assess the reliability of experimental results.[176]
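As a small illustration of how statistics summarises data and indicates reliability, the sketch below computes the mean, sample standard deviation and standard error of a set of repeated measurements. The readings are made-up values, the function name `summarise` is hypothetical, and the interval uses a normal approximation (a factor of 1.96) that is only rough for so few samples.

```python
import math
import statistics


def summarise(measurements):
    """Summarise repeated measurements of the same quantity."""
    n = len(measurements)
    if n < 2:
        raise ValueError("need at least two measurements")
    mean = statistics.mean(measurements)
    stdev = statistics.stdev(measurements)  # sample standard deviation
    sem = stdev / math.sqrt(n)              # standard error of the mean
    # Approximate 95% interval under a normal assumption.
    interval = (mean - 1.96 * sem, mean + 1.96 * sem)
    return {"n": n, "mean": mean, "stdev": stdev, "sem": sem, "interval": interval}


# Hypothetical repeated readings, invented for this example.
readings = [9.79, 9.82, 9.81, 9.80, 9.83]
summary = summarise(readings)
print(summary)
```

A narrow interval suggests the measurements agree closely with one another; it does not by itself rule out a systematic error shared by all of them.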

In the scientific method an explanatory thought experiment or hypothesis is put forward as an explanation using parsimony principles and is expected to seek consilience – fitting with other accepted facts related to an observation or scientific question.[177] This tentative explanation is used to make falsifiable predictions, which are typically posted before being tested by experimentation. Disproof of a prediction is evidence of progress.[174]: 4–5 [178] Experimentation is especially important in science to help establish causal relationships to avoid the correlation fallacy, though in some sciences such as astronomy or geology, a predicted observation might be more appropriate.[179]

When a hypothesis proves unsatisfactory it is modified or discarded. If the hypothesis survives testing, it may become adopted into the framework of a scientific theory, a validly reasoned, self-consistent model or framework for describing the behaviour of certain natural events. A theory typically describes the behaviour of much broader sets of observations than a hypothesis; commonly, a large number of hypotheses can be logically bound together by a single theory. Thus, a theory is a hypothesis explaining various other hypotheses. In that vein, theories are formulated according to most of the same scientific principles as hypotheses. Scientists may generate a model, an attempt to describe or depict an observation in terms of a logical, physical or mathematical representation, and to generate new hypotheses that can be tested by experimentation.[180]

While performing experiments to test hypotheses, scientists may have a preference for one outcome over another.[181][182] Eliminating the bias can be achieved through transparency, careful experimental design, and a thorough peer review process of the experimental results and conclusions.[183][184] After the results of an experiment are announced or published, it is normal practice for independent researchers to double-check how the research was performed, and to follow up by performing similar experiments to determine how dependable the results might be.[185] Taken in its entirety, the scientific method allows for highly creative problem solving while minimising the effects of subjective and confirmation bias.[186] Intersubjective verifiability, the ability to reach a consensus and reproduce results, is fundamental to the creation of all scientific knowledge.[187]

Literature

Scientific research is published in a range of literature.[188] Scientific journals communicate and document the results of research carried out in universities and various other research institutions, serving as an archival record of science. The first scientific journals, Journal des sçavans followed by Philosophical Transactions, began publication in 1665. Since that time the total number of active periodicals has steadily increased. In 1981, one estimate for the number of scientific and technical journals in publication was 11,500.[189]

Most scientific journals cover a single scientific field and publish the research within that field; the research is normally expressed in the form of a scientific paper. Science has become so pervasive in modern societies that it is considered necessary to communicate the achievements, news, and ambitions of scientists to a wider population.[190]

Challenges

The replication crisis is an ongoing methodological crisis that affects parts of the social and life sciences. In subsequent investigations, the results of many scientific studies have proven to be unrepeatable.[191] The crisis has long-standing roots; the phrase was coined in the early 2010s[192] as part of a growing awareness of the problem. The replication crisis represents an important body of research in metascience, which aims to improve the quality of all scientific research while reducing waste.[193]

An area of study or speculation that masquerades as science in an attempt to claim legitimacy that it would not otherwise be able to achieve is sometimes referred to as pseudoscience, fringe science, or junk science.[194][195] Physicist Richard Feynman coined the term "cargo cult science" for cases in which researchers believe they are doing science and, at a glance, look as if they are, but lack the honesty to allow their results to be rigorously evaluated.[196] Various types of commercial advertising, ranging from hype to fraud, may fall into these categories. Science has been described as "the most important tool" for separating valid claims from invalid ones.[197]

There can also be an element of political or ideological bias on all sides of scientific debates. Sometimes, research may be characterised as "bad science": research that may be well-intended but is an incorrect, obsolete, incomplete, or over-simplified exposition of scientific ideas. The term scientific misconduct refers to situations in which, for example, researchers have intentionally misrepresented their published data or have purposely given credit for a discovery to the wrong person.[198]

Philosophy

There are different schools of thought in the philosophy of science. The most popular position is empiricism, which holds that knowledge is created by a process involving observation; scientific theories generalise observations.[199] Empiricism generally encompasses inductivism, a position that explains how general theories can be made from the finite amount of empirical evidence available. Many versions of empiricism exist, with the predominant ones being Bayesianism and the hypothetico-deductive method.[200][199]

Empiricism has stood in contrast to rationalism, the position originally associated with Descartes, which holds that knowledge is created by the human intellect, not by observation.[201] Critical rationalism is a contrasting 20th-century approach to science, first defined by Austrian-British philosopher Karl Popper. Popper rejected the way that empiricism describes the connection between theory and observation. He claimed that theories are not generated by observation, but that observation is made in the light of theories, and that observation can affect theory A only when theory A conflicts with the observation while a rival theory B survives it.[202] Popper proposed replacing verifiability with falsifiability as the landmark of scientific theories, replacing induction with falsification as the empirical method.[202] Popper further claimed that there is actually only one universal method, not specific to science: the negative method of criticism, trial and error,[203] covering all products of the human mind, including science, mathematics, philosophy, and art.[204]

Another approach, instrumentalism, emphasises the utility of theories as instruments for explaining and predicting phenomena. It views scientific theories as black boxes, with only their input (initial conditions) and output (predictions) being relevant. Consequences, theoretical entities, and logical structure are claimed to be things that should be ignored.[205] Close to instrumentalism is constructive empiricism, according to which the main criterion for the success of a scientific theory is whether what it says about observable entities is true.[206]

Thomas Kuhn argued that the process of observation and evaluation takes place within a paradigm, a logically consistent "portrait" of the world that is consistent with observations made from its framing. He characterised normal science as the process of observation and "puzzle solving", which takes place within a paradigm, whereas revolutionary science occurs when one paradigm overtakes another in a paradigm shift.[207] Each paradigm has its own distinct questions, aims, and interpretations. The choice between paradigms involves setting two or more "portraits" against the world and deciding which likeness is most promising. A paradigm shift occurs when a significant number of observational anomalies arise in the old paradigm and a new paradigm makes sense of them. That is, the choice of a new paradigm is based on observations, even though those observations are made against the background of the old paradigm. For Kuhn, acceptance or rejection of a paradigm is a social process as much as a logical process. Kuhn's position, however, is not one of relativism.[208]

Another approach often cited in debates of scientific scepticism against controversial movements like "creation science" is methodological naturalism. Naturalists maintain that a difference should be made between natural and supernatural, and science should be restricted to natural explanations.[209] Methodological naturalism maintains that science requires strict adherence to empirical study and independent verification.[210]

Community

The scientific community is a network of interacting scientists who conduct scientific research. The community consists of smaller groups working in scientific fields. Through peer review, and through discussion and debate within journals and conferences, scientists maintain the quality of research methodology and objectivity when interpreting results.[211]

Scientists

Scientists are individuals who conduct scientific research to advance knowledge in an area of interest.[212][213] Scientists may exhibit a strong curiosity about reality and a desire to apply scientific knowledge for the benefit of public health, nations, the environment, or industries; other motivations include recognition by peers and prestige.[citation needed] In modern times, many scientists study within specific areas of science in academic institutions, often obtaining advanced degrees in the process.[214] Many scientists pursue careers in various fields such as academia, industry, government, and nonprofit organisations.[215][216][217]

Science has historically been a male-dominated field, with notable exceptions. Women have faced considerable discrimination in science, much as they have in other areas of male-dominated societies. For example, women were frequently passed over for job opportunities and denied credit for their work.[218] The achievements of women in science have been attributed to the defiance of their traditional role as labourers within the domestic sphere.[219]

Learned societies

Learned societies for the communication and promotion of scientific thought and experimentation have existed since the Renaissance.[220] Many scientists belong to a learned society that promotes their respective scientific discipline, profession, or group of related disciplines.[221] Membership may be open to all, require possession of scientific credentials, or be conferred by election.[222] Most scientific societies are nonprofit organisations,[223] and many are professional associations. Their activities typically include holding regular conferences for the presentation and discussion of new research results and publishing or sponsoring academic journals in their discipline. Some societies act as professional bodies, regulating the activities of their members in the public interest, or the collective interest of the membership.

The professionalisation of science, begun in the 19th century, was partly enabled by the creation of national distinguished academies of sciences such as the Italian Accademia dei Lincei in 1603,[224] the British Royal Society in 1660,[225] the French Academy of Sciences in 1666,[226] the American National Academy of Sciences in 1863,[227] the German Kaiser Wilhelm Society in 1911,[228] and the Chinese Academy of Sciences in 1949.[229] International scientific organisations, such as the International Science Council, are devoted to international cooperation for science advancement.[230]

Awards

Science awards are usually given to individuals or organisations that have made significant contributions to a discipline. They are often given by prestigious institutions; thus, it is considered a great honour for a scientist to receive them. Since the early Renaissance, scientists have often been awarded medals, money, and titles. The Nobel Prize, widely regarded as a prestigious award, is awarded annually to those who have achieved scientific advances in the fields of medicine, physics, and chemistry.[231]

Society

Funding and policies

Funding of science is often through a competitive process in which potential research projects are evaluated and only the most promising receive funding. Such processes, which are run by government, corporations, or foundations, allocate scarce funds. Total research funding in most developed countries is between 1.5% and 3% of GDP.[232] In the OECD, around two-thirds of research and development in scientific and technical fields is carried out by industry, and 20% and 10%, respectively, by universities and government. The government funding proportion in certain fields is higher, and it dominates research in social science and the humanities. In less developed nations, the government provides the bulk of the funds for their basic scientific research.[233]

Many governments have dedicated agencies to support scientific research, such as the National Science Foundation in the United States,[234] the National Scientific and Technical Research Council in Argentina,[235] Commonwealth Scientific and Industrial Research Organisation in Australia,[236] National Centre for Scientific Research in France,[237] the Max Planck Society in Germany,[238] and National Research Council in Spain.[239] In commercial research and development, all but the most research-orientated corporations focus more heavily on near-term commercialisation possibilities than research driven by curiosity.[240]

Science policy is concerned with policies that affect the conduct of the scientific enterprise, including research funding, often in pursuance of other national policy goals such as technological innovation to promote commercial product development, weapons development, health care, and environmental monitoring. Science policy sometimes refers to the act of applying scientific knowledge and consensus to the development of public policies. In accordance with public policy being concerned about the well-being of its citizens, science policy's goal is to consider how science and technology can best serve the public.[241] Public policy can directly affect the funding of capital equipment and intellectual infrastructure for industrial research by providing tax incentives to those organisations that fund research.[190]

Education and awareness

Science education for the general public is embedded in the school curriculum, and is supplemented by online pedagogical content (for example, YouTube and Khan Academy), museums, and science magazines and blogs. Major organisations of scientists such as the American Association for the Advancement of Science (AAAS) consider the sciences to be a part of the liberal arts traditions of learning, along with philosophy and history.[242] Scientific literacy is chiefly concerned with an understanding of the scientific method, units and methods of measurement, empiricism, a basic understanding of statistics (correlations, qualitative versus quantitative observations, aggregate statistics), and a basic understanding of core scientific fields such as physics, chemistry, biology, ecology, geology, and computation. As a student advances into higher stages of formal education, the curriculum becomes more in depth. Traditional subjects usually included in the curriculum are natural and formal sciences, although recent movements include social and applied science as well.[243]

The mass media face pressures that can prevent them from accurately depicting competing scientific claims in terms of their credibility within the scientific community as a whole. Determining how much weight to give different sides in a scientific debate may require considerable expertise regarding the matter.[244] Few journalists have real scientific knowledge, and even beat reporters who are knowledgeable about certain scientific issues may be ignorant about other scientific issues that they are suddenly asked to cover.[245][246]

Science magazines such as New Scientist, Science & Vie, and Scientific American cater to the needs of a much wider readership and provide a non-technical summary of popular areas of research, including notable discoveries and advances in certain fields of research.[247] The science fiction genre, primarily speculative fiction, can transmit the ideas and methods of science to the general public.[248] Recent efforts to intensify or develop links between science and non-scientific disciplines, such as literature or poetry, include the Creative Writing Science resource developed through the Royal Literary Fund.[249]

Anti-science attitudes

While the scientific method is broadly accepted in the scientific community, some parts of society reject certain scientific positions or are sceptical about science. Examples are the common notion that COVID-19 is not a major health threat to the US (held by 39% of Americans in August 2021)[250] or the belief that climate change is not a major threat to the US (held by 40% of Americans, in late 2019 and early 2020).[251] Psychologists have pointed to four factors driving rejection of scientific results:[252]

- Scientific authorities are sometimes seen as inexpert, untrustworthy, or biased.

- Some marginalised social groups hold anti-science attitudes, in part because these groups have often been exploited in unethical experiments.[253]

- Messages from scientists may contradict deeply held existing beliefs or morals.

- The delivery of a scientific message may not be appropriately targeted to a recipient's learning style.

Anti-science attitudes often seem to be caused by fear of rejection in social groups. For instance, climate change is perceived as a threat by only 22% of Americans on the right side of the political spectrum, but by 85% on the left.[254] That is, someone on the left who did not consider climate change a threat might face contempt and be rejected by that social group. In fact, people may rather deny a scientifically accepted fact than lose or jeopardise their social status.[255]

Politics

Attitudes towards science are often determined by political opinions and goals. Government, business and advocacy groups have been known to use legal and economic pressure to influence scientific researchers. Many factors can act as facets of the politicisation of science such as anti-intellectualism, perceived threats to religious beliefs, and fear for business interests.[257] Politicisation of science is usually accomplished when scientific information is presented in a way that emphasises the uncertainty associated with the scientific evidence.[258] Tactics such as shifting conversation, failing to acknowledge facts, and capitalising on doubt of scientific consensus have been used to gain more attention for views that have been undermined by scientific evidence.[259] Examples of issues that have involved the politicisation of science include the global warming controversy, health effects of pesticides, and health effects of tobacco.[259][260]

Notes

- ^ Ibn al-Haytham's Book of Optics Book I, [6.54]. pages 372 and 408 disputed Claudius Ptolemy's extramission theory of vision; "Hence, the extramission of [visual] rays is superfluous and useless". —A. Mark Smith's translation of the Latin version of Ibn al-Haytham.[77]: Book I, [6.54]. pp. 372, 408

- ^ Whether the universe is closed or open, or the shape of the universe, is an open question. The 2nd law of thermodynamics,[113]: 9 [114] and the 3rd law of thermodynamics[115] imply the heat death of the universe if the universe is a closed system, but not necessarily for an expanding universe.

References

- ^ Wilson, E. O. (1999). "The natural sciences". Consilience: The Unity of Knowledge (Reprint ed.). New York: Vintage. pp. 49–71. ISBN 978-0-679-76867-8.

- ^ a b Heilbron, J. L.; et al. (2003). "Preface". The Oxford Companion to the History of Modern Science. New York: Oxford University Press. pp. vii–x. ISBN 978-0-19-511229-0.

...modern science is a discovery as well as an invention. It was a discovery that nature generally acts regularly enough to be described by laws and even by mathematics; and required invention to devise the techniques, abstractions, apparatus, and organization for exhibiting the regularities and securing their law-like descriptions.

- ^ a b Cohen, Eliel (2021). "The boundary lens: theorising academic activity". The University and its Boundaries: Thriving or Surviving in the 21st Century. New York: Routledge. pp. 14–41. ISBN 978-0-367-56298-4. Archived from the original on 5 May 2021. Retrieved 4 May 2021.

- ^ a b c d e Colander, David C.; Hunt, Elgin F. (2019). "Social science and its methods". Social Science: An Introduction to the Study of Society (17th ed.). New York: Routledge. pp. 1–22.

- ^ a b Nisbet, Robert A.; Greenfeld, Liah (16 October 2020). "Social Science". Encyclopædia Britannica. Archived from the original on 2 February 2022. Retrieved 9 May 2021.

- ^ a b Bishop, Alan (1991). "Environmental activities and mathematical culture". Mathematical Enculturation: A Cultural Perspective on Mathematics Education. Norwell, MA: Kluwer. pp. 20–59. ISBN 978-0-7923-1270-3. Retrieved 24 March 2018.

- ^ Bunge, Mario (1998). "The Scientific Approach". Philosophy of Science: Volume 1, From Problem to Theory. Vol. 1 (revised ed.). New York: Routledge. pp. 3–50. ISBN 978-0-7658-0413-6.

- ^ a b Fetzer, James H. (2013). "Computer reliability and public policy: Limits of knowledge of computer-based systems". Computers and Cognition: Why Minds are not Machines. Newcastle, United Kingdom: Kluwer. pp. 271–308. ISBN 978-1-4438-1946-6.

- ^ Nickles, Thomas (2013). "The Problem of Demarcation". Philosophy of Pseudoscience: Reconsidering the Demarcation Problem. The University of Chicago Press. p. 104.

- ^ Fischer, M. R.; Fabry, G (2014). "Thinking and acting scientifically: Indispensable basis of medical education". GMS Zeitschrift für Medizinische Ausbildung. 31 (2): Doc24. doi:10.3205/zma000916. PMC 4027809. PMID 24872859.

- ^ Sinclair, Marius (1993). "On the Differences between the Engineering and Scientific Methods". The International Journal of Engineering Education. Archived from the original on 15 November 2017. Retrieved 7 September 2018.

- ^ a b Bunge, M. (1966). "Technology as Applied Science". In Rapp, F. (ed.). Contributions to a Philosophy of Technology. Dordrecht: Springer. pp. 19–39. doi:10.1007/978-94-010-2182-1_2. ISBN 978-94-010-2184-5. S2CID 110332727.

- ^ a b c d e f g h i j k l m n Lindberg, David C. (2007). The beginnings of Western science: the European Scientific tradition in philosophical, religious, and institutional context (2nd ed.). University of Chicago Press. ISBN 978-0-226-48205-7.

- ^ a b Grant, Edward (2007). "Ancient Egypt to Plato". A History of Natural Philosophy: From the Ancient World to the Nineteenth Century. New York: Cambridge University Press. pp. 1–26. ISBN 978-0-521-68957-1.

- ^ Crane, Robert (2014). Building Bridges Among the BRICs. Springer. p. 125. Archived 18 April 2023 at the Wayback Machine.

- ^ Keay, John (2000). India: A history. Atlantic Monthly Press. p. 132. ISBN 978-0-87113-800-2.

The great era of all that is deemed classical in Indian literature, art and science was now dawning. It was this crescendo of creativity and scholarship, as much as ... political achievements of the Guptas, which would make their age so golden.

- ^ Sease, Virginia; Schmidt-Brabant, Manfrid. Thinkers, Saints, Heretics: Spiritual Paths of the Middle Ages. 2007. Pages 80–81 Archived 27 August 2024 at the Wayback Machine. Retrieved 6 October 2023

- ^ Principe, Lawrence M. (2011). "Introduction". Scientific Revolution: A Very Short Introduction. New York: Oxford University Press. pp. 1–3. ISBN 978-0-19-956741-6.

- ^ Grant, Edward (2007). "Transformation of medieval natural philosophy from the early modern period to the end of the nineteenth century". A History of Natural Philosophy: From the Ancient World to the Nineteenth Century. New York: Cambridge University Press. pp. 274–322. ISBN 978-0-521-68957-1.

- ^ Cahan, David, ed. (2003). From Natural Philosophy to the Sciences: Writing the History of Nineteenth-Century Science. University of Chicago Press. ISBN 978-0-226-08928-7.

- ^ Lightman, Bernard (2011). "13. Science and the Public". In Shank, Michael; Numbers, Ronald; Harrison, Peter (eds.). Wrestling with Nature: From Omens to Science. University of Chicago Press. p. 367. ISBN 978-0-226-31783-0.

- ^ Harrison, Peter (2015). The Territories of Science and Religion. University of Chicago Press. pp. 164–165. ISBN 978-0-226-18451-7.

The changing character of those engaged in scientific endeavors was matched by a new nomenclature for their endeavors. The most conspicuous marker of this change was the replacement of "natural philosophy" by "natural science". In 1800 few had spoken of the "natural sciences" but by 1880 this expression had overtaken the traditional label "natural philosophy". The persistence of "natural philosophy" in the twentieth century is owing largely to historical references to a past practice (see figure 11). As should now be apparent, this was not simply the substitution of one term by another, but involved the jettisoning of a range of personal qualities relating to the conduct of philosophy and the living of the philosophical life.

- ^ MacRitchie, Finlay (2011). "Introduction". Scientific Research as a Career. New York: Routledge. pp. 1–6. ISBN 978-1-4398-6965-9. Archived from the original on 5 May 2021. Retrieved 5 May 2021.

- ^ Marder, Michael P. (2011). "Curiosity and research". Research Methods for Science. New York: Cambridge University Press. pp. 1–17. ISBN 978-0-521-14584-8. Archived from the original on 5 May 2021. Retrieved 5 May 2021.

- ^ de Ridder, Jeroen (2020). "How many scientists does it take to have knowledge?". In McCain, Kevin; Kampourakis, Kostas (eds.). What is Scientific Knowledge? An Introduction to Contemporary Epistemology of Science. New York: Routledge. pp. 3–17. ISBN 978-1-138-57016-0. Archived from the original on 5 May 2021. Retrieved 5 May 2021.

- ^ Szycher, Michael (2016). "Establishing your dream team". Commercialization Secrets for Scientists and Engineers. New York: Routledge. pp. 159–176. ISBN 978-1-138-40741-1. Archived from the original on 18 August 2021. Retrieved 5 May 2021.

- ^ "Science". Merriam-Webster Online Dictionary. Archived from the original on 1 September 2019. Retrieved 16 October 2011.

- ^ Vaan, Michiel de (2008). "sciō". Etymological Dictionary of Latin and the other Italic Languages. Indo-European Etymological Dictionary. p. 545. ISBN 978-90-04-16797-1.

- ^ Cahan, David (2003). From natural philosophy to the sciences: writing the history of nineteenth-century science. University of Chicago Press. pp. 3–15. ISBN 0-226-08927-4.

- ^ Ross, Sydney (1962). "Scientist: The story of a word". Annals of Science. 18 (2): 65–85. doi:10.1080/00033796200202722.

- ^ "scientist". Oxford English Dictionary (Online ed.). Oxford University Press. (Subscription or participating institution membership required.)

- ^ Carruthers, Peter (2 May 2002). Carruthers, Peter; Stich, Stephen; Siegal, Michael (eds.). "The roots of scientific reasoning: infancy, modularity and the art of tracking". The Cognitive Basis of Science. Cambridge University Press. pp. 73–96. doi:10.1017/cbo9780511613517.005. ISBN 978-0-521-81229-0.

- ^ Lombard, Marlize; Gärdenfors, Peter (2017). "Tracking the Evolution of Causal Cognition in Humans". Journal of Anthropological Sciences. 95 (95): 219–234. doi:10.4436/JASS.95006. ISSN 1827-4765. PMID 28489015.

- ^ Graeber, David; Wengrow, David (2021). The Dawn of Everything. p. 248.

- ^ Budd, Paul; Taylor, Timothy (1995). "The Faerie Smith Meets the Bronze Industry: Magic Versus Science in the Interpretation of Prehistoric Metal-Making". World Archaeology. 27 (1): 133–143. doi:10.1080/00438243.1995.9980297. JSTOR 124782.

- ^ Tuomela, Raimo (1987). "Science, Protoscience, and Pseudoscience". In Pitt, J. C.; Pera, M. (eds.). Rational Changes in Science. Boston Studies in the Philosophy of Science. Vol. 98. Dordrecht: Springer. pp. 83–101. doi:10.1007/978-94-009-3779-6_4. ISBN 978-94-010-8181-8.

- ^ Smith, Pamela H. (2009). "Science on the Move: Recent Trends in the History of Early Modern Science". Renaissance Quarterly. 62 (2): 345–375. doi:10.1086/599864. PMID 19750597. S2CID 43643053.

- ^ Fleck, Robert (March 2021). "Fundamental Themes in Physics from the History of Art". Physics in Perspective. 23 (1): 25–48. Bibcode:2021PhP....23...25F. doi:10.1007/s00016-020-00269-7. ISSN 1422-6944. S2CID 253597172.

- ^ Scott, Colin (2011). "The Case of James Bay Cree Knowledge Construction". In Harding, Sandra (ed.). Science for the West, Myth for the Rest?. The Postcolonial Science and Technology Studies Reader. Durham, NC: Duke University Press. pp. 175–197. doi:10.2307/j.ctv11g96cc.16. ISBN 978-0-8223-4936-5. JSTOR j.ctv11g96cc.16.

- ^ Dear, Peter (2012). "Historiography of Not-So-Recent Science". History of Science. 50 (2): 197–211. doi:10.1177/007327531205000203. S2CID 141599452.

- ^ Rochberg, Francesca (2011). "Ch.1 Natural Knowledge in Ancient Mesopotamia". In Shank, Michael; Numbers, Ronald; Harrison, Peter (eds.). Wrestling with Nature: From Omens to Science. University of Chicago Press. p. 9. ISBN 978-0-226-31783-0.

- ^ Krebs, Robert E. (2004). Groundbreaking Scientific Experiments, Inventions, and Discoveries of the Middle Ages and the Renaissance. Greenwood Publishing Group. p. 127. ISBN 978-0-313-32433-8.

- ^ Erlich, Ḥaggai; Gershoni, Israel (2000). The Nile: Histories, Cultures, Myths. Lynne Rienner. pp. 80–81. ISBN 978-1-55587-672-2. Retrieved 9 January 2020.

The Nile occupied an important position in Egyptian culture; it influenced the development of mathematics, geography, and the calendar; Egyptian geometry advanced due to the practice of land measurement "because the overflow of the Nile caused the boundary of each person's land to disappear."

- ^ "Telling Time in Ancient Egypt". The Met's Heilbrunn Timeline of Art History. February 2017. Archived from the original on 3 March 2022. Retrieved 27 May 2022.

- ^ a b c McIntosh, Jane R. (2005). Ancient Mesopotamia: New Perspectives. Santa Barbara, CA: ABC-CLIO. pp. 273–276. ISBN 978-1-57607-966-9. Retrieved 20 October 2020.

- ^ Aaboe, Asger (2 May 1974). "Scientific Astronomy in Antiquity". Philosophical Transactions of the Royal Society. 276 (1257): 21–42. Bibcode:1974RSPTA.276...21A. doi:10.1098/rsta.1974.0007. JSTOR 74272. S2CID 122508567.

- ^ Biggs, R. D. (2005). "Medicine, Surgery, and Public Health in Ancient Mesopotamia". Journal of Assyrian Academic Studies. 19 (1): 7–18.

- ^ Lehoux, Daryn (2011). "2. Natural Knowledge in the Classical World". In Shank, Michael; Numbers, Ronald; Harrison, Peter (eds.). Wrestling with Nature: From Omens to Science. University of Chicago Press. p. 39. ISBN 978-0-226-31783-0.

- ^ An account of the pre-Socratic use of the concept of φύσις may be found in Naddaf, Gerard (2006). The Greek Concept of Nature. SUNY Press, and in Ducarme, Frédéric; Couvet, Denis (2020). "What does 'nature' mean?" (PDF). Palgrave Communications. 6 (14) 14. Springer Nature. doi:10.1057/s41599-020-0390-y. Archived (PDF) from the original on 16 August 2023. Retrieved 16 August 2023. The word φύσις, while first used in connection with a plant in Homer, occurs early in Greek philosophy, and in several senses. Generally, these senses match rather well the current senses in which the English word nature is used, as confirmed by Guthrie, W. K. C. Presocratic Tradition from Parmenides to Democritus (volume 2 of his History of Greek Philosophy), Cambridge University Press, 1965.

- ^ Strauss, Leo; Gildin, Hilail (1989). "Progress or Return? The Contemporary Crisis in Western Education". An Introduction to Political Philosophy: Ten Essays by Leo Strauss. Wayne State University Press. p. 209. ISBN 978-0-8143-1902-4. Retrieved 30 May 2022.

- ^ O'Grady, Patricia F. (2016). Thales of Miletus: The Beginnings of Western Science and Philosophy. New York: Routledge. p. 245. ISBN 978-0-7546-0533-1. Retrieved 20 October 2020.

- ^ a b Burkert, Walter (1 June 1972). Lore and Science in Ancient Pythagoreanism. Cambridge, MA: Harvard University Press. ISBN 978-0-674-53918-1.

- ^ Pullman, Bernard (1998). The Atom in the History of Human Thought. Oxford University Press. pp. 31–33. Bibcode:1998ahht.book.....P. ISBN 978-0-19-515040-7. Retrieved 20 October 2020.

- ^ Cohen, Henri; Lefebvre, Claire, eds. (2017). Handbook of Categorization in Cognitive Science (2nd ed.). Amsterdam: Elsevier. p. 427. ISBN 978-0-08-101107-2. Retrieved 20 October 2020.

- ^ Lucretius (fl. 1st century BCE) De rerum natura

- ^ Margotta, Roberto (1968). The Story of Medicine. New York: Golden Press. Retrieved 18 November 2020.

- ^ Touwaide, Alain (2005). Glick, Thomas F.; Livesey, Steven; Wallis, Faith (eds.). Medieval Science, Technology, and Medicine: An Encyclopedia. New York: Routledge. p. 224. ISBN 978-0-415-96930-7. Retrieved 20 October 2020.

- ^ Leff, Samuel; Leff, Vera (1956). From Witchcraft to World Health. London: Macmillan. Retrieved 23 August 2020.

- ^ "Plato, Apology". p. 17. Archived from the original on 29 January 2018. Retrieved 1 November 2017.

- ^ "Plato, Apology". p. 27. Archived from the original on 29 January 2018. Retrieved 1 November 2017.

- ^ Aristotle. Nicomachean Ethics (H. Rackham ed.). 1139b. Archived from the original on 17 March 2012. Retrieved 22 September 2010.

- ^ a b McClellan, James E. III; Dorn, Harold (2015). Science and Technology in World History: An Introduction. Baltimore: Johns Hopkins University Press. pp. 99–100. ISBN 978-1-4214-1776-9. Retrieved 20 October 2020.

- ^ Graßhoff, Gerd (1990). The History of Ptolemy's Star Catalogue. Studies in the History of Mathematics and Physical Sciences. Vol. 14. New York: Springer. doi:10.1007/978-1-4612-4468-4. ISBN 978-1-4612-8788-9.

- ^ Hoffmann, Susanne M. (2017). Hipparchs Himmelsglobus (in German). Wiesbaden: Springer Fachmedien Wiesbaden. Bibcode:2017hihi.book.....H. doi:10.1007/978-3-658-18683-8. ISBN 978-3-658-18682-1.

- ^ Edwards, C. H. Jr. (1979). The Historical Development of the Calculus. New York: Springer. p. 75. ISBN 978-0-387-94313-8. Retrieved 20 October 2020.

- ^ Lawson, Russell M. (2004). Science in the Ancient World: An Encyclopedia. Santa Barbara, CA: ABC-CLIO. pp. 190–191. ISBN 978-1-85109-539-1. Retrieved 20 October 2020.

- ^ Murphy, Trevor Morgan (2004). Pliny the Elder's Natural History: The Empire in the Encyclopedia. Oxford University Press. p. 1. ISBN 978-0-19-926288-5. Retrieved 20 October 2020.

- ^ Doody, Aude (2010). Pliny's Encyclopedia: The Reception of the Natural History. Cambridge University Press. p. 1. ISBN 978-1-139-48453-4. Retrieved 20 October 2020.

- ^ Conner, Clifford D. (2005). A People's History of Science: Miners, Midwives, and "Low Mechanicks". New York: Nation Books. pp. 72–74. ISBN 1-56025-748-2.

- ^ Grant, Edward (1996). The Foundations of Modern Science in the Middle Ages: Their Religious, Institutional and Intellectual Contexts. Cambridge Studies in the History of Science. Cambridge University Press. pp. 7–17. ISBN 978-0-521-56762-6. Retrieved 9 November 2018.

- ^ Wildberg, Christian (1 May 2018). "Philoponus". In Zalta, Edward N. (ed.). Stanford Encyclopedia of Philosophy. Metaphysics Research Lab, Stanford University. Archived from the original on 22 August 2019. Retrieved 1 May 2018.

- ^ Falcon, Andrea (2019). "Aristotle on Causality". In Zalta, Edward (ed.). Stanford Encyclopedia of Philosophy (Spring 2019 ed.). Metaphysics Research Lab, Stanford University. Archived from the original on 9 October 2020. Retrieved 3 October 2020.

- ^ Grant, Edward (2007). "Islam and the eastward shift of Aristotelian natural philosophy". A History of Natural Philosophy: From the Ancient World to the Nineteenth Century. Cambridge University Press. pp. 62–67. ISBN 978-0-521-68957-1.

- ^ Fisher, W. B. (1968–1991). The Cambridge history of Iran. Cambridge University Press. ISBN 978-0-521-20093-6.

- ^ "Bayt al-Hikmah". Encyclopædia Britannica. Archived from the original on 4 November 2016. Retrieved 3 November 2016.

- ^ Hossein Nasr, Seyyed; Leaman, Oliver, eds. (2001). History of Islamic Philosophy. Routledge. pp. 165–167. ISBN 978-0-415-25934-7.

- ^ a b Smith, A. Mark (2001). Alhacen's Theory of Visual Perception: A Critical Edition, with English Translation and Commentary, of the First Three Books of Alhacen's De Aspectibus, the Medieval Latin Version of Ibn al-Haytham's Kitāb al-Manāẓir, 2 vols. Transactions of the American Philosophical Society. Vol. 91. Philadelphia: American Philosophical Society. ISBN 978-0-87169-914-5.

- ^ Toomer, G. J. (1964). "Reviewed work: Ibn al-Haythams Weg zur Physik, Matthias Schramm". Isis. 55 (4): 463–465. doi:10.1086/349914. JSTOR 228328. See p. 464: "Schramm sums up [Ibn Al-Haytham's] achievement in the development of scientific method.", p. 465: "Schramm has demonstrated .. beyond any dispute that Ibn al-Haytham is a major figure in the Islamic scientific tradition, particularly in the creation of experimental techniques." p. 465: "only when the influence of Ibn al-Haytham and others on the mainstream of later medieval physical writings has been seriously investigated can Schramm's claim that Ibn al-Haytham was the true founder of modern physics be evaluated."

- ^ Cohen, H. Floris (2010). "Greek nature knowledge transplanted: The Islamic world". How modern science came into the world. Four civilizations, one 17th-century breakthrough (2nd ed.). Amsterdam University Press. pp. 99–156. ISBN 978-90-8964-239-4.

- ^ Selin, Helaine, ed. (2006). Encyclopaedia of the History of Science, Technology, and Medicine in Non-Western Cultures. Springer. pp. 155–156. Bibcode:2008ehst.book.....S. ISBN 978-1-4020-4559-2.

- ^ Russell, Josiah C. (1959). "Gratian, Irnerius, and the Early Schools of Bologna". The Mississippi Quarterly. 12 (4): 168–188. JSTOR 26473232.

Perhaps even as early as 1088 (the date officially set for the founding of the University)

- ^ "St. Albertus Magnus". Encyclopædia Britannica. Archived from the original on 28 October 2017. Retrieved 27 October 2017.

- ^ Numbers, Ronald (2009). Galileo Goes to Jail and Other Myths about Science and Religion. Harvard University Press. p. 45. ISBN 978-0-674-03327-6. Archived from the original on 20 January 2021. Retrieved 27 March 2018.

- ^ a b Smith, A. Mark (1981). "Getting the Big Picture in Perspectivist Optics". Isis. 72 (4): 568–589. doi:10.1086/352843. JSTOR 231249. PMID 7040292. S2CID 27806323.

- ^ Goldstein, Bernard R. (2016). "Copernicus and the Origin of his Heliocentric System" (PDF). Journal for the History of Astronomy. 33 (3): 219–235. doi:10.1177/002182860203300301. S2CID 118351058. Archived from the original (PDF) on 12 April 2020. Retrieved 12 April 2020.

- ^ Cohen, H. Floris (2010). "Greek nature knowledge transplanted and more: Renaissance Europe". How modern science came into the world. Four civilizations, one 17th-century breakthrough (2nd ed.). Amsterdam University Press. pp. 99–156. ISBN 978-90-8964-239-4.

- ^ Koestler, Arthur (1990) [1959]. The Sleepwalkers: A History of Man's Changing Vision of the Universe. London: Penguin. p. 1. ISBN 0-14-019246-8.

- ^ van Helden, Al (1995). "Pope Urban VIII". The Galileo Project. Archived from the original on 11 November 2016. Retrieved 3 November 2016.

- ^ Gingerich, Owen (1975). "Copernicus and the Impact of Printing". Vistas in Astronomy. 17 (1): 201–218. Bibcode:1975VA.....17..201G. doi:10.1016/0083-6656(75)90061-6.

- ^ Zagorin, Perez (1998). Francis Bacon. Princeton University Press. p. 84. ISBN 978-0-691-00966-7.

- ^ Davis, Philip J.; Hersh, Reuben (1986). Descartes' Dream: The World According to Mathematics. Cambridge, MA: Harcourt Brace Jovanovich.

- ^ Gribbin, John (2002). Science: A History 1543–2001. Allen Lane. p. 241. ISBN 978-0-7139-9503-9.

Although it was just one of the many factors in the Enlightenment, the success of Newtonian physics in providing a mathematical description of an ordered world clearly played a big part in the flowering of this movement in the eighteenth century

- ^ "Gottfried Leibniz – Biography". Maths History. Archived from the original on 11 July 2017. Retrieved 2 March 2021.

- ^ Freudenthal, Gideon; McLaughlin, Peter (20 May 2009). The Social and Economic Roots of the Scientific Revolution: Texts by Boris Hessen and Henryk Grossmann. Springer. ISBN 978-1-4020-9604-4. Retrieved 25 July 2018.

- ^ Goddard Bergin, Thomas; Speake, Jennifer, eds. (1987). Encyclopedia of the Renaissance. Facts on File. ISBN 978-0-8160-1315-9.

- ^ van Horn Melton, James (2001). The Rise of the Public in Enlightenment Europe. Cambridge University Press. pp. 82–83. doi:10.1017/CBO9780511819421. ISBN 978-0-511-81942-1. Archived from the original on 20 January 2022. Retrieved 27 May 2022.

- ^ "The Scientific Revolution and the Enlightenment (1500–1780)" (PDF). Archived (PDF) from the original on 14 January 2024. Retrieved 29 January 2024.

- ^ "Scientific Revolution". Encyclopædia Britannica. Archived from the original on 18 May 2019. Retrieved 29 January 2024.

- ^ Madigan, M.; Martinko, J., eds. (2006). Brock Biology of Microorganisms (11th ed.). Prentice Hall. ISBN 978-0-13-144329-7.

- ^ Guicciardini, N. (1999). Reading the Principia: The Debate on Newton's Methods for Natural Philosophy from 1687 to 1736. New York: Cambridge University Press. ISBN 978-0-521-64066-4.

- ^ Calisher, CH (2007). "Taxonomy: what's in a name? Doesn't a rose by any other name smell as sweet?". Croatian Medical Journal. 48 (2): 268–270. PMC 2080517. PMID 17436393.

- ^ Darrigol, Olivier (2000). Electrodynamics from Ampère to Einstein. New York: Oxford University Press. ISBN 0-19-850594-9.

- ^ Olby, R. C.; Cantor, G. N.; Christie, J. R. R.; Hodge, M. J. S. (1990). Companion to the History of Modern Science. London: Routledge. p. 265.

- ^ Magnusson, Magnus (10 November 2003). "Review of James Buchan, Capital of the Mind: how Edinburgh Changed the World". New Statesman. Archived from the original on 6 June 2011. Retrieved 27 April 2014.

- ^ Swingewood, Alan (1970). "Origins of Sociology: The Case of the Scottish Enlightenment". The British Journal of Sociology. 21 (2): 164–180. doi:10.2307/588406. JSTOR 588406.

- ^ Fry, Michael (1992). Adam Smith's Legacy: His Place in the Development of Modern Economics. Paul Samuelson, Lawrence Klein, Franco Modigliani, James M. Buchanan, Maurice Allais, Theodore Schultz, Richard Stone, James Tobin, Wassily Leontief, Jan Tinbergen. Routledge. ISBN 978-0-415-06164-3.

- ^ Lightman, Bernard (2011). "13. Science and the Public". In Shank, Michael; Numbers, Ronald; Harrison, Peter (eds.). Wrestling with Nature: From Omens to Science. University of Chicago Press. p. 367. ISBN 978-0-226-31783-0.

- ^ Leahey, Thomas Hardy (2018). "The psychology of consciousness". A History of Psychology: From Antiquity to Modernity (8th ed.). New York: Routledge. pp. 219–253. ISBN 978-1-138-65242-2.

- ^ Padian, Kevin (2008). "Darwin's enduring legacy". Nature. 451 (7179): 632–634. Bibcode:2008Natur.451..632P. doi:10.1038/451632a. PMID 18256649.

- ^ Henig, Robin Marantz (2000). The monk in the garden: the lost and found genius of Gregor Mendel, the father of genetics. pp. 134–138.

- ^ Miko, Ilona (2008). "Gregor Mendel's principles of inheritance form the cornerstone of modern genetics. So just what are they?". Nature Education. 1 (1): 134. Archived from the original on 19 July 2019. Retrieved 9 May 2021.

- ^ Rocke, Alan J. (2005). "In Search of El Dorado: John Dalton and the Origins of the Atomic Theory". Social Research. 72 (1): 125–158. doi:10.1353/sor.2005.0003. JSTOR 40972005. S2CID 141350239.

- ^ a b Reichl, Linda (1980). A Modern Course in Statistical Physics. Edward Arnold. ISBN 0-7131-2789-9.

- ^ Rao, Y. V. C. (1997). Chemical Engineering Thermodynamics. Universities Press. p. 158. ISBN 978-81-7371-048-3.

- ^ Heidrich, M. (2016). "Bounded energy exchange as an alternative to the third law of thermodynamics". Annals of Physics. 373: 665–681. Bibcode:2016AnPhy.373..665H. doi:10.1016/j.aop.2016.07.031.

- ^ Mould, Richard F. (1995). A century of X-rays and radioactivity in medicine: with emphasis on photographic records of the early years (Reprint. with minor corr ed.). Bristol: Inst. of Physics Publ. p. 12. ISBN 978-0-7503-0224-1.

- ^ a b Estreicher, Tadeusz (1938). "Curie, Maria ze Skłodowskich". Polski słownik biograficzny, vol. 4 (in Polish). p. 113.

- ^ Thomson, J. J. (1897). "Cathode Rays". Philosophical Magazine. 44 (269): 293–316. doi:10.1080/14786449708621070.

- ^ Goyotte, Dolores (2017). "The Surgical Legacy of World War II. Part II: The age of antibiotics" (PDF). The Surgical Technologist. 109: 257–264. Archived (PDF) from the original on 5 May 2021. Retrieved 8 January 2021.

- ^ Erisman, Jan Willem; Sutton, M. A.; Galloway, J.; Klimont, Z.; Winiwarter, W. (October 2008). "How a century of ammonia synthesis changed the world". Nature Geoscience. 1 (10): 636–639. Bibcode:2008NatGe...1..636E. doi:10.1038/ngeo325. S2CID 94880859. Archived from the original on 23 July 2010. Retrieved 22 October 2010.

- ^ Emmett, Robert; Zelko, Frank (2014). Emmett, Rob; Zelko, Frank (eds.). "Minding the Gap: Working Across Disciplines in Environmental Studies". Environment & Society Portal. RCC Perspectives no. 2. doi:10.5282/rcc/6313. Archived from the original on 21 January 2022.

- ^ Furner, Jonathan (1 June 2003). "Little Book, Big Book: Before and After Little Science, Big Science: A Review Article, Part I". Journal of Librarianship and Information Science. 35 (2): 115–125. doi:10.1177/0961000603352006. S2CID 34844169.

- ^ Kraft, Chris; Schefter, James (2001). Flight: My Life in Mission Control. New York: Dutton. pp. 3–5. ISBN 0-525-94571-7.

- ^ Kahn, Herman (1962). Thinking about the Unthinkable. Horizon.

- ^ Shrum, Wesley (2007). Structures of scientific collaboration. Joel Genuth, Ivan Chompalov. Cambridge, MA: MIT Press. ISBN 978-0-262-28358-8.

- ^ Rosser, Sue V. (12 March 2012). Breaking into the Lab: Engineering Progress for Women in Science. New York University Press. p. 7. ISBN 978-0-8147-7645-2.

- ^ Penzias, A. A. (2006). "The origin of elements" (PDF). Science. 205 (4406). Nobel Foundation: 549–554. doi:10.1126/science.205.4406.549. PMID 17729659. Archived (PDF) from the original on 17 January 2011. Retrieved 4 October 2006.

- ^ Weinberg, S. (1972). Gravitation and Cosmology. John Wiley & Sons. pp. 464–495. ISBN 978-0-471-92567-5.

- ^ Futuyma, Douglas J.; Kirkpatrick, Mark (2017). "Chapter 1: Evolutionary Biology". Evolution (4th ed.). Sinauer. pp. 3–26. ISBN 978-1-60535-605-1.

- ^ Miller, Arthur I. (1981). Albert Einstein's special theory of relativity. Emergence (1905) and early interpretation (1905–1911). Reading: Addison–Wesley. ISBN 978-0-201-04679-3.

- ^ ter Haar, D. (1967). The Old Quantum Theory. Pergamon. p. 206. ISBN 978-0-08-012101-7.

- ^ von Bertalanffy, Ludwig (1972). "The History and Status of General Systems Theory". The Academy of Management Journal. 15 (4): 407–426. JSTOR 255139.

- ^ Naidoo, Nasheen; Pawitan, Yudi; Soong, Richie; Cooper, David N.; Ku, Chee-Seng (October 2011). "Human genetics and genomics a decade after the release of the draft sequence of the human genome". Human Genomics. 5 (6): 577–622. doi:10.1186/1479-7364-5-6-577. PMC 3525251. PMID 22155605.

- ^ Rashid, S. Tamir; Alexander, Graeme J. M. (March 2013). "Induced pluripotent stem cells: from Nobel Prizes to clinical applications". Journal of Hepatology. 58 (3): 625–629. doi:10.1016/j.jhep.2012.10.026. ISSN 1600-0641. PMID 23131523.

- ^ O'Luanaigh, C. (14 March 2013). "New results indicate that new particle is a Higgs boson" (Press release). CERN. Archived from the original on 20 October 2015. Retrieved 9 October 2013.

- ^ Abbott, B. P.; Abbott, R.; Abbott, T. D.; Acernese, F.; Ackley, K.; Adams, C.; Adams, T.; Addesso, P.; Adhikari, R. X.; Adya, V. B.; Affeldt, C.; Afrough, M.; Agarwal, B.; Agathos, M.; Agatsuma, K.; Aggarwal, N.; Aguiar, O. D.; Aiello, L.; Ain, A.; Ajith, P.; Allen, B.; Allen, G.; Allocca, A.; Altin, P. A.; Amato, A.; Ananyeva, A.; Anderson, S. B.; Anderson, W. G.; Angelova, S. V.; et al. (2017). "Multi-messenger Observations of a Binary Neutron Star Merger". The Astrophysical Journal. 848 (2): L12. arXiv:1710.05833. Bibcode:2017ApJ...848L..12A. doi:10.3847/2041-8213/aa91c9. S2CID 217162243.

- ^ Cho, Adrian (2017). "Merging neutron stars generate gravitational waves and a celestial light show". Science. doi:10.1126/science.aar2149.

- ^ "Media Advisory: First Results from the Event Horizon Telescope to be Presented on April 10th". Event Horizon Telescope. 20 April 2019. Archived from the original on 20 April 2019. Retrieved 21 September 2021.

- ^ "Scientific Method: Relationships Among Scientific Paradigms". Seed Magazine. 7 March 2007. Archived from the original on 1 November 2016. Retrieved 4 November 2016.

- ^ Bunge, Mario Augusto (1998). Philosophy of Science: From Problem to Theory. Transaction. p. 24. ISBN 978-0-7658-0413-6.