Seq2seq

Seq2seq is a family of machine learning approaches used for natural language processing.[1] Applications include language translation,[2] image captioning,[3] conversational models,[4] speech recognition,[5] and text summarization.[6] Seq2seq uses sequence transformation: it turns one sequence into another sequence.

History

One naturally wonders if the problem of translation could conceivably be treated as a problem in cryptography. When I look at an article in Russian, I say: 'This is really written in English, but it has been coded in some strange symbols. I will now proceed to decode.'

— Warren Weaver, Letter to Norbert Wiener, March 4, 1947

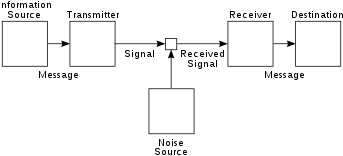

Seq2seq is an approach to machine translation (or, more generally, sequence transduction) with roots in information theory, where communication is understood as an encode-transmit-decode process and machine translation can be studied as a special case of communication. This viewpoint was elaborated, for example, in the noisy channel model of machine translation.

In practice, seq2seq maps an input sequence into a real-numerical vector by using a neural network (the encoder), and then maps it back to an output sequence using another neural network (the decoder).

The idea of encoder-decoder sequence transduction was developed in the early 2010s (see the earlier work cited in [2][1]). The two papers most commonly cited as the origin of seq2seq were both published in 2014.[2][1]

In seq2seq as proposed in these papers, both the encoder and the decoder were LSTMs. This design had a "bottleneck" problem: because the encoding vector has a fixed size, information from long input sequences tends to be lost, as it is difficult to fit into the fixed-length vector. The attention mechanism, proposed in 2014,[7] resolved the bottleneck problem. Its authors called their model RNNsearch, as it "emulates searching through a source sentence during decoding a translation".

A problem with seq2seq models at this point was that recurrent neural networks are difficult to parallelize. The 2017 publication of Transformers[8] resolved the problem by replacing the encoding RNN with self-attention Transformer blocks ("encoder blocks"), and the decoding RNN with cross-attention causally-masked Transformer blocks ("decoder blocks").

Priority dispute

One of the papers cited as the originator of seq2seq is Sutskever et al. (2014),[1] published at Google Brain while the authors were working on Google's machine translation project. The research allowed Google to overhaul Google Translate into Google Neural Machine Translation in 2016.[1][9] Tomáš Mikolov claims to have developed the idea (before joining Google Brain) of using a "neural language model on pairs of sentences... and then [generating] translation after seeing the first sentence", which he equates with seq2seq machine translation. He also claims to have mentioned the idea to Ilya Sutskever and Quoc Le (while at Google Brain), who did not acknowledge him in their paper.[10] Mikolov had worked on RNNLM (using RNNs for language modelling) for his PhD thesis,[11] and is better known for developing word2vec.

Architecture

The main reference for this section is Zhang et al.[12]

Encoder

The encoder is responsible for processing the input sequence and capturing its essential information, which is stored as the hidden state of the network and, in a model with an attention mechanism, as a context vector. The context vector is a weighted sum of the input hidden states and is generated anew for every time step of the output sequence.

Decoder

The decoder takes the context vector and hidden states from the encoder and generates the final output sequence. The decoder operates in an autoregressive manner, producing one element of the output sequence at a time. At each step, it considers the previously generated elements, the context vector, and the input sequence information to predict the next element of the output sequence. Specifically, in a model with an attention mechanism, the context vector and the hidden state are concatenated to form an attention hidden vector, which is used as an input to the decoder.

The seq2seq method developed in the early 2010s uses two neural networks: an encoder network converts an input sentence into numerical vectors, and a decoder network converts those vectors into sentences in the target language. The attention mechanism was grafted onto this structure in 2014, and the design was later refined into the encoder-decoder Transformer architecture of 2017.
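As an illustration of the encoder-decoder split described above, the following is a minimal sketch in Python with NumPy. It is not the architecture of any cited paper: it assumes plain tanh recurrent cells instead of LSTMs, has no attention, and uses random, untrained weights. All sizes, token ids, and function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

VOCAB, HIDDEN = 10, 8   # hypothetical sizes, for illustration only
BOS, EOS = 0, 1         # hypothetical ids for the <bos> and <eos> tokens

# Randomly initialised (untrained) parameters.
E = rng.normal(scale=0.1, size=(VOCAB, HIDDEN))            # token embeddings
W_enc = rng.normal(scale=0.1, size=(2 * HIDDEN, HIDDEN))   # encoder cell
W_dec = rng.normal(scale=0.1, size=(2 * HIDDEN, HIDDEN))   # decoder cell
W_out = rng.normal(scale=0.1, size=(HIDDEN, VOCAB))        # output projection

def rnn_step(W, x, h):
    """One plain tanh-RNN step on the concatenation [input, previous state]."""
    return np.tanh(np.concatenate([x, h]) @ W)

def encode(tokens):
    """Read the input left to right and return the final hidden state."""
    h = np.zeros(HIDDEN)
    for t in tokens:
        h = rnn_step(W_enc, E[t], h)
    return h

def decode(h, max_len=5):
    """Generate tokens one at a time, starting from the encoder state."""
    out, prev = [], BOS
    for _ in range(max_len):
        h = rnn_step(W_dec, E[prev], h)
        prev = int(np.argmax(h @ W_out))  # greedy choice of the next token
        out.append(prev)
        if prev == EOS:
            break
    return out

summary = encode([4, 7, 2, 9])   # fixed-size vector summarising the input
output = decode(summary)         # output token ids (meaningless until trained)
```

Because the whole input is compressed into the single fixed-size vector `summary`, this sketch also exhibits the bottleneck that attention was introduced to address.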

Training vs prediction

There is a subtle difference between training and prediction. During training time, both the input and the output sequences are known. During prediction time, only the input sequence is known, and the output sequence must be decoded by the network itself.

Specifically, consider an input sequence $x_1, \dots, x_n$ and an output sequence $y_1, \dots, y_m$. The encoder would process the input step by step. After that, the decoder would take the output from the encoder, as well as the <bos> token, as input and produce a prediction $\hat{y}_1$. Now, the question is: what should be input to the decoder in the next step?

A standard method for training is "teacher forcing". In teacher forcing, no matter what is output by the decoder, the next input to the decoder is always the reference. That is, even if $\hat{y}_1 \neq y_1$, the next input to the decoder is still $y_1$, and so on.

During prediction time, the "teacher" would be unavailable. Therefore, the input to the decoder must be $\hat{y}_1$, then $\hat{y}_2$, and so on.

It has been found that a model trained purely by teacher forcing performs worse at prediction time, since generation based on the model's own output differs from generation based on the teacher's output. This is called exposure bias, or a train/test distribution shift. A 2015 paper recommends randomly switching between teacher forcing and no teacher forcing during training.[13]
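The difference between the two regimes can be sketched in a few lines of Python. The decoder here is a hypothetical stand-in (`toy_decoder_step`) that maps the previous token to a prediction, and the mixing rule uses a fixed probability per step; this is a simplification for illustration and not the exact schedule of the cited paper.

```python
import random

def toy_decoder_step(prev_token):
    """Hypothetical stand-in for one decoder step: returns a predicted token."""
    return (prev_token * 3 + 1) % 7

def decoder_inputs(reference, teacher_forcing_prob, rng, bos=0):
    """Return the token fed to the decoder at each step.

    With probability `teacher_forcing_prob` the next input is the reference
    token; otherwise it is the model's own previous prediction.
    """
    inputs, prev = [], bos
    for y_t in reference:
        inputs.append(prev)
        y_hat = toy_decoder_step(prev)
        use_teacher = rng.random() < teacher_forcing_prob
        prev = y_t if use_teacher else y_hat
    return inputs

reference = [5, 2, 6, 1]
rng = random.Random(0)
forced = decoder_inputs(reference, 1.0, rng)  # pure teacher forcing
free = decoder_inputs(reference, 0.0, rng)    # prediction-time behaviour
mixed = decoder_inputs(reference, 0.5, rng)   # random mixture of the two
```

With probability 1.0 the decoder sees the reference shifted by one position; with probability 0.0 it sees only its own predictions, so an early mistake propagates through every later step.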

Attention for seq2seq

The attention mechanism is an enhancement introduced by Bahdanau et al. in 2014 to address a limitation of the basic seq2seq architecture: for longer input sequences, the hidden-state output of the encoder becomes less relevant to the decoder. Attention enables the model to selectively focus on different parts of the input sequence during decoding. At each decoder step, an alignment model calculates the attention scores using the current decoder state and all of the attention hidden vectors as input. The alignment model is another neural network, trained jointly with the seq2seq model, that calculates how well an input, represented by its hidden state, matches the previous output, represented by the attention hidden state. A softmax function is then applied to the attention scores to obtain the attention weights.

In some models, the encoder states are fed directly into an activation function, removing the need for an alignment model. The activation function receives one decoder state and one encoder state and returns a scalar value measuring their relevance.
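The two scoring options can be contrasted in a short NumPy sketch. The additive form below, a one-hidden-layer network over the decoder and encoder states, is a commonly used shape for the alignment model; the dot product stands in for the parameter-free alternative. The sizes, the random untrained weights, and the function names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 4  # hypothetical hidden size

# Parameters of a small alignment network (random, untrained).
W_s = rng.normal(size=(D, D))
W_h = rng.normal(size=(D, D))
v = rng.normal(size=D)

def additive_score(s, h):
    """Alignment model: a small network scoring a (decoder, encoder) state pair."""
    return float(v @ np.tanh(W_s @ s + W_h @ h))

def dot_score(s, h):
    """Parameter-free alternative: relevance as a plain dot product."""
    return float(s @ h)

def softmax(x):
    x = np.asarray(x, dtype=float)
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()

decoder_state = rng.normal(size=D)
encoder_states = rng.normal(size=(5, D))  # five input positions

scores = [additive_score(decoder_state, h) for h in encoder_states]
weights = softmax(scores)  # attention weights over the five positions
```

Either scoring function returns one scalar per encoder position; the softmax then turns those scalars into weights that are positive and sum to one.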

Consider a seq2seq English-to-French translation task. To be concrete, let us consider the translation of "the zone of international control <end>", which should translate to "la zone de contrôle international <end>". Here, we use the special <end> token as a control character to delimit the end of input for both the encoder and the decoder.

An input sequence of text is processed by a neural network (which can be an LSTM, a Transformer encoder, or some other network) into a sequence of real-valued vectors $h_0, h_1, \dots$, where $h$ stands for "hidden vector".

After the encoder has finished processing, the decoder starts operating over the hidden vectors, to produce an output sequence $y_0, y_1, \dots$, autoregressively. That is, it always takes as input both the hidden vectors produced by the encoder, and what the decoder itself has produced before, to produce the next output word:

- ($h_0, h_1, \dots$, "<start>") → "la"

- ($h_0, h_1, \dots$, "<start> la") → "la zone"

- ($h_0, h_1, \dots$, "<start> la zone") → "la zone de"

- ...

- ($h_0, h_1, \dots$, "<start> la zone de contrôle international") → "la zone de contrôle international <end>"

Here, we use the special <start> token as a control character to delimit the start of input for the decoder. The decoding terminates as soon as "<end>" appears in the decoder output.
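The decoding loop just described can be sketched as follows. The function `toy_next_word` is a hypothetical stand-in for a trained decoder: it ignores the encoder output and simply looks up the next word of the example translation, so that only the control flow (start token, growing prefix, stop on "<end>") is illustrated.

```python
def toy_next_word(hidden_vectors, prefix):
    """Hypothetical stand-in for a trained decoder: looks up the next word.

    A real decoder would compute this from `hidden_vectors` and `prefix`;
    here the encoder output is ignored and the answer is hard-coded.
    """
    target = ["la", "zone", "de", "contrôle", "international", "<end>"]
    return target[len(prefix) - 1]  # prefix always begins with "<start>"

def greedy_decode(hidden_vectors, next_word, max_len=20):
    """Extend the prefix one word at a time until <end> or max_len words."""
    prefix = ["<start>"]
    while len(prefix) <= max_len:
        word = next_word(hidden_vectors, prefix)
        prefix.append(word)
        if word == "<end>":  # stop as soon as <end> is produced
            break
    return prefix[1:]  # drop the <start> control token

hidden_vectors = None  # placeholder for the encoder's hidden vectors
translation = greedy_decode(hidden_vectors, toy_next_word)
print(" ".join(translation))
```

The `max_len` guard is a design choice of this sketch: a real model is not guaranteed to emit "<end>", so decoding loops usually cap the output length.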

Attention weights

As hand-crafting weights defeats the purpose of machine learning, the model must compute the attention weights on its own. Borrowing an analogy from the language of database queries, we make the model construct a triple of vectors: key, query, and value. The rough idea is that we have a "database" in the form of a list of key-value pairs. The decoder sends in a query and obtains a reply in the form of a weighted sum of the values, where each weight reflects how closely the query resembles the corresponding key.

The decoder first processes the "<start>" input partially, to obtain an intermediate vector $h^d_0$, the 0th hidden vector of the decoder. Then, the intermediate vector is transformed by a linear map $W^Q$ into a query vector $q_0 = h^d_0 W^Q$. Meanwhile, the hidden vectors output by the encoder are transformed by another linear map $W^K$ into key vectors $k_0 = h_0 W^K, k_1 = h_1 W^K, \dots$. The linear maps are useful for providing the model with enough freedom to find the best way to represent the data.

Now, the query and keys are compared by taking dot products: $q_0 k_0^T, q_0 k_1^T, \dots$. Ideally, the model should have learned to compute the keys and values such that $q_0 k_0^T$ is large, $q_0 k_1^T$ is small, and the rest are very small. This can be interpreted as saying that the attention weight should be mostly applied to the 0th hidden vector of the encoder, a little to the 1st, and essentially none to the rest.

In order to make a properly weighted sum, we need to transform this list of dot products into a probability distribution over $h_0, h_1, \dots$. This can be accomplished by the softmax function, thus giving us the attention weights:

$$(w_{00}, w_{01}, \dots) = \mathrm{softmax}(q_0 k_0^T, q_0 k_1^T, \dots)$$

This is then used to compute the context vector:

$$c_0 = w_{00} v_0 + w_{01} v_1 + \cdots$$

where $v_0 = h_0 W^V, v_1 = h_1 W^V, \dots$ are the value vectors, linearly transformed by another matrix $W^V$ to provide the model with freedom to find the best way to represent values. Without the matrices $W^Q, W^K, W^V$, the model would be forced to use the same hidden vector for both key and value, which might not be appropriate, as these two tasks are not the same.

This is the dot-attention mechanism. The particular version described in this section is "decoder cross-attention", as the output context vector is used by the decoder, and the input keys and values come from the encoder, but the query comes from the decoder, thus "cross-attention".

More succinctly, we can write it as

$$c_0 = \mathrm{Attention}(h^d_0 W^Q, H W^K, H W^V) = \mathrm{softmax}\left((h^d_0 W^Q)(H W^K)^T\right)(H W^V)$$

where the matrix $H$ is the matrix whose rows are $h_0, h_1, \dots$. Note that the querying vector, $h^d_0$, is not necessarily the same as the key-value vector $h_0$. In fact, it is theoretically possible for query, key, and value vectors to all be different, though that is rarely done in practice.
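The dot-attention computation of this section can be sketched in NumPy as follows. The names `H` (encoder hidden vectors as rows), `h_dec` (a decoder hidden vector), and `W_Q`, `W_K`, `W_V` (the three linear maps) are naming assumptions of this sketch, as are the sizes and the random, untrained values. The sketch follows the unscaled dot product described here; it does not include the scaling factor used in some later variants.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D = 6, 4  # hypothetical: six encoder positions, hidden size four

H = rng.normal(size=(N, D))    # encoder hidden vectors, one per row
h_dec = rng.normal(size=D)     # a decoder hidden vector
W_Q = rng.normal(size=(D, D))  # query projection
W_K = rng.normal(size=(D, D))  # key projection
W_V = rng.normal(size=(D, D))  # value projection

def softmax(x):
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()

def cross_attention(h_dec, H, W_Q, W_K, W_V):
    """Dot-product cross-attention for a single decoder query."""
    q = h_dec @ W_Q      # query, shape (D,)
    K = H @ W_K          # keys, shape (N, D)
    V = H @ W_V          # values, shape (N, D)
    scores = K @ q       # dot product of the query with every key
    w = softmax(scores)  # attention weights over the N positions
    c = w @ V            # context vector: weighted sum of the values
    return c, w

context, weights = cross_attention(h_dec, H, W_Q, W_K, W_V)
```

The query comes from the decoder while the keys and values come from the encoder, which is what makes this cross-attention; passing the same sequence for both roles would instead give self-attention.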

Other applications

In 2019, Facebook announced the use of seq2seq in symbolic integration and the resolution of differential equations. The company claimed that it could solve complex equations more rapidly and with greater accuracy than commercial solutions such as Mathematica, MATLAB and Maple. First, the equation is parsed into a tree structure to avoid notational idiosyncrasies. An LSTM neural network then applies its standard pattern recognition facilities to process the tree.[14][15]

In 2020, Google released Meena, a 2.6 billion parameter seq2seq-based chatbot trained on a 341 GB data set. Google claimed that the chatbot has 1.7 times greater model capacity than OpenAI's GPT-2.[4]

In 2022, Amazon introduced AlexaTM 20B, a moderate-sized (20 billion parameter) seq2seq language model. It uses an encoder-decoder to accomplish few-shot learning. The encoder outputs a representation of the input that the decoder uses as input to perform a specific task, such as translating the input into another language. The model outperforms the much larger GPT-3 in language translation and summarization. Training mixes denoising (appropriately inserting missing text in strings) and causal-language-modeling (meaningfully extending an input text). It allows adding features across different languages without massive training workflows. AlexaTM 20B achieved state-of-the-art performance in few-shot-learning tasks across all Flores-101 language pairs, outperforming GPT-3 on several tasks.[16]

References

- Sutskever, Ilya; Vinyals, Oriol; Le, Quoc Viet (2014). "Sequence to sequence learning with neural networks". arXiv:1409.3215 [cs.CL].

- Cho, Kyunghyun; van Merrienboer, Bart; Gulcehre, Caglar; Bahdanau, Dzmitry; Bougares, Fethi; Schwenk, Holger; Bengio, Yoshua (2014-06-03). "Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation". arXiv:1406.1078 [cs.CL].

- Xu, Kelvin; Ba, Jimmy; Kiros, Ryan; Cho, Kyunghyun; Courville, Aaron; Salakhutdinov, Ruslan; Zemel, Rich; Bengio, Yoshua (2015-06-01). "Show, Attend and Tell: Neural Image Caption Generation with Visual Attention". Proceedings of the 32nd International Conference on Machine Learning. PMLR: 2048–2057.

- Adiwardana, Daniel; Luong, Minh-Thang; So, David R.; Hall, Jamie; Fiedel, Noah; Thoppilan, Romal; Yang, Zi; Kulshreshtha, Apoorv; Nemade, Gaurav; Lu, Yifeng; Le, Quoc V. (2020-01-31). "Towards a Human-like Open-Domain Chatbot". arXiv:2001.09977 [cs.CL].

- Chan, William; Jaitly, Navdeep; Le, Quoc; Vinyals, Oriol (March 2016). "Listen, attend and spell: A neural network for large vocabulary conversational speech recognition". 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE. pp. 4960–4964. doi:10.1109/ICASSP.2016.7472621. ISBN 978-1-4799-9988-0.

- Rush, Alexander M.; Chopra, Sumit; Weston, Jason (September 2015). "A Neural Attention Model for Abstractive Sentence Summarization". In Màrquez, Lluís; Callison-Burch, Chris; Su, Jian (eds.). Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing. Lisbon, Portugal: Association for Computational Linguistics. pp. 379–389. doi:10.18653/v1/D15-1044.

- Bahdanau, Dzmitry; Cho, Kyunghyun; Bengio, Yoshua (2014). "Neural Machine Translation by Jointly Learning to Align and Translate". arXiv:1409.0473 [cs.CL].

- Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N; Kaiser, Łukasz; Polosukhin, Illia (2017). "Attention is All you Need". Advances in Neural Information Processing Systems. 30. Curran Associates, Inc.

- Wu, Yonghui; Schuster, Mike; Chen, Zhifeng; Le, Quoc V.; Norouzi, Mohammad; Macherey, Wolfgang; Krikun, Maxim; Cao, Yuan; Gao, Qin; Macherey, Klaus; Klingner, Jeff; Shah, Apurva; Johnson, Melvin; Liu, Xiaobing; Kaiser, Łukasz (2016). "Google's Neural Machine Translation System: Bridging the Gap between Human and Machine Translation". arXiv:1609.08144 [cs.CL].

- Mikolov, Tomáš (December 13, 2023). "Yesterday we received a Test of Time Award at NeurIPS for the word2vec paper from ten years ago". Facebook. Archived from the original on 24 Dec 2023.

- Mikolov, Tomáš (2012). "Statistical language models based on neural networks".

- Zhang, Aston; Lipton, Zachary; Li, Mu; Smola, Alexander J. (2024). "10.7. Sequence-to-Sequence Learning for Machine Translation". Dive into deep learning. Cambridge New York Port Melbourne New Delhi Singapore: Cambridge University Press. ISBN 978-1-009-38943-3.

- Bengio, Samy; Vinyals, Oriol; Jaitly, Navdeep; Shazeer, Noam (2015). "Scheduled Sampling for Sequence Prediction with Recurrent Neural Networks". Advances in Neural Information Processing Systems. 28. Curran Associates, Inc.

- "Facebook has a neural network that can do advanced math". MIT Technology Review. December 17, 2019. Retrieved 2019-12-17.

- Lample, Guillaume; Charton, François (2019). "Deep Learning for Symbolic Mathematics". arXiv:1912.01412 [cs.SC].

- Soltan, Saleh; Ananthakrishnan, Shankar; FitzGerald, Jack; Gupta, Rahul; Hamza, Wael; Khan, Haidar; Peris, Charith; Rawls, Stephen; Rosenbaum, Andy; Rumshisky, Anna; Chandana Satya Prakash; Sridhar, Mukund; Triefenbach, Fabian; Verma, Apurv; Tur, Gokhan; Natarajan, Prem (2022). "AlexaTM 20B: Few-Shot Learning Using a Large-Scale Multilingual Seq2Seq Model". arXiv:2208.01448 [cs.CL].

External links

- Voita, Lena. "Sequence to Sequence (seq2seq) and Attention". Retrieved 2023-12-20.

- "A ten-minute introduction to sequence-to-sequence learning in Keras". blog.keras.io. Retrieved 2019-12-19.