
Facebook is an American social media and social networking service owned by the American technology conglomerate Meta. Created in 2004 by Mark Zuckerberg with four other Harvard College students and roommates, Eduardo Saverin, Andrew McCollum, Dustin Moskovitz, and Chris Hughes, its name derives from the face book directories often given to American university students. Membership was initially limited to Harvard students, gradually expanding to other North American universities.

Since 2006, Facebook has allowed anyone aged 13 or older to register, except in a handful of countries where the minimum age is 14.[6] As of December 2023, Facebook claimed almost 3.07 billion monthly active users worldwide.[7] As of July 2025, it ranked as the third-most-visited website in the world, with 23% of its traffic coming from the United States.[8] It was the most downloaded mobile app of the 2010s.[9]

Facebook can be accessed from devices with Internet connectivity, such as personal computers, tablets and smartphones. After registering, users can create a profile revealing personal information about themselves. They can post text, photos and multimedia which are shared with any other users who have agreed to be their friend or, with different privacy settings, publicly. Users can also communicate directly with each other with Messenger, edit messages (within 15 minutes after sending),[10][11] join common-interest groups, and receive notifications on the activities of their Facebook friends and the pages they follow.

Facebook has often been criticized over issues such as user privacy (as with the Facebook–Cambridge Analytica data scandal), political manipulation (as with the 2016 U.S. elections) and mass surveillance.[12] The company has also been subject to criticism over its psychological effects such as addiction and low self-esteem, and over content such as fake news, conspiracy theories, copyright infringement, and hate speech.[13] Commentators have accused Facebook of willingly facilitating the spread of such content, as well as exaggerating its number of users to appeal to advertisers.[14]

History


The history of Facebook traces its growth from a college networking site to a global social networking service.[15]

While attending Phillips Exeter Academy in the early 2000s, Zuckerberg met Kris Tillery. Tillery, an occasional project collaborator of Zuckerberg's, created a school-based social networking project called Photo Address Book, a digital face book built as a linked database of student information drawn from the official records of the Exeter Student Council. The database linked fields such as names, dorm-specific landline numbers, and student headshots.[16]

In 2003, while attending Harvard University, Mark Zuckerberg built a website called "Facemash". Comparable to Hot or Not, the site used photos from online face books and asked users to choose the "hotter" person.[17] Zuckerberg was reported and faced expulsion, but the charges were dropped.[17]

A "face book" is a student directory featuring photos and personal information. In January 2004, Zuckerberg coded a new site known as "TheFacebook", stating, "It is clear that the technology needed to create a centralized Website is readily available ... the benefits are many." Zuckerberg met with Harvard student Eduardo Saverin, and each agreed to invest $1,000.[18] On February 4, 2004, Zuckerberg launched "TheFacebook".[19]

Membership was initially restricted to students of Harvard College. Dustin Moskovitz, Andrew McCollum, and Chris Hughes joined Zuckerberg to help manage the growth of the site.[20] It became available successively to most universities in the US and Canada.[21][22] In 2004, Napster co-founder Sean Parker became company president[23] and the company moved to Palo Alto, California.[24] PayPal co-founder Peter Thiel gave Facebook its first investment.[25][26] In 2005, the company dropped "the" from its name after purchasing the domain name Facebook.com.[27]

In 2006, Facebook opened to everyone at least 13 years old with a valid email address.[28][29][30] It also introduced key features such as the News Feed, which became central to user engagement. By late 2007, Facebook had 100,000 pages on which companies promoted themselves.[31] Microsoft announced that it had purchased a 1.6% share of Facebook for $240 million ($364 million in 2024 dollars[32]), giving Facebook an implied value of around $15 billion ($22.7 billion in 2024 dollars[32]). Facebook went on to surpass MySpace in global traffic, becoming the world's most popular social media platform.

Facebook generated revenue mainly through targeted advertising based on user data, a model that drove its rapid financial growth. In 2012, Facebook went public in one of the largest IPOs in tech history. Acquisitions played a significant role in Facebook's dominance: it purchased Instagram in 2012, followed by WhatsApp and Oculus VR in 2014, extending its influence beyond social networking into messaging and virtual reality. According to Mashable, Mark Zuckerberg later announced a $60 billion investment in Meta AI.

The Facebook–Cambridge Analytica data scandal in 2018 revealed the misuse of user data to influence elections, sparking global outcry and leading to regulatory fines and hearings. Facebook's role in global events, including its use in organizing movements such as the Arab Spring and its impact on events such as the Rohingya genocide in Myanmar, highlighted its dual nature as a tool for both empowerment and harm. In 2021, Facebook's parent company, Facebook, Inc., renamed itself Meta Platforms, reflecting its shift toward building the "metaverse" and its focus on virtual reality and augmented reality technologies.

Features

Facebook does not officially publish a maximum character limit for posts, though unofficial sources suggest that the limit is high. Posts may also include images and videos. According to Facebook's official business documentation, videos can be up to 240 minutes long and 10 GB in file size, with supported resolutions up to 1080p.[33]

Users can "friend" other users; both sides must agree to become friends.[34] A post's visibility can be set to everyone (public), friends, members of a certain group (group), or selected friends (private).[35] Users can join groups,[36] which are composed of people with shared interests; for example, members might go to the same sporting club, live in the same suburb, own the same breed of pet or share a hobby.[36] Posts in a group can be seen only by its members unless they are set to public.[37]

Users are able to buy, sell, and swap things on Facebook Marketplace or in a Buy, Swap and Sell group.[38][39] Facebook users may advertise events, which can be offline, on a website other than Facebook, or on Facebook.[40]

Website


Technical aspects

The site's primary color is blue because Zuckerberg is red–green colorblind, a fact he discovered after taking a test around 2007.[41][42] Facebook was initially built using PHP, a popular scripting language designed for web development.[43] PHP generated dynamic content and managed data on the server side of the application. Zuckerberg and his co-founders chose PHP for its simplicity and ease of use, which let them quickly develop and deploy the initial version of Facebook. As the user base and functionality grew, the company encountered scalability and performance challenges with PHP, and its engineers developed tools and technologies to optimize PHP performance. The most significant of these was the HipHop Virtual Machine (HHVM), which substantially improved the performance and efficiency of PHP code execution on Facebook's servers.

The site upgraded from HTTP to the more secure HTTPS in January 2011.[44]

2012 architecture

Facebook was developed as a single monolithic application. According to a 2012 interview with Facebook build engineer Chuck Rossi, Facebook compiles into a 1.5 GB binary blob, which is then distributed to the servers using a custom BitTorrent-based release system. Rossi stated that building takes about 15 minutes and releasing to the servers another 15 minutes, and that the build and release process has zero downtime. Changes to Facebook are rolled out daily.[45]

Facebook used a platform based on HBase to store data across distributed machines. In its tailing architecture, events are written to log files, and those logs are tailed (read continuously as new entries are appended). The system rolls these events up and writes them to storage, and the user interface then pulls the data out and displays it to users. User actions reach Facebook as AJAX requests, which are written to a log file using Scribe, a tool developed by Facebook.[46]
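The source does not publish code for this pipeline. The following is a minimal Python sketch of the tailing idea only, assuming newline-delimited JSON events, with a plain file append standing in for Scribe and an in-memory `Counter` standing in for HBase storage. All names (`LogTailer`, `roll_up`, `append_event`, `object_id`, `type`) are hypothetical.

```python
from __future__ import annotations

import json
import os
import tempfile
from collections import Counter


class LogTailer:
    """Returns only the complete lines appended to a log file since the last read."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.offset = 0  # number of bytes already consumed

    def read_new_events(self) -> list[dict]:
        events = []
        with open(self.path, "rb") as f:
            f.seek(self.offset)
            for raw in f:
                if not raw.endswith(b"\n"):
                    break  # a writer is mid-line; pick it up on the next poll
                events.append(json.loads(raw))
                self.offset += len(raw)
        return events


def roll_up(events: list[dict], storage: Counter) -> None:
    """Aggregate raw events into per-object, per-type counters (stand-in for HBase)."""
    for event in events:
        storage[(event["object_id"], event["type"])] += 1


def append_event(path: str, event: dict) -> None:
    """Stand-in for Scribe: append one event as a newline-delimited JSON record."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(event) + "\n")


log_path = os.path.join(tempfile.mkdtemp(), "events.log")
storage: Counter = Counter()
tailer = LogTailer(log_path)

append_event(log_path, {"object_id": 42, "type": "like"})
append_event(log_path, {"object_id": 42, "type": "like"})
roll_up(tailer.read_new_events(), storage)  # first poll sees two events

append_event(log_path, {"object_id": 7, "type": "comment"})
roll_up(tailer.read_new_events(), storage)  # second poll sees only the new one

print(storage[(42, "like")], storage[(7, "comment")])  # 2 1
```

Tracking a byte offset means each poll reads only what was appended since the last one, which is what lets a tailer keep up with an ever-growing log without rescanning it.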

Data is read from these log files using Ptail, an internally built tool that aggregates data from multiple Scribe stores by tailing the log files and pulling data out. Ptail data is separated into three streams (plugin impressions, News Feed impressions, and actions covering both plugins and News Feed) and sent to clusters in different data centers. Puma is used to manage periods of high data flow (input/output, or IO): data is processed in batches to reduce the number of reads and writes needed during periods of high demand. (A hot article generates many impressions and News Feed impressions, which cause huge data skews.) Batches are taken every 1.5 seconds, limited by the memory used when creating a hash table.[46]
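Puma's internals are not described beyond this summary. As a rough illustration of why batching helps under data skew, here is a minimal Python sketch of time-windowed aggregation into a hash table, using the 1.5-second window from the text. The key-count cap standing in for the memory limit, the injected clock, and all names (`BatchAggregator`, `write_batch`) are hypothetical.

```python
import time
from collections import defaultdict
from typing import Callable, Dict, Hashable, List


class BatchAggregator:
    """Counts events in an in-memory hash table and writes them out one batch at a time."""

    def __init__(
        self,
        write_batch: Callable[[Dict[Hashable, int]], None],
        interval_s: float = 1.5,   # batch window taken from the text
        max_keys: int = 100_000,   # hypothetical proxy for the hash table's memory limit
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.write_batch = write_batch
        self.interval_s = interval_s
        self.max_keys = max_keys
        self.clock = clock
        self.table: Dict[Hashable, int] = defaultdict(int)
        self.batch_start = clock()

    def add(self, key: Hashable) -> None:
        self.table[key] += 1
        window_over = self.clock() - self.batch_start >= self.interval_s
        if window_over or len(self.table) >= self.max_keys:
            self.flush()

    def flush(self) -> None:
        if self.table:
            self.write_batch(dict(self.table))  # one storage write per batch, not per event
        self.table = defaultdict(int)
        self.batch_start = self.clock()


# Simulated clock so the example is deterministic.
now = [0.0]
writes: List[Dict[Hashable, int]] = []
agg = BatchAggregator(writes.append, clock=lambda: now[0])

for _ in range(10_000):  # a "hot" article: many impressions on one key
    agg.add(("article:1", "impression"))

now[0] = 1.6  # the window has elapsed, so the next event triggers a flush
agg.add(("article:2", "impression"))

print(len(writes), writes[0][("article:1", "impression")])  # 1 10000
```

Ten thousand impressions on the hot article collapse into a single counter update in one batch write. For brevity this sketch only flushes when an event arrives; a production system would also flush on a timer so that a quiet stream is not held back.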

Data is then output in PHP format. The backend is written in Java, and Thrift is used as the messaging format so that PHP programs can query Java services. Caching solutions help pages display more quickly. The data is then sent to MapReduce servers, where it is queried via Hive; this also serves as a backup, since the data can be recovered from Hive.[46]

Content delivery network (CDN)

Facebook uses its own content delivery network or "edge network" under the domain fbcdn.net for serving static data.[47][48] Until the mid-2010s, Facebook also relied on Akamai for CDN services.[49][50][51]

Hack programming language

On March 20, 2014, Facebook announced a new open-source programming language called Hack. Before its public release, a large portion of Facebook was already running on, and "battle tested" with, the new language.[52]

User profile/personal timeline


Each registered user on Facebook has a personal profile that shows their posts and content.[53] The format of individual user pages was revamped in September 2011 and became known as "Timeline", a chronological feed of a user's stories,[54][55] including status updates, photos, interactions with apps and events.[56] The layout let users add a "cover photo".[56] Users were given more privacy settings.[56] In 2007, Facebook launched Facebook Pages for brands and celebrities to interact with their fanbases.[57][58] In June 2009, Facebook introduced a "Usernames" feature, allowing users to choose a unique nickname used in the URL for their personal profile, for easier sharing.[59][60]

In February 2014, Facebook expanded the gender setting, adding a custom input field that allows users to choose from a wide range of gender identities. Users can also choose which set of gender-specific pronouns should be used in reference to them throughout the site.[61][62][63] In May 2014, Facebook introduced a feature that lets users ask other users for information they have not disclosed on their profiles. If a user does not provide key information, such as location, hometown, or relationship status, other users can use an "ask" button to send a message asking about that item in a single click.[64][65]

News Feed

News Feed appears on every user's homepage and highlights information including profile changes, upcoming events and friends' birthdays.[66] This enabled spammers and other users to manipulate these features by creating illegitimate events or posting fake birthdays to attract attention to their profile or cause.[67] Initially, the News Feed caused dissatisfaction among Facebook users: some complained that it was too cluttered and full of unwanted information, while others were concerned that it made it too easy to track individual activities (such as relationship status changes, events, and conversations with other users).[68] Zuckerberg apologized for the site's failure to include appropriate privacy features, and users then gained control over what types of information are shared automatically with friends. Users can now prevent user-defined categories of friends from seeing updates about certain types of activities, including profile changes, Wall posts and newly added friends.[69]

On February 23, 2010, Facebook was granted a patent[70] on certain aspects of its News Feed. The patent covers News Feeds in which links are provided so that one user can participate in the activity of another user.[71] The sorting and display of stories in a user's News Feed is governed by the EdgeRank algorithm.[72]

The Photos application allows users to upload albums and photos.[73] Each album can contain 200 photos,[74] and privacy settings apply to individual albums. Users can "tag", or label, friends in a photo; the friend receives a notification about the tag with a link to the photo.[75] This photo tagging feature was developed in 2006 by Aaron Sittig, now a Design Strategy Lead at Facebook, and former Facebook engineer Scott Marlette; it was granted a patent in 2011.[76][77]
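Facebook never published EdgeRank in full. Public descriptions from around 2010 summarize it as a sum over the interactions ("edges") attached to a story, where each edge contributes its affinity (how close the viewer is to whoever created the edge) × a weight for the edge type × a time-decay factor. The Python sketch below illustrates only that publicly described shape; the edge weights, the 24-hour half-life and every name in it are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical values: Facebook never published its edge weights or decay curve.
EDGE_WEIGHTS: Dict[str, float] = {"comment": 4.0, "tag": 3.0, "like": 1.0}
HALF_LIFE_HOURS = 24.0


@dataclass(frozen=True)
class Edge:
    kind: str         # type of interaction attached to the story, e.g. "like"
    affinity: float   # closeness between the viewer and the edge's creator
    age_hours: float  # time since the interaction happened


def time_decay(age_hours: float) -> float:
    """Exponential decay that halves an edge's contribution every HALF_LIFE_HOURS."""
    return 0.5 ** (age_hours / HALF_LIFE_HOURS)


def edgerank(edges: List[Edge]) -> float:
    """Sum of affinity x weight x decay over every edge attached to one story."""
    return sum(e.affinity * EDGE_WEIGHTS[e.kind] * time_decay(e.age_hours) for e in edges)


stories: Dict[str, List[Edge]] = {
    "friend_photo": [Edge("comment", 0.9, 1.0), Edge("like", 0.9, 2.0)],
    "page_post": [Edge("like", 0.2, 48.0)] * 5,
}
ranked = sorted(stories, key=lambda name: edgerank(stories[name]), reverse=True)
print(ranked)  # ['friend_photo', 'page_post']
```

Under these assumed numbers, a fresh comment from a close friend outweighs several old, low-affinity likes, which is the qualitative behavior the formula is meant to capture.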

On June 7, 2012, Facebook launched its App Center to help users find games and other applications.[78]

On May 13, 2015, Facebook, in collaboration with major news publishers, launched "Instant Articles" to show news in the Facebook News Feed without users having to leave the site.[79][80] In January 2017, Facebook launched Facebook Stories for iOS and Android in Ireland. The feature, following the format of Snapchat and Instagram stories, allows users to upload photos and videos that appear above friends' and followers' News Feeds and disappear after 24 hours.[81]

On October 11, 2017, Facebook introduced the 3D Posts feature to allow users to upload interactive 3D assets.[82] On January 11, 2018, Facebook announced that it would change News Feed to prioritize content from friends and family and de-emphasize content from media companies.[83] In February 2020, Facebook announced it would spend $1 billion ($1.21 billion in 2024 dollars[32]) to license news material from publishers over the next three years; the pledge came as the company faced scrutiny from governments around the world for not paying for news content appearing on the platform. The pledge was in addition to the $600 million ($729 million in 2024 dollars[32]) paid since 2018 through deals with news companies such as The Guardian and the Financial Times.[84][85][86]

In March and April 2021, in response to Apple's announced changes to the Identifier for Advertisers policy on iOS devices, which required app developers to ask users directly for opt-in permission to track them, Facebook purchased full-page newspaper advertisements urging users to allow tracking and highlighting the effects of targeted ads on small businesses.[87] Facebook's efforts were ultimately unsuccessful: Apple released iOS 14.5 in late April 2021 with the feature, called "App Tracking Transparency". Moreover, statistics from Flurry Analytics, a subsidiary of Verizon Communications, showed that 96% of iOS users in the United States were not permitting tracking at all and that only 12% of iOS users worldwide were allowing it, results that some news outlets described as "Facebook's nightmare" or in similar terms.[88][89][90][91] Despite the news, Facebook stated that the new policy and software update would be "manageable".[92]

Like button


The "like" button, stylized as a "thumbs up" icon, was first enabled on February 9, 2009,[93] and enables users to easily interact with status updates, comments, photos and videos, links shared by friends, and advertisements. Once clicked by a user, the designated content is more likely to appear in friends' News Feeds.[94][95] The button displays the number of other users who have liked the content.[96] The like button was extended to comments in June 2010.[97] In February 2016, Facebook expanded Like into "Reactions", allowing users to choose from five pre-defined emotions: "Love", "Haha", "Wow", "Sad", or "Angry".[98][99][100][101] In late April 2020, during the COVID-19 pandemic, a new "Care" reaction was added.[102]

Instant messaging

Facebook Messenger is an instant messaging service and software application. It began as Facebook Chat in 2008,[103] was revamped in 2010[104] and eventually became a standalone mobile app in August 2011, while remaining part of the user page on browsers.[105] Complementing regular conversations, Messenger lets users make one-to-one[106] and group[107] voice[108] and video calls.[109] Its Android app has integrated support for SMS[110] and "Chat Heads", which are round profile photo icons appearing on-screen regardless of what app is open,[111] while both apps support multiple accounts,[112] conversations with optional end-to-end encryption[113] and "Instant Games".[114] Some features, including sending money[115] and requesting transportation,[116] are limited to the United States.[115] In 2017, Facebook added "Messenger Day", a feature that lets users share photos and videos in a story-format with all their friends with the content disappearing after 24 hours;[117] Reactions, which lets users tap and hold a message to add a reaction through an emoji;[118] and Mentions, which lets users in group conversations type @ to give a particular user a notification.[118]

In April 2020, Facebook began rolling out a new feature called Messenger Rooms, a video chat feature that allows users to chat with up to 50 people at a time.[119] In July 2020, Facebook added a Messenger feature called App Lock that lets iOS users lock their chats with Face ID or Touch ID, as part of several privacy and security changes to Messenger.[120][121] On October 13, 2020, Messenger announced cross-app messaging with Instagram, which launched in September 2021.[122] Alongside the integrated messaging, the application announced a new logo combining the Messenger and Instagram logos.[123]

Businesses and users can interact through Messenger, with features for tracking purchases, receiving notifications and contacting customer service representatives. Third-party developers can integrate apps into Messenger, letting users enter an app while inside Messenger and optionally share details from the app into a chat.[124] Developers can also build chatbots into Messenger; news publishers, for example, have built bots to distribute news.[125] Businesses such as respond.io, Twilio, and Manychat have also used the APIs to develop chatbots and automation platforms for commercial use.[126]
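As a rough illustration of how a Messenger chatbot receives messages, the Python sketch below handles the two webhook steps in isolation: answering the verification request sent when a webhook is registered, and turning an incoming message event into a reply body. The `hub.mode`, `hub.verify_token` and `hub.challenge` parameters and the `entry`/`messaging`/`sender`/`message` payload shape follow Meta's Messenger Platform webhook documentation as commonly described, but should be checked against the current docs. The echo behavior, `VERIFY_TOKEN` value and function names are hypothetical, and the HTTP server, request-signature check and the actual Send API call (which needs a Page access token) are deliberately omitted.

```python
from typing import Dict, List, Optional

VERIFY_TOKEN = "replace-with-your-token"  # hypothetical secret chosen by the bot developer


def verify_subscription(params: Dict[str, str]) -> Optional[str]:
    """Answer the verification GET request sent when the webhook is registered.

    Returns the challenge string to echo back, or None to reject the request.
    """
    if params.get("hub.mode") == "subscribe" and params.get("hub.verify_token") == VERIFY_TOKEN:
        return params.get("hub.challenge")
    return None


def build_replies(payload: dict) -> List[dict]:
    """Turn an incoming webhook payload into Send API-style reply bodies (echo bot)."""
    replies: List[dict] = []
    if payload.get("object") != "page":
        return replies  # not a Page (Messenger) event
    for entry in payload.get("entry", []):
        for event in entry.get("messaging", []):
            text = event.get("message", {}).get("text")
            if text:  # skip events without message text (deliveries, reads, attachments)
                replies.append({
                    "recipient": {"id": event["sender"]["id"]},
                    "message": {"text": f"You said: {text}"},
                })
    return replies


incoming = {
    "object": "page",
    "entry": [{"messaging": [
        {"sender": {"id": "123"}, "recipient": {"id": "456"}, "message": {"text": "hi"}},
    ]}],
}
print(verify_subscription({"hub.mode": "subscribe",
                           "hub.verify_token": VERIFY_TOKEN,
                           "hub.challenge": "42"}))  # 42
print(build_replies(incoming))
```

Keeping the parsing logic as pure functions makes it easy to test without a network; a real bot would wrap them in a web framework and POST each reply body to the Send API.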

The M virtual assistant (U.S. only) scans chats for keywords and suggests relevant actions, such as offering its payments system to users who mention money.[127][128] Group chatbots appear in Messenger as "Chat Extensions". A "Discovery" tab helps users find bots, and special branded QR codes, when scanned, take the user to a specific bot.[129]

Privacy policy

Facebook's data policy outlines its policies for collecting, storing, and sharing users' data.[130] Facebook enables users to control access to individual posts and their profile[131] through privacy settings.[132] The user's name and profile picture (if applicable) are public.

Facebook's revenue depends on targeted advertising, which involves analyzing user data to decide which ads to show each user. Facebook buys data from third parties, gathered from both online and offline sources, to supplement its own data on users. Facebook maintains that it does not share data used for targeted advertising with the advertisers themselves.[133] The company states:

"We provide advertisers with reports about the kinds of people seeing their ads and how their ads are performing, but we don't share information that personally identifies you (information such as your name or email address that by itself can be used to contact you or identifies who you are) unless you give us permission. For example, we provide general demographic and interest information to advertisers (for example, that an ad was seen by a woman between the ages of 25 and 34 who lives in Madrid and likes software engineering) to help them better understand their audience. We also confirm which Facebook ads led you to make a purchase or take an action with an advertiser."[130]

As of October 2021, Facebook stated that it used the following policy for sharing user data with third parties:

Apps, websites, and third-party integrations on or using our Products.

When you choose to use third-party apps, websites, or other services that use, or are integrated with, our Products, they can receive information about what you post or share. For example, when you play a game with your Facebook friends or use a Facebook Comment or Share button on a website, the game developer or website can receive information about your activities in the game or receive a comment or link that you share from the website on Facebook. Also, when you download or use such third-party services, they can access your public profile on Facebook, and any information that you share with them. Apps and websites you use may receive your list of Facebook friends if you choose to share it with them. But apps and websites you use will not be able to receive any other information about your Facebook friends from you, or information about any of your Instagram followers (although your friends and followers may, of course, choose to share this information themselves). Information collected by these third-party services is subject to their own terms and policies, not this one.

Devices and operating systems providing native versions of Facebook and Instagram (i.e. where we have not developed our own first-party apps) will have access to all information you choose to share with them, including information your friends share with you, so they can provide our core functionality to you.

Note: We are in the process of restricting developers' data access even further to help prevent abuse. For example, we will remove developers' access to your Facebook and Instagram data if you haven't used their app in 3 months, and we are changing Login, so that in the next version, we will reduce the data that an app can request without app review to include only name, Instagram username and bio, profile photo and email address. Requesting any other data will require our approval.[130]

Facebook will also share data with law enforcement when required.[130]

Facebook's policies have changed repeatedly since the service's debut, amid a series of controversies covering everything from how well it secures user data, to what extent it allows users to control access, to the kinds of access given to third parties, including businesses, political campaigns and governments. These practices vary by country, as some nations require the company to make data available (and limit access to services), while the European Union's General Data Protection Regulation (GDPR) mandates additional privacy protections.[134]

Bug Bounty Program


On July 29, 2011, Facebook announced its Bug Bounty Program that paid security researchers a minimum of $500 ($699.00 in 2024 dollars[32]) for reporting security holes. The company promised not to pursue "white hat" hackers who identified such problems.[135][136] This led researchers in many countries to participate, particularly in India and Russia.[137]

Reception

Userbase

Facebook's rapid growth began as soon as it became available and continued through 2018, before beginning to decline. Facebook passed 100 million registered users in 2008,[138] and 500 million in July 2010.[139] According to the company's data at the July 2010 announcement, half of the site's membership used Facebook daily, for an average of 34 minutes, while 150 million users accessed the site by mobile.[140]

In October 2012, Facebook's monthly active users passed one billion,[141][142] with 600 million mobile users, 219 billion photo uploads, and 140 billion friend connections.[143] In November 2015, after skepticism about the accuracy of its "monthly active users" measurement, Facebook changed its definition to a logged-in member who visits the Facebook site through a web browser or the mobile app, or uses the Facebook Messenger app, in the 30-day period before the measurement. This excluded the use of third-party services with Facebook integration, which had previously been counted.[146] The 2 billion user mark was crossed in June 2017.[144][145]
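The November 2015 definition can be expressed as a simple counting rule: distinct logged-in users with at least one visit through the website, the mobile app or Messenger in the 30 days before the measurement date, ignoring third-party integrations. The Python sketch below is a hypothetical illustration of that rule, not Facebook's actual pipeline; the `Visit` record and the surface labels are assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable

# Surfaces counted under the post-November 2015 definition; labels are hypothetical.
COUNTED_SURFACES = {"web", "mobile_app", "messenger"}


@dataclass(frozen=True)
class Visit:
    user_id: int
    day: date
    surface: str  # where the logged-in visit happened, e.g. "web" or "third_party"


def monthly_active_users(visits: Iterable[Visit], measured_on: date) -> int:
    """Count distinct users with a qualifying visit in the 30 days before measured_on."""
    window_start = measured_on - timedelta(days=30)
    active = {
        v.user_id
        for v in visits
        if v.surface in COUNTED_SURFACES and window_start <= v.day < measured_on
    }
    return len(active)


today = date(2015, 11, 30)
visits = [
    Visit(1, date(2015, 11, 29), "web"),
    Visit(1, date(2015, 11, 20), "mobile_app"),   # same user, counted once
    Visit(2, date(2015, 11, 15), "messenger"),
    Visit(3, date(2015, 11, 25), "third_party"),  # third-party integration: excluded
    Visit(4, date(2015, 10, 1), "web"),           # outside the 30-day window
]
print(monthly_active_users(visits, today))  # 2
```

Counting a set of user IDs rather than visits is what makes repeat visits by the same person count once.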

From 2017 to 2019, the percentage of the U.S. population over the age of 12 who use Facebook declined from 67% to 61% (a decline of some 15 million U.S. users), with a steeper drop-off among younger Americans: the percentage of U.S. 12- to 34-year-olds who use Facebook fell from 58% in 2015 to 29% in 2019.[147][148] The decline coincided with an increase in the popularity of Instagram, which is also owned by Meta.[147][148] The number of daily active users fell quarter-over-quarter for the first time in the last quarter of 2021, from 1.930 billion to 1.929 billion,[149] but increased again the next quarter despite Facebook being banned in Russia.[150]

Historically, commentators have predicted Facebook's decline or end for reasons such as a declining user base;[151] the legal difficulties of being a closed platform, an inability to generate revenue, offer user privacy or adapt to mobile platforms, or Facebook shutting itself down to make way for a next-generation replacement;[152] or Facebook's role in Russian interference in the 2016 United States elections.[153]

(Chart: growth in Facebook's active users from 2004 to 2.8 billion in 2020.[134])

Demographics

As of April 2023, the countries with the most Facebook users were India and the United States, followed by Indonesia, Brazil, Mexico and the Philippines.[155] By region, the most users in 2018 were in Asia-Pacific (947 million), followed by Europe (381 million) and US-Canada (242 million), with 750 million users in the rest of the world.[156]

Over the 2008–2018 period, the percentage of users under 34 declined to less than half of the total.[134]

Censorship


Facebook's website and mobile apps have been blocked temporarily, intermittently, or permanently in many countries, including Brazil,[157] China,[158] Iran,[159] Vietnam,[160] Pakistan,[161] Syria,[162] and North Korea. In May 2018, the government of Papua New Guinea announced that it would ban Facebook for a month while it considered the website's impact on the country, though no ban has since taken place.[163] In 2019, Facebook announced it would start enforcing its ban on users, including influencers, promoting vaping products, tobacco products, or weapons on its platforms.[164]

Criticisms and controversies

"I'm here today because I believe Facebook's products harm children, stoke division, and weaken our democracy. The company's leadership knows how to make Facebook and Instagram safer, but won't make the necessary changes because they have put their astronomical profits before people."

"I don't believe private companies should make all of the decisions on their own. That's why we have advocated for updated internet regulations for several years now. I have testified in Congress multiple times and asked them to update these regulations. I've written op-eds outlining the areas of regulation we think are most important related to elections, harmful content, privacy, and competition."

Facebook's importance and scale have led to criticism in many domains. Issues include Internet privacy, excessive retention of user information,[167] its facial recognition software, DeepFace,[168][169] its addictive quality[170] and its role in the workplace, including employer access to employee accounts.[171]

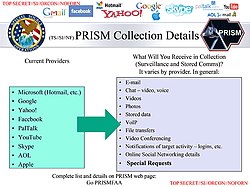

Facebook has been criticized for electricity usage,[172] tax avoidance,[173] real-name user requirement policies,[174] censorship[175][176] and its involvement in the United States PRISM surveillance program.[177] According to The Express Tribune, Facebook "avoided billions of dollars in tax using offshore companies".[178]

Facebook is alleged to have harmful psychological effects on its users, including feelings of jealousy[179][180] and stress,[181][182] a lack of attention[183] and social media addiction.[184][185] According to Kaufmann et al., mothers' motivations for using social media are often related to their social and mental health.[186] European antitrust regulator Margrethe Vestager stated that Facebook's terms of service relating to private data were "unbalanced".[187]

Facebook has been criticized for allowing users to publish illegal or offensive material. Specifics include copyright and intellectual property infringement,[188] hate speech,[189][190] incitement of rape[191] and terrorism,[192][193] fake news,[194][195][196] and crimes, murders, and livestreamed violent incidents.[197][198][199] Commentators have accused Facebook of willingly facilitating the spread of such content.[200][201][202] In May 2019, as a temporary measure to maintain peace, Sri Lanka blocked both Facebook and WhatsApp after anti-Muslim riots, the worst in the country since the Easter Sunday bombings earlier that year.[203][204] In the last quarter of 2018 and the first quarter of 2019 alone, Facebook removed 3 billion fake accounts;[205] by comparison, the social network reported 2.39 billion monthly active users.[205]

In late July 2019, the company announced it was under antitrust investigation by the Federal Trade Commission.[206]

The consumer advocacy group Which? claimed that individuals were still using Facebook to arrange fraudulent five-star product reviews. The group identified 14 communities that exchange reviews for money or free items such as watches, earbuds, and sprinklers.[207]

Privacy concerns


Facebook has faced a steady stream of controversies over how it handles user privacy, repeatedly adjusting its privacy settings and policies.[208] Since 2009, Facebook, along with other social media services, has participated in the secret PRISM program, sharing audio, video, photographs, e-mails, documents and connection logs from user profiles with the US National Security Agency.[209][210]

On November 29, 2011, Facebook settled Federal Trade Commission charges that it deceived consumers by failing to keep privacy promises.[211] In August 2013, High-Tech Bridge published a study showing that Facebook was accessing links included in messages sent through its messaging service.[212] In January 2014, two users filed a lawsuit against Facebook alleging that this practice violated their privacy.[213]

On June 7, 2018, Facebook announced that a bug had resulted in about 14 million Facebook users having their default sharing setting for all new posts set to "public".[214] Its data-sharing agreement with Chinese companies such as Huawei came under the scrutiny of US lawmakers, although the information accessed was not stored on Huawei servers and remained on users' phones.[215] On April 4, 2019, half a billion records of Facebook users were found exposed on Amazon cloud servers, containing information about users' friends, likes, groups, and checked-in locations, as well as names, passwords and email addresses.[216]

The phone numbers of at least 200 million Facebook users were found exposed in an open online database in September 2019. They included 133 million US users, 18 million UK users, and 50 million users in Vietnam. After duplicates were removed, the 419 million records were reduced to 219 million. The database went offline after TechCrunch contacted the web host. The records are thought to have been amassed using a tool that Facebook disabled in April 2018 after the Cambridge Analytica controversy. A Facebook spokeswoman said in a statement: "The dataset is old and appears to have information obtained before we made changes last year...There is no evidence that Facebook accounts were compromised."[217]

Facebook's privacy problems led companies such as Viber Media and Mozilla to stop advertising on Facebook's platforms.[218][219] A January 2024 study by Consumer Reports found that, among a self-selected group of volunteer participants, each user was monitored or tracked by more than two thousand companies on average. LiveRamp, a San Francisco-based data broker, was responsible for 96 per cent of the data. Other companies such as Home Depot, Macy's, and Walmart were involved as well.[220]

In March 2024, a court in California released documents detailing Facebook's 2016 "Project Ghostbusters". The project aimed to help Facebook compete with Snapchat and involved attempts to develop decryption tools to collect, decrypt, and analyze the traffic that users generated when visiting Snapchat and, eventually, YouTube and Amazon. The company ultimately used its Onavo tool to carry out man-in-the-middle attacks and read users' traffic before it was encrypted.[221]

Racial bias

The Equal Employment Opportunity Commission (EEOC) investigated Facebook for "systemic" racial bias based on complaints from three rejected job candidates and a current employee of the company, who was an operations manager at Facebook as of March 2021. The four accused the firm of discriminating against Black people. The EEOC opened its investigation into the case in March 2021.[222]

Shadow profiles

A "shadow profile" refers to the data Facebook collects about individuals without their explicit permission. For example, the "like" button that appears on third-party websites allows the company to collect information about an individual's internet browsing habits, even if the individual is not a Facebook user.[223][224] Data can also be collected through other users. For example, a Facebook user can link their email account to their Facebook account to find friends on the site, allowing the company to collect the email addresses of users and non-users alike.[225] Over time, numerous data points about an individual are collected; while any single data point may not identify an individual, together they allow the company to form a unique "profile".

This practice has been criticized by those who believe people should be able to opt out of involuntary data collection. Additionally, while Facebook users can download and inspect the data they provide to the site, data from the user's "shadow profile" is not included, and non-users of Facebook have no access to this tool at all. The company has also not made clear whether it is possible for a person to revoke Facebook's access to their "shadow profile".[223]

Cambridge Analytica

Facebook customer Global Science Research sold information on over 87 million Facebook users to Cambridge Analytica, a political data analysis firm led by Alexander Nix.[226] Although only about 270,000 people used Global Science Research's app, Facebook's API permitted the collection of data from their friends without the friends' knowledge.[227] At first, Facebook downplayed the significance of the breach and suggested that Cambridge Analytica no longer had access to the data. Facebook then issued a statement expressing alarm and suspended Cambridge Analytica. A review of documents and interviews with former Facebook employees suggested that Cambridge Analytica still possessed the data.[228] This was a violation of Facebook's consent decree with the Federal Trade Commission. The violation potentially carried a penalty of $40,000 ($50,087 in 2024 dollars[32]) per occurrence, which could have totalled trillions of dollars.[229]

According to The Guardian, both Facebook and Cambridge Analytica threatened to sue the newspaper if it published the story. After publication, Facebook claimed that it had been "lied to". On March 23, 2018, the English High Court granted an application by the Information Commissioner's Office for a warrant to search Cambridge Analytica's London offices, ending a standoff between Facebook and the Information Commissioner over responsibility.[230]

On March 25, Facebook published a statement by Zuckerberg in major UK and US newspapers apologizing for a "breach of trust".[231]

You may have heard about a quiz app built by a university researcher that leaked Facebook data of millions of people in 2014. This was a breach of trust, and I'm sorry we didn't do more at the time. We're now taking steps to make sure this doesn't happen again.

We've already stopped apps like this from getting so much information. Now we're limiting the data apps get when you sign in using Facebook.

We're also investigating every single app that had access to large amounts of data before we fixed this. We expect there are others. And when we find them, we will ban them and tell everyone affected.

Finally, we'll remind you which apps you've given access to your information – so you can shut off the ones you don't want anymore.

Thank you for believing in this community. I promise to do better for you.

On March 26, the Federal Trade Commission opened an investigation into the matter.[232] The controversy led Facebook to end its partnerships with data brokers who aid advertisers in targeting users.[208]

On April 24, 2019, Facebook said it could face a fine of between $3 billion ($3.69 billion in 2024 dollars[32]) and $5 billion ($6.15 billion in 2024 dollars[32]) as the result of an investigation by the Federal Trade Commission.[233] On July 24, 2019, the FTC fined Facebook $5 billion, the largest penalty ever imposed on a company for violating consumer privacy. Additionally, Facebook had to implement a new privacy structure, follow a 20-year settlement order, and allow the FTC to monitor the company.[234] Cambridge Analytica's CEO and a developer faced restrictions on future business dealings and were ordered to destroy any personal information they had collected. Cambridge Analytica filed for bankruptcy.[235] Facebook also implemented additional privacy controls and settings,[236] in part to comply with the European Union's General Data Protection Regulation (GDPR), which took effect in May 2018.[237] Facebook also ended its active opposition to the California Consumer Privacy Act.[238]

Some, such as Meghan McCain, have drawn an equivalence between the use of data by Cambridge Analytica and Barack Obama's 2012 campaign, which, according to Investor's Business Daily, "encouraged supporters to download an Obama 2012 Facebook app that, when activated, let the campaign collect Facebook data both on users and their friends."[239][240][241] Carol Davidsen, the Obama for America (OFA) former director of integration and media analytics, wrote that "Facebook was surprised we were able to suck out the whole social graph, but they didn't stop us once they realised that was what we were doing".[240][241] PolitiFact has rated McCain's statements "Half-True", on the basis that "in Obama's case, direct users knew they were handing over their data to a political campaign" whereas with Cambridge Analytica, users thought they were only taking a personality quiz for academic purposes, and while the Obama campaign only used the data "to have their supporters contact their most persuadable friends", Cambridge Analytica "targeted users, friends and lookalikes directly with digital ads."[242]

DataSpii

In July 2019, cybersecurity researcher Sam Jadali exposed a catastrophic data leak known as DataSpii involving data provider DDMR and marketing intelligence company Nacho Analytics (NA).[243][244] Branding itself as "God mode for the internet", NA, through DDMR, provided its members with access to private Facebook photos and Facebook Messenger attachments, including tax returns.[245] DataSpii harvested data from millions of Chrome and Firefox users through compromised browser extensions.[246] The NA website stated that it collected data from millions of opt-in users. Jadali, along with journalists from Ars Technica and The Washington Post, interviewed affected users, including a Washington Post staff member. According to the interviews, the affected users had not consented to such collection.

DataSpii demonstrated how a compromised user exposed the data of others, including the private photos and Messenger attachments belonging to a Facebook user's network of friends.[245]

DataSpii exploited Facebook's practice of making private photos and Messenger attachments publicly accessible via unique URLs. To bolster security, Facebook appends query strings to these URLs that limit the period during which they remain accessible.[245] Nevertheless, NA provided real-time access to these unique URLs, which were intended to be secure. This allowed NA members to access the private content within the restricted time frame designated by Facebook.

The Washington Post's Geoffrey Fowler, in collaboration with Jadali, opened one of Fowler's private Facebook photos in a browser with a compromised browser extension.[243] Within minutes, they anonymously retrieved the "private" photo. To validate this proof of concept, they searched for Fowler's name using NA, which yielded his photo as a search result. In addition, Jadali discovered that Fowler's Washington Post colleague, Nick Mourtoupalas, was directly affected by DataSpii. Jadali's investigation showed how DataSpii disseminated private data to additional third parties, including foreign entities, within minutes of the data being acquired. In doing so, he identified the third parties who were scraping, storing, and potentially enabling facial recognition of individuals in photos furnished by DataSpii.[247]

Breaches

On September 28, 2018, Facebook announced a major security breach that had exposed the data of 50 million users. The breach started in July 2017 and was discovered on September 16.[248] Facebook notified the affected users and logged them out of their accounts.[249][250] In March 2019, Facebook confirmed that a password compromise affecting millions of Facebook Lite users had also affected millions of Instagram users. The cause was that passwords had been stored in plain text rather than encrypted, so that they could be read by Facebook employees.[251]

On December 19, 2019, security researcher Bob Diachenko discovered a database containing more than 267 million Facebook user IDs, phone numbers, and names that were left exposed on the web for anyone to access without a password or any other authentication.[252] In February 2020, Facebook encountered a major security breach in which its official Twitter account was hacked by a Saudi Arabia-based group called "OurMine". The group has a history of actively exposing high-profile social media profiles' vulnerabilities.[253]

In April 2021, The Guardian reported that the data of approximately half a billion users, including birthdates and phone numbers, had been stolen. Facebook claimed it was "old data" from a problem fixed in August 2019, even though the data had been released only in 2021, a year and a half later; the company declined to speak with journalists, had apparently not notified regulators, called the problem "unfixable", and said it would not be advising users.[254] In September 2024, Meta paid a $101 million fine for storing up to 600 million passwords of Facebook and Instagram users in plain text. The practice was first discovered in 2019, though reports indicate that passwords had been stored in plain text since 2012.[255]

Phone data and activity

After acquiring Onavo in 2013, Facebook used its Onavo Protect virtual private network (VPN) app to collect information on users' web traffic and app usage. This allowed Facebook to monitor its competitors' performance and motivated its acquisition of WhatsApp in 2014.[256][257][258] Media outlets classified Onavo Protect as spyware.[259][260][261] In August 2018, Facebook removed the app in response to pressure from Apple, which asserted that it violated Apple's guidelines.[262][263] The Australian Competition and Consumer Commission sued Facebook on December 16, 2020, for "false, misleading or deceptive conduct" over the company's unauthorized use of personal data obtained from Onavo for business purposes, in contrast to Onavo's privacy-oriented marketing.[264][265]

In 2016, Facebook Research launched Project Atlas, offering some users between the ages of 13 and 35 up to $20 per month ($26.00 in 2024 dollars[32]) in exchange for their personal data, including their app usage, web browsing history, web search history, location history, personal messages, photos, videos, emails and Amazon order history.[266][267] In January 2019, TechCrunch reported on the project. This led Apple to revoke Facebook's Enterprise Developer Program certificates for one day, preventing Facebook Research from operating on iOS devices and disabling Facebook's internal iOS apps.[267][268][269]

Ars Technica reported in April 2018 that the Facebook Android app had been harvesting user data, including phone calls and text messages, since 2015.[270][271][272] In May 2018, several Android users filed a class action lawsuit against Facebook for invading their privacy.[273][274] In January 2020, Facebook launched the Off-Facebook Activity page, which allows users to see information collected by Facebook about their non-Facebook activities.[275] The Washington Post columnist Geoffrey A. Fowler found that this included what other apps he used on his phone, even while the Facebook app was closed, what other websites he visited on his phone, and what in-store purchases he made from affiliated businesses, even while his phone was completely off.[276]

In November 2021, Fairplay, Global Action Plan and Reset Australia published a report accusing Facebook of continuing to use data collected from teen users in its ad targeting system.[277] The accusations followed announcements by Facebook in July 2021 that it would stop targeting ads at children.[278][279]

Public apologies

The company first apologized for its privacy abuses in 2009.[280]

Facebook's apologies have appeared in newspapers, on television, in blog posts and on Facebook.[281] On March 25, 2018, leading US and UK newspapers published full-page ads with a personal apology from Zuckerberg. Zuckerberg issued a verbal apology on CNN.[282] In May 2010, he apologized for discrepancies in privacy settings.[281]

Facebook previously spread its privacy settings over 20 pages; it has since put all of them on one page, which makes it more difficult for third-party apps to access users' personal information.[208] In addition to publicly apologizing, Facebook has said that it will review and audit thousands of apps that display "suspicious activities" in an effort to ensure that this breach of privacy does not happen again.[283] A 2010 research report on privacy stated that little information was available about the consequences of what people disclose online, so that what was available often consisted only of reports in the popular media.[284] In 2017, a former Facebook executive went on the record to discuss how social media platforms have contributed to the unraveling of the "fabric of society".[285]

Content disputes and moderation

Facebook relies on its users to generate the content that bonds its users to the service. The company has come under criticism both for allowing objectionable content, including conspiracy theories and fringe discourse,[286] and for prohibiting other content that it deems inappropriate.

Misinformation and fake news

Facebook has been criticized as a vector for fake news and has been accused of bearing responsibility for the spread of the conspiracy theory that the United States created ISIS,[287] for false anti-Rohingya posts used by Myanmar's military to fuel genocide and ethnic cleansing,[288][289] for enabling climate change denial[290][291][292] and Sandy Hook Elementary School shooting conspiracy theorists,[293] and for anti-refugee attacks in Germany.[294][295][296] The government of the Philippines has also used Facebook as a tool to attack its critics.[297]

In 2017, Facebook partnered with fact checkers from the Poynter Institute's international fact-checking network to identify and mark false content, though most ads from political candidates are exempt from this program.[298][299] As of 2018, Facebook had over 40 fact-checking partners across the world, including The Weekly Standard.[300] Critics of the program have accused Facebook of not doing enough to remove false information from its website.[300][301]

Facebook has repeatedly amended its content policies. In July 2018, it stated that it would "downrank" articles that its fact-checkers determined to be false, and remove misinformation that incited violence.[302] Facebook stated that content that receives "false" ratings from its fact-checkers can be demonetized and suffer dramatically reduced distribution. Specific posts and videos that violate community standards can be removed on Facebook.[303] In May 2019, Facebook banned a number of "dangerous" commentators from its platform, including Alex Jones, Louis Farrakhan, Milo Yiannopoulos, Paul Joseph Watson, Paul Nehlen, David Duke, and Laura Loomer, for allegedly engaging in "violence and hate".[304][305]

In May 2020, Facebook agreed to a preliminary settlement of $52 million ($63.2 million in 2024 dollars[32]) to compensate U.S.-based Facebook content moderators for psychological trauma suffered on the job.[306][307] Other legal actions around the world, including in Ireland, await settlement.[308] In September 2020, the Government of Thailand used the Computer Crime Act for the first time to take action against Facebook and Twitter for ignoring requests to take down content and not complying with court orders.[309]

According to a report by Reuters, beginning in 2020, the United States military ran a propaganda campaign to spread disinformation about the Sinovac Chinese COVID-19 vaccine, including using fake social media accounts to spread the disinformation that the Sinovac vaccine contained pork-derived ingredients and was therefore haram under Islamic law.[310] The campaign was described as "payback" for COVID-19 disinformation by China directed against the U.S.[311] In summer 2020, Facebook asked the military to remove the accounts, stating that they violated Facebook's policies on fake accounts and on COVID-19 information.[310] The campaign continued until mid-2021.[310]

Threats and incitement

Professor Ilya Somin reported that he had been the subject of death threats on Facebook in April 2018 from Cesar Sayoc, who threatened to kill Somin and his family and "feed the bodies to Florida alligators". Somin's Facebook friends reported the comments to Facebook, which did nothing except dispatch automated messages.[312] Sayoc was later arrested for the October 2018 United States mail bombing attempts directed at Democratic politicians.

Terrorism

Force v. Facebook, Inc., 934 F.3d 53 (2nd Cir. 2019) was a case in which the plaintiffs alleged that Facebook was profiting from recommendations for Hamas. In 2019, the US Court of Appeals for the Second Circuit held that Section 230 bars civil terrorism claims against social media companies and internet service providers, becoming the first federal appellate court to do so.

Hate speech

In October 2020, Pakistani Prime Minister Imran Khan urged Mark Zuckerberg, in a letter posted on the government's Twitter account, to ban Islamophobic content on Facebook, warning that it encouraged extremism and violence.[313] That same month, the company announced that it would ban Holocaust denial.[314]

In October 2022, Media Matters for America published a report that Facebook and Instagram were still profiting from advertisements using the slur "groomer" for LGBT people.[315] The article reported that Meta had previously confirmed that the use of this word for the LGBT community violates its hate speech policies.[315] The story was subsequently picked up by other news outlets such as the New York Daily News, PinkNews, and LGBTQ Nation.[316][317][318]

Violent erotica

Facebook and Instagram have carried ads containing sexually explicit content, descriptions of graphic violence, and content promoting acts of self-harm. Many of the ads are for webnovel apps backed by tech giants ByteDance and Tencent.[319]

InfoWars

Facebook was criticized for allowing InfoWars to publish falsehoods and conspiracy theories.[303][320][321][322][323] Facebook defended its actions in regard to InfoWars, saying "we just don't think banning Pages for sharing conspiracy theories or false news is the right way to go."[321] Facebook provided only six cases in which it fact-checked content on the InfoWars page over the period from September 2017 to July 2018.[303] In 2018, InfoWars falsely claimed that the survivors of the Parkland shooting were "actors". Facebook pledged to remove InfoWars content making the claim, but InfoWars videos pushing the false claim were left up even though Facebook had been contacted about them.[303] Facebook stated that the videos never explicitly called the survivors actors.[303] Facebook also allowed InfoWars videos promoting the Pizzagate conspiracy theory to remain, despite specific assertions that it would purge Pizzagate content.[303] In late July 2018, Facebook suspended the personal profile of InfoWars head Alex Jones for 30 days.[324] In early August 2018, Facebook banned the four most active InfoWars-related pages for hate speech.[325]

Political manipulation

As a dominant social networking service with massive reach, Facebook has been used by identified or unidentified political operatives to influence public opinion. Some of these activities violate the platform's policies by using "coordinated inauthentic behavior" to generate support or attacks. These activities can be scripted or paid. Various such abusive campaigns have been revealed in recent years, the best known being the Russian interference in the 2016 United States elections. In 2021, Sophie Zhang, a former Facebook analyst on the Spam and Fake Engagement teams, reported more than 25 political subversion operations and criticized Facebook's generally slow reaction time, lack of oversight, and laissez-faire attitude.[326][327][328]

Influence operations and coordinated inauthentic behavior

In 2018, Facebook said that it had identified "coordinated inauthentic behavior" that year in "many Pages, Groups and accounts created to stir up political debate, including in the US, the Middle East, Russia and the UK."[329]

Campaigns operated by the Joint Threat Research Intelligence Group, a British intelligence agency unit, have broadly fallen into two categories: cyber attacks and propaganda efforts. The propaganda efforts use "mass messaging" and the "pushing [of] stories" via social media sites such as Facebook.[330][331] Israel's Jewish Internet Defense Force, the Chinese Communist Party's 50 Cent Party and Turkey's AK Trolls also focus their attention on social media platforms like Facebook.[332][333][334] In July 2018, Samantha Bradshaw, co-author of a report from the Oxford Internet Institute (OII) at Oxford University, said that "The number of countries where formally organised social media manipulation occurs has greatly increased, from 28 to 48 countries globally. The majority of growth comes from political parties who spread disinformation and junk news around election periods."[335] In October 2018, The Daily Telegraph reported that Facebook "banned hundreds of pages and accounts that it says were fraudulently flooding its site with partisan political content – although they came from the United States instead of being associated with Russia."[336]

In December 2018, The Washington Post reported that "Facebook has suspended the account of Jonathon Morgan, the chief executive of a top social media research firm" New Knowledge, "after reports that he and others engaged in an operation to spread disinformation" on Facebook and Twitter during the 2017 United States Senate special election in Alabama.[337][338] In January 2019, Facebook said it had removed 783 Iran-linked accounts, pages and groups for engaging in what it called "coordinated inauthentic behaviour".[339] In March 2019, Facebook sued four Chinese firms for selling "fake accounts, likes and followers" to amplify Chinese state media outlets.[340]

In May 2019, Tel Aviv-based private intelligence agency Archimedes Group was banned from Facebook for "coordinated inauthentic behavior" after Facebook found fake users in countries in sub-Saharan Africa, Latin America and Southeast Asia.[341] Facebook investigations revealed that Archimedes had spent some $1.1 million ($1.35 million in 2024 dollars[32]) on fake ads, paid for in Brazilian reais, Israeli shekels and US dollars.[342] Facebook gave examples of Archimedes Group political interference in Nigeria, Senegal, Togo, Angola, Niger and Tunisia.[343] The Atlantic Council's Digital Forensic Research Lab said in a report that "The tactics employed by Archimedes Group, a private company, closely resemble the types of information warfare tactics often used by governments, and the Kremlin in particular."[344][345]

On May 23, 2019, Facebook released its Community Standards Enforcement Report, stating that it had identified fake accounts through artificial intelligence and human monitoring. Over a six-month period, from October 2018 to March 2019, the site removed a total of 3.39 billion fake accounts, more than the reported 2.4 billion real people on the platform.[346]

In July 2019, Facebook stepped up its measures to counter deceptive political propaganda and other abuse of its services, removing more than 1,800 accounts and pages that were being operated from Russia, Thailand, Ukraine and Honduras.[347] After Russia's invasion of Ukraine in February 2022, Russia's internet regulator announced that it would block access to Facebook.[348] On October 30, 2019, Facebook deleted several accounts belonging to employees of the Israeli NSO Group, stating that the accounts were "deleted for not following our terms". The deletions came after WhatsApp sued the Israeli surveillance firm for targeting 1,400 devices with spyware.[349]

In 2020, Facebook helped found American Edge, an anti-regulation lobbying group formed to fight antitrust probes.[350] The group runs ads that "fail to mention what legislation concerns them, how those concerns could be fixed, or how the horrors they warn of could actually happen", and do not clearly disclose that they are funded by Facebook.[351]

In 2020, the government of Thailand forced Facebook to take down a Facebook group called Royalist Marketplace, which had one million members, over potentially illegal posts shared in it. The authorities also threatened Facebook with legal action. In response, Facebook planned to take legal action against the Thai government for suppressing freedom of expression and violating human rights.[352] In 2020, during the COVID-19 pandemic, Facebook found that troll farms from North Macedonia and the Philippines were pushing coronavirus disinformation. The publisher that used content from these farms was banned.[353]

In the run-up to the 2020 United States elections, Eastern European troll farms operated popular Facebook pages showing content related to Christians and Black Americans. Combined, these operations included more than 15,000 pages and were viewed by 140 million US users per month. This was partly due to how Facebook's algorithm and policies allow unoriginal viral content to be copied and spread in ways that still drive up user engagement. As of September 2021, some of the most popular pages were still active on Facebook despite the company's efforts to take down such content.[354]

In February 2021, Facebook removed the main page of the Myanmar military after two protesters were shot and killed during the anti-coup protests, saying that the page breached its guidelines prohibiting the incitement of violence.[355] On February 25, Facebook announced a ban on all accounts of the Myanmar military, along with "Tatmadaw-linked commercial entities". Citing the "exceptionally severe human rights abuses and the clear risk of future military-initiated violence in Myanmar", the company extended the ban to its subsidiary Instagram.[356] In March 2021, The Wall Street Journal's editorial board criticized Facebook's decision to fact-check its op-ed titled "We'll Have Herd Immunity by April", written by surgeon Marty Makary, calling it "counter-opinion masquerading as fact checking."[357]

Facebook's guidelines allow users to call for the death of public figures and, in some situations, to praise mass killers and "violent non-state actors".[358][359] In 2021, Sophie Zhang, a former Facebook analyst on the Spam and Fake Engagement teams, reported on more than 25 political subversion operations she had uncovered while at Facebook, and on the company's general laissez-faire attitude.[326][327][328]

In 2021, Facebook was cited as having played a role in fomenting the 2021 United States Capitol attack.[360][361]

Russian interference

In 2018, Special Counsel Robert Mueller indicted 13 Russian nationals and three Russian organizations for "engaging in operations to interfere with U.S. political and electoral processes, including the 2016 presidential election."[362][363][364]

Mueller contacted Facebook following the company's disclosure that it had sold more than $100,000 ($131,018 in 2024 dollars[32]) worth of ads to a company (the Internet Research Agency, owned by Russian billionaire and businessman Yevgeniy Prigozhin) with links to the Russian intelligence community before the 2016 United States presidential election.[365][366] In September 2017, Facebook's chief security officer Alex Stamos wrote that the company "found approximately $100,000 in ad spending from June 2015 to May 2017 – associated with roughly 3,000 ads – that was connected to about 470 inauthentic accounts and Pages in violation of our policies. Our analysis suggests these accounts and Pages were affiliated with one another and likely operated out of Russia."[367] The Clinton and Trump campaigns together spent $81 million ($106 million in 2024 dollars[32]) on Facebook ads.[368]

The company pledged full cooperation with Mueller's investigation and provided all information about the Russian advertisements.[369] Members of the House and Senate Intelligence Committees have claimed that Facebook had withheld information that could illuminate the Russian propaganda campaign.[370] Russian operatives have used Facebook to polarize American public discourse, organizing both Black Lives Matter rallies[371][372] and anti-immigrant rallies on U.S. soil,[373] as well as anti-Clinton rallies[374] and rallies both for and against Donald Trump.[375][376] Facebook ads have also been used to exploit divisions over black political activism and Muslims by simultaneously sending contrary messages to different users based on their political and demographic characteristics in order to sow discord.[377][378][379] Zuckerberg has stated that he regrets having dismissed concerns over Russian interference in the 2016 U.S. presidential election.[380]

Russian-American billionaire Yuri Milner, who befriended Zuckerberg[381] between 2009 and 2011, had Kremlin backing for his investments in Facebook and Twitter.[382] In January 2019, Facebook removed 289 pages and 75 coordinated accounts linked to the Russian state-owned news agency Sputnik that had misrepresented themselves as independent news or general interest pages.[383][384] Facebook later identified and removed an additional 1,907 accounts linked to Russia that were found to be engaging in "coordinated inauthentic behaviour".[385] In 2018, a UK House of Commons Digital, Culture, Media and Sport (DCMS) select committee report criticized Facebook for its reluctance to investigate abuse of its platform by the Russian government and for downplaying the extent of the problem, referring to the company as 'digital gangsters'.[386][387][388]

"Democracy is at risk from the malicious and relentless targeting of citizens with disinformation and personalised 'dark adverts' from unidentifiable sources, delivered through the major social media platforms we use every day," Damian Collins, DCMS Committee Chair[388]

In February 2019, Glenn Greenwald wrote that the cybersecurity company New Knowledge, which was behind one of the Senate reports on Russian social media election interference, "was caught just six weeks ago engaging in a massive scam to create fictitious Russian troll accounts on Facebook and Twitter in order to claim that the Kremlin was working to defeat Democratic Senate nominee Doug Jones in Alabama. The New York Times, when exposing the scam, quoted a New Knowledge report that boasted of its fabrications..."[389][390]

Anti-Rohingya propaganda

In 2018, Facebook took down 536 Facebook pages, 17 Facebook groups, 175 Facebook accounts, and 16 Instagram accounts linked to the Myanmar military. Collectively these were followed by over 10 million people.[391] The New York Times reported that:[392]

after months of reports about anti-Rohingya propaganda on Facebook, the company acknowledged that it had been too slow to act in Myanmar. By then, more than 700,000 Rohingya had fled the country in a year, in what United Nations officials called "a textbook example of ethnic cleansing".

Anti-Muslim propaganda and Hindu nationalism in India

A 2019 book titled The Real Face of Facebook in India, co-authored by the journalists Paranjoy Guha Thakurta and Cyril Sam, alleged that Facebook helped enable and benefited from the rise of Narendra Modi's Hindu nationalist Bharatiya Janata Party (BJP) in India.[393] Ankhi Das, Facebook's policy director for India and South and Central Asia, apologized publicly in August 2020 for sharing a Facebook post which called Muslims in India a "degenerate community". She said she shared the post "to reflect my deep belief in celebrating feminism and civic participation".[394] She is reported to have prevented action by Facebook against anti-Muslim content[395][396] and supported the BJP in internal Facebook messages.[397][398]

In 2020, Facebook executives overrode their employees' recommendations that the BJP politician T. Raja Singh should be banned from the site for hate speech and rhetoric that could lead to violence. Singh had said on Facebook that Rohingya Muslim immigrants should be shot and had threatened to destroy mosques. Current and former Facebook employees told The Wall Street Journal that the decision was part of a pattern of favoritism by Facebook toward the BJP as it seeks more business in India.[396] Facebook also took no action after BJP politicians made posts accusing Muslims of intentionally spreading COVID-19, an employee said.[399]

In 2020, the Delhi Assembly began investigating whether Facebook bore blame for the 2020 religious riots in the city, claiming it had found Facebook "prima facie guilty of a role in the violence".[400][401] Following a summons by a Delhi Assembly committee, Facebook India vice-president and managing director Ajit Mohan moved the Supreme Court,[402] which granted him relief and stayed the summons.[403][404][405] The central government later backed the decision and submitted to the court that Facebook could not be made accountable before any state assembly and that the committee formed was unconstitutional.[406][407] Following a fresh notice from the Delhi Assembly panel in 2021 for failing to appear before it as a witness, Mohan challenged it, saying that the 'right to silence' is a virtue in present 'noisy times' and that the legislature had no authority to examine him in a law and order case.[408] In July 2021, the Supreme Court refused to quash the summons and asked Facebook to appear before the Delhi Assembly panel.[409]

On September 23, 2023, it was reported that Facebook had waited about a year before removing, in 2021, a network of accounts run by India's Chinar Corps that spread disinformation putting Kashmiri journalists in danger. The delay, and the company's decision not to publicize the takedown, were due to fears that its local employees would be targeted by authorities and that the action would hurt its business prospects in the country.[410]

Company governance

Early Facebook investor and former Zuckerberg mentor Roger McNamee described Facebook as having "the most centralized decision-making structure I have ever encountered in a large company."[411] Nathan Schneider, a professor of media studies at the University of Colorado Boulder, argued in 2018 for transforming Facebook into a platform cooperative owned and governed by its users.[412]

Facebook co-founder Chris Hughes stated in 2019 that CEO Mark Zuckerberg had too much power, that the company was now a monopoly, and that, as a result, it should be split into multiple smaller companies. He called for the breakup of Facebook in an op-ed in The New York Times. Hughes said he was concerned that Zuckerberg had surrounded himself with a team that did not challenge him and that, as a result, it was the U.S. government's job to hold him accountable and curb his "unchecked power".[413] Hughes also said that "Mark's power is unprecedented and un-American."[414] Several U.S. politicians agreed with Hughes.[415] EU Commissioner for Competition Margrethe Vestager stated that splitting Facebook should only be done as "a remedy of the very last resort", and that doing so would not solve Facebook's underlying problems.[416]

Customer support

Facebook has been criticized for its lack of human customer support.[417] When users' personal and business accounts are breached, many are forced to go through small claims court to regain access and obtain restitution.[418]

Litigation

The company has been subject to repeated litigation.[419][420][421][422] Its most prominent case addressed allegations that Zuckerberg broke an oral contract with Cameron Winklevoss, Tyler Winklevoss, and Divya Narendra to build the then-named "HarvardConnection" social network in 2004.[423][424][425]

On March 6, 2018, BlackBerry sued Facebook and its Instagram and WhatsApp subsidiaries, alleging that they had copied key features of its messaging app.[426] In October 2018, a Texas woman sued Facebook, claiming she had been recruited into the sex trade at the age of 15 by a man who "friended" her on the social media network. Facebook responded that it works both internally and externally to ban sex traffickers.[427][428]

In 2019, British solicitors representing a bullied Syrian schoolboy sued Facebook over false claims about him. They claimed that Facebook protected prominent figures from scrutiny instead of removing content that violates its rules and that the special treatment was financially driven.[429][430] The Federal Trade Commission and a coalition of New York State and 47 other state and regional governments filed separate suits against Facebook on December 9, 2020, seeking antitrust action over its acquisitions of Instagram and WhatsApp, among other companies, and calling these practices anticompetitive. The suits also asserted that, in acquiring these products, Facebook weakened their privacy protections for users. Besides other fines, the suits sought to unwind the acquisitions from Facebook.[431][432]

On January 6, 2022, France's data privacy regulator CNIL fined Facebook 60 million euros, and also fined Google, for not giving internet users an easy way to refuse cookies.[433] On December 22, 2022, the Quebec Court of Appeal approved a class-action lawsuit on behalf of Facebook users who claim they were discriminated against because the platform allows advertisers to target both job and housing advertisements based on various factors, including age, gender, and even race.[434] The lawsuit centers on the platform's practice of "micro targeting ads", claiming that ads are made to appear only in the feeds of people who belong to certain targeted groups. Women, for example, would not see ads targeting men, while older men would not see an ad aimed at people between 18 and 45.[434]

The class action could include thousands of Quebec residents who had used the platform since as early as April 2016 and were seeking jobs or housing during that period.[434] Facebook had 60 days after the court's December 22 ruling to decide whether to appeal the case to the Supreme Court of Canada; if it did not appeal, the case would return to the Quebec Superior Court.[434] On September 21, 2023, the California Court of Appeal ruled that Facebook could be sued for discriminatory advertising under the Unruh Civil Rights Act.[435]

Impact


Scope

A commentator in The Washington Post noted that Facebook constitutes a "massive depository of information that documents both our reactions to events and our evolving customs with a scope and immediacy of which earlier historians could only dream".[436] Especially for anthropologists, social researchers, and social historians—and subject to proper preservation and curation—the website "will preserve images of our lives that are vastly crisper and more nuanced than any ancestry record in existence".[436]

Economy

Economists have noted that Facebook offers many non-rivalrous services that benefit as many users as are interested without forcing users to compete with each other. By contrast, most goods are available to a limited number of users: if one user buys a phone, for example, no other user can buy that phone. Three areas account for most of Facebook's economic impact: platform competition, the marketplace, and user behavior data.[437] Facebook began to reduce its carbon impact after Greenpeace criticized it for its long-term reliance on coal and the resulting carbon footprint.[438] In 2021, Facebook announced that its global operations were supported by 100 percent renewable energy and that it had reached net zero emissions, a goal set in 2018.[439][440]

Facebook provides a development platform for many social gaming, communication, feedback, review, and other applications related to online activities. This platform spawned many businesses and added thousands of jobs to the global economy. Zynga Inc., a leader in social gaming, is an example of such a business. An econometric analysis found that Facebook's app development platform added more than 182,000 jobs in the U.S. economy in 2011. The total economic value of the added employment was about $12 billion ($16.8 billion in 2024 dollars[32]).[441]

Society

Facebook was one of the first large-scale social networks. In The Facebook Effect, David Kirkpatrick said that Facebook's structure makes it difficult to replace because of its "network effects". As of 2016, an estimated 44% of Americans got news through Facebook.[442] A study published by Frontiers Media in 2023 found that Facebook's user base was more polarized than that of even far-right social networks like Gab.[443]

Mental and emotional health

Studies have associated social networks with positive[444] and negative impacts[445][446][447][448][449] on emotional health.

Studies have associated Facebook with feelings of envy, often triggered by vacation and holiday photos. Other triggers include posts by friends about family happiness and images of physical beauty—such feelings leave people dissatisfied with their own lives. A joint study by two German universities discovered that one out of three people were more dissatisfied with their lives after visiting Facebook,[450][451] and another study by Utah Valley University found that college students felt worse about themselves following an increase in time on Facebook.[451][452][453]

Positive effects include signs of "virtual empathy" with online friends and helping introverted people learn social skills.[454] A 2020 experimental study in the American Economic Review found that deactivating Facebook led to increased subjective well-being.[455] In a blog post in December 2017, the company highlighted research showing that "passively consuming" the News Feed, that is, reading without interacting, left users with negative feelings, whereas interacting with messages was linked to improvements in well-being.[456]

Politics

In February 2008, a Facebook group called "One Million Voices Against FARC" organized an event in which hundreds of thousands of Colombians marched in protest against the Revolutionary Armed Forces of Colombia (FARC).[457] In August 2010, one of North Korea's official government websites and the country's official news agency, Uriminzokkiri, joined Facebook.[458]

During the Arab Spring, many journalists claimed Facebook played a major role in the 2011 Egyptian revolution.[459][460] On January 14, the Facebook page "We are all Khaled Said" was started by Wael Ghonim to invite the Egyptian people to "peaceful demonstrations" on January 25. In Tunisia and Egypt, Facebook became the primary tool for connecting protesters, leading the Egyptian government to ban it, Twitter, and other sites.[461] After 18 days, the uprising forced President Hosni Mubarak to resign.