Semantic network

View on Wikipedia

| Part of a series on | ||||

| Network science | ||||

|---|---|---|---|---|

| Network types | ||||

| Graphs | ||||

|

||||

| Models | ||||

|

||||

| ||||

|

| Information mapping |

|---|

| Topics and fields |

| Node–link approaches |

|

| See also |

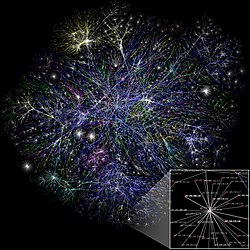

A semantic network, or frame network, is a knowledge base that represents semantic relations between concepts in a network. It is often used as a form of knowledge representation. It is a directed or undirected graph consisting of vertices, which represent concepts, and edges, which represent semantic relations between concepts,[1] mapping or connecting semantic fields. A semantic network may be instantiated as, for example, a graph database or a concept map. Typical standardized semantic networks are expressed as semantic triples.
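As a minimal sketch of the triple form mentioned above, the following Python stores a small network as (subject, predicate, object) tuples and filters them by pattern. The `match` helper and the example facts are illustrative assumptions, not part of any standard.

```python
# A semantic network as a set of (subject, predicate, object) triples.
# The facts and the match() helper are hypothetical, chosen for illustration.
TRIPLES = {
    ("canary", "is-a", "bird"),
    ("bird", "is-a", "vertebrate"),
    ("bird", "has-part", "wings"),
}


def match(triples, subject=None, predicate=None, obj=None):
    """Return the triples that fit the pattern; None acts as a wildcard."""
    return sorted(
        t for t in triples
        if (subject is None or t[0] == subject)
        and (predicate is None or t[1] == predicate)
        and (obj is None or t[2] == obj)
    )


if __name__ == "__main__":
    # List every taxonomic ("is-a") fact in the network.
    print(match(TRIPLES, predicate="is-a"))
```

Storing edges as flat triples keeps the vertex set implicit: any name that appears as a subject or object is a node.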

Semantic networks are used in natural language processing applications such as semantic parsing[2] and word-sense disambiguation.[3] Semantic networks can also be used as a method to analyze large texts and identify the main themes and topics (e.g., of social media posts), to reveal biases (e.g., in news coverage), or even to map an entire research field.

History

Examples of the use of semantic networks in logic, with directed acyclic graphs serving as a mnemonic tool, date back centuries. The earliest documented use is the Greek philosopher Porphyry's commentary on Aristotle's Categories in the third century AD.

In computing history, "Semantic Nets" for the propositional calculus were first implemented for computers by Richard H. Richens of the Cambridge Language Research Unit (CLRU) in 1956 as an "interlingua" for machine translation of natural languages,[4] although the importance of this work and of the CLRU was only belatedly realized.

Semantic networks were also independently implemented by Robert F. Simmons[5] and Sheldon Klein, using first-order predicate calculus as a base, after being inspired by a demonstration by Victor Yngve. As Simmons recalled, the "line of research was originated by the first President of the Association [Association for Computational Linguistics], Victor Yngve, who in 1960 had published descriptions of algorithms for using a phrase structure grammar to generate syntactically well-formed nonsense sentences. Sheldon Klein and I about 1962-1964 were fascinated by the technique and generalized it to a method for controlling the sense of what was generated by respecting the semantic dependencies of words as they occurred in text."[6] Other researchers, most notably M. Ross Quillian[7] and others at System Development Corporation (SDC), contributed to this work in the early 1960s as part of the SYNTHEX project. Most modern derivatives of the term "semantic network" cite these SDC publications as their background. Later prominent works were by Allan M. Collins and Quillian (e.g., Collins and Quillian;[8][9] Collins and Loftus;[10] Quillian[11][12][13][14]). Still later, in 2006, Hermann Helbig fully described MultiNet.[15]

In the late 1980s, two universities in the Netherlands, Groningen and Twente, jointly began a project called Knowledge Graphs. These are semantic networks with the added constraint that edges are restricted to a limited set of possible relations, to facilitate algebras on the graph.[16] In the subsequent decades, the distinction between semantic networks and knowledge graphs was blurred.[17][18] In 2012, Google gave their knowledge graph the name Knowledge Graph.

The Semantic Link Network was systematically studied as a social semantics networking method. Its basic model consists of semantic nodes, semantic links between nodes, and a semantic space that defines the semantics of nodes and links and the reasoning rules on semantic links. The systematic theory and model were published in 2004.[19] This research direction can be traced to the definition of inheritance rules for efficient model retrieval in 1998[20] and the Active Document Framework (ADF).[21] Since 2003, research has developed toward social semantic networking.[22] This work is a systematic innovation in the age of the World Wide Web and global social networking rather than an application or simple extension of the Semantic Net (or network); its purpose and scope differ from those of the Semantic Net.[23] The rules for reasoning and evolution and the automatic discovery of implicit links play an important role in the Semantic Link Network.[24][25] More recently it has been developed to support Cyber-Physical-Social Intelligence[26] and was used to create a general summarization method.[27] The self-organised Semantic Link Network was integrated with a multi-dimensional category space to form a semantic space that supports advanced applications with multi-dimensional abstractions and self-organised semantic links.[28][29] Text summarisation applications have indicated that the Semantic Link Network plays an important role in understanding and representation.[30][31] The Semantic Link Network has been extended from cyberspace to cyber-physical-social space, and competition and symbiosis relations, as well as their roles in evolving society, were studied in the emerging topic of Cyber-Physical-Social Intelligence.[32]

More specialized forms of semantic networks have been created for specific uses. For example, in 2008, Fawsy Bendeck's PhD thesis formalized the Semantic Similarity Network (SSN), which contains specialized relationships and propagation algorithms to simplify the representation and calculation of semantic similarity.[33]

Basics of semantic networks

A semantic network is used when one has knowledge that is best understood as a set of concepts that are related to one another.

Most semantic networks are cognitively based. They also consist of arcs and nodes which can be organized into a taxonomic hierarchy. Semantic networks contributed ideas of spreading activation, inheritance, and nodes as proto-objects.

Examples

In Lisp

The following code shows an example of a semantic network in the Lisp programming language using an association list.

    (setq *database*
          '((canary (is-a bird)
                    (color yellow)
                    (size small))
            (penguin (is-a bird)
                     (movement swim))
            (bird (is-a vertebrate)
                  (has-part wings)
                  (reproduction egg-laying))))

To extract all the information about the "canary" type, one would use the assoc function with a key of "canary".[34]
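For comparison, a rough Python analogue of the association list is sketched below. The `lookup` helper, which follows is-a links to collect inherited properties, is an assumption added for illustration; the Lisp snippet only stores the data and retrieves one entry at a time.

```python
# Python analogue of the Lisp association list: concept -> property table.
DATABASE = {
    "canary": {"is-a": "bird", "color": "yellow", "size": "small"},
    "penguin": {"is-a": "bird", "movement": "swim"},
    "bird": {"is-a": "vertebrate", "has-part": "wings",
             "reproduction": "egg-laying"},
}


def lookup(db, concept):
    """Collect a concept's properties, inheriting along is-a links.

    Values set on the more specific concept take precedence over
    values inherited from its ancestors.
    """
    properties = {}
    seen = set()  # guards against accidental is-a cycles
    while concept in db and concept not in seen:
        seen.add(concept)
        for key, value in db[concept].items():
            if key != "is-a":
                properties.setdefault(key, value)
        concept = db[concept].get("is-a")
    return properties


if __name__ == "__main__":
    print(DATABASE["canary"])          # direct entry, like (assoc 'canary ...)
    print(lookup(DATABASE, "canary"))  # entry plus properties inherited from bird
```

The plain dictionary access corresponds to the `assoc` call; the inheritance walk is the extra step a reasoner would add on top of the stored facts.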

WordNet

An example of a semantic network is WordNet, a lexical database of English. It groups English words into sets of synonyms called synsets, provides short, general definitions, and records the various semantic relations between these synonym sets. Some of the most common semantic relations defined are meronymy (A is a meronym of B if A is part of B), holonymy (B is a holonym of A if B contains A), hyponymy (or troponymy) (A is subordinate to B; A is a kind of B), hypernymy (A is superordinate to B), synonymy (A denotes the same as B) and antonymy (A denotes the opposite of B).
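The pairing of these relations can be illustrated with a toy sketch. It does not use the WordNet database or any WordNet API; the facts and the `INVERSE` table are assumptions chosen for illustration. Each stored relation implies its inverse: if A is a hyponym of B, then B is a hypernym of A.

```python
# Toy illustration of inverse lexical relations (not real WordNet data).
INVERSE = {
    "hyponym": "hypernym",
    "hypernym": "hyponym",
    "meronym": "holonym",
    "holonym": "meronym",
    "synonym": "synonym",  # symmetric relation
    "antonym": "antonym",  # symmetric relation
}

FACTS = [
    ("canary", "hyponym", "bird"),  # a canary is a kind of bird
    ("wing", "meronym", "bird"),    # a wing is part of a bird
    ("hot", "antonym", "cold"),
]


def with_inverses(facts):
    """Return the given facts together with the inverse of each fact."""
    closed = set(facts)
    for a, relation, b in facts:
        closed.add((b, INVERSE[relation], a))
    return closed


if __name__ == "__main__":
    for fact in sorted(with_inverses(FACTS)):
        print(fact)
```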

WordNet properties have been studied from a network theory perspective and compared to other semantic networks created from Roget's Thesaurus and word association tasks. From this perspective, all three exhibit a small-world structure.[35]

Other examples

It is also possible to represent logical descriptions using semantic networks such as the existential graphs of Charles Sanders Peirce or the related conceptual graphs of John F. Sowa.[1] These have expressive power equal to or exceeding standard first-order predicate logic. Unlike WordNet or other lexical or browsing networks, semantic networks using these representations can be used for reliable automated logical deduction. Some automated reasoners exploit the graph-theoretic features of the networks during processing.

Other examples of semantic networks are Gellish models. Gellish English with its Gellish English dictionary, is a formal language that is defined as a network of relations between concepts and names of concepts. Gellish English is a formal subset of natural English, just as Gellish Dutch is a formal subset of Dutch, whereas multiple languages share the same concepts. Other Gellish networks consist of knowledge models and information models that are expressed in the Gellish language. A Gellish network is a network of (binary) relations between things. Each relation in the network is an expression of a fact that is classified by a relation type. Each relation type itself is a concept that is defined in the Gellish language dictionary. Each related thing is either a concept or an individual thing that is classified by a concept. The definitions of concepts are created in the form of definition models (definition networks) that together form a Gellish Dictionary. A Gellish network can be documented in a Gellish database and is computer interpretable.

SciCrunch is a collaboratively edited knowledge base for scientific resources. It provides unambiguous identifiers (Research Resource IDentifiers or RRIDs) for software, lab tools etc. and it also provides options to create links between RRIDs and from communities.

Another example of semantic networks, based on category theory, is ologs. Here each type is an object, representing a set of things, and each arrow is a morphism, representing a function. Commutative diagrams also are prescribed to constrain the semantics.

In the social sciences people sometimes use the term semantic network to refer to co-occurrence networks.[36]
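A co-occurrence network in this sense can be sketched as follows: two words are linked whenever they appear in the same document, and the edge weight counts how often that happens. The whitespace tokenizer and the sample documents are simplifying assumptions.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_network(documents):
    """Count, for each unordered word pair, the documents containing both."""
    edges = Counter()
    for text in documents:
        # Deduplicate and sort so each pair has one canonical (a, b) form.
        words = sorted(set(text.lower().split()))
        for pair in combinations(words, 2):
            edges[pair] += 1
    return edges


# Hypothetical sample documents.
docs = [
    "tax cuts help growth",
    "tax cuts hurt services",
    "growth needs services",
]
network = cooccurrence_network(docs)

if __name__ == "__main__":
    for pair, weight in network.most_common(3):
        print(pair, weight)
```

Real analyses usually add stop-word removal and a co-occurrence window smaller than the whole document, which this sketch leaves out.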

Software tools

There are also elaborate types of semantic networks connected with corresponding sets of software tools used for lexical knowledge engineering, like the Semantic Network Processing System (SNePS) of Stuart C. Shapiro[37] or the MultiNet paradigm of Hermann Helbig,[38] especially suited for the semantic representation of natural language expressions and used in several NLP applications.

Semantic networks are used in specialized information retrieval tasks, such as plagiarism detection. They provide information on hierarchical relations in order to employ semantic compression to reduce language diversity and enable the system to match word meanings, independently from sets of words used.

The Knowledge Graph introduced by Google in 2012 is an application of semantic networks in a search engine.

Modeling multi-relational data such as semantic networks in low-dimensional spaces through forms of embedding has benefits both for expressing entity relationships and for extracting relations from media such as text. There are many approaches to learning these embeddings, notably Bayesian clustering frameworks, energy-based frameworks, and, more recently, TransE[39] (NIPS 2013). Applications of embedding knowledge base data include social network analysis and relationship extraction.
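To give a flavor of the energy-based view, the sketch below scores a triple the way TransE does: a relation is modeled as a translation, so a plausible triple (h, r, t) should satisfy h + r ≈ t and therefore have a small distance ||h + r − t||. The two-dimensional vectors are hand-picked for illustration, not learned embeddings, and the training procedure is omitted.

```python
import math


def transe_distance(head, relation, tail):
    """L2 distance ||head + relation - tail||; lower means more plausible."""
    return math.sqrt(sum((h + r - t) ** 2
                         for h, r, t in zip(head, relation, tail)))


# Hand-picked toy vectors (assumptions, not trained embeddings).
entity = {"paris": [1.0, 0.0], "france": [1.0, 1.0], "tokyo": [3.0, 0.0]}
relation = {"capital-of": [0.0, 1.0]}

good = transe_distance(entity["paris"], relation["capital-of"], entity["france"])
bad = transe_distance(entity["tokyo"], relation["capital-of"], entity["france"])

if __name__ == "__main__":
    print("paris capital-of france:", good)
    print("tokyo capital-of france:", bad)
```

In a trained model the vectors would be adjusted so that observed triples score lower than corrupted ones; here the ordering holds only because the vectors were chosen by hand.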

See also

- Abstract semantic graph

- Chunking (psychology)

- CmapTools

- Concept map

- Network diagram

- Ontology (information science)

- Repertory grid

- Semantic lexicon

- Semantic similarity network

- Semantic neural network

- SemEval – an ongoing series of evaluations of computational semantic analysis systems

- Sparse distributed memory

- Taxonomy (general)

- Unified Medical Language System (UMLS)

- Word-sense disambiguation (WSD)

- Resource Description Framework

References

- ^ a b John F. Sowa (1987). "Semantic Networks". In Stuart C Shapiro (ed.). Encyclopedia of Artificial Intelligence. Retrieved 29 April 2008.

- ^ Poon, Hoifung, and Pedro Domingos. "Unsupervised semantic parsing." Proceedings of the 2009 Conference on Empirical Methods in Natural Language Processing: Volume 1-Volume 1. Association for Computational Linguistics, 2009.

- ^ Sussna, Michael. "Word sense disambiguation for free-text indexing using a massive semantic network." Proceedings of the second international conference on Information and knowledge management. ACM, 1993.

- ^ Lehmann, Fritz; Rodin, Ervin Y., eds. (1992). Semantic networks in artificial intelligence. International series in modern applied mathematics and computer science. Vol. 24. Oxford; New York: Pergamon Press. p. 6. ISBN 978-0-08-042012-7. OCLC 26391254.

The first semantic network for computers was Nude, created by R. H. Richens of the Cambridge Language Research Unit in 1956 as an interlingua for machine translation of natural languages.

- ^ Robert F. Simmons (1963). "Synthetic language behavior". Data Processing Management. 5 (12): 11–18.

- ^ Simmons, "Themes From 1972", ACL Anthology, 1982

- ^ Quillian, R. A notation for representing conceptual information: An application to semantics and mechanical English paraphrasing. SP-1395, System Development Corporation, Santa Monica, 1963.

- ^ Allan M. Collins; M. R. Quillian (1969). "Retrieval time from semantic memory". Journal of Verbal Learning and Verbal Behavior. 8 (2): 240–247. doi:10.1016/S0022-5371(69)80069-1.

- ^ Allan M. Collins; M. Ross Quillian (1970). "Does category size affect categorization time?". Journal of Verbal Learning and Verbal Behavior. 9 (4): 432–438. doi:10.1016/S0022-5371(70)80084-6.

- ^ Allan M. Collins; Elizabeth F. Loftus (1975). "A spreading-activation theory of semantic processing". Psychological Review. 82 (6): 407–428. doi:10.1037/0033-295x.82.6.407. S2CID 14217893.

- ^ Quillian, M. R. (1967). Word concepts: A theory and simulation of some basic semantic capabilities. Behavioral Science, 12(5), 410–430.

- ^ Quillian, M. R. (1968). Semantic memory. Semantic information processing, 227–270.

- ^ Quillian, M. R. (1969). "The teachable language comprehender: a simulation program and theory of language". Communications of the ACM. 12 (8): 459–476. doi:10.1145/363196.363214. S2CID 15304609.

- ^ Quillian, R. Semantic Memory. Unpublished doctoral dissertation, Carnegie Institute of Technology, 1966.

- ^ Helbig, H. (2006). Knowledge Representation and the Semantics of Natural Language (PDF). ISBN 978-3-540-24461-5.

- ^ Van de Riet, R. P. (1992). Linguistic Instruments in Knowledge Engineering (PDF). Elsevier Science Publishers. p. 98. ISBN 978-0-444-88394-0.

- ^ Hulpus, Ioana; Prangnawarat, Narumol (2015). "Path-Based Semantic Relatedness on Linked Data and Its Use to Word and Entity Disambiguation". The Semantic Web – ISWC 2015: 14th International Semantic Web Conference, Bethlehem, PA, USA, October 11–15, 2015, Proceedings, Part 1. International Semantic Web Conference 2015. Springer International Publishing. p. 444. ISBN 978-3-319-25007-6.

- ^ McCusker, James P.; Chastain, Katherine (April 2016). "What is a Knowledge Graph?". authorea.com. Retrieved 15 June 2016.

usage [of the term 'knowledge graph'] has evolved

- ^ H. Zhuge, Knowledge Grid, World Scientific Publishing Co. 2004.

- ^ H. Zhuge, Inheritance rules for flexible model retrieval. Decision Support Systems 22(4)(1998)379–390

- ^ H. Zhuge, Active e-document framework ADF: model and tool. Information & Management 41(1): 87–97 (2003)

- ^ H.Zhuge and L.Zheng, Ranking Semantic-linked Network, WWW 2003

- ^ H.Zhuge, The Semantic Link Network, in The Knowledge Grid: Toward Cyber-Physical Society, World Scientific Publishing Co. 2012.

- ^ H. Zhuge, L. Zheng, N. Zhang and X. Li, An automatic semantic relationships discovery approach. WWW 2004: 278–279.

- ^ H. Zhuge, Communities and Emerging Semantics in Semantic Link Network: Discovery and Learning, IEEE Transactions on Knowledge and Data Engineering, 21(6)(2009)785–799.

- ^ H.Zhuge, Semantic linking through spaces for cyber-physical-socio intelligence: A methodology, Artificial Intelligence, 175(2011)988–1019.

- ^ H. Zhuge, Multi-Dimensional Summarization in Cyber-Physical Society, Morgan Kaufmann, 2016.

- ^ H. Zhuge, The Web Resource Space Model, Springer, 2008.

- ^ H.Zhuge and Y.Xing, Probabilistic Resource Space Model for Managing Resources in Cyber-Physical Society, IEEE Transactions on Service Computing, 5(3)(2012)404–421.

- ^ Sun, Xiaoping; Zhuge, Hai (2018). "Summarization of Scientific Paper Through Reinforcement Ranking on Semantic Link Network". IEEE Access. 6: 40611–40625. Bibcode:2018IEEEA...640611S. doi:10.1109/ACCESS.2018.2856530.

- ^ Cao, Mengyun; Sun, Xiaoping; Zhuge, Hai (2018). "The contribution of cause-effect link to representing the core of scientific paper—The role of Semantic Link Network". PLOS ONE. 13 (6) e0199303. Bibcode:2018PLoSO..1399303C. doi:10.1371/journal.pone.0199303. PMC 6013162. PMID 29928017.

- ^ H. Zhuge, Cyber-Physical-Social Intelligence on Human-Machine-Nature Symbiosis, Springer, 2020.

- ^ Bendeck, Fawsy (2008). WSM-P workflow semantic matching platform. München: Verl. Dr. Hut. ISBN 978-3-89963-854-7. OCLC 501314022.

- ^ Swigger, Kathleen. "Semantic.ppt". Retrieved 23 March 2011.

- ^ Steyvers, M.; Tenenbaum, J.B. (2005). "The Large-Scale Structure of Semantic Networks: Statistical Analyses and a Model of Semantic Growth". Cognitive Science. 29 (1): 41–78. arXiv:cond-mat/0110012. doi:10.1207/s15516709cog2901_3. PMID 21702767. S2CID 6000627.

- ^ Wouter Van Atteveldt (2008). Semantic Network Analysis: Techniques for Extracting, Representing, and Querying Media Content (PDF). BookSurge Publishing.

- ^ Stuart C. Shapiro

- ^ Hermann Helbig

- ^ Bordes, Antoine; Usunier, Nicolas; Garcia-Duran, Alberto; Weston, Jason; Yakhnenko, Oksana (2013), Burges, C. J. C.; Bottou, L.; Welling, M.; Ghahramani, Z. (eds.), "Translating Embeddings for Modeling Multi-relational Data" (PDF), Advances in Neural Information Processing Systems 26, Curran Associates, Inc., pp. 2787–2795, retrieved 29 November 2018

Further reading

- Allen, J. and A. Frisch (1982). "What's in a Semantic Network". In: Proceedings of the 20th. annual meeting of ACL, Toronto, pp. 19–27.

- John F. Sowa, Alexander Borgida (1991). Principles of Semantic Networks: Explorations in the Representation of Knowledge.

External links

- "Semantic Networks" by John F. Sowa

- "Semantic Link Network" by Hai Zhuge

Semantic network

Fundamentals

Definition and Core Concepts

A semantic network is a directed graph structure for representing knowledge, consisting of nodes that denote concepts such as objects, events, or entities, and labeled edges or arcs that specify semantic relations between them, such as "is-a" for hierarchical subclass relationships, "part-of" for componential links, or "has-property" for attribute assignments.[1] This representation, pioneered in early models of semantic memory, organizes information in a way that captures the associative and relational nature of human cognition, where concepts are not stored in isolation but as part of an interconnected web.[2]

The primary purpose of semantic networks is to model domain-specific knowledge in a manner that facilitates automated inference, detection of conceptual similarities, and relational understanding, particularly in fields like artificial intelligence, cognitive science, and linguistics.[1] By encoding relationships explicitly, these networks enable systems to derive new information from existing facts, such as inferring properties through transitive relations, thereby supporting tasks like question answering and pattern recognition without exhaustive rule-based programming.[3]

At their core, semantic networks embody the principle that knowledge emerges from patterns of interconnections among nodes rather than discrete, isolated propositions, allowing for flexible querying and dynamic knowledge exploration.[1] They incorporate mechanisms like inheritance, where properties defined at higher-level nodes (e.g., supertypes) propagate to subordinate nodes (e.g., subtypes) via "is-a" links, promoting efficiency and consistency in representation.[1] Additionally, spreading activation supports reasoning by propagating signals or markers from an activated node through the network along relational paths, simulating associative recall and enabling the retrieval of related concepts based on proximity and strength of connections.[3]

Unlike general graphs, where edges merely indicate connectivity without inherent meaning, semantic networks distinguish themselves through the semantic labels on edges, which encode specific relational semantics to underpin knowledge-based reasoning, such as deduction or analogy, beyond simple topological analysis.[1]

Components and Representation

Semantic networks are structured as directed graphs where nodes represent key elements of knowledge and edges denote the relationships between them. Nodes typically fall into several types: concepts, which capture general ideas or classes such as "animal"; instances, which refer to specific entities like "a particular dog"; categories, serving as hierarchical classes such as "mammal"; and properties, which describe attributes like "color" or "weight." These node types allow for a modular organization of knowledge, enabling distinctions between abstract and concrete elements.[1]

Edges in semantic networks encode the semantic relations between nodes, with labels specifying the nature of the connection. Taxonomic edges, such as "is-a," indicate inheritance or subclass relationships, allowing properties to propagate from superclasses to subclasses. Associative edges, like "similar-to," link related but non-hierarchical concepts, while functional edges, such as "causes," express causal or procedural dependencies. For instance, a simple hierarchy might connect "bird" to "animal" via an "is-a" edge, as illustrated below:

    Bird
      |
    is-a
      |
    Animal
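The node and edge types described above can be sketched as a small data structure. The class names, the `kind` vocabularies, and the example facts are illustrative assumptions rather than a standard schema.

```python
from dataclasses import dataclass

# Hypothetical vocabularies mirroring the node and edge types in the text.
NODE_KINDS = {"concept", "instance", "category", "property"}
EDGE_KINDS = {"taxonomic", "associative", "functional"}


@dataclass(frozen=True)
class Node:
    name: str
    kind: str  # one of NODE_KINDS

    def __post_init__(self):
        if self.kind not in NODE_KINDS:
            raise ValueError(f"unknown node kind: {self.kind}")


@dataclass(frozen=True)
class Edge:
    source: Node
    label: str  # e.g. "is-a", "similar-to", "causes"
    target: Node
    kind: str   # one of EDGE_KINDS

    def __post_init__(self):
        if self.kind not in EDGE_KINDS:
            raise ValueError(f"unknown edge kind: {self.kind}")


def targets(edges, source, label):
    """Names of nodes reachable from `source` over one edge with `label`."""
    return [e.target.name for e in edges
            if e.source == source and e.label == label]


bird = Node("bird", "concept")
animal = Node("animal", "concept")
edges = [Edge(bird, "is-a", animal, "taxonomic")]

if __name__ == "__main__":
    print(targets(edges, bird, "is-a"))
```

Keeping the label ("is-a") separate from the broader kind ("taxonomic") lets a reasoner treat all taxonomic links alike while still distinguishing individual relations.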

Historical Development

Early Origins

The computational origins of semantic networks trace back to mid-20th-century cognitive psychology, where associative memory models sought to explain how humans organize and retrieve knowledge, building on earlier philosophical concepts such as Porphyry's Tree from the 3rd century AD, which illustrated categorical hierarchies.[1] A key influence was Frederic Bartlett's schema theory, outlined in his 1932 book Remembering: A Study in Experimental and Social Psychology, which conceptualized memory not as passive storage but as an active reconstruction shaped by schemas, integrated frameworks of prior experiences that guide interpretation of new information. Bartlett's ideas emphasized the interconnected, reconstructive nature of semantic memory, laying groundwork for later network representations of conceptual associations.[6]

Building on these psychological foundations, early work on human semantic memory during the 1950s and 1960s explored associative structures to model knowledge organization. Researchers drew from associationism, viewing memory as a web of linked concepts rather than isolated facts, which anticipated computational implementations.[7] These models contrasted with earlier behaviorist approaches by focusing on internal mental structures, influencing the shift toward cognitive science.

Semantic networks also connected to broader pre-1970 developments in cybernetics and information theory, where simple hand-drawn diagrams illustrated concept interconnections and information flow in complex systems. Cybernetic thinkers like Norbert Wiener, in his 1948 foundational text Cybernetics: Or Control and Communication in the Animal and the Machine, used network-like diagrams to depict feedback and associative processes in biological and mechanical systems, providing an interdisciplinary bridge to AI. Information theory, meanwhile, contributed ideas of encoded relationships, as seen in early sketches of associative hierarchies that prefigured digital representations.[8]

A pivotal advancement came from Ross Quillian's doctoral research at the Carnegie Institute of Technology (now Carnegie Mellon University), completed in 1966, which introduced an influential early computational semantic network. In his 1967 paper "Word Concepts: A Theory and Simulation of Some Basic Semantic Capabilities," Quillian described a graph-based structure where nodes represented concepts and edges denoted semantic relations, enabling storage and inference of word meanings.[9] The work was motivated by the goal of modeling human-like knowledge storage and retrieval in order to simulate intelligence, and it offered a flexible alternative to the rigid rule-based systems prevalent in early AI. Quillian's Teachable Language Comprehender (TLC), detailed in a 1969 publication and building on his doctoral work, applied this network to natural language understanding by allowing the system to "learn" associations from text inputs.[10] TLC demonstrated how semantic networks could process queries through spreading activation along links, simulating human comprehension and highlighting the approach's potential for AI applications in the late 1960s.[11]

Key Advancements and Milestones

In the 1970s, semantic networks advanced through structured representations that addressed limitations in earlier associative models. Marvin Minsky introduced frames in 1975 as a data structure for organizing stereotypical situations and knowledge, extending semantic networks by incorporating default values, procedural attachments, and hierarchical inheritance to facilitate efficient inference and expectation-driven processing in AI systems.[12] Similarly, Roger Schank developed conceptual dependency theory between 1972 and 1975, proposing a primitive-based framework for representing events and actions in natural language understanding, which formalized causal relations and actor roles within network-like structures to enable deeper semantic parsing beyond simple links. William Woods' 1975 work on procedural attachment enhanced semantic networks by embedding executable procedures in links, allowing dynamic interpretation of static representations and addressing ambiguities in meaning through context-dependent computation.

During the 1980s and 1990s, semantic networks were integrated into practical AI applications, particularly expert systems, where they supported diagnostic reasoning through interconnected knowledge bases. These developments shifted the focus toward applied systems, though the AI winter of the 1980s severely curtailed funding for knowledge representation research, including semantic networks, because of overhyped expectations and limited computational scalability, prompting a reevaluation of purely symbolic approaches.[13]

By the 2000s, semantic networks influenced the standardization of web-scale knowledge representation, transitioning from isolated AI tools to interoperable frameworks. The Resource Description Framework (RDF), proposed by the W3C in 1999, formalized graph-based semantics inspired by network models, enabling machine-readable data exchange on the web through triples that capture entities, properties, and relations.[14] This paved the way for the Web Ontology Language (OWL) in 2004, which extended RDF with formal axioms for richer inference, drawing on semantic network hierarchies to support complex ontologies in distributed environments.[15] Key challenges in this era included the scalability limitations of large semantic networks, which led to the development of hybrid models combining symbolic graphs with statistical or rule-based methods to manage complexity and improve efficiency in real-world applications.[16]

These advancements marked a progression from theoretical constructs to foundational elements of modern knowledge systems, emphasizing standardization and practicality.

Formal Models

Mathematical Foundations

A semantic network is formally modeled as a directed labeled graph $G = (V, E, L)$, where $V$ is the set of vertices representing concepts or entities, $E \subseteq V \times V$ is the set of directed edges denoting relations between concepts, and $L : E \to \Sigma$ is a label function that assigns semantic labels from an alphabet $\Sigma$ to each edge (e.g., "is-a" for taxonomic relations or "has-part" for meronymy).[17] This graph-theoretic foundation enables the representation of knowledge as interconnected structures, drawing from standard definitions in knowledge representation systems like SNePS.[18]

Path semantics in semantic networks rely on the transitive closure of relations to infer inherited properties, allowing complex inferences from simple edge traversals. For instance, if there exist edges $(A, B)$ and $(B, C)$ that are both labeled "is-a", the transitive closure implies an "is-a" relation from $A$ to $C$, enabling inheritance along hierarchical paths.[19] This closure can be computed using graph traversal algorithms such as breadth-first search (BFS) or depth-first search (DFS) to identify relevant paths between nodes. The following Python function illustrates a basic BFS-based path computation for transitive inheritance, starting from a source node and following only edges that carry the given label:

    from collections import deque

    def find_inheritance_path(graph, start, target, relation="is-a"):
        """Return the (node, label) steps leading from start to target, or None.

        graph maps each node to a list of (neighbor, edge_label) pairs.
        Only edges labeled `relation` are followed, and the path must
        contain at least one edge.
        """
        queue = deque([(start, [])])  # (current_node, path so far)
        visited = {start}
        while queue:
            current, path = queue.popleft()
            if current == target and path:
                return path
            for neighbor, edge_label in graph.get(current, []):
                if edge_label == relation and neighbor not in visited:
                    visited.add(neighbor)
                    queue.append((neighbor, path + [(current, edge_label)]))
        return None
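The transitive closure of "is-a" can also be computed for every node at once. The sketch below is a minimal illustration under the assumption that the hierarchy is stored as a dictionary from each concept to its direct parents; the function name and the sample hierarchy are hypothetical.

```python
def isa_closure(parents):
    """Map each concept to the set of all its direct and indirect ancestors.

    `parents` maps a concept to a list of its direct "is-a" parents.
    """
    closure = {}
    for node in parents:
        seen = set()
        stack = list(parents[node])
        # Depth-first walk upward; `seen` also protects against cycles.
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(parents.get(current, ()))
        closure[node] = seen
    return closure


# Hypothetical three-level hierarchy: robin -> bird -> animal.
hierarchy = {"robin": ["bird"], "bird": ["animal"], "animal": []}
closure = isa_closure(hierarchy)

if __name__ == "__main__":
    for concept, ancestors in closure.items():
        print(concept, "is-a", sorted(ancestors))
```

With the closure precomputed, checking whether one concept inherits from another becomes a set-membership test instead of a graph search.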

Inference and Reasoning

Inheritance mechanisms in semantic networks enable the automatic propagation of properties from more general concepts to more specific ones along hierarchical "is-a" relationships, forming the basis for efficient knowledge representation and retrieval. In monotonic inheritance, properties defined for a parent node, such as "bird has wings," are inherited by every child node, such as "robin," without exception, allowing for concise encoding of shared attributes across related entities.[21] This approach, pioneered in early semantic memory models, supports deductive reasoning by traversing the hierarchy to infer applicable traits, reducing redundancy in the network structure.

Spreading activation is a key algorithm for inference in semantic networks, simulating associative recall by propagating activation levels from an initial node through weighted links to related nodes. The activation $A_j$ of a node $j$ is computed as the sum over incoming edges from nodes $i$:

$$A_j = \sum_i w_{ij} A_i$$

where $w_{ij}$ represents the strength of the connection from $i$ to $j$, typically decaying with distance to model psychological spreading in human memory.[22] This mechanism facilitates tasks like similarity judgment and word association, where activation thresholds determine retrieved concepts, as demonstrated in models of semantic processing.[23]

Non-monotonic reasoning extends inheritance to handle exceptions and defaults, allowing tentative conclusions that can be retracted upon new evidence, which is essential for realistic knowledge derivation in complex domains. For instance, while the default "birds fly" applies generally, a specific node such as "penguin," linked to "bird" by "is-a," can carry a defeater link negating flight, blocking propagation along that path without affecting other birds.[24] Systems like KL-ONE incorporate such mechanisms through structured defaults and role restrictions, enabling prioritized inheritance paths that resolve conflicts via specificity or priority rules.
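A minimal sketch of spreading activation follows. At each step, every node activated in the previous step passes its activation to its neighbors, scaled by the link weight and a decay factor. The weighted adjacency dictionary, the fixed number of steps, the single decay factor, and the function name `spread` are illustrative assumptions rather than details of a specific published model.

```python
def spread(graph, source, steps=2, decay=0.5):
    """Propagate activation outward from `source` for a fixed number of steps.

    graph maps node -> {neighbor: weight}. In each step, every node that
    received activation in the previous step sends
    activation * weight * decay to each of its neighbors.
    """
    activation = {source: 1.0}
    frontier = {source: 1.0}
    for _ in range(steps):
        incoming = {}
        for node, level in frontier.items():
            for neighbor, weight in graph.get(node, {}).items():
                incoming[neighbor] = incoming.get(neighbor, 0.0) + level * weight * decay
        for node, level in incoming.items():
            activation[node] = activation.get(node, 0.0) + level
        frontier = incoming
    return activation


# Hypothetical weighted network.
graph = {
    "canary": {"bird": 1.0, "yellow": 0.5},
    "bird": {"animal": 1.0, "wings": 1.0},
}
levels = spread(graph, "canary")

if __name__ == "__main__":
    for node, level in sorted(levels.items(), key=lambda item: -item[1]):
        print(f"{node}: {level}")
```

Concepts whose final activation exceeds a chosen threshold would count as retrieved; the decay factor makes distant concepts progressively harder to reach.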
Query resolution in semantic networks relies on path-finding algorithms to determine entailment, such as verifying if "robin flies" by tracing an unbroken "is-a" path from "robin" to a node asserting the property. This involves graph traversal techniques, like depth-first search along directed edges, to identify relevant inheritance chains and compute derived facts.[25] However, challenges arise from semantic ambiguity, where multiple paths or link interpretations can lead to inconsistent or unintended inferences, such as conflating distinct senses of a concept in the absence of disambiguating context.[26]

Applications

In Knowledge Representation

Semantic networks play a central role in knowledge representation (KR) within artificial intelligence, providing a graphical structure for encoding declarative knowledge through nodes representing concepts or objects and directed, labeled edges denoting relationships between them. This paradigm emphasizes explicit relational modeling, allowing for the organization of knowledge in a way that mirrors human associative thinking, as originally proposed by Quillian in his work on semantic memory.[27] In contrast to logic-based KR methods like predicate logic, which excel in formal, deductive inference but often require symbolic manipulation that can obscure intuitive understanding, semantic networks prioritize visual and structural clarity, making them more accessible for designing and debugging knowledge bases.[28][29] However, they are generally less rigorous than logic-based systems, lacking the precise semantics needed for unambiguous theorem proving, which can lead to interpretive ambiguities in complex scenarios.[27]

One key advantage of semantic networks in KR is their facilitation of commonsense reasoning through mechanisms like inheritance along hierarchical links, such as "is-a" relations, which enable efficient propagation of properties from general to specific concepts without redundant storage.[30] This structure promotes modularity, as related knowledge can be clustered into subgraphs, supporting the construction of large-scale ontologies where domain-specific information is compartmentalized for maintainability.[28] Additionally, semantic networks can be extended simply by adding new nodes and links, accommodating evolving knowledge without overhauling the entire representation, which enhances their adaptability in dynamic AI systems.[31]

In practical use cases, semantic networks are particularly effective for building ontologies in specialized domains like medicine, where they model complex interdependencies, such as drug interactions, by linking pharmaceutical entities through relational edges representing mechanisms like metabolic inhibition or synergistic effects.[32] For instance, nodes for drugs can connect via paths indicating interaction types, with multiple pathways allowing representation of contextual vagueness, such as varying severity based on patient factors, thereby supporting nuanced querying and inference.[33] This approach aids in ontology development by enabling taxonomic hierarchies that classify medical concepts efficiently.[31]

Despite these strengths, semantic networks face limitations in scalability for very large graphs, where the sheer number of nodes and edges can complicate traversal and maintenance, leading to performance bottlenecks in reasoning tasks.[28] Furthermore, without imposed constraints, they are prone to inconsistencies, such as contradictory inferences from multiple inheritance paths or cycles in the graph that may induce infinite loops during propagation, necessitating additional mechanisms like exception handling to ensure reliability.[27] Semantic networks support core inference like inheritance, akin to early frame-based systems, but require careful design to mitigate these issues.[28]

In Natural Language Processing

Semantic networks play a pivotal role in natural language processing (NLP) by modeling linguistic meaning through interconnected nodes representing concepts and edges denoting relationships, enabling the capture of contextual semantics beyond surface syntax. In NLP tasks, these networks facilitate the representation of word meanings, sentence structures, and discourse relations, allowing systems to infer implied connections and resolve ambiguities based on relational paths. For instance, resources like WordNet, a lexical semantic network, organize words into synsets linked by hypernymy, hyponymy, and other relations, supporting deeper understanding of textual content.

In semantic role labeling (SRL), semantic networks assign roles such as agent, patient, or theme to sentence constituents by leveraging relational edges that encode predicate-argument structures. This approach treats predicates as central nodes with outgoing arcs to arguments, drawing on lexical resources to propagate role assignments through inheritance and composition. Early systems integrated WordNet's semantic relations to generalize roles across verbs, enhancing labeling accuracy in frame-semantic parsing. For example, in processing "The chef cooked the meal," the network links "chef" as agent to "cooked" and "meal" as patient via thematic arcs, informed by verb-specific patterns. Modern extensions combine these graphs with transformer-based models, achieving F1 scores of 88-92% on benchmarks like PropBank as of 2021-2025.[34]

Word sense disambiguation (WSD) utilizes semantic networks to resolve polysemy by evaluating context through paths connecting ambiguous words to surrounding concepts. Algorithms traverse the network to compute semantic relatedness, selecting the sense with the strongest contextual overlap; for "bank," proximity to "river" nodes favors the geographical sense, while links to "money" suggest the financial sense. Seminal graph-based methods, such as those applying PageRank to WordNet, treat senses as nodes and relations as weighted edges, outperforming baseline accuracy by 10-15% on SemCor datasets. This path-based resolution exploits the network's topology to prioritize senses aligned with co-occurring terms, making it robust for open-domain text.[35]

Semantic networks support machine translation by aligning cross-lingual structures through interlingua representations, where source and target sentences map to shared semantic graphs for meaning-preserving transfer. Pioneering work used bonded semantic primitives as nodes to bridge languages, enabling rule-based translation via graph isomorphism. In question answering, networks enable fact retrieval by traversing from query concepts to related entities; for instance, a query on "Paris capital" follows relational links to confirm "France" as the answer. These applications demonstrate the utility of semantic networks in modular NLP pipelines.[1][36]

Challenges in applying semantic networks to NLP include handling dynamic language evolution, where neologisms and shifting meanings require frequent network updates to maintain relevance. Static graphs like WordNet struggle with idiomatic or domain-specific shifts, leading to outdated paths and reduced disambiguation accuracy over time. Post-2010 advancements address this through hybrid models integrating symbolic networks with vector embeddings, such as graph neural networks that embed nodes for continuous semantic spaces. Recent developments as of 2025 further incorporate large language models (LLMs) to enhance reasoning in WSD and SRL tasks. These fusions improve adaptability, boosting WSD F1 by up to 20% on evolving corpora, though scaling to multilingual or real-time updates remains computationally intensive.[37][38]

Notable Implementations

Semantic Networks in Practice

One prominent example of a semantic network in practice is WordNet, developed at Princeton University starting in the mid-1980s as a lexical database for English that organizes words into sets of cognitive synonyms known as synsets, connected via semantic relations such as hypernyms (superordinate concepts) and hyponyms (subordinate concepts). This structure enables representation of lexical relationships, with WordNet comprising approximately 117,000 synsets across nouns, verbs, adjectives, and adverbs, facilitating applications in natural language understanding by modeling word meanings and their interconnections.[39]

Another influential system is Cyc, initiated by Douglas Lenat in 1984 and ongoing through Cycorp, which encodes commonsense knowledge in a vast semantic network of concepts and assertions to support automated reasoning about everyday scenarios.[40] The knowledge base features over 1.5 million concepts and 25 million rules and axioms, primarily hand-curated by domain experts to capture implicit human knowledge that machines otherwise lack.[41]

In the late 1970s and 1980s, the KL-ONE system emerged as a foundational terminological knowledge representation framework, using a network of concepts and roles to define hierarchical taxonomies with restrictions on role fillers, such as cardinality constraints, to enable precise classification and inheritance. Described in detail by Brachman and Schmolze, KL-ONE influenced subsequent description logic systems by providing a structured way to represent and reason over domain-specific knowledge through subsumption and role-based inferences.[42]

These systems have demonstrated success in covering everyday concepts, with WordNet achieving broad lexical coverage for common English terms used in psycholinguistic research and applications, while Cyc has enabled reasoning over millions of real-world assertions to support tasks like question answering.[40] KL-ONE's impact is evident in its role as a precursor to modern ontologies, facilitating efficient knowledge classification in expert systems.[42] However, critiques highlight high maintenance costs due to manual curation and updates; for instance, Cyc's hand-crafted approach has required decades of expert labor, raising scalability concerns, and WordNet's static structure demands periodic manual revisions to keep pace with evolving language use.[43]

Modern Knowledge Graphs

Modern knowledge graphs represent an evolution of semantic networks into large-scale, distributed structures that encode real-world entities, relationships, and attributes in a machine-readable format, enabling interconnected data across the web. This shift began prominently with Google's Knowledge Graph in 2012, which integrated structured data from sources like Freebase to provide context-aware search results beyond simple keyword matching.[44] Concurrently, schema.org, launched in 2011 as a collaborative initiative by major search engines including Google, Microsoft Bing, Yahoo, and Yandex, standardized structured data markup using microdata, RDFa, and JSON-LD to facilitate the embedding of semantic information directly into web pages.[45]

A defining feature of modern knowledge graphs is their massive scale, often comprising billions of nodes and edges, as seen in Wikidata, which by 2025 hosts over 119 million items and supports multilingual, collaborative knowledge curation.[46] Interoperability is achieved through the use of RDF triples (statements consisting of a subject, predicate, and object) that form the foundational building blocks of these graphs, allowing seamless data exchange and querying across diverse systems in the Semantic Web ecosystem.[47]

Post-2020 advancements have integrated knowledge graphs with large language models (LLMs), particularly through retrieval-augmented generation (RAG) frameworks that leverage graph queries to retrieve structured context, enhancing LLM accuracy and reducing hallucinations in tasks like question answering. Additionally, the handling of temporal data (time-evolving relationships) and multimodal data (such as text combined with images or videos) has progressed via methods like temporal multi-modal knowledge graph generation, which automates the construction of dynamic, enriched graphs for improved link prediction and reasoning.[48]

These graphs have profoundly impacted search engines by enabling semantic understanding and direct answers, as exemplified by Google's Knowledge Graph powering knowledge panels in search results.[49] In recommendation systems, they mitigate data sparsity by inferring user preferences through entity relationships, boosting personalization in platforms like e-commerce and streaming services. However, challenges persist in linked open data environments, where privacy risks arise from unintended inference of sensitive information across interconnected triples, necessitating techniques like differential privacy in graph merging and querying.[50]

Tools and Frameworks

Software Implementations

Several dedicated software tools and libraries facilitate the construction, querying, and management of semantic networks, ranging from open-source ontology editors to proprietary graph databases with semantic capabilities.[51]

Among open-source options, Protégé serves as a prominent ontology editor that supports the creation and visualization of semantic networks through graph-based representations, including node-link diagrams for exploring relationships in OWL and RDF formats.[52][51] It enables import and export of ontologies in standards like OWL, allowing conversion to semantic network structures, and integrates basic reasoning engines via plugins such as HermiT for inference over network triples.[53][54] GraphDB, a commercial triplestore from Ontotext that is also offered in a free edition, specializes in RDF storage for semantic networks and provides SPARQL querying to retrieve and traverse interconnected nodes and edges efficiently.[55] It includes visualization features for rendering semantic graphs and supports import/export with formats like OWL and Turtle, alongside lightweight reasoning for consistency checks.[56]

Proprietary systems extend these functionalities for enterprise-scale semantic networks. IBM Watson's knowledge graph tools, integrated within Watson Discovery, allow building and querying semantic networks by ingesting diverse data sources into entity-relationship models, with features for visualization and OWL-compatible exports.[57][58] Neo4j, a leading graph database, supports semantic extensions through plugins like neosemantics (n10s), enabling RDF import/export, OWL reasoning, and node-link visualizations via its Bloom tool for exploring semantic relationships.[59][60]

Common features across these tools include interactive visualization of semantic networks as graphs, import/export between OWL/RDF and network formats for interoperability, and embedded reasoning engines to infer implicit links.[61][62] As of 2025, adoption of semantic network software shows strong uptake in research for ontology development, while industry favors scalable options like Neo4j and GraphDB for production knowledge graphs in AI applications. Recent updates incorporate AI-assisted graph building, such as automated entity extraction and relationship inference in tools like metaphactory and Stardog.[63][64]

Integration with Programming Languages

Semantic networks have been integrated into programming languages since their early conceptualization, with foundational implementations leveraging list-processing paradigms in AI languages. M. Ross Quillian's Teachable Language Comprehender (TLC), introduced in 1969, represented semantic networks as interconnected nodes in a graph structure to enable natural language comprehension, using list-based representations that influenced subsequent Lisp implementations for knowledge representation.[10] In Lisp, semantic networks were supported through dynamic list manipulation for building and traversing associative memories, as seen in early AI systems where nodes and relations were encoded as s-expressions for flexible inference.[25]

Prolog provided early support for semantic networks via its logic programming paradigm, where facts and rules form directed graphs akin to semantic structures, enabling inheritance and querying through unification and backtracking. For instance, semantic relations could be modeled as Prolog predicates like parent(X, Y) to represent hierarchical knowledge, with recursive queries simulating network traversal for reasoning tasks.[65]
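
To illustrate how recursive queries over parent(X, Y) facts amount to network traversal, here is a rough Python analogue. It is only a sketch: the facts and the names `PARENT` and `ancestor` are hypothetical, the generator stands in for Prolog's backtracking, and the facts are assumed to be acyclic.

```python
# Facts, analogous to Prolog clauses such as parent(tom, bob).
PARENT = {("tom", "bob"), ("bob", "ann"), ("bob", "pat")}


def ancestor(x):
    """Yield every y for which ancestor(x, y) holds.

    Mirrors the two Prolog rules:
        ancestor(X, Y) :- parent(X, Y).
        ancestor(X, Y) :- parent(X, Z), ancestor(Z, Y).
    Assumes the facts contain no cycles.
    """
    for parent, child in sorted(PARENT):
        if parent == x:
            yield child                 # first rule: a direct parent link
            yield from ancestor(child)  # second rule: recurse through the child


print(list(ancestor("tom")))  # ['bob', 'ann', 'pat']
```

Each yielded value corresponds to one solution Prolog would produce on backtracking, and the recursion follows the directed edges of the fact graph.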

In modern programming environments, Python offers robust libraries for semantic network manipulation. RDFLib, a pure Python package, facilitates working with Resource Description Framework (RDF) graphs, which underpin many semantic networks, by parsing, serializing, and querying triples in formats like Turtle or N-Triples.[66] Complementing this, NetworkX provides graph operations such as shortest path algorithms and centrality measures, allowing developers to convert RDF data into manipulable network structures for analysis; for example, RDF triples can be mapped to NetworkX nodes and edges to compute semantic similarities.[67][68]

Java integrates semantic networks through frameworks like Apache Jena, an open-source library for building Semantic Web applications that handles RDF storage, ontology management, and inference over large graphs.[69] Jena's API supports creating in-memory or persistent models, enabling programmatic addition of triples and execution of reasoning rules, as in enterprise knowledge bases where semantic relations are queried via its built-in reasoners.[70]

Access to semantic networks often occurs through standardized APIs and query patterns, with SPARQL serving as the W3C-recommended language for retrieving and manipulating RDF data across diverse sources.[71] SPARQL queries, such as SELECT patterns with graph patterns like ?subject :relation ?object, enable path retrieval and filtering in semantic networks, integrating seamlessly into language-specific clients like those in Python's RDFLib or Java's Jena.[71]

Embedding semantic networks in machine learning pipelines has advanced with libraries like PyTorch Geometric, which extends PyTorch for graph neural networks (GNNs) to process semantic structures, propagating node features along relations for tasks like link prediction in knowledge graphs.[72] As of 2025, integrations such as GNN-based recommendation models over knowledge graphs leverage PyTorch to fuse semantic embeddings with neural architectures.
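
The idea of propagating node features along relations can be shown without any deep learning library. The sketch below is plain Python, not the PyTorch Geometric API: the function `message_pass`, the two-dimensional toy features, and the choice of mean aggregation with no learned weights are all simplifying assumptions.

```python
def message_pass(features, edges):
    """One propagation round over directed edges (source, target).

    Each node's new feature vector is the mean of its own vector and the
    vectors of the nodes that point to it.
    """
    inbox = {node: [vec] for node, vec in features.items()}
    for src, dst in edges:
        inbox[dst].append(features[src])
    return {
        node: [sum(column) / len(messages) for column in zip(*messages)]
        for node, messages in inbox.items()
    }


# Hypothetical toy graph: robin -> bird -> animal.
features = {"robin": [1.0, 0.0], "bird": [0.0, 1.0], "animal": [0.0, 0.0]}
edges = [("robin", "bird"), ("bird", "animal")]

updated = message_pass(features, edges)
print(updated["bird"])    # [0.5, 0.5]
print(updated["animal"])  # [0.0, 0.5]
```

A trained GNN layer would replace the fixed mean with learned transformations, and relation-aware variants would treat each edge type differently, but the flow of information along edges is the same.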

Best practices for handling large semantic graphs emphasize efficient indexing and partitioning to support fast inference. Techniques include using persistent stores with B-tree or hash indexes on predicates to accelerate triple lookups, as in Jena's TDB component, reducing query times by orders of magnitude for graphs of billions of triples.[73] Developers should avoid over-indexing to maintain write performance, opting for selective indexing on frequently queried relations and employing distributed frameworks like Apache Spark for scalable processing of massive networks.[74]

References

- https://www.wikidata.org/wiki/Wikidata:Statistics