Microarchitecture

In electronics, computer science and computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as μarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor.[1] A given ISA may be implemented with different microarchitectures;[2][3] implementations may vary due to different goals of a given design or due to shifts in technology.[4]

Computer architecture is the combination of microarchitecture and instruction set architecture.

Relation to instruction set architecture

The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the instructions, execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA.

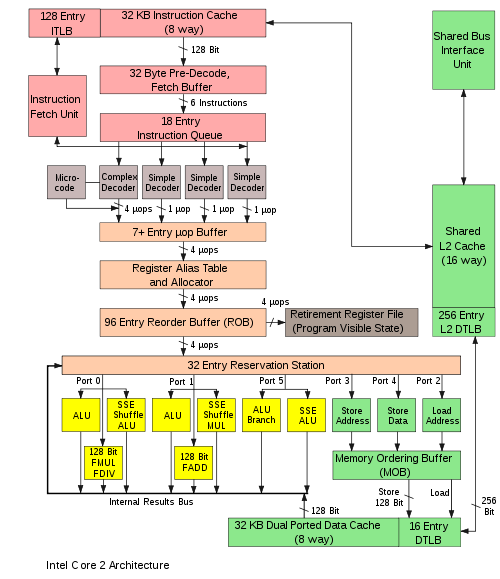

The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be anything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements. These diagrams generally separate the datapath (where data is placed) and the control path (which can be said to steer the data).[5]

The person designing a system usually draws the specific microarchitecture as a kind of data flow diagram. Like a block diagram, the microarchitecture diagram shows microarchitectural elements such as the arithmetic and logic unit and the register file as a single schematic symbol. Typically, the diagram connects those elements with arrows, thick lines and thin lines to distinguish between three-state buses (which require a three-state buffer for each device that drives the bus), unidirectional buses (always driven by a single source, such as the way the address bus on simpler computers is always driven by the memory address register), and individual control lines. Very simple computers have a single data bus organization – they have a single three-state bus. The diagram of more complex computers usually shows multiple three-state buses, which help the machine do more operations simultaneously.

Each microarchitectural element is in turn represented by a schematic describing the interconnections of logic gates used to implement it. Each logic gate is in turn represented by a circuit diagram describing the connections of the transistors used to implement it in some particular logic family. Machines with different microarchitectures may have the same instruction set architecture, and thus be capable of executing the same programs. New microarchitectures and circuit solutions, along with advances in semiconductor manufacturing, are what allow newer generations of processors to achieve higher performance while using the same ISA.

In principle, a single microarchitecture could execute several different ISAs with only minor changes to the microcode.

Aspects

The pipelined datapath is the most commonly used datapath design in microarchitecture today. This technique is used in most modern microprocessors, microcontrollers, and DSPs. The pipelined architecture allows multiple instructions to overlap in execution, much like an assembly line. The pipeline includes several different stages which are fundamental in microarchitecture designs.[5] Some of these stages include instruction fetch, instruction decode, execute, and write back. Some architectures include other stages such as memory access. The design of pipelines is one of the central microarchitectural tasks.

Execution units are also essential to microarchitecture. Execution units include arithmetic logic units (ALU), floating point units (FPU), load/store units, branch prediction, and SIMD. These units perform the operations or calculations of the processor. The choice of the number of execution units, their latency and throughput is a central microarchitectural design task. The size, latency, throughput and connectivity of memories within the system are also microarchitectural decisions.

System-level design decisions such as whether or not to include peripherals, such as memory controllers, can be considered part of the microarchitectural design process. This includes decisions on the performance-level and connectivity of these peripherals.

Unlike architectural design, where achieving a specific performance level is the main goal, microarchitectural design pays closer attention to other constraints. Since microarchitecture design decisions directly affect what goes into a system, attention must be paid to issues such as chip area/cost, power consumption, logic complexity, ease of connectivity, manufacturability, ease of debugging, and testability.

Microarchitectural concepts

Instruction cycles

To run programs, all single- or multi-chip CPUs:

- Read an instruction and decode it

- Find any associated data that is needed to process the instruction

- Process the instruction

- Write the results out

The instruction cycle is repeated continuously until the power is turned off.
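The four steps above can be sketched as a small interpreter loop. This is only an illustration: the accumulator machine, its opcode names (`LOAD`, `ADD`, `STORE`, `HALT`) and the `(opcode, address)` encoding are assumptions invented for the example, not part of any real ISA.

```python
def run(program, memory):
    """Run a toy accumulator machine until HALT or the end of the program."""
    acc = 0   # single accumulator register (hypothetical machine state)
    pc = 0    # program counter
    while pc < len(program):
        op, addr = program[pc]        # step 1: read the instruction and decode it
        pc += 1
        if op == "HALT":
            break
        if op in ("LOAD", "ADD"):
            operand = memory[addr]    # step 2: find the data the instruction needs
            # step 3: process the instruction
            acc = operand if op == "LOAD" else acc + operand
        elif op == "STORE":
            memory[addr] = acc        # step 4: write the result out
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return acc, memory


# Usage: compute memory[2] = memory[0] + memory[1]
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT", None)]
acc, memory = run(program, [2, 3, 0])
```

A real processor repeats this loop in hardware for as long as it is powered; the sketch stops at `HALT` only so that it terminates.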

Multicycle microarchitecture

Historically, the earliest computers were multicycle designs. The smallest, least-expensive computers often still use this technique. Multicycle architectures often use the fewest logic elements and reasonable amounts of power. They can be designed to have deterministic timing and high reliability. In particular, they have no pipeline to stall when taking conditional branches or interrupts. However, other microarchitectures often perform more instructions per unit time, using the same logic family. When discussing "improved performance," an improvement is often relative to a multicycle design.

In a multicycle computer, the computer does the four steps in sequence, over several cycles of the clock. Some designs can perform the sequence in two clock cycles by completing successive stages on alternate clock edges, possibly with longer operations occurring outside the main cycle. For example, stage one on the rising edge of the first cycle, stage two on the falling edge of the first cycle, etc.

In the control logic, the combination of cycle counter, cycle state (high or low) and the bits of the instruction decode register determine exactly what each part of the computer should be doing. To design the control logic, one can create a table of bits describing the control signals to each part of the computer in each cycle of each instruction. Then, this logic table can be tested in a software simulation running test code. If the logic table is placed in a memory and used to actually run a real computer, it is called a microprogram. In some computer designs, the logic table is optimized into the form of combinational logic made from logic gates, usually using a computer program that optimizes logic. Early computers used ad-hoc logic design for control until Maurice Wilkes invented this tabular approach and called it microprogramming.[6]
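The tabular approach described above can be sketched as a lookup keyed by instruction and cycle. The opcodes, cycle counts and control-signal names below are hypothetical, chosen only to show the shape of such a table; a real design would have one column per physical control line.

```python
# Hypothetical control table for a tiny multicycle machine.
# Keys are (opcode, cycle); values are the control signals asserted in that cycle.
MICROPROGRAM = {
    ("ADD", 0): {"mem_read", "ir_load"},       # fetch the instruction
    ("ADD", 1): {"reg_read"},                  # read the source registers
    ("ADD", 2): {"alu_add"},                   # compute the sum
    ("ADD", 3): {"reg_write", "next_instr"},   # write the result, end of instruction
    ("LOAD", 0): {"mem_read", "ir_load"},      # fetch the instruction
    ("LOAD", 1): {"reg_read"},                 # read the base register
    ("LOAD", 2): {"alu_add"},                  # compute the effective address
    ("LOAD", 3): {"mem_read"},                 # read the data word
    ("LOAD", 4): {"reg_write", "next_instr"},  # write the loaded value
}


def control_signals(opcode, cycle):
    """Return the set of control signals active for this opcode in this cycle."""
    return MICROPROGRAM[(opcode, cycle)]


def cycles_for(opcode):
    """Count cycles until the table asserts the (hypothetical) next_instr signal."""
    cycle = 0
    while "next_instr" not in control_signals(opcode, cycle):
        cycle += 1
    return cycle + 1
```

Stored in a memory and indexed by the cycle counter and decoded instruction bits, a table like this plays the role of a microprogram; simulated in software, as here, it can be checked against test code before any hardware exists.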

Increasing execution speed

Complicating this simple-looking series of steps is the fact that the memory hierarchy, which includes caching, main memory and non-volatile storage like hard disks (where the program instructions and data reside), has always been slower than the processor itself. Step (2) often introduces a lengthy (in CPU terms) delay while the data arrives over the computer bus. A considerable amount of research has been put into designs that avoid these delays as much as possible. Over the years, a central goal was to execute more instructions in parallel, thus increasing the effective execution speed of a program. These efforts introduced complicated logic and circuit structures. Initially, these techniques could only be implemented on expensive mainframes or supercomputers due to the amount of circuitry needed for these techniques. As semiconductor manufacturing progressed, more and more of these techniques could be implemented on a single semiconductor chip. See Moore's law.

Instruction set choice

Instruction sets have shifted over the years, from originally very simple to sometimes very complex (in various respects). In recent years, load–store architectures, VLIW and EPIC types have been in fashion. Architectures that address data parallelism include SIMD and vector designs. Some labels used to denote classes of CPU architectures are not particularly descriptive, especially the CISC label; many early designs retroactively denoted "CISC" are in fact significantly simpler than modern RISC processors (in several respects).

However, the choice of instruction set architecture may greatly affect the complexity of implementing high-performance devices. The prominent strategy, used to develop the first RISC processors, was to simplify instructions to a minimum of individual semantic complexity combined with high encoding regularity and simplicity. Such uniform instructions were easily fetched, decoded and executed in a pipelined fashion, and their simplicity reduced the number of logic levels, which allowed high operating frequencies. Instruction caches compensated for the higher operating frequency and inherently low code density, while large register sets were used to factor out as many of the (slow) memory accesses as possible.

Instruction pipelining

One of the first, and most powerful, techniques to improve performance is the use of instruction pipelining. Early processor designs would carry out all of the steps above for one instruction before moving on to the next. Large portions of the circuitry were left idle at any one step; for instance, the instruction decoding circuitry would be idle during execution and so on.

Pipelining improves performance by allowing a number of instructions to work their way through the processor at the same time. In the same basic example, the processor would start to decode (step 1) a new instruction while the last one was waiting for results. This would allow up to four instructions to be "in flight" at one time, making the processor look four times as fast. Although any one instruction takes just as long to complete (there are still four steps) the CPU as a whole "retires" instructions much faster.
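The "four times as fast" figure is an upper bound that is approached only for long instruction streams. A minimal sketch of the arithmetic, assuming an idealized four-stage pipeline with one cycle per stage and no stalls (the function names are illustrative):

```python
def unpipelined_cycles(num_instructions, stages=4):
    """Each instruction runs all stages before the next one starts."""
    return num_instructions * stages


def pipelined_cycles(num_instructions, stages=4):
    """The first instruction takes `stages` cycles to fill the pipeline;
    after that, one instruction completes every cycle (no stalls assumed)."""
    if num_instructions == 0:
        return 0
    return stages + num_instructions - 1


def speedup(num_instructions, stages=4):
    return unpipelined_cycles(num_instructions, stages) / pipelined_cycles(num_instructions, stages)
```

For 100 instructions this gives 400 cycles unpipelined against 103 pipelined, a speedup of roughly 3.9; the ratio approaches the stage count as the stream grows, while the latency of any single instruction stays at four cycles.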

RISC makes pipelines smaller and much easier to construct by cleanly separating each stage of the instruction process and making them take the same amount of time—one cycle. The processor as a whole operates in an assembly line fashion, with instructions coming in one side and results out the other. Due to the reduced complexity of the classic RISC pipeline, the pipelined core and an instruction cache could be placed on the same size die that would otherwise fit the core alone on a CISC design. This was the real reason that RISC was faster. Early designs like the SPARC and MIPS often ran over 10 times as fast as Intel and Motorola CISC solutions at the same clock speed and price.

Pipelines are by no means limited to RISC designs. By 1986 the top-of-the-line VAX implementation (VAX 8800) was a heavily pipelined design, slightly predating the first commercial MIPS and SPARC designs. Most modern CPUs (even embedded CPUs) are now pipelined, and microcoded CPUs with no pipelining are seen only in the most area-constrained embedded processors. Large CISC machines, from the VAX 8800 to the modern Intel and AMD processors, are implemented with both microcode and pipelines. Improvements in pipelining and caching are the two major microarchitectural advances that have enabled processor performance to keep pace with the circuit technology on which they are based.

Cache

It was not long before improvements in chip manufacturing allowed for even more circuitry to be placed on the die, and designers started looking for ways to use it. One of the most common was to add an ever-increasing amount of cache memory on-die. Cache is very fast and expensive memory. It can be accessed in a few cycles, as opposed to the many needed to "talk" to main memory. The CPU includes a cache controller which automates reading and writing from the cache. If the data is already in the cache it is accessed from there, at considerable time savings, whereas if it is not, the processor is "stalled" while the cache controller reads it in.
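The hit-or-stall behaviour can be sketched with a toy model. Everything specific here is an assumption made for illustration: the direct-mapped organization, the 2-cycle hit and 100-cycle miss costs, and the use of block numbers rather than byte addresses.

```python
class DirectMappedCache:
    """Toy cache in which each block maps to exactly one line (assumed organization)."""

    def __init__(self, num_lines, hit_cycles=2, miss_cycles=100):
        self.tags = [None] * num_lines   # one stored tag per cache line
        self.hit_cycles = hit_cycles     # assumed cost of a hit
        self.miss_cycles = miss_cycles   # assumed cost of fetching from main memory
        self.hits = 0
        self.misses = 0

    def access(self, block_address):
        """Return the cycles spent on this access and update the cache state."""
        index = block_address % len(self.tags)   # which line the block maps to
        tag = block_address // len(self.tags)    # identifies the block within that line
        if self.tags[index] == tag:
            self.hits += 1
            return self.hit_cycles
        self.tags[index] = tag                   # controller reads the block in
        self.misses += 1
        return self.miss_cycles


def total_cycles(cache, trace):
    """Sum the access cost over a sequence of block addresses."""
    return sum(cache.access(block) for block in trace)
```

With eight lines and the trace `[0, 1, 0, 1, 8, 0]`, blocks 0 and 8 compete for the same line, so the model records two hits and four misses.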

RISC designs started adding cache in the mid-to-late 1980s, often only 4 KB in total. This number grew over time, and typical CPUs now have at least 2 MB, while more powerful CPUs come with 4, 6, 12 or even 32 MB or more; the largest is 768 MB in the EPYC Milan-X line. These caches are organized in multiple levels of a memory hierarchy. Generally speaking, more cache means more performance, due to reduced stalling.

Caches and pipelines were a perfect match for each other. Previously, it didn't make much sense to build a pipeline that could run faster than the access latency of off-chip memory. Using on-chip cache memory instead meant that a pipeline could run at the speed of the cache access latency, a much smaller length of time. This allowed the operating frequencies of processors to increase at a much faster rate than that of off-chip memory.

Branch prediction

One barrier to achieving higher performance through instruction-level parallelism stems from pipeline stalls and flushes due to branches. Normally, whether a conditional branch will be taken isn't known until late in the pipeline, as conditional branches depend on results coming from a register. From the time that the processor's instruction decoder has figured out that it has encountered a conditional branch instruction to the time that the deciding register value can be read out, the pipeline needs to be stalled for several cycles, or if it's not and the branch is taken, the pipeline needs to be flushed. As clock speeds increase, the depth of the pipeline increases with them, and some modern processors may have 20 stages or more. On average, every fifth instruction executed is a branch, so without any intervention, that's a high amount of stalling.

Techniques such as branch prediction and speculative execution are used to lessen these branch penalties. Branch prediction is where the hardware makes educated guesses on whether a particular branch will be taken. In reality one side or the other of the branch will be called much more often than the other. Modern designs have rather complex statistical prediction systems, which watch the results of past branches to predict the future with greater accuracy. The guess allows the hardware to prefetch instructions without waiting for the register read. Speculative execution is a further enhancement in which the code along the predicted path is not just prefetched but also executed before it is known whether the branch should be taken or not. This can yield better performance when the guess is good, with the risk of a huge penalty when the guess is bad because instructions need to be undone.

Superscalar

Even with all of the added complexity and gates needed to support the concepts outlined above, improvements in semiconductor manufacturing soon allowed even more logic gates to be used.

In the outline above the processor processes parts of a single instruction at a time. Computer programs could be executed faster if multiple instructions were processed simultaneously. This is what superscalar processors achieve, by replicating functional units such as ALUs. The replication of functional units was only made possible when the die area of a single-issue processor no longer stretched the limits of what could be reliably manufactured. By the late 1980s, superscalar designs started to enter the marketplace.

In modern designs it is common to find two load units, one store (many instructions have no results to store), two or more integer math units, two or more floating point units, and often a SIMD unit of some sort. The instruction issue logic grows in complexity by reading in a huge list of instructions from memory and handing them off to the different execution units that are idle at that point. The results are then collected and re-ordered at the end.

Out-of-order execution

The addition of caches reduces the frequency or duration of stalls due to waiting for data to be fetched from the memory hierarchy, but does not get rid of these stalls entirely. In early designs a cache miss would force the cache controller to stall the processor and wait. Of course there may be some other instruction in the program whose data is available in the cache at that point. Out-of-order execution allows that ready instruction to be processed while an older instruction waits on the cache, then re-orders the results to make it appear that everything happened in the programmed order. This technique is also used to avoid other operand dependency stalls, such as an instruction awaiting a result from a long latency floating-point operation or other multi-cycle operations.

Register renaming

Register renaming refers to a technique used to avoid unnecessary serialized execution of program instructions because of the reuse of the same registers by those instructions. Suppose we have two groups of instructions that use the same register. Ordinarily, one group must execute first and then leave the register to the other group; if the other group is instead assigned a different but equivalent register, both groups can be executed in parallel.
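A minimal sketch of the idea, under simplifying assumptions that are not from the text: instructions are `(dest, src1, src2)` triples of architectural register numbers, every write receives a fresh physical register, and physical registers are never recycled (a real design would reclaim them from a free list).

```python
def rename(instructions, num_arch_regs):
    """Map architectural registers to physical ones so that reusing a
    register name no longer forces two instruction groups to serialize."""
    mapping = {r: r for r in range(num_arch_regs)}   # architectural -> physical
    next_phys = num_arch_regs                        # next unused physical register
    renamed = []
    for dest, src1, src2 in instructions:
        # Sources read whichever physical register currently holds the value.
        p1, p2 = mapping[src1], mapping[src2]
        # Every write gets a fresh physical register, so a later writer
        # cannot clobber a value that earlier readers still need.
        mapping[dest] = next_phys
        renamed.append((next_phys, p1, p2))
        next_phys += 1
    return renamed


# r1 is written twice; the two groups below only share its name.
program = [
    (1, 2, 3),   # r1 = r2 op r3   (group A)
    (4, 1, 2),   # r4 = r1 op r2   (group A, reads r1)
    (1, 5, 6),   # r1 = r5 op r6   (group B, reuses r1)
    (7, 1, 4),   # r7 = r1 op r4   (reads the second r1)
]
renamed = rename(program, num_arch_regs=8)
```

After renaming, the third instruction writes a different physical register from the first, so it no longer has to wait for the second instruction to finish reading the old value; only the true data dependencies remain.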

Multiprocessing and multithreading

Computer architects have become stymied by the growing mismatch in CPU operating frequencies and DRAM access times. None of the techniques that exploited instruction-level parallelism (ILP) within one program could make up for the long stalls that occurred when data had to be fetched from main memory. Additionally, the large transistor counts and high operating frequencies needed for the more advanced ILP techniques required power dissipation levels that could no longer be cheaply cooled. For these reasons, newer generations of computers have started to exploit higher levels of parallelism that exist outside of a single program or program thread.

This trend is sometimes known as throughput computing. This idea originated in the mainframe market where online transaction processing emphasized not just the execution speed of one transaction, but the capacity to deal with massive numbers of transactions. With transaction-based applications such as network routing and web-site serving greatly increasing in the last decade, the computer industry has re-emphasized capacity and throughput issues.

One way this parallelism is achieved is through multiprocessing systems, computer systems with multiple CPUs. Once reserved for high-end mainframes and supercomputers, small-scale (2–8) multiprocessor servers have become commonplace for the small business market. For large corporations, large-scale (16–256) multiprocessors are common. Even personal computers with multiple CPUs have appeared since the 1990s.

With further transistor size reductions made available by semiconductor technology advances, multi-core CPUs have appeared, in which multiple CPUs are implemented on the same silicon chip. They were initially used in chips targeting embedded markets, where simpler and smaller CPUs allowed multiple instantiations to fit on one piece of silicon. By 2005, semiconductor technology allowed dual-CPU, high-end desktop chip multiprocessor (CMP) chips to be manufactured in volume. Some designs, such as Sun Microsystems' UltraSPARC T1, have reverted to simpler (scalar, in-order) designs in order to fit more processors on one piece of silicon.

Another technique that has become more popular recently is multithreading. In multithreading, when the processor has to fetch data from slow system memory, instead of stalling for the data to arrive, the processor switches to another program or program thread which is ready to execute. Though this does not speed up a particular program/thread, it increases the overall system throughput by reducing the time the CPU is idle.

Conceptually, multithreading is equivalent to a context switch at the operating system level. The difference is that a multithreaded CPU can do a thread switch in one CPU cycle instead of the hundreds or thousands of CPU cycles a context switch normally requires. This is achieved by replicating the state hardware (such as the register file and program counter) for each active thread.
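The throughput effect of switching threads on a memory stall can be sketched with a small cycle-counting model. The model is an assumption made for illustration: each thread is a string in which `c` is a one-cycle compute instruction and `m` is a memory access that occupies one issue cycle and then blocks its thread for `miss_latency` further cycles; the processor issues at most one instruction per cycle and switches threads at no cost.

```python
def run_threads(threads, miss_latency):
    """Return the total cycles needed for all threads to finish."""
    pos = [0] * len(threads)        # next instruction index for each thread
    ready_at = [0] * len(threads)   # first cycle at which each thread may issue again
    cycle = 0
    while any(pos[t] < len(threads[t]) for t in range(len(threads))):
        for t in range(len(threads)):
            # Issue from the first thread that has work left and is not blocked.
            if pos[t] < len(threads[t]) and ready_at[t] <= cycle:
                if threads[t][pos[t]] == "m":
                    ready_at[t] = cycle + 1 + miss_latency   # block until the data arrives
                pos[t] += 1
                break   # at most one instruction issues per cycle
        cycle += 1
    # A trailing memory access still has to complete before the run is over.
    return max([cycle] + ready_at)
```

With a three-cycle miss latency, one thread `"cmc"` takes 6 cycles on its own, so two such threads run back to back need 12; interleaved in this model they finish in 8, because one thread's compute cycles fill part of the other's stall. Neither thread finishes sooner than it would alone, which matches the point above about throughput rather than single-thread speed.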

A further enhancement is simultaneous multithreading. This technique allows superscalar CPUs to execute instructions from different programs/threads simultaneously in the same cycle.

References

- ^ Curriculum Guidelines for Undergraduate Degree Programs in Computer Engineering (PDF). Association for Computing Machinery. 2004. p. 60. Archived from the original (PDF) on 2017-07-03.

Comments on Computer Architecture and Organization: Computer architecture is a key component of computer engineering and the practicing computer engineer should have a practical understanding of this topic...

- ^ Murdocca, Miles; Heuring, Vincent (2007). Computer Architecture and Organization, An Integrated Approach. Wiley. p. 151. ISBN 9780471733881.

- ^ Clements, Alan. Principles of Computer Hardware (4th ed.). pp. 1–2.

- ^ Flynn, Michael J. (2007). "An Introduction to Architecture and Machines". Computer Architecture Pipelined and Parallel Processor Design. Jones and Bartlett. pp. 1–3. ISBN 9780867202045.

- ^ a b Hennessy, John L.; Patterson, David A. (2006). Computer Architecture: A Quantitative Approach (4th ed.). Morgan Kaufmann. ISBN 0-12-370490-1.

- ^ Wilkes, M. V. (1969). "The Growth of Interest in Microprogramming: A Literature Survey". ACM Computing Surveys. 1 (3): 139–145. doi:10.1145/356551.356553. S2CID 10673679.

Further reading

- Carnegie Mellon Computer Architecture Lectures

- Patterson, D.; Hennessy, J. (2004). Computer Organization and Design: The Hardware/Software Interface. Morgan Kaufmann. ISBN 1-55860-604-1.

- Hamacher, V. C.; Vranesic, Z. G.; Zaky, S. G. (2001). Computer Organization. McGraw-Hill. ISBN 0-07-232086-9.

- Stallings, William (2002). Computer Organization and Architecture. Prentice Hall. ISBN 0-13-035119-9.

- Hayes, J. P. (2002). Computer Architecture and Organization. McGraw-Hill. ISBN 0-07-286198-3.

- Schneider, Gary Michael (1985). The Principles of Computer Organization. Wiley. pp. 6–7. ISBN 0-471-88552-5.

- Mano, M. Morris (1992). Computer System Architecture. Prentice Hall. p. 3. ISBN 0-13-175563-3.

- Abd-El-Barr, Mostafa; El-Rewini, Hesham (2004). Fundamentals of Computer Organization and Architecture. Wiley. p. 1. ISBN 0-471-46741-3.

- Gardner, J (2001). "PC Processor Microarchitecture". ExtremeTech.

- Gilreath, William F.; Laplante, Phillip A. (2012) [2003]. Computer Architecture: A Minimalist Perspective. Springer. ISBN 978-1-4615-0237-1.

- Patterson, David A. (10 October 2018). A New Golden Age for Computer Architecture. UC Berkeley ACM A.M. Turing Laureate Colloquium. ctwj53r07yI.

Microarchitecture

Fundamentals

Relation to Instruction Set Architecture

The Instruction Set Architecture (ISA) defines the abstract interface between software and hardware, specifying the instructions that a processor can execute, the registers available for data storage and manipulation, supported data types, and addressing modes for memory access.[4] This specification forms a contractual agreement ensuring that software compiled for the ISA will function correctly on any compatible hardware implementation, regardless of underlying details.[5] In contrast, the microarchitecture encompasses the specific hardware design that implements the ISA, including the organization of execution units, control logic, datapaths, and circuits that decode and execute instructions.[4] While these internals remain invisible to software written against the ISA, the microarchitecture determines how instructions are processed at the circuit level, such as through sequences of micro-operations or direct hardware paths.

Historically, this separation emerged in the 1960s and 1970s with systems like IBM's System/360, which established the ISA as a stable abstraction to enable software portability across evolving hardware generations.[4] A prominent example is the x86 ISA, originally defined in the 1978 Intel 8086 microprocessor with its 16-bit architecture and 29,000 transistors, which has since supported diverse microarchitectures, including the superscalar, out-of-order designs in modern Intel Core processors featuring billions of transistors and advanced execution pipelines.[6]

The ISA is fixed for a given processor family to maintain binary compatibility and portability, allowing software to run unchanged across implementations optimized for different goals like performance, power efficiency, or cost. Microarchitectures, however, evolve independently to exploit technological advances, such as shrinking transistor sizes or novel circuit designs, without altering the ISA. For instance, Reduced Instruction Set Computer (RISC) ISAs, like RISC-V, emphasize simple, uniform instructions with register-to-register operations, which simplify microarchitectural decode logic and control signals, reducing overall hardware complexity compared to Complex Instruction Set Computer (CISC) ISAs like x86. CISC designs incorporate variable-length instructions and memory operands, necessitating more intricate microarchitectures with expanded decoders and handling for multiple operation formats, though modern optimizations have narrowed performance gaps.[7] This interplay allows multiple microarchitectures to realize the same ISA, fostering innovation while preserving software ecosystems.[8]

Instruction Cycles

In a single-cycle microarchitecture, the execution of an instruction occurs within one clock cycle, encompassing four primary phases: fetch, decode, execute, and write-back. During the fetch phase, the processor retrieves the instruction from memory using the program counter (PC) as the address, loading it into the instruction register.[9] The decode phase interprets the opcode to determine the operation and identifies operands from registers or immediate values specified in the instruction.[9] In the execute phase, the arithmetic logic unit (ALU) or other functional units perform the required computation, such as addition or logical operations.[9] Finally, the write-back phase stores the result back to the register file or memory if applicable.[9]

The clock cycle synchronizes these phases through control signals generated by the control unit, which activates multiplexers, registers, and ALU operations at precise times to ensure data flows correctly without overlap or race conditions.[10] This synchronization relies on the rising edge of the clock to latch data into registers, preventing asynchronous propagation delays.[10]

Single-cycle designs face significant limitations, as all phases must complete within the same clock period, resulting in a cycle time dictated by the longest phase across all instructions, which inefficiently slows simple operations to match complex ones like memory accesses.[11] For instance, a load instruction requiring memory access extends the critical path, forcing the entire processor to operate at a reduced frequency unsuitable for high-performance needs.[11] The conceptual foundation of this cycle design traces back to John von Neumann's 1945 "First Draft of a Report on the EDVAC," which proposed a stored-program computer where instructions are fetched sequentially from memory, influencing the fetch-decode-execute model in modern microarchitectures.[12]

Quantitatively, the clock cycle time is determined by the critical path (the longest delay through the combinational logic, including register clock-to-Q delays, ALU propagation, and memory access times), ensuring reliable operation but limiting overall throughput in single-cycle implementations.[11] Multicycle architectures address these inefficiencies by dividing execution into multiple shorter cycles tailored to instruction complexity.[10]

Multicycle Architectures

In multicycle architectures, each instruction is executed over multiple clock cycles, allowing the processor's functional units to be reused across different phases of execution rather than dedicating separate hardware for every operation as in single-cycle designs. This approach divides the instruction lifecycle into distinct stages, such as instruction fetch, decode and register fetch, execution or address computation, memory access (if needed), and write-back. The number of cycles varies by instruction complexity; for example, arithmetic-logic unit (ALU) operations typically require 4 cycles, while load instructions may take 5 cycles due to an additional memory read stage.[13]

A key component in many multicycle designs is microcode, which consists of sequences of microinstructions stored in a control memory, such as read-only storage. These microinstructions generate the control signals needed to sequence the datapath operations, making it feasible to implement complex instruction set architectures (ISAs), particularly complex instruction set computing (CISC) designs where instructions can perform multiple low-level tasks. Microcode enables fine-grained control over variable-length execution, adapting the cycle count dynamically based on the opcode and operands.[14]

Multicycle architectures offer several advantages over single-cycle implementations, including reduced hardware complexity by sharing functional units like the ALU and memory interface across cycles, which lowers chip area and power consumption. They also permit shorter clock cycles, as each cycle handles a simpler subset of the instruction, potentially increasing the overall clock frequency and improving performance for instructions that complete in fewer cycles. However, a notable disadvantage is that every instruction now spans several cycles, which raises the average cycles per instruction; a branch, for example, must wait for its condition to be resolved before the next fetch can proceed.[15][13]

Control units in multicycle processors can be implemented as either hardwired or microprogrammed designs. Hardwired control uses combinational logic and finite state machines to directly generate signals based on the current state and opcode, offering high speed due to minimal latency but limited flexibility for modifications or handling large ISAs. In contrast, microprogrammed control stores the state transitions and signal patterns as microcode in a control store, providing greater ease of design and adaptability, such as firmware updates, but at the cost of added latency from microinstruction fetches.[16]

A seminal example of microcode in multicycle architectures is the IBM System/360, introduced in 1964, which used read-only storage for microprogram control across its model lineup to ensure binary compatibility. This allowed the same instruction set to run on machines with a 50-fold performance range, from low-end models like the System/360 Model 30 to high-end ones like the Model 70, by tailoring microcode to optimize hardware differences while maintaining a uniform ISA. The microcode handled multicycle sequencing for operations like floating-point and decimal arithmetic, facilitating efficient execution in a CISC environment.[17]

Performance Enhancements

Instruction Pipelining

Instruction pipelining is a fundamental technique in microarchitecture that enhances processor performance by dividing the execution of an instruction into sequential stages and allowing multiple instructions to overlap in these stages, akin to an assembly line. This overlap increases instruction throughput, enabling the processor to complete more instructions over time without altering the inherent latency of individual instructions. The concept was pioneered in early supercomputers to address the growing demand for computational speed in scientific applications.[18] A typical implementation is the five-stage pipeline found in many reduced instruction set computer (RISC) architectures. The stages are:

- Instruction Fetch (IF): The processor retrieves the instruction from memory using the program counter.

- Instruction Decode (ID): The instruction is decoded to determine the operation, and required operands are read from the register file.

- Execute (EX): Arithmetic and logical operations are performed by the arithmetic logic unit (ALU), or the effective address for memory operations is calculated.

- Memory Access (MEM): Data is read from or written to memory for load/store instructions; instructions that do not access memory pass through this stage without doing any work.

- Write-Back (WB): Results are written back to the register file for use by subsequent instructions.

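The speedup of a pipelined processor over an unpipelined one can be approximated as

$$\text{Speedup} \approx \frac{k}{\text{CPI}}$$

where $k$ is the number of pipeline stages and CPI (cycles per instruction) incorporates overhead from hazards. This formula highlights how minimizing stalls maximizes utilization of the pipeline stages.[22][23]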
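As a rough illustration of how stalls erode pipeline speedup, the following Python sketch counts cycles for an idealized pipeline. It assumes one-cycle stages, an unpipelined baseline that spends one cycle per stage on every instruction, and hazards modeled only as a lump sum of stall cycles; the function names are illustrative and not taken from any cited source.

```python
def pipelined_cycles(n_instr: int, stages: int, stall_cycles: int = 0) -> int:
    """Cycles needed by an idealized pipeline (assumption: one-cycle stages).

    The first instruction takes `stages` cycles to reach write-back; each
    later instruction finishes one cycle after its predecessor. Hazards are
    modeled only as a total number of extra stall cycles.
    """
    if n_instr <= 0:
        return 0
    return stages + (n_instr - 1) + stall_cycles


def unpipelined_cycles(n_instr: int, stages: int) -> int:
    """Baseline: every instruction occupies the datapath for `stages` cycles."""
    return n_instr * stages


def speedup(n_instr: int, stages: int, stall_cycles: int = 0) -> float:
    """Speedup of the pipelined machine over the unpipelined baseline."""
    return unpipelined_cycles(n_instr, stages) / pipelined_cycles(
        n_instr, stages, stall_cycles
    )


if __name__ == "__main__":
    k, n = 5, 1000
    for stalls_per_instr in (0.0, 0.2, 0.5):
        total_stalls = round(n * stalls_per_instr)
        s = speedup(n, k, total_stalls)
        print(f"stalls/instr={stalls_per_instr:.1f}  speedup={s:.2f}")
```

For long instruction streams the computed value approaches the number of stages divided by the CPI, where the CPI under these assumptions is one plus the average number of stall cycles per instruction.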

Cache Memories

Cache memories are a critical microarchitectural component designed to mitigate the significant latency disparity between the processor and main memory, which can exceed 100 cycles in modern systems. By exploiting the principle of locality of reference, where programs exhibit temporal locality (re-referencing recently accessed data) and spatial locality (accessing data near recently used locations), caches provide small, fast on-chip storage that holds frequently used data closer to the processor. This concept was formalized in the working set model, which demonstrated how program behavior clusters memory accesses into predictable sets, enabling efficient resource allocation in virtual memory systems and laying the groundwork for caching hierarchies.[24]

Modern processors employ a multi-level cache hierarchy to balance speed, size, and cost. The level 1 (L1) cache is typically split into separate instruction (L1i) and data (L1d) caches, each dedicated to a single core for minimal latency, often around 1-4 cycles, with sizes of 16-64 KB per cache. The level 2 (L2) cache is larger, usually 256 KB to 2 MB per core, and unified (holding both instructions and data) to capture more locality while maintaining low access times of 10-20 cycles. The level 3 (L3) cache, typically shared across all cores, is the largest at several to tens of MB; in some designs it serves as a victim cache for lines evicted from the L2 caches, and it provides latencies of 30-50 cycles. This organization ensures that most accesses are resolved quickly within the hierarchy, reducing stalls in the memory access stage of the instruction pipeline.[25]

Cache organization varies by associativity to trade off hit rates against complexity and power. In a direct-mapped cache, each memory block maps to exactly one cache line (1-way set-associative), offering simplicity but high conflict misses when unrelated blocks collide.
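The conflict-miss behavior of a direct-mapped cache can be sketched in a few lines of Python. The class below is a minimal model, not a description of any particular processor: the class name, the 64-line by 64-byte geometry used in the demonstration, and the hit and miss counters are all assumptions made for illustration.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that tracks tags only (no data payload)."""

    def __init__(self, num_lines: int, block_size: int):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines  # one tag per line; None means invalid
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Look up a byte address; return True on a hit, False on a miss."""
        block = address // self.block_size   # which memory block
        index = block % self.num_lines       # the single line it may occupy
        tag = block // self.num_lines        # distinguishes blocks sharing a line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag               # evict whatever was there
        self.misses += 1
        return False


if __name__ == "__main__":
    # Two addresses exactly one cache size (4 KiB) apart map to the same line,
    # so alternating between them misses every time.
    conflict = DirectMappedCache(num_lines=64, block_size=64)
    for _ in range(5):
        conflict.access(0x0000)
        conflict.access(0x1000)
    print("conflicting pair:", conflict.hits, "hits,", conflict.misses, "misses")

    # A sequential sweep benefits from spatial locality within each block.
    sweep = DirectMappedCache(num_lines=64, block_size=64)
    for addr in range(0, 4096, 4):
        sweep.access(addr)
    print("sequential sweep:", sweep.hits, "hits,", sweep.misses, "misses")
```

The alternating pair never hits even though the cache is almost empty, which is the collision case that higher associativity is meant to relieve.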
Set-associative caches divide the cache into sets of multiple lines (e.g., 4-way or 8-way), allowing a block to map to any line in its set, which improves hit rates by reducing conflicts while approximating fully associative designs where blocks can go anywhere, though the latter incurs higher hardware costs for parallel comparisons. Replacement policies determine which line to evict on a miss; the least recently used (LRU) policy, which removes the line unused for the longest time, is widely adopted as a practical approximation of the optimal Belady's algorithm that evicts the block referenced furthest in the future.[26]

In multi-core processors, cache coherence protocols ensure consistency across private caches when cores access shared data. The MESI (Modified, Exclusive, Shared, Invalid) protocol, a snoopy-based scheme, maintains coherence by tracking line states and invalidating stale copies via bus snooping: Modified indicates a unique dirty copy, Exclusive a unique clean copy, Shared one of possibly several clean copies, and Invalid an unusable line. This protocol minimizes traffic while guaranteeing that all cores observe a single, consistent order of writes to each line (ordering across different addresses is governed separately by the memory consistency model), and it forms the basis for implementations in x86 processors.[27]

Key performance metrics for caches include hit rate (fraction of accesses found in cache) and miss rate (1 - hit rate), with miss penalty denoting the additional cycles to service a miss from lower levels or main memory. The average memory access time (AMAT) quantifies overall effectiveness as:

$$\text{AMAT} = \text{Hit time} + \text{Miss rate} \times \text{Miss penalty}$$

High hit rates (often 95-99% for L1) and low penalties are crucial, as misses can amplify effective latency by factors of 10-100.

Cache evolution began with the first commercial implementation in the IBM System/360 Model 85 mainframe in 1968, featuring a 16 KB high-speed buffer to accelerate main memory accesses.
Subsequent advancements integrated caches on-chip starting in the 1980s, and later designs differ in inclusion policy: Intel long favored inclusive L3 caches (where L3 duplicates all lower-level lines for simpler coherence), whereas AMD has used mostly exclusive L3 caches (filled with lines evicted from L2 to maximize effective capacity). These refinements continue to evolve, supporting terabyte-scale memory while prioritizing low-latency access in high-performance computing.

Branch Prediction

Branch prediction is a technique employed in pipelined processors to mitigate control hazards arising from branch instructions, which alter the sequential flow of execution.[28] Branches are categorized into conditional branches, which depend on runtime conditions such as register values; unconditional branches, which always transfer control; and indirect branches, which use computed addresses like register contents or memory loads.[28] A mispredicted branch in a deep pipeline necessitates flushing incorrectly fetched instructions, incurring a significant performance penalty as the pipeline refills from the correct path.[29]

Static branch prediction, determined at compile time without runtime history, employs simple heuristics to guess branch outcomes. The always-not-taken strategy assumes all branches continue sequentially, which performs well for forward branches but poorly for loops.[30] A more refined approach, backward-taken/forward-not-taken, predicts backward branches (e.g., loop closures) as taken and forward branches as not taken, exploiting common program structures like loops and if-then-else statements.[30]

Dynamic branch prediction leverages runtime information to adapt predictions, typically using hardware structures like branch history tables (BHTs). A basic BHT indexes a saturating counter table by the branch's program counter (PC), recording the outcome of the last few executions to predict future behavior.[30] Two-level predictors enhance accuracy by incorporating branch history patterns; for instance, the GShare scheme hashes the branch PC with a global history register (GHR) of recent branch outcomes to index the table, reducing aliasing interference.[31] Tournament predictors combine multiple sub-predictors, such as local history (per-branch) and global history schemes, selecting the best via a meta-predictor for each branch.[31]

Advanced dynamic predictors build on these foundations for higher accuracy in modern processors.
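The table-based dynamic schemes described in the preceding paragraphs can be sketched as follows. This is a simplified model under stated assumptions: counters are two bits wide and start in the weakly not-taken state, the history register is as wide as the table index, the PC is shifted right by two bits before indexing, and the class and function names are illustrative rather than drawn from any real design.

```python
class CounterTable:
    """Table of 2-bit saturating counters (0-1 predict not taken, 2-3 taken)."""

    def __init__(self, index_bits: int):
        self.mask = (1 << index_bits) - 1
        self.counters = [1] * (1 << index_bits)  # start weakly not-taken

    def predict(self, index: int) -> bool:
        return self.counters[index] >= 2

    def update(self, index: int, taken: bool) -> None:
        c = self.counters[index]
        self.counters[index] = min(3, c + 1) if taken else max(0, c - 1)


class BimodalPredictor:
    """Basic BHT: the counter table is indexed by PC bits only."""

    def __init__(self, index_bits: int = 12):
        self.table = CounterTable(index_bits)

    def predict_and_update(self, pc: int, taken: bool) -> bool:
        idx = (pc >> 2) & self.table.mask
        correct = self.table.predict(idx) == taken
        self.table.update(idx, taken)
        return correct


class GSharePredictor:
    """GShare: the index is the PC XORed with a global history register."""

    def __init__(self, index_bits: int = 12):
        self.table = CounterTable(index_bits)
        self.history = 0

    def predict_and_update(self, pc: int, taken: bool) -> bool:
        idx = ((pc >> 2) ^ self.history) & self.table.mask
        correct = self.table.predict(idx) == taken
        self.table.update(idx, taken)
        # Shift the newest outcome into the global history register.
        self.history = ((self.history << 1) | int(taken)) & self.table.mask
        return correct


def loop_trace(pc: int = 0x400, iterations: int = 100, trip_count: int = 10):
    """Outcomes of one loop-closing branch: taken 9 times, then not taken."""
    trace = []
    for _ in range(iterations):
        trace.extend((pc, i < trip_count - 1) for i in range(trip_count))
    return trace


def accuracy(predictor, trace) -> float:
    correct = sum(predictor.predict_and_update(pc, taken) for pc, taken in trace)
    return correct / len(trace)


if __name__ == "__main__":
    trace = loop_trace()
    print("bimodal:", accuracy(BimodalPredictor(), trace))
    print("gshare: ", accuracy(GSharePredictor(), trace))
```

On this synthetic trace the PC-indexed counter mispredicts every loop exit, while the history-indexed table can learn the exit once the history register has seen a full loop period. Real workloads mix many branches, so aliasing between them, which this single-branch trace does not exercise, becomes the main concern.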
Perceptron-based predictors model branch behavior as a linear function of history bits, using a perceptron (a single-layer neural network) to weigh features and output predictions, achieving superior performance on correlated patterns.[32] The TAGE (TAgged GEometric history length) predictor, variants of which have been adopted in commercial CPUs, employs multiple tables with varying history lengths and tags for precise indexing, allowing long histories while minimizing storage through geometric progression and partial tagging.[33] Early dynamic prediction appeared in the IBM System/360 Model 91 in 1967, which used a small history buffer to speculate on recent branch outcomes, influencing subsequent designs.[30]

In deep pipelines, a branch misprediction penalty typically ranges from 10 to 20 cycles, depending on pipeline length and recovery mechanisms, as the frontend must be flushed and refilled.[29] Prediction accuracy is quantified as the ratio of correct predictions to total branches:

$$\text{Accuracy} = \frac{\text{Correct predictions}}{\text{Total branches}}$$

This directly impacts performance, with the effective CPI approximated by:

$$\text{CPI}_{\text{eff}} \approx \text{CPI}_{\text{base}} + f_{\text{branch}} \times (1 - \text{Accuracy}) \times \text{Penalty}$$

where $f_{\text{branch}}$ is the fraction of instructions that are branches and penalty is the cycle cost of a misprediction, highlighting the value of predictors achieving over 95% accuracy in reducing effective CPI.[28]

Superscalar Execution

Superscalar execution refers to a microarchitectural technique that enables a processor to dispatch and execute multiple instructions simultaneously in a single clock cycle by exploiting instruction-level parallelism (ILP).[34] This approach relies on multiple execution units, such as arithmetic logic units (ALUs) and floating-point units, fed by wide front-end logic that fetches, decodes, and issues instructions to available hardware resources.[35] By overlapping the execution of independent instructions, superscalar designs achieve higher throughput than scalar processors, which are limited to one instruction per cycle.[34]

The degree of superscalarity, or issue width, indicates the maximum number of instructions that can be issued per cycle, ranging from 2-way (dual-issue) designs to 8-way or higher in advanced implementations.[36] Early superscalar processors typically featured 2-way or 4-way issue widths to balance complexity and performance gains, while modern high-performance cores often scale to 4-6 ways, with experimental designs reaching 8-way issue before saturation due to increasing hardware overhead.[37] For instance, a 4-way superscalar configuration can theoretically quadruple the execution rate of a scalar pipeline if sufficient ILP is available, though practical implementations require careful resource allocation to avoid bottlenecks.[35]

In basic superscalar architectures, instruction scheduling employs in-order issue, where instructions are dispatched to execution units in their original program sequence. More aggressive designs add reservation stations that buffer instructions until their operands are available, enabling dynamic scheduling and out-of-order execution while maintaining in-order completion to ensure architectural correctness.[35] This dynamic approach exposes parallelism through hardware mechanisms like operand forwarding and branch prediction, though it is more complex than purely static compiler-based scheduling.[35]

The exploitation of ILP in superscalar
processors is fundamentally limited by data dependencies, such as read-after-write (RAW) hazards, and resource constraints, including the availability of execution units and register file ports.[38] True data dependencies enforce sequential execution for correctness, reducing available parallelism to an average of 5-7 instructions in typical workloads, even with techniques like register renaming to eliminate false dependencies.[38] Resource limitations further cap ILP, as wider issue widths demand disproportionately more hardware for fetch, decode, and dispatch, leading to diminishing returns beyond 8-way designs where control dependencies from branches exacerbate inefficiencies.[34]

A prominent example of early superscalar implementation is the Intel Pentium processor, introduced in 1993 as the first commercial superscalar x86 design, featuring two parallel integer pipelines for 2-way issue.[39] The Pentium could issue two simple instructions per cycle, one to each pipeline, when its pairing rules were satisfied, demonstrating practical ILP exploitation in consumer hardware.[39]

Superscalar execution enables instructions per cycle (IPC) greater than 1, with theoretical maximum IPC given by the minimum of the issue width and the available ILP in the instruction stream:[40]

$$\text{IPC}_{\max} = \min(\text{issue width}, \text{ILP})$$

In practice, 2-way designs achieve IPC around 1.5-2.0 under ideal conditions, while 4-way configurations can reach up to 2.4 IPC with effective branch prediction, though real-world workloads often yield lower values due to dependency stalls.[35]

Advanced Execution Models

Out-of-Order Execution

Out-of-order execution is a dynamic scheduling technique in microarchitecture that reorders instructions at runtime to maximize instruction-level parallelism (ILP) by executing instructions as soon as their operands are available, rather than strictly following the program order. This approach mitigates stalls caused by data dependencies, resource conflicts, or long-latency operations, allowing functional units to remain utilized even when subsequent instructions are delayed. By decoupling the fetch and decode stages from execution, processors can overlap the computation of independent instructions, improving overall throughput.[41]

Out-of-order execution was pioneered in machines such as the IBM System/360 Model 91, announced in 1966 with first deliveries in 1967, which implemented dynamic instruction scheduling to handle floating-point operations more efficiently in scientific computing environments. Its floating-point unit tracked dependencies in hardware and permitted independent instructions to execute ahead of dependent ones, making it one of the first commercial realizations of such hardware. The scheme it used, published by Robert Tomasulo in 1967 and now known as Tomasulo's algorithm, provided a foundational framework for dynamic scheduling using distributed reservation stations attached to functional units. In Tomasulo's design, instructions are issued to reservation stations where they wait for operands; a common data bus broadcasts results to resolve dependencies, enabling out-of-order execution while renaming registers to eliminate false hazards.[42][41]

Core components of modern out-of-order execution include an instruction queue (or dispatch buffer) that holds decoded instructions after fetch and rename stages, a reorder buffer (ROB) to maintain program order for commitment, and issue logic that tracks data dependencies via tag matching.
The instruction queue decouples dispatch from issue, allowing a stream of instructions to be buffered while the scheduler selects ready ones for execution based on operand availability. Dependency tracking occurs through mechanisms like reservation station tags in Tomasulo-style designs or wakeup-select logic in issue queues, where source operands are monitored for completion signals from executing units. The ROB serves as a circular buffer that stores speculative results, ensuring that instructions complete execution out-of-order but commit results in original program order to preserve architectural state.[41][43]

Recovery mechanisms in out-of-order execution rely heavily on the ROB to handle precise exceptions and misspeculation. When an exception such as a page fault occurs, or a branch misprediction is detected, the ROB enables rollback by flushing younger instructions and restoring the processor state to the point of the offending instruction, discarding speculative work without side effects. This in-order commitment from the ROB head ensures that architectural registers and memory are updated only after all prior instructions have completed, maintaining the illusion of sequential execution for software. In Tomasulo's original algorithm, recovery was simpler due to the lack of speculation, but modern extensions integrate the ROB with checkpointing of rename maps for efficient restoration.[43]

The effectiveness of out-of-order execution is often measured by the window size, which represents the number of in-flight instructions that can be tracked simultaneously, typically limited by the ROB and reservation station capacities.
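A toy model can make the roles of the reorder buffer and the window size more concrete. The Python sketch below is deliberately simplified and rests on several assumptions that are not from the source: registers are treated as already renamed (only true dependencies are listed), functional units are unlimited, dispatch and commit are limited to `width` instructions per cycle, and the `Instr` and `simulate` names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Instr:
    name: str
    latency: int                 # execution latency in cycles
    deps: Tuple[int, ...] = ()   # indices of earlier producer instructions


def simulate(program: List[Instr], window_size: int, width: int = 2):
    """Return (total_cycles, commit_order) for a toy out-of-order core."""
    ready_at = {}        # instr index -> first cycle its result is usable
    rob = deque()        # in-flight instruction indices, in program order
    commit_order = []
    next_dispatch = 0
    cycle = 0
    while len(commit_order) < len(program):
        cycle += 1
        # Commit: retire finished instructions from the ROB head, in order.
        retired = 0
        while rob and retired < width and ready_at.get(rob[0], cycle + 1) <= cycle:
            commit_order.append(rob.popleft())
            retired += 1
        # Dispatch: bring new instructions into the window if there is room.
        dispatched = 0
        while (next_dispatch < len(program) and len(rob) < window_size
               and dispatched < width):
            rob.append(next_dispatch)
            next_dispatch += 1
            dispatched += 1
        # Issue: any waiting instruction whose producers are done may start,
        # regardless of its position in program order.
        for idx in rob:
            if idx in ready_at:
                continue  # already executing or finished
            if all(ready_at.get(d, cycle + 1) <= cycle for d in program[idx].deps):
                ready_at[idx] = cycle + program[idx].latency
    return cycle, commit_order


def demo_program() -> List[Instr]:
    """A long-latency load, its consumer, and an unrelated dependency chain."""
    prog = [Instr("load", 20), Instr("use_load", 1, (0,)), Instr("chain0", 2)]
    for i in range(3, 10):
        prog.append(Instr(f"chain{i - 2}", 2, (i - 1,)))
    return prog


if __name__ == "__main__":
    for window in (4, 16):
        cycles, order = simulate(demo_program(), window_size=window)
        print(f"window={window:2d}  cycles={cycles}  in-order commit={order == sorted(order)}")
```

With the small window, the stalled load at the ROB head keeps most of the independent chain from entering the machine, so that work is serialized after the load returns; the larger window lets the chain execute underneath the load latency while commit order stays identical.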
In modern high-performance CPUs, such as Intel's Skylake architecture, the window size exceeds 200 instructions, allowing processors to extract ILP from larger code regions and tolerate latencies from cache misses or branch resolutions.[44] For example, empirical measurements on Sandy Bridge processors show a ROB capacity of around 168 entries, enabling significant overlap of independent dependency chains. This larger window contributes to higher instructions per cycle (IPC), where effective IPC is calculated as the number of executed instructions divided by the total cycles, accounting for reduced stalls through parallelism. Register renaming complements out-of-order execution by resolving name dependencies, further expanding the exploitable ILP within the window.[45][46]

The IPC in out-of-order processors can be expressed as:

$$\text{IPC} = \frac{\text{Instructions executed}}{\text{Total cycles}}$$

This metric quantifies the throughput gains from reordering, with typical values exceeding 1.0 in superscalar designs due to multiple instruction issue and execution.[47]

Register Renaming

Register renaming is a microarchitectural technique employed in out-of-order processors to eliminate false data dependencies, thereby increasing instruction-level parallelism by dynamically mapping a limited set of architectural registers to a larger pool of physical registers.[48] This mechanism abstracts the programmer-visible registers defined by the instruction set architecture (ISA), allowing multiple in-flight instructions to use distinct physical registers even if they reference the same architectural register name.[48] In the context of out-of-order execution, register renaming facilitates the reordering of instructions without violating true data dependencies.

Data dependencies in instruction sequences are classified as true or false. True dependencies, also known as flow dependencies (read-after-write, or RAW), represent actual data flow where a subsequent instruction requires the result of a prior one; these cannot be eliminated and must be respected for correctness.[48] False dependencies include anti-dependencies (write-after-read, or WAR), where a later instruction writes a register that an earlier instruction has yet to read, and output dependencies (write-after-write, or WAW), where multiple writes target the same register.[48] Register renaming resolves WAR and WAW hazards by assigning unique physical registers to each write operation, effectively renaming the destination registers in the instruction stream and preventing conflicts arising from register name reuse.[48]

The implementation relies on a physical register file (PRF) that exceeds the architectural register count to accommodate pending operations; for instance, designs often provision 128 physical registers to support 32 architectural ones, providing sufficient buffer for speculative execution.[49] A mapping table, typically implemented as a register alias table (RAT), maintains the current architectural-to-physical register mappings and is updated during the rename stage of the pipeline.[48] Available
physical registers are tracked via a free list, from which new allocations are drawn for instruction destinations, while deallocation occurs upon commit from the reorder buffer (ROB), ensuring precise state restoration on exceptions or mispredictions.[48] The renaming process incurs hardware overhead, including table lookups where pointer widths scale as $\log_2$ of the physical register count (for 128 registers, this equates to 7 bits per entry), impacting area and access latency.[49]

A prominent example is the Alpha 21264 microprocessor, released in 1998, which integrated register renaming into a dedicated map stage to handle up to four instructions per cycle.[50] This processor supported 80 physical integer registers and 72 physical floating-point registers against its 31 architectural integer and 31 floating-point registers (excluding the implicit zero register), enabling robust out-of-order issue and speculative execution.[50] By decoupling architectural from physical registers, the Alpha 21264 achieved significant performance gains, demonstrating how renaming sustains higher ILP in superscalar designs.[50] Overall, register renaming boosts ILP by mitigating false dependencies, allowing processors to extract more parallelism from code with limited architectural registers.[48]

Multiprocessing

Multiprocessing in microarchitecture refers to the integration of multiple processing cores on a single chip to enable task parallelism, where each core operates independently while sharing system resources for coordinated execution. This design enhances overall system performance by distributing workloads across homogeneous cores, but requires sophisticated mechanisms for inter-core communication and data consistency to avoid bottlenecks and errors. Modern multi-core processors typically employ symmetric multiprocessing (SMP), in which all cores are identical in capability and have equal access to a unified shared memory space, allowing any core to execute any thread without distinction.[51]

A key challenge in SMP systems is maintaining cache coherence, ensuring that all cores see a consistent view of shared data despite local caches. Cache coherence protocols address this by managing the state of cache lines across cores. Snoopy protocols, common in smaller-scale systems, rely on a shared bus where each cache controller monitors (or "snoops") all memory transactions broadcast by other cores, updating local cache states accordingly to invalidate or supply data as needed; this approach is simple but scales poorly due to broadcast overhead.[52] In contrast, directory-based protocols use a centralized or distributed directory structure to track the location and state of shared cache lines, employing point-to-point messages to notify only relevant cores, which improves scalability for larger core counts by avoiding unnecessary traffic.[52] An example extension is the MOESI protocol, which builds on the basic MESI (Modified, Exclusive, Shared, Invalid) states by adding an "Owned" state; this allows a core to own a modified cache line and supply it directly to another core without writing back to memory first, reducing latency in shared-modified scenarios and optimizing bandwidth in systems like AMD processors.[53]

Inter-core coordination in multi-core chips is
facilitated by on-chip interconnect networks that route data and coherence messages between cores, caches, and memory controllers. Common topologies include ring interconnects, where cores are connected in a circular fashion for low-latency unidirectional communication, as used in early Intel multi-core designs like Nehalem, and mesh topologies, which arrange cores in a 2D grid with routers at intersections for scalable bandwidth in high-core-count processors.[54] For example, Intel's mesh interconnect, introduced in Skylake-SP processors, connects up to 28 cores in a 5x6 grid of tiles, enabling efficient routing for cache coherence and I/O while supporting high-bandwidth operations up to 25.6 GB/s per link.[54] In multi-socket SMP configurations, off-chip interconnects like Intel's QuickPath Interconnect (QPI) extend this coordination across chips, providing point-to-point links with snoop-based coherence (e.g., MESIF protocol) at speeds up to 6.4 GT/s to maintain shared memory semantics in distributed systems.[55]

Despite these advances, multiprocessing scalability is limited by inherent software and hardware constraints, as described by Amdahl's law, which quantifies the theoretical speedup from parallelization. The law states that the maximum speedup $S$ for a workload with serial fraction $s$ ($0 \le s \le 1$) executed on $N$ cores is given by

$$S(N) = \frac{1}{s + \frac{1 - s}{N}}$$

where the parallelizable portion $1 - s$ is divided among the $N$ cores, but the serial part remains a bottleneck; for instance, if 5% of a workload is serial, even infinite cores yield at most 20x speedup.[56] This highlights the need for minimizing serial code and optimizing inter-core overheads like coherence traffic.
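The arithmetic behind Amdahl's law is easy to check numerically. The short Python sketch below evaluates the speedup for a given serial fraction and core count; the function names are illustrative, and the model ignores coherence and communication overheads, as the law itself does.

```python
def amdahl_speedup(serial_fraction: float, cores: int) -> float:
    """Speedup on `cores` cores when `serial_fraction` of the work is serial."""
    if not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("serial_fraction must lie in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    parallel_fraction = 1.0 - serial_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def amdahl_limit(serial_fraction: float) -> float:
    """Upper bound on speedup as the core count grows without limit."""
    if serial_fraction == 0.0:
        return float("inf")
    return 1.0 / serial_fraction


if __name__ == "__main__":
    s = 0.05  # 5% serial work, as in the example in the text
    for n in (1, 2, 4, 16, 64, 1024):
        print(f"{n:5d} cores -> speedup {amdahl_speedup(s, n):6.2f}")
    print(f"limit for s={s}: {amdahl_limit(s):.1f}x")
```

Even with 5% serial work the curve flattens quickly: going from 64 to 1024 cores adds relatively little, because the serial term dominates the denominator.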

A seminal example of x86 multiprocessing implementation is the AMD Opteron processor family, whose first dual-core models were announced in 2004 and shipped in 2005, marking the debut of multi-core x86 architecture for servers and enabling SMP with shared memory via AMD's Direct Connect Architecture for improved parallel performance.[57]

Multithreading

Multithreading in microarchitecture enables concurrent execution of multiple threads within a single processor core to improve resource utilization and mask latencies, such as those from memory accesses. By maintaining multiple thread contexts and switching between them efficiently, multithreading tolerates stalls in one thread by advancing others, thereby increasing overall throughput without requiring additional hardware cores. This approach complements techniques like out-of-order execution by providing thread-level parallelism to further exploit instruction-level parallelism.[58]

There are three primary types of hardware multithreading: fine-grained, coarse-grained, and simultaneous. Fine-grained multithreading, also known as cycle-by-cycle or interleaved multithreading, switches threads every clock cycle, issuing instructions from only one thread per cycle to hide short latencies like pipeline hazards.[58] Coarse-grained multithreading, or block multithreading, switches threads only on long stalls, such as cache misses, to minimize context-switch overhead while tolerating infrequent but high-latency events.[59] Simultaneous multithreading (SMT) extends fine-grained switching by allowing multiple threads to issue instructions to the processor's functional units in the same cycle, maximizing parallelism in superscalar designs.[58]

In multithreaded processors, resources such as register files, pipelines, and caches are shared among threads to varying degrees, with some architectural state duplicated for isolation.
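The latency-hiding effect of the fine-grained and coarse-grained policies described above can be sketched with a small cycle-count model. Everything here is an assumption made for illustration: the core issues one instruction per cycle, each thread is a list of per-instruction stall lengths, the coarse-grained policy switches on any stall and pays a fixed `switch_penalty`, and simultaneous multithreading is not modeled because it needs a multi-issue core.

```python
from typing import List


def run_threads(threads: List[List[int]], policy: str, switch_penalty: int = 2) -> int:
    """Return the cycles a single-issue core needs to finish all threads.

    Each thread is a list with one entry per instruction: the number of
    cycles the thread is blocked after issuing it (0 means no stall).
    policy is "fine" (rotate among ready threads every cycle) or
    "coarse" (stay on one thread until it stalls, then pay switch_penalty).
    """
    if policy not in ("fine", "coarse"):
        raise ValueError("policy must be 'fine' or 'coarse'")
    n = len(threads)
    pc = [0] * n          # next instruction index per thread
    ready_at = [0] * n    # first cycle in which each thread may issue again
    cycle, current, last = 0, 0, n - 1
    while any(pc[t] < len(threads[t]) for t in range(n)):
        ready = [t for t in range(n)
                 if pc[t] < len(threads[t]) and ready_at[t] <= cycle]
        chosen = None
        if policy == "fine":
            # Round-robin: first ready thread after the one that issued last.
            for k in range(1, n + 1):
                t = (last + k) % n
                if t in ready:
                    chosen = t
                    break
        elif current in ready:
            chosen = current                 # keep running the same thread
        elif ready:
            chosen = current = ready[0]      # switch threads on a stall
            cycle += switch_penalty          # cost of refilling the pipeline
        if chosen is not None:
            stall = threads[chosen][pc[chosen]]
            pc[chosen] += 1
            ready_at[chosen] = cycle + 1 + stall
            last = chosen
        cycle += 1
    return max([cycle] + ready_at)


if __name__ == "__main__":
    work = [0, 0, 8, 0, 0, 8, 0, 0]   # two 8-cycle misses among 8 instructions
    serial = 2 * run_threads([work], "coarse")
    print("one thread after another:", serial)
    print("fine-grained, 2 threads: ", run_threads([work, work], "fine"))
    print("coarse-grained, 2 threads:", run_threads([work, work], "coarse"))
```

Both multithreaded runs should finish well before the back-to-back baseline, because one thread's stall cycles are filled with the other thread's instructions; the switch penalty is what separates the two policies in this model.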
For instance, in SMT implementations, threads share execution units and on-chip caches but maintain separate register files and program counters to preserve independence.[58] Similarly, the IBM POWER5 dynamically allocates portions of its 120 general-purpose registers and floating-point registers between two threads per core, while sharing the L2 cache and branch history tables.[60] Intel's Hyper-Threading Technology duplicates architectural state like registers but shares caches and execution resources to enable logical processors within a physical core.[61]

The primary benefits of multithreading include hiding latency from memory stalls and improving single-core throughput. By advancing alternative threads during stalls, multithreading reduces idle cycles in the pipeline, which is particularly effective for workloads with irregular memory access patterns. In SMT designs, this can boost instructions per cycle (IPC) by 20-30% on average for server applications, as threads fill resource slots left unused by a single thread.[61]

Prominent examples include Intel's Hyper-Threading Technology, introduced in 2002 on the Xeon processor family, which implemented two-way SMT to achieve up to 30% performance gains in benchmarks like online transaction processing.[61] The IBM POWER5, released in 2004, was an early production dual-core processor with two-way SMT per core, enabling dynamic thread prioritization and resource balancing for enhanced throughput in commercial workloads.[60]

Despite these advantages, multithreading introduces challenges such as resource contention, where threads compete for shared units like caches, potentially increasing miss rates and degrading performance.[58] Fairness in scheduling is another issue, as uneven resource allocation can lead to thread starvation, requiring hardware mechanisms like priority adjustments to ensure equitable progress across threads.[62] SMT throughput can be modeled as the sum of IPC across co-executing threads under shared
resources:

$$\text{Throughput}_{\text{SMT}} = \sum_{i=1}^{N} \text{IPC}_i$$

where $N$ is the number of threads and $\text{IPC}_i$ is the instructions per cycle for thread $i$, reflecting aggregate utilization despite contention.[63]

Design Considerations

Instruction Set Selection

Instruction set selection profoundly shapes microarchitectural design by determining the complexity of instruction decoding, execution pipelines, and overall hardware efficiency. The primary dichotomy lies between Reduced Instruction Set Computing (RISC) and Complex Instruction Set Computing (CISC) paradigms, each imposing distinct trade-offs on processor implementation. RISC architectures prioritize simplicity to facilitate high-performance pipelining and parallel execution, while CISC designs emphasize instruction density at the expense of increased decoding overhead. These choices influence everything from transistor allocation to power consumption in modern processors.[64]

RISC principles center on fixed-length instructions, typically 32 bits, which enable uniform fetching and decoding without variable parsing overhead. This approach adopts a load/store architecture, where only dedicated load and store instructions access memory, while arithmetic and logical operations occur strictly between registers. Such simplicity in operations, which limits instructions to basic register-to-register ALU tasks with few addressing modes, reduces the need for intricate control logic, allowing for shallower pipelines and easier optimization for speed. For instance, architectures like ARM exemplify this by executing most instructions in a single cycle, promoting efficient microarchitectures suited to embedded and mobile applications.[65][66]

In contrast, CISC architectures feature variable-length instructions, often spanning multiple bytes, which demand sophisticated decoding hardware or microcode interpreters to handle diverse formats and semantics. Complex instructions in CISC can perform multiple operations, such as memory access combined with computation, reducing the total instruction count but complicating hardware design.
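One reason variable-length encodings complicate decoding is that instruction boundaries are not known in advance. The Python sketch below contrasts the two cases using a made-up encoding: the 4-byte fixed width and the "first byte holds the instruction length" rule are assumptions for illustration only and do not correspond to ARM, x86, or any other real ISA.

```python
from typing import List

FIXED_WIDTH = 4  # bytes per instruction in the fixed-length (RISC-style) case


def fixed_length_boundaries(code: bytes) -> List[int]:
    """Start offsets are known up front (0, 4, 8, ...) without decoding."""
    return list(range(0, len(code), FIXED_WIDTH))


def variable_length_boundaries(code: bytes) -> List[int]:
    """Start offsets must be discovered one instruction at a time.

    Toy encoding (an assumption for this sketch): the first byte of every
    instruction holds its total length in bytes, from 1 to 15.
    """
    offsets = []
    pos = 0
    while pos < len(code):
        length = code[pos]
        if not 1 <= length <= 15 or pos + length > len(code):
            raise ValueError(f"malformed instruction at offset {pos}")
        offsets.append(pos)
        pos += length  # the next boundary depends on this instruction
    return offsets


if __name__ == "__main__":
    print(fixed_length_boundaries(bytes(12)))
    print(variable_length_boundaries(bytes([1, 3, 0, 0, 2, 0, 1])))
```

In the fixed-length case every decoder slot can be pointed at its instruction independently, whereas the variable-length loop carries a serial dependence from one instruction to the next, which is the property that wide variable-length decoders have to work around.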
The x86 architecture, a quintessential CISC example, incorporates these traits, leading to denser code that minimizes memory bandwidth requirements but necessitates advanced decoders to parse instructions sequentially or in parallel.[64][67]

The trade-offs between RISC and CISC manifest in microarchitectural complexity and performance metrics. RISC enables simpler, faster designs by minimizing decode stages (often just one cycle), facilitating superscalar and out-of-order execution without excessive hardware. ARM-based processors, for example, achieve this through their streamlined instruction set, yielding microarchitectures with lower power draw and easier scalability. Conversely, CISC like x86 allows for code size reduction, with static binaries averaging 0.87 MB compared to 0.95 MB for equivalent ARM code in SPEC INT benchmarks (as of 2013), but at the cost of decoder complexity that can consume multiple cycles for variable-length parsing. This density benefit, however, is offset by the need for micro-op translation, where complex instructions expand to 1.03–1.07 μops on average, increasing dispatch bandwidth demands. Overall, while modern RISC designs can achieve low cycles per instruction comparable to optimized CISC implementations (ISA differences have become negligible with advanced microarchitectures), CISC's enduring appeal stems from legacy compatibility and compact executables, though it elevates hardware costs for decoding.[68][69][70]

Hybrid approaches bridge these paradigms in contemporary designs, particularly in x86 processors, where front-end decoders translate variable-length CISC instructions into fixed-length, RISC-like micro-operations (μops) for backend execution. This micro-op format simplifies scheduling in out-of-order engines, mitigating CISC's decoding bottlenecks through techniques like μop caches that store pre-decoded instructions, reducing fetch overhead by up to 34% in fused operations.
Such adaptations allow x86 to retain code density advantages while adopting RISC-inspired efficiency in the microarchitecture.[67]

The historical shift toward RISC began in the 1980s, sparked by university research funded by the U.S. government and commercialized through workstation vendors. Pioneering efforts at Berkeley produced RISC I in 1982, and a parallel project at Stanford produced MIPS; the Berkeley work in turn influenced SPARC. These designs emphasized simplified instructions to counter the inefficiencies of prevailing CISC systems like VAX. MIPS, commercialized by MIPS Computer Systems, powered high-end workstations and later embedded devices, while Sun Microsystems' SPARC enabled scalable multiprocessing. This "RISC revolution" challenged CISC dominance by demonstrating superior performance through reduced complexity, though CISC persisted in personal computing due to software ecosystems. By the 1990s, falling RAM costs further eroded CISC's code size edge, accelerating RISC adoption in diverse domains.[71][64]

Power and Efficiency

Power consumption in microprocessors arises from two primary components: dynamic power, which results from the switching activity of transistors during computation, and static power, which stems from leakage currents even when the circuit is idle.[72] Dynamic power scales with the square of the supply voltage and linearly with frequency and switching capacitance, making it dominant in active workloads, while static power has become increasingly significant as transistor sizes shrink below 100 nm, contributing up to 40% of total power in some designs.[72] To mitigate these, microarchitectures employ dynamic voltage and frequency scaling (DVFS), which adjusts the supply voltage and clock frequency based on workload demands to reduce both dynamic and static power without severely impacting performance.[73]

Key techniques for power management include clock gating, which inserts logic to disable clock signals to idle functional units, thereby eliminating unnecessary switching and reducing dynamic power by 10–30% in pipelined processors.[74] Power domains partition the chip into isolated voltage islands, allowing independent voltage scaling or shutdown of non-critical sections, such as memory or peripheral units, to optimize overall energy use.[75] Fine-grained shutdown mechanisms, often implemented via power gating with sleep transistors, enable rapid isolation and deactivation of small execution units or clusters during inactivity, cutting static leakage by up to 90% while keeping wakeup latency to a few cycles.[76]

Efficiency in microarchitectures is evaluated using metrics like performance per watt, which quantifies computational throughput relative to power draw, and instructions per joule, which measures the number of executed instructions divided by total energy consumed.[77] In multi-core chips, dark silicon emerges as a constraint where power and thermal budgets prevent simultaneous activation of all transistors, leaving portions of the die powered off (projected to affect over 50% of a chip at 8 nm nodes despite transistor density increases).[78] The two metrics are views of the same quantity: when performance is measured in instructions per second, performance per watt equals instructions per joule, the reciprocal of energy per instruction (EPI). Efficiency therefore improves by minimizing energy per instruction, which can be expressed as average power divided by instruction throughput, EPI = P / (IPC × f).

Modern examples illustrate these principles. ARM's big.LITTLE architecture integrates high-performance "big" cores with energy-efficient "LITTLE" cores, dynamically migrating tasks to balance peak performance and low-power operation, achieving up to 75% energy savings in mobile workloads.[79] Similarly, Intel's Enhanced SpeedStep Technology enables OS-controlled P-states to scale frequency and voltage, reducing average power by 20–50% during light loads on x86 processors.[80]

A major challenge arose from the breakdown of Dennard scaling in the mid-2000s, when voltage reductions stalled due to leakage concerns, causing power density to rise and imposing thermal limits that cap clock frequencies around 4 GHz despite continued transistor scaling.[78] This shift has driven microarchitectural innovations toward parallelism and heterogeneity to sustain performance within fixed power envelopes, typically 100–130 W per chip.[78]

Security Features

Microarchitectural security features address vulnerabilities that exploit hardware-level behaviors, such as speculative execution and memory access patterns, to leak sensitive data across security boundaries. In 2018, researchers disclosed Spectre and Meltdown, two classes of attacks that leverage speculative execution in modern processors to bypass isolation mechanisms like virtual memory. Spectre variants induce branch mispredictions or poison indirect branch targets to speculatively access unauthorized data, using side channels like cache timing to exfiltrate it, affecting processors from Intel, AMD, and ARM. Meltdown exploits out-of-order execution to read kernel memory from user space: the privilege check on a page-table entry is enforced only after dependent instructions have already executed transiently. It primarily impacts Intel x86 architectures but also some ARM and IBM Power designs. These vulnerabilities arise from core microarchitectural elements like branch prediction and out-of-order execution, which prioritize performance but inadvertently enable transient data leaks. Another notable issue is Rowhammer, a DRAM-level disturbance error in which repeated accesses to a memory row flip bits in adjacent rows through cell-to-cell interference, potentially enabling privilege escalation or data corruption in vulnerable systems.

To counter these threats, microarchitectures incorporate speculation barriers and fences to control execution flow and prevent leakage. For instance, the x86 LFENCE instruction acts as a barrier that keeps later instructions from executing until earlier ones have completed; placed after a bounds check, it mitigates Spectre variant 1 by ensuring that the dependent memory access is not performed speculatively. Randomized branch predictors, such as those with stochastic indirect branch handling, reduce the predictability of the speculative paths exploited in Spectre variant 2 by varying predictor outcomes to thwart training-based attacks.
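
As a hedged illustration of the cache-timing channel and the effect of a speculation barrier discussed above, the following toy model simulates a cache in software. The latencies, the class and function names, and the assumption that the branch is always mispredicted are all made up for the example; nothing here measures or attacks real hardware.

```python
HIT_CYCLES = 4      # assumed latency of a cached line
MISS_CYCLES = 200   # assumed latency of an uncached line


class ToyCache:
    """Remembers which probe slots were touched since the last flush."""

    def __init__(self):
        self.cached = set()

    def flush(self):
        self.cached.clear()

    def access(self, slot):
        """Return the simulated latency of touching a slot, then cache it."""
        latency = HIT_CYCLES if slot in self.cached else MISS_CYCLES
        self.cached.add(slot)
        return latency


def mispredicted_read(cache, secret, fenced):
    """Model an out-of-bounds read reached only via a mispredicted branch.

    The architectural result is always discarded. Without a fence, the
    secret-dependent access still runs transiently and leaves a cache
    footprint. With a fence after the bounds check, it never issues.
    """
    if not fenced:
        cache.access(secret)


def recover_secret(cache):
    """Time all 256 probe slots and report the one that hits, if any."""
    fast = [slot for slot in range(256) if cache.access(slot) == HIT_CYCLES]
    return fast[0] if fast else None


def run_attack(secret, fenced):
    cache = ToyCache()
    cache.flush()
    mispredicted_read(cache, secret, fenced)
    return recover_secret(cache)


print("without fence:", run_attack(secret=42, fenced=False))
print("with fence:   ", run_attack(secret=42, fenced=True))
```

The model captures only the core idea: the transient access is invisible architecturally but observable through timing, and a barrier removes the footprint at the cost of serializing execution.
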
Software mitigations like Kernel Page Table Isolation (KPTI) further isolate user and kernel address spaces, but hardware-level redesigns in post-2018 Intel and ARM cores, including enhanced speculation tracking and indirect branch predictor barriers (IBPB), provide more efficient defenses. These evolutions, starting with Intel's 9th-generation Core processors and ARM's Cortex-A76, involve flushing or restricting speculative state on context switches to limit transient execution windows.

Hardware security extensions offer proactive protections through isolated execution environments and memory safeguards. Intel's Software Guard Extensions (SGX) creates encrypted enclaves in main memory, allowing applications to process sensitive data in CPU-protected regions isolated from the OS and hypervisor, with remote attestation to verify enclave integrity. AMD's Secure Memory Encryption (SME) enables page-granular encryption of system memory using a boot-generated key managed by the AMD Secure Processor, defending against physical attacks like cold boot while supporting virtualization via Secure Encrypted Virtualization (SEV). Pointer Authentication, defined in ARMv8.3-A and first shipped in Apple processors in 2018, embeds cryptographic codes in the unused upper bits of pointers to detect corruption or return-oriented programming (ROP) attacks. Return addresses and indirect branch targets are signed with a code computed from the pointer value, a context value, and a secret key, giving low-overhead integrity checks.

These features introduce performance trade-offs, with mitigations often reducing instructions per cycle (IPC) by 5–30% depending on workload and configuration. For example, enabling full Spectre and Meltdown defenses via microcode updates and OS patches can degrade throughput in kernel-intensive tasks by up to 30%, as measured on Windows systems with Intel processors, due to increased serialization and state flushing.
Despite these costs, ongoing redesigns aim to minimize overhead, such as ARM's Branch Target Identification (BTI) in ARMv8.5, which restricts indirect branches to vetted targets without fully disabling speculation.

As of 2025, new microarchitectural vulnerabilities continue to emerge, underscoring the evolving threat landscape. For instance, Intel processors have been affected by flaws such as CVE-2024-28956 and CVE-2025-24495, which enable kernel memory leaks at rates up to 17 Kb/s through side-channel exploitation of processor internals, and CVE-2025-20109, which involves improper isolation in indirect branch predictors. These issues, disclosed in 2024–2025, affect recent Intel architectures and have prompted microcode updates and hardware enhancements in newer cores to improve compartmentalization and reduce leakage risks.[81][82]

References

- https://en.wikichip.org/wiki/intel/microarchitectures/skylake_(client)

- https://en.wikichip.org/wiki/intel/mesh_interconnect_architecture