
A wage is payment made by an employer to an employee for work done in a specific period of time. Some examples of wage payments include compensatory payments such as minimum wage, prevailing wage, and yearly bonuses, and remunerative payments such as prizes and tip payouts. Wages are part of the expenses that are involved in running a business. They are an obligation to the employee regardless of the profitability of the company.

Payment by wage contrasts with salaried work, in which the employer pays an arranged amount at steady intervals (such as a week or month) regardless of hours worked, with commission which conditions pay on individual performance, and with compensation based on the performance of the company as a whole. Waged employees may also receive tips or gratuity paid directly by clients and employee benefits which are non-monetary forms of compensation. Since wage labour is the predominant form of work, the term "wage" sometimes refers to all forms (or all monetary forms) of employee compensation.

Origins and necessary components

Wage labour involves the exchange of money for time spent at work. As Moses I. Finley lays out the issue in The Ancient Economy:

- The very idea of wage-labour requires two difficult conceptual steps. First it requires the abstraction of a man's labour from both his person and the product of his work. When one purchases an object from an independent craftsman ... one has not bought his labour but the object, which he had produced in his own time and under his own conditions of work. But when one hires labour, one purchases an abstraction, labour-power, which the purchaser then uses at a time and under conditions which he, the purchaser, not the "owner" of the labour-power, determines (and for which he normally pays after he has consumed it). Second, the wage labour system requires the establishment of a method of measuring the labour one has purchased, for purposes of payment, commonly by introducing a second abstraction, namely labour-time.[1]

The wage is the monetary measure corresponding to the standard units of working time (or to a standard amount of accomplished work, defined as a piece rate). The earliest such unit of time, still frequently used, is the day of work. The invention of clocks coincided with the elaborating of subdivisions of time for work, of which the hour became the most common, underlying the concept of an hourly wage.[2][3]

Wages were paid in the Middle Kingdom of ancient Egypt,[4] ancient Greece,[5] and ancient Rome.[5] Following the unification of the city-states in Assyria and Sumer by Sargon of Akkad into a single empire ruled from his home city circa 2334 BC, common Mesopotamian standards for length, area, volume, weight, and time used by artisan guilds were promulgated by Naram-Sin of Akkad (c. 2254–2218 BC), Sargon's grandson, including shekels.[6] Codex Hammurabi Law 234 (c. 1755–1750 BC) stipulated a 2-shekel prevailing wage for each 60-gur (300-bushel) vessel constructed in an employment contract between a shipbuilder and a ship-owner.[7][8][9] Law 275 stipulated a ferry rate of 3 gerah per day on a charterparty between a ship charterer and a shipmaster. Law 276 stipulated a freight rate of 2½ gerah per day on a contract of affreightment between a charterer and shipmaster, while Law 277 stipulated a freight rate of ⅙ shekel per day for a 60-gur vessel.[10][11][9]

Determinants of wage rates

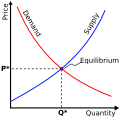

Depending on the structure and traditions of different economies around the world, wage rates will be influenced by market forces (supply and demand), labour organisation, legislation, and tradition.

Wage differences

Even in countries where market forces primarily set wage rates, studies show that there are still differences in remuneration for work based on sex and race. For example, according to the U.S. Bureau of Labor Statistics, in 2007 women of all races made approximately 80% of the median wage of their male counterparts. This is likely due to the supply and demand for women in the market because of family obligations.[12] Similarly, white men made about 84% of the wage of Asian men, and black men 64%.[13] These are overall averages and are not adjusted for the type, amount, and quality of work done.

Effects

Corruption

The wage level of public sector employees affects the frequency of corruption: higher salary levels for public sector workers help reduce corruption. Countries with smaller wage gaps in the public sector have also been shown to have less corruption.[14]

Wages in the United States


Seventy-five million workers earned hourly wages in the United States in 2012, making up 59% of employees.[15] In the United States, wages for most workers are set by market forces, or else by collective bargaining, where a labor union negotiates on the workers' behalf. The Fair Labor Standards Act establishes a minimum wage at the federal level that all states must abide by, among other provisions. Fourteen states and a number of cities have set their own minimum wage rates that are higher than the federal level. For certain federal or state government contracts, employers must pay the so-called prevailing wage as determined according to the Davis–Bacon Act or its state equivalent. Activists have undertaken to promote the idea of a living wage rate that accounts for living expenses and other basic necessities, setting the living wage rate much higher than current minimum wage laws require. The minimum wage rate is there to protect the well-being of the working class.[16]

In the second quarter of 2022, total U.S. labor costs grew 5.2% year over year, the highest growth since the start of the series in 2001.[18]

Definitions

For purposes of federal income tax withholding, 26 U.S.C. § 3401(a) defines the term "wages" specifically for chapter 24 of the Internal Revenue Code:

"For purposes of this chapter, the term “wages” means all remuneration (other than fees paid to a public official) for services performed by an employee for his employer, including the cash value of all remuneration (including benefits) paid in any medium other than cash;" In addition to requiring that the remuneration must be for "services performed by an employee for his employer," the definition goes on to list 23 exclusions that must also be applied.[19]

See also

- Compensation of employees

- Employee benefit (non-monetary compensation in exchange for labor)

- Employment

- Income tax

- Labour economics

- List of countries by average wage

- Labor power

- List of sovereign states in Europe by net average wage

- Marginal factor cost

- Overtime

- Performance-related pay

- Price elasticity of supply

- Proletarian

- Real wages

- Wage labour

- Wage share

- Wage slavery

- Working class

References

- ^ Finley, Moses I. (1973). The ancient economy. Berkeley: University of California Press. p. 65. ISBN 9780520024366.

- ^ Thompson, E. P. (1967). "Time, Work-Discipline, and Industrial Capitalism". Past and Present (38): 56–97. doi:10.1093/past/38.1.56. JSTOR 649749.

- ^ Dohrn-van Rossum, Gerhard (1996). History of the hour: Clocks and modern temporal orders. Thomas Dunlap (trans.). Chicago: University of Chicago Press. ISBN 9780226155104.

- ^ Ezzamel, Mahmoud (July 2004). "Work Organization in the Middle Kingdom, Ancient Egypt". Organization. 11 (4): 497–537. doi:10.1177/1350508404044060. ISSN 1350-5084. S2CID 143251928.

- ^ a b Finley, Moses I. (1973). The ancient economy. Berkeley: University of California Press. ISBN 9780520024366.

- ^ Powell, Marvin A. (1995). "Metrology and Mathematics in Ancient Mesopotamia". In Sasson, Jack M. (ed.). Civilizations of the Ancient Near East. Vol. III. New York, NY: Charles Scribner's Sons. p. 1955. ISBN 0-684-19279-9.

- ^ Hammurabi (1903). "Code of Hammurabi, King of Babylon". Records of the Past. 2 (3). Translated by Sommer, Otto. Washington, DC: Records of the Past Exploration Society: 85. Retrieved June 20, 2021.

234. If a shipbuilder builds ... as a present [compensation].

- ^ Hammurabi (1904). "Code of Hammurabi, King of Babylon" (PDF). Liberty Fund. Translated by Harper, Robert Francis (2nd ed.). Chicago: University of Chicago Press. p. 83. Retrieved June 20, 2021.

§234. If a boatman build ... silver as his wage.

- ^ a b Hammurabi (1910). "Code of Hammurabi, King of Babylon". Avalon Project. Translated by King, Leonard William. New Haven, CT: Yale Law School. Retrieved June 20, 2021.

- ^ Hammurabi (1903). "Code of Hammurabi, King of Babylon". Records of the Past. 2 (3). Translated by Sommer, Otto. Washington, DC: Records of the Past Exploration Society: 88. Retrieved June 20, 2021.

275. If anyone hires a ... day as rent therefor.

- ^ Hammurabi (1904). "Code of Hammurabi, King of Babylon" (PDF). Liberty Fund. Translated by Harper, Robert Francis (2nd ed.). Chicago: University of Chicago Press. p. 95. Retrieved June 20, 2021.

§275. If a man hire ... its hire per day.

- ^ Magnusson, Charlotta. "Why Is There A Gender Wage Gap According To Occupational Prestige?." Acta Sociologica (Sage Publications, Ltd.) 53.2 (2010): 99-117. Academic Search Complete. Web. 26 Feb. 2015.

- ^ U.S. Bureau of Labor Statistics. "Earnings of Women and Men by Race and Ethnicity, 2007" Accessed June 29, 2012

- ^ Demirgüç-Kunt, Asli; Lokshin, Michael; Kolchin, Vladimir (8 April 2023). "Effects of public sector wages on corruption: Wage inequality matters". Journal of Comparative Economics. 51 (3): 941–959. doi:10.1016/j.jce.2023.03.005. hdl:10986/35521.

- ^ "Employees" as a category excludes all those who are self-employed, and this statistic only considers workers over the age of 16. U.S. Department of Labor. Bureau of Labor Statistics (2013-02-26), Characteristics of Minimum Wage Workers: 2012

- ^ Tennant, Michael. "Minimum Wage The Ups & Downs." New American (08856540) 30.12 (2014): 10-16. Academic Search Complete. Web. 26 Feb. 2015.

- ^ "Living Wage Calculator". livingwage.mit.edu. Retrieved 2023-10-02.

- ^ Aeppel, Timothy (August 29, 2022). "North American companies send in the robots, even as productivity slumps". Reuters.

- ^ USC 26 § 3401(a)

Further reading

- Galbraith, James Kenneth. Created Unequal: the Crisis in American Pay, in series, Twentieth Century Fund Book[s]. New York: Free Press, 1998. ISBN 0-684-84988-7

External links

- Lebergott, Stanley (2002). "Wages and Working Conditions". In David R. Henderson (ed.). Concise Encyclopedia of Economics (1st ed.). Library of Economics and Liberty. OCLC 317650570, 50016270, 163149563

- U.S. Bureau of Labor Statistics

- Wealth of Nations – click Chapter 8

- U.S. Department of Labor: Minimum Wage Laws – Different laws by State

- Average U.S. farm and non-farm wage

- Prices and Wages by Decade library guide – Prices and Wages research guide at the University of Missouri libraries

A wage is the monetary compensation paid by an employer to an employee for labor or services rendered, typically calculated on an hourly, daily, or piecework basis as opposed to a fixed salary.[1][2] In economic theory, wages emerge from the interaction of labor supply—workers willing to offer their services at various rates—and labor demand—employers seeking workers based on productivity and marginal revenue product—reaching equilibrium where the quantity of labor supplied equals demanded, assuming competitive markets without distortions.[3][4] Distinctions between nominal wages, the unadjusted dollar amount received, and real wages, nominal wages deflated by the price level to reflect purchasing power, are central to assessing labor compensation's true value over time.[5][6] Empirically, U.S. real median wages grew modestly from 1979 to 2019, with stronger gains at upper percentiles amid debates over factors like technological change, globalization, and policy interventions such as minimum wage laws, which some studies link to reduced employment among low-skilled workers.[7][8] Wages thus serve as a key indicator of economic productivity, income distribution, and living standards, influencing incentives for work, investment in human capital, and overall resource allocation.[9]
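The deflation of nominal wages by the price level can be illustrated with a minimal Python sketch. The function names, the base index of 100, and the wage and index figures are all hypothetical values chosen for illustration; they are not taken from any cited data series.

```python
def real_wage(nominal_wage: float, price_index: float, base_index: float = 100.0) -> float:
    """Deflate a nominal wage by a price index, expressed in base-period money."""
    if price_index <= 0:
        raise ValueError("price_index must be positive")
    return nominal_wage * base_index / price_index


def real_growth(nominal_growth: float, inflation: float) -> float:
    """Exact real growth rate implied by nominal growth and inflation (both as fractions)."""
    return (1.0 + nominal_growth) / (1.0 + inflation) - 1.0


# Hypothetical figures: a $20.00 hourly wage rises 3% to $20.60
# while the price index rises 4%, from 100 to 104.
before = real_wage(20.00, 100.0)
after = real_wage(20.60, 104.0)
print(f"real wage before: {before:.2f}, after: {after:.2f}")
print(f"real growth: {real_growth(0.03, 0.04) * 100:.2f}%")
```

Under these assumed numbers the real wage falls even though the nominal wage rises. The exact real change is slightly smaller in magnitude than the simple difference between the two growth rates, which is why "nominal growth minus inflation" is usually described as an approximation.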

Definitions and Core Concepts

Fundamental Definition

A wage constitutes the remuneration provided by an employer to an employee in exchange for labor services performed, encompassing monetary payments for work done over a defined period such as an hour, day, or output unit.[10] This payment reflects the contractual agreement for the utilization of the employee's productive capacity, excluding self-employed income or returns to capital ownership.[11] Unlike barter or in-kind exchanges prevalent in pre-market economies, modern wages are predominantly denominated in currency, facilitating market transactions and enabling workers to allocate resources toward consumption or savings.[10]

Economically, wages function as the market price of labor, arising from the interaction between workers offering their time and skills (labor supply) and employers seeking to maximize output (labor demand).[12] This price equilibrates to clear the labor market, where the wage level incentivizes sufficient labor provision to match employment needs, grounded in the principle that firms hire additional workers up to the point where the value of their marginal output equals the wage cost. Empirical data from labor markets, such as U.S. Bureau of Labor Statistics records, consistently show wages varying by occupation, skill level, and location, with mean hourly wages across sectors reported at $31.48 in May 2023 based on occupational surveys.[13]

Fundamentally, wages embody the causal link between individual effort and economic production, where higher productivity (driven by technology, education, or capital complementarity) empirically correlates with elevated wage levels, as evidenced by cross-country data showing real wage growth tracking GDP per capita increases over decades.[12] However, this does not imply wages capture the full value of labor contributions, as institutional factors like bargaining power or regulations can distort market-clearing outcomes from pure supply-demand dynamics.[14] Distinctions from total compensation are critical, as wages typically exclude benefits like health insurance or pensions, which augment effective pay but are not direct wage elements.[10]

Nominal versus Real Wages

Nominal wages refer to the unadjusted monetary compensation paid to workers, expressed in the currency's face value at the time of payment, such as dollars per hour or annual salary.[15][5] These wages do not account for variations in purchasing power due to changes in the general price level.[6]

Real wages measure the actual purchasing power of nominal wages, obtained by deflating nominal wages by a price index, typically the Consumer Price Index (CPI), to reflect what goods and services the compensation can buy.[5][6] The formula for real wages is real wage = nominal wage / price level, where the price level is often represented by the CPI or a similar inflation gauge.[5][16] For instance, if nominal wages rise by 3% annually but inflation is 4%, real wages decline by approximately 1%, eroding workers' standard of living.[17]

Economists prioritize real wages over nominal wages to assess true economic welfare and labor market conditions, as nominal increases can mask declines in living standards during inflationary periods.[6][17] In the United States, Bureau of Labor Statistics data indicate that real average hourly earnings for production and nonsupervisory employees rose only modestly in recent years; for example, from August 2024 to August 2025, they increased by 0.7% seasonally adjusted, despite higher nominal gains offset by inflation.[18] Historically, U.S. real wages peaked around 1972 and remained below that level for decades, highlighting how nominal wage growth often fails to fully compensate for price increases in assessing long-term trends.[19] This distinction is critical for policy analysis, as focusing solely on nominal figures can overestimate wage progress amid rising costs.[5][20]

Components of Wage Compensation

Wage compensation, often termed total employee remuneration, comprises the full array of monetary and non-monetary rewards employers provide to workers in exchange for labor services. This includes direct payments, which are cash-based and immediately accessible to employees, and indirect elements, which offer value through deferred or in-kind benefits. Empirical data from the U.S. Bureau of Labor Statistics (BLS) Employer Costs for Employee Compensation survey illustrates that, as of June 2025, direct wages and salaries constituted 70.2% of total hourly compensation costs for civilian workers, averaging $32.07 per hour, while indirect benefits accounted for the remaining 29.8%, at $13.58 per hour.[21] These proportions vary by industry, occupation, and region, with benefits comprising a larger share in sectors like public administration or healthcare due to mandated or negotiated provisions.

Direct compensation primarily consists of wages and salaries, defined as total earnings before deductions, encompassing straight-time pay for hours worked, overtime premiums, shift differentials, commissions, and short-term incentives such as annual bonuses or profit-sharing payouts.[22] For hourly workers, this includes base rates plus any productivity-linked pay; salaried employees receive fixed annual amounts, often prorated. BLS data excludes employer contributions to deferred plans in this category, focusing on immediate cash flows, though some frameworks incorporate equity grants like stock options as direct if they convert to cash equivalents.[23] In practice, direct elements align with marginal productivity, where pay reflects output value, but institutional factors like collective bargaining can inflate components beyond market rates.[24]

Indirect compensation, or benefits, supplements direct pay by mitigating employee risks and enhancing long-term security, often funded by employer contributions that reduce taxable income for workers. Key subcomponents include:

- Paid leave: Vacation, holidays, sick days, and personal time, averaging 7.3% of total compensation costs in the U.S. as of June 2025, with private industry workers receiving about 80 hours of paid vacation annually on average.[21]

- Insurance benefits: Health, life, and disability coverage, representing the largest benefit slice at 8.7% of costs, driven by rising medical premiums that exceeded wage growth in recent decades.[21]

- Retirement and savings: Employer-sponsored pensions and 401(k) matches, at 4.1% of costs, where defined-contribution plans dominate, tying value to investment performance rather than guaranteed annuities.[21]

- Legally required payments: Social Security, Medicare, unemployment insurance, and workers' compensation, comprising 7.8% of costs and functioning as mandatory transfers rather than discretionary perks.[21]
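The shares quoted in this section can be cross-checked with a few lines of arithmetic. The sketch below uses only the dollar and percentage figures stated above; the variable names are illustrative, and small differences from the quoted shares are expected because the dollar amounts are themselves rounded.

```python
# Figures quoted above for civilian workers (June 2025), in dollars per hour.
wages_per_hour = 32.07
benefits_per_hour = 13.58

total_per_hour = wages_per_hour + benefits_per_hour
wage_share = 100 * wages_per_hour / total_per_hour
benefit_share = 100 * benefits_per_hour / total_per_hour

# Benefit categories itemized in the list above, as percent of total compensation.
listed_benefits = {
    "paid_leave": 7.3,
    "insurance": 8.7,
    "retirement_and_savings": 4.1,
    "legally_required": 7.8,
}
listed_total = sum(listed_benefits.values())

# Quoted benefit share (29.8%) minus the itemized categories.
not_itemized = 29.8 - listed_total

print(f"total compensation: ${total_per_hour:.2f} per hour")
print(f"wage share: {wage_share:.2f}%, benefit share: {benefit_share:.2f}%")
print(f"itemized benefits: {listed_total:.1f}%, not itemized: {not_itemized:.1f} points")
```

The recomputed shares land within about a tenth of a percentage point of the quoted 70.2% and 29.8%. The four itemized categories do not add up to the full benefit share, so part of the benefit cost is not broken out in the list.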

Theoretical Frameworks

Supply and Demand Model

In the neoclassical framework, the wage rate in a competitive labor market is determined by the interaction of labor supply and labor demand. Labor demand reflects firms' willingness to hire workers based on the marginal revenue product of labor (MRP_L), which is the additional revenue generated by employing one more unit of labor; firms hire until the wage equals MRP_L, resulting in a downward-sloping demand curve as lower wages allow hiring more workers to expand output.[12][26] Labor supply represents workers' willingness to offer their time, trading off leisure for income; it slopes upward because higher wages incentivize more labor supply through the substitution effect outweighing the income effect for most individuals at typical wage levels.[27][28]

The equilibrium wage occurs where supply equals demand, clearing the market by equating the quantity of labor workers wish to supply with the quantity firms wish to demand, thereby setting both the market wage and employment level.[12][29] Shifts in the demand curve, such as increases from technological improvements raising productivity or higher product demand, elevate equilibrium wages and employment, while supply shifts from demographic changes like population growth lower wages but increase employment.[30] Empirical estimates indicate labor demand is relatively elastic, with elasticities often exceeding 1 in absolute value, implying significant responsiveness to wage changes, whereas supply is more inelastic.[12][31] This model assumes perfect competition, homogeneous labor, and full information, though real markets feature imperfections like monopsony or unions that can deviate outcomes from pure equilibrium predictions.[32]

Marginal Productivity Theory
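The hiring rule in which firms employ labor until the wage equals MRP_L, and the resulting market-clearing wage, can be sketched numerically. Everything specific here is an assumption made for illustration: the production function Q = a * L**alpha, the output price, the linear supply curve, and all parameter values are hypothetical and are not drawn from the cited sources.

```python
def labor_demand(wage: float, price: float = 10.0, a: float = 5.0, alpha: float = 0.5) -> float:
    """Labor hired where wage = MRP_L, for the assumed technology Q = a * L**alpha.

    MRP_L = price * a * alpha * L**(alpha - 1); solving wage = MRP_L for L
    gives the expression below, which falls as the wage rises.
    """
    return (price * a * alpha / wage) ** (1.0 / (1.0 - alpha))


def labor_supply(wage: float, slope: float = 2.0) -> float:
    """Hypothetical upward-sloping linear labor supply curve."""
    return slope * wage


def equilibrium_wage(lo: float = 0.01, hi: float = 1000.0, tol: float = 1e-9) -> float:
    """Find the wage where demand equals supply by bisection.

    Excess demand (demand minus supply) is decreasing in the wage,
    so a positive value means the wage is still below equilibrium.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if labor_demand(mid) > labor_supply(mid):
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


w_star = equilibrium_wage()
print(f"equilibrium wage: {w_star:.3f}")
print(f"equilibrium employment: {labor_supply(w_star):.3f}")
```

Raising `a` or `price` in this sketch shifts the demand curve outward and raises both the equilibrium wage and employment, while raising `slope` shifts supply outward and lowers the wage, in line with the comparative statics described in the model.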

The marginal productivity theory of wages, a cornerstone of neoclassical economics, posits that in competitive labor markets, the wage rate paid to workers equals the value of their marginal product of labor (VMPL). This principle, formalized by American economist John Bates Clark in his 1899 book The Distribution of Wealth: A Theory of Wages, Interest and Profits, argues that factors of production, including labor, receive remuneration equivalent to the additional revenue they generate for the firm.[33][34] Clark's formulation builds on the broader marginalist revolution, integrating concepts of diminishing marginal returns to explain income distribution without relying on class conflict or exploitation narratives.[35]

Under the theory, firms maximize profits by hiring labor up to the point where the wage equals the VMPL, defined as the marginal product of labor (MPL), the increment in output from an additional unit of labor, multiplied by the price of the output. Mathematically, in a production function $Q = f(L, K)$ where $Q$ is output, $L$ is labor, and $K$ is capital, the MPL is $\partial Q / \partial L$, and VMPL is $P \times \mathrm{MPL}$, with $P$ as the output price. This derives the downward-sloping labor demand curve, as diminishing returns ensure that MPL falls with increased employment, leading employers to pay less for additional workers whose contributions add progressively less value.[36][37]

The theory assumes perfect competition in both product and labor markets, homogeneous labor, full information, and mobility of workers, conditions under which no firm can influence wages or prices. Wages thus reflect workers' productive contributions, incentivizing efficiency and aligning pay with economic value added, rather than bargaining power or institutional mandates. Empirical tests, however, reveal deviations; for instance, studies in manufacturing sectors like India's have found wages not fully aligning with estimated MPL, attributed to market imperfections such as monopsony power or rigidities.[38] Despite such challenges, the framework provides a causal benchmark for understanding wage determination, emphasizing productivity as the primary driver over exogenous factors like minimum wages or unions in idealized settings.[39]

Institutional and Bargaining Theories

Institutional theories of wage determination posit that wages are shaped primarily by social institutions, legal frameworks, customs, and power dynamics rather than solely by marginal productivity or supply-demand equilibrium. Pioneered by figures such as John R. Commons in the early 20th century, these theories emphasize the role of collective action, government regulation, and historical precedents in establishing wage norms, viewing labor markets as embedded in broader societal structures that constrain individual bargaining. Commons argued that wages emerge from ongoing "working rules" negotiated through institutional processes, including unions and legislation, which address power imbalances inherent in employment contracts where workers often lack full information or mobility.[40][41] This contrasts with neoclassical models by incorporating ethical and macroeconomic considerations, such as using wage floors to counteract monopsonistic employer power or imperfect competition.[42]

Sidney and Beatrice Webb further developed institutional approaches in the late 19th and early 20th centuries, describing labor markets as arenas of conflict resolved through organized bargaining rather than harmonious market clearing. They contended that wages reflect the relative strength of trade unions, employer associations, and state interventions, integrating insights from economics, sociology, and history to explain deviations from competitive outcomes. Empirical analyses rooted in these theories highlight how institutional factors, such as minimum wage laws justified by labor market imperfections, sustain higher wage levels without necessarily causing widespread unemployment, as evidenced in studies of monopsony conditions where employers hold wage-setting power.[43][44][42]

Bargaining theories complement institutional perspectives by modeling wages as outcomes of negotiations between employers and workers, often formalized in collective agreements that establish wage floors, schedules, and adjustments. These theories, drawing from game-theoretic frameworks like Nash bargaining, assert that wage levels depend on the parties' fallback options, information asymmetry, and external constraints, with unions enhancing worker leverage to secure premiums of 10–20% over non-union wages in comparable roles.[45][46] Cross-country evidence shows collective bargaining coverage reduces wage inequality by compressing differentials, though it correlates with modest employment reductions, particularly for low-skilled workers near the bargaining floor, as firms adjust labor demand to higher costs.[47][48] In decentralized systems, firm-level bargaining yields productivity-linked wage gains, but centralized agreements may amplify rigidity, limiting adjustments to economic shocks.[49] Critics note that bargaining power asymmetries, often favoring employers in non-union settings, lead to wages below competitive levels, underscoring the theories' focus on causal power distributions over idealized market efficiency.[50][51]

Historical Development

Pre-Modern Wage Systems

In ancient Mesopotamia, wage systems emerged as early as the eighteenth century BCE, with the Code of Hammurabi regulating payments for hired laborers to standardize compensation and mitigate disputes. The code specified seasonal daily rates for day laborers at six grains of silver from the New Year until the fifth month (when days were longer and work more intensive), dropping to five grains thereafter, while field laborers and herdsmen received eight gur of corn annually and ox drivers six gur.[52][53] These payments, often in silver shekels or barley equivalent to grain rations, reflected a mixed economy where free workers supplemented temple or palace-organized labor, distinct from slavery but subject to legal caps to prevent exploitation or inflation.[54]

In ancient Egypt, worker compensation centered on state-provided grain rations rather than pure cash wages, particularly for skilled laborers like those at Deir el-Medina who built royal tombs during the New Kingdom (c. 1550–1070 BCE). Monthly allotments included emmer wheat, barley for beer, and other staples scaled by family size and skill level, with evidence of strikes around 1152 BCE under Ramesses III when deliveries were delayed, underscoring rations as de facto wages tied to productivity and pharaonic largesse.[55][56] This system prioritized subsistence security over market negotiation, as laborers were often conscripted corvée workers augmented by professional hires, with grain functioning as currency in a barter-heavy economy.

Classical Greece and Rome featured more monetized day wages for free laborers, coexisting with slavery that dominated large-scale production. In fifth- and fourth-century BCE Athens, unskilled workers earned about one obol per day, while skilled artisans or hoplites commanded one drachma (six obols), sufficient for basic grain but precarious amid urban demand fluctuations.[57] Roman unskilled laborers typically received one to three denarii daily by the late Republic, with Emperor Diocletian's Edict on Maximum Prices in 301 CE attempting to cap farm workers at 25 denarii per day (including maintenance) to combat inflation, though enforcement failed amid economic strain.[58][59] These wages, often supplemented by in-kind benefits like food, highlighted causal links between labor scarcity, military needs, and nominal pay, yet real purchasing power stagnated due to grain price volatility and reliance on seasonal urban employment.

Medieval European wage systems evolved amid feudal hierarchies, where serfs owed fixed labor services (corvée) to lords in exchange for land use, limiting free wage labor to artisans, casual farm hands, and urban trades. In thirteenth-century England, day laborers earned two pence daily, equivalent to about 480 pence annually assuming 240 workdays, often in cash or kind like ale and bread, reflecting customary rates before market forces intensified.[60] The Black Death (1347–1351) disrupted this by halving populations, boosting survivor bargaining power and doubling or tripling real wages in subsequent decades (for example, English agricultural rates rose from three to six pence daily by 1400). This prompted the Statute of Labourers (1351) to mandate pre-plague caps, though evasion via in-kind payments and regional variations persisted.[61][62] This shift marked a transition toward proto-capitalist labor markets, as labor shortages eroded serfdom and elevated cash wages, particularly in wool and cloth sectors, while in-kind remuneration (e.g., board for servants) remained prevalent to bind workers amid institutional constraints.[63][64]

Industrial Era Transformations

The Industrial Revolution, commencing in Britain around 1760 and spreading to continental Europe and North America by the early 19th century, fundamentally altered wage systems by replacing artisanal, guild-regulated, and agrarian labor arrangements with factory-based wage employment. Prior to industrialization, most workers operated under systems of self-employment, sharecropping, or apprenticeships where compensation often blended fixed payments with in-kind benefits or profit shares; wages, when present, were typically negotiated locally under guild oversight that limited competition and entry.[65] The advent of mechanized production, powered by steam engines and waterwheels, concentrated labor in urban factories, compelling workers to sell their labor time for fixed monetary wages, often on a daily or piece-rate basis, to capitalists who owned the means of production.[66] This proletarianization decoupled income from land or tools, making wages the primary survival mechanism for the emerging industrial working class, which expanded rapidly as rural migrants and displaced artisans sought employment.[67] Wage structures evolved to accommodate mass production and division of labor, as theorized by Adam Smith in 1776, where specialized tasks boosted productivity but initially depressed unskilled wages due to surplus labor supply. 
Factory wages were generally higher than agricultural earnings—British farm laborers earned about 8-10 shillings weekly in the late 18th century, while factory operatives could command 15-20 shillings—but this premium came amid 12-16 hour shifts, six days a week, and hazardous conditions that eroded effective purchasing power.[67] Real wages in Britain, adjusted for cost-of-living changes including rising urban food and housing prices, grew modestly or stagnated from 1770 to 1820, with estimates showing only 0-15% cumulative increase for blue-collar workers despite technological advances, largely offset by population growth from 6.5 million in 1750 to 21 million by 1851.[68][69] Skilled artisans, such as machinists, retained wage premiums of 20-50% over unskilled laborers, while women and children—comprising up to 50% of textile workforces—received 50-60% less than adult males, reflecting discriminatory bargaining power and physical task differences.[70] Post-1820, real wages accelerated, rising 1-2% annually in Britain through the mid-19th century as productivity gains from railways and iron production outpaced demographic pressures, enabling broader consumption of goods like cotton clothing and tea.[68] This lagged improvement fueled early labor agitation, including the Luddite riots of 1811-1816 against wage-undercutting machinery and the formation of trade unions, such as the 1824 repeal of Britain's Combination Acts, which legalized collective bargaining to counter employer monopsony.[65] In the United States, where industrialization intensified after 1830, immigrant influxes similarly suppressed wages initially, but by 1850, manufacturing wages averaged $1 daily versus $0.50-0.75 for farmhands, though regional variations persisted with Southern wages lagging due to slavery's distortion of free labor markets.[71] These transformations laid the groundwork for modern wage economies, where market competition and institutional reforms gradually aligned 
remuneration more closely with marginal productivity, albeit unevenly across sectors and demographics.[72]

20th and 21st Century Shifts

In the early 20th century, industrialization and mass production techniques, such as those introduced by Henry Ford in 1914 with the $5 workday, significantly boosted nominal wages for manufacturing workers, enabling broader consumer purchasing power.[73] Unionization rates rose, peaking at around 35% of the non-agricultural workforce by the 1950s, which correlated with compressed wage inequality through collective bargaining that standardized pay scales.[74] Real wages grew steadily, increasing by an average of 2-3% per year from 1900 to 1950, driven by productivity gains from electrification and assembly lines.[73]

The period from the end of World War II through the 1970s marked a "golden age" of wage expansion in developed economies, particularly the United States, where real median family income rose by over 80% from 1947 to 1973, closely tracking productivity growth.[75] This period featured strong labor market institutions, including high union density and government policies like the GI Bill, which expanded skilled labor supply while maintaining wage floors.[73] However, from the late 1970s, median real wages stagnated; U.S. median hourly wages increased only about 8.8% from 1979 to 2019, despite productivity doubling in the same timeframe, leading to debates over a "decoupling" where gains accrued disproportionately to capital owners and top earners.[7][76] Critics argue this divergence stems from measurement issues in total compensation or offshoring, rather than inherent decoupling, as broader pay including benefits shows less disparity.[77]

The 1980s onward saw rising wage inequality due to skill-biased technological change, which increased the college wage premium from 40% in 1979 to over 70% by the 2000s, rewarding high-skill workers in tech and finance while polarizing low-skill wages.[78] Globalization, including trade liberalization post-1980, contributed to wage pressure on manufacturing jobs, accounting for roughly 15% of U.S.
income inequality rise in the early 1980s before effects waned.[79] Concurrent union decline, from 34% private-sector male membership in 1973 to 8% by 2007, exacerbated inequality, explaining 15-20% of the increase in male wage dispersion through reduced bargaining power.[80][81]

Into the 21st century, median weekly earnings for full-time U.S. workers reached $1,196 by Q2 2025 (in current dollars), with modest real gains during the post-2009 recovery, but persistent gaps between productivity and typical worker pay highlight structural shifts like automation and gig work eroding traditional wage ladders.[82] Occupational skill premia narrowed globally from the 1950s to 1980s before widening again through the 2000s, reflecting uneven adaptation to technological demands.[83] These trends underscore causal factors including institutional erosion and market integration over policy-driven narratives.

Determinants of Wages

Market Forces

In competitive labor markets, wages are established at the equilibrium point where the quantity of labor supplied by workers equals the quantity demanded by employers. The labor supply curve slopes upward, indicating that higher wages incentivize more individuals to enter or increase hours worked, while the labor demand curve slopes downward, reflecting that firms hire additional workers up to the point where the wage equals the marginal revenue product of labor.[12] This framework, rooted in neoclassical economics, posits that deviations from equilibrium lead to adjustments through unemployment or wage changes until balance is restored.[84] Shifts in labor supply influence equilibrium wages inversely. An increase in supply, such as from higher immigration rates, expands the available workforce, typically depressing wages for competing native workers, particularly those with lower skills. Empirical analysis by George Borjas estimates that a 10 percent rise in immigrant supply reduces wages for competing U.S. natives by 3 to 4 percent, with stronger effects on high school dropouts.[85] Conversely, demographic trends like population aging contract labor supply by reducing the working-age population, exerting upward pressure on wages; research indicates this dynamic has contributed to wage growth in aging economies by limiting available workers.[86] Other studies, such as those by Giovanni Peri, report minimal aggregate wage impacts from immigration due to native worker adjustments and complementary skill effects, though these findings are contested for underestimating short-term displacement in specific labor segments.[87] Labor demand shifts, driven by productivity enhancements, positively affect wages. Technological advancements and capital investments raise the marginal productivity of labor, shifting the demand curve rightward and elevating equilibrium wages. 
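The supply-and-demand reasoning above can be sketched numerically. The following is a minimal illustration, assuming straight-line supply and demand curves; the `LinearCurve` class, the `equilibrium` helper, and all of the numbers are hypothetical and chosen only to keep the arithmetic simple, not drawn from any cited study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearCurve:
    """Quantity of labor as a linear function of the wage: q = intercept + slope * wage."""
    intercept: float
    slope: float

    def quantity(self, wage: float) -> float:
        return self.intercept + self.slope * wage


def equilibrium(supply: LinearCurve, demand: LinearCurve) -> tuple[float, float]:
    """Return (wage, employment) at which quantity supplied equals quantity demanded."""
    if supply.slope <= 0 or demand.slope >= 0:
        raise ValueError("expected upward-sloping supply and downward-sloping demand")
    # Solve supply.intercept + supply.slope * w == demand.intercept + demand.slope * w
    wage = (demand.intercept - supply.intercept) / (supply.slope - demand.slope)
    return wage, supply.quantity(wage)


# Hypothetical baseline market (illustrative numbers only).
supply = LinearCurve(intercept=0.0, slope=10.0)    # more labor offered as the wage rises
demand = LinearCurve(intercept=300.0, slope=-5.0)  # less labor demanded as the wage rises
base_wage, base_jobs = equilibrium(supply, demand)

# A larger workforce: more labor is offered at every wage (supply shifts outward).
more_supply = LinearCurve(intercept=30.0, slope=10.0)
wage_after_supply, jobs_after_supply = equilibrium(more_supply, demand)

# Higher productivity: more labor is demanded at every wage (demand shifts outward).
more_demand = LinearCurve(intercept=360.0, slope=-5.0)
wage_after_demand, jobs_after_demand = equilibrium(supply, more_demand)

print(f"baseline:      wage={base_wage:.2f}, employment={base_jobs:.0f}")
print(f"supply shift:  wage={wage_after_supply:.2f}, employment={jobs_after_supply:.0f}")
print(f"demand shift:  wage={wage_after_demand:.2f}, employment={jobs_after_demand:.0f}")
```

In this toy market the outward supply shift lowers the equilibrium wage while raising employment, and the outward demand shift raises both. This is the direction of effects the competitive model predicts. The size of the effects depends entirely on the assumed slopes.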
Aggregate data across OECD countries reveal a positive correlation between productivity growth and real wage increases, with productivity rises preceding and enabling wage gains in competitive settings.[88] For instance, skill-biased technological change has disproportionately boosted demand for educated workers, contributing to relative wage premiums for higher-skilled labor since the 1960s, as documented in supply-demand decompositions of U.S. wage structure changes.[89] However, monopsonistic market power in some sectors can dampen wage responses to productivity gains, though empirical evidence underscores that competitive pressures generally align wages closer to marginal products over time.[90]

Market concentration and entry barriers also modulate these forces; greater firm competition intensifies labor demand responsiveness to productivity, preventing wage suppression, while barriers to labor mobility, such as geographic or regulatory constraints, can distort local equilibria, leading to persistent wage differentials until arbitrage occurs.[91] Overall, these dynamics illustrate how exogenous shocks to supply or demand propagate through price adjustments, with empirical validations confirming the model's predictive power in explaining wage variations absent institutional interventions.[92]

Individual Productivity Factors

Individual productivity factors encompass attributes inherent to or developed by workers that enhance their output per unit of input, thereby influencing their marginal revenue product and, in turn, their equilibrium wage under competitive labor market conditions. These factors, rooted in human capital accumulation, include education, work experience, cognitive abilities, and health status, each contributing to variations in worker efficiency and compensable value. Empirical analyses consistently demonstrate a positive association between such factors and wages, though market frictions and measurement challenges can introduce discrepancies between productivity gains and pay.[93] Education represents a primary investment in human capital, augmenting skills applicable to production processes and yielding measurable wage premiums. Meta-analyses and instrumental variable estimates indicate that each additional year of schooling correlates with 8-13% higher hourly earnings, reflecting enhanced problem-solving, technical proficiency, and adaptability that boost output. This return persists across life-cycle stages, with higher education enabling access to roles demanding complex tasks and innovation, though diminishing marginal gains occur beyond tertiary levels in saturated markets.[94] Skill-specific training, such as vocational programs, further amplifies productivity by aligning capabilities with job requirements, evidenced by 7-19% wage uplifts from experience-integrated apprenticeships one year post-graduation.[95] Work experience accumulates tacit knowledge and on-the-job learning, modeled in the Mincer earnings function as a quadratic term where wages rise with tenure up to approximately 40 years of age before plateauing due to skill depreciation. 
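The Mincer earnings function mentioned above can be written as a short calculation. This is a sketch under stated assumptions. The coefficient values are hypothetical, picked so that the schooling return sits inside the 8-13% range quoted above and the profile peaks near age 40 for a worker with 12 years of schooling. The `school_start_age` offset of 6 is a common convention for computing potential experience, not something the text specifies.

```python
import math


def potential_experience(age: float, schooling_years: float,
                         school_start_age: float = 6.0) -> float:
    """Potential experience: years since leaving school (assumed convention)."""
    return max(age - schooling_years - school_start_age, 0.0)


def mincer_log_wage(schooling_years: float, experience: float,
                    intercept: float = 2.0,    # hypothetical baseline log wage
                    b_school: float = 0.10,    # hypothetical return per year of schooling
                    b_exp: float = 0.044,      # hypothetical linear experience term
                    b_exp_sq: float = -0.001   # hypothetical (negative) quadratic term
                    ) -> float:
    """Log hourly wage: intercept + b_school*S + b_exp*X + b_exp_sq*X^2."""
    return (intercept
            + b_school * schooling_years
            + b_exp * experience
            + b_exp_sq * experience ** 2)


def peak_experience(b_exp: float = 0.044, b_exp_sq: float = -0.001) -> float:
    """Experience level at which the quadratic profile reaches its maximum."""
    return -b_exp / (2.0 * b_exp_sq)


if __name__ == "__main__":
    for age in (25, 40, 55):
        x = potential_experience(age, schooling_years=12)
        wage = math.exp(mincer_log_wage(12, x))
        print(f"age {age}: experience={x:.0f} years, predicted hourly wage={wage:.2f}")
    print(f"profile peaks at {peak_experience():.0f} years of experience")
```

Because the dependent variable is the logarithm of the wage, a schooling coefficient of 0.10 corresponds to a wage roughly 10.5% higher per additional year, and the negative quadratic term is what produces the plateau.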
This framework, validated across datasets, posits that potential experience (age minus schooling) explains 20-30% of log wage variance, as repeated exposure refines efficiency and reduces errors in task execution.[96][97] Empirical extensions confirm that early-career experience yields steeper wage trajectories, particularly in dynamic sectors, though mismatches between acquired skills and job demands can erode these benefits, leading to penalties akin to overeducation.[98]

Cognitive abilities, often proxied by IQ or aptitude tests, exert a causal influence on productivity through superior information processing and decision-making, correlating with higher wages independent of formal education. Cross-national studies in low- and middle-income contexts find that a one-standard-deviation increase in cognition scores is associated with 4.5% higher wages, while U.S. data link each IQ point to $616 in additional annual income after controlling for socioeconomic factors.[99][100] This linkage holds robustly up to upper-middle income thresholds but attenuates among top earners, where non-cognitive traits like persistence may dominate.[101]

Health status directly impacts physical and mental capacity for sustained output, with poorer conditions reducing labor supply and efficacy. European panel data reveal that self-reported health deficits lower wages by 5-10% via diminished stamina and absenteeism, while interventions improving nutrition or medical access in developing economies elevate productivity metrics like daily earnings by up to 20%.[102][103] Aggregate evidence underscores that healthier workers command premia reflecting their reliability and output consistency, though causality is bidirectional as low wages can exacerbate health declines.[104]

External Institutional Influences

Employment protection legislation (EPL), which imposes costs on dismissing workers such as mandatory notice periods and severance payments, elevates wages by increasing firms' hiring selectivity and reducing turnover risks. Empirical analysis of Portuguese firm-level data from 1996 to 2000 shows that an additional month of "dormant" notice—unpaid time during which workers cannot seek new employment—raises wages by approximately 3%. [105] Similar quasi-experimental evidence from Italy's 1990 EPL reform indicates heterogeneous wage effects, with protected workers experiencing gains offset by reduced employment probabilities for low-skilled groups. [106] These regulations shift labor demand curves inward for at-risk hires, compelling higher compensation to attract and retain suitable candidates, though benefits accrue unevenly across skill levels. [107] Generous unemployment insurance (UI) systems, characterized by high replacement rates of prior earnings, influence wages by bolstering workers' reservation wages—the minimum acceptable pay for job acceptance. Cross-country OECD data from 1960 to 1994 reveal that higher UI replacement rates correlate with elevated labor costs, as benefits enable prolonged job searches and resistance to low-wage offers, thereby pushing equilibrium wages upward. In the U.S., where average replacement rates hover below 40% of prior wages, supplemental federal programs during recessions have temporarily exceeded 100% for many claimants, amplifying this effect and contributing to wage stickiness in recoveries. [108] [109] However, prolonged high benefits can extend unemployment durations, indirectly constraining wage growth for remaining employed workers through reduced labor market fluidity. [110] Occupational licensing requirements, enforced by state or professional boards, restrict labor supply in regulated fields, driving up wages for incumbents via entry barriers like exams, fees, and experience mandates. U.S. 
data from the 2015 Current Population Survey indicate licensed workers earn a 7.5% hourly wage premium over unlicensed peers in comparable roles, with premiums reaching 18% when controlling for observables like education.[111][112] Long-standing licenses (over 30 years) yield about 4% higher wages relative to unlicensed states for the same occupation, concentrated in lower-skill jobs where supply constraints are most binding.[113] While intended to ensure quality, these institutions often exceed necessity, inflating costs without proportional productivity gains and disproportionately benefiting established practitioners.[114]

Product market regulations, including barriers to firm entry and antitrust enforcement laxity, indirectly suppress wages by fostering monopsony power in labor markets. Theoretical and empirical models show that stringent entry regulations reduce firm numbers, enabling employers to pay below competitive levels as workers face fewer alternatives.[115] Cross-country evidence links higher product market barriers to lower labor shares and wages, with deregulation in advanced economies from 1970 to 2013 boosting labor income via intensified competition and improved matching.[116][117] In sectors with elevated markups from regulation, wage compression occurs as firms capture rents rather than sharing them with labor, underscoring how non-labor institutions shape distributional outcomes.[118]

Wage Differentials

Occupational and Skill Variations

Wages differ substantially across occupations due to variations in skill requirements, complexity of tasks, and associated productivity levels. Higher-skilled occupations, such as those in management, professional services, and engineering, command premium pay reflecting greater marginal contributions to output, while lower-skilled roles in service or manual labor yield lower compensation aligned with replaceable labor inputs. Data from the U.S. Bureau of Labor Statistics' May 2024 Occupational Employment and Wage Statistics survey illustrate this: management occupations averaged an annual mean wage of $131,930, computer and mathematical occupations $108,020, and architecture and engineering roles $97,710, compared to $50,090 for production occupations and $32,120 for food preparation and serving-related work.[119] [120] These gaps persist because employers compensate based on expected value added, with skilled tasks enabling innovation, efficiency, or specialized problem-solving not feasible by unskilled labor.[121] Skill variations, encompassing education, training, and cognitive abilities, explain much of occupational wage dispersion through human capital accumulation. 
Empirical evidence shows a robust positive return to skill investment: each additional year of education correlates with 8-12% higher earnings, driven by enhanced productivity rather than signaling alone.[94][122] In the U.S., the college wage premium (the earnings differential for bachelor's degree holders versus high school graduates) stood at approximately $32,000 annually in 2024, near historic highs, with lifetime earnings for college completers exceeding non-graduates by over $1 million after accounting for tuition costs.[123][124] This premium has widened since the 1980s due to skill-biased technological change, which disproportionately boosts demand for workers adept in abstract reasoning and information processing, outpacing supply responses.[125] Overeducation in low-skill jobs incurs wage penalties of 10-20%, underscoring mismatch costs, while undereducation in high-skill roles amplifies premiums through scarcity.[126]

Cross-occupation evidence confirms causality via productivity: skilled workers in complex roles, like software developers or physicians, generate outsized revenues justifying compensation, whereas routine tasks in assembly or retail allow wage compression from labor abundance.[127] International data reinforce this, with OECD countries showing skill premia of 40-60% higher wages for tertiary-educated workers, moderated by institutional factors like trade openness but rooted in relative supply-demand imbalances.[128] Institutional biases in academic studies, often overlooking supply-side rigidities, may overemphasize demand shocks, yet raw wage data consistently validate productivity-driven differentials over egalitarian narratives.[129]

Geographic and Sectoral Differences

Wages vary substantially across geographic regions due to disparities in economic productivity, cost of living, labor supply, and regulatory environments. Internationally, average annual wages in high-income OECD countries significantly outpace those in developing economies; for instance, the United States recorded an average gross annual wage of $82,932 for full-time employees in 2024, compared to lower figures in emerging markets like Mexico, where OECD data indicate averages around $18,000.[130][131] These differences stem from higher capital-labor ratios and technological adoption in advanced economies, enabling greater marginal productivity per worker.[132] Within nations, regional wage gaps persist, often correlating with urbanization and industry concentration. In the United States, Bureau of Labor Statistics data for 2024 show average weekly wages exceeding $1,300 in high-cost states like California and New York, versus under $1,000 in southern states such as Mississippi, reflecting agglomeration effects in tech and finance hubs versus agriculture-dominated areas.[133][13] Similar patterns appear globally, with the International Labour Organization noting that urban wages in Asia and Africa average 20-30% higher than rural counterparts, driven by access to markets and infrastructure.[134] Sectoral differences arise from variations in skill demands, risk exposure, and market competition. 
In the U.S., 2024 BLS figures indicate average hourly earnings of approximately $52 in utilities and $48 in mining, contrasting with $20 in leisure and hospitality, attributable to capital-intensive operations and specialized labor in the former.[135][136] Globally, the ILO's 2024-25 Wage Report highlights that manufacturing and services sectors in advanced economies yield higher wages than agriculture, with real wage growth in industry outpacing primary sectors by 1-2% annually in recent years, underscoring productivity-driven remuneration.[132] These disparities incentivize labor mobility toward higher-paying sectors like information technology, where U.S. wages average over $60 hourly for software roles.[137]

Demographic Disparities

In the United States, full-time wage and salary workers who are women earned a median of $1,005 per week in 2023, compared to $1,202 for men, representing 83.6% of male earnings.[138] This raw disparity, often cited in public discourse, narrows substantially when accounting for observable factors such as occupation, hours worked, education, and labor market experience; analyses using panel data from 1980 to 2010 indicate that convergence in human capital investments and work patterns explains the bulk of the decline in the gap over time, leaving a residual of approximately 5-10% potentially linked to unmeasured choices or discrimination.[139] Empirical studies emphasize that women's greater propensity for career interruptions due to childbearing and preference for flexible or part-time roles—often trading off higher pay for work-life balance—account for much of the remaining difference, rather than systemic pay discrimination within comparable roles.[139] Racial and ethnic wage gaps persist, with Black full-time workers earning approximately 75.6% of White workers' median hourly wages in 2019, while Hispanic workers earned around 80% and Asian workers exceeded White earnings by about 10-15% in median terms.[140] [141] These disparities correlate strongly with differences in parental income, neighborhood quality, family structure, and cognitive skill measures from childhood, as evidenced by longitudinal data tracking nearly the entire U.S. 
population from 1989 to 2015; for instance, Black children born into the bottom income quintile have only a 2.5% chance of reaching the top quintile as adults, compared to 10.6% for White children, driven by causal factors like single-parent households and lower intergenerational mobility rather than labor market discrimination alone.[142] Adjusting for education and occupation reduces the Black-White gap by 30-50%, though a residual persists, attributable to pre-market factors such as school quality and cultural norms influencing labor supply and productivity.[143]

Wages follow an inverted-U pattern over the life cycle, with earnings rising through prime working years due to accumulated experience and peaking around ages 45-54 before declining amid health limitations or skill obsolescence.[144] U.S. Bureau of Labor Statistics data for 2025 show median weekly earnings for full-time workers aged 25-34 at approximately $1,100, increasing to $1,400 for those 45-54, then falling to $1,159 weekly ($60,268 annually) for those 65 and older.[145][146] This trajectory reflects human capital accumulation models, where younger workers invest in on-the-job training, mid-career workers reap returns, and older cohorts face reduced bargaining power or exit from full-time roles, with cross-sectional profiles exaggerating peaks due to cohort effects like education expansions.[144] Disparities by age intersect with other demographics; for example, the gender gap widens for older workers (an earnings ratio of 76.7% for ages 55+ in 2024), linked to cumulative effects of career breaks.[147]

Policy Interventions

Minimum Wage Regulations

Minimum wage regulations establish a government-mandated floor on the hourly wages that employers must pay to covered workers, typically justified as a means to ensure basic living standards and curb exploitative pay practices. These laws vary by jurisdiction, with national, state, or sectoral minimums enforced through labor departments, often with penalties for non-compliance such as fines or back pay orders. Over 100 countries implement such policies, with rates ranging from approximately $46 monthly in Nigeria to $3,254 in Luxembourg as of 2025, reflecting differences in economic development and cost of living.[148] In the United States, the federal minimum wage originated with the Fair Labor Standards Act (FLSA) of 1938, which set an initial rate of $0.25 per hour for workers engaged in interstate commerce, covering about 20% of the workforce at inception.[149] The rate has been raised periodically through congressional legislation, reaching $7.25 per hour on July 24, 2009, and remaining unchanged since despite inflation eroding its real value to levels below prior peaks adjusted for purchasing power.[149] States may enact higher minimums, with 30 states and the District of Columbia exceeding the federal level as of 2025; for instance, Washington state's rate stands at $16.66, while California mandates $16.00, enforced via state labor agencies that supersede the federal floor where applicable.[150] Regulations include exemptions and subminimum rates to accommodate specific labor market conditions. In the U.S., tipped employees receive a cash wage as low as $2.13 per hour federally, provided tips bring total earnings to at least $7.25, with employers liable for shortfalls; full-time students and certain apprentices may qualify for rates up to 85% of the minimum for limited periods. 
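The tipped-employee rule described above reduces to a small calculation: the employer pays the cash wage, and if the cash wage plus tips falls short of the full minimum, the employer owes the difference. The sketch below is a simplified illustration using the federal figures quoted in the text. The `employer_shortfall` function and the sample hours and tip amounts are hypothetical, and the calculation ignores state rules, overtime, and other details of actual compliance.

```python
from decimal import Decimal

FEDERAL_MINIMUM = Decimal("7.25")   # federal hourly minimum cited in the text
TIPPED_CASH_WAGE = Decimal("2.13")  # federal cash wage for tipped employees cited in the text


def employer_shortfall(hours: Decimal, tips: Decimal,
                       cash_wage: Decimal = TIPPED_CASH_WAGE,
                       minimum: Decimal = FEDERAL_MINIMUM) -> Decimal:
    """Amount the employer must add so that cash wage plus tips reaches the minimum."""
    required = minimum * hours          # what the worker must receive in total
    received = cash_wage * hours + tips  # cash wage paid plus tips actually earned
    return max(required - received, Decimal("0"))


if __name__ == "__main__":
    # Hypothetical slow period: 40 hours, $150 in tips, so the employer owes a top-up.
    print(employer_shortfall(Decimal("40"), Decimal("150")))
    # Hypothetical busy period: 40 hours, $250 in tips, so no top-up is owed.
    print(employer_shortfall(Decimal("40"), Decimal("250")))
```

`Decimal` is used instead of floating point so that currency amounts stay exact to the cent.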
Youth workers under 20 can be paid $4.25 for the first 90 days of employment under federal rules, while small businesses with annual revenue under $500,000 and certain seasonal employers (e.g., amusement parks operating fewer than seven months yearly) face partial exemptions from coverage. Some jurisdictions index rates to inflation, such as New York's automatic adjustments tied to consumer price indices since 2016, aiming to maintain real value without legislative intervention.[151]

Internationally, implementation differs; for example, Australia's national system sets industry-specific awards via the Fair Work Commission, with a base hourly rate of AU$24.10 (about US$15.80) effective July 2024, incorporating penalty rates for unsocial hours.[152] In the European Union, minimums are national competencies, with 22 of 27 member states mandating them as of 2025, ranging from €551 monthly in Bulgaria to €2,704 in Luxembourg, often excluding sectors like domestic work or apprenticeships.[153] Enforcement relies on inspections and reporting, though compliance varies, with informal economies in developing nations posing challenges to effective application.[154]

Unionization and Collective Bargaining

Unionization refers to the process by which workers organize into labor unions to represent their collective interests, primarily through collective bargaining agreements that negotiate terms of employment, including wages. In the United States, this framework was formalized by the National Labor Relations Act of 1935, which grants private-sector employees the right to unionize and bargain collectively with employers. Collective bargaining typically results in contracts specifying wage scales, often above market rates, in exchange for concessions on work rules, productivity measures, or employment guarantees. Empirical studies consistently find that union membership confers a wage premium, estimated at 10 to 15 percent on average for U.S. workers after adjusting for observable characteristics like skill and experience.[155] This premium arises from unions' ability to exert monopsony counter-power against employers, reducing wage dispersion within covered sectors and compressing pay structures to favor lower-skilled members.[156] However, the premium varies by industry, tenure, and economic conditions; for instance, it has narrowed in recent decades due to declining union density and increased firm resistance.[157] Spillover effects, where non-union employers raise wages to avoid organization, were more pronounced historically but have diminished as union coverage fell from 20.1 percent of workers in 1983 to 9.9 percent in 2024.[158][159] While collective bargaining elevates wages for union insiders, it often imposes higher labor costs on firms, leading to reduced employment levels and firm survival rates. 
Matched employer-employee data from union elections show that successful unionization decreases establishment employment by altering hiring practices and increasing layoffs, with effects persisting over years.[160] Theoretical models predict this outcome in competitive labor markets, where above-market wages exclude marginal workers, raising unemployment duration particularly among youth and low-skilled groups.[161] Productivity impacts are mixed; some analyses link unions to higher firm-level output per worker through better training and reduced turnover, but others indicate net cost increases that hinder competitiveness and encourage automation or offshoring.[162][163]

In sectors with high union penetration, such as manufacturing and public services, collective bargaining has compressed wage differentials but contributed to structural declines as firms relocate to right-to-work states or abroad. For example, private-sector union density dropped to 6 percent by 2022, correlating with shifts toward flexible labor markets that prioritize individual productivity over standardized contracts.[164] Critics argue that institutional protections for unions distort market signals, favoring incumbent workers at the expense of overall employment growth, while proponents cite reduced inequality within unionized workforces, though broader evidence questions net societal gains given employment trade-offs.[165][47]

Fiscal Policies Affecting Wages

Fiscal policies, comprising government taxation and expenditure decisions, influence wages primarily by shaping labor supply incentives, capital investment, and aggregate demand dynamics. Taxation on labor and capital alters the after-tax returns to work and productivity-enhancing investments, while public spending can stimulate short-term demand for labor but often exerts downward pressure on real wages through resource crowding or inflationary effects. Empirical analyses using structural vector autoregressions indicate that fiscal expansions, such as increases in government purchases, raise output and employment hours yet typically reduce real product wages and labor productivity in the private sector, as firms face higher input costs without proportional output gains.[166][167] Personal income and payroll taxes directly diminish net wages and distort labor supply decisions. Higher marginal tax rates on labor income lead to reduced hours worked in formal markets, with individuals reallocating time to untaxed household production, thereby constraining overall wage growth potential.[168] Payroll tax hikes, such as those analyzed in U.S. state-level variations, have been shown to lower both employment levels and gross wages, with incidence partially borne by workers rather than employers.[169] Cross-country evidence from OECD nations further reveals that progressive tax structures correlate with slower real wage growth, as reduced progressivity—lowering effective rates on higher earners—fosters incentives for skill accumulation and market participation.[170] Corporate income taxes indirectly affect wages by influencing firm profitability, investment, and labor demand. 
Economic models and empirical studies estimate that workers bear 20-50% of the corporate tax burden through depressed pre-tax wages, as higher taxes reduce capital inflows and productivity growth that would otherwise elevate labor compensation.[171] For example, international comparisons demonstrate that jurisdictions with lower corporate tax rates exhibit stronger wage growth trajectories, consistent with capital mobility shifting investments toward tax-favorable locations and bidding up worker pay.[172] The 2017 U.S. Tax Cuts and Jobs Act, which reduced the corporate rate from 35% to 21%, provides a case study: while long-run analyses project wage gains for average workers via repatriated capital and investment, short-term evaluations from left-leaning sources attribute limited broad-based increases primarily to executives and shareholders, highlighting debates over incidence distribution amid methodological differences in capturing dynamic effects.[173][174] Government expenditure policies, including public wage bills and infrastructure outlays, yield mixed wage impacts. 
Expansions in public spending often elevate government sector wages persistently while leaving private sector wages largely unchanged in the short run, as fiscal shocks prioritize public employment over private wage adjustments.[175] In industry-level data from the U.S., government demand surges boost hours but compress real wages due to diminished private productivity and potential crowding out of investment.[176] Over longer horizons, elevated public spending as a share of GDP correlates with slower economic growth and wage stagnation, as resources diverted from private sectors hinder innovation and capital deepening essential for sustained real wage advances.[177] Targeted fiscal measures, such as tax credits for research or workforce training, can mitigate these effects by enhancing human capital, though aggregate evidence underscores that procyclical spending amplifies wage volatility without addressing underlying supply-side constraints.[178]

Economic Effects

Impacts on Employment Levels

In standard economic theory, wages represent the price of labor, and increases above the market-clearing level, such as through minimum wage policies, reduce the quantity of labor demanded by employers, leading to lower employment levels, particularly among low-skilled workers whose marginal productivity falls below the mandated wage.[179] This disemployment effect arises because firms respond by cutting hours, automating tasks, or substituting higher-skilled labor, with the burden falling disproportionately on entry-level positions. Empirical estimates often quantify this via employment elasticities, where a 10% minimum wage hike correlates with a 0-2.6% drop in employment for affected groups.[180]

Rigorous reviews of U.S. data, including panel studies across states, consistently find negative employment impacts from minimum wage increases, with elasticities around -0.1 to -0.2 overall, but stronger effects (employment declines of up to 1-2% per 10% increase) for teenagers and low-skilled adults.[181][182] For instance, a Congressional Budget Office analysis projected that raising the federal minimum wage to $15 per hour by 2025 would eliminate 1.4 million jobs on average, primarily among low-wage workers, while boosting earnings for those remaining employed.[183][184] These findings hold after controlling for confounders like regional economic conditions, with disemployment concentrated in sectors like retail, food service, and hospitality where low-skill labor predominates.[185]

Evidence is particularly pronounced for vulnerable subgroups: youth employment drops by 1-2% per 10% wage increase, as firms reduce hiring of inexperienced workers, while low-skilled minorities face amplified losses due to fewer alternative opportunities.[181][186] Recent meta-analyses confirm a median own-wage employment elasticity of -0.13, indicating modest but statistically significant job reductions, countering claims of negligible effects from earlier studies like Card and Krueger (1994), which have been critiqued for relying on aggregated data that overlook hours reductions and firm entry/exit dynamics.[187][188] International evidence aligns, with minimum wage hikes in countries like Germany and the UK showing similar low-skill employment declines.[179]

While some research invokes monopsony models, in which employers hold wage-setting power, to argue for neutral or positive employment effects, these rely on specific market frictions rarely dominant in competitive low-wage sectors, and broader empirical syntheses find such cases to be the exception rather than the rule.[181] Overall, the weight of evidence from non-experimental and quasi-experimental designs supports the view that wage floors distort labor markets, trading higher pay for fewer jobs, especially harming those the policies aim to assist.[189]

Effects on Economic Productivity

Efficiency wage theory posits that firms may pay wages above the market-clearing level to elicit greater worker effort, reduce shirking, lower turnover, and attract higher-quality applicants, thereby enhancing overall productivity.[190] Empirical tests support this mechanism, showing that higher wages correlate with reduced monitoring needs and improved performance incentives, as firms trade off supervision costs against wage premiums.[191] For instance, industry wage differentials have been linked to higher productivity through better worker discipline and selection effects.[192]

Studies on wage increases demonstrate direct productivity gains among workers. A randomized experiment in U.S. fruit harvesting found that raising pay by $1 per hour increased output per worker by more than $1, driven by heightened effort and reduced turnover.[193] Similarly, border-discontinuity analyses around minimum wage hikes reveal that affected workers exhibit 1-2% higher productivity post-increase, alongside lower termination rates, suggesting intensified effort to retain jobs.[194] In piece-rate settings, unexpected pay raises prompt workers to exceed baseline effort levels, aligning with incentive-based models over mere signaling.[195]

Aggregate data reinforce a tight link between wage growth and productivity trends, with historical U.S. evidence from 1948-2019 indicating that average hourly compensation tracks labor productivity closely, contradicting claims of persistent decoupling.[93] Cross-sectoral analyses in Europe and Asia further show persistent positive wage effects on firm-level productivity, as higher labor costs spur innovation and efficiency improvements.[196] However, in low-skill sectors with binding minimum wages, productivity responses vary by market concentration; concentrated labor markets see amplified gains, while competitive ones may experience offsetting automation pressures.[197]

While these effects hold empirically, causal identification relies on natural experiments like policy shocks, as observational data risk endogeneity from unobserved firm heterogeneity.[198] Overall, elevated wages appear to boost productivity through micro-level incentives, though magnitudes diminish at economy-wide scales if not paired with skill-enhancing policies.[199]

Consequences for Income Distribution

Wages, as the predominant form of labor income, directly shape income distribution by determining the earnings floor and dispersion for the workforce, which comprises the majority of households in market economies. In the United States, labor compensation represents about 60% of national income, with wage inequality accounting for roughly half of the rise in overall household income inequality since 1979.[200] From 1979 to 2017, the income share of the bottom quintile fell from 5.3% to 3.5%, while the top quintile's share increased from 41.9% to 50.1%, driven primarily by divergent wage growth between low- and high-skilled workers.[201] This pattern reflects skill-biased technological change and globalization, which have elevated premiums for college-educated labor, widening the gap independent of capital income concentration.[202]

Declining union density has amplified wage dispersion and, consequently, income inequality. Empirical analyses attribute 10-20% of the growth in U.S. men's wage inequality from the late 1970s to the late 1980s to falling unionization rates, as unions historically compressed wage structures within firms and industries through collective bargaining.[81] Across OECD countries, higher collective bargaining coverage correlates with lower Gini coefficients, with unions reducing pre-tax income disparities by elevating low- and middle-wage earnings relative to non-union sectors.[203] However, union effects vary: while they narrow gaps within covered groups, overall inequality may persist if unions concentrate in high-wage industries, leaving informal or non-union low-wage sectors behind.[80]

Minimum wage regulations exhibit mixed impacts on income distribution. Increases can raise family incomes at the lower tail, with robust evidence showing higher minimum wages boost earnings for affected workers and lift some households out of poverty, though gains are concentrated among single-earner families rather than multi-earner ones.[204] The U.S. Congressional Budget Office projects that raising the federal minimum wage to $15 per hour by 2025 would increase annual earnings for 17 million workers while reducing employment by 1.4 million jobs, resulting in net income gains for low-wage groups but potential losses for those displaced.[205] Cross-country studies yield conflicting results: some find minimum wage hikes reduce poverty and compress the bottom of the distribution, while others observe no significant change in the household income Gini coefficient, or even slight increases, due to occupational shifts and disemployment among the least skilled.[206][207]

Broader wage trends, such as stagnant real wages for low earners amid productivity gains, perpetuate intergenerational income immobility and concentrate economic power. In OECD nations, the income ratio of the richest 10% to the poorest 10% averaged 8.4:1 in 2021, with wage shares declining in many countries since the 1980s, shifting more income toward capital holders and exacerbating functional distribution imbalances.[208][209] These dynamics underscore that policies fostering wage compression, whether through skill enhancement or bargaining institutions, can mitigate inequality, but unintended consequences like reduced labor force participation among low-productivity workers must be weighed against egalitarian goals.[210]

Key Controversies

Debates on Minimum Wage Outcomes

The primary debate on minimum wage outcomes concerns their effects on employment, particularly for low-skilled workers, with theoretical predictions of disemployment set against empirical findings that often show only small negative impacts. In standard economic theory, a minimum wage acts as a price floor above the market equilibrium wage, leading to excess labor supply or unemployment as firms hire fewer workers at the higher mandated cost.[185] Empirical studies, however, yield mixed results, though meta-analyses indicate that a 10% increase in the minimum wage typically reduces employment by 1-3% among affected groups such as teenagers and low-skilled adults.[179]

Numerous peer-reviewed meta-analyses affirm modest disemployment effects, especially in competitive labor markets. For instance, a review of time-series studies estimates elasticities ranging from -0.1 to -0.3, implying that minimum wage hikes disproportionately affect vulnerable populations by reducing job opportunities rather than hours worked uniformly.[179] Another analysis of 55 studies across 15 countries found negative employment responses when minimum wages interact with labor market regulations, with stronger effects for youth and the least-skilled.[211]

Critics of disemployment claims, such as early work by Card and Krueger, argued for negligible or positive effects based on case studies like New Jersey's 1992 increase, but subsequent critiques highlighted methodological flaws, including reliance on survey data prone to measurement error and failure to account for hours reductions.[181] More robust panel data analyses, including those by Neumark and colleagues, consistently detect job losses for low-wage teens and restaurant workers, with elasticities around -0.2 (roughly a 2% employment decline for a 10% hike).[181][182]

Real-world implementations provide case-specific evidence of trade-offs.
Seattle's phased minimum wage increase from $9.47 in 2015 to $13.00 by 2017 resulted in a 9% reduction in hours worked per low-wage job, lowering average monthly earnings by $125 for full-time equivalent workers compared to nearby areas, though overall inequality among low earners decreased modestly due to wage compression.[212] Similarly, the U.S. Congressional Budget Office projected in 2021 that raising the federal minimum to $15 by 2025 under the Raise the Wage Act would boost earnings for 17 million workers but eliminate 1.4 million jobs (0.9% of employment) in that year, with net family income gains from reduced poverty offset by losses among the newly unemployed.[213] These outcomes suggest that while some low-wage earners benefit from higher pay, others, often the least experienced or marginal workers, face barriers to entry, prompting debates on whether gains in take-home pay for incumbents justify the exclusion of job seekers.[205]

Proponents of minimum wages counter that monopsonistic market power in low-wage sectors allows hikes without significant job loss, citing studies like the UK's National Minimum Wage reviews showing no aggregate disemployment.[214] However, such findings are context-dependent, with weaker effects in highly regulated or unionized environments, and U.S.-focused research reveals heterogeneous impacts: minimal overall but pronounced for teens (up to a 2-3% employment drop per 10% wage rise) and for industries like restaurants.[215] Recent analyses also link minimum wages to accelerated automation, reducing automatable low-skill jobs as firms substitute capital for labor.[216] Overall, the evidence tilts toward causal disemployment costs that challenge claims of unambiguous benefits, particularly when long-term effects on skill acquisition and labor force participation among youth are ignored.[217]

| Study Type | Key Finding | Employment Elasticity Estimate | Source |
|---|---|---|---|
| Time-Series Meta-Analysis | Negative teen employment effects | -0.1 to -0.3 | [179] |
| Cross-Country Meta-Analysis (55 Studies) | Negative, stronger with regulations | Varies, youth-focused | [211] |
| Seattle Ordinance Evaluation | Hours reduced 9%, earnings down for low-wage workers | N/A (case-specific) | [212] |
| CBO Projection ($15 by 2025) | 1.4M jobs lost (0.9%) | Implied ~ -0.1 overall | [213] |
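
The elasticity figures in the table can be read as a simple proportional rule: the predicted percentage change in employment is roughly the elasticity multiplied by the percentage change in the minimum wage. The following is a minimal Python sketch of that arithmetic, assuming a constant elasticity over the size of the increase (a simplification for illustration). The function name `employment_change_pct` is hypothetical and is not taken from any of the cited studies.

```python
def employment_change_pct(elasticity: float, wage_change_pct: float) -> float:
    """Predicted % change in employment for a given % change in the minimum wage.

    Assumes a constant elasticity (a linear approximation), which is an
    illustrative simplification rather than a method from the cited studies.
    """
    return elasticity * wage_change_pct


# Elasticity range quoted for the time-series meta-analysis: -0.1 to -0.3.
for elasticity in (-0.1, -0.2, -0.3):
    change = employment_change_pct(elasticity, 10.0)
    print(f"elasticity {elasticity:+.1f}: {change:+.1f}% employment for a 10% increase")
```

Under this approximation, the -0.1 to -0.3 range corresponds to a predicted employment decline of about 1% to 3% among affected groups for a 10% minimum wage increase, which is the range quoted in the text.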