
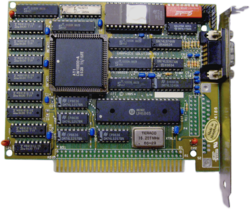

Graphics card


A graphics card (also called a video card, display card, graphics accelerator, graphics adapter, VGA card/VGA, video adapter, or display adapter) is a computer expansion card that generates a feed of graphics output to a display device such as a monitor. Graphics cards are sometimes called discrete or dedicated graphics cards to emphasize their distinction from an integrated graphics processor on the motherboard or the central processing unit (CPU). The main component of a graphics card is the graphics processing unit (GPU), which performs the necessary computations; the acronym "GPU" is sometimes erroneously used to refer to the graphics card as a whole.[1]

Most graphics cards are not limited to simple display output. The graphics processing unit can be used for additional processing, which reduces the load on the CPU.[2] Additionally, computing platforms such as OpenCL and CUDA allow graphics cards to be used for general-purpose computing. Applications of general-purpose computing on graphics cards include AI training, cryptocurrency mining, and molecular simulation.[3][4][5]

Usually, a graphics card comes in the form of a printed circuit board (expansion board) that is inserted into an expansion slot.[6] Others have dedicated enclosures and are connected to the computer via a docking station or a cable; these are known as external GPUs (eGPUs).

Graphics cards are often preferred over integrated graphics for their higher performance; a more powerful graphics card can typically render more frames per second.

History

Graphics cards, also known as video cards, have historically evolved alongside computer display standards to accommodate advancing technologies and user demands. Among IBM PC compatibles, the early standards included the Monochrome Display Adapter (MDA), Color Graphics Adapter (CGA), Hercules Graphics Card, Enhanced Graphics Adapter (EGA), and Video Graphics Array (VGA). Each of these standards represented a step forward in the ability of computers to display more colors, higher resolutions, and richer graphical interfaces, laying the foundation for modern graphical capabilities.

In the late 1980s, advancements in personal computing led companies like Radius to develop specialized graphics cards for the Apple Macintosh II. These cards were unique in that they incorporated discrete 2D QuickDraw capabilities, enhancing the graphical output of Macintosh computers by accelerating 2D graphics rendering. QuickDraw, a core part of the Macintosh graphical user interface, allowed for the rapid rendering of bitmapped graphics, fonts, and shapes, and the introduction of such hardware-based enhancements signaled an era of specialized graphics processing in consumer machines.

The evolution of graphics processing took a major leap forward in the mid-1990s with 3dfx Interactive's introduction of the Voodoo series, one of the earliest consumer-facing GPUs that supported 3D acceleration. The Voodoo's architecture marked a major shift in graphical computing by offloading the demanding task of 3D rendering from the CPU to the GPU, significantly improving gaming performance and graphical realism.

The development of fully integrated GPUs that could handle both 2D and 3D rendering came with the introduction of the NVIDIA RIVA 128. Released in 1997, the RIVA 128 was one of the first consumer-facing GPUs to integrate both 3D and 2D processing units on a single chip. This innovation simplified the hardware requirements for end-users, as they no longer needed separate cards for 2D and 3D rendering, thus paving the way for the widespread adoption of more powerful and versatile GPUs in personal computers.

Today, the majority of graphics cards are built using chips sourced from two dominant manufacturers: AMD and Nvidia. These modern graphics cards are multifunctional and support various tasks beyond rendering 3D images for gaming, including 2D graphics processing, video decoding, TV output, and multi-monitor setups. Additionally, many graphics cards now have integrated sound capabilities, allowing them to transmit audio alongside video output to connected TVs or monitors with built-in speakers.

Within the graphics industry, these products are often referred to as graphics add-in boards (AIBs).[7] The term "AIB" emphasizes the modular nature of these components, as they are typically added to a computer's motherboard to enhance its graphical capabilities. The evolution from the early days of separate 2D and 3D cards to today's integrated and multifunctional GPUs reflects the ongoing technological advancements and the increasing demand for high-quality visual and multimedia experiences in computing.

Discrete vs integrated graphics


As an alternative to a graphics card, video hardware can be integrated into the motherboard, CPU, or a system-on-chip as integrated graphics. Motherboard-based implementations are sometimes called "on-board video". Some motherboards support using both integrated graphics and a graphics card simultaneously to feed separate displays. The main advantages of integrated graphics are low cost, compactness, simplicity, and low energy consumption. Integrated graphics often perform worse than a graphics card because the graphics processing unit inside integrated graphics must share system resources with the CPU. A graphics card, on the other hand, has its own random access memory (RAM), cooling system, and dedicated power regulators. Because a graphics card offloads work from the CPU and reduces contention for the memory bus and system RAM, it can improve the overall performance of a computer in addition to increasing graphics performance. Such improvements can be seen in video gaming, 3D animation, and video editing.[8][9]

Both AMD and Intel have introduced CPUs and motherboard chipsets that support the integration of a GPU into the same die as the CPU. AMD advertises CPUs with integrated graphics under the trademark Accelerated Processing Unit (APU), while Intel brands similar technology under "Intel Graphics Technology".[10]

Power demand

As the processing power of graphics cards increased, so did their demand for electrical power. Current high-performance graphics cards tend to consume large amounts of power. For example, the thermal design power (TDP) of the GeForce Titan RTX is 280 watts.[11] When tested with video games, the GeForce RTX 2080 Ti Founders Edition averaged 300 watts of power consumption.[12] While CPU and power supply manufacturers have recently aimed for higher efficiency, the power demands of graphics cards have continued to rise, and the graphics card now has the largest power consumption of any individual part in a computer.[13][14] Although power supplies have also increased their output, the bottleneck lies in the PCI Express slot, which is limited to supplying 75 watts.[15]

Modern graphics cards with a power consumption of over 75 watts usually include a combination of six-pin (75 W) or eight-pin (150 W) sockets that connect directly to the power supply. Providing adequate cooling becomes a challenge in such computers. Computers with multiple graphics cards may require power supplies over 750 watts. Heat extraction becomes a major design consideration for computers with two or more high-end graphics cards.[citation needed]
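The connector ratings above add up to a simple power ceiling for a card. The sketch below is a minimal illustration, assuming only the figures quoted in this section (75 W from the PCIe slot, 75 W per six-pin and 150 W per eight-pin connector); the function name is hypothetical, other connector types are not modeled, and a card's actual draw may be well below this ceiling.

```python
# Nominal ratings quoted in this section (assumed; other connector types not modeled).
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def max_board_power_w(connectors: list[str]) -> int:
    """Upper bound on in-specification board power.

    `connectors` lists the card's auxiliary power plugs by name,
    e.g. ["8-pin", "6-pin"]. Raises KeyError for unknown connector types.
    """
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


if __name__ == "__main__":
    print(max_board_power_w([]))                  # slot only: 75
    print(max_board_power_w(["6-pin"]))           # 150
    print(max_board_power_w(["8-pin", "8-pin"]))  # 375
```

Comparing this ceiling with a card's rated power gives a quick check on whether its connector layout is sufficient under these assumed ratings.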

Within the Nvidia GeForce RTX 30 series (Ampere architecture), a custom-flashed RTX 3090 named "Hall of Fame" has been recorded reaching a peak power draw as high as 630 watts. A standard RTX 3090 can peak at up to 450 watts. The RTX 3080 can reach up to 350 watts, while the RTX 3070 can reach a similar, if not slightly lower, peak power draw. Ampere cards of the Founders Edition variant feature a "dual axial flow through"[16] cooler design, which includes fans above and below the card to exhaust as much heat as possible toward the rear of the computer case. A similar design was used by the Sapphire Radeon RX Vega 56 Pulse graphics card.[17]

Size

Graphics cards for desktop computers come in different size profiles, which allows graphics cards to be added to smaller computers. Some graphics cards are smaller than usual and are designated "low profile".[18][19] Graphics card profiles are based on height only, with low-profile cards taking up less height than a standard full-height PCIe card. Length and thickness can vary greatly: high-end cards usually occupy two or three expansion slots, and modern high-end graphics cards such as the RTX 4090 exceed 300 mm in length.[20] A lower-profile card is preferred when fitting multiple cards or when a graphics card would run into clearance issues with other motherboard components such as the DIMM or PCIe slots. Such issues can be avoided with a larger computer case, such as a mid-tower or full tower. Full towers are usually able to fit larger motherboards in sizes such as ATX and micro ATX.[citation needed]

GPU sag

In the late 2010s and early 2020s, some high-end graphics card models became so heavy that they can sag downward after installation without proper support, which is why many manufacturers provide additional support brackets.[21] GPU sag can damage a GPU in the long term.[21]

Multicard scaling

Some graphics cards can be linked together to scale graphics processing across multiple cards. This is done using either the PCIe bus on the motherboard or, more commonly, a data bridge. Usually, the cards must be of the same model to be linked, and most low-end cards cannot be linked in this way.[22] AMD and Nvidia both have proprietary scaling methods: CrossFireX for AMD, and SLI (superseded by NVLink since the Turing generation) for Nvidia. Cards from different chipset manufacturers or architectures cannot be used together for multi-card scaling. If the linked graphics cards have different amounts of memory, only the lowest amount is used and the excess on the other cards is disregarded. Currently, scaling on consumer-grade cards can be done using up to four cards.[23][24][25] Using four cards requires a large motherboard with a suitable configuration. Nvidia's GeForce GTX 590 graphics card can be used in a four-card configuration.[26] As noted above, cards with matching performance should be used for best results. Motherboards including the ASUS Maximus 3 Extreme and Gigabyte GA EX58 Extreme are certified to work with this configuration.[27] A large power supply is necessary to run the cards in SLI or CrossFireX, and the power demands must be known before a suitable supply is installed. For the four-card configuration, a supply of 1000 watts or more is needed.[27] With any relatively powerful graphics card, thermal management cannot be ignored. Graphics cards require well-vented chassis and good thermal solutions. Air or water cooling is usually required, though low-end GPUs can use passive cooling. Larger configurations use water cooling or immersion cooling to achieve proper performance without thermal throttling.[28]
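The memory and power rules in the paragraph above can be expressed as a short calculation. The sketch below is illustrative only: the `Card` type and both helper functions are hypothetical, and the default 300 W allowance for the rest of the system, the 20% safety margin and the rounding up to 50 W steps are assumptions, not vendor sizing guidance.

```python
from dataclasses import dataclass


@dataclass
class Card:
    name: str
    memory_gb: int
    board_power_w: int


def effective_memory_gb(cards: list[Card]) -> int:
    # Linked cards can only use as much memory as the smallest card has.
    return min(c.memory_gb for c in cards)


def suggested_psu_w(cards: list[Card], rest_of_system_w: int = 300,
                    headroom_pct: int = 20) -> int:
    """Hypothetical sizing rule using integer arithmetic throughout.

    Sum the cards' board power and an assumed allowance for the rest of
    the system, add a percentage margin (rounded up), then round up to
    the next 50 W step.
    """
    base = sum(c.board_power_w for c in cards) + rest_of_system_w
    with_margin = -(-base * (100 + headroom_pct) // 100)  # ceiling division
    return -(-with_margin // 50) * 50                      # round up to 50 W


if __name__ == "__main__":
    cards = [Card("card-a", 8, 150), Card("card-b", 8, 150),
             Card("card-c", 4, 150), Card("card-d", 8, 150)]
    print(effective_memory_gb(cards))  # 4
    print(suggested_psu_w(cards))      # 1100
```

With four hypothetical 150 W cards, one of which has 4 GB instead of 8 GB, the linked set is limited to 4 GB; the suggested supply depends entirely on the assumed allowance and margin.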

SLI and CrossFire have become increasingly uncommon, as most games do not fully utilize multiple GPUs and most users cannot afford them.[29][30][31] Multiple GPUs are still used in supercomputers (such as Summit), in workstations to accelerate video[32][33][34] and 3D rendering,[35][36][37][38][39] visual effects,[40][41] and simulations,[42] and for training artificial intelligence.

3D graphics APIs

A graphics driver usually supports one or more cards from the same vendor and has to be written for a specific operating system. Additionally, the operating system or an extra software package may provide programming APIs that applications use to perform 3D rendering.

| OS | Vulkan | Direct3D | Metal | OpenGL | OpenGL ES | OpenCL |
|---|---|---|---|---|---|---|
| Windows | Yes | Microsoft | No | Yes | Yes | Yes |
| macOS, iOS and iPadOS | MoltenVK | No | Apple | macOS | iOS/iPadOS | Apple |
| Linux | Yes | Alternative implementations | No | Yes | Yes | Yes |
| Android | Yes | No | No | Nvidia | Yes | Yes |
| Tizen | In development | No | No | No | Yes | — |
| Sailfish OS | In development | No | No | No | Yes | — |

Usage

GPUs are designed with specific uses in mind; their product lines are categorized here:

- Gaming
  - GeForce GTX
  - GeForce RTX
  - Nvidia Titan
  - Radeon HD
  - Radeon RX
  - Intel Arc
- Cloud gaming
- Workstation
- Cloud Workstation
- Artificial Intelligence Cloud
- Automated/Driverless car

Industry

As of 2016, the primary suppliers of the GPUs (graphics chips or chipsets) used in graphics cards are AMD and Nvidia. In the third quarter of 2013, AMD had a 35.5% market share while Nvidia had 64.5%,[43] according to Jon Peddie Research. In economics, this industry structure is termed a duopoly. AMD and Nvidia also build and sell graphics cards, which are termed graphics add-in boards (AIBs) in the industry. (See Comparison of Nvidia graphics processing units and Comparison of AMD graphics processing units.) In addition to marketing their own graphics cards, AMD and Nvidia sell their GPUs to authorized AIB suppliers, which AMD and Nvidia refer to as "partners".[44] The fact that Nvidia and AMD compete directly with their customers and partners complicates relationships in the industry. It is also noteworthy that AMD and Intel are direct competitors in the CPU industry, since AMD-based graphics cards may be used in computers with Intel CPUs. Intel's integrated graphics may weaken AMD, which derives a significant portion of its revenue from its APUs. As of the second quarter of 2013, there were 52 AIB suppliers.[44] These AIB suppliers may market graphics cards under their own brands, produce graphics cards for private-label brands, or produce graphics cards for computer manufacturers. Some AIB suppliers, such as MSI, build both AMD-based and Nvidia-based graphics cards. Others, such as EVGA, build only Nvidia-based graphics cards, while XFX now builds only AMD-based graphics cards. Several AIB suppliers are also motherboard suppliers. Most of the largest AIB suppliers are based in Taiwan and include ASUS, MSI, GIGABYTE, and Palit. Hong Kong–based AIB manufacturers include Sapphire and Zotac, which sell graphics cards exclusively for AMD and Nvidia GPUs respectively.[45]

Market

Graphics card shipments peaked at a total of 114 million in 1999. By contrast, they totaled 14.5 million units in the third quarter of 2013, a 17% fall from Q3 2012 levels.[43] Shipments reached an annual total of 44 million in 2015.[citation needed] Graphics card sales have trended downward due to improvements in integrated graphics technologies; high-end CPU-integrated graphics can provide performance competitive with low-end graphics cards. At the same time, graphics card sales have grown within the high-end segment, as manufacturers have shifted their focus to prioritize the gaming and enthusiast market.[45][46]

Beyond the gaming and multimedia segments, graphics cards have been increasingly used for general-purpose computing, such as big data processing.[47] The growth of cryptocurrency placed very high demand on high-end graphics cards, often bought in large quantities, because of their advantages in cryptocurrency mining. In January 2018, mid- to high-end graphics cards experienced a major surge in price, and many retailers had stock shortages due to the significant demand from this market.[46][48][49] Graphics card companies released mining-specific cards designed to run 24 hours a day, seven days a week, and without video output ports.[5] The graphics card industry suffered a setback due to the 2020–21 chip shortage.[50]

Parts


A modern graphics card consists of a printed circuit board on which the components are mounted. These include:

Graphics processing unit

A graphics processing unit (GPU), also occasionally called visual processing unit (VPU), is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the building of images in a frame buffer intended for output to a display. Because of the large degree of programmable computational complexity for such a task, a modern graphics card is also a computer unto itself.

Heat sink

A heat sink is mounted on most modern graphics cards. It spreads the heat produced by the graphics processing unit evenly throughout the heat sink and the unit itself. A fan is commonly mounted on the heat sink to cool both the heat sink and the graphics processing unit. Not all cards have heat sinks: some cards are liquid-cooled and have a water block instead, and cards from the 1980s and early 1990s did not produce much heat and did not require heat sinks. Most modern graphics cards need proper thermal solutions. They can be water-cooled or use heat sinks with additional heat pipes, usually made of copper for the best thermal transfer.[citation needed]

Video BIOS

The video BIOS or firmware contains a minimal program for the initial setup and control of the graphics card. It may contain information on the memory and memory timing, operating speeds and voltages of the graphics processor, and other details which can sometimes be changed.[citation needed]

Modern video BIOSes do not support the full functionality of graphics cards; they are only sufficient to identify and initialize the card and to display one of a few frame buffer or text display modes. They do not support YUV-to-RGB translation, video scaling, pixel copying, compositing, or any of the many other 2D and 3D features of the graphics card, which must be accessed by software drivers.[citation needed]
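YUV-to-RGB translation, one of the features listed above as handled by drivers rather than the video BIOS, is a per-pixel linear transform. As an illustration only, the sketch below converts one 8-bit full-range YCbCr pixel to RGB using the ITU-R BT.601 coefficients as adopted by JFIF; the function name is hypothetical, and graphics hardware may use other matrices (such as BT.709) or limited-range input, which this sketch does not handle.

```python
def ycbcr_to_rgb(y: int, cb: int, cr: int) -> tuple[int, int, int]:
    """Convert one full-range 8-bit YCbCr pixel to 8-bit RGB (BT.601/JFIF)."""
    # Chroma is stored with a +128 offset; remove it before applying the matrix.
    d_cb = cb - 128
    d_cr = cr - 128
    r = y + 1.402 * d_cr
    g = y - 0.344136 * d_cb - 0.714136 * d_cr
    b = y + 1.772 * d_cb

    def clamp(v: float) -> int:
        # Round to the nearest integer and clip to the 0..255 range.
        return max(0, min(255, round(v)))

    return clamp(r), clamp(g), clamp(b)


if __name__ == "__main__":
    print(ycbcr_to_rgb(128, 128, 128))  # mid grey: (128, 128, 128)
    print(ycbcr_to_rgb(76, 85, 255))    # close to pure red: (254, 0, 0)
```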

Video memory

| Type | Memory clock rate (MHz) | Bandwidth (GB/s) |
|---|---|---|
| DDR | 200–400 | 1.6–3.2 |
| DDR2 | 400–1066 | 3.2–8.533 |
| DDR3 | 800–2133 | 6.4–17.066 |
| DDR4 | 1600–4866 | 12.8–25.6 |
| DDR5 | 4000–8800 | 32–128 |
| GDDR4 | 3000–4000 | 160–256 |
| GDDR5 | 1000–2000 | 288–336.5 |
| GDDR5X | 1000–1750 | 160–673 |
| GDDR6 | 1365–1770 | 336–672 |
| HBM | 250–1000 | 512–1024 |

The memory capacity of most modern graphics cards ranges from 2 to 24 GB.[51] Some cards offered up to 32 GB as of the late 2010s, as applications of graphics processing became more powerful and widespread. Since video memory needs to be accessed by both the GPU and the display circuitry, it often uses special high-speed or multi-port memory, such as VRAM, WRAM, or SGRAM. Around 2003, video memory was typically based on DDR technology. During and after that year, manufacturers moved toward DDR2, GDDR3, GDDR4, GDDR5, GDDR5X, and GDDR6. The effective memory clock rate in modern cards is generally between 2 and 15 GHz.[citation needed]
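Peak memory bandwidth, as listed in the table above, follows from two numbers: the effective transfer rate per data pin and the width of the memory bus. The sketch below shows that arithmetic under stated assumptions: reading the DDR row's rates as millions of transfers per second on a 64-bit channel reproduces the table's 1.6–3.2 GB/s range, while the 14,000 MT/s, 256-bit GDDR6 configuration is purely illustrative and not taken from a specific product.

```python
def memory_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s (10**9 bytes per second).

    transfer_rate_mt_s: effective transfers per second on each data pin, in millions.
    bus_width_bits: number of data pins across the whole memory interface.
    """
    transfers_per_s = transfer_rate_mt_s * 1_000_000
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_s * bytes_per_transfer / 1e9


if __name__ == "__main__":
    print(memory_bandwidth_gb_s(200, 64))      # DDR-200 on an assumed 64-bit channel
    print(memory_bandwidth_gb_s(400, 64))      # DDR-400 on an assumed 64-bit channel
    print(memory_bandwidth_gb_s(14_000, 256))  # assumed GDDR6 configuration
```

Widening the bus raises bandwidth just as effectively as raising the transfer rate, which is why cards with similar memory types can differ widely in bandwidth.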

Video memory may be used for storing other data as well as the screen image, such as the Z-buffer, which manages the depth coordinates in 3D graphics, as well as textures, vertex buffers, and compiled shader programs.
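The memory used by the screen image and the Z-buffer is simple arithmetic on the display resolution. A minimal sketch, assuming 4 bytes per pixel for color, a 4-byte combined depth/stencil format and two color buffers (front and back); the function names are hypothetical, and real drivers may pad, tile or compress these buffers.

```python
def buffer_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    # One full-screen buffer, assuming no row padding.
    return width * height * bytes_per_pixel


def screen_buffers_bytes(width: int, height: int, color_bpp: int = 4,
                         depth_bpp: int = 4, color_buffers: int = 2) -> int:
    """Color buffers (front and back by default) plus one Z-buffer."""
    color = buffer_bytes(width, height, color_bpp) * color_buffers
    depth = buffer_bytes(width, height, depth_bpp)
    return color + depth


if __name__ == "__main__":
    total = screen_buffers_bytes(1920, 1080)
    print(f"{total} bytes ({total / 2**20:.1f} MiB)")  # 24883200 bytes (23.7 MiB)
```

Under these assumptions, the screen-related buffers for a 1080p display take under 24 MiB, leaving most of the card's memory for textures, vertex buffers and other data.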

RAMDAC

The RAMDAC, or random-access-memory digital-to-analog converter, converts digital signals to analog signals for use by a computer display with analog inputs, such as a cathode-ray tube (CRT) display. The RAMDAC combines a small RAM, which holds the color lookup table (palette), with digital-to-analog converters. Depending on the number of bits used and the RAMDAC data-transfer rate, the converter can support different display refresh rates. With CRT displays, it is best to work above 75 Hz and never below 60 Hz, to minimize flicker.[52] (This is not a problem with LCD displays, as they have little to no flicker.[citation needed]) Due to the growing popularity of digital computer displays and the integration of the RAMDAC onto the GPU die, it has mostly disappeared as a discrete component. All current LCD/plasma monitors, TVs, and projectors with only digital connections work in the digital domain and do not require a RAMDAC for those connections. Some displays, however, feature only analog inputs (VGA, component, SCART, etc.). These require a RAMDAC, but they reconvert the analog signal back to digital before they can display it, with an unavoidable loss of quality from this digital-to-analog-to-digital conversion.[citation needed] With the VGA standard being phased out in favor of digital formats, RAMDACs have started to disappear from graphics cards.[citation needed]
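The link between a RAMDAC's conversion rate and the refresh rates it supports is the pixel clock: every pixel of every line, including the blanking intervals, must be converted once per refresh. A minimal sketch, assuming the standard 640 × 480 VGA timing with totals of 800 pixels per line and 525 lines per frame including blanking; the function names are hypothetical.

```python
def pixel_clock_hz(h_total: int, v_total: int, refresh_hz: int) -> int:
    """Pixels the DAC must convert per second, blanking included."""
    return h_total * v_total * refresh_hz


def max_refresh_hz(dac_rate_hz: int, h_total: int, v_total: int) -> float:
    """Highest refresh rate a DAC of the given rate can drive for this timing."""
    return dac_rate_hz / (h_total * v_total)


if __name__ == "__main__":
    # Assumed 640x480 VGA timing: 800 total pixels per line, 525 total lines.
    print(pixel_clock_hz(800, 525, 60))              # 25200000 (25.2 MHz)
    print(max_refresh_hz(25_200_000, 800, 525))      # 60.0
```

Raising the refresh rate from 60 Hz to 75 Hz at the same timing raises the required conversion rate by the same 25%, which is why a faster RAMDAC supports higher flicker-free refresh rates.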

Output interfaces


The most common connection systems between the graphics card and the computer display are:

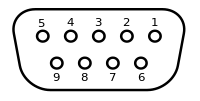

Video Graphics Array (VGA) (DE-15)


VGA, also known as D-sub, is an analog standard adopted in the late 1980s and designed for CRT displays; its connector is also called the VGA connector. Today, the VGA analog interface can carry high-definition video resolutions, including 1080p and higher. Problems with this standard include electrical noise, image distortion, and sampling errors in evaluating pixels. While the VGA transmission bandwidth is high enough to support even higher-resolution playback, picture quality can degrade depending on cable quality and length. The extent of the quality difference depends on the viewer's eyesight and the display; compared with a DVI or HDMI connection, any degradation is most visible on larger LCD/LED monitors or TVs. Blu-ray playback at 1080p is possible via the VGA analog interface if the Image Constraint Token (ICT) is not enabled on the Blu-ray disc.

Digital Visual Interface (DVI)


Digital Visual Interface is a digital standard designed for displays such as flat-panel displays (LCDs, plasma screens, wide high-definition television displays) and video projectors. A few rare high-end CRT monitors also used DVI. It avoids image distortion and electrical noise by mapping each pixel from the computer to a display pixel at the display's native resolution. Most manufacturers include a DVI-I connector, which allows analog RGB output, via a simple adapter, to an older CRT or LCD monitor with a VGA input.

Video-in video-out (VIVO) for S-Video, composite video and component video


These connectors are included to allow connection with televisions, DVD players, video recorders and video game consoles. They often come in two 10-pin mini-DIN connector variations, and the VIVO splitter cable generally comes with either 4 connectors (S-Video in and out plus composite video in and out), or 6 connectors (S-Video in and out, component YPbPr out and composite in and out).

High-Definition Multimedia Interface (HDMI)


HDMI is a compact audio/video interface for transferring uncompressed video data and compressed/uncompressed digital audio data from an HDMI-compliant device ("the source device") to a compatible digital audio device, computer monitor, video projector, or digital television.[53] HDMI is a digital replacement for existing analog video standards. HDMI supports copy protection through HDCP.

DisplayPort


DisplayPort is a digital display interface developed by the Video Electronics Standards Association (VESA). The interface is primarily used to connect a video source to a display device such as a computer monitor, though it can also be used to transmit audio, USB, and other forms of data.[54] The VESA specification is royalty-free. VESA designed it to replace VGA, DVI, and LVDS. Backward compatibility with VGA and DVI through adapter dongles lets consumers use DisplayPort-equipped video sources without replacing existing display devices. Although DisplayPort offers greater throughput than HDMI for the same functionality, it is expected to complement HDMI rather than replace it.[55][56]
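Interface throughput matters because uncompressed video produces a large, fixed data rate. A minimal sketch that counts only visible pixels; it ignores blanking intervals, line-coding overhead and audio, so a real link needs more capacity than this figure. The function name and the 3840 × 2160, 60 Hz, 24 bits-per-pixel example are illustrative assumptions.

```python
def video_data_rate_bps(width: int, height: int, refresh_hz: int,
                        bits_per_pixel: int = 24) -> int:
    """Uncompressed active-video data rate in bits per second."""
    return width * height * refresh_hz * bits_per_pixel


if __name__ == "__main__":
    rate = video_data_rate_bps(3840, 2160, 60)
    print(f"{rate / 1e9:.2f} Gbit/s")  # 11.94 Gbit/s
```

Doubling the refresh rate or moving to deeper color scales this figure proportionally, which is the main reason successive interface revisions raise their link bandwidth.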

USB-C

USB-C is an extensible connector used for USB, DisplayPort, Thunderbolt, and power delivery. It is a 24-pin reversible connector that supersedes previous USB connectors. Some newer graphics cards use USB-C ports for versatility.[57]

Other types of connection systems

| Type | Description |
|---|---|
| Composite video | For display on analog systems with SD resolutions (PAL or NTSC),[58] the RCA connector output can be used. The single-pin connector carries all resolution, brightness and color information, making it the lowest-quality dedicated video connection.[59] Depending on the card, the SECAM color system might be supported, along with non-standard modes like PAL-60 or NTSC50. |
| S-Video | For display on analog systems with SD resolutions (PAL or NTSC), the S-Video cable carries two synchronized signal and ground pairs, termed Y and C, on a four-pin mini-DIN connector. In composite video, the signals co-exist on different frequencies. To achieve this, the luminance signal must be low-pass filtered, dulling the image. As S-Video maintains the two as separate signals, such detrimental low-pass filtering for luminance is unnecessary, although the chrominance signal still has limited bandwidth relative to component video. |
| 7P | Non-standard 7-pin mini-DIN connectors (termed "7P") are used in some computer equipment (PCs and Macs). A 7P socket accepts and is pin compatible with a standard 4-pin S-Video plug.[60] The three extra sockets may be used to supply composite (CVBS), an RGB or YPbPr video signal, or an I²C interface.[60][61] |
| 8-pin mini-DIN | The 8-pin mini-DIN connector is used in some ATI Radeon video cards.[62] |
| Component video | It uses three cables, each with an RCA connector (YCbCr for digital component, or YPbPr for analog component); it is used in older projectors, video-game consoles, and DVD players.[63] It can carry SDTV 480i/576i and EDTV 480p/576p resolutions, and HDTV resolutions 720p and 1080i, but not 1080p due to industry concerns about copy protection. Its graphics quality is equivalent to HDMI for the resolutions it carries,[64] but for best performance for Blu-ray, other 1080p sources like PPV, or 4K Ultra HD, a digital display connector is required. |
| DB13W3 | An analog standard once used by Sun Microsystems, SGI and IBM. |
| DMS-59 | A connector that provides a DVI or VGA output on a single connector. |
| DE-9 | The historical connector used by EGA and CGA graphics cards is a female nine-pin D-subminiature (DE-9). The signal standard and pinout are backward-compatible with CGA, allowing EGA monitors to be used on CGA cards and vice versa. |

Motherboard interfaces


Chronologically, connection systems between graphics card and motherboard were, mainly:

- S-100 bus: Designed in 1974 as a part of the Altair 8800, it is the first industry-standard bus for the microcomputer industry.

- ISA: Introduced in 1981 by IBM, it became dominant in the marketplace in the 1980s. It is an 8- or 16-bit bus clocked at 8 MHz.

- NuBus: Used in Macintosh II, it is a 32-bit bus with an average bandwidth of 10 to 20 MB/s.

- MCA: Introduced in 1987 by IBM, it is a 32-bit bus clocked at 10 MHz.

- EISA: Released in 1988 to compete with IBM's MCA, it was compatible with the earlier ISA bus. It is a 32-bit bus clocked at 8.33 MHz.

- VLB: An extension of ISA, it is a 32-bit bus clocked at 33 MHz. It is also referred to as VESA.

- PCI: Replaced the EISA, ISA, MCA and VESA buses from 1993 onwards. PCI allowed dynamic connectivity between devices, avoiding the manual adjustments required with jumpers. It is a 32-bit bus clocked at 33 MHz.

- UPA: An interconnect bus architecture introduced by Sun Microsystems in 1995. It is a 64-bit bus clocked at 67 or 83 MHz.

- USB: Although mostly used for miscellaneous devices, such as secondary storage devices or peripherals and toys, USB displays and display adapters exist. It was first used in 1996.

- AGP: First used in 1997, it is a dedicated-to-graphics bus. It is a 32-bit bus clocked at 66 MHz.

- PCI-X: An extension of the PCI bus, it was introduced in 1998. It improves upon PCI by extending the width of bus to 64 bits and the clock frequency to up to 133 MHz.

- PCI Express: Abbreviated as PCIe, it is a point-to-point interface released in 2004. In 2006, it provided double the data-transfer rate of AGP. It should not be confused with PCI-X, an enhanced version of the original PCI specification. It is the standard interface for most modern graphics cards.

The following table is a comparison between features of some interfaces listed above.

| Bus | Width (bits) | Clock rate (MHz) | Bandwidth (MB/s) | Style |
|---|---|---|---|---|
| ISA XT | 8 | 4.77 | 8 | Parallel |
| ISA AT | 16 | 8.33 | 16 | Parallel |
| MCA | 32 | 10 | 20 | Parallel |
| NuBus | 32 | 10 | 10–40 | Parallel |
| EISA | 32 | 8.33 | 32 | Parallel |
| VESA | 32 | 40 | 160 | Parallel |
| PCI | 32–64 | 33–100 | 132–800 | Parallel |
| AGP 1x | 32 | 66 | 264 | Parallel |
| AGP 2x | 32 | 66 | 528 | Parallel |
| AGP 4x | 32 | 66 | 1000 | Parallel |
| AGP 8x | 32 | 66 | 2000 | Parallel |
| PCIe ×1 | 1 | 2500 / 5000 | 250 / 500 | Serial |
| PCIe ×4 | 1 × 4 | 2500 / 5000 | 1000 / 2000 | Serial |
| PCIe ×8 | 1 × 8 | 2500 / 5000 | 2000 / 4000 | Serial |
| PCIe ×16 | 1 × 16 | 2500 / 5000 | 4000 / 8000 | Serial |
| PCIe ×1 2.0[65] | 1 | | 500 / 1000 | Serial |
| PCIe ×4 2.0 | 1 × 4 | | 2000 / 4000 | Serial |
| PCIe ×8 2.0 | 1 × 8 | | 4000 / 8000 | Serial |
| PCIe ×16 2.0 | 1 × 16 | 5000 / 10000 | 8000 / 16000 | Serial |
| PCIe ×1 3.0 | 1 | | 1000 / 2000 | Serial |
| PCIe ×4 3.0 | 1 × 4 | | 4000 / 8000 | Serial |
| PCIe ×8 3.0 | 1 × 8 | | 8000 / 16000 | Serial |
| PCIe ×16 3.0 | 1 × 16 | | 16000 / 32000 | Serial |
| PCIe ×1 4.0 | 1 | | 2000 / 4000 | Serial |
| PCIe ×4 4.0 | 1 × 4 | | 8000 / 16000 | Serial |
| PCIe ×8 4.0 | 1 × 8 | | 16000 / 32000 | Serial |
| PCIe ×16 4.0 | 1 × 16 | | 32000 / 64000 | Serial |
| PCIe ×1 5.0 | 1 | | 4000 / 8000 | Serial |
| PCIe ×4 5.0 | 1 × 4 | | 16000 / 32000 | Serial |
| PCIe ×8 5.0 | 1 × 8 | | 32000 / 64000 | Serial |
| PCIe ×16 5.0 | 1 × 16 | | 64000 / 128000 | Serial |
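Most figures in the table follow from a few parameters. For the parallel buses, peak bandwidth is bus width × clock × transfers per clock ÷ 8, with AGP 2x, 4x and 8x multiplying the transfers per clock. For PCIe, each lane's transfer rate is reduced by line coding: 8b/10b for PCIe 1.x and 2.0, and 128b/130b for 3.0 through 5.0. The sketch below computes both per direction; the function names are hypothetical, and the table rounds some values (for example, 1000 MB/s for AGP 4x rather than the 1056 MB/s this formula gives at 66 MHz).

```python
def parallel_bus_mb_s(width_bits: int, clock_mhz: float,
                      transfers_per_clock: int = 1) -> float:
    """Peak bandwidth of a parallel bus in MB/s."""
    return width_bits * clock_mhz * transfers_per_clock / 8


# Line-coding schemes as (payload bits, transmitted bits).
ENCODING = {"8b/10b": (8, 10), "128b/130b": (128, 130)}


def pcie_mb_s(transfer_rate_mt_s: int, lanes: int, encoding: str) -> float:
    """Peak PCIe bandwidth per direction in MB/s."""
    payload, total = ENCODING[encoding]
    # Each transfer carries one bit per lane; remove coding overhead, convert to bytes.
    per_lane = transfer_rate_mt_s * payload / (total * 8)
    return per_lane * lanes


if __name__ == "__main__":
    print(parallel_bus_mb_s(32, 33))                  # PCI: 132.0
    print(parallel_bus_mb_s(32, 66, 2))               # AGP 2x: 528.0
    print(pcie_mb_s(2500, 16, "8b/10b"))              # PCIe 1.x x16: 4000.0
    print(round(pcie_mb_s(8000, 16, "128b/130b")))    # PCIe 3.0 x16: 15754
```

The switch to 128b/130b coding is why PCIe 3.0 roughly doubles the usable bandwidth of 2.0 while raising the raw transfer rate only from 5 to 8 GT/s.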

See also

- List of computer hardware

- List of graphics card manufacturers

- List of computer display standards – a detailed list of standards like SVGA, WXGA, WUXGA, etc.

- AMD (ATI), Nvidia – quasi-duopoly of 3D GPU and graphics card designers

- GeForce, Radeon, Intel Arc – examples of graphics card series

- GPGPU (e.g., CUDA, AMD FireStream)

- Framebuffer – the computer memory used to store a screen image

- Capture card – the inverse of a graphics card

References

- ^ "What is a GPU?" Intel. Retrieved 10 August 2023.

- ^ "ExplainingComputers.com: Hardware". www.explainingcomputers.com. Archived from the original on 17 December 2017. Retrieved 11 December 2017.

- ^ "OpenGL vs DirectX - Cprogramming.com". www.cprogramming.com. Archived from the original on 12 December 2017. Retrieved 11 December 2017.

- ^ "Powering Change with Nvidia AI and Data Science". Nvidia. Archived from the original on 10 November 2020. Retrieved 10 November 2020.

- ^ a b Parrish, Kevin (10 July 2017). "Graphics cards dedicated to cryptocurrency mining are here, and we have the list". Digital Trends. Archived from the original on 1 August 2020. Retrieved 16 January 2020.

- ^ "Graphic Card Components". pctechguide.com. 23 September 2011. Archived from the original on 12 December 2017. Retrieved 11 December 2017.

- ^ "Graphics Add-in Board (AIB) Market Share, Size, Growth, Opportunity and Forecast 2024-2032". www.imarcgroup.com. Retrieved 15 September 2024.

- ^ "Integrated vs Dedicated Graphics Cards | Lenovo US". www.lenovo.com. Retrieved 9 November 2023.

- ^ Brey, Barry B. (2009). The Intel microprocessors: 8086/8088, 80186/80188, 80286, 80386, 80486, Pentium, Pentium Pro processor, Pentium II, Pentium III, Pentium 4, and Core2 with 64-bit extensions (PDF) (8th ed.). Upper Saddle River, N.J: Pearson Prentice Hall. ISBN 978-0-13-502645-8.

- ^ Crijns, Koen (6 September 2013). "Intel Iris Pro 5200 graphics review: the end of mid-range GPUs?". hardware.info. Archived from the original on 3 December 2013. Retrieved 30 November 2013.

- ^ "Introducing The GeForce GTX 780 Ti". Archived from the original on 3 December 2013. Retrieved 30 November 2013.

- ^ "Test Results: Power Consumption For Mining & Gaming - The Best GPUs For Ethereum Mining, Tested and Compared". Tom's Hardware. 30 March 2018. Archived from the original on 1 December 2018. Retrieved 30 November 2018.

- ^ "Faster, Quieter, Lower: Power Consumption and Noise Level of Contemporary Graphics Cards". xbitlabs.com. Archived from the original on 4 September 2011.

- ^ "Video Card Power Consumption". codinghorror.com. 18 August 2006. Archived from the original on 8 September 2008. Retrieved 15 September 2008.

- ^ Maxim Integrated Products. "Power-Supply Management Solution for PCI Express x16 Graphics 150W-ATX Add-In Cards". Archived from the original on 5 December 2009. Retrieved 17 February 2007.

- ^ "Introducing NVIDIA GeForce RTX 30 Series Graphics Cards". NVIDIA. Retrieved 24 February 2024.

- ^ "NVIDIA GeForce Ampere Architecture, Board Design, Gaming Tech & Software". TechPowerUp. 4 September 2020. Retrieved 24 February 2024.

- ^ "What is a Low Profile Video Card?". Outletapex. Archived from the original on 24 July 2020. Retrieved 29 April 2020.

- ^ "Best 'low profile' graphics card". Tom's Hardware. Archived from the original on 19 February 2013. Retrieved 6 December 2012.

- ^ "RTX 4090 | GeForce RTX 4090 Graphics Card". GeForce. Archived from the original on 8 March 2023. Retrieved 3 April 2023.

- ^ a b "What is GPU sag, and how to avoid it". Digital Trends. 18 April 2023. Retrieved 30 September 2024.

- ^ "SLI". geforce.com. Archived from the original on 15 March 2013. Retrieved 13 March 2013.

- ^ "SLI vs. CrossFireX: The DX11 generation". techreport.com. 11 August 2010. Archived from the original on 27 February 2013. Retrieved 13 March 2013.

- ^ Adrian Kingsley-Hughes. "NVIDIA GeForce GTX 680 in quad-SLI configuration benchmarked". ZDNet. Archived from the original on 7 February 2013. Retrieved 13 March 2013.

- ^ "Head to Head: Quad SLI vs. Quad CrossFireX". Maximum PC. Archived from the original on 10 August 2012. Retrieved 13 March 2013.

- ^ "How to Build a Quad SLI Gaming Rig | GeForce". www.geforce.com. Archived from the original on 26 December 2017. Retrieved 11 December 2017.

- ^ a b "How to Build a Quad SLI Gaming Rig | GeForce". www.geforce.com. Archived from the original on 26 December 2017. Retrieved 11 December 2017.

- ^ "NVIDIA Quad-SLI|NVIDIA". www.nvidia.com. Archived from the original on 12 December 2017. Retrieved 11 December 2017.

- ^ Abazovic, Fuad. "Crossfire and SLI market is just 300.000 units". www.fudzilla.com. Archived from the original on 3 March 2020. Retrieved 3 March 2020.

- ^ "Is Multi-GPU Dead?". Tech Altar. 7 January 2018. Archived from the original on 27 March 2020. Retrieved 3 March 2020.

- ^ "Nvidia SLI and AMD CrossFire is dead – but should we mourn multi-GPU gaming? | TechRadar". www.techradar.com. 24 August 2019. Archived from the original on 3 March 2020. Retrieved 3 March 2020.

- ^ "Hardware Selection and Configuration Guide" (PDF). documents.blackmagicdesign.com. Archived (PDF) from the original on 11 November 2020. Retrieved 10 November 2020.

- ^ "Recommended System: Recommended Systems for DaVinci Resolve". Puget Systems. Archived from the original on 3 March 2020. Retrieved 3 March 2020.

- ^ "GPU Accelerated Rendering and Hardware Encoding". helpx.adobe.com. Archived from the original on 3 March 2020. Retrieved 3 March 2020.

- ^ "V-Ray Next Multi-GPU Performance Scaling". Puget Systems. 20 August 2019. Archived from the original on 3 March 2020. Retrieved 3 March 2020.

- ^ "FAQ | GPU-accelerated 3D rendering software | Redshift". www.redshift3d.com. Archived from the original on 11 April 2020. Retrieved 3 March 2020.

- ^ "OctaneRender 2020 Preview is here!". Archived from the original on 7 March 2020. Retrieved 3 March 2020.

- ^ Williams, Rob. "Exploring Performance With Autodesk's Arnold Renderer GPU Beta – Techgage". techgage.com. Archived from the original on 3 March 2020. Retrieved 3 March 2020.

- ^ "GPU Rendering — Blender Manual". docs.blender.org. Archived from the original on 16 April 2020. Retrieved 3 March 2020.

- ^ "V-Ray for Nuke – Ray Traced Rendering for Compositors | Chaos Group". www.chaosgroup.com. Archived from the original on 3 March 2020. Retrieved 3 March 2020.

- ^ "System Requirements | Nuke | Foundry". www.foundry.com. Archived from the original on 1 August 2020. Retrieved 3 March 2020.

- ^ "What about multi-GPU support?". Archived from the original on 18 January 2021. Retrieved 10 November 2020.

- ^ a b "Graphics Card Market Up Sequentially in Q3, NVIDIA Gains as AMD Slips". Archived from the original on 28 November 2013. Retrieved 30 November 2013.

- ^ a b "Add-in board-market down in Q2, AMD gains market share [Press Release]". Jon Peddie Research. 16 August 2013. Archived from the original on 3 December 2013. Retrieved 30 November 2013.

- ^ a b Chen, Monica (16 April 2013). "Palit, PC Partner surpass Asustek in graphics card market share". DIGITIMES. Archived from the original on 7 September 2013. Retrieved 1 December 2013.

- ^ a b Shilov, Anton. "Discrete Desktop GPU Market Trends Q2 2016: AMD Grabs Market Share, But NVIDIA Remains on Top". Anandtech. Archived from the original on 23 January 2018. Retrieved 22 January 2018.

- ^ Chanthadavong, Aimee. "Nvidia touts GPU processing as the future of big data". ZDNet. Archived from the original on 20 January 2018. Retrieved 22 January 2018.

- ^ "Here's why you can't buy a high-end graphics card at Best Buy". Ars Technica. Archived from the original on 21 January 2018. Retrieved 22 January 2018.

- ^ "GPU Prices Skyrocket, Breaking the Entire DIY PC Market". ExtremeTech. 19 January 2018. Archived from the original on 20 January 2018. Retrieved 22 January 2018.

- ^ "How Graphics Card shortage is killing PC Gaming". MarketWatch. Archived from the original on 1 September 2021. Retrieved 1 September 2021.

- ^ "NVIDIA TITAN RTX is Here". NVIDIA. Archived from the original on 8 November 2019. Retrieved 7 November 2019.

- ^ "Refresh rate recommended". Archived from the original on 2 January 2007. Retrieved 17 February 2007.

- ^ "HDMI FAQ". HDMI.org. Archived from the original on 22 February 2018. Retrieved 9 July 2007.

- ^ "DisplayPort Technical Overview" (PDF). VESA.org. 10 January 2011. Archived (PDF) from the original on 12 November 2020. Retrieved 23 January 2012.

- ^ "FAQ Archive – DisplayPort". VESA. Archived from the original on 24 November 2020. Retrieved 22 August 2012.

- ^ "The Truth About DisplayPort vs. HDMI". dell.com. Archived from the original on 1 March 2014. Retrieved 13 March 2013.

- ^ "I/O Ports - USB Type-C|Graphics Cards|ASUS Global". ASUS Global. Retrieved 10 October 2025.

- ^ "Legacy Products | Matrox Video". video.matrox.com. Retrieved 9 November 2023.

- ^ "Video Signals and Connectors". Apple. Archived from the original on 26 March 2018. Retrieved 29 January 2016.

- ^ a b Keith Jack (2007). Video demystified: a handbook for the digital engineer. Newnes. ISBN 9780750678223.

- ^ "ATI Radeon 7 pin SVID/OUT connector pinout diagram @ pinoutguide.com". pinoutguide.com. Retrieved 9 November 2023.

- ^ Pinouts.Ru (2017). "ATI Radeon 8-pin audio / video VID IN connector pinout".

- ^ "How to Connect Component Video to a VGA Projector". AZCentral. Retrieved 29 January 2016.

- ^ "Quality Difference Between Component vs. HDMI". Extreme Tech. Archived from the original on 4 February 2016. Retrieved 29 January 2016.

- ^ PCIe 2.1 has the same clock and bandwidth as PCIe 2.0

Sources

- Mueller, Scott (2005). Upgrading and Repairing PCs. 16th edition. Que Publishing. ISBN 0-7897-3173-8

Types

Discrete Graphics Cards

A discrete graphics card is a standalone hardware accelerator built on its own printed circuit board (PCB). The board carries a dedicated graphics processing unit (GPU), its own video random-access memory (VRAM), and specialized power-delivery components, which together enable high-performance rendering for demanding visual and computational workloads.[5][6] Unlike integrated solutions, these cards operate independently of the central processing unit (CPU). They offload complex tasks such as 3D modeling, ray tracing, and parallel computing, which makes those tasks faster and more efficient.[7] The dedicated architecture gives the GPU its own memory bandwidth and isolates its memory from the rest of the system, so discrete cards are essential for applications that require real-time visual fidelity.[8]

The main advantage of discrete graphics cards is much higher computational power, which can exceed integrated options by orders of magnitude in graphics-intensive scenarios. They also support more elaborate cooling, such as multi-fan or liquid-cooled designs, to manage their thermal output.[9][10] Their modular design makes them easy to upgrade: users can improve graphics performance without replacing the CPU, motherboard, or other components, which extends the lifespan of a PC build.[7] These benefits come at the cost of higher power consumption and more physical space.[5]

Discrete graphics cards excel in workloads that demand intensive graphics processing. Examples include high-end gaming at 4K with ray tracing, video-editing workstations performing real-time 8K rendering and effects, and AI training systems that use the cards' parallel compute capabilities to develop machine-learning models.[11][8] Representative examples as of 2025 include NVIDIA's GeForce RTX 50 series and AMD's Radeon RX 9000 series. The RTX 5090 delivers over 100 teraflops of FP32 compute for gaming and content creation, and the RX 9070 XT offers 16 GB of GDDR6 memory for high-fidelity visuals.[12][13] By contrast, integrated graphics processors are a lower-power alternative suited to basic display output and light tasks.[5]

To install a discrete graphics card, the user typically inserts it into a compatible PCIe x16 slot on the motherboard and secures its bracket with screws. If the card needs more power than the slot provides (75 W), supplemental power cables from the power supply unit must also be connected.[14] After the hardware is installed, users must download and install the manufacturer's drivers, such as NVIDIA's GeForce Game Ready drivers or AMD Software: Adrenalin Edition. The drivers provide full feature support and operating-system compatibility on Windows, Linux, or other platforms.[15] Correct driver configuration is important for performance and for enabling features such as direct memory access between the card and the rest of the system.[16]

Integrated Graphics Processors

Integrated graphics processors (iGPUs) are graphics processing units built into the central processing unit (CPU) die or, in older systems, into the motherboard chipset. They provide display output without a separate graphics card.[6] Prominent examples include Intel's UHD Graphics, found in Core processors, and AMD's Radeon Graphics, integrated into Ryzen APUs based on the Vega or RDNA architectures.[17] These solutions are designed for general-purpose computing and provide the rendering needed for the operating system, video playback, and basic applications.[2]

The main advantages of iGPUs are low cost and energy efficiency. Because no extra hardware is needed, both system cost and power consumption are lower, which particularly benefits laptops and budget desktops.[6] Close integration with the CPU also allows faster data sharing and simpler thermal management, which makes compact mobile designs possible.[18] Their limitations are a reliance on shared system RAM, which can become a bottleneck during intensive tasks, and lower computational power than discrete GPUs for complex rendering.[2]

iGPUs date to the late 1990s. Chipsets such as Intel's 810 platform, released in 1999, integrated basic 2D acceleration and rudimentary 3D support for entry-level visuals. By the early 2010s, on-die integration had advanced significantly: AMD's Llano APUs and Intel's Sandy Bridge processors, both released in 2011, marked the shift to unified CPU-GPU designs for better efficiency.[19] As of 2025, iGPUs support 4K video decoding, hardware-accelerated encoding, and light gaming. Examples include the Arc-branded iGPUs in Intel's Core Ultra processors, such as Lunar Lake, which use the Xe architecture for ray tracing and AI upscaling.[17] Contemporary iGPUs can deliver playable frame rates at 1080p, typically 30-60 FPS in titles such as Forza Horizon 5 at low to medium settings. They still fall short of discrete GPUs in demanding 3D workloads at high resolutions or with complex effects.[20]

Historical Development

Early Innovations

On the IBM PC, the development of graphics cards began in 1981 with the IBM Color Graphics Adapter (CGA), the platform's first color graphics standard. CGA displayed 4 colors at a time from a 16-color palette at 320x200 pixels, or 2 colors at 640x200.[21] It used a frame buffer, a dedicated memory area that stores pixel data for the display, to produce basic raster graphics and move the PC beyond text-only displays.[22] In 1982 the Hercules Graphics Card appeared as a third-party alternative. It offered high-resolution monochrome graphics at 720x348 pixels while staying compatible with IBM's Monochrome Display Adapter (MDA), which suited professional applications that needed sharp text and simple graphics but not color.[23] Drawing images on these cards depended on scan conversion, which turns vector or outline data into raster images stored in the frame buffer for display on cathode-ray tube (CRT) monitors.[22]

The rise of PC gaming and computer-aided design (CAD) software in the 1980s and 1990s drove demand for better graphics. Games such as King's Quest (1984) and CAD tools such as AutoCAD benefited from greater color depth and resolution.[24] By the mid-1990s this demand had produced multimedia accelerators such as the S3 ViRGE (Virtual Reality Graphics Engine), released in 1995. It was one of the first consumer chips to combine 2D acceleration, basic 3D rendering, and video playback support, and it had a 64-bit memory interface.[25] The same year, Microsoft released DirectX 1.0, an application programming interface (API) that gave developers standardized access to hardware acceleration under Windows. DirectX eased the move from software rendering to hardware-assisted graphics.[26]

Breakthroughs in 3D acceleration defined the late 1990s. 3dfx's Voodoo Graphics, launched in November 1996, was a dedicated 3D-only accelerator that took polygon rendering and texture mapping off the CPU. It greatly improved frame rates in games such as Quake and in titles written for its proprietary Glide API.[27] NVIDIA's RIVA 128, released in 1997, combined high-performance 2D and 3D acceleration on a single chip with a 128-bit memory bus. It handled resolutions up to 1024x768 and supported Direct3D, which widened its appeal for both gaming and professional visualization.[28] These innovations paved the way for larger pools of video RAM, faster scan conversion of real-time 3D scenes, and the PC's emergence as a platform for graphics-intensive applications.[22]

Modern Evolution

The modern era of graphics cards began in the early 2000s, when architectures became programmable and moved beyond fixed-function rendering pipelines. NVIDIA's GeForce 3, released in 2001, introduced the first consumer-level programmable vertex and pixel shaders. Developers could now write custom shading effects for more realistic visuals in games and applications, including dynamic lighting and texture manipulation that fixed pipelines could not achieve.[29]

By the mid-2000s the industry had moved to unified shader architectures, in which a single pool of processors handles vertex, pixel, and geometry work interchangeably. This improved efficiency and scalability. NVIDIA introduced the approach on the PC with the G80 architecture in the GeForce 8800 series, launched in 2006 with DirectX 10 support. In October 2006, AMD acquired ATI Technologies for $5.4 billion, which brought ATI's graphics expertise and the Radeon lineup under AMD. AMD followed in 2007 with its TeraScale architecture in the Radeon HD 2000 series, a similar unified design aimed at high-definition gaming.[29][30]

From the late 2000s into the 2010s, development focused on compute capabilities and memory to support new workloads such as general-purpose GPU (GPGPU) computing. NVIDIA introduced CUDA in 2006 alongside the G80, making it possible to program GPUs for non-graphics tasks such as scientific simulations. The Khronos Group's OpenCL standard, released in 2009, added cross-vendor support that let AMD and others use GPUs for heterogeneous computing. Hardware tessellation units arrived with DirectX 11-compatible GPUs in 2009-2010 and subdivided polygons on the fly to produce detailed surfaces in real time. Early implementations included AMD's Evergreen (Radeon HD 5000 series) and NVIDIA's Fermi (GeForce GTX 400 series). Video memory also advanced, from GDDR5, which dominated the early 2010s, to GDDR6 by 2018, with up to 50% higher bandwidth for 4K gaming and VR applications.

From 2018 onward, dedicated ray-tracing and AI hardware turned graphics cards into hybrid compute engines. NVIDIA's GeForce RTX 20 series, launched in September 2018, added RT cores for real-time ray tracing, which simulates how light interacts with a scene. It also added tensor cores for AI-accelerated upscaling through Deep Learning Super Sampling (DLSS). AMD followed in 2020 with its RDNA 2 architecture in the Radeon RX 6000 series, which added ray accelerators for hardware ray tracing. DLSS reached version 4 by 2025, adding multi-frame generation and improved super resolution powered by fifth-generation tensor cores. NVIDIA claims up to 8x performance gains in ray-traced games on RTX 50 series GPUs.

Interface bandwidth grew as well. PCIe 4.0, which doubles the bandwidth of PCIe 3.0, reached consumer graphics cards with AMD's Radeon RX 5700 series in 2019. Intel's Alder Lake platform introduced PCIe 5.0 slots in 2021, and consumer GPUs began supporting PCIe 5.0 with NVIDIA's GeForce RTX 50 series in 2025, although few workloads yet need the extra bandwidth.[31][32] The cryptocurrency mining boom of 2017 to 2022, driven by Ethereum's proof-of-work demands, strained GPU supplies. During this period manufacturers emphasized energy-efficient designs, using 7 nm and smaller processes to lower power per transistor. By 2025, NVIDIA held roughly 90% of the AI GPU market on the strength of its Hopper and Blackwell architectures, which are designed for machine-learning workloads.[33]

Physical Design

Form Factors and Dimensions

Graphics cards come in several form factors to fit different PC chassis. The main dimensions are slot occupancy, length, height, and thickness. Single-slot designs occupy one expansion slot and are usually compact, with a single fan or passive cooling, which suits slim or office builds. Dual-slot cards are the most common type for mid-range and gaming use. They span two slots and have room for larger heatsinks with two or three fans for better thermal performance. High-end models often extend to three or four slots to house massive coolers for demanding workloads.[34]

These form factors fit standard ATX motherboards, which provide multiple PCIe slots. Low-profile cards, limited to about 69 mm in height, fit small form factor (SFF) PCs and often use half-height brackets. In multi-GPU setups such as legacy SLI configurations, special brackets align the cards and keep them spaced apart so they do not interfere with each other. Lengths vary widely: mid-range cards measure about 250-320 mm, the 2025 NVIDIA GeForce RTX 5090 Founders Edition is 304 mm long, and some partner models exceed 350 mm to fit larger cooling arrays.[35][36][37]

Large cards suffer from GPU sag, in which the weight of a heavy cooler (often more than 1 kg on high-end designs) bends the card and can stress the PCIe slot over time. The problem became common in the 2010s, as thicker heatsinks and denser components made cards heavier. The usual fix is an adjustable support bracket that props the card from below, spreading the weight and protecting the PCIe connector without blocking airflow. These brackets, often made of aluminum or acrylic, attach to the case frame and have been widely used since the mid-2010s for cards over 300 mm long.[38]

A typical mid-range card such as the NVIDIA GeForce RTX 5070 measures about 242 mm long, 112 mm high, and 40 mm thick (dual-slot), so the case needs at least 250 mm of clearance in the GPU area. Larger high-end cards can restrict airflow inside the chassis, because long coolers may block nearby fans or radiators. Cards over 300 mm long often need a mid-tower or full-tower ATX case with good ventilation.[39][36]

Form factors also differ by device type. Some laptops use the Mobile PCI Express Module (MXM) standard, with modules measuring 82 x 70 mm (Type A) or 82 x 105 mm (Type B), which allows upgradable graphics in a compact chassis. In servers, modular platforms such as NVIDIA's MGX allow flexible GPU integration into rackmount systems, with up to eight cards per system. These designs prioritize fit and modularity and handle heat through built-in cooling structures.[40]

Cooling Systems

Graphics cards generate significant heat because the GPU and other components draw a lot of power, so effective cooling is needed to maintain performance and longevity.[41] Cooling systems fall into three main categories: passive, air, and liquid. Passive cooling relies on natural convection and radiation with no moving parts. It is typically used on low-power integrated or entry-level discrete cards with a thermal design power (TDP) below 75 W, and allows silent, fanless operation.[42] Air cooling is the most common approach for discrete cards. It uses finned heatsinks, heat pipes, and fans, and it dominates consumer GPUs because it balances cost and effectiveness. Liquid cooling, in all-in-one (AIO) loops or custom setups, pumps coolant through a block on the GPU die and then through a radiator with fans. Its superior heat transfer makes it well suited to cards with TDPs above 300 W.[43][44]

Key components include the following:
- Heat pipes, which carry heat from the GPU die to the fins by evaporating and condensing a working fluid.
- Vapor chambers, flat heat pipes that spread heat evenly over a larger area.
- Thermal pads, which are electrically insulating but conduct heat away from memory chips and other components.
- Copper baseplates, used in 2025 models for direct contact with the die and high thermal conductivity.

For example, NVIDIA's Blackwell-based GeForce RTX 5090 uses an advanced vapor chamber and multiple heat pipes designed for high thermal loads, which cool more efficiently than earlier designs.[45][46][41]

Under load, junction temperatures can reach 90°C on NVIDIA GPUs and 110°C on AMD GPUs. Above these limits the GPU throttles, lowering its clock speeds to prevent damage. This is most common on cards with TDPs over 110 W. Blower-style coolers use a single radial fan to push hot air straight out of the case. They suit multi-GPU setups because they do not recirculate heat, but they are louder. Open-air designs with multiple axial fans are quieter and can cool 10-15°C better in well-ventilated cases, though they release heat into the case and raise the temperature inside it.[47][48][49]

Several other techniques help. Undervolting lowers the GPU's voltage to cut power consumption and heat by up to 20% without losing performance, which helps the card hold its boost clocks. Fans may include RGB lighting for looks without compromising airflow, and 2025 designs use fluid dynamic bearings for durability. With such systems, the GeForce RTX 5090 keeps core temperatures around 70°C under sustained load, which minimizes throttling.[50][51][52]

Power Requirements

Graphics cards vary widely in power consumption, usually specified as thermal design power (TDP). TDP is the maximum heat output under typical loads and so reflects the electrical power the card draws. Entry-level discrete cards may have a TDP of 75 W or less, which the PCIe slot alone can supply and which is enough for basic tasks and light gaming. High-end models in 2025, such as the NVIDIA GeForce RTX 5090, reach 575 W to support demanding workloads like 4K ray-traced gaming and AI acceleration.[52][53]

Cards that need more than the 75 W supplied by the PCIe slot use auxiliary power connectors. The 6-pin PCIe connector supplies up to 75 W and was common on mid-range cards of earlier generations. The 8-pin version supplies up to 150 W. The 16-pin 12VHPWR (12 Volt High Power) connector, introduced in 2022 as part of the PCIe 5.0 specifications, delivers up to 600 W through a single cable. Flagship cards like the RTX 5090 use its revised successor, the 12V-2x6, or the equivalent of four 8-pin cables through an adapter. The 12V-2x6 is now standard on high-power NVIDIA GPUs, including the RTX 50 series. Its improved sense pins make a badly seated plug easier to detect and reduce the melting risk seen with the original 12VHPWR.[54][55][56][57]

A high-TDP graphics card needs a robust power supply unit (PSU). For GPUs that consume 450-600 W, a PSU of 1000 W or more is recommended.[58] NVIDIA recommends at least a 1000 W PSU for systems with the RTX 5090, and more for overclocked GPUs or systems with high-end CPUs.
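The connector ratings above add up: a card can draw the slot's 75 W plus the rating of each auxiliary connector it uses. The sketch below checks a card's rated power against that budget, under several assumptions. The wattages are the nominal maximums quoted in this section, the 600 W figure for the 12V-2x6 assumes its sense pins signal the highest power level, and the constant and function names are hypothetical. It also ignores transient spikes, which is one reason PSUs are sized with extra headroom.

```python
# Nominal maximum power (watts) per source, as quoted in the text.
PCIE_SLOT_W = 75
# The 12V-2x6 value assumes the sense pins advertise the 600 W level.
CONNECTOR_W = {"6-pin": 75, "8-pin": 150, "12V-2x6": 600}


def available_power_w(connectors: list[str]) -> int:
    """Slot power plus the rating of every auxiliary connector on the card."""
    return PCIE_SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


def connectors_sufficient(board_power_w: int, connectors: list[str]) -> bool:
    """True if the slot and connectors together can supply the card's rated power."""
    return board_power_w <= available_power_w(connectors)


if __name__ == "__main__":
    # A 575 W card such as the RTX 5090, fed natively or through a four-way 8-pin adapter.
    print(connectors_sufficient(575, ["12V-2x6"]))    # True: 75 + 600 = 675 W
    print(connectors_sufficient(575, ["8-pin"] * 4))  # True: 75 + 4 * 150 = 675 W
    print(connectors_sufficient(575, ["8-pin"] * 2))  # False: 75 + 2 * 150 = 375 W
```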
A PSU rated well above the system's combined draw has headroom for stability and can absorb transient power spikes. It also runs near its most efficient range (roughly 50-70% load) and leaves capacity for power-hungry CPUs alongside the GPU.[59] Almost all of this power ends up as heat, which the cooling system must remove.[52][58]

Modern graphics cards boost dynamically, so power draw can spike briefly during peak loads as clock speeds rise. NVIDIA's GPU Boost technology monitors power and thermal limits and lowers clocks when they are exceeded, but brief surges can still approach or exceed the TDP. Users can tune these limits with tools such as NVIDIA-SMI, which can set a custom power limit to trade performance for efficiency, or with third-party applications such as MSI Afterburner for finer control.[60][61][62]

Safety is a major concern with high-power connectors like 12VHPWR, where overcurrent protection helps prevent damage from faults. However, incidents after 2022 showed that the connector could melt if it was not fully seated or was sharply bent. Poor cable management can leave pins only partly in contact, causing localized overheating. Manufacturers now stress secure installation and native cables rather than adapters, and the revised 12V-2x6 connector's improved sense pins make a badly seated plug easier to detect.[63][64][65]

Core Components

Graphics Processing Unit

The graphics processing unit (GPU) is the computational heart of a graphics card, specialized for the parallel processing that rendering complex visuals requires. Modern GPUs use highly parallel architectures with thousands of small processing cores that work on large workloads at the same time. In NVIDIA architectures such as Blackwell, which reached consumer cards in 2025, the basic building block is the streaming multiprocessor (SM). Each SM contains multiple CUDA cores for floating-point operations, along with dedicated units for specialized tasks.[66] AMD's RDNA 4 architecture, used in the 2025 Radeon RX 9000 series, is organized around compute units (CUs). Each CU contains 64 stream processors, and high-end models such as the RX 9070 XT have up to 64 CUs.[67] These architectures process vertices, fragments, and pixels in parallel and far outperform general-purpose CPUs in graphics-intensive applications.

A key development since 2018 has been dedicated ray-tracing hardware, first introduced by NVIDIA in the Turing architecture to speed up real-time ray tracing for realistic lighting, shadows, and reflections.[68] These RT cores perform the expensive bounding volume hierarchy (BVH) traversals and ray-triangle intersection tests. This frees the shader cores and makes hybrid rendering possible, combining traditional rasterization with ray-traced effects.
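Headline FP32 figures for these parallel architectures are usually peak theoretical values. They are calculated as shader cores × floating-point operations per core per clock × clock speed, where a fused multiply-add counts as two operations. Below is a minimal sketch with an illustrative function name. The RTX 5090 inputs (21,760 CUDA cores at a 2.41 GHz boost clock) are NVIDIA's published specifications. Some vendors count dual-issue instructions, as AMD does for recent RDNA generations, which raises the operations-per-clock factor. Quoted numbers are therefore not always directly comparable, and real workloads sustain less than the peak.

```python
def peak_fp32_tflops(shader_cores: int, boost_clock_ghz: float, flops_per_core_per_clock: int = 2) -> float:
    """Peak theoretical FP32 throughput in TFLOPS.

    flops_per_core_per_clock defaults to 2 because one fused multiply-add
    (a * b + c) is counted as two floating-point operations.
    """
    gflops = shader_cores * flops_per_core_per_clock * boost_clock_ghz
    return gflops / 1000  # GFLOPS -> TFLOPS


if __name__ == "__main__":
    # Published RTX 5090 specifications: 21,760 CUDA cores at a 2.41 GHz boost clock.
    print(f"{peak_fp32_tflops(21_760, 2.41):.1f} TFLOPS")  # 104.9 TFLOPS
```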
Among 2025 flagships, NVIDIA's GeForce RTX 5090 has more than 21,000 CUDA cores, and AMD's high-end cards have over 4,000 stream processors across their CUs. Boost clocks typically fall between 2.0 and 3.0 GHz, balancing performance against heat.[69][70] At this scale, the fastest 2025 GPUs deliver over 100 TFLOPS of FP32 compute and mid-range models around 30 TFLOPS, enough for smooth 4K rendering in gaming and professional visualization.[71][72]

The graphics pipeline in a GPU includes several stages:
- Rasterization, which converts 3D primitives into 2D fragments.
- Texturing, which applies surface detail.
- Pixel shading, which computes the final color and effects for each pixel.

Before 2001, these stages ran on fixed-function hardware that could only perform operations predefined by the manufacturer. Programmable pipelines arrived in 2001 with NVIDIA's GeForce 3 and ATI's Radeon 8500. Their vertex and pixel shaders let developers write custom code for these stages, turning GPUs into flexible programmable processors.[73][74] By 2025, pipelines are fully programmable and support advanced techniques such as variable-rate shading, which saves work by shading some regions of the image at a coarser rate than others.

Current GPUs are built on advanced semiconductor processes. NVIDIA's Blackwell GPUs use TSMC's custom 4N node, and AMD's RDNA 4 GPUs use a TSMC 4 nm-class process. Both allow denser transistor integration and higher efficiency. Die sizes for 2025 GPUs range from roughly 350 to 750 mm², large enough for bigger core arrays and specialized hardware while keeping power density manageable. AMD's Navi 48 die, for instance, measures approximately 357 mm² and scales efficiently across market segments.
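Of the pipeline stages described above, rasterization is the easiest to show on its own. The sketch below uses the edge-function test, a common textbook formulation: a pixel is covered when its center lies on the inner side of all three triangle edges. It assumes the vertices have already been projected to 2D screen coordinates and checks one pixel at a time. GPU hardware instead evaluates many pixels in parallel and applies a tie-breaking fill rule, such as the top-left rule, so that pixels on an edge shared by two triangles are drawn only once. This simplified version includes every boundary pixel. The function names are illustrative.

```python
Point = tuple[float, float]


def edge(a: Point, b: Point, p: Point) -> float:
    """Twice the signed area of triangle (a, b, p); its sign says which side of edge a->b p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(v0: Point, v1: Point, v2: Point, width: int, height: int) -> list[tuple[int, int]]:
    """Return the (x, y) pixels of a width x height grid whose centers the triangle covers."""
    area = edge(v0, v1, v2)
    if area == 0:
        return []  # degenerate triangle: zero area, no fragments
    fragments = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Inside (or on an edge) when every edge function agrees in sign with the
            # triangle's area, which makes the test independent of vertex winding order.
            if all(w * area >= 0 for w in (w0, w1, w2)):
                fragments.append((x, y))
    return fragments


if __name__ == "__main__":
    covered = rasterize((0, 0), (4, 0), (0, 4), width=4, height=4)
    print(len(covered))  # 10 pixels: those with x + y <= 3
```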
Each GPU die also integrates memory controllers that link it to high-bandwidth video memory. These controllers keep the processing cores supplied with data and minimize bottlenecks in memory-intensive rendering tasks.[75]

Video Memory

Video memory, commonly called VRAM, is the dedicated random-access memory (RAM) on a graphics card that stores graphical data for fast access during rendering.[76] It acts as a high-speed buffer separate from the system's main RAM, letting the graphics processing unit (GPU) work on large datasets without slower transfers from system memory. This separation matters in graphics-intensive tasks, where keeping data local to the GPU reduces latency and improves throughput.[77]

Modern graphics cards use two main families of video memory: GDDR (Graphics Double Data Rate) variants and HBM (High Bandwidth Memory). GDDR6X was introduced in 2020 by Micron in collaboration with NVIDIA for the GeForce RTX 30 series. It uses PAM4 signaling to reach higher data rates than standard GDDR6, such as 21 Gbps per pin on the GeForce RTX 4090.[78][79] HBM3 was standardized by JEDEC in 2022 and first deployed in high-end GPUs such as NVIDIA's H100. It stacks DRAM dies connected by through-silicon vias (TSVs) to deliver very high bandwidth for compute-focused applications.[80][81] By 2025, capacities range from 8 GB in mid-range consumer cards to 48 GB or more in professional models, such as the AMD Radeon Pro W7900 with 48 GB of GDDR6.[82] High-end configurations such as NVIDIA's RTX 4090, with 24 GB of GDDR6X, support demanding workloads including 4K gaming and AI training.[83]

Bandwidth is a key performance metric for video memory. It is determined by the memory type, its per-pin data rate, and the bus width.
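Peak memory bandwidth follows directly from the bus width and the per-pin data rate. The sketch below (function name hypothetical) computes it. With a 384-bit bus and 21 Gbps GDDR6X, the configuration of the RTX 4090, it reproduces that card's quoted 1,008 GB/s.

```python
def memory_bandwidth_gb_per_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 1e9 bytes).

    Every data pin on the bus moves data_rate_gbps_per_pin gigabits per second;
    dividing the bus width by 8 converts the total from bits to bytes.
    """
    return bus_width_bits / 8 * data_rate_gbps_per_pin

print(memory_bandwidth_gb_per_s(384, 21.0))  # 1008.0 (RTX 4090 configuration)
print(memory_bandwidth_gb_per_s(256, 20.0))  # 640.0 for a hypothetical 256-bit, 20 Gbps card
```

This is a theoretical peak. Achieved bandwidth depends on access patterns and the memory controller's scheduling.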
High-end cards often use a 384-bit memory bus, enabling bandwidth above 700 GB/s; the RTX 4090, for instance, reaches 1,008 GB/s with GDDR6X at 21 Gbps.[83] Professional cards frequently add Error-Correcting Code (ECC) support to their GDDR memory to detect and correct data corruption. This is essential for reliability in scientific simulations and data centers, as in AMD's Radeon Pro series.[82]

VRAM plays a pivotal role in rendering by storing textures, frame buffers, and Z-buffers.[84] Textures, which define surface detail, can consume substantial VRAM because of their high resolutions and mipmap chains.[85] Frame buffers hold the rendered pixels for each frame. Z-buffers store per-pixel depth so that nearer surfaces correctly hide farther ones, and the same depth data lets the GPU skip shading occluded pixels, reducing overdraw. When VRAM is exhausted, data must be moved to and from system RAM over the PCIe bus, which degrades performance. In games this shows up as stuttering, as frame times spike with the added latency.[86]

The memory controller, integrated into the GPU die, manages data flow between the VRAM modules and the processing cores. It handles addressing, error correction, and refresh cycles to optimize access patterns.[77] Users can overclock VRAM with software tools such as MSI Afterburner, which raises memory clocks beyond factory settings for potential bandwidth gains. Doing so risks instability without adequate cooling.[87]

Historically, graphics memory evolved from standard DDR SDRAM to specialized GDDR types with higher speeds and better efficiency, keeping pace with the growing parallel-processing demands of GPUs.[88] Recent trends favor stacked architectures such as HBM for AI and high-performance computing. There, massive parallelism requires terabytes per second of bandwidth to avoid bottlenecks when training large models.[89] By 2025, HBM3 and its successors serve data-center GPUs, while GDDR7 has reached consumer cards such as the GeForce RTX 50 series. Both continue the push for higher density and power efficiency.[90]

Firmware

The firmware of a graphics card, known as the Video BIOS (VBIOS), is low-level software stored in the card's non-volatile memory. It initializes the graphics processing unit (GPU) and associated hardware during system startup. The VBIOS runs before the operating system loads, configuring the GPU for basic operation and providing the data structures that the driver later takes over. On NVIDIA GPUs, the VBIOS includes the BIOS Information Table (BIT), a structured set of pointers to initialization scripts, performance parameters, and hardware-specific configuration that guide the boot process. AMD GPUs rely on comparable firmware structures for initial hardware bring-up.

The VBIOS is stored on the graphics card in an EEPROM (Electrically Erasable Programmable Read-Only Memory), typically a flash chip, so it can be reprogrammed while keeping its contents without power. During the system's Power-On Self-Test (POST), the VBIOS verifies GPU functionality and programs initial clock frequencies from phase-locked loop (PLL) tables. It also sets fan control curves based on temperature thresholds to prevent overheating. For power management, the VBIOS defines performance states (P-states), each with associated clock ranges and voltage levels; NVIDIA's P0, for example, is the maximum-performance state, and lower states favor efficiency. AMD's equivalents are PowerPlay tables that set engine and memory clocks at startup. The VBIOS also reads Extended Display Identification Data (EDID) from connected monitors over the Display Data Channel (DDC). This identifies display capabilities such as supported resolutions and refresh rates, so that output can be configured correctly.

Updating the VBIOS means flashing a new image with vendor tools, such as NVIDIA's nvflash or AMD's ATIFlash, sometimes wrapped in OEM software such as the ASUS VBIOS Flash Tool. Updates fix bugs, improve compatibility, or adjust limits.
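A flashing tool must make sure an image actually suits the card before writing it. The internal checks of tools such as nvflash are not described here. However, a GPU firmware image is laid out as a PCI expansion ROM, whose header format is defined by the PCI specification. The sketch below (function name hypothetical) reads that header: the 0x55AA signature and the "PCIR" data structure. That structure carries the vendor and device IDs a flasher could compare with the installed card, the image length, and the code type. The sketch also computes the zero-sum checksum expected of legacy x86 images.

```python
import struct

def parse_option_rom(image: bytes) -> dict:
    """Read the standard PCI expansion ROM header of a firmware image.

    Raises ValueError if the expected signatures are missing.
    """
    # Offset 0x00: signature 0x55 0xAA; offset 0x18: 16-bit pointer to the PCI Data Structure.
    if len(image) < 0x1A or image[0:2] != b"\x55\xaa":
        raise ValueError("missing 0x55AA expansion ROM signature")
    (pcir,) = struct.unpack_from("<H", image, 0x18)
    if len(image) < pcir + 0x18 or image[pcir:pcir + 4] != b"PCIR":
        raise ValueError("PCI Data Structure ('PCIR') not found")
    # PCI Data Structure fields: vendor ID at +4, device ID at +6, image length
    # in 512-byte units at +0x10, code type at +0x14, indicator byte at +0x15.
    vendor_id, device_id = struct.unpack_from("<HH", image, pcir + 4)
    (length_units,) = struct.unpack_from("<H", image, pcir + 0x10)
    image_len = length_units * 512
    code_type = image[pcir + 0x14]      # 0 = legacy x86, 3 = EFI
    # Legacy x86 images are expected to have all bytes sum to 0 modulo 256.
    checksum_ok = len(image) >= image_len and sum(image[:image_len]) % 256 == 0
    return {
        "vendor_id": vendor_id,
        "device_id": device_id,
        "image_len": image_len,
        "code_type": code_type,
        "last_image": bool(image[pcir + 0x15] & 0x80),  # bit 7 marks the final image
        "checksum_ok": checksum_ok,
    }

# Hypothetical usage on a dumped image file:
# with open("vbios.rom", "rb") as f:
#     print(parse_option_rom(f.read()))
```

A real image may contain several concatenated ROM images, for example a legacy one followed by a UEFI one. A fuller checker would walk them using each image's length until it reaches the one marked as last.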
However, flashing carries significant risks. A power interruption or an incompatible image can corrupt the stored firmware and brick the card, leaving it non-functional until it is recovered with an external programmer. Following vulnerabilities disclosed in the 2010s that exposed firmware to tampering, modern implementations add digital signing and Secure Boot support. NVIDIA GPUs, for example, use a hardware root of trust to verify signatures on firmware images, preventing unauthorized modifications. They also integrate with UEFI to extend the chain of trust during boot.

OEMs tailor VBIOS variants to specific platforms. Desktop versions are tuned for higher power delivery and cooling headroom. Laptop versions use stricter thermal profiles, reduced power states, and hybrid-graphics integration to suit mobile constraints such as battery life and shared chassis cooling. These differences ensure compatibility but make cross-platform flashing risky. After initialization, the VBIOS hands control of the GPU to the operating system's driver, which then enables advanced runtime features.

Display Output Hardware

Display output hardware comprises the chips and circuits that convert the GPU's digital video signals into formats suitable for transmission to displays. These components handle the final stages of signal preparation, ensuring compatibility with various output standards while preserving image integrity. Historically this hardware included analog conversion, but contemporary designs are digital and built to support high-resolution, multi-display setups.

The Random Access Memory Digital-to-Analog Converter (RAMDAC) was a core element of early display output hardware. It translated digital pixel data from video RAM into analog voltage levels for CRT and early LCD monitors. Using a programmable color lookup table held in RAM, the RAMDAC generated analog signals for the red, green, and blue channels. High-end implementations of the 2000s reached clock speeds of 400 MHz, supporting resolutions up to 2048×1536 at 75 Hz. The RAMDAC was essential for VGA and the analog portion of early DVI-I outputs, which were kept for legacy compatibility.[91][92]

As digital interfaces spread, RAMDACs became largely obsolete in consumer graphics cards during the 2010s. Fully digital pipelines that need no analog conversion replaced them. The transition was driven by standards such as DVI-D and HDMI, which carry uncompressed video digitally and so avoid the gradual signal degradation of analog links. Modern GPUs retain analog support, if at all, only for niche VGA ports driven by low-speed integrated DACs; primary outputs rely on digital encoders.[93]

For digital outputs, Transition-Minimized Differential Signaling (TMDS) encoders and HDMI transmitters form the backbone of signal processing. They serialize parallel RGB data onto high-speed differential pairs while minimizing electromagnetic interference.
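TMDS encodes each 8-bit color value into a 10-bit symbol in two stages. The first stage chains the bits with XOR or XNOR, whichever produces fewer transitions. The second stage optionally inverts the result to keep the line DC-balanced. The Python sketch below follows the data-period rules published in the DVI 1.0 specification, encoding one channel's value per call. The function names are hypothetical. Control tokens, HDMI data-island (TERC4) coding, and the serializer itself are omitted.

```python
def tmds_encode(byte: int, disparity: int) -> tuple[int, int]:
    """Encode one 8-bit value as a 10-bit TMDS symbol.

    `disparity` is the running count of ones minus zeros sent so far on this
    channel; the updated count is returned along with the symbol.
    """
    d = [(byte >> i) & 1 for i in range(8)]
    # Stage 1: transition minimization. Chain the bits with XOR or XNOR;
    # bit 8 records which was used (1 = XOR, 0 = XNOR).
    n_ones = sum(d)
    use_xnor = n_ones > 4 or (n_ones == 4 and d[0] == 0)
    q_m = [d[0]]
    for i in range(1, 8):
        bit = q_m[i - 1] ^ d[i]
        q_m.append(1 - bit if use_xnor else bit)
    q_m.append(0 if use_xnor else 1)

    # Stage 2: DC balance. Optionally invert bits 0-7 (flagged by bit 9)
    # to steer the running disparity back toward zero.
    ones = sum(q_m[:8])
    zeros = 8 - ones
    if disparity == 0 or ones == zeros:
        invert = q_m[8] == 0
        disparity += (zeros - ones) if invert else (ones - zeros)
    elif (disparity > 0 and ones > zeros) or (disparity < 0 and zeros > ones):
        invert = True
        disparity += 2 * q_m[8] + (zeros - ones)
    else:
        invert = False
        disparity += -2 * (1 - q_m[8]) + (ones - zeros)
    low = [1 - b for b in q_m[:8]] if invert else q_m[:8]
    symbol_bits = low + [q_m[8], 1 if invert else 0]
    return sum(b << i for i, b in enumerate(symbol_bits)), disparity


def tmds_decode(symbol: int) -> int:
    """Invert tmds_encode for a data symbol (control tokens are not handled)."""
    bits = [(symbol >> i) & 1 for i in range(10)]
    q = [1 - b for b in bits[:8]] if bits[9] else bits[:8]  # undo DC-balance inversion
    d = [q[0]]
    for i in range(1, 8):
        bit = q[i] ^ q[i - 1]
        d.append(bit if bits[8] else 1 - bit)  # bit 8 set means XOR chaining was used
    return sum(b << i for i, b in enumerate(d))
```

For example, encoding `0x00` twice in a row yields two different symbols. The second is the inverted form, chosen to cancel the negative disparity left by the first.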
These encoders use a transition-minimizing 8b/10b code, distinct from the 8b/10b code used by PCIe 1.x/2.x and DisplayPort. Each 24-bit pixel (8 bits per channel) becomes three 10-bit symbols, 30 bits in total, serialized at 10 times the pixel clock. HDMI 1.3 and later extend this to deep color, such as 36 bits per pixel at 1080p and 60 Hz. Integrated within the GPU's display engine, the encoders drive the separate TMDS clock channel and keep each data channel DC-balanced for reliable transmission over DVI and HDMI ports.[94]

Content protection is built into these digital encoders through High-bandwidth Digital Content Protection (HDCP). HDCP encrypts video before TMDS encoding to prevent unauthorized copying of premium audiovisual material. HDCP 1.x uses a proprietary stream cipher, while HDCP 2.x uses AES-128 in counter mode. The graphics card (transmitter) and the display (receiver) authenticate each other to establish a session key, exchanging messages over the Display Data Channel (DDC) on HDMI links. The resulting key stream is then XORed with the pixel data carried on the three TMDS channels. This protection is required for 4K and 8K premium content delivery, and the link is re-authenticated when integrity checks detect a failure.[95]

Multi-monitor configurations rely on the display output hardware's ability to drive multiple independent streams. DisplayPort's Multi-Stream Transport (MST) enables daisy-chaining on 2025-era cards such as NVIDIA's GeForce RTX 50 series, with up to 4 native displays extending to 6-8 total through chained hubs. The hardware allocates link bandwidth across streams, supporting simultaneous 4K outputs while synchronizing timings to prevent tearing.
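Whether several MST streams fit on one DisplayPort link comes down to a bandwidth budget. The link's usable payload must cover the sum of each stream's pixel clock × bits per pixel. The sketch below checks that budget under loudly stated assumptions. The function names are hypothetical. The link is assumed to be four DisplayPort 1.4 HBR3 lanes at 8.1 Gbit/s each with 8b/10b coding. Each 4K stream is assumed to use a 533.25 MHz reduced-blanking pixel clock at 24 bits per pixel. MST packet overhead and Display Stream Compression are ignored.

```python
def dp_payload_gbps(lane_rate_gbps: float, lanes: int = 4, efficiency: float = 0.8) -> float:
    """Usable DisplayPort link bandwidth; 0.8 reflects 8b/10b line coding."""
    return lane_rate_gbps * lanes * efficiency

def stream_gbps(pixel_clock_mhz: float, bits_per_pixel: int = 24) -> float:
    """Bandwidth in Gbit/s needed by one uncompressed video stream."""
    return pixel_clock_mhz * bits_per_pixel / 1000.0

def streams_fit(pixel_clocks_mhz: list[float], link_gbps: float,
                bits_per_pixel: int = 24) -> bool:
    """Whether all streams fit on one link, ignoring MST packet overhead."""
    needed = sum(stream_gbps(pc, bits_per_pixel) for pc in pixel_clocks_mhz)
    return needed <= link_gbps

hbr3_link = dp_payload_gbps(8.1)   # about 25.92 Gbit/s on four HBR3 lanes
uhd60 = 533.25                     # assumed pixel clock (MHz): 3840x2160 at 60 Hz, reduced blanking
print(streams_fit([uhd60] * 2, hbr3_link))  # True: two 4K60 streams fit
print(streams_fit([uhd60] * 3, hbr3_link))  # False: a third does not
```

Faster links such as those of DisplayPort 2.x, or compressed streams, change only the inputs. The budgeting logic stays the same.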
This scalability is vital for professional workflows, where the GPU's display controller generates multiple output signals in parallel without taxing the core rendering units.[37]

Display scalers within the output hardware perform real-time resolution scaling and format adaptation. They interpolate lower-resolution content to a panel's native resolution, for example upscaling 1080p to 4K with bilinear or Lanczos filtering. They also convert color spaces, such as RGB to YCbCr, for transmission over bandwidth-limited links. The conversion applies a matrix transformation that separates luminance (Y) from chrominance (Cb, Cr). The chroma channels can then be subsampled with little perceptible loss in quality; 4:2:2, for example, halves the horizontal chroma resolution. Hardware acceleration keeps this processing low-latency, and it is often integrated with the TMDS encoder so that video playback and gaming pass through a single pipeline.[96][97]

Connectivity

Host Bus Interfaces

Host bus interfaces connect graphics cards to the motherboard, carrying data between the GPU and the CPU, system memory, and other components. These interfaces have evolved to meet the growing bandwidth demands of graphics processing and computational workloads. Early standards such as PCI and AGP laid the foundation for dedicated graphics acceleration, while PCIe now dominates because of its scalability and performance.[98]

The Peripheral Component Interconnect (PCI) bus was introduced by Intel in June 1992 and is managed by the PCI Special Interest Group (PCI-SIG). It served as the initial standard for graphics cards, providing a shared 32-bit bus at 33 MHz with up to 133 MB/s of bandwidth.[98][99] PCI let graphics adapters use general-purpose expansion slots, but its bandwidth limited 3D rendering. To address this, Intel developed the Accelerated Graphics Port (AGP) in 1996 as a dedicated interface for video cards, specifically targeting 3D acceleration. AGP gave the card a point-to-point link with direct access to main memory. It offered 266 MB/s (1x) and 533 MB/s (2x) in AGP 1.0, rising to 1.07 GB/s (4x) in AGP 2.0.[100][101] AGP improved latency and texture access compared to PCI and remained the standard for consumer graphics cards through the early 2000s.[101]

The PCI Express (PCIe) interface, introduced by PCI-SIG with version 1.0 in 2003, replaced both AGP and PCI. It uses serial lanes for higher throughput and full-duplex communication. Each subsequent version has roughly doubled the usable bandwidth per lane while maintaining backward compatibility. PCIe 2.0 (2007) reached 5 GT/s, PCIe 3.0 (2010) 8 GT/s, PCIe 4.0 (2017) 16 GT/s, and PCIe 5.0 (2019 specification, with updates through 2022) 32 GT/s. PCIe 3.0 achieved its doubling with only a 1.6× rate increase by replacing 8b/10b encoding with the more efficient 128b/130b scheme.
Graphics cards typically use x16 links. In PCIe 5.0 these provide about 64 GB/s in each direction, sufficient for high-resolution gaming and AI workloads.[102][103][104]

| PCIe Version | Release Year | Data Rate per Lane (GT/s) | x16 Bandwidth (GB/s, bidirectional) |
|---|---|---|---|
| 1.0 | 2003 | 2.5 | ~8 |
| 2.0 | 2007 | 5.0 | ~16 |
| 3.0 | 2010 | 8.0 | ~32 |
| 4.0 | 2017 | 16.0 | ~64 |
| 5.0 | 2019 | 32.0 | ~128 |

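The bandwidth figures for each PCIe generation follow from the per-lane transfer rate, the line-code efficiency, and the lane count. The sketch below (names hypothetical) reproduces the approximate values in the table. It ignores packet (TLP) and protocol overhead, so real transfer rates are somewhat lower.

```python
# (transfer rate per lane in GT/s, line-code efficiency) for each PCIe generation
PCIE_GENERATIONS = {
    "1.0": (2.5, 8 / 10),      # 8b/10b encoding
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
    "4.0": (16.0, 128 / 130),
    "5.0": (32.0, 128 / 130),
}

def pcie_gb_per_s(version: str, lanes: int = 16) -> float:
    """Usable bandwidth in GB/s in one direction, before packet overhead."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[version]
    # Each transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gt_s * efficiency * lanes / 8

for version in PCIE_GENERATIONS:
    one_way = pcie_gb_per_s(version)
    print(f"PCIe {version} x16: {one_way:.1f} GB/s per direction, "
          f"{2 * one_way:.1f} GB/s combined")
```

The PCIe 3.0 entry shows why the generation still doubled usable bandwidth despite a 1.6× rate increase. Switching to 128b/130b encoding raised efficiency from 80% to about 98.5%.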
Display Interfaces