
Musical acoustics

Musical acoustics or music acoustics is a multidisciplinary field that combines knowledge from physics,[1][2][3] psychophysics,[4] organology[5] (classification of the instruments), physiology,[6] music theory,[7] ethnomusicology,[8] signal processing and instrument building,[9] among other disciplines. As a branch of acoustics, it is concerned with researching and describing the physics of music: how sounds are employed to make music. Examples of areas of study are the function of musical instruments, the human voice (the physics of speech and singing), computer analysis of melody, and the clinical use of music in music therapy.

The pioneer of music acoustics was Hermann von Helmholtz, a German polymath of the 19th century who was an influential physician, physicist, physiologist, musician, mathematician and philosopher. His book On the Sensations of Tone as a Physiological Basis for the Theory of Music[7] is a revolutionary compendium of several studies and approaches that provided a completely new perspective on music theory, musical performance, music psychology and the physical behaviour of musical instruments.

Methods and fields of study

- The physics of musical instruments

- Frequency range of music

- Fourier analysis

- Computer analysis of musical structure

- Synthesis of musical sounds

- Music cognition, based on physics (also known as psychoacoustics)

Physical aspects


Whenever two different pitches are played at the same time, their sound waves interact with each other – the highs and lows in the air pressure reinforce each other to produce a different sound wave. Any repeating sound wave that is not a sine wave can be modeled by many different sine waves of the appropriate frequencies and amplitudes (a frequency spectrum). In humans the hearing apparatus (composed of the ears and brain) can usually isolate these tones and hear them distinctly. When two or more tones are played at once, a variation of air pressure at the ear "contains" the pitches of each, and the ear and/or brain isolate and decode them into distinct tones.

When the original sound sources are perfectly periodic, the note consists of several related sine waves (which mathematically add to each other) called the fundamental and the harmonics, partials, or overtones. The sounds have harmonic frequency spectra. The lowest frequency present is the fundamental, and is the frequency at which the entire wave vibrates. The overtones vibrate faster than the fundamental, but must vibrate at integer multiples of the fundamental frequency for the total wave to be exactly the same each cycle. Real instruments are close to periodic, but the frequencies of the overtones are slightly imperfect, so the shape of the wave changes slightly over time.[citation needed]

Subjective aspects

Variations in air pressure against the ear drum, and the subsequent physical and neurological processing and interpretation, give rise to the subjective experience called sound. Most sound that people recognize as musical is dominated by periodic or regular vibrations rather than non-periodic ones; that is, musical sounds typically have a definite pitch. The transmission of these variations through air is via a sound wave. In a very simple case, the sound of a sine wave, which is considered the most basic model of a sound waveform, causes the air pressure to increase and decrease in a regular fashion, and is heard as a very pure tone. Pure tones can be produced by tuning forks or whistling. The rate at which the air pressure oscillates is the frequency of the tone, which is measured in oscillations per second, called hertz. Frequency is the primary determinant of the perceived pitch. Frequency of musical instruments can change with altitude due to changes in air pressure.

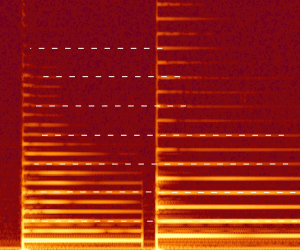

Pitch ranges of musical instruments


*This chart only displays down to C0, though some pipe organs, such as the Boardwalk Hall Auditorium Organ, extend down to C−1 (one octave below C0). Also, the fundamental frequency of the subcontrabass tuba is B♭−1.

Harmonics, partials, and overtones


The fundamental is the frequency at which the entire wave vibrates. Overtones are other sinusoidal components present at frequencies above the fundamental. All of the frequency components that make up the total waveform, including the fundamental and the overtones, are called partials. Together they form the harmonic series.

Overtones that are perfect integer multiples of the fundamental are called harmonics. When an overtone is near to being harmonic, but not exact, it is sometimes called a harmonic partial, although they are often referred to simply as harmonics. Sometimes overtones are created that are not anywhere near a harmonic, and are just called partials or inharmonic overtones.

The fundamental frequency is considered the first harmonic and the first partial. The numbering of the partials and harmonics is then usually the same; the second partial is the second harmonic, etc. But if there are inharmonic partials, the numbering no longer coincides. Overtones are numbered as they appear above the fundamental. So strictly speaking, the first overtone is the second partial (and usually the second harmonic). As this can result in confusion, only harmonics are usually referred to by their numbers, and overtones and partials are described by their relationships to those harmonics.

Harmonics and non-linearities


When a periodic wave is composed of a fundamental and only odd harmonics (f, 3 f, 5 f, 7 f, ...), the summed wave is half-wave symmetric; it can be inverted and phase shifted and be exactly the same. If the wave has any even harmonics (2 f, 4 f, 6 f, ...), it is asymmetrical; the top half of the plotted wave form does not mirror image the bottom.
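
As a rough numerical illustration of the symmetry described above, the following Python sketch sums a few sine harmonics and checks whether shifting the wave by half a period inverts it, that is, whether x(t + T/2) = −x(t). The function names, the 1/n amplitude weighting, and the 100 Hz fundamental are illustrative assumptions rather than anything prescribed by the text.

```python
import math


def harmonic_sum(t, f0, harmonics):
    """Sum of sine harmonics of f0 (each weighted 1/n) at time t in seconds."""
    return sum(math.sin(2 * math.pi * n * f0 * t) / n for n in harmonics)


def is_half_wave_symmetric(f0, harmonics, samples=200, tol=1e-9):
    """Return True if x(t + T/2) == -x(t) at evenly spaced points of one period."""
    period = 1.0 / f0
    for i in range(samples):
        t = i * period / samples
        shifted = harmonic_sum(t + period / 2, f0, harmonics)
        if abs(shifted + harmonic_sum(t, f0, harmonics)) > tol:
            return False
    return True


# Fundamental plus odd harmonics only: inverted copy after half a period.
odd_only = is_half_wave_symmetric(100.0, [1, 3, 5, 7])

# Adding the second harmonic breaks the half-wave symmetry.
with_even = is_half_wave_symmetric(100.0, [1, 2, 3])

print(odd_only, with_even)
```

Under these assumptions `odd_only` is `True` and `with_even` is `False`, because each harmonic n picks up a factor of (−1)^n when shifted by half a period.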

Conversely, a system that changes the shape of the wave (beyond simple scaling or shifting) creates additional harmonics (harmonic distortion). This is called a non-linear system. If it affects the wave symmetrically, the harmonics produced are all odd. If it affects the wave asymmetrically, at least one even harmonic is produced (and probably also odd harmonics).
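
The link between the symmetry of a distortion and the harmonics it adds can be sketched numerically. The example below passes one period of a sine wave through two hypothetical transfer curves (a symmetric `tanh` curve and an asymmetric quadratic curve, both chosen only for illustration) and measures individual harmonics with a single-bin discrete Fourier transform written in plain Python.

```python
import math

N = 256  # samples in exactly one period (assumed resolution)


def harmonic_amplitude(samples, k):
    """Amplitude of the k-th harmonic of one exactly sampled period."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


sine = [math.sin(2 * math.pi * i / N) for i in range(N)]

# Symmetric distortion: an odd function, f(-x) = -f(x).
symmetric = [math.tanh(3 * x) for x in sine]

# Asymmetric distortion: positive and negative halves are treated differently.
asymmetric = [x + 0.5 * x * x for x in sine]

sym_h2 = harmonic_amplitude(symmetric, 2)    # even harmonic, essentially zero
sym_h3 = harmonic_amplitude(symmetric, 3)    # odd harmonic, clearly present
asym_h2 = harmonic_amplitude(asymmetric, 2)  # even harmonic appears

print(sym_h2, sym_h3, asym_h2)
```

For the quadratic curve the second harmonic has amplitude 0.25, which follows from sin²θ = (1 − cos 2θ)/2; the symmetric curve produces no measurable second harmonic but a clear third.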

Harmony

If two notes are simultaneously played, with frequency ratios that are simple fractions (e.g. 2/1, 3/2, or 5/4), the composite wave is still periodic, with a short period, and the combination sounds consonant. For instance, a note vibrating at 200 Hz and a note vibrating at 300 Hz (a perfect fifth, or 3/2 ratio, above 200 Hz) add together to make a wave that repeats at 100 Hz: every 1/100 of a second, the 300 Hz wave repeats three times and the 200 Hz wave repeats twice. Note that the combined wave repeats at 100 Hz, even though there is no actual 100 Hz sinusoidal component contributed by an individual sound source.
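
The repetition rate in this example is the greatest common divisor of the two frequencies. A minimal Python sketch, assuming ideal periodic tones with integer frequencies in hertz (the function name is hypothetical):

```python
from fractions import Fraction
from math import gcd


def repetition_rate_hz(f1_hz, f2_hz):
    """Rate at which the sum of two periodic tones (integer Hz) repeats."""
    return gcd(f1_hz, f2_hz)


rate = repetition_rate_hz(200, 300)   # 100 Hz
ratio = Fraction(300, 200)            # reduces to 3/2, a perfect fifth
cycles_low = 200 // rate              # 200 Hz tone: 2 cycles per repetition
cycles_high = 300 // rate             # 300 Hz tone: 3 cycles per repetition

print(rate, ratio, cycles_low, cycles_high)
```

The simpler the reduced ratio, the higher the common repetition rate relative to the two notes, which is the "short period" the paragraph refers to.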

Additionally, two notes from acoustic instruments have overtones, many of which share the same frequency. For instance, a note with its fundamental at 200 Hz can have harmonic overtones at: 400, 600, 800, 1000, 1200, 1400, 1600, 1800, ... Hz. A note with a fundamental frequency of 300 Hz can have overtones at: 600, 900, 1200, 1500, 1800, ... Hz. The two notes share harmonics at 600, 1200, and 1800 Hz, and at more frequencies further along in each series.
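
The shared harmonics listed above can be reproduced with a short Python sketch. It assumes ideal, exactly harmonic overtones and an arbitrary 2000 Hz cut-off; the function names are illustrative.

```python
def harmonics(fundamental_hz, limit_hz):
    """Ideal harmonic series of a note (fundamental included) up to limit_hz."""
    return list(range(fundamental_hz, limit_hz + 1, fundamental_hz))


def shared_harmonics(f1_hz, f2_hz, limit_hz):
    """Frequencies that appear in both harmonic series."""
    common = set(harmonics(f1_hz, limit_hz)) & set(harmonics(f2_hz, limit_hz))
    return sorted(common)


shared = shared_harmonics(200, 300, 2000)
print(shared)  # [600, 1200, 1800]
```

The coincidences fall at multiples of 600 Hz, the least common multiple of the two fundamentals.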

Although the mechanism of human hearing that accomplishes it is still incompletely understood, practical musical observations for nearly 2000 years[10] suggest that the combination of composite waves with short fundamental periods and shared or closely related partials is what causes the sensation of harmony. When two frequencies are near to a simple fraction, but not exact, the composite wave cycles slowly enough to hear the cancellation of the waves as a steady pulsing instead of a tone. This is called beating, and is considered unpleasant, or dissonant.

The frequency of beating is calculated as the difference between the frequencies of the two notes. When two notes are close in pitch they beat slowly enough that a human can measure the frequency difference by ear, with a stopwatch; beat timing is how tuning pianos, harps, and harpsichords to complicated temperaments was managed before affordable tuning meters.

- For the example above, |200 Hz − 300 Hz| = 100 Hz.

- As another example from modulation theory, a combination of 3425 Hz and 3426 Hz would beat once per second, since |3425 Hz − 3426 Hz| = 1 Hz.
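
The beat calculation in the examples above can be sketched in Python as follows. The sketch also checks the trigonometric identity behind beating, sin a + sin b = 2 cos((a − b)/2) sin((a + b)/2), in which the slowly varying cosine factor acts as the loudness envelope. All function names are illustrative, and the "15 beats in 10 seconds" figure is a made-up tuning scenario.

```python
import math


def beat_frequency_hz(f1_hz, f2_hz):
    """Beat rate of two tones: the absolute difference of their frequencies."""
    return abs(f1_hz - f2_hz)


def two_tone(t, f1_hz, f2_hz):
    """Sum of two equal-amplitude sine tones at time t (seconds)."""
    return math.sin(2 * math.pi * f1_hz * t) + math.sin(2 * math.pi * f2_hz * t)


def envelope_form(t, f1_hz, f2_hz):
    """Same signal written as a slow envelope times a fast carrier."""
    envelope = 2 * math.cos(math.pi * (f1_hz - f2_hz) * t)
    carrier = math.sin(math.pi * (f1_hz + f2_hz) * t)
    return envelope * carrier


def offset_from_counted_beats(beats_counted, seconds):
    """Frequency difference (Hz) inferred from beats counted with a stopwatch."""
    return beats_counted / seconds


print(beat_frequency_hz(3425, 3426))      # 1
print(beat_frequency_hz(200, 300))        # 100
print(offset_from_counted_beats(15, 10))  # 1.5
```

The envelope magnitude |2 cos(π Δf t)| peaks Δf times per second, which is why the beat rate equals the frequency difference rather than half of it.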

The difference between consonance and dissonance is not clearly defined, but the higher the beat frequency, the more likely the interval is dissonant. Helmholtz proposed that maximum dissonance would arise between two pure tones when the beat rate is roughly 35 Hz.[11]

Scales

The material of a musical composition is usually taken from a collection of pitches known as a scale. Because most people cannot adequately determine absolute frequencies, the identity of a scale lies in the ratios of frequencies between its tones (known as intervals).

The diatonic scale appears in writing throughout history, consisting of seven tones in each octave. In just intonation the diatonic scale may be easily constructed using the three simplest intervals within the octave, the perfect fifth (3/2), perfect fourth (4/3), and the major third (5/4). As forms of the fifth and third are naturally present in the overtone series of harmonic resonators, this is a very simple process.

The following table shows the ratios between the frequencies of all the notes of the just major scale and the fixed frequency of the first note of the scale.

| C | D | E | F | G | A | B | C |
|---|---|---|---|---|---|---|---|
| 1 | 9/8 | 5/4 | 4/3 | 3/2 | 5/3 | 15/8 | 2 |
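
The ratios in the table can be turned into concrete frequencies once a tonic is chosen. The Python sketch below assumes a tonic of 264 Hz (picked only because it places A at 440 Hz) and also derives the step sizes between adjacent notes; the names `JUST_MAJOR`, `just_major_frequencies`, and `adjacent_steps` are illustrative.

```python
from fractions import Fraction

# Ratios of each note to the first note of the just major scale (from the table).
JUST_MAJOR = {
    "C": Fraction(1),
    "D": Fraction(9, 8),
    "E": Fraction(5, 4),
    "F": Fraction(4, 3),
    "G": Fraction(3, 2),
    "A": Fraction(5, 3),
    "B": Fraction(15, 8),
    "C'": Fraction(2),
}


def just_major_frequencies(tonic_hz):
    """Frequencies in Hz of the just major scale built on tonic_hz."""
    return {name: float(ratio * Fraction(tonic_hz)) for name, ratio in JUST_MAJOR.items()}


def adjacent_steps():
    """Ratios between neighbouring scale degrees."""
    ratios = list(JUST_MAJOR.values())
    return [upper / lower for lower, upper in zip(ratios, ratios[1:])]


freqs = just_major_frequencies(264)  # assumed tonic
steps = adjacent_steps()

print(freqs)
print([str(s) for s in steps])  # ['9/8', '10/9', '16/15', '9/8', '10/9', '9/8', '16/15']
```

Only three step sizes occur: two kinds of whole tone (9/8 and 10/9) and one semitone (16/15).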

There are other scales available through just intonation, for example the minor scale. Scales that do not adhere to just intonation, and instead have their intervals adjusted to meet other needs are called temperaments, of which equal temperament is the most used. Temperaments, though they obscure the acoustical purity of just intervals, often have desirable properties, such as a closed circle of fifths.
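
The trade-off described above can be quantified in cents (1200 cents per octave). The following Python sketch compares twelve-tone equal temperament with the just fifth and major third, and shows why twelve just fifths do not close the circle of fifths while twelve tempered fifths do. The helper names are illustrative.

```python
import math
from fractions import Fraction


def cents(ratio):
    """Size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200 * math.log2(ratio)


def equal_tempered_ratio(semitones):
    """Frequency ratio of an interval in twelve-tone equal temperament."""
    return 2 ** (semitones / 12)


# Deviation of tempered intervals from their just counterparts, in cents.
fifth_error = cents(equal_tempered_ratio(7)) - cents(Fraction(3, 2))
third_error = cents(equal_tempered_ratio(4)) - cents(Fraction(5, 4))

# Twelve just fifths overshoot seven octaves by the Pythagorean comma.
pythagorean_comma = cents(Fraction(3, 2) ** 12 / 2 ** 7)

# Twelve tempered fifths land on seven octaves, so the circle closes.
tempered_gap = cents(equal_tempered_ratio(7) ** 12 / 2 ** 7)

print(round(fifth_error, 3), round(third_error, 3), round(pythagorean_comma, 2))
```

The tempered fifth is about 2 cents narrow and the tempered major third about 14 cents wide, while the roughly 23.5-cent gap left by pure fifths disappears.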

See also

- Acoustic resonance

- Cymatics

- Mathematics of musical scales

- String resonance

- Vibrating string

- 3rd bridge (harmonic resonance based on equal string divisions)

- Basic physics of the violin

References

- Benade, Arthur H. (1990). Fundamentals of Musical Acoustics. Dover Publications. ISBN 9780486264844.

- Fletcher, Neville H.; Rossing, Thomas (2008-05-23). The Physics of Musical Instruments. Springer Science & Business Media. ISBN 9780387983745.

- Campbell, Murray; Greated, Clive (1994-04-28). The Musician's Guide to Acoustics. OUP Oxford. ISBN 9780191591679.

- Roederer, Juan (2009). The Physics and Psychophysics of Music: An Introduction (4 ed.). New York: Springer-Verlag. ISBN 9780387094700.

- Henrique, Luís L. (2002). Acústica musical (in Portuguese). Fundação Calouste Gulbenkian. ISBN 9789723109870.

- Watson, Lanham, Alan H. D., ML (2009). The Biology of Musical Performance and Performance-Related Injury. Cambridge: Scarecrow Press. ISBN 9780810863590.

- Helmholtz, Hermann L. F.; Ellis, Alexander J. (1885). On the Sensations of Tone as a Physiological Basis for the Theory of Music by Hermann L. F. Helmholtz. doi:10.1017/CBO9780511701801. hdl:2027/mdp.39015000592603. ISBN 9781108001779. Retrieved 2019-11-04.

- Kartomi, Margareth (1990). On Concepts and Classifications of Musical Instruments. Chicago: University of Chicago Press. ISBN 9780226425498.

- Hopkin, Bart (1996). Musical Instrument Design: Practical Information for Instrument Design. See Sharp Press. ISBN 978-1884365089.

- Ptolemy, Gaius Claudius. Harmonikon Ἁρμονικόν [Harmonics]. c. 180 CE.

- "Roughness". music-cog.ohio-state.edu (course notes). Music 829B. Ohio State University.

External links

- Music acoustics - sound files, animations and illustrations - University of New South Wales

- Acoustics collection - descriptions, photos, and video clips of the apparatus for research in musical acoustics by Prof. Dayton Miller

- The Technical Committee on Musical Acoustics (TCMU) of the Acoustical Society of America (ASA)

- The Musical Acoustics Research Library (MARL)

- Acoustics Group/Acoustics and Music Technology courses - University of Edinburgh

- Acoustics Research Group - Open University

- The music acoustics group at Speech, Music and Hearing KTH

- The physics of harpsichord sound

- Visual music

- Savart Journal - The open access online journal of science and technology of stringed musical instruments

- Interference and Consonance from Physclips

- Curso de Acústica Musical (Spanish)

Musical acoustics

Fundamentals of Sound in Music

Properties of Sound Waves

Sound waves are longitudinal pressure disturbances that propagate through a medium, such as air, by means of alternating compressions and rarefactions of the medium's particles. In a compression, particles are displaced closer together, resulting in a local increase in pressure above the ambient level, while in a rarefaction, particles spread farther apart, causing a decrease in pressure. These oscillations transfer energy without net displacement of the medium itself, and the particle motion is parallel to the direction of propagation, which distinguishes sound from transverse waves like those on a string.[4][5]

The mathematical description of sound propagation follows the one-dimensional wave equation derived from fluid dynamics, $\frac{\partial^2 p}{\partial t^2} = v^2 \frac{\partial^2 p}{\partial x^2}$, where $p$ is the acoustic pressure perturbation, $t$ is time, $x$ is position, and $v$ is the speed of sound. A fundamental solution for a plane progressive wave gives the total pressure $P(x, t) = P_0 + A \cos(kx - \omega t)$, where $P_0$ is the equilibrium pressure, $A$ is the pressure amplitude, $k = 2\pi/\lambda$ is the wave number ($\lambda$ being the wavelength), and $\omega = 2\pi f$ is the angular frequency ($f$ being the frequency). This sinusoidal variation captures the periodic nature of simple tones, with the phase term $kx - \omega t$ indicating propagation in the positive $x$-direction at speed $v = \omega/k$.[6][7]

In dry air at 20°C, the speed of sound is approximately 343 m/s, determined by the medium's density and elasticity via $v = \sqrt{\gamma P_0 / \rho_0}$, where $\gamma$ is the adiabatic index (1.4 for air), $P_0$ is ambient pressure, and $\rho_0$ is equilibrium density. This speed increases with temperature at roughly 0.6 m/s per °C rise due to enhanced molecular motion, and humidity slightly elevates it by reducing air density, though the effect is minor (about 0.3–0.6 m/s increase for high humidity). These variations influence musical timing and intonation in performance environments, for example when warm stage lighting locally raises the air temperature.[8]

During propagation in musical settings, sound waves interact with boundaries and inhomogeneities through reflection, refraction, and absorption, shaping the auditory experience in venues like concert halls.
Reflection occurs when waves bounce off rigid surfaces, such as walls or ceilings, following the law of reflection (angle of incidence equals angle of reflection); a single delayed reflection arriving more than 0.1 seconds after the direct sound creates a distinct echo, while overlapping reflections blend into reverberation that enriches timbre but can blur articulation if excessive. Refraction bends wave paths in stratified media, often due to temperature or wind gradients in large halls, directing sound toward or away from audiences. Absorption dissipates energy when sound strikes porous materials like upholstery or curtains, quantified by absorption coefficients (e.g., 0.1–0.5 for typical hall furnishings), which designers balance to achieve optimal clarity and warmth without deadening the space.[9][10]

The Doppler effect alters the perceived frequency of sound from a moving source relative to a stationary observer, given by $f' = f \, \frac{v}{v - v_s}$, where $f$ is the emitted frequency, $v$ is the speed of sound, and $v_s$ is the source speed (positive if approaching). In musical contexts, this manifests as a raised pitch during approach and a lowered pitch during recession, as heard with moving performers or instruments in processional pieces or experimental orchestral works involving spatial motion to enhance swells and dynamic contrasts.[11][12]

Frequency, Pitch, and Timbre Basics

In musical acoustics, frequency refers to the number of complete cycles of a sound wave that occur per second, measured in hertz (Hz). It is defined as the reciprocal of the period $T$, so $f = 1/T$, where $T$ is the time for one cycle.[13] The frequency of a sound wave is inversely related to its wavelength $\lambda$ through the formula $\lambda = v/f$, where $v$ is the speed of sound in the medium, approximately 343 m/s in air at room temperature.[14] For example, the note A4 at 440 Hz has a wavelength of about 0.78 m in air.[15]

Pitch is the perceptual correlate of frequency, representing the subjective sensation of a sound's highness or lowness. It is primarily determined by the fundamental frequency of a periodic sound wave, though other factors like intensity and duration can influence it slightly.[16] Human perception of pitch follows a logarithmic scale, meaning that equal intervals in pitch correspond to multiplicative changes in frequency rather than additive ones. In musical contexts, this is evident in the octave, where the pitch rises by one octave each time the frequency doubles (e.g., $f_2 = 2 f_1$), such as from 261.63 Hz (middle C) to 523.25 Hz (the C above it).[17][13]

Amplitude, the maximum displacement of particles in a sound wave, relates to the wave's intensity, which is the power per unit area carried by the wave and proportional to the square of the amplitude ($I \propto A^2$). Intensity is often expressed in decibels (dB) using the formula $L = 10 \log_{10}(I / I_0)$, where $I_0 = 10^{-12}$ W/m² is the reference intensity corresponding to the threshold of human hearing.[18] Loudness, the subjective perception of intensity, is not linearly related to physical intensity but increases roughly logarithmically, with a 10 dB increase perceived as approximately twice as loud for many sounds.[16][19]

Timbre, often described as tone color, is the perceptual attribute that allows listeners to distinguish between sounds of the same pitch and loudness, such as a violin versus a flute playing the same note.
It arises from differences in the overall shape of the sound waveform, which determines how the wave's energy is distributed over time and across frequencies.[16][15]

The temporal evolution of a musical sound's amplitude is described by its envelope, commonly modeled using the attack-decay-sustain-release (ADSR) framework. In the ADSR model, the attack phase is the initial rise in amplitude from silence to peak; decay is the subsequent drop to a steady level; sustain maintains that level during the note's duration; and release is the fade to silence after the note ends. This envelope shape contributes to timbre by influencing the onset and decay characteristics that mimic natural instrument behaviors.[20][21]

Sound Production Mechanisms

Vibration and Resonance in Instruments

Musical instruments produce sound through vibrations of their components, which can be classified as free or forced. Free vibrations occur when an instrument is excited once, such as by plucking a string, allowing it to oscillate at its natural frequencies without ongoing external input until energy dissipates.[1] In contrast, forced vibrations are sustained by continuous external driving, like bowing a violin string or blowing into a flute, where the driving force matches the instrument's resonant frequencies to maintain oscillation.[1] Resonance amplifies these vibrations when the excitation frequency aligns with a natural frequency, leading to larger amplitudes and efficient energy transfer. The sharpness of the resonance peak is determined by the quality factor $Q$, with width $\Delta f = f_0 / Q$ for a resonance at frequency $f_0$.[1]

In string instruments, such as guitars or violins, sound arises from transverse standing waves formed along the string's length. These vibrations occur in modes where the string is fixed at both ends, creating nodes at the endpoints and antinodes in between, with the fundamental mode having one antinode and each higher mode adding one more node and antinode.[22] The natural frequencies are given by $f_n = \frac{n v}{2L} = \frac{n}{2L} \sqrt{\frac{T}{\mu}}$, where $n$ is the mode number, $v = \sqrt{T/\mu}$ is the wave speed, $L$ is the string length, $T$ is the tension, and $\mu$ is the linear mass density.[1] Increasing tension raises the frequency in proportion to its square root, while higher density or longer length lowers it, allowing musicians to adjust pitch by fretting or tuning.[22]

Wind instruments rely on resonance within air columns to generate and sustain tones.
For cylindrical pipes, standing waves form with frequencies depending on whether the pipe is open or closed; a closed pipe resonates at odd multiples of the fundamental, $f_n = \frac{n v}{4L}$ ($n$ odd), where $v$ is the speed of sound and $L$ is the effective length.[23] End corrections account for the non-ideal behavior at open ends, adding an effective length $\Delta L \approx 0.6a$, where $a$ is the radius, to yield $L_{\text{eff}} = L + \Delta L$ for more accurate frequency prediction.[1] In instruments like ocarinas or bottle resonators, the Helmholtz resonator model applies, with the fundamental frequency $f = \frac{v}{2\pi} \sqrt{\frac{A}{V l}}$, where $A$ is the neck cross-sectional area, $V$ is the cavity volume, and $l$ is the neck length (including end correction).[1] This configuration acts like a mass-spring system, with the air in the neck as the mass and the cavity air as the spring, enabling low-frequency resonance.[24]

Percussion instruments, including drums and cymbals, produce sound via vibrations of membranes or plates. Membrane vibrations, as in a drumhead under tension, form modes with nodal circles and diameters, where frequencies depend on tension $T$ and surface density $\sigma$, approximated by $f_{mn} = \frac{\alpha_{mn}}{2\pi a} \sqrt{\frac{T}{\sigma}}$, with $a$ as the membrane radius and $\alpha_{mn}$ as mode-specific constants.[1] These modes are often inharmonic, contributing to the characteristic timbre. Plate vibrations in instruments like bells or vibraphones involve flexural waves, with modal frequencies scaling with plate thickness $h$, material properties (Young's modulus $E$, density $\rho$, Poisson's ratio $\nu$), and dimensions, given by $f_{mn} = \frac{\pi}{2} \sqrt{\frac{D}{\rho h}} \left[ \left( \frac{m}{L_x} \right)^2 + \left( \frac{n}{L_y} \right)^2 \right]$ with bending stiffness $D = \frac{E h^3}{12 (1 - \nu^2)}$ for simply supported rectangular plates of side lengths $L_x$ and $L_y$.[1] Chladni patterns visualize these modes by sprinkling sand on a driven plate, where sand gathers along nodal lines (non-vibrating regions) at resonant frequencies, such as 174 Hz for a simple circular mode or higher values up to 2700 Hz for complex patterns, illustrating the two-dimensional standing wave structure.[25]

Excitation methods initiate and sustain these vibrations, with efficiency determined by how well energy transfers from the performer to the resonator.
Bowing in string instruments employs a stick-slip mechanism, where the bow hair grips and releases the string via friction, injecting energy at each cycle to counteract damping, achieving high efficiency through precise control of bow force and velocity.[1] Blowing in wind instruments drives the air column via nonlinear airflow, such as across an edge (flutes) or through reeds/lips (clarinets, trumpets), with energy transfer efficiency typically a few percent, enhanced by matching the player's embouchure to the instrument's impedance.[1] Striking, used in percussion and some keyboards, delivers impulsive energy via a mallet or hammer, where efficiency depends on contact duration and mass ratio; for example, a light hammer on a heavy string minimizes energy loss, allowing rapid vibration onset.[1] In all cases, structures like bridges or soundboards couple the vibrator to the air, optimizing radiation while minimizing losses.[1]

Acoustic Classification of Musical Instruments

The Hornbostel-Sachs system provides a foundational framework for classifying musical instruments based on the primary physical mechanism of sound production, emphasizing the vibrating agent that initiates the acoustic signal. Developed in 1914 by ethnomusicologists Erich Moritz von Hornbostel and Curt Sachs, this hierarchical scheme organizes instruments into five main categories: idiophones, membranophones, chordophones, aerophones, and electrophones (the latter added by Sachs in 1940 to account for emerging electronic technologies).[26][27] The system builds on earlier 19th-century classifications, such as Victor-Charles Mahillon's material-based grouping, but prioritizes the physics of vibration over construction materials to enable cross-cultural comparisons.[28]

Idiophones generate sound through the vibration of the instrument's solid body itself, relying on its elasticity without additional strings, membranes, or air columns. Examples include bells, where striking causes the metal to resonate, producing partials strongest near the striking point, and the xylophone, whose wooden bars vibrate in flexural modes to create pitched tones via nodal patterns that determine resonance frequencies. Membranophones produce sound from a stretched membrane, as in drums, where the membrane's tension and diameter control the fundamental frequency through transverse waves. Chordophones rely on vibrating strings stretched between fixed points, with examples like the guitar, where string motion couples to the body for sound radiation, involving wave reflections at the ends that shape the standing wave patterns. Aerophones involve vibrating air as the primary sound source, such as in flutes, where an air column resonates with end corrections and impedance matching at the mouthpiece to efficiently couple player breath to output sound. Electrophones, relevant to acoustic studies through hybrids like amplified string instruments, generate or modify sound electrically but often interface with acoustic elements, such as pickups on chordophones that capture string vibrations for amplification.[26][29]

Acoustic distinctions among these classes arise from differences in vibration propagation and energy transfer. In aerophones, sound production emphasizes impedance matching between the player's airstream and the instrument's bore to minimize reflection losses, contrasting with chordophones, where partial reflections at string bridges sustain standing waves but require body resonance for efficient radiation. Idiophones and membranophones typically exhibit direct radiation from the vibrating surface, with membranophones showing lower impedance due to the flexible membrane compared to the rigid bodies of idiophones. These differences influence overall acoustic behavior, such as wave types (longitudinal in aerophones versus transverse in strings and membranes), tying back to fundamental vibration principles.[30][31]

Historically, instrument classification evolved from ancient descriptions, like those of Greek theorists such as Aristoxenus, to systematic acoustic frameworks; the Hornbostel-Sachs system marked a shift by incorporating ethnographic data from global collections, evolving further with revisions like the 2011 CIMCIM update to refine subclasses for compound instruments such as bagpipes, which combine several reed pipes with a shared air reservoir. This progression parallels instrument development, from ancient reed aerophones like the Greek aulos to modern acoustic modeling in synthesizers, which digitally emulate physical vibrations of traditional classes for realistic sound synthesis.

Efficiency and radiation patterns vary by class: direct radiators, such as open strings in chordophones or struck bars in idiophones like the xylophone, project sound omnidirectionally with higher efficiency in higher modes, while enclosed radiators, like drumheads in membranophones or flared bells in aerophones, focus output directionally to enhance projection and reduce energy loss. For instance, guitar bodies in chordophones achieve radiation efficiencies around 1-10% of input mechanical power, depending on frequency, through coupled plate and air modes.[32][33]

Spectral Composition of Musical Sounds

Harmonics, Partials, and Overtones

In musical acoustics, harmonics refer to the frequency components of a sound that are integer multiples of the fundamental frequency, the lowest frequency produced by a vibrating source.[34] This occurs in ideal linear systems, such as a perfectly flexible string fixed at both ends, where vibrations produce standing waves at these discrete frequencies.[35] The harmonic series is thus defined by the frequencies $f_n = n f_1$, where $f_1$ is the fundamental frequency and $n = 1, 2, 3, \ldots$, resulting in a spectrum of evenly spaced lines that contribute to the pitched quality of the tone.[34]

Partials encompass all spectral lines in a sound's waveform, including both harmonics and any non-integer multiples known as inharmonic partials, which arise in more complex vibrations.[34] Overtones, by contrast, specifically denote the partials above the fundamental frequency, with the first overtone corresponding to the second harmonic ($2 f_1$) and subsequent overtones following the series.[34] In ideal harmonic cases, overtones align perfectly with the harmonic series, but real instruments often deviate slightly due to material properties.

Fourier analysis provides the mathematical foundation for understanding these components, stating that any periodic waveform can be decomposed into a sum of sinusoids at the fundamental frequency and its integer multiples.[36] The general form is $x(t) = \sum_{n=1}^{\infty} A_n \cos(2\pi n f_1 t + \phi_n)$, where $A_n$ and $\phi_n$ are the amplitude and phase of the nth harmonic, respectively.[36] This decomposition reveals how the relative strengths of harmonics shape the overall waveform, with the fundamental determining pitch and higher components adding complexity.
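
As a hedged illustration of the Fourier decomposition described above, the Python sketch below builds a waveform from a finite list of harmonic amplitudes and phases, written as a sum of cosines with a phase offset. The choice of a square-like wave (odd harmonics with amplitudes 4/(πn)), the 220 Hz fundamental, and the cut-off at the 99th harmonic are assumptions made only for the example.

```python
import math


def synthesize(t, f1, amplitudes, phases):
    """Evaluate a sum of harmonics A_n * cos(2*pi*n*f1*t + phi_n) at time t."""
    return sum(
        a * math.cos(2 * math.pi * n * f1 * t + p)
        for n, (a, p) in enumerate(zip(amplitudes, phases), start=1)
    )


F1 = 220.0       # assumed fundamental in Hz
HARMONICS = 99   # assumed number of harmonics kept in the partial sum

# Square-like wave: odd harmonics only, amplitude 4/(pi*n); a phase of -pi/2
# turns each cosine into a sine.
amplitudes = [4 / (math.pi * n) if n % 2 == 1 else 0.0 for n in range(1, HARMONICS + 1)]
phases = [-math.pi / 2] * HARMONICS

period = 1 / F1
quarter = synthesize(0.25 * period, F1, amplitudes, phases)    # near +1
three_q = synthesize(0.75 * period, F1, amplitudes, phases)    # near -1
repeat_error = abs(
    synthesize(0.0003 + period, F1, amplitudes, phases)
    - synthesize(0.0003, F1, amplitudes, phases)
)

print(round(quarter, 2), round(three_q, 2), repeat_error < 1e-9)
```

Changing only the `amplitudes` list changes the wave shape while the period, and therefore the pitch, stays at 1/`F1`.
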
In real musical instruments, inharmonicity introduces deviations from the ideal series, particularly in stiff strings like those in pianos, where bending stiffness raises higher partial frequencies.[37] The frequency of the nth partial is approximated by $f_n = n f_1 \sqrt{1 + B n^2}$, with $B$ as the inharmonicity coefficient, whose value differs between the bass and treble strings of a grand piano.[37] This effect stretches octaves and alters tone brightness, requiring tuning adjustments.

The distribution and strengths of harmonics, partials, and overtones fundamentally determine an instrument's tone quality by modifying the waveform's shape without changing the perceived pitch.[38] For instance, the clarinet, as a closed cylindrical pipe, produces a spectrum dominated by odd harmonics (1st, 3rd, 5th, etc.), with even harmonics weak or absent due to boundary conditions at the reed end, yielding its reedy timbre.[39]

Nonlinear Effects and Distortion

In musical acoustics, nonlinear effects arise when the response of a system to an input is not proportional, leading to deviations from the ideal harmonic spectra assumed in linear models. These effects introduce additional frequency components, such as subharmonics and combination tones, enriching the timbre of instruments while also contributing to distortion in amplified signals. Unlike the purely harmonic overtones in idealized vibrations, nonlinearities stem from physical interactions like fluid-structure coupling or geometric constraints, fundamentally shaping the realism of musical sounds.[40]

Nonlinearities manifest prominently in the excitation mechanisms of instruments. In bowed string instruments, such as the violin, the bow-string interaction involves stick-slip friction, producing a sawtooth-like waveform known as Helmholtz motion, where the string sticks to the bow during the upward motion and slips abruptly downward, generating a rich spectrum beyond simple harmonics.[40] Similarly, in reed woodwind instruments like the clarinet, reed beating occurs through self-sustained oscillations driven by fluid-structure interactions, often modeled via Hopf bifurcations at pressure thresholds around 900 Pa, resulting in amplitude-dependent frequency shifts and additional partials.[40] In brass instruments, lip vibrations introduce strong nonlinearities, where the player's lips act as a pressure-controlled valve, coupling with the instrument's resonance to produce complex spectra; nonlinear wave propagation in the bore at Mach numbers near 0.15 generates shock waves that enhance the "brassy" timbre, particularly at high amplitudes. These instrument-specific nonlinearities contrast with the linear harmonic baseline by adding inharmonic components that contribute to the instrument's characteristic sound color.[40]

A key outcome of nonlinear interactions is the generation of subharmonics and combination tones.
Subharmonics, frequencies that are integer fractions of the fundamental, emerge in self-oscillating systems like reeds or lips under certain amplitude conditions, altering the perceived pitch stability. Combination tones arise when multiple frequencies interact, producing new frequencies such as the difference tone; for two input tones at frequencies $f_1$ and $f_2$ (with $f_2 > f_1$), the Tartini tone appears at $f_2 - f_1$, first observed in violin double-stops and attributable to nonlinearities in both the instrument and the auditory system.[41] These effects are particularly evident in polyphonic playing, where they can create audible "ghost" notes that influence ensemble tuning.

Distortion types further illustrate nonlinear behaviors, especially in signal processing and amplification. Clipping occurs when the output signal exceeds the dynamic range of an amplifier, flattening waveform peaks and introducing odd harmonics that brighten the sound but can harshen it at high volumes; in valve amplifiers, this is modeled by smooth saturation functions. Intermodulation distortion (IMD) generates sum and difference frequencies from multiple inputs (for instance, inputs at 110 Hz and 165 Hz produce a 55 Hz difference tone and a 275 Hz sum tone) via polynomial expansions such as $y = a_1 x + a_2 x^2 + a_3 x^3 + \cdots$, leading to new partials that enrich electric guitar tones but cause muddiness in clean signals.[42]

Mathematical models approximate these phenomena using nonlinear wave equations. For stiff strings, such as those in pianos, the Duffing oscillator captures geometric nonlinearities through the equation $\ddot{x} + \omega_0^2 x + \beta x^3 = 0$, where $\beta$ represents cubic stiffness, causing amplitude-dependent upward frequency shifts and pitch glide, with the effective frequency rising by up to several percent for high-tension strings. This model extends to full string equations like the Kirchhoff-Carrier form, incorporating averaged nonlinear tension for realistic simulation of inharmonic overtones.
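The intermodulation example above (110 Hz and 165 Hz inputs) can be checked numerically. The following is a minimal sketch, not a model of any particular amplifier: it assumes a memoryless nonlinearity $y = x + a_2 x^2$ with an arbitrarily chosen coefficient `a2 = 0.5`, a hypothetical sample rate of 8000 Hz, and one second of signal so that every component falls on a whole number of cycles. The function names are illustrative.

```python
import cmath
import math


def tone_amplitude(signal, freq_hz, sample_rate):
    """Amplitude of the sinusoidal component at freq_hz (single-bin DFT)."""
    n = len(signal)
    acc = sum(
        s * cmath.exp(-2j * math.pi * freq_hz * k / sample_rate)
        for k, s in enumerate(signal)
    )
    return 2 * abs(acc) / n


def second_order_products(f1, f2, a2=0.5, sample_rate=8000):
    """Pass two unit cosines through y = x + a2*x**2 and probe key frequencies."""
    times = [k / sample_rate for k in range(sample_rate)]  # one second
    x = [math.cos(2 * math.pi * f1 * t) + math.cos(2 * math.pi * f2 * t)
         for t in times]
    y = [xi + a2 * xi * xi for xi in x]  # assumed quadratic nonlinearity
    probes = {
        "input_1": f1,
        "input_2": f2,
        "difference": abs(f2 - f1),
        "sum": f1 + f2,
    }
    return {name: tone_amplitude(y, f, sample_rate) for name, f in probes.items()}


products = second_order_products(110, 165)
for name, amplitude in products.items():
    print(f"{name}: {amplitude:.3f}")
```

Under these assumptions the quadratic term contributes components at 55 Hz and 275 Hz with amplitude equal to `a2`, while the original tones keep unit amplitude. A cubic term would add third-order products such as $2f_1 - f_2$ in the same way.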
The impacts of these nonlinear effects on musical sound are dual-edged: in acoustic instruments, they provide timbral richness, as seen in the vibrant spectra of brass through lip-driven shocks, enhancing expressivity without external processing. Conversely, in amplification, excessive distortion like IMD can degrade clarity, though controlled application, such as in rock music, creates desirable "overdrive" by emphasizing higher partials and simulating natural nonlinearities.[42] Overall, these effects underscore the departure from ideality, making musical acoustics a field where nonlinearity is essential for authenticity.[40]

Psychoacoustic Perception

Subjective Pitch and Interval Recognition

Subjective pitch perception bridges the physical property of sound frequency with human auditory psychology, enabling the recognition of musical notes and intervals. While frequency provides the objective basis for pitch, subjective perception involves complex neural processing in the cochlea and auditory pathways. Two foundational psychoacoustic models describe how pitch is encoded: place theory for higher frequencies and volley theory for lower ones.

Place theory, first articulated by Hermann von Helmholtz in 1863, proposes that pitch is determined by the specific location along the basilar membrane where vibrations reach maximum amplitude, due to the membrane's tonotopic organization with varying stiffness and mass from base to apex.[43] This spatial coding allows the auditory system to resolve frequencies above approximately 200 Hz, where individual nerve fibers respond selectively to particular places of excitation. Modern physiological evidence supports this through observed frequency tuning curves in cochlear hair cells.[44]

For lower frequencies, up to about 4–5 kHz, volley theory complements place theory by emphasizing temporal coding. Developed by Ernest Wever and Charles Bray in 1930, it suggests that groups of auditory nerve fibers fire action potentials in synchronized volleys, collectively preserving the stimulus periodicity even when individual fibers cannot follow every cycle.[45] This mechanism extends phase-locking beyond the refractory period limits of single neurons, explaining pitch sensitivity to low-frequency tones like those in bass instruments.

The precision of pitch perception is quantified by the just noticeable difference (JND), the smallest frequency change detectable as a pitch shift. For pure tones around 1000 Hz, the relative JND is approximately Δf/f ≈ 0.003 (about 3 Hz), equivalent to roughly 5 musical cents, though it increases at extreme frequencies (e.g., larger below 200 Hz or above 4 kHz).
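The conversion between a relative frequency change and musical cents follows from the definition of the cent as 1/1200 of an octave on a logarithmic scale. A short sketch, with illustrative function names, applied to the Δf/f ≈ 0.003 figure quoted for 1000 Hz:

```python
import math


def ratio_to_cents(ratio):
    """Interval size in cents for a frequency ratio (1200 cents per octave)."""
    return 1200 * math.log2(ratio)


def jnd_in_cents(weber_fraction):
    """Cents corresponding to a relative frequency change delta_f / f."""
    return ratio_to_cents(1 + weber_fraction)


jnd_hz = 0.003 * 1000            # absolute change at 1000 Hz, in Hz
jnd_cents = jnd_in_cents(0.003)  # the same change expressed in cents

print(f"{jnd_hz:.1f} Hz, {jnd_cents:.2f} cents")
```

Because the cent scale is logarithmic, the same Weber fraction maps to the same number of cents at any reference frequency, even though the change in hertz grows with frequency.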
The Weber-like JND fraction underscores the logarithmic scaling of pitch perception, aligning with musical intonation practices.

Interval recognition involves perceiving the ratio between two pitches, distinct from absolute pitch height. Octave equivalence, where tones separated by a 2:1 frequency ratio are treated as similar (e.g., middle C and the C above), underpins melodic and harmonic structures, as demonstrated by similarity ratings in tonal sequences.[46] Melodic intervals are recognized sequentially over time, relying on memory of relative spacing, while harmonic intervals occur simultaneously, often enhancing equivalence through shared spectral cues. This perception supports scale navigation in music, with octaves serving as perceptual anchors.

Virtual pitch extends interval and single-pitch recognition to complex tones lacking a physical fundamental. In such cases, the auditory system infers the missing fundamental from harmonic patterns; for example, a tone with partials at 800, 1000, and 1200 Hz evokes a 200 Hz pitch via the common periodicity of the waveform envelope. J.F. Schouten's residue theory (1940) formalized this, positing that the "residue" pitch arises from temporal interactions among unresolved higher harmonics, crucial for perceiving instrument fundamentals in noisy environments.[47]

Cultural and training factors modulate pitch and interval accuracy, beyond innate mechanisms.
Absolute pitch (AP) possessors, who identify isolated notes without reference (e.g., naming a 440 Hz tone as A4 instantly), exhibit enhanced precision but may struggle with relative interval tasks in tonal contexts due to over-reliance on absolute labels.[48] Prevalence of AP is substantially higher in East Asian musicians (e.g., 30–50% in Japanese music students) compared to Western musicians (around 1–10%), with general population rates near 0.01%; this disparity is linked to early tonal language exposure and intensive training before age 6, highlighting environmental influences on perceptual development.[49][50]

Consonance, Dissonance, and Harmony Perception

Consonance and dissonance refer to the perceptual qualities arising from the simultaneous presentation of multiple tones, where consonance evokes a sense of stability and pleasantness, while dissonance produces tension or roughness.[51] These perceptions stem from psychoacoustic interactions between the tones' frequencies and are fundamental to harmony in multi-note music. Early theories emphasized physiological mechanisms, evolving into more nuanced models incorporating auditory processing.

One foundational explanation for dissonance involves beating, where two nearby pure tones with frequencies $f_1$ and $f_2$ produce amplitude modulation at a rate of $|f_1 - f_2|$, creating a fluctuating intensity perceived as roughness when the beat rate falls within approximately 20–150 Hz, with maximum roughness around 70–120 Hz.[52][53] Hermann von Helmholtz, in his 1877 work On the Sensations of Tone, extended this to complex tones, proposing that dissonance arises from the interaction of their partials, with roughness maximized when partials are separated by small intervals like the major second (9/8 ratio) and minimized for octaves (2:1) or unisons (1:1), due to absent or slow beats.
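The beat rate quoted above follows from the sum-to-product identity $\sin A + \sin B = 2 \sin\tfrac{A+B}{2} \cos\tfrac{A-B}{2}$: two equal-amplitude tones behave like a carrier at the mean frequency whose envelope repeats $|f_1 - f_2|$ times per second. A minimal numerical sketch, assuming equal amplitudes and zero initial phase (the function names and the 440/444 Hz pair are illustrative):

```python
import math


def beat_rate(f1, f2):
    """Number of loudness fluctuations per second for two nearby pure tones."""
    return abs(f1 - f2)


def two_tone(f1, f2, t):
    """Direct superposition of two unit-amplitude sine tones at time t."""
    return math.sin(2 * math.pi * f1 * t) + math.sin(2 * math.pi * f2 * t)


def envelope_form(f1, f2, t):
    """Same signal written as a slow envelope times a carrier at the mean frequency."""
    envelope = 2 * math.cos(math.pi * (f1 - f2) * t)
    carrier = math.sin(math.pi * (f1 + f2) * t)
    return envelope * carrier


f1, f2 = 440.0, 444.0
print(beat_rate(f1, f2))  # beats per second
for t in (0.0, 0.0123, 0.25):
    print(t, two_tone(f1, f2, t), envelope_form(f1, f2, t))
```

The two forms agree at every sample time, which is why a slow beat is heard as a periodic swelling, while faster rates in the range given above are heard as roughness instead.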
Helmholtz's metric quantified this roughness as a function of partial proximity, influencing subsequent auditory models.[54]

Building on Helmholtz, Reinier Plomp and Willem Levelt's 1965 study linked dissonance to critical bandwidths in the auditory system, where overlapping excitation patterns on the basilar membrane cause sensory interference if partials from different tones fall within the same critical band (approximately 100–200 Hz wide, varying with center frequency).[51] Their experiments with synthetic tones showed dissonance peaking when frequency separations equaled about one-quarter of the critical bandwidth, transitioning to consonance as separations exceeded it, providing empirical support for roughness as a primary dissonance cue independent of cultural context.[54]

In contrast, consonance often involves harmonic fusion, where tones with simple frequency ratios, such as the perfect fifth (3:2), are perceived as a unified entity rather than separate sounds, due to their partials aligning closely with a common harmonic series.[55] This fusion enhances perceptual coherence, as demonstrated in studies where listeners report a single blended pitch for such intervals, contrasting with the distinct separation in dissonant combinations.[56]

Modern psychoacoustic research expands these ideas, emphasizing harmonicity (the degree to which tones share a virtual pitch or fundamental) and temporal patterns like synchronized onsets in chord perception. Models incorporating these factors, such as those assessing subharmonic coincidence or periodicity detection in the auditory nerve, explain why major triads (root position) are rated highly consonant due to strong harmonicity cues, while inverted chords show reduced fusion from temporal asynchrony. These approaches integrate neural correlates, like enhanced responses in the inferior colliculus to harmonic sounds, revealing dissonance as a disruption of expected auditory streaming.
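The idea of tones sharing a common fundamental can be illustrated with an idealized calculation. The sketch below assumes exact integer frequencies in hertz and takes their greatest common divisor as the shared fundamental. This is a simplification for illustration only: real harmonicity models tolerate mistuning and do not work this way. The function names are hypothetical.

```python
from functools import reduce
from math import gcd


def common_fundamental(partials_hz):
    """Highest frequency (Hz) of which every integer-valued partial is a multiple."""
    return reduce(gcd, partials_hz)


def harmonic_numbers(partials_hz):
    """Position of each partial in the harmonic series of the common fundamental."""
    f0 = common_fundamental(partials_hz)
    return [p // f0 for p in partials_hz]


# Missing-fundamental example from the pitch section: partials at 800, 1000, 1200 Hz.
print(common_fundamental([800, 1000, 1200]), harmonic_numbers([800, 1000, 1200]))

# A perfect fifth (3:2) built on 200 Hz: both tones fit one harmonic series.
print(common_fundamental([200, 300]), harmonic_numbers([200, 300]))
```

Simple ratios such as 3:2 place both tones low in a single harmonic series, which is one way to picture why they fuse more readily than intervals whose common fundamental lies far below either tone.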
Perceptions of consonance and dissonance exhibit cultural variations, with Western listeners favoring intervals like the major third based on equal-tempered tuning, whereas non-Western traditions, such as those in Javanese gamelan, prioritize different ratios or timbres, leading to distinct harmony preferences uninfluenced by Western exposure.[60] Cross-cultural studies confirm that while low-level roughness is universal, higher-level harmony judgments are shaped by enculturation, as seen in Amazonian groups rating dissonant Western chords as neutral or pleasant without prior familiarity.[61]

Musical Organization and Acoustics

Scales and Tuning Systems

Musical scales and tuning systems in acoustics organize pitches based on frequency relationships, influencing the perceptual purity and versatility of musical intervals. These systems derive from the physical properties of sound waves, where intervals are defined by ratios of frequencies that align with harmonic overtones for consonance.[1]

Just intonation, one of the earliest systems, constructs scales using simple rational frequency ratios to achieve pure intervals without beating.[62] In just intonation, intervals are derived from small integer ratios, such as the octave at 2:1, perfect fifth at 3:2, and major third at 5:4, ensuring that simultaneous tones reinforce common partials in their spectra.[63] This approach, rooted in the harmonic series, produces intervals free of acoustic interference, as the frequencies are rationally related and do not generate dissonant beats.[64] However, just intonation limits modulation to keys sharing the same reference pitch, as ratios do not close evenly across all transpositions.[65]

Pythagorean tuning builds scales through a chain of pure perfect fifths, each with a 3:2 frequency ratio, forming the circle of fifths.[66] Starting from a fundamental, stacking twelve fifths approximates seven octaves but overshoots by the Pythagorean comma, a small discrepancy of about 23.46 cents, leading to wolf intervals (such as a dissonant fifth between certain notes like G♯ and E♭) that disrupt purity in remote keys.[64] This system prioritizes fifths for their consonance but yields wider major thirds (81:64) compared to just intonation's 5:4.[62]

Equal temperament addresses these limitations by dividing the octave logarithmically into twelve equal semitones, each with a frequency ratio of $2^{1/12}$, allowing seamless modulation across all keys.[67] Adopted widely since the 18th century for keyboard instruments, it compromises interval purity; for instance, the perfect fifth in equal temperament is slightly flat at 700 cents compared to just intonation's approximately 701.96 cents, introducing subtle beats.[68] The major third in equal temperament measures 400 cents, wider than just intonation's 386.31 cents, enhancing versatility at the cost of harmonic clarity.[63]

Acoustically, these systems trade off purity against practicality: just and Pythagorean tunings minimize beating (amplitude fluctuations from mismatched partials) for stable consonance in fixed keys, while equal temperament's irrational ratios cause low-level beats that blend into timbre but reduce the "sweetness" of pure intervals.[1] In performance, this manifests as warmer, more resonant chords in just intonation versus the brighter, more uniform sound of tempered scales.[65]

Non-Western traditions often employ microtonal scales beyond the 12-tone framework. Indian classical music uses srutis, dividing the octave into 22 microintervals for ragas, allowing nuanced pitch bends and rational ratios finer than semitones.[69] Similarly, Arabic maqams incorporate quarter tones and other microtonal steps, such as the neutral second (about 150 cents), derived from modal acoustics to evoke specific emotional qualities through subtle frequency relationships.[67]

Chord Structures and Harmonic Interactions

In musical acoustics, the spectrum of a chord arises from the linear superposition of the partials from its individual tones, resulting in a complex waveform where the amplitudes and frequencies of harmonics interact. For example, in a major triad such as C-E-G, the partials of each note combine, with lower partials dominating the overall timbre while higher ones may interfere if closely spaced. In dense chord voicings, such as close-position triads, partials from different notes can fall within close proximity (e.g., the third partial of one tone near the second partial of another), producing amplitude modulation or beating at rates determined by their frequency difference, typically 20–100 Hz for audible roughness. Masking occurs when a stronger partial from one tone overshadows a weaker one from another, altering the perceived spectral envelope without altering the underlying superposition.[1]

Root position chords exhibit acoustic stability because the lowest note (bass) serves as the fundamental, with the upper notes aligning as partials within its harmonic series; for instance, in a C major triad (C-E-G), E and G correspond, up to octave transposition, to the fifth and third partials of the bass C. This alignment minimizes frequency mismatches among low-order partials, reducing beating compared to inversions, where the bass note's harmonic series does not naturally encompass the other tones as integer multiples. In first inversion (e.g., E-G-C), neither G nor C lies in the bass E's harmonic series (whose third and fifth partials are B and G♯), and the lack of a shared low fundamental introduces greater partial misalignment and potential for interference in the low-frequency range.
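The near-coincidence of partials described above can be made concrete for an equal-tempered C major triad. The sketch below is illustrative only: it assumes A4 = 440 Hz, perfectly harmonic partials (no string stiffness), and an arbitrary 30 Hz window for what counts as a "close" pair. The function names are hypothetical.

```python
def equal_tempered(semitones_from_a4, a4_hz=440.0):
    """Frequency of a note a given number of equal-tempered semitones from A4."""
    return a4_hz * 2 ** (semitones_from_a4 / 12)


def close_partials(f_low, f_high, max_partial=6, max_gap_hz=30.0):
    """List (m, n, gap_hz) where partial m of f_low lies near partial n of f_high."""
    pairs = []
    for m in range(1, max_partial + 1):
        for n in range(1, max_partial + 1):
            gap = abs(m * f_low - n * f_high)
            if gap <= max_gap_hz:
                pairs.append((m, n, round(gap, 2)))
    return pairs


# C4, E4 and G4 lie 9, 5 and 2 semitones below A4.
c4, e4, g4 = (equal_tempered(s) for s in (-9, -5, -2))

print("C-E:", close_partials(c4, e4))
print("C-G:", close_partials(c4, g4))
print("E-G:", close_partials(e4, g4))
```

Under these assumptions the third partial of C and the second partial of G differ by less than 1 Hz, reflecting the nearly pure tempered fifth, while the fifth partial of C and the fourth partial of E differ by about 10 Hz, reflecting the wide tempered major third.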
Inversions thus shift the effective spectral centroid upward, emphasizing higher partials and altering radiation patterns in instruments like strings or winds.[70][1]

Harmonic tension in structures like the dominant seventh chord (e.g., G-B-D-F) stems from the tritone interval between the third (B) and seventh (F), whose partials, particularly the second and third harmonics, exhibit significant frequency detuning, leading to rapid beating rates around 30–40 Hz when tuned in equal temperament. This beating arises because the tritone's ratio (√2 ≈ 1.414) deviates from simple integer relations in the harmonic series, causing upper partials (e.g., B's third partial near F's second) to oscillate and create amplitude fluctuations in the combined waveform. Resolution to the tonic (C-E-G) aligns these partials more closely with the bass harmonic series, reducing such interactions and stabilizing the spectrum.[1]

Voice leading in chord progressions influences acoustic smoothness by enabling continuous tracking of partials across transitions, where small pitch movements (e.g., common tones or steps of a second) preserve frequency proximity among corresponding harmonics, minimizing transient beating or spectral disruptions. For instance, in a progression from C major to A minor (sharing C and E), the shared partials maintain amplitude consistency, while contrary or oblique motion avoids large jumps that could cause abrupt partial realignments and increased interference. Nonlinear effects in instruments, such as string stiffness, further modulate these transitions by introducing slight inharmonicity, but smooth leading keeps partial deviations below thresholds for significant waveform distortion.[70]

Extended chords, such as ninth (e.g., C-E-G-B-D) or eleventh (C-E-G-B-D-F), amplify inharmonicity through added upper partials that stretch beyond simple harmonic alignments, limiting their density before excessive beating or masking overwhelms the spectrum.
In piano-like instruments, string stiffness causes partial frequencies to deviate positively, given by $f_n = n f_1 \sqrt{1 + B n^2}$, where $f_1$ is the fundamental of the ideal flexible string and the inharmonicity coefficient is $B = \frac{\pi^3 E a^4}{4 T L^2}$ ($E$: Young's modulus, $a$: radius, $L$: length, $T$: tension), making ninth partials up to 20–30 cents sharp and increasing close-frequency interactions in voicings spanning over an octave. This inharmonicity sets practical limits, as beyond elevenths, the cumulative detuning (e.g., D's partials clashing with B's) produces irregular beating patterns, reducing timbral clarity without compensatory tuning adjustments.[70]

Applied Musical Acoustics

Pitch Ranges and Instrument Capabilities

Musical instruments exhibit a wide variety of pitch ranges, determined by their physical construction and the acoustic principles governing sound production, which together allow musicians to cover most of the audible spectrum. The standard pitch range for the concert grand piano, for instance, extends from A0 at 27.5 Hz to C8 at 4186 Hz, encompassing over seven octaves and providing a foundational reference for keyboard-based music across genres. Similarly, the violin, a staple of string ensembles, typically ranges from G3 at 196 Hz to A7 at 3520 Hz, enabling expressive melodic lines and high-register solos in orchestral and chamber settings. These ranges are not arbitrary but reflect optimized designs for resonance and playability, with wind instruments like the flute covering about three octaves from C4 (262 Hz) to C7 (2093 Hz) through embouchure control and key mechanisms.

Limiting factors for instrument pitch ranges include the physiological boundaries of human hearing, which spans approximately 20 Hz to 20 kHz, and inherent design constraints such as string length or pipe dimensions that restrict low-frequency production. For example, longer strings or larger resonators are required for bass notes, as seen in the double bass, which descends to E1 (41.2 Hz) but faces challenges below 30 Hz due to insufficient tension and enclosure size. Human performers also impose limits; vocal ranges for trained sopranos reach up to C6 (1047 Hz), while bass voices bottom out around E2 (82.4 Hz), influencing how instruments are voiced to complement ensembles. These factors ensure that most instruments operate within the 50 Hz to 4 kHz core of musical relevance, where auditory sensitivity peaks.
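The note names and frequencies quoted above are related by the equal-tempered formula $f = f_{A4} \cdot 2^{(m - 69)/12}$, where $m$ is the MIDI note number. The following sketch assumes A4 = 440 Hz and twelve-tone equal temperament. The `note_to_hz` helper is a hypothetical name, and it accepts ASCII accidentals (`#`, `b`) rather than the ♯ and ♭ glyphs.

```python
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def note_to_hz(name, a4_hz=440.0):
    """Convert scientific pitch notation such as 'A0', 'C8' or 'Bb3' to hertz."""
    letter, rest = name[0], name[1:]
    accidental = 0
    while rest and rest[0] in "#b":
        accidental += 1 if rest[0] == "#" else -1
        rest = rest[1:]
    octave = int(rest)
    # MIDI convention: C4 (middle C) is note 60 and A4 is note 69.
    midi = 12 * (octave + 1) + NOTE_OFFSETS[letter] + accidental
    return a4_hz * 2 ** ((midi - 69) / 12)


for note in ("A0", "C8", "G3", "A7", "C4", "E1"):
    print(f"{note}: {note_to_hz(note):.2f} Hz")
```

Changing `a4_hz` shifts every result proportionally, which is how a different concert pitch standard would be modeled.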
Transposing instruments introduce a distinction between notated and acoustic pitches, requiring performers to adjust mentally for ensemble cohesion; the clarinet in B♭, for instance, sounds a major second lower than written, so a notated C4 (262 Hz) produces an actual B♭3 (233 Hz). This transposition aids in fingering consistency across keys but demands precise intonation to align with non-transposing instruments like the flute. Brass examples include the trumpet in B♭, sounding a whole step below notation, which historically facilitated band scoring but requires conductors to manage collective pitch centering.

Extended techniques further expand these ranges beyond standard capabilities, such as natural harmonics on strings, which allow the violin to access pitches up to E8 (5274 Hz) by lightly touching strings at nodal points. Woodwinds employ multiphonics (simultaneous tones from overblowing) to produce pitches outside normal scales, like the oboe generating harmonics above its lowest fundamental, B♭3 (233 Hz). These methods, popularized in 20th-century contemporary music, enhance timbral variety while pushing physical limits without altering instrument design.

Historically, orchestral expansions have lowered pitch floors for greater depth; the contrabassoon, extended in the 19th century by makers like Heckel, reached down to 29.14 Hz (B♭0) for Wagnerian scores, surpassing earlier models limited to around 61.74 Hz (B1). Such innovations, driven by composers like Berlioz, integrated low fundamentals into symphonic textures, influencing modern ensembles to adopt extended-range variants for bass reinforcement.

| Instrument | Standard Range (Fundamental Frequencies) | Notes |
|---|---|---|
| Piano | A0 (27.5 Hz) to C8 (4186 Hz) | Full keyboard span, 88 keys. |
| Violin | G3 (196 Hz) to A7 (3520 Hz) | Open strings to highest position notes. |
| Flute | C4 (262 Hz) to C7 (2093 Hz) | Boehm system enables three octaves. |
| Double Bass | E1 (41.2 Hz) to G4 (392 Hz) | Tuned in fourths, orchestral tuning. |
| Contrabassoon | B♭0 (29.14 Hz) to G3 (196 Hz) | Extended low register for modern use. |
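The relation between written and sounding pitch for the transposing instruments discussed in this section reduces to a fixed shift in equal-tempered semitones. A minimal sketch, assuming A4 = 440 Hz and using a hypothetical `sounding_hz` helper, reproduces the B♭ clarinet example from the text:

```python
def sounding_hz(written_hz, transposition_semitones):
    """Sounding frequency for a written pitch shifted by a number of semitones."""
    return written_hz * 2 ** (transposition_semitones / 12)


written_c4 = 440.0 * 2 ** (-9 / 12)         # C4 lies 9 semitones below A4
clarinet_bb = sounding_hz(written_c4, -2)   # B-flat clarinet: major second lower

print(f"written C4 = {written_c4:.1f} Hz, sounds at {clarinet_bb:.1f} Hz")
```

The same function covers other transpositions by changing the semitone offset, for example a negative offset of seven semitones for an instrument sounding a perfect fifth below the written pitch.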

Room Acoustics for Performance

Room acoustics for musical performance encompasses the design and properties of enclosed spaces that shape how sound from instruments and voices is projected, reflected, and perceived by performers and audiences. In concert halls and theaters, the acoustic environment influences the overall listening experience by balancing direct sound, reflections, and reverberation to achieve desired qualities such as intimacy, clarity, and envelopment. Optimal room acoustics ensure that musical ensembles can communicate effectively while delivering a rich, immersive sound to listeners, with parameters like reverberation time serving as key metrics for evaluation.[71]

Reverberation time, denoted as RT60, measures the duration required for the sound pressure level to decay by 60 decibels after the source stops, typically ranging from 1.5 to 2.2 seconds in concert halls for symphonic music to provide warmth without muddiness. The seminal Sabine formula predicts this time as $RT_{60} = 0.161\,V / \sum_i \alpha_i S_i$, where $V$ is the room volume in cubic meters, $\alpha_i$ are the absorption coefficients of surface materials, and $S_i$ are the corresponding surface areas in square meters; this empirical equation assumes a diffuse sound field and is foundational for architectural planning.[72] Limitations arise in highly absorptive or non-diffuse spaces, where variants like the Eyring formula may offer better accuracy, but Sabine remains widely used for initial designs in performance venues.[73]

Early reflections, arriving within approximately 50 milliseconds of the direct sound, enhance clarity by reinforcing the initial wavefront and localizing the source, while late reverberation (beyond 80 milliseconds) contributes to a sense of warmth and spaciousness through overlapping echoes.
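The Sabine estimate described above is straightforward to evaluate once a room's volume and surface inventory are known. The sketch below uses made-up numbers for a hypothetical hall (15,000 m³, with an absorptive audience area and more reflective walls and ceiling). The absorption coefficients are illustrative assumptions, not measured data, and `sabine_rt60` is a hypothetical helper name.

```python
def sabine_rt60(volume_m3, surfaces):
    """Sabine reverberation time in seconds.

    surfaces: iterable of (area_m2, absorption_coefficient) pairs.
    """
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    if total_absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / total_absorption


# Hypothetical hall: all values below are assumptions for illustration.
hall_surfaces = [
    (1000.0, 0.8),  # occupied audience area
    (4000.0, 0.1),  # walls and ceiling
]
rt60 = sabine_rt60(15000.0, hall_surfaces)
print(f"RT60 = {rt60:.2f} s")
```

With these assumed values the total absorption is 1200 m² and the estimate lands at about 2.0 s, inside the range quoted for symphonic halls. Since absorption coefficients vary with frequency, a fuller treatment would repeat the calculation per octave band.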
In concert halls, a strong early reflection component improves intelligibility for complex passages in orchestral works, whereas excessive late reverberation can blur transients, reducing definition; for instance, halls like Boston Symphony Hall exemplify this balance, with early lateral reflections promoting envelopment without overwhelming the direct signal.[71] Designers target a clarity index (C80), the ratio of early to late energy, above -3 dB for music to ensure articulate reproduction.[74]

Diffusion and scattering prevent hotspots and echoes by dispersing sound waves evenly, with Schroeder diffusers (based on quadratic residue sequences) revolutionizing room design since their invention in the 1970s by providing broadband scattering from 300 Hz upward. These panels, featuring wells of varying depths calculated from number theory (e.g., the quadratic residues $n^2 \bmod N$ for a prime such as $N = 7$), distribute reflections uniformly, enhancing spatial uniformity in performance spaces like recording studios and halls without significant absorption. In musical venues, they promote a lively yet balanced sound field, as demonstrated in applications where they mitigate flutter echoes and improve ensemble blending.[75]

Stage acoustics focus on providing performers with immediate feedback through reflective shells that project sound toward the audience while fostering intimacy among musicians, particularly in larger halls where direct communication might otherwise be lost. Orchestra shells, often modular with curved canopies and side panels, direct early reflections to players, enabling conductors and sections to hear each other clearly; for example, designs in opera houses converted for concerts use adjustable towers up to 30 feet high to optimize support for symphonic repertoires.
In smaller venues, compact shells enhance mutual audibility, reducing reliance on amplified monitoring and preserving natural ensemble dynamics.[76]

Modern concert hall designs integrate computational modeling and advanced materials to achieve tailored acoustics, as seen in the Elbphilharmonie in Hamburg, opened in 2017, where 10,000 uniquely shaped gypsum-fiber panels line the walls to diffuse and reflect sound, creating a vineyard-style hall with exceptional clarity and reverberation balance for diverse performances. While fixed in structure, such designs address variability through precise control of reflection patterns, optimized with digital simulations; for non-classical events, electronic systems further adapt the space.[77][78]

References

- https://www.arxiv.org/pdf/2510.14159

- https://www.nature.com/articles/s41467-024-45812-z

- https://www.nature.com/articles/s41598-020-65615-8