Recent human evolution

This article has been flagged as possibly containing fringe theories without giving appropriate weight to mainstream views. (March 2022)

Recent human evolution refers to evolutionary adaptation, sexual and natural selection, and genetic drift within Homo sapiens populations, since their separation and dispersal in the Middle Paleolithic about 50,000 years ago. Contrary to popular belief, not only are humans still evolving, their evolution since the dawn of agriculture is faster than ever before.[1][2][3][4] It has been proposed that human culture acts as a selective force in human evolution and has accelerated it;[5] however, this is disputed.[6][7] With a sufficiently large data set and modern research methods, scientists can study the changes in the frequency of an allele occurring in a tiny subset of the population over a single lifetime, the shortest meaningful time scale in evolution.[8] Comparing a given gene with that of other species enables geneticists to determine whether it is rapidly evolving in humans alone. For example, while human DNA is on average 98% identical to chimpanzee DNA, the so-called Human Accelerated Region 1 (HAR1), involved in the development of the brain, is only 85% similar.[2]

Following the peopling of Africa some 130,000 years ago, and the recent Out-of-Africa expansion some 70,000 to 50,000 years ago, some sub-populations of Homo sapiens have been geographically isolated for tens of thousands of years prior to the early modern Age of Discovery. Combined with archaic admixture, this has resulted in relatively significant genetic variation. Selection pressures were especially severe for populations affected by the Last Glacial Maximum (LGM) in Eurasia, and for sedentary farming populations since the Neolithic, or New Stone Age.[9]

Single nucleotide polymorphisms (SNPs, pronounced 'snips') are mutations of a single genetic code "letter" in an allele that have spread across a population. When they occur in functional parts of the genome, they can potentially modify virtually any conceivable trait, from height and eye color to susceptibility to diabetes and schizophrenia. While approximately 2% of the human genome codes for proteins and a slightly larger fraction is involved in gene regulation, the remainder has no known function. If the environment remains stable, a beneficial mutation will spread throughout the local population over many generations until the trait it confers becomes predominant. An extremely beneficial allele could become ubiquitous in a population in as little as a few centuries, whereas those that are less advantageous typically take millennia.[10]
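The link between how advantageous an allele is and how long it takes to spread can be illustrated with a minimal sketch. It assumes a deterministic haploid selection model that is not taken from the cited source: carriers leave (1 + s) times as many offspring as non-carriers, and drift, dominance, and population structure are ignored. The selection coefficients, the start and end frequencies, and the 25-year generation time are all hypothetical values chosen for illustration.

```python
import math


def generations_to_spread(p0: float, p1: float, s: float) -> float:
    """Generations for an allele to rise from frequency p0 to p1.

    Assumes a deterministic haploid (genic) selection model in which
    carriers leave (1 + s) times as many offspring as non-carriers, so
    the odds p / (1 - p) are multiplied by (1 + s) every generation.
    """
    if not (0.0 < p0 < p1 < 1.0) or s <= 0.0:
        raise ValueError("need 0 < p0 < p1 < 1 and s > 0")
    odds_ratio = (p1 / (1.0 - p1)) / (p0 / (1.0 - p0))
    return math.log(odds_ratio) / math.log(1.0 + s)


def simulate_generations(p0: float, p1: float, s: float) -> int:
    """Same model, stepped one generation at a time as a cross-check."""
    p, generations = p0, 0
    while p < p1:
        p = p * (1.0 + s) / (1.0 + p * s)
        generations += 1
    return generations


YEARS_PER_GENERATION = 25  # assumed value, for illustration only

for s in (0.01, 0.1, 1.0):  # hypothetical selection coefficients
    g = generations_to_spread(0.01, 0.99, s)
    years = g * YEARS_PER_GENERATION
    print(f"s={s}: about {g:.0f} generations, roughly {years:.0f} years")
```

Under these assumptions, a strongly favored allele goes from rare to nearly universal in tens of generations, while a weakly favored one needs hundreds. Real populations add drift and changing environments, so the figures are order-of-magnitude guides at best.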

Human traits that emerged recently include the ability to free-dive for long periods of time,[11] adaptations for living in high altitudes where oxygen concentrations are low,[2] resistance to contagious diseases (such as malaria),[12] light skin,[13] blue eyes,[14] lactase persistence (or the ability to digest milk after weaning),[15][16] lower blood pressure and cholesterol levels,[17][18] retention of the median artery,[19] reduced prevalence of Alzheimer's disease,[8] lower susceptibility to diabetes,[20] genetic longevity,[20] shrinking brain sizes,[21][22] and changes in the timing of menarche and menopause.[23]

Archaic admixture

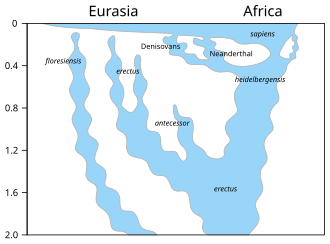

Genetic evidence suggests that a species dubbed Homo heidelbergensis is the last common ancestor of Neanderthals, Denisovans, and Homo sapiens. This common ancestor lived between 600,000 and 750,000 years ago, likely in either Europe or Africa. Members of this species migrated throughout Europe, the Middle East, and Africa and became the Neanderthals in Western Asia and Europe while another group moved further east and evolved into the Denisovans, named after the Denisova Cave in Russia where the first known fossils of them were discovered. In Africa, members of this group eventually became anatomically modern humans. Migrations and geographical isolation notwithstanding, the three descendant groups of Homo heidelbergensis later met and interbred.[24]

Archaeological research suggests that as prehistoric humans swept across Europe 45,000 years ago, Neanderthals went extinct. Even so, there is evidence of interbreeding between the two groups as humans expanded their presence in the continent. While prehistoric humans carried 3–6% Neanderthal DNA, modern humans have only about 2%. This seems to suggest selection against Neanderthal-derived traits.[26] For example, the neighborhood of the gene FOXP2, affecting speech and language, shows no signs of Neanderthal inheritance whatsoever.[27]

Introgression of genetic variants acquired by Neanderthal admixture has different distributions in Europeans and East Asians, pointing to differences in selective pressures.[28] Though East Asians inherit more Neanderthal DNA than Europeans,[27] East Asians, South Asians, Australo-Melanesians, Native Americans, and Europeans all share Neanderthal DNA, so hybridization likely occurred between Neanderthals and their common ancestors coming out of Africa.[29] Their differences also suggest separate hybridization events for the ancestors of East Asians and other Eurasians.[27]

Following the genome sequencing of three Vindija Neanderthals, a draft sequence of the Neanderthal genome was published and revealed that Neanderthals shared more alleles with Eurasian populations—such as French, Han Chinese, and Papua New Guinean—than with sub-Saharan African populations, such as Yoruba and San. According to the authors of the study, the observed excess of genetic similarity is best explained by recent gene flow from Neanderthals to modern humans after the migration out of Africa.[30] But gene flow did not go one way. The fact that some of the ancestors of modern humans in Europe migrated back into Africa means that modern Africans also carry some genetic materials from Neanderthals. In particular, Africans share 7.2% Neanderthal DNA with Europeans but only 2% with East Asians.[29]

Some climatic adaptations, such as high-altitude adaptation in humans, are thought to have been acquired by archaic admixture. The Sherpas, an ethnic group from Nepal, are believed to have inherited from the Denisovans an allele of the gene EPAS1 that allows them to breathe easily at high altitudes.[24] A 2014 study reported that Neanderthal-derived variants found in East Asian populations showed clustering in functional groups related to immune and haematopoietic pathways, while European populations showed clustering in functional groups related to the lipid catabolic process.[note 1] A 2017 study found a correlation between Neanderthal admixture in modern European populations and traits such as skin tone, hair color, height, sleeping patterns, mood, and smoking addiction.[31] A 2020 study of Africans unveiled Neanderthal haplotypes, or alleles that tend to be inherited together, linked to immunity and ultraviolet sensitivity.[29]

A variant of the gene microcephalin (MCPH1), which is involved in the development of the brain, likely originated from a Homo lineage separate from that of anatomically modern humans but was introduced to them around 37,000 years ago. It has become much more common ever since, reaching around 70% of the human population at present. Neanderthals were suggested as one possible origin of this variant.[32] But later studies did not find it in the Neanderthal genome,[33][30] nor has it been found to be associated with cognitive ability in modern people.[34][35][36]

The promotion of beneficial traits acquired from admixture is known as adaptive introgression.[29]

A study concluded that only 1.5–7% of "regions" of the modern human genome are specific to modern humans. These regions have not been altered by archaic hominin DNA through admixture (only a small portion of archaic DNA is inherited per individual, but a large portion is inherited across populations overall), nor are they shared with Neanderthals or Denisovans in any of the genomes in the datasets used. The authors also found two bursts of changes specific to modern human genomes, which involve genes related to brain development and function.[37][38]

Upper Paleolithic, or the Late Stone Age (50,000 to 12,000 years ago)

Victorian naturalist Charles Darwin was the first to propose the out-of-Africa hypothesis for the peopling of the world,[39] but the story of prehistoric human migration is now understood to be much more complex thanks to twenty-first-century advances in genomic sequencing.[39][40][41] There were multiple waves of dispersal of anatomically modern humans out of Africa,[42][43][44] with the most recent one dating back to 70,000 to 50,000 years ago.[45][46][47][48] Earlier waves of human migrants might have gone extinct or returned to Africa.[44][49] Moreover, a combination of gene flow from Eurasia back into Africa and higher rates of genetic drift among East Asians compared to Europeans led these human populations to diverge from one another at different times.[39]

Around 65,000 to 50,000 years ago, a variety of new technologies, such as projectile weapons, fish hooks, porcelain, and sewing needles, made their appearance.[50] Bird-bone flutes were invented 30,000 to 35,000 years ago,[51] indicating the arrival of music.[50] Artistic creativity also flowered, as can be seen with Venus figurines and cave paintings.[50] Cave paintings of not just actual animals but also imaginary creatures that could reliably be attributed to Homo sapiens have been found in different parts of the world. Radiometric dating suggests that the oldest of the ones that have been found, as of 2019, are 44,000 years old.[52] For researchers, these artworks and inventions represent a milestone in the evolution of human intelligence and the roots of story-telling, paving the way for spirituality and religion.[50][52] Experts believe this sudden "great leap forward", as anthropologist Jared Diamond calls it, was due to climate change. Around 60,000 years ago, during the middle of an ice age, it was extremely cold in the far north, but ice sheets sucked up much of the moisture in Africa, making the continent even drier and droughts much more common. The result was a genetic bottleneck, pushing Homo sapiens to the brink of extinction, and a mass exodus from Africa. Nevertheless, it remains uncertain (as of 2003) whether or not the leap was due to some favorable genetic mutations, for example in the FOXP2 gene, linked to language and speech.[53] A combination of archaeological and genetic evidence suggests that humans migrated along Southern Asia and down to Australia 50,000 years ago, to the Middle East and then to Europe 35,000 years ago, and finally to the Americas via the Siberian Arctic 15,000 years ago.[53]

DNA analyses conducted since 2007 revealed an acceleration of evolution with regard to defenses against disease, skin color, nose shape, hair color and type, and body shape since about 40,000 years ago, continuing a trend of active selection since humans emigrated from Africa 100,000 years ago. Humans living in colder climates tend to be more heavily built than those in warmer climates because a smaller surface area relative to volume makes it easier to retain heat.[note 2] People from warmer climates tend to have thicker lips, which have large surface areas, enabling them to keep cool. With regard to nose shape, humans residing in hot and dry places tend to have narrow and protruding noses, which reduce the loss of moisture. Humans living in hot and humid places tend to have flat and broad noses that moisturize inhaled air and retain moisture from exhaled air.[dubious – discuss][citation needed] Humans dwelling in cold and dry places tend to have small, narrow, and long noses, which warm and moisturize inhaled air. As for hair types, humans from regions with colder climates tend to have straight hair, which keeps the head and neck warm. Straight hair also allows cool moisture to quickly fall off the head. On the other hand, tight and curly hair increases the exposed areas of the scalp, easing the evaporation of sweat and allowing heat to be radiated away while keeping itself off the neck and shoulders. Epicanthic eye folds are believed to be an adaptation protecting the eye from overexposure to ultraviolet radiation, and are presumed to be a particular trait of archaic humans from eastern and southeast Asia. A cold-adaptive explanation for the epicanthic fold is today seen as outdated by some, as epicanthic folds appear in some African populations. Dr. Frank Poirier, a physical anthropologist at Ohio State University, concluded that the epicanthic fold may in fact be an adaptation for tropical regions, and was already part of the natural diversity found among early modern humans.[54][55]
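The surface-area-to-volume argument for body build can be made concrete with a rough geometric sketch. It treats a body as a sphere, which is a deliberate oversimplification and not a model used by the cited sources, and the radii are hypothetical. For a sphere the ratio reduces to 3 / r, so a larger body has less surface per unit of volume through which to lose heat.

```python
import math


def sphere_surface_to_volume(radius_m: float) -> float:
    """Surface-area-to-volume ratio (per metre) of a sphere; equals 3 / r."""
    if radius_m <= 0:
        raise ValueError("radius must be positive")
    surface = 4.0 * math.pi * radius_m ** 2
    volume = (4.0 / 3.0) * math.pi * radius_m ** 3
    return surface / volume


for r in (0.2, 0.3, 0.4):  # hypothetical radii in metres
    print(f"r={r} m -> {sphere_surface_to_volume(r):.1f} per metre")
```

The ratio falls as the radius grows, which is the geometric core of the claim that a heavier build helps retain heat.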

Physiological or phenotypical changes have been traced to Upper Paleolithic mutations, such as the East Asian variant of the EDAR gene, dated to about 35,000 years ago in Southern or Central China. The traits affected by the mutation are sweat glands, teeth, hair thickness, and breast tissue.[57] While Africans and Europeans carry the ancestral version of the gene, most East Asians have the mutated version. By testing the gene on mice, Yana G. Kamberov, Pardis C. Sabeti, and their colleagues at the Broad Institute found that the mutated version brings thicker hair shafts, more sweat glands, and less breast tissue. East Asian women tend to have comparatively small breasts, and East Asians in general tend to have thick hair. The research team calculated that this variant originated in Southern China, which was warm and humid, meaning that having more sweat glands would have been advantageous to the hunter-gatherers who lived there.[57] A subsequent study from 2021, based on ancient DNA samples, suggested that the derived variant became dominant among "Ancient Northern East Asians" shortly after the Last Glacial Maximum in Northeast Asia, around 19,000 years ago. Ancient remains from Northern East Asia, such as the Tianyuan Man (40,000 years old) and the AR33K specimen (33,000 years old), lacked the derived EDAR allele, while ancient East Asian remains from after the LGM carry it.[58][59] The frequency of the derived allele, 370A, is most highly elevated in North Asian and East Asian populations.[60]

The most recent Ice Age peaked in intensity between 19,000 and 25,000 years ago and ended about 12,000 years ago. As the glaciers that once covered Scandinavia all the way down to Northern France retreated, humans began returning to Northern Europe from the Southwest, modern-day Spain. But about 14,000 years ago, humans from Southeastern Europe, especially Greece and Turkey, began migrating to the rest of the continent, displacing the first group of humans. Analysis of genomic data revealed that all Europeans since 37,000 years ago have descended from a single founding population that survived the Ice Age, with specimens found in various parts of the continent, such as Belgium. Although this human population was displaced 33,000 years ago, a genetically related group began spreading across Europe 19,000 years ago.[26] Recent divergence of Eurasian lineages was sped up significantly during the Last Glacial Maximum (LGM), the Mesolithic, and the Neolithic, due to increased selection pressures and founder effects associated with migration.[61] Alleles predictive of light skin have been found in Neanderthals,[62] but the alleles for light skin in Europeans and East Asians, KITLG and ASIP, are (as of 2012) thought to have arisen from recent mutations since the LGM rather than from archaic admixture.[61] Hair, eye, and skin pigmentation phenotypes associated with humans of European descent emerged during the LGM, from about 19,000 years ago.[13] The associated TYRP1, SLC24A5, and SLC45A2 alleles emerged around 19,000 years ago, still during the LGM, most likely in the Caucasus.[61][63] Within the last 20,000 years or so, lighter skin has evolved in East Asia, Europe, North America, and Southern Africa. In general, people living in higher latitudes tend to have lighter skin.[3] The HERC2 variation for blue eyes first appeared around 14,000 years ago in Italy and the Caucasus.[64]

Inuit adaptation to a high-fat diet and cold climate has been traced to a mutation dated to the Last Glacial Maximum (20,000 years ago).[65] Humans living in Northern Asia and the Arctic have evolved the ability to develop thick layers of fat on their faces to keep warm. Moreover, the Inuit tend to have flat and broad faces, an adaptation that reduces the likelihood of frostbite.[66]

Australian Aboriginals living in the Central Desert, where the temperature can drop below freezing at night, have evolved the ability to reduce their core temperatures without shivering.[66]

Holocene (12,000 years ago to present)

Neolithic or New Stone Age

Impacts of agriculture

The advent of agriculture has played a key role in the evolutionary history of humanity. Early farming communities benefited from new and comparatively stable sources of food, but were also exposed to new and initially devastating diseases such as tuberculosis, measles, and smallpox. Eventually, genetic resistance to such diseases evolved, and humans living today are descendants of those who survived the agricultural revolution and reproduced.[67][5] The pioneers of agriculture faced tooth cavities, protein deficiency, and general malnutrition, resulting in shorter statures.[5] Diseases are one of the strongest forces of evolution acting on Homo sapiens. As this species migrated throughout Africa and began colonizing new lands outside the continent around 100,000 years ago, its members came into contact with and helped spread a variety of pathogens with deadly consequences. In addition, the dawn of agriculture led to the rise of major disease outbreaks. Malaria is the oldest known human contagion, traced to West Africa around 100,000 years ago, before humans began migrating out of the continent. Malarial infections surged around 10,000 years ago, raising the selective pressures upon the affected populations and leading to the evolution of resistance.[12]

Examples of adaptations related to agriculture and animal domestication include East Asian variants of ADH1B associated with rice domestication,[68] and lactase persistence.[69][70]

Migrations

As the ancestors of Europeans and East Asians migrated out of Africa, they were maladapted to their new environments and came under stronger selective pressures.[5]

Lactose tolerance

Around 11,000 years ago, as agriculture was replacing hunting and gathering in the Middle East, people invented ways to reduce the concentration of lactose in milk by fermenting it to make yogurt and cheese. At the time, people lost the ability to digest lactose as they matured and so could not consume milk as adults. Thousands of years later, a genetic mutation enabled people living in Europe to continue producing lactase, an enzyme that digests lactose, throughout their lives, allowing them to drink milk after weaning and survive bad harvests.[15]

Today, lactase persistence can be found in 90% or more of the populations in Northwestern and Northern Central Europe, and in pockets of Western and Southeastern Africa, Saudi Arabia, and South Asia. It is not as common in Southern Europe (40%) because Neolithic farmers had already settled there before the mutation existed. It is rarer in inland Southeast Asia and Southern Africa. While all Europeans with lactase persistence share a common ancestor for this ability, pockets of lactase persistence outside Europe are likely due to separate mutations. The European mutation, called the LP allele, is traced to modern-day Hungary, 7,500 years ago. In the twenty-first century, about 35% of the human population is capable of digesting lactose after the age of seven or eight.[15] Before this mutation, dairy farming was already widespread in Europe.[71]

A Finnish research team reported, however, that the European mutation that allows for lactase persistence is not found among milk-drinking and dairy-farming Africans. Sarah Tishkoff and her students confirmed this by analyzing DNA samples from Tanzania, Kenya, and Sudan, where lactase persistence evolved independently. The uniformity of the mutations surrounding the lactase gene suggests that lactase persistence spread rapidly throughout this part of Africa. According to Tishkoff's data, this mutation first appeared between 3,000 and 7,000 years ago. It provides some protection against drought and enables people to drink milk without suffering diarrhea, which causes dehydration.[16]

Lactase persistence is a rare ability among mammals.[71] Because it involves a single gene, it is a simple example of convergent evolution in humans. Other examples of convergent evolution, such as the light skin of Europeans and East Asians or the various means of resistance to malaria, are much more complicated.[16]

Skin color

The light skin pigmentation characteristic of modern Europeans is estimated to have spread across Europe in a "selective sweep" during the Mesolithic (5,000 years ago).[13] Signals of selection in favor of light skin among Europeans were among the most pronounced, comparable to those for resistance to malaria or lactose tolerance.[72] However, Dan Ju and Ian Mathieson caution in a study addressing 40,000 years of modern human history, "we can assess the extent to which they carried the same light pigmentation alleles that are present today," but explain that c. 40,000 BP Early Upper Paleolithic hunter-gatherers "may have carried different alleles that we cannot now detect", and as a result "we cannot confidently make statements about the skin pigmentation of ancient populations."[73]

Eumelanin, which is responsible for pigmentation in human skin, protects against ultraviolet radiation while also limiting vitamin D synthesis.[74] Variations in skin color, due to the levels of melanin, are caused by at least 25 different genes, and variations evolved independently of each other to meet different environmental needs.[74] Over the millennia, human skin colors have evolved to be well-suited to their local environments. Having too much melanin can lead to vitamin D deficiency and bone deformities while having too little makes the person more vulnerable to skin cancer.[74] Indeed, Europeans have evolved lighter skin in order to combat vitamin D deficiency in regions with low levels of sunlight. Today, they and their descendants in places with intense sunlight such as Australia are highly vulnerable to sunburn and skin cancer. On the other hand, Inuit have a diet rich in vitamin D and consequently have not needed lighter skin.[75]

Eye color

Blue eyes are an adaptation for living in regions where the amounts of light are limited because they allow more light to come in than brown eyes.[66] They also seem to have undergone both sexual and frequency-dependent selection.[76][77][72] A research program by geneticist Hans Eiberg and his team at the University of Copenhagen from the 1990s to 2000s investigating the origins of blue eyes revealed that a mutation in the gene OCA2 is responsible for this trait. According to them, all humans initially had brown eyes and the OCA2 mutation took place between 6,000 and 10,000 years ago. It reduces the production of melanin, which is responsible for the pigmentation of human hair, eyes, and skin. The mutation does not completely switch off melanin production, however, as that would leave the individual with a condition known as albinism. Variations in eye color from brown to green can be explained by variation in the amount of melanin produced in the iris. While brown-eyed individuals share a large area in their DNA controlling melanin production, blue-eyed individuals have only a small region. By examining mitochondrial DNA of people from multiple countries, Eiberg and his team concluded that blue-eyed individuals all share a common ancestor.[14]

In 2018, an international team of researchers from Israel and the United States announced that their genetic analysis of 6,500-year-old human remains excavated in Israel's Upper Galilee region revealed a number of traits not found in the humans who had previously inhabited the area, including blue eyes. They concluded that the region experienced a significant demographic shift 6,000 years ago due to migration from Anatolia and the Zagros mountains (in modern-day Turkey and Iran) and that this change contributed to the development of the Chalcolithic culture in the region.[78]

Bronze Age to Medieval Era

Resistance to malaria is a well-known example of recent human evolution. This disease attacks humans early in life, so humans who are resistant enjoy a higher chance of surviving and reproducing. While humans have evolved multiple defenses against malaria, sickle cell anemia, a condition in which red blood cells are deformed into sickle shapes and thereby restrict blood flow, is perhaps the best known. Sickle cell anemia makes it more difficult for the malarial parasite to infect red blood cells. This mechanism of defense against malaria emerged independently in Africa and in Pakistan and India. Within 4,000 years it has spread to 10–15% of the populations of these places.[79] Another malaria-resistance mutation that is strongly favored by natural selection and has spread rapidly in Africa causes an inability to synthesize the enzyme glucose-6-phosphate dehydrogenase, or G6PD.[16]

A combination of poor sanitation and high population densities proved ideal for the spread of contagious diseases, which were deadly for the residents of ancient cities. Evolutionary thinking would suggest that people living in places with long-standing urbanization dating back millennia would have evolved resistance to certain diseases, such as tuberculosis and leprosy. Using DNA analysis and archaeological findings, scientists from University College London and Royal Holloway studied samples from 17 sites in Europe, Asia, and Africa. They learned that, indeed, long-term exposure to pathogens has led to resistance spreading across urban populations. Urbanization is therefore a selective force that has influenced human evolution.[80] The allele in question is named SLC11A1 1729+55del4. Scientists found that among the residents of places that have been settled for thousands of years, such as Susa in Iran, this allele is ubiquitous, whereas in places with just a few centuries of urbanization, such as Yakutsk in Siberia, only 70–80% of the population have it.[81]

Evolution to resist infection by pathogens also increased the risk of inflammatory disease in post-Neolithic Europeans over the last 10,000 years. A study of ancient DNA estimated the nature, strength, and time of onset of selection due to pathogens and also found that "the bulk of genetic adaptation occurred after the start of the Bronze Age, <4,500 years ago".[82][83]

Adaptations have also been found in modern populations living in extreme climatic conditions such as the Arctic, as have immunological adaptations such as resistance to prion-caused brain disease in populations practicing mortuary cannibalism, or the consumption of human corpses.[84][85] Inuit have the ability to thrive on lipid-rich diets consisting of Arctic mammals. Human populations living at high altitudes, such as on the Tibetan Plateau, in Ethiopia, and in the Andes, benefit from a mutation that enhances the concentration of oxygen in their blood.[2] This is achieved by having more capillaries, increasing their capacity for carrying oxygen.[3] This mutation is believed to be around 3,000 years old.[2]

A recent adaptation has been proposed for the Austronesian Sama-Bajau, also known as the Sea Gypsies or Sea Nomads, developed under selection pressures associated with subsisting on free-diving over the past thousand years or so.[11][86] Because they are maritime hunter-gatherers, the ability to dive for long periods of time plays a crucial role in their survival. Due to the mammalian dive reflex, the spleen contracts when the mammal dives and releases oxygen-carrying red blood cells. Over time, individuals with larger spleens were more likely to survive the lengthy free-dives, and thus reproduce. By contrast, communities centered around farming show no signs of evolving to have larger spleens. Because the Sama-Bajau show no interest in abandoning this lifestyle, there is no reason to believe further adaptation will not occur.[18]

Advances in the biology of genomes have enabled geneticists to investigate the course of human evolution within centuries. Jonathan Pritchard and a postdoctoral fellow, Yair Field, counted the singletons, or changes of single DNA bases, which are likely to be recent because they are rare and have not spread throughout the population. Since an allele carries neighboring DNA regions along with it as it spreads through a population, the number of singletons near it can be used to roughly estimate how quickly the allele has changed its frequency. This approach can unveil evolution within the last 2,000 years, or a hundred human generations. Armed with this technique and data from the UK10K project, Pritchard and his team found that alleles for lactase persistence, blond hair, and blue eyes have spread rapidly among Britons within the last two millennia or so. Britain's cloudy skies may have played a role, in that the genes for light hair could also cause light skin, reducing the chances of vitamin D deficiency. Sexual selection could also favor blond hair. The technique also enabled them to track the selection of polygenic traits (those affected by a multitude of genes, rather than just one) such as height, infant head circumference, and female hip size (crucial for giving birth).[23] They found that natural selection has been favoring increased height and larger head and female hip sizes among Britons. Moreover, lactase persistence showed signs of active selection during the same period. However, evidence for the selection of polygenic traits is weaker than that for traits affected by only one gene.[87]

A 2012 paper studied the DNA sequences of around 6,500 Americans of European and African descent and confirmed earlier work indicating that the majority of changes to a single letter in the sequence (single nucleotide variants) accumulated within the last 5,000–10,000 years. Almost three quarters arose in the last 5,000 years or so. About 14% of the variants are potentially harmful, and among those, 86% were 5,000 years old or younger. The researchers also found that European Americans had accumulated a much larger number of mutations than African Americans. This is likely a consequence of their ancestors' migration out of Africa, which resulted in a genetic bottleneck; there were few mates available. Despite the subsequent exponential growth in population, natural selection has not had enough time to eradicate the harmful mutations. While humans today carry far more mutations than their ancestors did 5,000 years ago, they are not necessarily more vulnerable to illnesses because these might be caused by multiple mutations. The finding does, however, confirm earlier research suggesting that common diseases are not caused by common gene variants.[88] In any case, the fact that the human gene pool has accumulated so many mutations over such a short period of time, in evolutionary terms, and that the human population has exploded in that time means that humanity is more evolvable than ever before. Natural selection might eventually catch up with the variations in the gene pool, as theoretical models suggest that evolutionary pressures increase as a function of population size.[89]

Early Modern Period to present

A study published in 2021 states that the populations of the Cape Verde islands off the coast of West Africa have rapidly evolved resistance to malaria within roughly the last 20 generations, since the start of human habitation there. As expected, the residents of the island of Santiago, where malaria is most prevalent, show the highest prevalence of resistance. This is one of the most rapid cases of change to the human genome measured.[90][91]

Geneticist Steve Jones told the BBC that during the sixteenth century, only a third of English babies survived until the age of 21, compared to 99% in the twenty-first century. Medical advances, especially those made in the twentieth century, made this change possible. Yet while people from the developed world today are living longer and healthier lives, many are choosing to have just a few or no children at all, meaning evolutionary forces continue to act on the human gene pool, just in a different way.[92]

Natural selection affects only 8% of the human genome, meaning mutations in the remaining parts of the genome can change their frequency by pure chance through genetic drift. If natural selective pressures are reduced, then more mutations survive, which could increase their frequency and the rate of evolution. For humans, a large source of heritable mutations is sperm; a man accumulates more and more mutations in his sperm as he ages. Hence, men delaying reproduction can affect human evolution.[2]

A 2012 study led by Augustin Kong suggests that the number of de novo (new) mutations increases by about two per year of delayed reproduction by the father and that the total number of paternal mutations doubles every 16.5 years.[93]
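
The two figures can be read as alternative summaries of the same trend: a linear one (about two additional mutations per year) and an exponential one (a doubling every 16.5 years). The sketch below compares what each implies for fathers of different ages. The reference point (`BASELINE` mutations at `BASELINE_AGE`) is a hypothetical value chosen only for illustration and is not taken from the study; treating the two figures as separate models is likewise an assumption.

```python
def linear_mutations(baseline, baseline_age, father_age, per_year=2.0):
    """Linear reading: about two extra de novo mutations per year of paternal age."""
    return baseline + per_year * (father_age - baseline_age)


def doubling_mutations(baseline, baseline_age, father_age, doubling_years=16.5):
    """Exponential reading: paternal mutations double every 16.5 years."""
    return baseline * 2 ** ((father_age - baseline_age) / doubling_years)


# Hypothetical reference point (an assumption, not a figure from the study).
BASELINE = 25.0      # paternal de novo mutations at the reference age
BASELINE_AGE = 20.0  # reference paternal age in years

if __name__ == "__main__":
    for age in (20.0, 30.0, 36.5, 40.0):
        lin = linear_mutations(BASELINE, BASELINE_AGE, age)
        exp = doubling_mutations(BASELINE, BASELINE_AGE, age)
        print(f"father aged {age:>4}: linear {lin:6.1f}, doubling {exp:6.1f}")
```

With a different baseline the two readings diverge at different ages, so the comparison is only as meaningful as the reference point plugged in.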

For a long time, medicine has reduced the fatality of genetic defects and contagious diseases, allowing more and more humans to survive and reproduce, but it has also enabled maladaptive traits that would otherwise be culled to accumulate in the gene pool. This is not a problem as long as access to modern healthcare is maintained. But natural selective pressures will mount considerably if that is taken away.[18] Nevertheless, dependence on medicine rather than genetic adaptations will likely be the driving force behind humanity's fight against diseases for the foreseeable future. Moreover, while the introduction of antibiotics initially reduced the mortality rates due to infectious diseases by significant amounts, overuse has led to the rise of antibiotic-resistant strains of bacteria, making many illnesses major causes of death once again.[67]

Human jaws and teeth have been shrinking in proportion with the decrease in body size in the last 30,000 years as a result of new diets and technology. There are many individuals today who do not have enough space in their mouths for their third molars (or wisdom teeth) due to reduced jaw sizes. In the twentieth century, the trend toward smaller teeth appeared to have been slightly reversed due to the introduction of fluoride, which thickens dental enamel, thereby enlarging the teeth.[66]

Recent research suggests that menopause is evolving to occur later. Other reported trends appear to include lengthening of the human reproductive period and reduction in cholesterol levels, blood glucose and blood pressure in some populations.[17]

Population geneticist Emmanuel Milot and his team studied recent human evolution on an isolated Canadian island using 140 years of church records. They found that selection favored younger age at first birth among women.[8] In particular, the average age at first birth of women from Coudres Island (Île aux Coudres), 80 km (50 mi) northeast of Québec City, decreased by four years between 1800 and 1930. Women who started having children sooner generally ended up with more children in total who survived to adulthood. In other words, for these French-Canadian women, reproductive success was associated with lower age at first childbirth. Maternal age at first birth is a highly heritable trait.[94]

Human evolution continues during the modern era, including among industrialized nations. Factors such as access to contraception and freedom from predators do not stop natural selection.[95] Among developed countries, where life expectancy is high and infant mortality rates are low, selective pressures are the strongest on traits that influence the number of children a human has. It is speculated that alleles influencing sexual behavior would be subject to strong selection, though the details of how genes can affect said behavior remain unclear.[10]

Historically, as a by-product of the ability to walk upright, humans evolved to have narrower hips and birth canals and to have larger heads. Compared with that of close relatives such as chimpanzees, human childbirth is a highly challenging and potentially fatal experience. Thus began an evolutionary tug-of-war (see Obstetrical dilemma). For babies, having larger heads proved beneficial as long as their mothers' hips were wide enough. If not, both mother and child typically died. This is an example of balancing selection, or the removal of extreme traits. In this case, heads that were too large or hips that were too small were selected against. This evolutionary tug-of-war attained an equilibrium, making these traits remain more or less constant over time while allowing for genetic variation to flourish, thus paving the way for rapid evolution should selective forces shift their direction.[96]

All this changed in the twentieth century as Caesarean sections (also known as C-sections) became safer and more common in some parts of the world.[97] Larger head sizes continue to be favored while selective pressures against smaller hip sizes have diminished. Projecting forward, this means that human heads would continue to grow while hip sizes would not. As a result of increasing fetopelvic disproportion, C-sections would become more and more common in a positive feedback loop, though not necessarily to the extent that natural childbirth would become obsolete.[96][97]

Paleoanthropologist Briana Pobiner of the Smithsonian Institution noted that cultural factors could play a role in the widely different rates of C-sections across the developed and developing worlds. Daghni Rajasingam of the Royal College of Obstetricians observed that the increasing rates of diabetes and obesity among women of reproductive age also boost the demand for C-sections.[97] Biologist Philipp Mitteroecker from the University of Vienna and his team estimated that about six percent of all births worldwide were obstructed and required medical intervention. In the United Kingdom, one quarter of all births involved a C-section, while in the United States the number was one in three. Mitteroecker and colleagues discovered that the rate of C-sections has gone up 10% to 20% since the mid-twentieth century. They argued that because the availability of safe Caesarean sections significantly reduced maternal and infant mortality rates in the developed world, it has induced an evolutionary change. However, "It's not easy to foresee what this will mean for the future of humans and birth," Mitteroecker told The Independent. This is because the increase in baby sizes is limited by the mother's metabolic capacity and by modern medicine, which makes it more likely that neonates who are born prematurely or are underweight will survive.[98]

Researchers participating in the Framingham Heart Study, which began in 1948 and was intended to investigate the cause of heart disease among women and their descendants in Framingham, Massachusetts, found evidence for selective pressures against high blood pressure due to the modern Western diet, which contains high amounts of salt, known for raising blood pressure. They also found evidence for selection against hypercholesterolemia, or high levels of cholesterol in the blood.[18] Evolutionary geneticist Stephen Stearns and his colleagues reported signs that women were gradually becoming shorter and heavier. Stearns argued that human culture and the changes humans have made to their natural environments are driving human evolution rather than bringing the process to a halt.[92] The data indicate that the women were not eating more; rather, the ones who were heavier tended to have more children.[99] Stearns and his team also discovered that the subjects of the study tended to reach menopause later; they estimated that if the environment remains the same, the average age at menopause will increase by about a year in 200 years, or about ten generations. All these traits have medium to high heritability.[10] Given the starting date of the study, the spread of these adaptations can be observed in just a few generations.[18]

By analyzing genomic data of 60,000 individuals of Caucasian descent from Kaiser Permanente in Northern California, and of 150,000 people in the UK Biobank, evolutionary geneticist Joseph Pickrell and evolutionary biologist Molly Przeworski were able to identify signs of biological evolution among living human generations. For the purposes of studying evolution, one lifetime is the shortest possible time scale. An allele associated with difficulty withdrawing from tobacco smoking dropped in frequency among the British but not among the Northern Californians. This suggests that heavy smokers, who were common in Britain during the 1950s but not in Northern California, were selected against. A set of alleles linked to later menarche was more common among women who lived for longer. An allele called ApoE4, linked to Alzheimer's disease, fell in frequency as carriers tended to not live for very long.[23] In fact, these were the only traits reducing life expectancy that Pickrell and Przeworski found, which suggests that other harmful traits have probably already been eradicated. Only among older people are the effects of Alzheimer's disease and smoking visible. Moreover, smoking is a relatively recent trend. It is not entirely clear why such traits bring evolutionary disadvantages, however, since older people have already had children. Scientists proposed that either they also bring about harmful effects in youth or that they reduce an individual's inclusive fitness, or the tendency of organisms that share the same genes to help each other. Thus, mutations that make it difficult for grandparents to help raise their grandchildren are unlikely to propagate throughout the population.[8] Pickrell and Przeworski also investigated 42 traits determined by multiple alleles rather than just one, such as the timing of puberty. They found that later puberty and older age of first birth were correlated with higher life expectancy.[8]
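
The basic logic of such a comparison, checking whether an allele is rarer among older people than among younger ones, can be illustrated with a simple two-proportion z-test. This is only a sketch of the general idea and not the method the researchers used, which the text above does not describe. The function names and all counts are hypothetical, and the test assumes that each person contributes two independent copies of the gene.

```python
import math


def allele_frequency(allele_copies, n_people):
    """Frequency of an allele among diploid individuals (two copies each)."""
    return allele_copies / (2 * n_people)


def two_proportion_z(copies_young, n_young, copies_old, n_old):
    """Two-sided z-test for a difference in allele frequency between cohorts.

    Returns (z, p_value). A positive z means the allele is more common
    in the younger cohort than in the older one.
    """
    chrom_young = 2 * n_young  # chromosomes sampled in the younger cohort
    chrom_old = 2 * n_old      # chromosomes sampled in the older cohort
    p_young = copies_young / chrom_young
    p_old = copies_old / chrom_old
    pooled = (copies_young + copies_old) / (chrom_young + chrom_old)
    se = math.sqrt(pooled * (1 - pooled) * (1 / chrom_young + 1 / chrom_old))
    z = (p_young - p_old) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: allele copies and cohort sizes, not real data.
    z, p = two_proportion_z(copies_young=300, n_young=1000,
                            copies_old=200, n_old=1000)
    print(f"young: {allele_frequency(300, 1000):.3f}, "
          f"old: {allele_frequency(200, 1000):.3f}, z = {z:.2f}, p = {p:.2g}")
```

A lower frequency among the old is consistent with carriers dying earlier, but a cross-sectional difference of this kind can also reflect migration or differences between birth cohorts, so it is a starting point rather than proof of selection.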

Larger sample sizes allow for the study of rarer mutations. Pickrell and Przeworski told The Atlantic that a sample of half a million individuals would enable them to study mutations that occur among only 2% of the population, which would provide finer details of recent human evolution.[8] While studies of short time scales such as these are vulnerable to random statistical fluctuations, they can improve understanding of the factors that affect survival and reproduction among contemporary human populations.[23]

Evolutionary geneticist Jaleal Sanjak and his team analyzed genetic and medical information from more than 200,000 women over the age of 45 and 150,000 men over the age of 50—people who have passed their reproductive years—from the UK Biobank and identified 13 traits among women and ten among men that were linked to having children at a younger age, having a higher body-mass index,[note 3] fewer years of education, and lower levels of fluid intelligence, or the capacity for logical reasoning and problem solving. Sanjak noted, however, that it was not known whether having children actually made women heavier or being heavier made it easier to reproduce. Because taller men and shorter women tended to have more children and because the genes associated with height affect men and women equally, the average height of the population will likely remain the same. Among women who had children later, those with higher levels of education had more children.[95]

Evolutionary biologist Hakhamanesh Mostafavi led a 2017 study that analyzed data of 215,000 individuals from just a few generations in the United Kingdom and the United States and found a number of genetic changes that affect longevity. The ApoE allele linked to Alzheimer's disease was rare among women aged 70 and over, while the frequency of a variant of the CHRNA3 gene associated with smoking addiction fell among men from middle age onward. Because this is not itself evidence of evolution, since natural selection acts on successful reproduction rather than longevity, scientists have proposed a number of explanations. Men who live longer tend to have more children. Men and women who survive until old age can help take care of both their children and grandchildren, which benefits their descendants down the generations. This explanation is known as the grandmother hypothesis. It is also possible that Alzheimer's disease and smoking addiction are harmful earlier in life as well, but the effects are more subtle and larger sample sizes are required in order to study them. Mostafavi and his team also found that mutations causing health problems such as asthma, a high body-mass index and high cholesterol levels were more common among those with shorter lifespans, while mutations leading to delayed puberty and reproduction were more common among long-lived individuals. According to geneticist Jonathan Pritchard, while the link between fertility and longevity was identified in previous studies, those did not entirely rule out the effects of educational and financial status (people who rank high in both tend to have children later in life); this seems to suggest the existence of an evolutionary trade-off between longevity and fertility.[100]

In South Africa, where large numbers of people are infected with HIV, some have genes that help them combat this virus, making it more likely that they would survive and pass this trait on to their children.[101] If the virus persists, humans living in this part of the world could become resistant to it in as little as hundreds of years. However, because HIV evolves more quickly than humans, it will more likely be dealt with technologically rather than genetically.[10]

A 2017 study by researchers from Northwestern University unveiled a mutation among the Old Order Amish living in Berne, Indiana, that reduces their chances of having diabetes and extends their life expectancy by about ten years on average. The mutation occurs in the gene SERPINE1, which codes for the protein PAI-1 (plasminogen activator inhibitor-1), which regulates blood clotting and plays a role in the aging process. About 24% of the people sampled carried this mutation and had a life expectancy of 85, higher than the community average of 75. Researchers also found the telomeres (non-functional ends of human chromosomes) of those with the mutation to be longer than those of people without it. Because telomeres shorten as a person ages, those with longer telomeres tend to live longer. At present, the Amish live in 22 U.S. states plus the Canadian province of Ontario. They live simple lifestyles that date back centuries and generally insulate themselves from modern North American society. They are mostly indifferent towards modern medicine, but scientists do have a healthy relationship with the Amish community in Berne. Their detailed genealogical records make them ideal subjects for research.[20]

In 2020, Teghan Lucas, Maciej Henneberg, and Jaliya Kumaratilake presented evidence that a growing share of the human population retains the median artery in the forearm. This structure forms during fetal development but normally dissolves once two other arteries, the radial and ulnar arteries, develop. The median artery allows for more blood flow and could be used as a replacement in certain surgeries. Their statistical analysis suggested that the retention of the median artery has been under extremely strong selection within the last 250 years or so. People have been studying this structure and its prevalence since the eighteenth century.[19][102]

Multidisciplinary research suggests that ongoing evolution could help explain the rise of certain medical conditions such as autism and autoimmune disorders. Autism and schizophrenia may be due to genes inherited from the mother and the father which are over-expressed and which fight a tug-of-war in the child's body. Allergies, asthma, and autoimmune disorders appear linked to higher standards of sanitation, which prevent the immune systems of modern humans from being exposed to various parasites and pathogens the way their ancestors' were, making them hypersensitive and more likely to overreact. The human body is not built from a professionally engineered blueprint but a system shaped over long periods of time by evolution with all kinds of trade-offs and imperfections. Understanding the evolution of the human body can help medical doctors better understand and treat various disorders. Research in evolutionary medicine suggests that diseases are prevalent because natural selection favors reproduction over health and longevity. In addition, biological evolution is slower than cultural evolution and humans evolve more slowly than pathogens.[103]

Whereas in the ancestral past, humans lived in geographically isolated communities where inbreeding was rather common,[67] modern transportation technologies have made it much easier for people to travel great distances and facilitated further genetic mixing, giving rise to additional variations in the human gene pool.[99] They also enable the spread of diseases worldwide, which can have an effect on human evolution.[67] Furthermore, climate change may trigger the mass migration of not just humans but also diseases affecting humans.[74] Besides the selection and flow of genes and alleles, another mechanism of biological evolution is epigenetics, or changes not to the DNA sequence itself, but rather the way it is expressed. Scientists already know that chronic illnesses and stress can act through epigenetic mechanisms.[3]

Notes

- ^ "Specifically, genes in the LCP [lipid catabolic process] term had the greatest excess of NLS in populations of European descent, with an average NLS frequency of 20.8±2.6% versus 5.9±0.08% genome wide (two-sided t-test, P<0.0001, n=379 Europeans and n=246 Africans). Further, among examined out-of-Africa human populations, the excess of NLS [Neanderthal-like genomic sites] in LCP genes was only observed in individuals of European descent: the average NLS frequency in Asians is 6.7±0.7% in LCP genes versus 6.2±0.06% genome wide."[28]

- ^ Mathematically, area is a function of the square of distance whereas volume is a function of distance cubed. Volume therefore grows faster than area. See the square-cube law.

- ^ Defined as the mass (in kilograms) divided by the square of the height (in meters).

References

- ^ Dunham, Will (December 10, 2007). "Rapid acceleration in human evolution described". Science News. Reuters. Retrieved May 17, 2020.

- ^ a b c d e f Hurst, Laurence D. (November 14, 2018). "Human evolution is still happening – possibly faster than ever". Science and Technology. The Conversation. Retrieved May 17, 2020.

- ^ a b c d Flatow, Ira (September 27, 2013). "Modern Humans Still Evolving, and Faster Than Ever". Science. NPR. Retrieved May 19, 2020.

- ^ Stock, Jay T (2008). "Are humans still evolving?: Technological advances and unique biological characteristics allow us to adapt to environmental stress. Has this stopped genetic evolution?". EMBO Reports. 9 (Suppl 1): S51–S54. doi:10.1038/embor.2008.63. PMC 3327538. PMID 18578026.

- ^ a b c d Biello, David (December 10, 2007). "Culture Speeds Up Human Evolution". Scientific American. Archived from the original on November 12, 2020. Retrieved March 19, 2021.

- ^ Pinker, Steven (June 26, 2006). "Groups and Genes". The New Republic. Retrieved October 25, 2017.

- ^ Pinker, Steven (2012). "The False Allure of Group Selection". Edge. Retrieved November 28, 2018.

- ^ a b c d e f Zhang, Sarah (September 13, 2017). "Huge DNA Databases Reveal the Recent Evolution of Humans". Science. The Atlantic. Retrieved May 18, 2020.

- ^ Pinhasi, Ron; Thomas, Mark G.; Hofreiter, Michael; Currat, Mathias; Burger, Joachim (October 2012). "The genetic history of Europeans". Trends in Genetics. 28 (10): 496–505. doi:10.1016/j.tig.2012.06.006. PMID 22889475.

- ^ a b c d "How We Are Evolving". Scientific American. November 1, 2012. Retrieved May 27, 2020.

- ^ a b Ilardo, M. A.; Moltke, I.; Korneliussen, T. S.; Cheng, J.; Stern, A. J.; Racimo, F.; de Barros Damgaard, P.; Sikora, M.; Seguin-Orlando, A.; Rasmussen, S.; van den Munckhof, I. C. L.; ter Horst, R.; Joosten, L. A. B.; Netea, M. G.; Salingkat, S.; Nielsen, R.; Willerslev, E. (April 18, 2018). "Physiological and Genetic Adaptations to Diving in Sea Nomads". Cell. 173 (3): 569–580.e15. doi:10.1016/j.cell.2018.03.054. PMID 29677510.

- ^ a b Karlsson, Elinor K.; Kwiatkowski, Dominic P.; Sabeti, Pardis C. (June 2015). "Natural selection and infectious disease in human populations". Nature Reviews Genetics. 15 (6): 379–393. doi:10.1038/nrg3734. PMC 4912034. PMID 24776769.

- ^ a b c Burger, Joachim; Thomas, Mark G.; Schier, Wolfram; Potekhina, Inna D.; Hollfelder, Nina; Unterländer, Martina; Kayser, Manfred; Kaiser, Elke; Kirsanow, Karola (April 1, 2014). "Direct evidence for positive selection of skin, hair, and eye pigmentation in Europeans during the last 5,000 y". Proceedings of the National Academy of Sciences. 111 (13): 4832–4837. Bibcode:2014PNAS..111.4832W. doi:10.1073/pnas.1316513111. PMC 3977302. PMID 24616518.

- ^ a b University of Copenhagen (January 31, 2008). "Blue-eyed humans have a single, common ancestor". Science Daily. Retrieved May 23, 2020.

- ^ a b c Curry, Andrew (July 31, 2013). "Archaeology: The milk revolution". Nature. 500 (7460): 20–2. Bibcode:2013Natur.500...20C. doi:10.1038/500020a. PMID 23903732.

- ^ a b c d Check, Erika (December 21, 2006). "How Africa learned to love the cow". Nature. 444 (7122): 994–996. doi:10.1038/444994a. PMID 17183288.

- ^ a b Byars, S. G.; Ewbank, D.; Govindaraju, D. R.; Stearns, S. C. (2009). "Natural selection in a contemporary human population". Proceedings of the National Academy of Sciences. 107 (suppl_1): 1787–1792. Bibcode:2010PNAS..107.1787B. doi:10.1073/pnas.0906199106. PMC 2868295. PMID 19858476.

- ^ a b c d e Funnell, Anthony (May 14, 2019). "It isn't obvious, but humans are still evolving. So what will the future hold?". ABC News (Australia). Retrieved May 22, 2020.

- ^ a b Lucas, Teghan; Henneberg, Maciej; Kumaratilake, Jaliya (September 10, 2020). "Recently increased prevalence of the human median artery of the forearm: A microevolutionary change". Journal of Anatomy. 237 (4): 623–631. doi:10.1111/joa.13224. PMC 7495300. PMID 32914433.

- ^ a b c O'Connor, Joe (November 17, 2017). "Do Amish hold the key to a longer life? Study finds anti-aging gene in religious group". The National Post. Retrieved May 27, 2020.

- ^ Gruber, Karl (May 29, 2018). "We are still evolving". Biology. Phys.org. Retrieved May 25, 2020.

- ^ NPR Staff (January 2, 2011). "Our Brains Are Shrinking. Are We Getting Dumber?". Science. NPR. Retrieved May 18, 2020.

- ^ a b c d Pennisi, Elizabeth (May 17, 2016). "Humans are still evolving—and we can watch it happen". Science Magazine. Retrieved May 18, 2020.

- ^ a b Dorey, Fran (April 20, 2020). "The Denisovans". Australian Museum. Retrieved June 3, 2020.

- ^ Churchill, Steven E.; Keys, Kamryn; Ross, Ann H. (August 2022). "Midfacial Morphology and Neandertal–Modern Human Interbreeding". Biology. 11 (8): 1163. doi:10.3390/biology11081163. ISSN 2079-7737. PMC 9404802. PMID 36009790.

- ^ a b Howard Hughes Medical Institute (May 2, 2016). "The genetic history of Ice Age Europe". Science Daily. Retrieved May 23, 2020.

- ^ a b c Callaway, Ewen (March 26, 2014). "Human evolution: The Neanderthal in the family". Nature. Retrieved May 29, 2020.

- ^ a b Khrameeva, E; Bozek, K; He, L; Yan, Z; Jiang, X; Wei, Y; Tang, K; Gelfand, MS; Prüfer, K; Kelso, J; Pääbo, S; Giavalisco, P; Lachmann, M; Khaitovich, P (2014). "Neanderthal ancestry drives evolution of lipid catabolism in contemporary Europeans". Nature Communications. 5 (3584): 3584. Bibcode:2014NatCo...5.3584K. doi:10.1038/ncomms4584. PMC 3988804. PMID 24690587.

- ^ a b c d Cell Press (January 30, 2020). "Modern Africans and Europeans may have more Neanderthal ancestry than previously thought". Phys.org. Retrieved May 31, 2020.

- ^ a b Green RE, Krause J, Briggs AW, Maricic T, Stenzel U, Kircher M, et al. (May 2010). "A draft sequence of the Neandertal genome". Science. 328 (5979): 710–722. Bibcode:2010Sci...328..710G. doi:10.1126/science.1188021. PMC 5100745. PMID 20448178.

- ^ Dannemann, Michael; Kelso, Janet (October 2017). "The Contribution of Neanderthals to Phenotypic Variation in Modern Humans". The American Journal of Human Genetics. 101 (4): 578–589. doi:10.1016/j.ajhg.2017.09.010. PMC 5630192. PMID 28985494.

- ^ Evans, Patrick D.; Mekel-Bobrov, Nitzan; Vallender, Eric J.; Hudson, Richard R.; Lahn, Bruce T. (November 28, 2006). Harpending, Henry C. (ed.). "Evidence that the adaptive allele of the brain size gene microcephalin introgressed into Homo sapiens from an archaic Homo lineage". Proceedings of the National Academy of Sciences of the United States of America. 103 (48): 18178–18183. Bibcode:2006PNAS..10318178E. doi:10.1073/pnas.0606966103. PMC 1635020. PMID 17090677.

- ^ Pennisi E (February 2009). "Neandertal genomics. Tales of a prehistoric human genome". Science. 323 (5916): 866–71. doi:10.1126/science.323.5916.866. PMID 19213888. S2CID 206584252.

- ^ Timpson N, Heron J, Smith GD, Enard W (August 2007). "Comment on papers by Evans et al. and Mekel-Bobrov et al. on Evidence for Positive Selection of MCPH1 and ASPM". Science. 317 (5841): 1036, author reply 1036. Bibcode:2007Sci...317.1036T. doi:10.1126/science.1141705. PMID 17717170.

- ^ Mekel-Bobrov N, Posthuma D, Gilbert SL, Lind P, Gosso MF, Luciano M, et al. (March 2007). "The ongoing adaptive evolution of ASPM and Microcephalin is not explained by increased intelligence". Human Molecular Genetics. 16 (6): 600–8. doi:10.1093/hmg/ddl487. PMID 17220170.

- ^ Rushton JP, Vernon PA, Bons TA (April 2007). "No evidence that polymorphisms of brain regulator genes Microcephalin and ASPM are associated with general mental ability, head circumference or altruism". Biology Letters. 3 (2): 157–60. doi:10.1098/rsbl.2006.0586. PMC 2104484. PMID 17251122.

- ^ "Only a tiny fraction of our DNA is uniquely human". Science News. July 16, 2021. Retrieved August 13, 2021.

- ^ Schaefer, Nathan K.; Shapiro, Beth; Green, Richard E. (July 1, 2021). "An ancestral recombination graph of human, Neanderthal, and Denisovan genomes". Science Advances. 7 (29) eabc0776. Bibcode:2021SciA....7..776S. doi:10.1126/sciadv.abc0776. ISSN 2375-2548. PMC 8284891. PMID 34272242.

- ^ a b c López, Saioa; van Dorp, Lucy; Hellenthal, Garrett (April 21, 2016). "Human Dispersal Out of Africa: A Lasting Debate". Evolutionary Bioinformatics. 11 (Suppl 2): 57–68. doi:10.4137/EBO.S33489. PMC 4844272. PMID 27127403.

- ^ Wood, Barry (July 1, 2019). "Genotemporality: The DNA Revolution and The Prehistory of Human Migration". Journal of Big History. 3 (3). The International Big History Association: 225–231. doi:10.22339/jbh.v3i3.3313.

- ^ Diamond, Jared (April 20, 2018). "A Brand-New Version of Our Origin Story". Book Reviews. The New York Times. Archived from the original on December 5, 2020. Retrieved April 19, 2021.

- ^ Harvati, Katerina; Röding, Carolin; et al. (July 2019). "Apidima Cave fossils provide earliest evidence of Homo sapiens in Eurasia". Nature. 571 (7766): 500–504. doi:10.1038/s41586-019-1376-z. hdl:10072/397334. PMID 31292546. S2CID 195873640.

- ^ AFP (July 10, 2019). "'Oldest remains' outside Africa reset human migration clock". Phys.org. Retrieved July 10, 2019.

- ^ a b Zimmer, Carl (July 10, 2019). "A Skull Bone Discovered in Greece May Alter the Story of Human Prehistory - The bone, found in a cave, is the oldest modern human fossil ever discovered in Europe. It hints that humans began leaving Africa far earlier than once thought". Science. The New York Times. Archived from the original on January 2, 2021. Retrieved July 11, 2019.

- ^ Rito T, Vieira D, Silva M, Conde-Sousa E, Pereira L, Mellars P, et al. (March 2019). "A dispersal of Homo sapiens from southern to eastern Africa immediately preceded the out-of-Africa migration". Scientific Reports. 9 (1): 4728. Bibcode:2019NatSR...9.4728R. doi:10.1038/s41598-019-41176-3. PMC 6426877. PMID 30894612.

- ^ Posth C, Renaud G, Mittnik M, Drucker DG, Rougier H, Cupillard C, et al. (2016). "Pleistocene Mitochondrial Genomes Suggest a Single Major Dispersal of Non-Africans and a Late Glacial Population Turnover in Europe". Current Biology. 26 (6): 827–833. Bibcode:2016CBio...26..827P. doi:10.1016/j.cub.2016.01.037. hdl:2440/114930. PMID 26853362. S2CID 140098861.

- ^ Karmin M, Saag L, Vicente M, Wilson Sayres MA, Järve M, Talas UG, et al. (April 2015). "A recent bottleneck of Y chromosome diversity coincides with a global change in culture". Genome Research. 25 (4): 459–66. doi:10.1101/gr.186684.114. PMC 4381518. PMID 25770088.

- ^ Haber M, Jones AL, Connell BA, Arciero E, Yang H, Thomas MG, et al. (August 2019). "A Rare Deep-Rooting D0 African Y-Chromosomal Haplogroup and Its Implications for the Expansion of Modern Humans Out of Africa". Genetics. 212 (4): 1421–1428. doi:10.1534/genetics.119.302368. PMC 6707464. PMID 31196864.

- ^ Finlayson, Clive (2009). "Chapter 3: Failed Experiments". The humans who went extinct: why Neanderthals died out and we survived. Oxford University Press. p. 68. ISBN 978-0-19-923918-4.

- ^ a b c d Longrich, Nicholas R. (September 9, 2020). "When did we become fully human? What fossils and DNA tell us about the evolution of modern intelligence". The Conversation. Retrieved March 23, 2021.

- ^ Conard, Nicholas J.; Malina, Maria; Münzel, Susanne C. (June 24, 2009). "New flutes document the earliest musical tradition in southwestern Germany". Nature. 460 (2009): 737–740. Bibcode:2009Natur.460..737C. doi:10.1038/nature08169. PMID 19553935. S2CID 4336590.

- ^ a b Price, Michael (December 13, 2019). "Cave painting suggests ancient origin of modern mind". Archaeology. Science. 366 (6471). American Association for the Advancement of Science: 1299. Bibcode:2019Sci...366.1299P. doi:10.1126/science.366.6471.1299. PMID 31831650. S2CID 209342930.

- ^ a b Wells, Spencer (July 3, 2003). "The great leap". Research. The Guardian. Retrieved March 23, 2021.

- ^ Kwon, Bongsik; Nguyen, Anh H. (August 2015). "Reconsideration of the Epicanthus: Evolution of the Eyelid and the Devolutional Concept of Asian Blepharoplasty". Seminars in Plastic Surgery. 29 (3): 171–183. doi:10.1055/s-0035-1556849. ISSN 1535-2188. PMC 4536067. PMID 26306084.

- ^ Poirier, Frank (October 13, 2021). "Origin and evolution of the epicanthic fold remains a puzzle for scientists". Chicago Tribune.

- ^ O'Dea, JD (January 1994). "Possible contribution of low ultraviolet light under the rainforest canopy to the small stature of Pygmies and Negritos". Homo: Journal of Comparative Human Biology. 44 (3): 284–7.

- ^ a b Kamberov, Yana G.; Wang, Sijia; Tan, Jingze; Gerbault, Pascale; Wark, Abigail; Tan, Longzhi; Yang, Yajun; Li, Shilin; Tang, Kun; Chen, Hua; Powell, Adam; Itan, Yuval; Fuller, Dorian; Lohmueller, Jason; Mao, Junhao; Schachar, Asa; Paymer, Madeline; Hostetter, Elizabeth; Byrne, Elizabeth; Burnett, Melissa; McMahon, Andrew P.; Thomas, Mark G.; Lieberman, Daniel E.; Jin, Li; Tabin, Clifford J.; Morgan, Bruce A.; Sabeti, Pardis C. (February 2013). "Modeling Recent Human Evolution in Mice by Expression of a Selected EDAR Variant". Cell. 152 (4): 691–702. doi:10.1016/j.cell.2013.01.016. PMC 3575602. PMID 23415220.

- ^ Mao, Xiaowei; Zhang, Hucai; Qiao, Shiyu; Liu, Yichen; Chang, Fengqin; Xie, Ping; Zhang, Ming; Wang, Tianyi; Li, Mian; Cao, Peng; Yang, Ruowei; Liu, Feng; Dai, Qingyan; Feng, Xiaotian; Ping, Wanjing (2021-06-10). "The deep population history of northern East Asia from the Late Pleistocene to the Holocene". Cell. 184 (12): 3256–3266.e13. doi:10.1016/j.cell.2021.04.040. ISSN 0092-8674. PMID 34048699.

- ^ Zhang, Xiaoming; Ji, Xueping; Li, Chunmei; Yang, Tingyu; Huang, Jiahui; Zhao, Yinhui; Wu, Yun; Ma, Shiwu; Pang, Yuhong; Huang, Yanyi; He, Yaoxi; Su, Bing (July 25, 2022). "A Late Pleistocene human genome from Southwest China". Current Biology. 32 (14): 3095–3109.e5. Bibcode:2022CBio...32E3095Z. doi:10.1016/j.cub.2022.06.016. ISSN 0960-9822. PMID 35839766. S2CID 250502011.

- ^ Hlusko, Leslea J.; Carlson, Joshua P.; Chaplin, George; Elias, Scott A.; Hoffecker, John F.; Huffman, Michaela; Jablonski, Nina G.; Monson, Tesla A.; O'Rourke, Dennis H.; Pilloud, Marin A.; Scott, G. Richard (May 8, 2018). "Environmental selection during the last ice age on the mother-to-infant transmission of vitamin D and fatty acids through breast milk". Proceedings of the National Academy of Sciences. 115 (19): E4426–E4432. Bibcode:2018PNAS..115E4426H. doi:10.1073/pnas.1711788115. ISSN 0027-8424. PMC 5948952. PMID 29686092.

- ^ a b c Beleza, Sandra; Santos, A. M.; McEvoy, B.; Alves, I.; Martinho, C.; Cameron, E.; Shriver, M. D.; Parra, E. J.; Rocha, J. (2012). "The timing of pigmentation lightening in Europeans". Molecular Biology and Evolution. 30 (1): 24–35. doi:10.1093/molbev/mss207. PMC 3525146. PMID 22923467.

- ^ Lalueza-Fox, C.; Rompler, H.; Caramelli, D.; Staubert, C.; Catalano, G.; Hughes, D.; Rohland, N.; Pilli, E.; Longo, L.; Condemi, S.; de la Rasilla, M.; Fortea, J.; Rosas, A.; Stoneking, M.; Schoneberg, T.; Bertranpetit, J.; Hofreiter, M. (November 30, 2007). "A Melanocortin 1 Receptor Allele Suggests Varying Pigmentation Among Neanderthals". Science. 318 (5855): 1453–1455. Bibcode:2007Sci...318.1453L. doi:10.1126/science.1147417. PMID 17962522. S2CID 10087710.

- ^ Jones, Eppie R.; Gonzalez-Fortes, Gloria; Connell, Sarah; Siska, Veronika; Eriksson, Anders; Martiniano, Rui; McLaughlin, Russell L.; Gallego Llorente, Marcos; Cassidy, Lara M.; Gamba, Cristina; Meshveliani, Tengiz; Bar-Yosef, Ofer; Müller, Werner; Belfer-Cohen, Anna; Matskevich, Zinovi; Jakeli, Nino; Higham, Thomas F. G.; Currat, Mathias; Lordkipanidze, David; Hofreiter, Michael; Manica, Andrea; Pinhasi, Ron; Bradley, Daniel G. (November 16, 2015). "Upper Palaeolithic genomes reveal deep roots of modern Eurasians". Nature Communications. 6 (1): 8912. Bibcode:2015NatCo...6.8912J. doi:10.1038/ncomms9912. PMC 4660371. PMID 26567969.

- ^ Fu, Qiaomei; Posth, Cosimo (May 2, 2016). "The genetic history of Ice Age Europe". Nature. 534 (7606): 200–205. Bibcode:2016Natur.534..200F. doi:10.1038/nature17993. hdl:10211.3/198594. PMC 4943878. PMID 27135931.

- ^ Fumagalli, Matteo; et al. (2015). "Greenlandic Inuit show genetic signatures of diet and climate adaptation". Science. 349 (6254): 1343–1347. Bibcode:2015Sci...349.1343F. doi:10.1126/science.aab2319. hdl:10044/1/43212. PMID 26383953. S2CID 546365.

- ^ a b c d Dorey, Fran (May 27, 2020). "How have we changed since our species first appeared?". Australian Museum. Retrieved June 9, 2020.

- ^ a b c d Dorey, Fran (November 2, 2018). "How do we affect our evolution?". Australian Museum. Retrieved June 2, 2020.

- ^ Peng, Y.; et al. (2010). "The ADH1B Arg47His polymorphism in East Asian populations and expansion of rice domestication in history". BMC Evolutionary Biology. 10 (1): 15. Bibcode:2010BMCEE..10...15P. doi:10.1186/1471-2148-10-15. PMC 2823730. PMID 20089146.

- ^ Ségurel, Laure; Bon, Céline (2017). "On the Evolution of Lactase Persistence in Humans". Annual Review of Genomics and Human Genetics. 18 (1): 297–319. doi:10.1146/annurev-genom-091416-035340. PMID 28426286.

- ^ Ingram, Catherine J. E.; Mulcare, Charlotte A.; Itan, Yuval; Thomas, Mark G.; Swallow, Dallas M. (November 26, 2008). "Lactose digestion and the evolutionary genetics of lactase persistence". Human Genetics. 124 (6): 579–591. doi:10.1007/s00439-008-0593-6. PMID 19034520. S2CID 3329285.

- ^ a b Check, Erika (February 26, 2007). "Ancient DNA solves milk mystery". News. Nature. Retrieved May 22, 2020.

- ^ a b Universität Mainz (March 10, 2014). "Natural selection has altered the appearance of Europeans over the past 5,000 years". Science Daily. Retrieved March 21, 2021.

- ^ Ju, Dan; Mathieson, Ian (2021). "The evolution of skin pigmentation-associated variation in West Eurasia". PNAS. 118 (1) e2009227118. Bibcode:2021PNAS..11809227J. doi:10.1073/pnas.2009227118. PMC 7817156. PMID 33443182.

- ^ a b c d Solomon, Scott (September 7, 2018). "Climate change could affect human evolution. Here's how". Science. NBC News. Retrieved April 2, 2021.

- ^ Etcoff, Nancy (1999). "Chapter 4: Cover Me". Survival of the Prettiest. New York: Doubleday. pp. 115–6. ISBN 0-385-47854-2.

- ^ Frost, Peter (2006). "Frost: Why Do Europeans Have So Many Hair and Eye Colors?". Cogweb. University of California, Los Angeles. Retrieved March 21, 2021.

- ^ Forti, Isabela Rodrigues Nogueira; Young, Robert John (2016). "Human Commercial Models' Eye Colour Shows Negative Frequency-Dependent Selection". PLOS ONE. 11 (12) e0168458. Bibcode:2016PLoSO..1168458F. doi:10.1371/journal.pone.0168458. PMC 5179042. PMID 28005995.

- ^ American Friends of Tel Aviv University (August 20, 2018). "DNA analysis of 6,500-year-old human remains with blue eye mutation". Science Daily. Retrieved May 23, 2020.

- ^ Choi, Charles Q. (November 13, 2009). "Humans still evolving as our brains shrink". Science. NBC News. Archived from the original on February 4, 2019. Retrieved May 24, 2020.

- ^ "City living helped humans evolve immunity to tuberculosis and leprosy, new research suggests". University College London via Science Daily. September 24, 2010. Retrieved May 22, 2020.

- ^ Choi, Charles Q. (September 24, 2010). "Does city life affect human evolution?". Science. NBC News. Retrieved May 22, 2020.

- ^ Kerner, Gaspard; Neehus, Anna-Lena; Philippot, Quentin; Bohlen, Jonathan; Rinchai, Darawan; Kerrouche, Nacim; Puel, Anne; Zhang, Shen-Ying; Boisson-Dupuis, Stéphanie; Abel, Laurent; Casanova, Jean-Laurent; Patin, Etienne; Laval, Guillaume; Quintana-Murci, Lluis (February 8, 2023). "Genetic adaptation to pathogens and increased risk of inflammatory disorders in post-Neolithic Europe". Cell Genomics. 3 (2) 100248. doi:10.1016/j.xgen.2022.100248. ISSN 2666-979X. PMC 9932995. PMID 36819665. S2CID 250341156.

- ^ Barreiro, Luis B.; Quintana-Murci, Lluís (January 2010). "From evolutionary genetics to human immunology: how selection shapes host defence genes". Nature Reviews Genetics. 11 (1): 17–30. doi:10.1038/nrg2698. ISSN 1471-0064. PMID 19953080. S2CID 15705508.

- ^ Medical Research Council (UK) (November 21, 2009). "Brain Disease 'Resistance Gene' evolves in Papua New Guinea community; could offer insights Into CJD". ScienceDaily. Rockville, MD: ScienceDaily, LLC. Retrieved November 22, 2009.

- ^ Mead, Simon; Whitfield, Jerome; Poulter, Mark; Shah, Paresh; Uphill, James; Campbell, Tracy; Al-Dujaily, Huda; Hummerich, Holger; Beck, Jon; Mein, Charles A.; Verzilli, Claudio; Whittaker, John; Alpers, Michael P.; Collinge, John (November 19, 2009). "A Novel Protective Prion Protein Variant that Colocalizes with Kuru Exposure" (PDF). New England Journal of Medicine. 361 (21): 2056–2065. doi:10.1056/NEJMoa0809716. PMID 19923577.

- ^ Gislén, A; Dacke, M; Kröger, RH; Abrahamsson, M; Nilsson, DE; Warrant, EJ (2003). "Superior Underwater Vision in a Human Population of Sea Gypsies". Current Biology. 13 (10): 833–836. Bibcode:2003CBio...13..833G. doi:10.1016/S0960-9822(03)00290-2. PMID 12747831. S2CID 18731746.

- ^ Nowogrodzki, Anna (May 17, 2016). "Scientists track last 2,000 years of British evolution". Nature. Retrieved May 29, 2020.

- ^ Subbaraman, Nidhi (November 28, 2012). "Past 5,000 years prolific for changes to human genome". Nature. Retrieved May 25, 2020.

- ^ Keim, Brandon (November 29, 2012). "Human Evolution Enters an Exciting New Phase". Wired. Retrieved May 25, 2020.

- ^ Duke University (January 28, 2021). "Malaria threw human evolution into overdrive on this African archipelago". Science Daily. Retrieved April 2, 2021.

- ^ Hamid, Iman; Korunes, Katharine L.; Beleza, Sandra; Goldberg, Amy (January 4, 2021). "Rapid adaptation to malaria facilitated by admixture in the human population of Cabo Verde". eLife. 10 e63177. doi:10.7554/eLife.63177. PMC 7815310. PMID 33393457.

- ^ a b Bootle, Olly (March 1, 2011). "Are humans still evolving by Darwin's natural selection?". Science and Environment. BBC News. Retrieved May 18, 2020.

- ^ Kong, A.; et al. (2012). "Rate of de novo mutations and the importance of father's age to disease risk". Nature. 488 (7412): 471–475. Bibcode:2012Natur.488..471K. doi:10.1038/nature11396. PMC 3548427. PMID 22914163.

- ^ Milot, Emmanuel; Mayer, Francine M.; Nussey, Daniel H.; Boisvert, Mireille; Pelletier, Fanie; Réale, Denis (October 11, 2011). "Evidence for evolution in response to natural selection in a contemporary human population". Proceedings of the National Academy of Sciences of the United States of America. 108 (41): 17040–17045. Bibcode:2011PNAS..10817040M. doi:10.1073/pnas.1104210108. PMC 3193187. PMID 21969551.

- ^ a b Chung, Emily (December 19, 2017). "Natural selection in humans is happening more than you think". Technology & Science. CBC News. Retrieved May 23, 2020.

- ^ a b "Natural selection hidden in modern medicine?". Understanding Evolution. University of California, Berkeley. March 2017. Retrieved June 23, 2020.

- ^ a b c Briggs, Helen (2016). "Caesarean births 'affecting human evolution'". Science and Technology. BBC News. Retrieved June 23, 2020.

- ^ Walker, Peter (December 6, 2016). "Success of C-sections altering course of human evolution, says new childbirth research". Science. The Independent. Retrieved June 23, 2020.

- ^ a b Railton, David; Newman, Tim (May 31, 2018). "Are humans still evolving?". Medical News Today. Retrieved May 23, 2020.

- ^ Martin, Bruno (September 6, 2017). "Massive genetic study shows how humans are evolving". Nature. Retrieved May 29, 2020.

- ^ Lomas, Kenny. "Are humans still evolving?". Science Focus. BBC. Retrieved May 18, 2020.

- ^ Flinders University (October 7, 2020). "Forearm artery reveals humans evolving from changes in natural selection". Phys.org. Retrieved March 26, 2021.

- ^ Harvard University (January 11, 2020). "Ongoing human evolution could explain recent rise in certain disorders". Science Daily. Retrieved May 24, 2020.

External links

- Pinker, Steven (2008). "What Have You Changed Your Mind About? Why?". Edge. Retrieved August 16, 2022.

- Randolph M. Nesse, Carl T. Bergstrom, Peter T. Ellison, Jeffrey S. Flier, Peter Gluckman, Diddahally R. Govindaraju, Dietrich Niethammer, Gilbert S. Omenn, Robert L. Perlman, Mark D. Schwartz, Mark G. Thomas, Stephen C. Stearns, David Valle. Making evolutionary biology a basic science for medicine. Proceedings of the National Academy of Sciences. Jan 2010, 107 (suppl 1) 1800–1807.

- Scott Solomon. The Future of Human Evolution | What Darwin Didn't Know. The Great Courses Plus. February 24, 2019. (Video lecture, 32:19.)

- Laurence Hurst. Is Human Evolution Speeding Up or Slowing Down? TED-Ed. September 2020. (Video lecture, 5:25)

- How Humans are Shaping Our Own Evolution, National Geographic, D. T. Max, 2017

Further reading

- Hunt, Earl B. (2011). Human Intelligence. Cambridge University Press. pp. 203–256, 407–447. ISBN 978-0-521-70781-7.

- Nesse, Randolph M. (2019). Good Reasons for Bad Feelings: Insights from the Frontier of Evolutionary Psychiatry. New York: Dutton. pp. 161–182, 234–261. ISBN 978-1-101-98566-3.

- Pinker, Steven (2011). The Better Angels of Our Nature: Why Violence Has Declined. New York: Penguin Group. pp. 611–622. ISBN 978-0-14-312201-2.

- Pinker, Steven (2016) [2005]. "36. The False Allure of Group Selection". In Buss, David (ed.). The Handbook of Evolutionary Psychology, Volume 2: Integrations (2nd ed.). Hoboken, NJ: Wiley. pp. 867–880. ISBN 978-1-118-75580-8.

- Neves, Walter; Senger, Maria Helena; Rocha, Gabriel; Valota, Leticia; Hubbe, Mark (2024). "The latest steps of human evolution: What the hard evidence has to say about it?". Quaternary Environments and Humans. 2 (2) 100005. doi:10.1016/j.qeh.2024.100005.