Rationality

Rationality is the quality of being guided by or based on reason. In this regard, a person acts rationally if they have a good reason for what they do, or a belief is rational if it is based on strong evidence. This quality can apply to an ability, as in a rational animal, to a psychological process, like reasoning, to mental states, such as beliefs and intentions, or to persons who possess these other forms of rationality. A thing that lacks rationality is either arational, if it is outside the domain of rational evaluation, or irrational, if it belongs to this domain but does not fulfill its standards.

There are many discussions about the essential features shared by all forms, or accounts, of rationality. According to reason-responsiveness accounts, to be rational is to be responsive to reasons. For example, dark clouds are a reason for taking an umbrella, which is why it is rational for an agent to do so in response. Important rivals to this approach are coherence-based accounts, which define rationality as internal coherence among the agent's mental states. Many rules of coherence have been suggested in this regard, for example, that one should not hold contradictory beliefs or that one should intend to do something if one believes that one should do it.

Goal-based accounts characterize rationality in relation to goals, such as acquiring truth in the case of theoretical rationality. Internalists believe that rationality depends only on the person's mind. Externalists contend that external factors may also be relevant. Debates about the normativity of rationality concern the question of whether one should always be rational. A further discussion is whether rationality requires that all beliefs be reviewed from scratch rather than trusting pre-existing beliefs.

Various types of rationality are discussed in the academic literature. The most influential distinction is between theoretical and practical rationality. Theoretical rationality concerns the rationality of beliefs. Rational beliefs are based on evidence that supports them. Practical rationality pertains primarily to actions. This includes certain mental states and events preceding actions, like intentions and decisions. In some cases, the two can conflict, as when practical rationality requires that one adopts an irrational belief. Another distinction is between ideal rationality, which demands that rational agents obey all the laws and implications of logic, and bounded rationality, which takes into account that this is not always possible since the computational power of the human mind is too limited. Most academic discussions focus on the rationality of individuals. This contrasts with social or collective rationality, which pertains to collectives and their group beliefs and decisions.

Rationality is important for solving all kinds of problems in order to efficiently reach one's goal. It is relevant to and discussed in many disciplines. In ethics, one question is whether one can be rational without being moral at the same time. Psychology is interested in how psychological processes implement rationality. This also includes the study of failures to do so, as in the case of cognitive biases. Cognitive and behavioral sciences usually assume that people are rational enough to predict how they think and act. Logic studies the laws of correct arguments. These laws are highly relevant to the rationality of beliefs. A very influential conception of practical rationality is given in decision theory, which states that a decision is rational if the chosen option has the highest expected utility. Other relevant fields include game theory, Bayesianism, economics, and artificial intelligence.

Definition and semantic field

In its most common sense, rationality is the quality of being guided by reasons or being reasonable.[1][2][3] For example, a person who acts rationally has good reasons for what they do. This usually implies that they reflected on the possible consequences of their action and the goal it is supposed to realize. In the case of beliefs, it is rational to believe something if the agent has good evidence for it and it is coherent with the agent's other beliefs.[4][5] While actions and beliefs are the most paradigmatic forms of rationality, the term is used both in ordinary language and in many academic disciplines to describe a wide variety of things, such as persons, desires, intentions, decisions, policies, and institutions.[6][7] Because of this variety in different contexts, it has proven difficult to give a unified definition covering all these fields and usages. In this regard, different fields often focus their investigation on one specific conception, type, or aspect of rationality without trying to cover it in its most general sense.[8]

These different forms of rationality are sometimes divided into abilities, processes, mental states, and persons.[6][2][1][8][9] For example, when it is claimed that humans are rational animals, this usually refers to the ability to think and act in reasonable ways. It does not imply that all humans are rational all the time: this ability is exercised in some cases but not in others.[6][8][9] On the other hand, the term can also refer to the process of reasoning that results from exercising this ability. Often many additional activities of the higher cognitive faculties are included as well, such as acquiring concepts, judging, deliberating, planning, and deciding as well as the formation of desires and intentions. These processes usually effect some kind of change in the thinker's mental states. In this regard, one can also talk of the rationality of mental states, like beliefs and intentions.[6] A person who possesses these forms of rationality to a sufficiently high degree may themselves be called rational.[1] In some cases, non-mental results of rational processes may also qualify as rational. For example, the arrangement of products in a supermarket can be rational if it is based on a rational plan.[6][2]

The term "rational" has two opposites: irrational and arational. Arational things are outside the domain of rational evaluation, like digestive processes or the weather. Things within the domain of rationality are either rational or irrational depending on whether they fulfill the standards of rationality.[10][7] For example, beliefs, actions, or general policies are rational if there is a good reason for them and irrational otherwise. It is not clear in all cases what belongs to the domain of rational assessment. For example, there are disagreements about whether desires and emotions can be evaluated as rational and irrational rather than arational.[6] The term "irrational" is sometimes used in a wide sense to include cases of arationality.[11]

The meaning of the terms "rational" and "irrational" in academic discourse often differs from how they are used in everyday language. Examples of behaviors considered irrational in ordinary discourse are giving in to temptations, going out late even though one has to get up early in the morning, smoking despite being aware of the health risks, or believing in astrology.[12][13] In the academic discourse, on the other hand, rationality is usually identified with being guided by reasons or following norms of internal coherence. Some of the earlier examples may qualify as rational in the academic sense depending on the circumstances. Examples of irrationality in this sense include cognitive biases and violating the laws of probability theory when assessing the likelihood of future events.[12] This article focuses mainly on irrationality in the academic sense.

The terms "rationality", "reason", and "reasoning" are frequently used as synonyms. But in technical contexts, their meanings are often distinguished.[7][12][1] Reason is usually understood as the faculty responsible for the process of reasoning.[7][14] This process aims at improving mental states. Reasoning tries to ensure that the norms of rationality obtain. It differs from rationality nonetheless since other psychological processes besides reasoning may have the same effect.[7] Rationality derives etymologically from the Latin term rationalitas.[6]

Disputes about the concept of rationality

There are many disputes about the essential characteristics of rationality. It is often understood in relational terms: something, like a belief or an intention, is rational because of how it is related to something else.[6][1] But there are disagreements as to what it has to be related to and in what way. For reason-based accounts, the relation to a reason that justifies or explains the rational state is central. For coherence-based accounts, the relation of coherence between mental states matters. There is a lively discussion in the contemporary literature on whether reason-based accounts or coherence-based accounts are superior.[15][5] Some theorists also try to understand rationality in relation to the goals it tries to realize.[1][16]

Other disputes in this field concern whether rationality depends only on the agent's mind or also on external factors, whether rationality requires a review of all one's beliefs from scratch, and whether we should always be rational.[6][1][12]

Based on reason-responsiveness

A common idea of many theories of rationality is that it can be defined in terms of reasons. In this view, to be rational means to respond correctly to reasons.[2][1][15] For example, the fact that a food is healthy is a reason to eat it. So this reason makes it rational for the agent to eat the food.[15] An important aspect of this interpretation is that it is not sufficient to merely act accidentally in accordance with reasons. Instead, responding to reasons implies that one acts intentionally because of these reasons.[2]

Some theorists understand reasons as external facts. This view has been criticized based on the claim that, in order to respond to reasons, people have to be aware of them, i.e. they have some form of epistemic access.[15][5] But lacking this access is not automatically irrational. In one example by John Broome, the agent eats a fish contaminated with salmonella, which is a strong reason against eating the fish. But since the agent could not have known this fact, eating the fish is rational for them.[17][18] Because of such problems, many theorists have opted for an internalist version of this account. This means that the agent does not need to respond to reasons in general, but only to reasons they have or possess.[2][15][5][19] The success of such approaches depends a lot on what it means to have a reason and there are various disagreements on this issue.[7][15] A common approach is to hold that this access is given through the possession of evidence in the form of cognitive mental states, like perceptions and knowledge. A similar version states that "rationality consists in responding correctly to beliefs about reasons". So it is rational to bring an umbrella if the agent has strong evidence that it is going to rain. But without this evidence, it would be rational to leave the umbrella at home, even if, unbeknownst to the agent, it is going to rain.[2][19] These versions avoid the previous objection since rationality no longer requires the agent to respond to external factors of which they could not have been aware.[2]

A problem faced by all forms of reason-responsiveness theories is that there are usually many reasons relevant and some of them may conflict with each other. So while salmonella contamination is a reason against eating the fish, its good taste and the desire not to offend the host are reasons in favor of eating it. This problem is usually approached by weighing all the different reasons. This way, one does not respond directly to each reason individually but instead to their weighted sum. Cases of conflict are thus solved since one side usually outweighs the other. So despite the reasons cited in favor of eating the fish, the balance of reasons stands against it, since avoiding a salmonella infection is a much weightier reason than the other reasons cited.[17][18] This can be expressed by stating that rational agents pick the option favored by the balance of reasons.[7][20]
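The weighing of reasons described above can be illustrated with a small sketch. The numeric weights are purely hypothetical: the text only says that avoiding a salmonella infection is much weightier than the other reasons, and the sign convention (positive favors eating, negative counts against) is an assumption made for the example.

```python
# Hypothetical weights (not from the source): positive values favor
# eating the fish, negative values count against it.
reasons = {
    "good taste": 1.0,
    "not offending the host": 2.0,
    "salmonella contamination": -10.0,
}

def balance_of_reasons(weights):
    """Return the signed sum of all reason weights."""
    return sum(weights.values())

def favored_option(weights):
    """Pick the option favored by the balance of reasons."""
    return "eat" if balance_of_reasons(weights) > 0 else "do not eat"

balance = balance_of_reasons(reasons)
choice = favored_option(reasons)
print(balance, choice)
```

With these assumed weights, the single weighty reason against eating outweighs the two lighter reasons in favor, so the agent responds to the overall balance and not to each reason individually.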

However, other objections to the reason-responsiveness account are not so easily solved. They often focus on cases where reasons require the agent to be irrational, leading to a rational dilemma. For example, if terrorists threaten to blow up a city unless the agent forms an irrational belief, this is a very weighty reason to do all in one's power to violate the norms of rationality.[2][21]

Based on rules of coherence

An influential rival to the reason-responsiveness account understands rationality as internal coherence.[15][5] On this view, a person is rational to the extent that their mental states and actions are coherent with each other.[15][5] Diverse versions of this approach exist that differ in how they understand coherence and what rules of coherence they propose.[7][20][2] A general distinction in this regard is between negative and positive coherence.[12][22] Negative coherence is an uncontroversial aspect of most such theories: it requires the absence of contradictions and inconsistencies. This means that the agent's mental states do not clash with each other. In some cases, inconsistencies are rather obvious, as when a person believes that it will rain tomorrow and that it will not rain tomorrow. In complex cases, inconsistencies may be difficult to detect, for example, when a person believes in the axioms of Euclidean geometry and is nonetheless convinced that it is possible to square the circle. Positive coherence refers to the support that different mental states provide for each other. For example, there is positive coherence between the belief that there are eight planets in the Solar System and the belief that there are fewer than ten planets in the Solar System: the former belief implies the latter belief. Other types of support through positive coherence include explanatory and causal connections.[12][22]

Coherence-based accounts are also referred to as rule-based accounts since the different aspects of coherence are often expressed in precise rules. In this regard, to be rational means to follow the rules of rationality in thought and action. According to the enkratic rule, for example, rational agents are required to intend what they believe they ought to do. This requires coherence between beliefs and intentions. The norm of persistence states that agents should retain their intentions over time. This way, earlier mental states cohere with later ones.[15][12][5] It is also possible to distinguish different types of rationality, such as theoretical or practical rationality, based on the different sets of rules they require.[7][20]

One problem with such coherence-based accounts of rationality is that the norms can enter into conflict with each other, so-called rational dilemmas. For example, if the agent has a pre-existing intention that turns out to conflict with their beliefs, then the enkratic norm requires them to change it, which is disallowed by the norm of persistence. This suggests that, in cases of rational dilemmas, it is impossible to be rational, no matter which norm is privileged.[15][23][24] Some defenders of coherence theories of rationality have argued that, when formulated correctly, the norms of rationality cannot enter into conflict with each other. That means that rational dilemmas are impossible. This is sometimes tied to additional non-trivial assumptions, such as that ethical dilemmas also do not exist. A different response is to bite the bullet and allow that rational dilemmas exist. This has the consequence that, in such cases, rationality is not possible for the agent and theories of rationality cannot offer guidance to them.[15][23][24] These problems are avoided by reason-responsiveness accounts of rationality since they "allow for rationality despite conflicting reasons but [coherence-based accounts] do not allow for rationality despite conflicting requirements". Some theorists suggest a weaker criterion of coherence to avoid cases of necessary irrationality: rationality requires not to obey all norms of coherence but to obey as many norms as possible. So in rational dilemmas, agents can still be rational if they violate the minimal number of rational requirements.[15]

Another criticism rests on the claim that coherence-based accounts are either redundant or false. On this view, either the rules recommend the same option as the balance of reasons or a different option. If they recommend the same option, they are redundant. If they recommend a different option, they are false since, according to its critics, there is no special value in sticking to rules against the balance of reasons.[7][20]

Based on goals

A different approach characterizes rationality in relation to the goals it aims to achieve.[1][16] In this regard, theoretical rationality aims at epistemic goals, like acquiring truth and avoiding falsehood. Practical rationality, on the other hand, aims at non-epistemic goals, like moral, prudential, political, economic, or aesthetic goals. This is usually understood in the sense that rationality follows these goals but does not set them. So rationality may be understood as a "minister without portfolio" since it serves goals external to itself.[1] This issue has been the source of an important historical discussion between David Hume and Immanuel Kant. The slogan of Hume's position is that "reason is the slave of the passions". This is often understood as the claim that rationality concerns only how to reach a goal but not whether the goal should be pursued at all. So people with perverse or weird goals may still be perfectly rational. This position is opposed by Kant, who argues that rationality requires having the right goals and motives.[7][25][26][27][1]

According to William Frankena there are four conceptions of rationality based on the goals it tries to achieve. They correspond to egoism, utilitarianism, perfectionism, and intuitionism.[1][28][29] According to the egoist perspective, rationality implies looking out for one's own happiness. This contrasts with the utilitarian point of view, which states that rationality entails trying to contribute to everyone's well-being or to the greatest general good. For perfectionism, a certain ideal of perfection, either moral or non-moral, is the goal of rationality. According to the intuitionist perspective, something is rational "if and only if [it] conforms to self-evident truths, intuited by reason".[1][28] These different perspectives diverge a lot concerning the behavior they prescribe. One problem for all of them is that they ignore the role of the evidence or information possessed by the agent. In this regard, it matters for rationality not just whether the agent acts efficiently towards a certain goal but also what information they have and how their actions appear reasonable from this perspective. Richard Brandt responds to this idea by proposing a conception of rationality based on relevant information: "Rationality is a matter of what would survive scrutiny by all relevant information."[1] This implies that the subject repeatedly reflects on all the relevant facts, including formal facts like the laws of logic.[1]

Internalism and externalism

An important contemporary discussion in the field of rationality is between internalists and externalists.[1][30][31] Both sides agree that rationality demands and depends in some sense on reasons. They disagree on what reasons are relevant or how to conceive those reasons. Internalists understand reasons as mental states, for example, as perceptions, beliefs, or desires. In this view, an action may be rational because it is in tune with the agent's beliefs and realizes their desires. Externalists, on the other hand, see reasons as external factors about what is good or right. They state that whether an action is rational also depends on its actual consequences.[1][30][31] The difference between the two positions is that internalists affirm and externalists reject the claim that rationality supervenes on the mind. This claim means that it only depends on the person's mind whether they are rational and not on external factors. So for internalism, two persons with the same mental states would both have the same degree of rationality independent of how different their external situation is. Because of this limitation, rationality can diverge from actuality. So if the agent has a lot of misleading evidence, it may be rational for them to turn left even though the actually correct path goes right.[2][1]

Bernard Williams has criticized externalist conceptions of rationality based on the claim that rationality should help explain what motivates the agent to act. This is easy for internalism but difficult for externalism since external reasons can be independent of the agent's motivation.[1][32][33] Externalists have responded to this objection by distinguishing between motivational and normative reasons.[1] Motivational reasons explain why someone acts the way they do while normative reasons explain why someone ought to act in a certain way. Ideally, the two overlap, but they can come apart. For example, liking chocolate cake is a motivational reason for eating it while having high blood pressure is a normative reason for not eating it.[34][35] The problem of rationality is primarily concerned with normative reasons. This is especially true for various contemporary philosophers who hold that rationality can be reduced to normative reasons.[2][17][18] The distinction between motivational and normative reasons is usually accepted, but many theorists have raised doubts that rationality can be identified with normativity. On this view, rationality may sometimes recommend suboptimal actions, for example, because the agent lacks important information or has false information. In this regard, discussions between internalism and externalism overlap with discussions of the normativity of rationality.[1]

Relativity

An important implication of internalist conceptions is that rationality is relative to the person's perspective or mental states. Whether a belief or an action is rational usually depends on which mental states the person has. So carrying an umbrella for the walk to the supermarket is rational for a person believing that it will rain but irrational for another person who lacks this belief.[6][36][37] According to Robert Audi, this can be explained in terms of experience: what is rational depends on the agent's experience. Since different people have different experiences, there are differences in what is rational for them.[36]

Normativity

Rationality is normative in the sense that it sets up certain rules or standards of correctness: to be rational is to comply with certain requirements.[2][15][16] For example, rationality requires that the agent does not have contradictory beliefs. Many discussions on this issue concern the question of what exactly these standards are. Some theorists characterize the normativity of rationality in the deontological terms of obligations and permissions. Others understand them from an evaluative perspective as good or valuable. A further approach is to talk of rationality based on what is praise- and blameworthy.[1] It is important to distinguish the norms of rationality from other types of norms. For example, some forms of fashion prescribe that men do not wear bell-bottom trousers. Understood in the strongest sense, a norm prescribes what an agent ought to do or what they have most reason to do. The norms of fashion are not norms in this strong sense: that it is unfashionable does not mean that men ought not to wear bell-bottom trousers.[2]

Most discussions of the normativity of rationality are interested in the strong sense, i.e. whether agents ought always to be rational.[2][18][17][38] This is sometimes termed a substantive account of rationality in contrast to structural accounts.[2][15] One important argument in favor of the normativity of rationality is based on considerations of praise- and blameworthiness. It states that we usually hold each other responsible for being rational and criticize each other when we fail to do so. This practice indicates that irrationality is some form of fault on the side of the subject that should not be the case.[39][38] A strong counterexample to this position is due to John Broome, who considers the case of a fish an agent wants to eat. It contains salmonella, which is a decisive reason why the agent ought not to eat it. But the agent is unaware of this fact, which is why it is rational for them to eat the fish.[17][18] So this would be a case where normativity and rationality come apart. This example can be generalized in the sense that rationality only depends on the reasons accessible to the agent or how things appear to them. What one ought to do, on the other hand, is determined by objectively existing reasons.[40][38] In the ideal case, rationality and normativity may coincide but they come apart either if the agent lacks access to a reason or if they have a mistaken belief about the presence of a reason. These considerations are summed up in the statement that rationality supervenes only on the agent's mind but normativity does not.[41][42]

But there are also thought experiments in favor of the normativity of rationality. One, due to Frank Jackson, involves a doctor who receives a patient with a mild condition and has to prescribe one out of three drugs: drug A resulting in a partial cure, drug B resulting in a complete cure, or drug C resulting in the patient's death.[43] The doctor's problem is that they cannot tell which of the drugs B and C results in a complete cure and which one in the patient's death. The objectively best case would be for the patient to get drug B, but it would be highly irresponsible for the doctor to prescribe it given the uncertainty about its effects. So the doctor ought to prescribe the less effective drug A, which is also the rational choice. This thought experiment indicates that rationality and normativity coincide since what is rational and what one ought to do depends on the agent's mind after all.[40][38]
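One way to make the doctor's reasoning concrete is an expected-utility calculation. The sketch below is only an illustration under stated assumptions: the utility numbers are hypothetical, and the 50/50 credence over drugs B and C is one reading of the claim that the doctor cannot tell them apart. Neither comes from the source.

```python
# Hypothetical utilities for the three outcomes (not from the source).
UTILITY = {"partial cure": 0.8, "complete cure": 1.0, "death": 0.0}

# Assumed credences: the doctor treats B and C as equally likely to be
# the complete cure or the lethal drug.
drugs = {
    "A": {"partial cure": 1.0},
    "B": {"complete cure": 0.5, "death": 0.5},
    "C": {"complete cure": 0.5, "death": 0.5},
}

def expected_utility(outcome_probs):
    """Probability-weighted sum of the outcome utilities."""
    return sum(p * UTILITY[outcome] for outcome, p in outcome_probs.items())

expected = {name: expected_utility(probs) for name, probs in drugs.items()}
rational_choice = max(expected, key=expected.get)
print(expected, rational_choice)
```

Under these assumptions drug A comes out ahead even though, objectively, drug B would produce the best result. The calculation depends only on the doctor's credences, which mirrors the point that the rational choice is fixed by the agent's perspective.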

Some theorists have responded to these thought experiments by distinguishing between normativity and responsibility.[38] On this view, critique of irrational behavior, like the doctor prescribing drug B, involves a negative evaluation of the agent in terms of responsibility but remains silent on normative issues. On a competence-based account, which defines rationality in terms of the competence of responding to reasons, such behavior can be understood as a failure to execute one's competence. But sometimes we are lucky and we succeed in the normative dimension despite failing to perform competently, i.e. rationally, due to being irresponsible.[38][44] The opposite can also be the case: bad luck may result in failure despite a responsible, competent performance. This explains how rationality and normativity can come apart despite our practice of criticizing irrationality.[38][45]

Normative and descriptive theories

The concept of normativity can also be used to distinguish different theories of rationality. Normative theories explore the normative nature of rationality. They are concerned with rules and ideals that govern how the mind should work. Descriptive theories, on the other hand, investigate how the mind actually works. This includes issues like under which circumstances the ideal rules are followed as well as studying the underlying psychological processes responsible for rational thought. Descriptive theories are often investigated in empirical psychology while philosophy tends to focus more on normative issues. This division also reflects how differently these two types are investigated.[6][46][16][47]

Descriptive and normative theorists usually employ different methodologies in their research. Descriptive issues are studied by empirical research. This can take the form of studies that present their participants with a cognitive problem. It is then observed how the participants solve the problem, possibly together with explanations of why they arrived at a specific solution. Normative issues, on the other hand, are usually investigated in similar ways to how the formal sciences conduct their inquiry.[6][46] In the field of theoretical rationality, for example, it is accepted that deductive reasoning in the form of modus ponens leads to rational beliefs. This claim can be investigated using methods like rational intuition or careful deliberation toward a reflective equilibrium. These forms of investigation can arrive at conclusions about what forms of thought are rational and irrational without depending on empirical evidence.[6][48][49]

An important question in this field concerns the relation between descriptive and normative approaches to rationality.[6][16][47] One difficulty in this regard is that there is in many cases a huge gap between what the norms of ideal rationality prescribe and how people actually reason. Examples of normative systems of rationality are classical logic, probability theory, and decision theory. Actual reasoners often diverge from these standards because of cognitive biases, heuristics, or other mental limitations.[6]

Traditionally, it was often assumed that actual human reasoning should follow the rules described in normative theories. In this view, any discrepancy is a form of irrationality that should be avoided. However, this usually ignores the limitations of the human mind. Given these limitations, various discrepancies may be necessary (and in this sense rational) to get the most useful results.[6][12][1] For example, the ideal rational norms of decision theory demand that the agent should always choose the option with the highest expected value. However, calculating the expected value of each option may take a very long time in complex situations and may not be worth the trouble. This is reflected in the fact that actual reasoners often settle for an option that is good enough without making certain that it is really the best option available.[1][50] A further difficulty in this regard is Hume's law, which states that one cannot deduce what ought to be based on what is.[51][52] So just because a certain heuristic or cognitive bias is present in a specific case, it should not be inferred that it should be present. One approach to these problems is to hold that descriptive and normative theories talk about different types of rationality. This way, there is no contradiction between the two and both can be correct in their own field. Similar problems are discussed in so-called naturalized epistemology.[6][53]
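The contrast between always choosing the best option and settling for one that is good enough can be sketched as follows. This is a minimal illustration, not a model taken from the source: the option names, their values, and the threshold are all hypothetical, and the `satisfice` function is one simple way to render the "good enough" strategy.

```python
def maximize(options, evaluate):
    """Evaluate every option and return the one with the highest value."""
    return max(options, key=evaluate)

def satisfice(options, evaluate, threshold):
    """Return the first option whose value meets the threshold.

    Falls back to the best option seen if none is good enough.
    """
    best, best_value = None, float("-inf")
    for option in options:
        value = evaluate(option)
        if value >= threshold:
            return option
        if value > best_value:
            best, best_value = option, value
    return best

# Hypothetical options and values (illustrative only).
values = {"a": 3, "b": 7, "c": 9, "d": 5}
calls = []

def evaluate(option):
    """Look up an option's value and record that it was evaluated."""
    calls.append(option)
    return values[option]

good_enough = satisfice(list(values), evaluate, threshold=6)
evaluations_used = len(calls)
best = maximize(list(values), values.get)
print(good_enough, evaluations_used, best)
```

Here the satisficing agent stops after evaluating two of the four options, while the maximizer must evaluate all of them to be certain it has found the best one. When each evaluation is costly, the cheaper strategy may be the more useful one.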

Conservatism and foundationalism

Rationality is usually understood as conservative in the sense that rational agents do not start from zero but already possess many beliefs and intentions. Reasoning takes place against the background of these pre-existing mental states and tries to improve them. This way, the original beliefs and intentions are privileged: one keeps them unless a reason to doubt them is encountered. Some forms of epistemic foundationalism reject this approach. According to them, the whole system of beliefs is to be justified by self-evident beliefs. Examples of such self-evident beliefs may include immediate experiences as well as simple logical and mathematical axioms.[12][54][55]

An important difference between conservatism and foundationalism concerns their differing conceptions of the burden of proof. According to conservatism, the burden of proof is always in favor of already established belief: in the absence of new evidence, it is rational to keep the mental states one already has. According to foundationalism, the burden of proof is always in favor of suspending mental states. For example, the agent reflects on their pre-existing belief that the Taj Mahal is in Agra but is unable to access any reason for or against this belief. In this case, conservatives think it is rational to keep this belief while foundationalists reject it as irrational due to the lack of reasons. In this regard, conservatism is much closer to the ordinary conception of rationality. One problem for foundationalism is that very few beliefs, if any, would remain if this approach were carried out meticulously. Another is that enormous mental resources would be required to constantly keep track of all the justificatory relations connecting non-fundamental beliefs to fundamental ones.[12][54][55]

Types

Rationality is discussed in a great variety of fields, often in very different terms. While some theorists try to provide a unifying conception expressing the features shared by all forms of rationality, the more common approach is to articulate the different aspects of the individual forms of rationality. The most common distinction is between theoretical and practical rationality. Other classifications include categories for ideal and bounded rationality as well as for individual and social rationality.[6][56]

Theoretical and practical

The most influential distinction contrasts theoretical or epistemic rationality with practical rationality. Its theoretical side concerns the rationality of beliefs: whether it is rational to hold a given belief and how certain one should be about it. Practical rationality, on the other hand, is about the rationality of actions, intentions, and decisions.[7][12][56][27] This corresponds to the distinction between theoretical reasoning and practical reasoning: theoretical reasoning tries to assess whether the agent should change their beliefs while practical reasoning tries to assess whether the agent should change their plans and intentions.[12][56][27]

Theoretical

Theoretical rationality concerns the rationality of cognitive mental states, in particular, of beliefs.[7][4] It is common to distinguish between two factors. The first factor is about the fact that good reasons are necessary for a belief to be rational. This is usually understood in terms of evidence provided by the so-called sources of knowledge, i.e. faculties like perception, introspection, and memory. In this regard, it is often argued that to be rational, the believer has to respond to the impressions or reasons presented by these sources. For example, the visual impression of the sunlight on a tree makes it rational to believe that the sun is shining.[27][7][4] In this regard, it may also be relevant whether the formed belief is involuntary and implicit.

The second factor pertains to the norms and procedures of rationality that govern how agents should form beliefs based on this evidence. These norms include the rules of inference discussed in regular logic as well as other norms of coherence between mental states.[7][4] In the case of rules of inference, the premises of a valid argument offer support to the conclusion and therefore make the belief in the conclusion rational.[27] The support offered by the premises can either be deductive or non-deductive.[57][58] In both cases, believing in the premises of an argument makes it rational to also believe in its conclusion. The difference between the two is given by how the premises support the conclusion. For deductive reasoning, the premises offer the strongest possible support: it is impossible for the conclusion to be false if the premises are true. The premises of non-deductive arguments also offer support for their conclusion. But this support is not absolute: the truth of the premises does not guarantee the truth of the conclusion. Instead, the premises make it more likely that the conclusion is true. In this case, it is usually demanded that the non-deductive support is sufficiently strong if the belief in the conclusion is to be rational.[56][27][57]
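The deductive standard described above (the conclusion cannot be false if the premises are true) can be illustrated for propositional arguments with a brute-force truth-table check. The following is a minimal sketch, not drawn from the cited sources; the function name `is_valid` and the two example arguments are chosen here purely for illustration.

```python
from itertools import product

def is_valid(premises, conclusion, n_vars):
    """An argument is deductively valid if no assignment of truth values
    makes every premise true while making the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(p(*values) for p in premises) and not conclusion(*values):
            return False  # counterexample found
    return True

def implies(a, b):
    # Material conditional: "if a then b"
    return (not a) or b

# Modus ponens: from "if p then q" and "p", infer "q".
modus_ponens = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: p],
    lambda p, q: q,
    n_vars=2,
)

# Affirming the consequent: from "if p then q" and "q", infer "p".
affirming_consequent = is_valid(
    [lambda p, q: implies(p, q), lambda p, q: q],
    lambda p, q: p,
    n_vars=2,
)

print(modus_ponens)          # True
print(affirming_consequent)  # False
```

A check of this kind only covers deductive support. Non-deductive support comes in degrees and cannot be settled by enumerating truth values.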

An important form of theoretical irrationality is motivationally biased belief, sometimes referred to as wishful thinking. In this case, beliefs are formed based on one's desires or what is pleasing to imagine without proper evidential support.[7][59] Faulty reasoning in the form of formal and informal fallacies is another cause of theoretical irrationality.[60]

Practical

All forms of practical rationality are concerned with how we act. It pertains both to actions directly as well as to mental states and events preceding actions, like intentions and decisions. There are various aspects of practical rationality, such as how to pick a goal to follow and how to choose the means for reaching this goal. Other issues include the coherence between different intentions as well as between beliefs and intentions.[61][62][1]

Some theorists define the rationality of actions in terms of beliefs and desires. In this view, an action to bring about a certain goal is rational if the agent has the desire to bring about this goal and the belief that their action will realize it. A stronger version of this view requires that the responsible beliefs and desires are rational themselves.[6] A very influential conception of the rationality of decisions comes from decision theory. In decisions, the agent is presented with a set of possible courses of action and has to choose one among them. Decision theory holds that the agent should choose the alternative that has the highest expected value.[61] Practical rationality includes the field of actions but not of behavior in general. The difference between the two is that actions are intentional behavior, i.e. they are performed for a purpose and guided by it. In this regard, intentional behavior like driving a car is either rational or irrational while non-intentional behavior like sneezing is outside the domain of rationality.[6][63][64]

For various other practical phenomena, there is no clear consensus on whether they belong to this domain or not. For example, concerning the rationality of desires, two important theories are proceduralism and substantivism. According to proceduralism, there is an important distinction between instrumental and noninstrumental desires. A desire is instrumental if its fulfillment serves as a means to the fulfillment of another desire.[65][12][6] For example, Jack is sick and wants to take medicine to get healthy again. In this case, the desire to take the medicine is instrumental since it only serves as a means to Jack's noninstrumental desire to get healthy. Both proceduralism and substantivism usually agree that a person can be irrational if they lack an instrumental desire despite having the corresponding noninstrumental desire and being aware that it acts as a means. Proceduralists hold that this is the only way a desire can be irrational. Substantivists, on the other hand, allow that noninstrumental desires may also be irrational. In this regard, a substantivist could claim that it would be irrational for Jack to lack his noninstrumental desire to be healthy.[7][65][6] Similar debates focus on the rationality of emotions.[6]

Relation between the two

Theoretical and practical rationality are often discussed separately and there are many differences between them. In some cases, they even conflict with each other. However, there are also various ways in which they overlap and depend on each other.[61][6]

It is sometimes claimed that theoretical rationality aims at truth while practical rationality aims at goodness.[61] According to John Searle, the difference can be expressed in terms of "direction of fit".[6][66][67] On this view, theoretical rationality is about how the mind corresponds to the world by representing it. Practical rationality, on the other hand, is about how the world corresponds to the ideal set up by the mind and how it should be changed.[6][7][68][1] Another difference is that arbitrary choices are sometimes needed for practical rationality. For example, there may be two equally good routes available to reach a goal. On the practical level, one has to choose one of them if one wants to reach the goal. It would even be practically irrational to resist this arbitrary choice, as exemplified by Buridan's ass.[12][69] But on the theoretical level, one does not have to form a belief about which route was taken upon hearing that someone reached the goal. In this case, the arbitrary choice for one belief rather than the other would be theoretically irrational. Instead, the agent should suspend their belief either way if they lack sufficient reasons. Another difference is that practical rationality is guided by specific goals and desires, in contrast to theoretical rationality. So it is practically rational to take medicine if one has the desire to cure a sickness. But it is theoretically irrational to adopt the belief that one is healthy just because one desires this. This is a form of wishful thinking.[12]

In some cases, the demands of practical and theoretical rationality conflict with each other. For example, the practical reason of loyalty to one's child may demand the belief that they are innocent while the evidence linking them to the crime may demand a belief in their guilt on the theoretical level.[12][68]

But the two domains also overlap in certain ways. For example, the norm of rationality known as enkrasia links beliefs and intentions. It states that "rationality requires of you that you intend to F if you believe your reasons require you to F". Failing to fulfill this requirement results in cases of irrationality known as akrasia or weakness of the will.[2][1][15][7][59] Another form of overlap is that the study of the rules governing practical rationality is a theoretical matter.[7][70] And practical considerations may determine whether to pursue theoretical rationality on a certain issue as well as how much time and resources to invest in the inquiry.[68][59] It is often held that practical rationality presupposes theoretical rationality. This is based on the idea that to decide what should be done, one needs to know what is the case. But one can assess what is the case independently of knowing what should be done. So in this regard, one can study theoretical rationality as a distinct discipline independent of practical rationality but not the other way round.[6] However, this independence is rejected by some forms of doxastic voluntarism. They hold that theoretical rationality can be understood as one type of practical rationality. This is based on the controversial claim that we can decide what to believe. It can take the form of epistemic decision theory, which states that people try to fulfill epistemic aims when deciding what to believe.[6][71][72] A similar idea is defended by Jesús Mosterín. He argues that the proper object of rationality is not belief but acceptance. He understands acceptance as a voluntary and context-dependent decision to affirm a proposition.[73]

Ideal and bounded

Various theories of rationality assume some form of ideal rationality, for example, by demanding that rational agents obey all the laws and implications of logic. This can include the requirement that if the agent believes a proposition, they should also believe in everything that logically follows from this proposition. However, many theorists reject this form of logical omniscience as a requirement for rationality. They argue that, since the human mind is limited, rationality has to be defined accordingly to account for how actual finite humans possess some form of resource-limited rationality.[12][6][1]

According to the position of bounded rationality, theories of rationality should take into account cognitive limitations, such as incomplete knowledge, imperfect memory, and limited capacities of computation and representation. An important research question in this field is about how cognitive agents use heuristics rather than brute calculations to solve problems and make decisions. According to the satisficing heuristic, for example, agents usually stop their search for the best option once an option is found that meets their desired achievement level. In this regard, people often do not continue to search for the best possible option, even though this is what theories of ideal rationality commonly demand.[6][1][50] Using heuristics can be highly rational as a way to adapt to the limitations of the human mind, especially in complex cases where these limitations make brute calculations impossible or very time- and resource-intensive.[6][1]
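The contrast between satisficing and the exhaustive search demanded by ideal rationality can be sketched in a few lines. This is an illustrative toy only: the option list, the scores, and the aspiration level of 7.0 are invented for the example, and `satisfice` and `maximize` are hypothetical helper names.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")

def satisfice(options: Iterable[T], value: Callable[[T], float],
              aspiration: float) -> Optional[T]:
    """Stop at the first option whose value meets the aspiration level."""
    for option in options:
        if value(option) >= aspiration:
            return option
    return None  # no option was good enough

def maximize(options: Iterable[T], value: Callable[[T], float]) -> T:
    """Inspect every option and return the one with the highest value."""
    return max(options, key=value)

# Hypothetical options, each with an invented score.
apartments = [("A", 6.0), ("B", 7.5), ("C", 9.0), ("D", 8.0)]

def score(apartment):
    return apartment[1]

good_enough = satisfice(apartments, score, aspiration=7.0)
best = maximize(apartments, score)

print(good_enough)  # ('B', 7.5)
print(best)         # ('C', 9.0)
```

The satisficer inspects only two options before stopping, while the maximizer has to evaluate all four. When evaluating an option is costly, this difference is what can make the heuristic the more sensible procedure.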

Individual and social

Most discussions and research in the academic literature focus on individual rationality. This concerns the rationality of individual persons, for example, whether their beliefs and actions are rational. But the question of rationality can also be applied to groups as a whole on the social level. This form of social or collective rationality concerns both theoretical and practical issues like group beliefs and group decisions.[6][74][75] And just like in the individual case, it is possible to study these phenomena as well as the processes and structures that are responsible for them. On the social level, there are various forms of cooperation to reach a shared goal. In theoretical cases, a group of jurors may first discuss and then vote to determine whether the defendant is guilty. Or in the practical case, politicians may cooperate to implement new regulations to combat climate change. These forms of cooperation can be judged on their social rationality depending on how they are implemented and on the quality of the results they bear. Some theorists try to reduce social rationality to individual rationality by holding that the group processes are rational to the extent that the individuals participating in them are rational. But such a reduction is frequently rejected.[6][74]

Various studies indicate that group rationality often outperforms individual rationality. For example, groups of people working together on the Wason selection task usually perform better than individuals by themselves. This form of group superiority is sometimes termed "wisdom of crowds" and may be explained based on the claim that competent individuals have a stronger impact on the group decision than others.[6][76] However, this is not always the case and sometimes groups perform worse due to conformity or unwillingness to bring up controversial issues.[6]

Others

Many other classifications are discussed in the academic literature. One important distinction is between approaches to rationality based on the output or on the process. Process-oriented theories of rationality are common in cognitive psychology and study how cognitive systems process inputs to generate outputs. Output-oriented approaches are more common in philosophy and investigate the rationality of the resulting states.[6][2] Another distinction is between relative and categorical judgments of rationality. In the relative case, rationality is judged based on limited information or evidence while categorical judgments take all the evidence into account and are thus judgments all things considered.[6][1] For example, believing that one's investments will multiply can be rational in a relative sense because it is based on one's astrological horoscope. But this belief is irrational in a categorical sense if the belief in astrology is itself irrational.[6]

Importance

Rationality is central to solving many problems, both on the local and the global scale. This is often based on the idea that rationality is necessary to act efficiently and to reach all kinds of goals.[6][16] This includes goals from diverse fields, such as ethical goals, humanist goals, scientific goals, and even religious goals.[6] The study of rationality is very old and has occupied many of the greatest minds since ancient Greece. This interest is often motivated by discovering the potentials and limitations of our minds. Various theorists even see rationality as the essence of being human, often in an attempt to distinguish humans from other animals.[6][8][9] However, this strong affirmation has been subjected to many criticisms, for example, that humans are not rational all the time and that non-human animals also show diverse forms of intelligence.[6]

The topic of rationality is relevant to a variety of disciplines. It plays a central role in philosophy, psychology, Bayesianism, decision theory, and game theory.[7] But it is also covered in other disciplines, such as artificial intelligence, behavioral economics, microeconomics, and neuroscience. Some forms of research restrict themselves to one specific domain while others investigate the topic in an interdisciplinary manner by drawing insights from different fields.[56]

Paradoxes of rationality

The term paradox of rationality has a variety of meanings. It is often used for puzzles or unsolved problems of rationality. Some are just situations where it is not clear what the rational person should do. Others involve apparent faults within rationality itself, for example, where rationality seems to recommend a suboptimal course of action.[7] Special cases are so-called rational dilemmas, in which it is impossible to be rational since two norms of rationality conflict with each other.[23][24] Examples of paradoxes of rationality include Pascal's Wager, the Prisoner's dilemma, Buridan's ass, and the St. Petersburg paradox.[7][77][21]

History


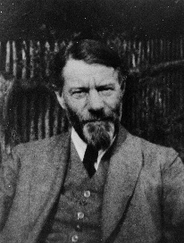

Max Weber


The German scholar Max Weber proposed an interpretation of social action that distinguished between four different idealized types of rationality.[78]

The first, which he called Zweckrational or purposive/instrumental rationality, is related to the expectations about the behavior of other human beings or objects in the environment. These expectations serve as means for a particular actor to attain ends, ends which Weber noted were "rationally pursued and calculated." The second type, Weber called Wertrational or value/belief-oriented. Here the action is undertaken for what one might call reasons intrinsic to the actor: some ethical, aesthetic, religious or other motives, independent of whether it will lead to success. The third type was affectual, determined by an actor's specific affect, feeling, or emotion. Weber himself said that this kind of rationality was on the borderline of what he considered "meaningfully oriented." The fourth was traditional or conventional, determined by ingrained habituation. Weber emphasized that it was very unusual to find only one of these orientations: combinations were the norm. His usage also makes clear that he considered the first two as more significant than the others, and it is arguable that the third and fourth are subtypes of the first two.

The advantage in Weber's interpretation of rationality is that it avoids a value-laden assessment, say, that certain kinds of beliefs are irrational. Instead, Weber suggests that ground or motive can be given—for religious or affect reasons, for example—that may meet the criterion of explanation or justification even if it is not an explanation that fits the Zweckrational orientation of means and ends. The opposite is therefore also true: some means-ends explanations will not satisfy those whose grounds for action are Wertrational.

Weber's constructions of rationality have been critiqued both from a Habermasian (1984) perspective (as devoid of social context and under-theorised in terms of social power)[79] and also from a feminist perspective (Eagleton, 2003) whereby Weber's rationality constructs are viewed as imbued with masculine values and oriented toward the maintenance of male power.[80] An alternative position on rationality (which includes both bounded rationality,[81] as well as the affective and value-based arguments of Weber) can be found in the critique of Etzioni (1988),[82] who reframes thought on decision-making to argue for a reversal of the position put forward by Weber. Etzioni illustrates how purposive/instrumental reasoning is subordinated by normative considerations (ideas on how people 'ought' to behave) and affective considerations (as a support system for the development of human relationships).

Richard Brandt

Richard Brandt proposed a "reforming definition" of rationality, arguing someone is rational if their notions survive a form of cognitive-psychotherapy.[83]

Robert Audi

Robert Audi developed a comprehensive account of rationality that covers both the theoretical and the practical side of rationality.[36][84] This account centers on the notion of a ground: a mental state is rational if it is "well-grounded" in a source of justification.[84]: 19 Irrational mental states, on the other hand, lack a sufficient ground. For example, the perceptual experience of a tree when looking outside the window can ground the rationality of the belief that there is a tree outside.

Audi is committed to a form of foundationalism: the idea that justified beliefs, or in his case, rational states in general, can be divided into two groups: the foundation and the superstructure.[84]: 13, 29–31 The mental states in the superstructure receive their justification from other rational mental states while the foundational mental states receive their justification from a more basic source.[84]: 16–18 For example, the above-mentioned belief that there is a tree outside is foundational since it is based on a basic source: perception. Knowing that trees grow in soil, we may deduce that there is soil outside. This belief is equally rational, being supported by an adequate ground, but it belongs to the superstructure since its rationality is grounded in the rationality of another belief. Desires, like beliefs, form a hierarchy: intrinsic desires are at the foundation while instrumental desires belong to the superstructure. In order to link the instrumental desire to the intrinsic desire an extra element is needed: a belief that the fulfillment of the instrumental desire is a means to the fulfillment of the intrinsic desire.[85]

Audi asserts that all the basic sources providing justification for the foundational mental states come from experience. As for beliefs, there are four types of experience that act as sources: perception, memory, introspection, and rational intuition.[86] The main basic source of the rationality of desires, on the other hand, comes in the form of hedonic experience: the experience of pleasure and pain.[87]: 20 So, for example, a desire to eat ice-cream is rational if it is based on experiences in which the agent enjoyed the taste of ice-cream, and irrational if it lacks such a support. Because of its dependence on experience, rationality can be defined as a kind of responsiveness to experience.[87]: 21

Actions, in contrast to beliefs and desires, do not have a source of justification of their own. Their rationality is grounded in the rationality of other states instead: in the rationality of beliefs and desires. Desires motivate actions. Beliefs are needed here, as in the case of instrumental desires, to bridge a gap and link two elements.[84]: 62 Audi distinguishes the focal rationality of individual mental states from the global rationality of persons. Global rationality has a derivative status: it depends on the focal rationality.[36] Or more precisely: "Global rationality is reached when a person has a sufficiently integrated system of sufficiently well-grounded propositional attitudes, emotions, and actions".[84]: 232 Rationality is relative in the sense that it depends on the experience of the person in question. Since different people undergo different experiences, what is rational to believe for one person may be irrational to believe for another person.[36] That a belief is rational does not entail that it is true.[85]

In various fields

Ethics and morality

The problem of rationality is relevant to various issues in ethics and morality.[7] Many debates center around the question of whether rationality implies morality or is possible without it. Some examples based on common sense suggest that the two can come apart. For example, some immoral psychopaths are highly intelligent in the pursuit of their schemes and may, therefore, be seen as rational. However, there are also considerations suggesting that the two are closely related to each other. For example, according to the principle of universality, "one's reasons for acting are acceptable only if it is acceptable that everyone acts on such reasons".[12] A similar formulation is given in Immanuel Kant's categorical imperative: "act only according to that maxim whereby you can, at the same time, will that it should become a universal law".[88] The principle of universality has been suggested as a basic principle both for morality and for rationality.[12] This is closely related to the question of whether agents have a duty to be rational. Another issue concerns the value of rationality. In this regard, it is often held that human lives are more important than animal lives because humans are rational.[12][8]

Psychology

Many psychological theories have been proposed to describe how reasoning happens and what underlying psychological processes are responsible. One of their goals is to explain how the different types of irrationality happen and why some types are more prevalent than others. They include mental logic theories, mental model theories, and dual process theories.[56][89][90] An important psychological area of study focuses on cognitive biases. Cognitive biases are systematic tendencies to engage in erroneous or irrational forms of thinking, judging, and acting. Examples include the confirmation bias, the self-serving bias, the hindsight bias, and the Dunning–Kruger effect.[91][92][93] Some empirical findings suggest that metacognition is an important aspect of rationality. The idea behind this claim is that reasoning is carried out more efficiently and reliably if the responsible thought processes are properly controlled and monitored.[56]

The Wason selection task is an influential test for studying rationality and reasoning abilities. In it, four cards are placed before the participants. Each has a number on one side and a letter on the opposite side. In one case, the visible sides of the four cards are A, D, 4, and 7. The participant is then asked which cards need to be turned around in order to verify the conditional claim "if there is a vowel on one side of the card, then there is an even number on the other side of the card". The correct answer is A and 7. But this answer is only given by about 10%. Many choose card 4 instead even though there is no requirement on what letters may appear on its opposite side.[6][89][94] An important insight from using these and similar tests is that the rational ability of the participants is usually significantly better for concrete and realistic cases than for abstract or implausible cases.[89][94] Various contemporary studies in this field use Bayesian probability theory to study subjective degrees of belief, for example, how the believer's certainty in the premises is carried over to the conclusion through reasoning.[6]
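The logic behind the correct answer can be made explicit: a card needs to be turned only if its hidden side could falsify the conditional. The sketch below encodes that test for the four cards named above. The helper name `must_turn` is invented here, and the vowel set is restricted to A, E, I, O, U as a simplifying assumption.

```python
VOWELS = set("AEIOU")  # assumption: only these five letters count as vowels

def must_turn(visible: str) -> bool:
    """Return True if the hidden side of the card could falsify the rule
    'if there is a vowel on one side, there is an even number on the other'."""
    if visible.isalpha():
        # A vowel might hide an odd number; a consonant is irrelevant to the rule.
        return visible.upper() in VOWELS
    # An odd number might hide a vowel; an even number can never falsify the rule.
    return int(visible) % 2 == 1

cards = ["A", "D", "4", "7"]
to_turn = [card for card in cards if must_turn(card)]

print(to_turn)  # ['A', '7']
```

Card 4 is excluded because the rule says nothing about what must be on the back of an even number, which is exactly the point that most participants miss.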

In the psychology of reasoning, psychologists and cognitive scientists have defended different positions on human rationality. One prominent view, due to Philip Johnson-Laird and Ruth M. J. Byrne among others, is that humans are rational in principle but they err in practice, that is, humans have the competence to be rational but their performance is limited by various factors.[95] However, it has been argued that many standard tests of reasoning, such as those on the conjunction fallacy, on the Wason selection task, or the base rate fallacy, suffer from methodological and conceptual problems. This has led to disputes in psychology over whether researchers should (only) use standard rules of logic, probability theory and statistics, or rational choice theory as norms of good reasoning. Opponents of this view, such as Gerd Gigerenzer, favor a conception of bounded rationality, especially for tasks under high uncertainty.[96] The concept of rationality continues to be debated by psychologists, economists and cognitive scientists.[97]

The psychologist Jean Piaget gave an influential account of how the stages in human development from childhood to adulthood can be understood in terms of the increase of rational and logical abilities.[6][98][99][100] He identifies four stages associated with rough age groups: the sensorimotor stage below the age of two, the preoperational stage until the age of seven, the concrete operational stage until the age of eleven, and the formal operational stage afterward. Rational or logical reasoning only takes place in the last stage and is related to abstract thinking, concept formation, reasoning, planning, and problem-solving.[6]

Emotions

According to A. C. Grayling, rationality "must be independent of emotions, personal feelings or any kind of instincts".[101] Certain findings in cognitive science and neuroscience show that no human has ever satisfied this criterion, except perhaps a person with no affective feelings, for example, an individual with a massively damaged amygdala or severe psychopathy. Thus, such an idealized form of rationality is best exemplified by computers, and not people. However, scholars may productively appeal to the idealization as a point of reference. In his book, The Edge of Reason: A Rational Skeptic in an Irrational World, British philosopher Julian Baggini sets out to debunk myths about reason (e.g., that it is "purely objective and requires no subjective judgment").[102]

Cognitive and behavioral sciences

Cognitive and behavioral sciences try to describe, explain, and predict how people think and act. Their models are often based on the assumption that people are rational. For example, classical economics is based on the assumption that people are rational agents that maximize expected utility. However, people often depart from the ideal standards of rationality in various ways. For example, they may only look for confirming evidence and ignore disconfirming evidence. Another factor studied in this regard is the limitation of human intellectual capacities. Many discrepancies from rationality are caused by limited time, memory, or attention. Often heuristics and rules of thumb are used to mitigate these limitations, but they may lead to new forms of irrationality.[12][1][50]

Logic

Theoretical rationality is closely related to logic, but not identical to it.[12][6] Logic is often defined as the study of correct arguments. This concerns the relation between the propositions used in the argument: whether its premises offer support to its conclusion. Theoretical rationality, on the other hand, is about what to believe or how to change one's beliefs. The laws of logic are relevant to rationality since the agent should change their beliefs if they violate these laws. But logic is not directly about what to believe. Additionally, there are also other factors and norms besides logic that determine whether it is rational to hold or change a belief.[12] The study of rationality in logic is more concerned with epistemic rationality, that is, attaining beliefs in a rational manner, than instrumental rationality.

Decision theory

An influential account of practical rationality is given by decision theory.[12][56][6] Decisions are situations where a number of possible courses of action are available to the agent, who has to choose one of them. Decision theory investigates the rules governing which action should be chosen. It assumes that each action may lead to a variety of outcomes. Each outcome is associated with a conditional probability and a utility. The expected gain of an outcome can be calculated by multiplying its conditional probability with its utility. The expected utility of an act is equivalent to the sum of all expected gains of the outcomes associated with it. From these basic ingredients, it is possible to define the rationality of decisions: a decision is rational if it selects the act with the highest expected utility.[12][6] While decision theory gives a very precise formal treatment of this issue, it leaves open the empirical problem of how to assign utilities and probabilities. So decision theory can still lead to bad empirical decisions if it is based on poor assignments.[12]
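The calculation described above can be written out directly: multiply each outcome's probability by its utility, sum the products per act, and pick the act with the largest sum. The following sketch uses an invented umbrella decision. The probabilities and utilities are arbitrary illustrative numbers, and the names `expected_utility` and `acts` are introduced here only for the example.

```python
def expected_utility(outcomes):
    """Sum of (conditional probability * utility) over an act's outcomes."""
    return sum(probability * utility for probability, utility in outcomes)

# Each act maps to its outcomes as (probability, utility) pairs.
# Invented numbers: 30% chance of rain, 70% chance of no rain.
acts = {
    "take umbrella": [(0.3, 5.0), (0.7, 8.0)],
    "leave umbrella": [(0.3, -10.0), (0.7, 10.0)],
}

utilities = {act: expected_utility(outcomes) for act, outcomes in acts.items()}

# The rational decision, on this account, is the act with the highest expected utility.
rational_choice = max(utilities, key=utilities.get)

print(rational_choice)  # take umbrella
```

With these numbers, taking the umbrella has an expected utility of about 7.1 against 4.0 for leaving it. Changing the assumed probability of rain or the assumed utilities can flip the result, which shows how the quality of the decision depends on the quality of those assignments.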

According to decision theorists, rationality is primarily a matter of internal consistency. This means that a person's mental states, like beliefs and preferences, are consistent with each other. One consequence of this position is that people with obviously false beliefs or perverse preferences may still count as rational if these mental states are consistent with their other mental states.[7] Utility is often understood in terms of self-interest or personal preferences. However, this is not a necessary aspect of decision theory, and utility can also be interpreted in terms of goodness or value in general.[7][70]

Game theory

Game theory is closely related to decision theory and the problem of rational choice.[7][56] Rational choice is based on the idea that rational agents perform a cost-benefit analysis of all available options and choose the option that is most beneficial from their point of view. In the case of game theory, several agents are involved. This further complicates the situation since whether a given option is the best choice for one agent may depend on choices made by other agents. Game theory can be used to analyze various situations, like playing chess, firms competing for business, or animals fighting over prey. Rationality is a core assumption of game theory: it is assumed that each player chooses rationally based on what is most beneficial from their point of view. This way, the agent may be able to anticipate how others choose and what their best choice is relative to the behavior of the others.[7][103][104][105] This often results in a Nash equilibrium, which constitutes a set of strategies, one for each player, where no player can improve their outcome by unilaterally changing their strategy.[7][103][104]
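The definition of a Nash equilibrium can be made concrete with a small sketch for two-player games with pure strategies. The function name and the payoff table are assumptions made for this illustration. The table uses the familiar prisoner's dilemma with made-up payoffs, where higher numbers are better.

```python
from itertools import product


def pure_nash_equilibria(payoffs):
    """Find pure-strategy Nash equilibria of a two-player game.

    payoffs maps (row_strategy, column_strategy) to a pair
    (row_player_payoff, column_player_payoff).
    """
    rows = sorted({row for row, _ in payoffs})
    cols = sorted({col for _, col in payoffs})
    equilibria = []
    for row, col in product(rows, cols):
        row_payoff, col_payoff = payoffs[(row, col)]
        # Could either player do better by changing only their own strategy?
        row_can_improve = any(payoffs[(r, col)][0] > row_payoff for r in rows)
        col_can_improve = any(payoffs[(row, c)][1] > col_payoff for c in cols)
        if not row_can_improve and not col_can_improve:
            equilibria.append((row, col))
    return equilibria


# Hypothetical payoffs for a prisoner's dilemma.
prisoners_dilemma = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}

print(pure_nash_equilibria(prisoners_dilemma))
```

Under these payoffs the only equilibrium is mutual defection: neither player gains by deviating alone, even though both would be better off if both cooperated.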

Bayesianism

A popular contemporary approach to rationality is based on Bayesian epistemology.[7][106] Bayesian epistemology sees belief as a continuous phenomenon that comes in degrees. For example, Daniel is relatively sure that the Boston Celtics will win their next match and absolutely certain that two plus two equals four. In this case, the degree of the first belief is weaker than the degree of the second belief. These degrees are usually referred to as credences and represented by numbers between 0 and 1, where 0 corresponds to full disbelief, 1 corresponds to full belief and 0.5 corresponds to suspension of belief. Bayesians understand this in terms of probability: the higher the credence, the higher the subjective probability that the believed proposition is true. As probabilities, they are subject to the laws of probability theory. These laws act as norms of rationality: beliefs are rational if they comply with them and irrational if they violate them.[107][108][109] For example, it would be irrational to have a credence of 0.9 that it will rain tomorrow together with another credence of 0.9 that it will not rain tomorrow. This account of rationality can also be extended to the practical domain by requiring that agents maximize their subjective expected utility. This way, Bayesianism can provide a unified account of both theoretical and practical rationality.[7][106][6]
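The rain example can be checked mechanically. The following sketch tests only one of the laws of probability, namely that the credences in a proposition and in its negation must sum to 1. The function name and the tolerance parameter are assumptions made for this illustration.

```python
import math


def is_coherent(credence, credence_in_negation, tolerance=1e-9):
    """Check that two credences lie in [0, 1] and sum to 1."""
    in_range = 0.0 <= credence <= 1.0 and 0.0 <= credence_in_negation <= 1.0
    sums_to_one = math.isclose(
        credence + credence_in_negation, 1.0, abs_tol=tolerance
    )
    return in_range and sums_to_one


# Credence 0.9 in rain and 0.9 in no rain violates the norm.
print(is_coherent(0.9, 0.9))
# Credence 0.9 in rain and 0.1 in no rain satisfies it.
print(is_coherent(0.9, 0.1))
```

A fuller check would cover the other probability axioms as well, for example how credences in disjunctions relate to credences in their parts.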

Economics

Rationality plays a key role in economics and there are several strands to this.[110] Firstly, there is the concept of instrumentality: the idea that people and organisations are instrumentally rational, that is, that they adopt the best actions to achieve their goals. Secondly, there is an axiomatic concept that rationality is a matter of being logically consistent within one's preferences and beliefs. Thirdly, people have focused on the accuracy of beliefs and the full use of information. In this view, a person who is not rational has beliefs that do not fully use the information they have.

Debates within economic sociology also arise as to whether or not people or organizations are "really" rational, as well as whether it makes sense to model them as such in formal models. Some have argued that a kind of bounded rationality makes more sense for such models.

Others think that any kind of rationality along the lines of rational choice theory is a useless concept for understanding human behavior; the term homo economicus (economic man: the imaginary man being assumed in economic models who is logically consistent but amoral) was coined largely in honor of this view. Behavioral economics aims to account for economic actors as they actually are, allowing for psychological biases, rather than assuming idealized instrumental rationality.

Artificial intelligence

The field of artificial intelligence is concerned, among other things, with how problems of rationality can be implemented and solved by computers.[56] Within artificial intelligence, a rational agent is typically one that maximizes its expected utility, given its current knowledge. Utility is the usefulness of the consequences of its actions. The utility function is arbitrarily defined by the designer, but should be a function of "performance", that is, of the directly measurable consequences, such as winning or losing money. In order to make a safe agent that plays defensively, a nonlinear function of performance is often desired, so that the reward for winning is lower than the punishment for losing. An agent might be rational within its own problem area, but finding the rational decision for arbitrarily complex problems is not practically possible. The rationality of human thought is a key problem in the psychology of reasoning.[111]
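The effect of a nonlinear utility function can be illustrated with a minimal sketch. The function names, the `loss_weight` parameter, and the betting scenario are all hypothetical; they show only one of many ways a designer might penalize losses more heavily than gains of the same size.

```python
def linear_utility(performance):
    """Utility equals performance, for example money won or lost."""
    return performance


def defensive_utility(performance, loss_weight=2.0):
    """Nonlinear utility: a loss counts loss_weight times as much as a gain."""
    return performance if performance >= 0 else loss_weight * performance


def choose(actions, utility):
    """Pick the action with the highest expected utility."""
    def expected(outcomes):
        return sum(p * utility(performance) for p, performance in outcomes)
    return max(actions, key=lambda name: expected(actions[name]))


# Hypothetical choice: a slightly favorable bet versus doing nothing.
# Each action maps to a list of (probability, performance) pairs.
actions = {
    "bet": [(0.5, 120.0), (0.5, -100.0)],
    "pass": [(1.0, 0.0)],
}

print(choose(actions, linear_utility))
print(choose(actions, defensive_utility))
```

With the linear function the agent accepts the bet, since its expected performance is positive. With the defensive function the weighted loss outweighs the gain and the agent passes. Both agents maximize expected utility; they differ only in the utility function the designer chose.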

International relations

There is an ongoing debate over the merits of using "rationality" in the study of international relations (IR). Some scholars hold it indispensable.[112] Others are more critical.[113] Still, the pervasive and persistent usage of "rationality" in political science and IR is beyond dispute. Abulof finds that some 40% of all scholarly references to "foreign policy" allude to "rationality", and that this ratio goes up to more than half of pertinent academic publications in the 2000s. He further argues that when it comes to concrete security and foreign policies, IR employment of rationality borders on "malpractice": rationality-based descriptions are largely either false or unfalsifiable; many observers fail to explicate the meaning of "rationality" they employ; and the concept is frequently used politically to distinguish between "us and them."[114]

Criticism

The concept of rationality has been subject to criticism by various philosophers who question its universality and capacity to provide a comprehensive understanding of reality and human existence.

Friedrich Nietzsche, in his work "Beyond Good and Evil" (1886), criticized the overemphasis on rationality and argued that it neglects the irrational and instinctual aspects of human nature. Nietzsche advocated for a reevaluation of values based on individual perspectives and the will to power, stating, "There are no facts, only interpretations."[115]

Martin Heidegger, in "Being and Time" (1927), offered a critique of the instrumental and calculative view of reason, emphasizing the primacy of our everyday practical engagement with the world. Heidegger challenged the notion that rationality alone is the sole arbiter of truth and understanding.[116]

Max Horkheimer and Theodor Adorno, in their seminal work "Dialectic of Enlightenment"[117] (1947), questioned the Enlightenment's rationality. They argued that the dominance of instrumental reason in modern society leads to the domination of nature and the dehumanization of individuals. Horkheimer and Adorno highlighted how rationality narrows the scope of human experience and hinders critical thinking.

Michel Foucault, in "Discipline and Punish"[118] (1975) and "The Birth of Biopolitics"[119] (1978), critiqued the notion of rationality as a neutral and objective force. Foucault emphasized the intertwining of rationality with power structures and its role in social control. He famously stated, "Power is not an institution, and not a structure; neither is it a certain strength we are endowed with; it is the name that one attributes to a complex strategic situation in a particular society."[120]

These philosophers' critiques of rationality shed light on its limitations, assumptions, and potential dangers. Their ideas challenge the universal application of rationality as the sole framework for understanding the complexities of human existence and the world.

See also

- Bayesian epistemology

- Cognitive bias

- Coherence (linguistics)

- Counterintuitive

- Dysrationalia

- Flipism

- Homo economicus

- Humeanism § Practical reason

- Imputation (game theory) (individual rationality)

- Instinct

- Intelligence

- Irrationality

- Law of thought

- LessWrong

- List of cognitive biases

- Principle of rationality

- Rational emotive behavior therapy

- Rationalism

- Rationalization (making excuses)

- Satisficing

- Superrationality

- Von Neumann–Morgenstern utility theorem

References

- ^ a b c d e f g h i j k l m n o p q r s t u v w x y z aa ab ac ad ae af Moser, Paul (2006). "Rationality". In Borchert, Donald (ed.). Macmillan Encyclopedia of Philosophy, 2nd Edition. Macmillan. Archived from the original on 12 January 2021. Retrieved 14 August 2022.

- ^ a b c d e f g h i j k l m n o p q r Broome, John (14 December 2021). "Reasons and rationality". In Knauff, Markus; Spohn, Wolfgang (eds.). The Handbook of Rationality. MIT Press. ISBN 978-0-262-04507-0.

- ^ "Definition of rational". Merriam-Webster. Archived from the original on 17 August 2017. Retrieved 24 September 2017.

- ^ a b c d Audi, Robert (2004). "Theoretical Rationality: Its Sources, Structure, and Scope". In Mele, Alfred R; Rawling, Piers (eds.). The Oxford Handbook of Rationality. Oxford University Press. doi:10.1093/0195145399.001.0001. ISBN 978-0-19-514539-7. Archived from the original on 30 December 2023. Retrieved 14 August 2022.

- ^ a b c d e f g Lord, Errol (30 May 2018). "1. Introduction". The Importance of Being Rational. Oxford University Press. ISBN 978-0-19-254675-3. Archived from the original on 30 December 2023. Retrieved 14 August 2022.

- ^ a b c d e f g h i j k l m n o p q r s t u v w x y z aa ab ac ad ae af ag ah ai aj ak al am an ao ap aq ar as at au av aw ax Knauff, Markus; Spohn, Wolfgang (14 December 2021). "Psychological and Philosophical Frameworks of Rationality - A Systematic Introduction". In Knauff, Markus; Spohn, Wolfgang (eds.). The Handbook of Rationality. MIT Press. ISBN 978-0-262-04507-0. Archived from the original on 30 December 2023. Retrieved 14 August 2022.

- ^ a b c d e f g h i j k l m n o p q r s t u v w x y z aa ab ac ad ae Mele, Alfred R.; Rawling, Piers. (2004). "INTRODUCTION: Aspects of Rationality". The Oxford Handbook of Rationality. Oxford University Press. doi:10.1093/0195145399.001.0001. ISBN 978-0-19-514539-7. Archived from the original on 22 January 2022. Retrieved 14 August 2022.

- ^ a b c d e Rysiew, Patrick. "Rationality". Oxford Bibliographies. Archived from the original on 11 August 2022. Retrieved 6 August 2022.

- ^ a b c Mittelstraß, Jürgen, ed. (2005). Enzyklopädie Philosophie und Wissenschaftstheorie. Metzler. Archived from the original on 20 October 2021. Retrieved 14 August 2022.

- ^ Nolfi, Kate (2015). "Which Mental States Are Rationally Evaluable, And Why?". Philosophical Issues. 25 (1): 41–63. doi:10.1111/phis.12051. Archived from the original on 5 June 2021. Retrieved 14 August 2022.

- ^ "The American Heritage Dictionary entry: irrational". www.ahdictionary.com. Archived from the original on 12 August 2023. Retrieved 10 August 2022.

- ^ a b c d e f g h i j k l m n o p q r s t u v w x y z Harman, Gilbert (1 February 2013). "Rationality". International Encyclopedia of Ethics. Blackwell Publishing Ltd. doi:10.1002/9781444367072.wbiee181. ISBN 978-1-4051-8641-4. Archived from the original on 14 August 2022. Retrieved 14 August 2022.

- ^ Grim, Patrick (17 July 1990). "On Dismissing Astrology and Other Irrationalities". Philosophy of Science and the Occult: Second Edition. SUNY Press. p. 28. ISBN 978-1-4384-0498-1. Archived from the original on 30 December 2023. Retrieved 3 September 2022.

- ^ Mosterín, Jesús (2008). Lo mejor posible: Racionalidad y acción humana. Madrid: Alianza Editorial, 2008. 318 pp. ISBN 978-84-206-8206-8.

- ^ a b c d e f g h i j k l m n o Heinzelmann, Nora (2022). "Rationality is Not Coherence". Philosophical Quarterly. 999: 312–332. doi:10.1093/pq/pqac083. Archived from the original on 14 August 2022. Retrieved 14 August 2022.

- ^ a b c d e f Pinker, Steven. "rationality". www.britannica.com. Archived from the original on 14 August 2022. Retrieved 6 August 2022.

- ^ a b c d e Broome, John (2007). "Is Rationality Normative?". Disputatio. 2 (23): 161–178. doi:10.2478/disp-2007-0008. S2CID 171079649. Archived from the original on 7 June 2021. Retrieved 7 June 2021.

- ^ a b c d e Kiesewetter, Benjamin (2017). "7. Rationality as Responding Correctly to Reasons". The Normativity of Rationality. Oxford: Oxford University Press. Archived from the original on 7 June 2021. Retrieved 7 June 2021.

- ^ a b Lord, Errol (30 May 2018). "3. What it is to possess a reason". The Importance of Being Rational. Oxford University Press. ISBN 978-0-19-254675-3. Archived from the original on 30 December 2023. Retrieved 14 August 2022.

- ^ a b c d McClennen, Edward F. (2004). "THE RATIONALITY OF BEING GUIDED BY RULES". In Mele, Alfred R; Rawling, Piers (eds.). The Oxford Handbook of Rationality. Oxford University Press. doi:10.1093/0195145399.001.0001. ISBN 978-0-19-514539-7. Archived from the original on 31 August 2021. Retrieved 14 August 2022.

- ^ a b Moriarty, Michael (27 February 2020). "Reasons for the Irrational". Pascal: Reasoning and Belief. Oxford. Archived from the original on 14 August 2022. Retrieved 14 August 2022.