Video

Video is an electronic medium for the recording, copying, playback, broadcast, and display of moving-image media.[1] Video was first developed for mechanical television systems, which were quickly replaced by cathode-ray tube (CRT) systems, which, in turn, were replaced by flat-panel displays.

Video systems vary in display resolution, aspect ratio, refresh rate, color reproduction, and other qualities. Both analog and digital video can be carried on a variety of media, including radio, magnetic tape, optical discs, computer files, and network streaming.

Etymology

The word video comes from the Latin video, "I see," the first-person singular present indicative of videre, "to see".[2]

History

Analog video

Video grew out of facsimile systems developed in the mid-19th century. Mechanical video scanners, such as the Nipkow disk, were patented as early as 1884, but it took several decades before practical video systems could be built. Whereas film records a sequence of miniature photographic images visible to the naked eye, video encodes images electronically, turning them into analog or digital electronic signals for transmission and recording.[3]

Video was originally an exclusively live technology, first developed for mechanical television systems. These were quickly replaced by cathode-ray tube (CRT) television systems. Live video cameras used an electron beam, which would scan a photoconductive plate with the desired image and produce a voltage signal proportional to the brightness in each part of the image. The signal could then be sent to televisions, where another beam would receive and display the image.[4]

Charles Ginsburg led an Ampex research team to develop one of the first practical video tape recorders (VTR). In 1951, the first VTR captured live images from television cameras by writing the camera's electrical signal onto magnetic videotape. VTRs sold for around US$50,000 in 1956, and videotapes cost US$300 per one-hour reel.[5] Prices gradually dropped over the years, and in 1971, Sony began selling videocassette recorder (VCR) decks and tapes into the consumer market.[6]

Digital video

Digital video is capable of higher quality and, eventually, a much lower cost than its analog predecessor. After the commercial introduction of the DVD, in 1997, and later the Blu-ray Disc, in 2006, sales of videotape and recording equipment fell. Advances in computer technology allow even inexpensive personal computers and smartphones to capture, store, edit, and transmit digital video, further reducing the cost of video production and allowing programmers and broadcasters to move to tapeless production. The advent of digital broadcasting and the subsequent digital television transition are in the process of relegating analog video to the status of a legacy technology in most parts of the world. The development of high-resolution video cameras with improved dynamic range and broader color gamuts, along with the introduction of high-dynamic-range digital intermediate data formats with improved color depth, has caused digital video technology to converge with film technology. Since 2013, the use of digital cameras in Hollywood has surpassed the use of film cameras.[7]

Characteristics

Frame rate

Frame rate, the number of still pictures per unit of time, ranges from six or eight frames per second (frame/s or fps) for older mechanical cameras to 120 or more for new professional cameras. The PAL and SECAM standards specify 25 fps, while NTSC specifies 29.97 fps.[8] Film is shot at a slower frame rate of 24 frames per second, which slightly complicates the process of transferring film to video. The minimum frame rate to achieve persistence of vision (the illusion of a moving image) is about 16 frames per second.[9]
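The frame rates quoted above translate directly into per-frame durations. The following is a minimal Python sketch, not taken from any standard's text: it assumes that NTSC's 29.97 fps is the rounded form of the exact ratio 30000/1001, and the names `FRAME_RATES` and `frame_duration_ms` are illustrative.

```python
from fractions import Fraction

# Nominal frame rates from the paragraph above. Treating NTSC as exactly
# 30000/1001 fps is an assumption of this sketch (29.97 is its rounded form).
FRAME_RATES = {
    "PAL/SECAM": Fraction(25),
    "NTSC": Fraction(30000, 1001),
    "Film": Fraction(24),
}

def frame_duration_ms(fps: Fraction) -> float:
    """Return the duration of a single frame in milliseconds."""
    return float(1000 / fps)

for name, fps in FRAME_RATES.items():
    print(f"{name}: {float(fps):.3f} fps, {frame_duration_ms(fps):.3f} ms per frame")
```

Because film frames last slightly longer than PAL or NTSC frames, film cannot be copied to video frame for frame without some form of rate adaptation.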

Interlacing vs. progressive-scan systems

Video can be interlaced or progressive. In progressive scan systems, each refresh period updates all scan lines in each frame in sequence. When displaying a natively progressive broadcast or recorded signal, the result is the optimum spatial resolution of both the stationary and moving parts of the image. Interlacing was invented as a way to reduce flicker in early mechanical and CRT video displays without increasing the number of complete frames per second. Interlacing retains detail while requiring lower bandwidth compared to progressive scanning.[10][11]

In interlaced video, the horizontal scan lines of each complete frame are treated as if numbered consecutively and captured as two fields: an odd field (upper field) consisting of the odd-numbered lines and an even field (lower field) consisting of the even-numbered lines. Analog display devices reproduce each frame, effectively doubling the frame rate as far as perceptible overall flicker is concerned. When the image capture device acquires the fields one at a time, rather than dividing up a complete frame after it is captured, the frame rate for motion is effectively doubled as well, resulting in smoother, more lifelike reproduction of rapidly moving parts of the image when viewed on an interlaced CRT display.[10][11]
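The division of a frame into odd and even fields can be sketched in a few lines of Python. This is an illustrative model only: a frame is represented as a plain list of scan lines, and the helper names `split_fields` and `weave` are hypothetical.

```python
def split_fields(frame):
    """Split a frame (a list of scan lines) into (odd_field, even_field).

    Scan lines are numbered from 1, so the odd field holds lines 1, 3, 5, ...
    and the even field holds lines 2, 4, 6, ...
    """
    odd_field = frame[0::2]   # lines 1, 3, 5, ... (upper field)
    even_field = frame[1::2]  # lines 2, 4, 6, ... (lower field)
    return odd_field, even_field

def weave(odd_field, even_field):
    """Interleave two fields back into one complete frame."""
    frame = []
    for odd_line, even_line in zip(odd_field, even_field):
        frame.extend([odd_line, even_line])
    # A frame with an odd number of lines leaves one extra line in the odd field.
    if len(odd_field) > len(even_field):
        frame.append(odd_field[-1])
    return frame

frame = [f"line {n}" for n in range(1, 7)]
odd, even = split_fields(frame)
print(odd)   # ['line 1', 'line 3', 'line 5']
print(even)  # ['line 2', 'line 4', 'line 6']
assert weave(odd, even) == frame
```

Weaving restores the original frame exactly only when both fields come from the same instant. When a camera captures the fields one at a time, the two fields show slightly different moments of a moving scene.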

NTSC, PAL, and SECAM are interlaced formats. In video resolution notation, 'i' denotes interlaced scanning. For example, PAL video format is often described as 576i50, where 576 indicates the number of visible horizontal scan lines, i indicates interlacing, and 50 indicates 50 fields (half-frames) per second.[11][12]
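The notation can be unpacked mechanically. The sketch below is a hypothetical helper rather than part of any standard library; it also accepts a 'p' suffix for progressive scan, which is a common convention but an assumption beyond what the paragraph above states.

```python
import re
from typing import NamedTuple

class ScanFormat(NamedTuple):
    lines: int        # number of scan lines given in the label
    interlaced: bool  # True for 'i', False for 'p'
    rate: int         # fields per second if interlaced, else frames per second

def parse_scan_format(label: str) -> ScanFormat:
    """Parse labels such as '576i50' (the 'p' variant is an assumed extension)."""
    match = re.fullmatch(r"(\d+)([ip])(\d+)", label)
    if match is None:
        raise ValueError(f"unrecognized format label: {label!r}")
    lines, scan, rate = match.groups()
    return ScanFormat(int(lines), scan == "i", int(rate))

fmt = parse_scan_format("576i50")
print(fmt)  # ScanFormat(lines=576, interlaced=True, rate=50)

# Two fields make up one complete frame, so 50 fields/s is 25 frames/s.
frames_per_second = fmt.rate // 2 if fmt.interlaced else fmt.rate
print(frames_per_second)  # 25
```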

When displaying a natively interlaced signal on a progressive scan device, the overall spatial resolution is degraded by simple line doubling, and artifacts such as flickering or comb effects in moving parts of the image appear unless special signal processing eliminates them.[10][13] A procedure known as deinterlacing can optimize the display of an interlaced video signal from an analog, DVD, or satellite source on a progressive scan device such as an LCD television, digital video projector, or plasma panel. Deinterlacing cannot, however, produce video quality that is equivalent to true progressive scan source material.[11][12][13]
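Simple line doubling, the crudest form of deinterlacing mentioned above, can be sketched as follows. The function name `line_double` is illustrative, and a field is modeled as a plain list of scan lines; practical deinterlacers use considerably more elaborate processing.

```python
def line_double(field):
    """Rebuild a full-height frame from one field by repeating each line."""
    frame = []
    for line in field:
        frame.append(line)
        frame.append(line)  # duplicate: no new vertical detail is created
    return frame

field = ["A", "B", "C"]
print(line_double(field))  # ['A', 'A', 'B', 'B', 'C', 'C']
```

The output has the full number of lines, but only half of them carry independent information, which is why the spatial resolution is degraded.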

Aspect ratio

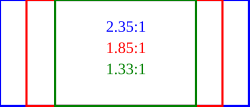

In video, an aspect ratio is the proportional relationship between the width and height of a video screen and video picture elements. All popular video formats are landscape, with a traditional television screen having an aspect ratio of 4:3, or about 1.33:1. High-definition televisions have an aspect ratio of 16:9, or about 1.78:1. The ratio of a full 35mm film frame with its sound track (the "Academy ratio") is 1.375:1.[14][15]

Pixels on computer monitors are usually square, but pixels used in digital video often have non-square aspect ratios, such as those used in the PAL and NTSC variants of the CCIR 601 digital video standard and the corresponding anamorphic widescreen formats. The 720 by 480 pixel raster uses thin pixels on a 4:3 aspect ratio display and fat pixels on a 16:9 display.[14][15]
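The thin and fat pixels described above follow from dividing the display aspect ratio by the aspect ratio of the stored raster. The sketch below assumes, for simplicity, that the full 720-pixel width maps onto the full display width; broadcast practice may use slightly different values, and the function name is hypothetical.

```python
from fractions import Fraction

def pixel_aspect_ratio(width_px: int, height_px: int, display_aspect: Fraction) -> Fraction:
    """Width-to-height ratio each pixel needs for the raster to fill the display."""
    storage_aspect = Fraction(width_px, height_px)
    return display_aspect / storage_aspect

print(pixel_aspect_ratio(720, 480, Fraction(4, 3)))   # 8/9   (narrower than square)
print(pixel_aspect_ratio(720, 480, Fraction(16, 9)))  # 32/27 (wider than square)
```

A ratio below 1 corresponds to thin pixels and a ratio above 1 to fat pixels; a square-pixel raster such as 640 by 480 on a 4:3 display gives exactly 1.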

The popularity of video on mobile phones has led to the growth of vertical video. Mary Meeker, a partner at Silicon Valley venture capital firm Kleiner Perkins Caufield & Byers, highlighted the growth of vertical-video viewing in her 2015 Internet Trends Report, noting that it had grown from 5% of viewing in 2010 to 29% in 2015. Vertical-video ads are watched in their entirety nine times more frequently than those in landscape ratios.[16]

Color model and depth

The color model defines how video represents color and maps encoded color values to the visible colors reproduced by the system. There are several such representations in common use: typically, YIQ is used in NTSC television, YUV is used in PAL television, YDbDr is used by SECAM television, and YCbCr is used for digital video.[17][18]

The number of distinct colors a pixel can represent depends on the color depth expressed in the number of bits per pixel. A common way to reduce the amount of data required in digital video is by chroma subsampling (e.g., 4:4:4, 4:2:2, etc.). Because the human eye is less sensitive to details in color than brightness, the luminance data for all pixels is maintained, while the chrominance data is averaged for a number of pixels in a block, and the same value is used for all of them. For example, this results in a 50% reduction in chrominance data using 2-pixel blocks (4:2:2) or 75% using 4-pixel blocks (4:2:0). This process does not reduce the number of possible color values that can be displayed, but it reduces the number of distinct points at which the color changes.[12][17][18]
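The percentages above can be reproduced with a short calculation. This sketch assumes 8 bits per sample and two chroma components per block (named Cb and Cr here for illustration); the block sizes come from the paragraph above, while the function names are hypothetical.

```python
# Number of pixels sharing one pair of chroma samples in each scheme.
BLOCK_PIXELS = {"4:4:4": 1, "4:2:2": 2, "4:2:0": 4}

def chroma_reduction(scheme: str) -> float:
    """Fraction of chrominance data removed relative to 4:4:4."""
    return 1 - 1 / BLOCK_PIXELS[scheme]

def average_bits_per_pixel(scheme: str, bits_per_sample: int = 8) -> float:
    """Average bits per pixel: one luma sample per pixel plus two chroma
    samples (assumed to be Cb and Cr) shared across each block."""
    return bits_per_sample * (1 + 2 / BLOCK_PIXELS[scheme])

for scheme in BLOCK_PIXELS:
    print(scheme, f"{chroma_reduction(scheme):.0%}", average_bits_per_pixel(scheme))
```

Under these assumptions the average cost falls from 24 bits per pixel at 4:4:4 to 16 at 4:2:2 and 12 at 4:2:0, while each individual sample keeps its full 8-bit range.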

Quality

Video quality can be measured with formal metrics like peak signal-to-noise ratio (PSNR) or through subjective video quality assessment using expert observation. Many subjective video quality methods are described in the ITU-R recommendation BT.500. One of the standardized methods is the Double Stimulus Impairment Scale (DSIS). In DSIS, each expert views an unimpaired reference video, followed by an impaired version of the same video. The expert then rates the impaired video using a scale ranging from "impairments are imperceptible" to "impairments are very annoying."
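PSNR is commonly computed from the mean squared error (MSE) between a reference and an impaired signal as 10·log10(MAX² / MSE). The sketch below assumes 8-bit samples (MAX = 255) held in flat Python lists; the function name and sample values are illustrative only.

```python
import math

def psnr(reference, impaired, max_value=255):
    """Peak signal-to-noise ratio in decibels between two equal-length signals."""
    if len(reference) != len(impaired):
        raise ValueError("signals must have the same length")
    mse = sum((r - i) ** 2 for r, i in zip(reference, impaired)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)

reference = [52, 55, 61, 59]
impaired = [50, 55, 63, 59]   # two samples are off by 2, so MSE = 2
print(round(psnr(reference, impaired), 2))
```

Higher values indicate an impaired signal that is numerically closer to the reference, which does not always match how viewers rate it in subjective tests.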

Compression (digital only)

Uncompressed video delivers maximum quality, but at a very high data rate. A variety of methods are used to compress video streams, with the most effective ones using a group of pictures (GOP) to reduce spatial and temporal redundancy. Broadly speaking, spatial redundancy is reduced by registering differences between parts of a single frame; this task is known as intraframe compression and is closely related to image compression. Likewise, temporal redundancy can be reduced by registering differences between frames; this task is known as interframe compression, including motion compensation and other techniques. The most common modern compression standards are MPEG-2, used for DVD, Blu-ray, and satellite television, and MPEG-4, used for AVCHD, mobile phones (3GP), and the Internet.[19][20]
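The idea of registering differences between frames can be illustrated with plain frame differencing. This is a deliberately simplified sketch, not how MPEG codecs work internally: real interframe coders add motion compensation and transform coding, and the helper names here are hypothetical.

```python
def encode_delta(previous, current):
    """Record only the positions whose value changed since the previous frame."""
    return [
        (index, new)
        for index, (old, new) in enumerate(zip(previous, current))
        if old != new
    ]

def decode_delta(previous, delta):
    """Rebuild the current frame from the previous frame plus the recorded changes."""
    frame = list(previous)
    for index, value in delta:
        frame[index] = value
    return frame

previous = [10, 10, 10, 10, 10, 10]
current = [10, 10, 12, 10, 10, 11]
delta = encode_delta(previous, current)
print(delta)  # [(2, 12), (5, 11)]
assert decode_delta(previous, delta) == current
```

When consecutive frames are similar, the list of changes is much shorter than the frame itself, which is the temporal redundancy that interframe compression exploits.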

Stereoscopy

Stereoscopic video for 3D film and other applications can be displayed using several different methods:[21][22]

- Two channels: a right channel for the right eye and a left channel for the left eye. Both channels may be viewed simultaneously by using light-polarizing filters 90 degrees off-axis from each other on two video projectors. These separately polarized channels are viewed wearing eyeglasses with matching polarization filters.

- Anaglyph 3D, where one channel is overlaid with two color-coded layers. This left and right layer technique is occasionally used for network broadcasts or recent anaglyph releases of 3D movies on DVD. Simple red/cyan plastic glasses provide the means to view the images discretely to form a stereoscopic view of the content.

- One channel with alternating left and right frames for the corresponding eye, using LCD shutter glasses that synchronize to the video to alternately block the image for each eye, so the appropriate eye sees the correct frame. This method is most common in computer virtual reality applications, such as in a Cave Automatic Virtual Environment, but reduces effective video framerate by a factor of two.
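As a rough illustration of the anaglyph method listed above, the sketch below merges a left and a right RGB image into a single red/cyan image. Taking the red channel from the left view and the green and blue channels from the right view is an assumption of this sketch (a common convention, not stated above); images are modeled as nested lists of (R, G, B) tuples, and the function name is hypothetical.

```python
def anaglyph(left, right):
    """Combine two same-sized RGB images into one red/cyan anaglyph image.

    Assumed convention: red comes from the left view, green and blue (cyan)
    come from the right view.
    """
    return [
        [(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(l_row, r_row)]
        for l_row, r_row in zip(left, right)
    ]

left = [[(200, 10, 10), (90, 90, 90)]]
right = [[(20, 150, 250), (80, 85, 95)]]
print(anaglyph(left, right))  # [[(200, 150, 250), (90, 85, 95)]]
```

Red/cyan glasses then filter the combined image so that each eye mostly sees the channel or channels taken from its own view.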

Formats

Different layers of video transmission and storage each provide their own set of formats to choose from.

For transmission, there is a physical connector and signal protocol (see List of video connectors). A given physical link can carry certain display standards that specify a particular refresh rate, display resolution, and color space.

Many analog and digital recording formats are in use, and digital video clips can also be stored on a computer file system as files, which have their own formats. In addition to the physical format used by the data storage device or transmission medium, the stream of ones and zeros that is sent must be in a particular digital video coding format, of which a number are available.

Analog video

Analog video is a video signal represented by one or more analog signals. Analog color video signals include luminance (Y) and chrominance (C). When the two are combined into one channel, as is the case with NTSC, PAL, and SECAM among others, the result is called composite video. Analog video may also be carried in separate channels, as in two-channel S-Video (YC) and multi-channel component video formats.

Analog video is used in both consumer and professional television production applications.

- Composite video (single-channel RCA)

- S-Video (2-channel YC)

Digital video

Digital video signal formats that have been adopted include serial digital interface (SDI), Digital Visual Interface (DVI), High-Definition Multimedia Interface (HDMI), and DisplayPort.

- Serial digital interface (SDI)

- Digital Visual Interface (DVI)

Transport medium

Video can be transmitted or transported in a variety of ways, including wireless terrestrial television as an analog or digital signal and coaxial cable in a closed-circuit system as an analog signal. Broadcast or studio cameras use a single or dual coaxial cable system using serial digital interface (SDI). See List of video connectors for information about physical connectors and related signal standards.

Video may be transported over networks and other shared digital communications links using, for instance, MPEG transport stream, SMPTE 2022 and SMPTE 2110.

Display standards

Digital television

Digital television broadcasts use the MPEG-2 and other video coding formats and include:

- ATSC – United States, Canada, Mexico, Korea

- Digital Video Broadcasting (DVB) – Europe

- ISDB – Japan

- Digital multimedia broadcasting (DMB) – Korea

Analog television

Analog television broadcast standards include:

- Field-sequential color system (FCS) – US, Russia; obsolete

- Multiplexed Analogue Components (MAC) – Europe; obsolete

- Multiple sub-Nyquist sampling encoding (MUSE) – Japan

- NTSC – United States, Canada, Japan

- EDTV-II Clear-Vision – NTSC extension, Japan

- PAL – Europe, Asia, Oceania

- RS-343 (military)

- SECAM – France, former Soviet Union, Central Africa

- CCIR System A

- CCIR System B

- CCIR System G

- CCIR System H

- CCIR System I

- CCIR System M

An analog video format consists of more information than the visible content of the frame. Preceding and following the image are lines and pixels containing metadata and synchronization information. This surrounding margin is known as a blanking interval or blanking region; the horizontal and vertical front porch and back porch are the building blocks of the blanking interval.

Computer displays

Computer display standards specify a combination of aspect ratio, display size, display resolution, color depth, and refresh rate. A list of common resolutions is available.

Recording

Early television was almost exclusively a live medium, with some programs recorded to film for historical purposes using Kinescope. The analog video tape recorder was commercially introduced in 1951. The following list is in rough chronological order. All formats listed were sold to and used by broadcasters, video producers, or consumers; or were important historically.[23][24]

- VERA (BBC experimental format ca. 1952)

- 2" Quadruplex videotape (Ampex 1956)

- 1" Type A videotape (Ampex)

- 1/2" EIAJ (1969)

- U-matic 3/4" (Sony)

- 1/2" Cartrivision (Avco)

- VCR, VCR-LP, SVR

- 1" Type B videotape (Robert Bosch GmbH)

- 1" Type C videotape (Ampex, Marconi and Sony)

- 2" Helical Scan Videotape (IVC) (1975)

- Betamax (Sony) (1975)

- VHS (JVC) (1976)

- Video 2000 (Philips) (1979)

- 1/4" CVC (Funai) (1980)

- Betacam (Sony) (1982)

- VHS-C (JVC) (1982)

- HDVS (Sony) (1984)[25]

- Video8 (Sony) (1986)

- Betacam SP (Sony) (1987)

- S-VHS (JVC) (1987)

- Pixelvision (Fisher-Price) (1987)

- UniHi 1/2" HD (1988)[25]

- Hi8 (Sony) (1989)

- W-VHS (JVC) (1994)

Digital video tape recorders offered improved quality compared to analog recorders.[24][26]

Optical storage media offered an alternative to bulky tape formats, especially in consumer applications.[23][27]

- Blu-ray Disc (Sony)

- China Blue High-definition Disc (CBHD)

- DVD (was Super Density Disc, DVD Forum)

- Professional Disc

- Universal Media Disc (UMD) (Sony)

- Enhanced Versatile Disc (EVD, Chinese government-sponsored)

- HD DVD (NEC and Toshiba)

- HD-VMD

- Capacitance Electronic Disc

- Laserdisc (MCA and Philips)

- Television Electronic Disc (Teldec and Telefunken)

- VHD (JVC)

- Video CD

Digital encoding formats

A video codec is software or hardware that compresses and decompresses digital video. In the context of video compression, codec is a portmanteau of encoder and decoder, while a device that only compresses is typically called an encoder, and one that only decompresses is a decoder. The compressed data format usually conforms to a standard video coding format. The compression is typically lossy, meaning that the compressed video lacks some information present in the original video. A consequence of this is that decompressed video has lower quality than the original, uncompressed video because there is insufficient information to accurately reconstruct the original video.[28]
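Why lossy compression cannot be undone can be seen with a toy quantizer. Quantization is used here only as one familiar source of loss; the paragraph above does not name a specific mechanism, and the function names and step size are assumptions of this sketch.

```python
def quantize(samples, step):
    """Encoder side: map each sample to the index of its nearest multiple of step."""
    return [round(sample / step) for sample in samples]

def dequantize(levels, step):
    """Decoder side: reconstruct an approximation of the original samples."""
    return [level * step for level in levels]

original = [12, 37, 201, 203]
levels = quantize(original, step=16)
restored = dequantize(levels, step=16)
print(levels)    # [1, 2, 13, 13]
print(restored)  # [16, 32, 208, 208]
```

The samples 201 and 203 collapse onto the same level, so the decoder has no way to tell them apart afterwards: the information needed to reconstruct the original exactly is no longer in the compressed data.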

See also

- General

- Video format

- Video usage

- Video screen recording software

References

1. "Video – HiDef Audio and Video". hidefnj.com. Archived from the original on May 14, 2017. Retrieved March 30, 2017.

2. "video", Online Etymology Dictionary.

3. Amidon, Audrey (June 25, 2013). "Film Preservation 101: What's the Difference Between a Film and a Video?". The Unwritten Record. US National Archives.

4. "Vocademy - Learn for Free - Electronics Technology - Analog Circuits - Analog Television". vocademy.net. Retrieved June 29, 2024.

5. Elen, Richard. "TV Technology 10. Roll VTR". Archived from the original on October 27, 2011.

6. "Vintage Umatic VCR – Sony VO-1600. The worlds first VCR. 1971". Rewind Museum. Archived from the original on February 22, 2014. Retrieved February 21, 2014.

7. Follows, Stephen (February 11, 2019). "The use of digital vs celluloid film on Hollywood movies". Archived from the original on April 11, 2022. Retrieved February 19, 2022.

8. Soseman, Ned. "What's the difference between 59.94fps and 60fps?". Archived from the original on June 29, 2017. Retrieved July 12, 2017.

9. Watson, Andrew B. (1986). "Temporal Sensitivity" (PDF). Sensory Processes and Perception. Archived from the original (PDF) on March 8, 2016.

10. Bovik, Alan C. (2005). Handbook of image and video processing (2nd ed.). Amsterdam: Elsevier Academic Press. pp. 14–21. ISBN 978-0-08-053361-2. OCLC 190789775.

11. Wright, Steve (2002). Digital compositing for film and video. Boston: Focal Press. ISBN 978-0-08-050436-0. OCLC 499054489.

12. Brown, Blain (2013). Cinematography: Theory and Practice: Image Making for Cinematographers and Directors. Taylor & Francis. pp. 159–166. ISBN 9781136047381.

13. Parker, Michael (2013). Digital Video Processing for Engineers: a Foundation for Embedded Systems Design. Suhel Dhanani. Amsterdam. ISBN 978-0-12-415761-3. OCLC 815408915.

14. Bing, Benny (2010). 3D and HD broadband video networking. Boston: Artech House. pp. 57–70. ISBN 978-1-60807-052-7. OCLC 672322796.

15. Stump, David (2022). Digital cinematography: fundamentals, tools, techniques, and workflows (2nd ed.). New York, NY: Routledge. pp. 125–139. ISBN 978-0-429-46885-8. OCLC 1233023513.

16. Constine, Josh (May 27, 2015). "The Most Important Insights From Mary Meeker's 2015 Internet Trends Report". TechCrunch. Archived from the original on August 4, 2015. Retrieved August 6, 2015.

17. Li, Ze-Nian; Drew, Mark S.; Liu, Jiangchun (2021). Fundamentals of multimedia (3rd ed.). Cham, Switzerland: Springer. pp. 108–117. ISBN 978-3-030-62124-7. OCLC 1243420273.

18. Banerjee, Sreeparna (2019). "Video in Multimedia". Elements of multimedia. Boca Raton: CRC Press. ISBN 978-0-429-43320-7. OCLC 1098279086.

19. Beach, Andy (2008). Real World Video Compression. Peachpit Press. ISBN 978-0-13-208951-7. OCLC 1302274863.

20. Sanz, Jorge L. C. (1996). Image Technology: Advances in Image Processing, Multimedia and Machine Vision. Berlin, Heidelberg: Springer Berlin Heidelberg. ISBN 978-3-642-58288-2. OCLC 840292528.

21. Ekmekcioglu, Erhan; Fernando, Anil; Worrall, Stewart (2013). 3DTV: processing and transmission of 3D video signals. Chichester, West Sussex, United Kingdom: Wiley & Sons. ISBN 978-1-118-70573-5. OCLC 844775006.

22. Block, Bruce A.; McNally, Phillip (2013). 3D storytelling: how stereoscopic 3D works and how to use it. Burlington, MA: Taylor & Francis. ISBN 978-1-136-03881-5. OCLC 858027807.

23. Tozer, E.P.J. (2013). Broadcast engineer's reference book (1st ed.). New York. pp. 470–476. ISBN 978-1-136-02417-7. OCLC 1300579454.

24. Pizzi, Skip; Jones, Graham (2014). A Broadcast Engineering Tutorial for Non-Engineers (4th ed.). Hoboken: Taylor and Francis. pp. 145–152. ISBN 978-1-317-90683-4. OCLC 879025861.

25. "Sony HD Formats Guide (2008)" (PDF). pro.sony.com. Archived (PDF) from the original on March 6, 2015. Retrieved November 16, 2014.

26. Ward, Peter (2015). "Video Recording Formats". Multiskilling for television production. Alan Bermingham, Chris Wherry. New York: Focal Press. ISBN 978-0-08-051230-3. OCLC 958102392.

27. Merskin, Debra L., ed. (2020). The Sage international encyclopedia of mass media and society. Thousand Oaks, California. ISBN 978-1-4833-7551-9. OCLC 1130315057.

28. Ghanbari, Mohammed (2003). Standard Codecs: Image Compression to Advanced Video Coding. Institution of Engineering and Technology. pp. 1–12. ISBN 9780852967102. Archived from the original on August 8, 2019. Retrieved November 27, 2019.