Transistor count

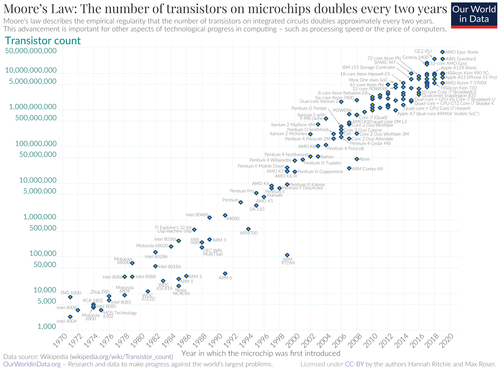

The transistor count is the number of transistors in an electronic device (typically on a single substrate or silicon die). It is the most common measure of integrated circuit complexity, although the majority of transistors in modern microprocessors are contained in cache memories, which consist mostly of the same memory cell circuits replicated many times. The rate at which MOS transistor counts have increased generally follows Moore's law, which observes that transistor count doubles approximately every two years. However, because transistor count is directly proportional to die area, it does not by itself show how advanced the corresponding manufacturing technology is. A better indicator is transistor density, which is the ratio of a chip's transistor count to its die area.
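The two quantities in this paragraph, transistor density and the Moore's law doubling period, can be illustrated with a short calculation. The following is a minimal sketch, not a definitive method: the function names are hypothetical, and the two data points (Intel 4004 and Apple M1) are taken from the microprocessor table later in this article.

```python
import math


def transistor_density(transistor_count: float, die_area_mm2: float) -> float:
    """Return transistors per square millimetre (count divided by die area)."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    return transistor_count / die_area_mm2


def implied_doubling_time(count_a: float, year_a: int,
                          count_b: float, year_b: int) -> float:
    """Years per doubling, assuming steady exponential growth between two chips."""
    doublings = math.log2(count_b / count_a)
    return (year_b - year_a) / doublings


# Figures as listed in the microprocessor table of this article.
intel_4004 = {"count": 2_250, "year": 1971, "area_mm2": 12}
apple_m1 = {"count": 16_000_000_000, "year": 2020, "area_mm2": 119}

print(round(transistor_density(intel_4004["count"], intel_4004["area_mm2"])))  # 188
print(implied_doubling_time(intel_4004["count"], intel_4004["year"],
                            apple_m1["count"], apple_m1["year"]))
```

For this particular pair of chips the implied doubling period comes out a little over two years, which is consistent with the approximate two-year figure quoted for Moore's law. Other pairs of chips give different values.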

Records

As of 2023, the highest transistor count in flash memory is Micron's 2 terabyte (3D-stacked) 16-die, 232-layer V-NAND flash memory chip, with 5.3 trillion floating-gate MOSFETs (3 bits per transistor).
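The 5.3 trillion figure follows from the stated capacity and the 3 bits stored per transistor. A minimal sketch of that arithmetic is shown here; it assumes a decimal terabyte (10^12 bytes) and counts only storage cells, ignoring spare cells, error-correction overhead and peripheral circuitry. The function name is hypothetical.

```python
def flash_cell_count(capacity_bytes: int, bits_per_cell: int) -> float:
    """Number of storage transistors needed for a given capacity."""
    return capacity_bytes * 8 / bits_per_cell


capacity = 2 * 10**12  # assumption: 2 TB read as decimal terabytes
cells = flash_cell_count(capacity, bits_per_cell=3)
print(f"{cells / 1e12:.1f} trillion cells")  # 5.3 trillion cells
```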

The highest transistor count in a single chip processor as of 2020 is that of the deep learning processor Wafer Scale Engine 2 by Cerebras. It has 2.6 trillion MOSFETs in 84 exposed fields (dies) on a wafer, manufactured using TSMC's 7 nm FinFET process.[1][2][3][4][5]

As of 2024, the GPU with the highest transistor count is Nvidia's Blackwell-based B100 accelerator, built on TSMC's custom 4NP process node and totaling 208 billion MOSFETs.

The highest transistor count in a consumer microprocessor as of March 2025 is 184 billion transistors, in Apple's ARM-based dual-die M3 Ultra SoC, which is fabricated using TSMC's 3 nm semiconductor manufacturing process.[citation needed]

| Year | Component | Name | Number of MOSFETs (in trillions) | Remarks |

|---|---|---|---|---|

| 2022 | Flash memory | Micron's V-NAND module | 5.3 | stacked package of sixteen 232-layer 3D NAND dies |

| 2020 | any processor | Wafer Scale Engine 2 | 2.6 | wafer-scale design of 84 exposed fields (dies) |

| 2024 | GPU | Nvidia B100 | 0.208 | Uses two reticle limit dies, with 104 billion transistors each, joined together and acting as a single large monolithic piece of silicon |

| 2025 | Microprocessor (consumer) | Apple M3 Ultra | 0.184 | SoC using two dies joined together with a high-speed bridge |

| 2020 | DLP | Colossus Mk2 GC200 | 0.059 | An IPU[a] (Intelligence Processing Unit) in contrast to CPU and GPU |

In terms of computer systems that consist of numerous integrated circuits, the supercomputer with the highest transistor count as of 2016 was the Chinese-designed Sunway TaihuLight, which has for all CPUs/nodes combined "about 400 trillion transistors in the processing part of the hardware" and "the DRAM includes about 12 quadrillion transistors, and that's about 97 percent of all the transistors."[6] By comparison, the smallest computer as of 2018, which is dwarfed by a grain of rice, had on the order of 100,000 transistors. Early experimental solid-state computers had as few as 130 transistors but used large amounts of diode logic. The first carbon nanotube computer had 178 transistors and was a 1-bit one-instruction set computer, while a later one is 16-bit, although its instruction set is 32-bit RISC-V.
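The "about 97 percent" share quoted for the Sunway TaihuLight can be reproduced from the two quoted totals. The sketch below uses only those rounded figures; the variable names are illustrative.

```python
processing_transistors = 400e12  # "about 400 trillion" in the processing part
dram_transistors = 12e15         # "about 12 quadrillion" in the DRAM

total = processing_transistors + dram_transistors
dram_share = dram_transistors / total
print(f"{dram_share:.1%}")  # 96.8%
```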

Ionic transistor chips ("water-based" analog limited processors) have up to hundreds of such transistors.[7]

Estimates of the total numbers of transistors manufactured:

Transistor count

Microprocessors

A microprocessor incorporates the functions of a computer's central processing unit on a single integrated circuit. It is a multi-purpose, programmable device that accepts digital data as input, processes it according to instructions stored in its memory, and provides results as output.

The development of MOS integrated circuit technology in the 1960s led to the development of the first microprocessors.[10] The 20-bit MP944, developed by Garrett AiResearch for the U.S. Navy's F-14 Tomcat fighter in 1970, is considered by its designer Ray Holt to be the first microprocessor.[11] It was a multi-chip microprocessor, fabricated on six MOS chips. However, it was classified by the Navy until 1998. The 4-bit Intel 4004, released in 1971, was the first single-chip microprocessor.

Modern microprocessors typically include on-chip cache memories. The number of transistors used for these cache memories typically far exceeds the number of transistors used to implement the logic of the microprocessor (that is, excluding the cache). For example, the last DEC Alpha chip uses 90% of its transistors for cache.[12]
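To show what such a split means in absolute numbers, the sketch below divides a total transistor count into cache and logic portions. It assumes that "the last DEC Alpha chip" is the Alpha 21364 row of the table in this section (152,000,000 transistors, same citation); that identification is an assumption, and the function name is hypothetical.

```python
def split_by_cache_fraction(total_transistors: int, cache_fraction: float):
    """Return (cache_transistors, logic_transistors) for a given cache share."""
    if not 0.0 <= cache_fraction <= 1.0:
        raise ValueError("cache_fraction must be between 0 and 1")
    cache = round(total_transistors * cache_fraction)
    return cache, total_transistors - cache


# Assumption: the 90% figure applies to the Alpha 21364 count from the table.
cache, logic = split_by_cache_fraction(152_000_000, 0.90)
print(f"cache: {cache:,}  logic: {logic:,}")
```

Under these assumptions only about 15 million transistors implement the processor logic, and the rest are replicated memory cells.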

| Processor | Transistor count | Year | Designer | Process (nm) | Area (mm2) | Transistor density (tr./mm2) |

|---|---|---|---|---|---|---|

| MP944 (20-bit, 6-chip, 28 chips total) | 74,442 (5,360 excl. ROM & RAM)[13][14] | 1970[11][b] | Garrett AiResearch | ? | ? | ? |

| Intel 4004 (4-bit, 16-pin) | 2,250 | 1971 | Intel | 10,000 nm | 12 mm2 | 188 |

| TMX 1795 (8-bit, 24-pin) | 3,078[15] | 1971 | Texas Instruments | ? | 30.64 mm2 | 100.5 |

| Intel 8008 (8-bit, 18-pin) | 3,500 | 1972 | Intel | 10,000 nm | 14 mm2 | 250 |

| NEC μCOM-4 (4-bit, 42-pin) | 2,500[16][17] | 1973 | NEC | 7,500 nm[18] | ? | ? |

| Toshiba TLCS-12 (12-bit) | 11,000+[19] | 1973 | Toshiba | 6,000 nm | 32.45 mm2 | 340+ |

| Intel 4040 (4-bit, 16-pin) | 3,000 | 1974 | Intel | 10,000 nm | 12 mm2 | 250 |

| Motorola 6800 (8-bit, 40-pin) | 4,100 | 1974 | Motorola | 6,000 nm | 16 mm2 | 256 |

| Intel 8080 (8-bit, 40-pin) | 6,000 | 1974 | Intel | 6,000 nm | 20 mm2 | 300 |

| TMS 1000 (4-bit, 28-pin) | 8,000[c] | 1974[20] | Texas Instruments | 8,000 nm | 11 mm2 | 730 |

| HP Nanoprocessor (8-bit, 40-pin) | 4639[d][21] | 1974 | Hewlett-Packard | ? | 19 mm2 | ? |

| MOS Technology 6502 (8-bit, 40-pin) | 4,528[e][22] | 1975 | MOS Technology | 8,000 nm | 21 mm2 | 216 |

| Intersil IM6100 (12-bit, 40-pin; clone of PDP-8) | 4,000 | 1975 | Intersil | ? | ? | ? |

| CDP 1801 (8-bit, 2-chip, 40-pin) | 5,000 | 1975 | RCA | ? | ? | ? |

| RCA 1802 (8-bit, 40-pin) | 5,000 | 1976 | RCA | 5,000 nm | 27 mm2 | 185 |

| Zilog Z80 (8-bit, 4-bit ALU, 40-pin) | 8,500[f] | 1976 | Zilog | 4,000 nm | 18 mm2 | 470 |

| Intel 8085 (8-bit, 40-pin) | 6,500 | 1976 | Intel | 3,000 nm | 20 mm2 | 325 |

| TMS9900 (16-bit) | 8,000 | 1976 | Texas Instruments | ? | ? | ? |

| Bellmac-8 (8-bit) | 7,000 | 1977 | Bell Labs | 5,000 nm | ? | ? |

| Motorola 6809 (8-bit with some 16-bit features, 40-pin) | 9,000 | 1978 | Motorola | 5,000 nm | 21 mm2 | 430 |

| Intel 8086 (16-bit, 40-pin) | 29,000[23] | 1978 | Intel | 3,000 nm | 33 mm2 | 880 |

| Zilog Z8000 (16-bit) | 17,500[24] | 1979 | Zilog | 5,000-6,000 nm (design rules) | 39.31 mm2 (238x256 mil2) | 445 |

| Intel 8088 (16-bit, 8-bit data bus) | 29,000 | 1979 | Intel | 3,000 nm | 33 mm2 | 880 |

| Motorola 68000 (16/32-bit, 32-bit registers, 16-bit ALU) | 68,000[25] | 1979 | Motorola | 3,500 nm | 44 mm2 | 1,550 |

| Intel 8051 (8-bit, 40-pin) | 50,000 | 1980 | Intel | ? | ? | ? |

| WDC 65C02 | 11,500[26] | 1981 | WDC | 3,000 nm | 6 mm2 | 1,920 |

| ROMP (32-bit) | 45,000 | 1981 | IBM | 2,000 nm | 58.52 mm2 | 770 |

| Intel 80186 (16-bit, 68-pin) | 55,000 | 1982 | Intel | 3,000 nm | 60 mm2 | 920 |

| Intel 80286 (16-bit, 68-pin) | 134,000 | 1982 | Intel | 1,500 nm | 49 mm2 | 2,730 |

| WDC 65C816 (8/16-bit) | 22,000[27] | 1983 | WDC | 3,000 nm[28] | 9 mm2 | 2,400 |

| NEC V20 | 63,000 | 1984 | NEC | ? | ? | ? |

| Motorola 68020 (32-bit; 114 pins used) | 190,000[29] | 1984 | Motorola | 2,000 nm | 85 mm2 | 2,200 |

| Intel 80386 (32-bit, 132-pin; no cache) | 275,000 | 1985 | Intel | 1,500 nm | 104 mm2 | 2,640 |

| ARM 1 (32-bit; no cache) | 25,000[29] | 1985 | Acorn | 3,000 nm | 50 mm2 | 500 |

| Novix NC4016 (16-bit) | 16,000[30] | 1985[31] | Harris Corporation | 3,000 nm[32] | ? | ? |

| SPARC MB86900 (32-bit; no cache) | 110,000[33] | 1986 | Fujitsu | 1,200 nm | ? | ? |

| NEC V60[34] (32-bit; no cache) | 375,000 | 1986 | NEC | 1,500 nm | ? | ? |

| ARM 2 (32-bit, 84-pin; no cache) | 27,000[35][29] | 1986 | Acorn | 2,000 nm | 30.25 mm2 | 890 |

| Z80000 (32-bit; very small cache) | 91,000 | 1986 | Zilog | ? | ? | ? |

| NEC V70[34] (32-bit; no cache) | 385,000 | 1987 | NEC | 1,500 nm | ? | ? |

| Hitachi Gmicro/200[36] | 730,000 | 1987 | Hitachi | 1,000 nm | ? | ? |

| Motorola 68030 (32-bit, very small caches) | 273,000 | 1987 | Motorola | 800 nm | 102 mm2 | 2,680 |

| TI Explorer's 32-bit Lisp machine chip | 553,000[37] | 1987 | Texas Instruments | 2,000 nm[38] | ? | ? |

| DEC WRL MultiTitan | 180,000[39] | 1988 | DEC WRL | 1,500 nm | 61 mm2 | 2,950 |

| Intel i960 (32-bit, 33-bit memory subsystem, no cache) | 250,000[40] | 1988 | Intel | 1,500 nm[41] | ? | ? |

| Intel i960CA (32-bit, cache) | 600,000[41] | 1989 | Intel | 800 nm | 143 mm2 | 4,200 |

| Intel i860 (32/64-bit, 128-bit SIMD, cache, VLIW) | 1,000,000[42] | 1989 | Intel | ? | ? | ? |

| Intel 80486 (32-bit, 8 KB cache) | 1,180,235 | 1989 | Intel | 1,000 nm | 173 mm2 | 6,822 |

| ARM 3 (32-bit, 4 KB cache) | 310,000 | 1989 | Acorn | 1,500 nm | 87 mm2 | 3,600 |

| POWER1 (9-chip module, 72 kB of cache) | 6,900,000[43] | 1990 | IBM | 1,000 nm | 1,283.61 mm2 | 5,375 |

| Motorola 68040 (32-bit, 8 KB caches) | 1,200,000 | 1990 | Motorola | 650 nm | 152 mm2 | 7,900 |

| R4000 (64-bit, 16 KB of caches) | 1,350,000 | 1991 | MIPS | 1,000 nm | 213 mm2 | 6,340 |

| ARM 6 (32-bit, no cache for this 60 variant) | 35,000 | 1991 | ARM | 800 nm | ? | ? |

| Hitachi SH-1 (32-bit, no cache) | 600,000[44] | 1992[45] | Hitachi | 800 nm | 100 mm2 | 6,000 |

| Intel i960CF (32-bit, cache) | 900,000[41] | 1992 | Intel | ? | 125 mm2 | 7,200 |

| Alpha 21064 (64-bit, 290-pin; 16 KB of caches) | 1,680,000 | 1992 | DEC | 750 nm | 233.52 mm2 | 7,190 |

| Hitachi HARP-1 (32-bit, cache) | 2,800,000[46] | 1993 | Hitachi | 500 nm | 267 mm2 | 10,500 |

| Pentium (32-bit, 16 KB of caches) | 3,100,000 | 1993 | Intel | 800 nm | 294 mm2 | 10,500 |

| POWER2 (8-chip module, 288 kB of cache) | 23,037,000[47] | 1993 | IBM | 720 nm | 1,217.39 mm2 | 18,923 |

| ARM700 (32-bit; 8 KB cache) | 578,977[48] | 1994 | ARM | 700 nm | 68.51 mm2 | 8,451 |

| MuP21 (21-bit,[49] 40-pin; includes video) | 7,000[50] | 1994 | Offete Enterprises | 1,200 nm | ? | ? |

| Motorola 68060 (32-bit, 16 KB of caches) | 2,500,000 | 1994 | Motorola | 600 nm | 218 mm2 | 11,500 |

| PowerPC 601 (32-bit, 32 KB of caches) | 2,800,000[51] | 1994 | Apple, IBM, Motorola | 600 nm | 121 mm2 | 23,000 |

| PowerPC 603 (32-bit, 16 KB of caches) | 1,600,000[52] | 1994 | Apple, IBM, Motorola | 500 nm | 84.76 mm2 | 18,900 |

| PowerPC 603e (32-bit, 32 KB of caches) | 2,600,000[53] | 1995 | Apple, IBM, Motorola | 500 nm | 98 mm2 | 26,500 |

| Alpha 21164 EV5 (64-bit, 112 kB cache) | 9,300,000[54] | 1995 | DEC | 500 nm | 298.65 mm2 | 31,140 |

| SA-110 (32-bit, 32 KB of caches) | 2,500,000[29] | 1995 | Acorn, DEC, Apple | 350 nm | 50 mm2 | 50,000 |

| Pentium Pro (32-bit, 16 KB of caches;[55] L2 cache on-package, but on separate die) | 5,500,000[56] | 1995 | Intel | 500 nm | 307 mm2 | 18,000 |

| PA-8000 (64-bit, no cache) | 3,800,000[57] | 1995 | HP | 500 nm | 337.69 mm2 | 11,300 |

| Alpha 21164A EV56 (64-bit, 112 kB cache) | 9,660,000[58] | 1996 | DEC | 350 nm | 208.8 mm2 | 46,260 |

| AMD K5 (32-bit, caches) | 4,300,000 | 1996 | AMD | 500 nm | 251 mm2 | 17,000 |

| Pentium II Klamath (32-bit, 64-bit SIMD, caches) | 7,500,000 | 1997 | Intel | 350 nm | 195 mm2 | 39,000 |

| AMD K6 (32-bit, caches) | 8,800,000 | 1997 | AMD | 350 nm | 162 mm2 | 54,000 |

| F21 (21-bit; includes e.g. video) | 15,000 | 1997[50] | Offete Enterprises | ? | ? | ? |

| AVR (8-bit, 40-pin; w/memory) | 140,000 (48,000 excl. memory[59]) | 1997 | Nordic VLSI/Atmel | ? | ? | ? |

| Pentium II Deschutes (32-bit, large cache) | 7,500,000 | 1998 | Intel | 250 nm | 113 mm2 | 66,000 |

| Alpha 21264 EV6 (64-bit) | 15,200,000[60] | 1998 | DEC | 350 nm | 313.96 mm2 | 48,400 |

| Alpha 21164PC PCA57 (64-bit, 48 kB cache) | 5,700,000 | 1998 | Samsung | 280 nm | 100.5 mm2 | 56,700 |

| Hitachi SH-4 (32-bit, caches)[61] | 3,200,000[62] | 1998 | Hitachi | 250 nm | 57.76 mm2 | 55,400 |

| ARM 9TDMI (32-bit, no cache) | 111,000[29] | 1999 | Acorn | 350 nm | 4.8 mm2 | 23,100 |

| Pentium III Katmai (32-bit, 128-bit SIMD, caches) | 9,500,000 | 1999 | Intel | 250 nm | 128 mm2 | 74,000 |

| Emotion Engine (64-bit, 128-bit SIMD, cache) | 10,500,000[63] – 13,500,000[64] | 1999 | Sony, Toshiba | 250 nm | 239.7 mm2[63] | 43,800 – 56,300 |

| Pentium II Mobile Dixon (32-bit, caches) | 27,400,000 | 1999 | Intel | 180 nm | 180 mm2 | 152,000 |

| AMD K6-III (32-bit, caches) | 21,300,000 | 1999 | AMD | 250 nm | 118 mm2 | 181,000 |

| AMD K7 (32-bit, caches) | 22,000,000 | 1999 | AMD | 250 nm | 184 mm2 | 120,000 |

| Gekko (32-bit, large cache) | 21,000,000[65] | 2000 | IBM, Nintendo | 180 nm | 43 mm2 | 490,000 (check) |

| Pentium III Coppermine (32-bit, large cache) | 21,000,000 | 2000 | Intel | 180 nm | 80 mm2 | 263,000 |

| Pentium 4 Willamette (32-bit, large cache) | 42,000,000 | 2000 | Intel | 180 nm | 217 mm2 | 194,000 |

| SPARC64 V (64-bit, large cache) | 191,000,000[66] | 2001 | Fujitsu | 130 nm[67] | 290 mm2 | 659,000 |

| Pentium III Tualatin (32-bit, large cache) | 45,000,000 | 2001 | Intel | 130 nm | 81 mm2 | 556,000 |

| Pentium 4 Northwood (32-bit, large cache) | 55,000,000 | 2002 | Intel | 130 nm | 145 mm2 | 379,000 |

| Itanium 2 McKinley (64-bit, large cache) | 220,000,000 | 2002 | Intel | 180 nm | 421 mm2 | 523,000 |

| Alpha 21364 (64-bit, 946-pin, SIMD, very large caches) | 152,000,000[12] | 2003 | DEC | 180 nm | 397 mm2 | 383,000 |

| AMD K7 Barton (32-bit, large cache) | 54,300,000 | 2003 | AMD | 130 nm | 101 mm2 | 538,000 |

| AMD K8 (64-bit, large cache) | 105,900,000 | 2003 | AMD | 130 nm | 193 mm2 | 548,700 |

| Pentium M Banias (32-bit) | 77,000,000[68] | 2003 | Intel | 130 nm | 83 mm2 | 928,000 |

| Itanium 2 Madison 6M (64-bit) | 410,000,000 | 2003 | Intel | 130 nm | 374 mm2 | 1,096,000 |

| PlayStation 2 single chip (CPU + GPU) | 53,500,000[69] | 2003[70] | Sony, Toshiba | 90 nm[71] or 130 nm[72][73] | 86 mm2 | 622,100 |

| Pentium 4 Prescott (32-bit, large cache) | 112,000,000 | 2004 | Intel | 90 nm | 110 mm2 | 1,018,000 |

| Pentium M Dothan (32-bit) | 144,000,000[74] | 2004 | Intel | 90 nm | 87 mm2 | 1,655,000 |

| SPARC64 V+ (64-bit, large cache) | 400,000,000[75] | 2004 | Fujitsu | 90 nm | 294 mm2 | 1,360,000 |

| Itanium 2 (64-bit; 9 MB cache) | 592,000,000 | 2004 | Intel | 130 nm | 432 mm2 | 1,370,000 |

| Pentium 4 Prescott-2M (32-bit, large cache) | 169,000,000 | 2005 | Intel | 90 nm | 143 mm2 | 1,182,000 |

| Pentium D Smithfield (64-bit, large cache) | 228,000,000 | 2005 | Intel | 90 nm | 206 mm2 | 1,107,000 |

| Xenon (64-bit, 128-bit SIMD, large cache) | 165,000,000 | 2005 | IBM | 90 nm | ? | ? |

| Cell (32-bit, cache) | 250,000,000[76] | 2005 | Sony, IBM, Toshiba | 90 nm | 221 mm2 | 1,131,000 |

| Pentium 4 Cedar Mill (32-bit, large cache) | 184,000,000 | 2006 | Intel | 65 nm | 90 mm2 | 2,044,000 |

| Pentium D Presler (64-bit, large cache) | 362,000,000 [77] | 2006 | Intel | 65 nm | 162 mm2 | 2,235,000 |

| Core 2 Duo Conroe (dual-core 64-bit, large caches) | 291,000,000 | 2006 | Intel | 65 nm | 143 mm2 | 2,035,000 |

| Dual-core Itanium 2 (64-bit, SIMD, large caches) | 1,700,000,000[78] | 2006 | Intel | 90 nm | 596 mm2 | 2,852,000 |

| AMD K10 quad-core 2M L3 (64-bit, large caches) | 463,000,000[79] | 2007 | AMD | 65 nm | 283 mm2 | 1,636,000 |

| ARM Cortex-A9 (32-bit, (optional) SIMD, caches) | 26,000,000[80] | 2007 | ARM | 45 nm | 31 mm2 | 839,000 |

| Core 2 Duo Wolfdale (dual-core 64-bit, SIMD, caches) | 411,000,000 | 2007 | Intel | 45 nm | 107 mm2 | 3,841,000 |

| POWER6 (64-bit, large caches) | 789,000,000 | 2007 | IBM | 65 nm | 341 mm2 | 2,314,000 |

| Core 2 Duo Allendale (dual-core 64-bit, SIMD, large caches) | 169,000,000 | 2007 | Intel | 65 nm | 111 mm2 | 1,523,000 |

| Uniphier | 250,000,000[81] | 2007 | Matsushita | 45 nm | ? | ? |

| SPARC64 VI (64-bit, SIMD, large caches) | 540,000,000 | 2007[82] | Fujitsu | 90 nm | 421 mm2 | 1,283,000 |

| Core 2 Duo Wolfdale 3M (dual-core 64-bit, SIMD, large caches) | 230,000,000 | 2008 | Intel | 45 nm | 83 mm2 | 2,771,000 |

| Core i7 (quad-core 64-bit, SIMD, large caches) | 731,000,000 | 2008 | Intel | 45 nm | 263 mm2 | 2,779,000 |

| AMD K10 quad-core 6M L3 (64-bit, SIMD, large caches) | 758,000,000[79] | 2008 | AMD | 45 nm | 258 mm2 | 2,938,000 |

| Atom (32-bit, large cache) | 47,000,000 | 2008 | Intel | 45 nm | 24 mm2 | 1,958,000 |

| SPARC64 VII (64-bit, SIMD, large caches) | 600,000,000 | 2008[83] | Fujitsu | 65 nm | 445 mm2 | 1,348,000 |

| Six-core Xeon 7400 (64-bit, SIMD, large caches) | 1,900,000,000 | 2008 | Intel | 45 nm | 503 mm2 | 3,777,000 |

| Six-core Opteron 2400 (64-bit, SIMD, large caches) | 904,000,000 | 2009 | AMD | 45 nm | 346 mm2 | 2,613,000 |

| SPARC64 VIIIfx (64-bit, SIMD, large caches) | 760,000,000[84] | 2009 | Fujitsu | 45 nm | 513 mm2 | 1,481,000 |

| Atom (Pineview) 64-bit, 1-core, 512 kB L2 cache | 123,000,000[85] | 2010 | Intel | 45 nm | 66 mm2 | 1,864,000 |

| Atom (Pineview) 64-bit, 2-core, 1 MB L2 cache | 176,000,000[86] | 2010 | Intel | 45 nm | 87 mm2 | 2,023,000 |

| SPARC T3 (16-core 64-bit, SIMD, large caches) | 1,000,000,000[87] | 2010 | Sun/Oracle | 40 nm | 377 mm2 | 2,653,000 |

| Six-core Core i7 (Gulftown) | 1,170,000,000 | 2010 | Intel | 32 nm | 240 mm2 | 4,875,000 |

| POWER7 32M L3 (8-core 64-bit, SIMD, large caches) | 1,200,000,000 | 2010 | IBM | 45 nm | 567 mm2 | 2,116,000 |

| Quad-core z196[88] (64-bit, very large caches) | 1,400,000,000 | 2010 | IBM | 45 nm | 512 mm2 | 2,734,000 |

| Quad-core Itanium Tukwila (64-bit, SIMD, large caches) | 2,000,000,000[89] | 2010 | Intel | 65 nm | 699 mm2 | 2,861,000 |

| Xeon Nehalem-EX (8-core 64-bit, SIMD, large caches) | 2,300,000,000[90] | 2010 | Intel | 45 nm | 684 mm2 | 3,363,000 |

| SPARC64 IXfx (64-bit, SIMD, large caches) | 1,870,000,000[91] | 2011 | Fujitsu | 40 nm | 484 mm2 | 3,864,000 |

| Quad-core + GPU Core i7 (64-bit, SIMD, large caches) | 1,160,000,000 | 2011 | Intel | 32 nm | 216 mm2 | 5,370,000 |

| Six-core Core i7/8-core Xeon E5 (Sandy Bridge-E/EP) (64-bit, SIMD, large caches) | 2,270,000,000[92] | 2011 | Intel | 32 nm | 434 mm2 | 5,230,000 |

| Xeon Westmere-EX (10-core 64-bit, SIMD, large caches) | 2,600,000,000 | 2011 | Intel | 32 nm | 512 mm2 | 5,078,000 |

| Atom "Medfield" (64-bit) | 432,000,000[93] | 2012 | Intel | 32 nm | 64 mm2 | 6,750,000 |

| SPARC64 X (64-bit, SIMD, caches) | 2,990,000,000[94] | 2012 | Fujitsu | 28 nm | 600 mm2 | 4,983,000 |

| AMD Bulldozer (8-core 64-bit, SIMD, caches) | 1,200,000,000[95] | 2012 | AMD | 32 nm | 315 mm2 | 3,810,000 |

| Quad-core + GPU AMD Trinity (64-bit, SIMD, caches) | 1,303,000,000 | 2012 | AMD | 32 nm | 246 mm2 | 5,297,000 |

| Quad-core + GPU Core i7 Ivy Bridge (64-bit, SIMD, caches) | 1,400,000,000 | 2012 | Intel | 22 nm | 160 mm2 | 8,750,000 |

| POWER7+ (8-core 64-bit, SIMD, 80 MB L3 cache) | 2,100,000,000 | 2012 | IBM | 32 nm | 567 mm2 | 3,704,000 |

| Six-core zEC12 (64-bit, SIMD, large caches) | 2,750,000,000 | 2012 | IBM | 32 nm | 597 mm2 | 4,606,000 |

| Itanium Poulson (8-core 64-bit, SIMD, caches) | 3,100,000,000 | 2012 | Intel | 32 nm | 544 mm2 | 5,699,000 |

| Xeon Phi (61-core 32-bit, 512-bit SIMD, caches) | 5,000,000,000[96] | 2012 | Intel | 22 nm | 720 mm2 | 6,944,000 |

| Apple A7 (dual-core 64/32-bit ARM64, "mobile SoC", SIMD, caches) | 1,000,000,000 | 2013 | Apple | 28 nm | 102 mm2 | 9,804,000 |

| Six-core Core i7 Ivy Bridge E (64-bit, SIMD, caches) | 1,860,000,000 | 2013 | Intel | 22 nm | 256 mm2 | 7,266,000 |

| POWER8 (12-core 64-bit, SIMD, caches) | 4,200,000,000 | 2013 | IBM | 22 nm | 650 mm2 | 6,462,000 |

| Xbox One main SoC (64-bit, SIMD, caches) | 5,000,000,000 | 2013 | Microsoft, AMD | 28 nm | 363 mm2 | 13,770,000 |

| Quad-core + GPU Core i7 Haswell (64-bit, SIMD, caches) | 1,400,000,000[97] | 2014 | Intel | 22 nm | 177 mm2 | 7,910,000 |

| Apple A8 (dual-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 2,000,000,000 | 2014 | Apple | 20 nm | 89 mm2 | 22,470,000 |

| Core i7 Haswell-E (8-core 64-bit, SIMD, caches) | 2,600,000,000[98] | 2014 | Intel | 22 nm | 355 mm2 | 7,324,000 |

| Apple A8X (tri-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 3,000,000,000[99] | 2014 | Apple | 20 nm | 128 mm2 | 23,440,000 |

| Xeon Ivy Bridge-EX (15-core 64-bit, SIMD, caches) | 4,310,000,000[100] | 2014 | Intel | 22 nm | 541 mm2 | 7,967,000 |

| Xeon Haswell-E5 (18-core 64-bit, SIMD, caches) | 5,560,000,000[101] | 2014 | Intel | 22 nm | 661 mm2 | 8,411,000 |

| Quad-core + GPU GT2 Core i7 Skylake K (64-bit, SIMD, caches) | 1,750,000,000 | 2015 | Intel | 14 nm | 122 mm2 | 14,340,000 |

| Dual-core + GPU Iris Core i7 Broadwell-U (64-bit, SIMD, caches) | 1,900,000,000[102] | 2015 | Intel | 14 nm | 133 mm2 | 14,290,000 |

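| Apple A9 (dual-core 64/32-bit ARM64 "mobile SoC", SIMD, caches), Samsung-fabricated | 2,000,000,000+ | 2015 | Apple | 14 nm (Samsung) | 96 mm2 (Samsung) | 20,800,000+ |

| Apple A9 (dual-core 64/32-bit ARM64 "mobile SoC", SIMD, caches), TSMC-fabricated | 2,000,000,000+ | 2015 | Apple | 16 nm (TSMC) | 104.5 mm2 (TSMC) | 19,100,000+ |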

| Apple A9X (dual core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 3,000,000,000+ | 2015 | Apple | 16 nm | 143.9 mm2 | 20,800,000+ |

| IBM z13 (64-bit, caches) | 3,990,000,000 | 2015 | IBM | 22 nm | 678 mm2 | 5,885,000 |

| IBM z13 Storage Controller | 7,100,000,000 | 2015 | IBM | 22 nm | 678 mm2 | 10,472,000 |

| SPARC M7 (32-core 64-bit, SIMD, caches) | 10,000,000,000[103] | 2015 | Oracle | 20 nm | ? | ? |

| Core i7 Broadwell-E (10-core 64-bit, SIMD, caches) | 3,200,000,000[104] | 2016 | Intel | 14 nm | 246 mm2[105] | 13,010,000 |

| Apple A10 Fusion (quad-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 3,300,000,000 | 2016 | Apple | 16 nm | 125 mm2 | 26,400,000 |

| HiSilicon Kirin 960 (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 4,000,000,000[106] | 2016 | Huawei | 16 nm | 110.00 mm2 | 36,360,000 |

| Xeon Broadwell-E5 (22-core 64-bit, SIMD, caches) | 7,200,000,000[107] | 2016 | Intel | 14 nm | 456 mm2 | 15,790,000 |

| Xeon Phi (72-core 64-bit, 512-bit SIMD, caches) | 8,000,000,000 | 2016 | Intel | 14 nm | 683 mm2 | 11,710,000 |

| Zip CPU (32-bit, for FPGAs) | 1,286 6-LUTs[108] | 2016 | Gisselquist Technology | ? | ? | ? |

| Qualcomm Snapdragon 835 (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 3,000,000,000[109][110] | 2016 | Qualcomm | 10 nm | 72.3 mm2 | 41,490,000 |

| Apple A11 Bionic (hexa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 4,300,000,000 | 2017 | Apple | 10 nm | 89.23 mm2 | 48,190,000 |

| AMD Zen CCX (core complex unit: 4 cores, 8 MB L3 cache) | 1,400,000,000[111] | 2017 | AMD | 14 nm (GF 14LPP) | 44 mm2 | 31,800,000 |

| AMD Zeppelin SoC Ryzen (64-bit, SIMD, caches) | 4,800,000,000[112] | 2017 | AMD | 14 nm | 192 mm2 | 25,000,000 |

| AMD Ryzen 5 1600 Ryzen (64-bit, SIMD, caches) | 4,800,000,000[113] | 2017 | AMD | 14 nm | 213 mm2 | 22,530,000 |

| IBM z14 (64-bit, SIMD, caches) | 6,100,000,000 | 2017 | IBM | 14 nm | 696 mm2 | 8,764,000 |

| IBM z14 Storage Controller (64-bit) | 9,700,000,000 | 2017 | IBM | 14 nm | 696 mm2 | 13,940,000 |

| HiSilicon Kirin 970 (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 5,500,000,000[114] | 2017 | Huawei | 10 nm | 96.72 mm2 | 56,900,000 |

| Xbox One X (Project Scorpio) main SoC (64-bit, SIMD, caches) | 7,000,000,000[115] | 2017 | Microsoft, AMD | 16 nm | 360 mm2[115] | 19,440,000 |

| Xeon Platinum 8180 (28-core 64-bit, SIMD, caches) | 8,000,000,000[116] | 2017 | Intel | 14 nm | ? | ? |

| Xeon (unspecified) | 7,100,000,000[117] | 2017 | Intel | 14 nm | 672 mm2 | 10,570,000 |

| POWER9 (64-bit, SIMD, caches) | 8,000,000,000 | 2017 | IBM | 14 nm | 695 mm2 | 11,500,000 |

| Freedom U500 Base Platform Chip (E51, 4×U54) RISC-V (64-bit, caches) | 250,000,000[118] | 2017 | SiFive | 28 nm | ~30 mm2 | 8,330,000 |

| SPARC64 XII (12-core 64-bit, SIMD, caches) | 5,450,000,000[119] | 2017 | Fujitsu | 20 nm | 795 mm2 | 6,850,000 |

| Apple A10X Fusion (hexa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 4,300,000,000[120] | 2017 | Apple | 10 nm | 96.40 mm2 | 44,600,000 |

| Centriq 2400 (64/32-bit, SIMD, caches) | 18,000,000,000[121] | 2017 | Qualcomm | 10 nm | 398 mm2 | 45,200,000 |

| AMD Epyc (32-core 64-bit, SIMD, caches) | 19,200,000,000 | 2017 | AMD | 14 nm | 768 mm2 | 25,000,000 |

| Qualcomm Snapdragon 845 (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 5,300,000,000[122] | 2017 | Qualcomm | 10 nm | 94 mm2 | 56,400,000 |

| Qualcomm Snapdragon 850 (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 5,300,000,000[123] | 2017 | Qualcomm | 10 nm | 94 mm2 | 56,400,000 |

| HiSilicon Kirin 710 (octa-core ARM64 "mobile SoC", SIMD, caches) | 5,500,000,000[124] | 2018 | Huawei | 12 nm | ? | ? |

| Apple A12 Bionic (hexa-core ARM64 "mobile SoC", SIMD, caches) | 6,900,000,000[125][126] | 2018 | Apple | 7 nm | 83.27 mm2 | 82,900,000 |

| HiSilicon Kirin 980 (octa-core ARM64 "mobile SoC", SIMD, caches) | 6,900,000,000[127] | 2018 | Huawei | 7 nm | 74.13 mm2 | 93,100,000 |

| Qualcomm Snapdragon 8cx / SCX8180 (octa-core ARM64 "mobile SoC", SIMD, caches) | 8,500,000,000[128] | 2018 | Qualcomm | 7 nm | 112 mm2 | 75,900,000 |

| Apple A12X Bionic (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 10,000,000,000[129] | 2018 | Apple | 7 nm | 122 mm2 | 82,000,000 |

| Fujitsu A64FX (64/32-bit, SIMD, caches) | 8,786,000,000[130] | 2018[131] | Fujitsu | 7 nm | ? | ? |

| Tegra Xavier SoC (64/32-bit) | 9,000,000,000[132] | 2018 | Nvidia | 12 nm | 350 mm2 | 25,700,000 |

| Qualcomm Snapdragon 855 (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 6,700,000,000[133] | 2018 | Qualcomm | 7 nm | 73 mm2 | 91,800,000 |

| AMD Zen 2 core (0.5 MB L2 + 4 MB L3 cache) | 475,000,000[134] | 2019 | AMD | 7 nm | 7.83 mm2 | 60,664,000 |

| AMD Zen 2 CCX (core complex: 4 cores, 16 MB L3 cache) | 1,900,000,000[134] | 2019 | AMD | 7 nm | 31.32 mm2 | 60,664,000 |

| AMD Zen 2 CCD (core complex die: 8 cores, 32 MB L3 cache) | 3,800,000,000[134] | 2019 | AMD | 7 nm | 74 mm2 | 51,350,000 |

| AMD Zen 2 client I/O die | 2,090,000,000[134] | 2019 | AMD | 12 nm | 125 mm2 | 16,720,000 |

| AMD Zen 2 server I/O die | 8,340,000,000[134] | 2019 | AMD | 12 nm | 416 mm2 | 20,050,000 |

| AMD Zen 2 Renoir die | 9,800,000,000[134] | 2019 | AMD | 7 nm | 156 mm2 | 62,820,000 |

| AMD Ryzen 7 3700X (64-bit, SIMD, caches, I/O die) | 5,990,000,000[135][g] | 2019 | AMD | 7 & 12 nm (TSMC) | 199 (74+125) mm2 | 30,100,000 |

| HiSilicon Kirin 990 4G | 8,000,000,000[136] | 2019 | Huawei | 7 nm | 90.00 mm2 | 89,000,000 |

| Apple A13 (hexa-core 64-bit ARM64 "mobile SoC", SIMD, caches) | 8,500,000,000[137][138] | 2019 | Apple | 7 nm | 98.48 mm2 | 86,300,000 |

| IBM z15 CP chip (12 cores, 256 MB L3 cache) | 9,200,000,000[139] | 2019 | IBM | 14 nm | 696 mm2 | 13,220,000 |

| IBM z15 SC chip (960 MB L4 cache) | 12,200,000,000 | 2019 | IBM | 14 nm | 696 mm2 | 17,530,000 |

| AMD Ryzen 9 3900X (64-bit, SIMD, caches, I/O die) | 9,890,000,000[140][141] | 2019 | AMD | 7 & 12 nm (TSMC) | 273 mm2 | 36,230,000 |

| HiSilicon Kirin 990 5G | 10,300,000,000[142] | 2019 | Huawei | 7 nm | 113.31 mm2 | 90,900,000 |

| AWS Graviton2 (64-bit, 64-core ARM-based, SIMD, caches)[143][144] | 30,000,000,000 | 2019 | Amazon | 7 nm | ? | ? |

| AMD Epyc Rome (64-bit, SIMD, caches) | 39,540,000,000[140][141] | 2019 | AMD | 7 & 12 nm (TSMC) | 1,008 mm2 | 39,226,000 |

| Qualcomm Snapdragon 865 (octa-core 64/32-bit ARM64 "mobile SoC", SIMD, caches) | 10,300,000,000[145] | 2019 | Qualcomm | 7 nm | 83.54 mm2[146] | 123,300,000 |

| TI Jacinto TDA4VM (ARM A72, DSP, SRAM) | 3,500,000,000[147] | 2020 | Texas Instruments | 16 nm | ? | ? |

| Apple A14 Bionic (hexa-core 64-bit ARM64 "mobile SoC", SIMD, caches) | 11,800,000,000[148] | 2020 | Apple | 5 nm | 88 mm2 | 134,100,000 |

| Apple M1 (octa-core 64-bit ARM64 SoC, SIMD, caches) | 16,000,000,000[149] | 2020 | Apple | 5 nm | 119 mm2 | 134,500,000 |

| HiSilicon Kirin 9000 | 15,300,000,000[150][151] | 2020 | Huawei | 5 nm | 114 mm2 | 134,200,000 |

| AMD Zen 3 CCX (core complex unit: 8 cores, 32 MB L3 cache) | 4,080,000,000[152] | 2020 | AMD | 7 nm | 68 mm2 | 60,000,000 |

| AMD Zen 3 CCD (core complex die) | 4,150,000,000[152] | 2020 | AMD | 7 nm | 81 mm2 | 51,230,000 |

| Core 11th gen Rocket Lake (8-core 64-bit, SIMD, large caches) | 6,000,000,000+[153] | 2021 | Intel | 14 nm+++ | 276 mm2[154] | 37,500,000 or 21,800,000+[155] |

| AMD Ryzen 7 5800H (64-bit, SIMD, caches, I/O and GPU) | 10,700,000,000[156] | 2021 | AMD | 7 nm | 180 mm2 | 59,440,000 |

| AMD Epyc 7763 (Milan) (64-core, 64-bit) | ? | 2021 | AMD | 7 & 12 nm (TSMC) | 1,064 mm2 (8×81+416)[157] | ? |

| Apple A15 | 15,000,000,000[158][159] | 2021 | Apple | 5 nm | 107.68 mm2 | 139,300,000 |

| Apple M1 Pro (10-core, 64-bit) | 33,700,000,000[160] | 2021 | Apple | 5 nm | 245 mm2[161] | 137,600,000 |

| Apple M1 Max (10-core, 64-bit) | 57,000,000,000[162][160] | 2021 | Apple | 5 nm | 420.2 mm2[163] | 135,600,000 |

| Power10 dual-chip module (30 SMT8 cores or 60 SMT4 cores) | 36,000,000,000[164] | 2021 | IBM | 7 nm | 1,204 mm2 | 29,900,000 |

| Dimensity 9000 (ARM64 SoC) | 15,300,000,000[165][166] | 2021 | Mediatek | 4 nm (TSMC N4) | ? | ? |

| Apple A16 (ARM64 SoC) | 16,000,000,000[167][168][169] | 2022 | Apple | 4 nm | ? | ? |

| Apple M1 Ultra (dual-chip module, 2×10 cores) | 114,000,000,000[170][171] | 2022 | Apple | 5 nm | 840.5 mm2[163] | 135,600,000 |

| AMD Epyc 7773X (Milan-X) (multi-chip module, 64 cores, 768 MB L3 cache) | 26,000,000,000 + Milan[172] | 2022 | AMD | 7 & 12 nm (TSMC) | 1,352 mm2 (Milan + 8×36)[172] | ? |

| IBM Telum dual-chip module (2×8 cores, 2×256 MB cache) | 45,000,000,000[173][174] | 2022 | IBM | 7 nm (Samsung) | 1,060 mm2 | 42,450,000 |

| Apple M2 (octa-core 64-bit ARM64 SoC, SIMD, caches) | 20,000,000,000[175] | 2022 | Apple | 5 nm | ? | ? |

| Dimensity 9200 (ARM64 SoC) | 17,000,000,000[176][177][178] | 2022 | Mediatek | 4 nm (TSMC N4P) | ? | ? |

| Qualcomm Snapdragon 8 Gen 2 (octa-core ARM64 "mobile SoC", SIMD, caches) | 16,000,000,000 | 2022 | Qualcomm | 4 nm | 268 mm2 | 59,701,492 |

| AMD EPYC Genoa (4th gen/9004 series) 13-chip module (up to 96 cores and 384 MB (L3) + 96 MB (L2) cache)[179] | 90,000,000,000[180][181] | 2022 | AMD | 5 nm (CCD), 6 nm (IOD) | 1,263.34 mm2 (12×72.225 CCD + 396.64 IOD)[182][183] | 71,240,000 |

| HiSilicon Kirin 9000s | 9,510,000,000[184] | 2023 | Huawei | 7 nm | 107 mm2 | 107,690,000 |

| Apple M4 (deca-core 64-bit ARM64 SoC, SIMD, caches) | 28,000,000,000[185] | 2024 | Apple | 3 nm | ? | ? |

| Apple M3 (octa-core 64-bit ARM64 SoC, SIMD, caches) | 25,000,000,000[186] | 2023 | Apple | 3 nm | ? | ? |

| Apple M3 Pro (dodeca-core 64-bit ARM64 SoC, SIMD, caches) | 37,000,000,000[186] | 2023 | Apple | 3 nm | ? | ? |

| Apple M3 Max (16-core 64-bit ARM64 SoC, SIMD, caches) | 92,000,000,000[186] | 2023 | Apple | 3 nm | ? | ? |

| Apple A17 | 19,000,000,000[187] | 2023 | Apple | 3 nm | 103.8 mm2 | 183,044,315 |

| Sapphire Rapids quad-chip module (up to 60 cores and 112.5 MB of cache)[188] | 44,000,000,000–48,000,000,000[189] | 2023 | Intel | 10 nm ESF (Intel 7) | 1,600 mm2 | 27,500,000–30,000,000 |

| Apple M2 Pro (12-core 64-bit ARM64 SoC, SIMD, caches) | 40,000,000,000[190] | 2023 | Apple | 5 nm | ? | ? |

| Apple M2 Max (12-core 64-bit ARM64 SoC, SIMD, caches) | 67,000,000,000[190] | 2023 | Apple | 5 nm | ? | ? |

| Apple M2 Ultra (two M2 Max dies) | 134,000,000,000[191] | 2023 | Apple | 5 nm | ? | ? |

| AMD Epyc Bergamo (4th gen/97X4 series) 9-chip module (up to 128 cores and 256 MB (L3) + 128 MB (L2) cache) | 82,000,000,000[192] | 2023 | AMD | 5 nm (CCD), 6 nm (IOD) | ? | ? |

| AMD Instinct MI300A (multi-chip module, 24 cores, 128 GB GPU memory + 256 MB (LLC/L3) cache) | 146,000,000,000[193][194] | 2023 | AMD | 5 nm (CCD, GCD), 6 nm (IOD) | 1,017 mm2 | 144,000,000 |

| RV32-WUJI: 3-atom-thick molybdenum disulfide on sapphire; RISC-V architecture | 5931[195] | 2025 | ? | 3000 nm | ? | ? |

| Processor | Transistor count | Year | Designer | Process (nm) | Area (mm2) | Transistor density (tr./mm2) |

GPUs

A graphics processing unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the building of images in a frame buffer intended for output to a display.

The designer refers to the technology company that designs the logic of the integrated circuit chip (such as Nvidia and AMD). The manufacturer ("Fab.") refers to the semiconductor company that fabricates the chip using its semiconductor manufacturing process at a foundry (such as TSMC and Samsung Semiconductor). The transistor count in a chip is dependent on a manufacturer's fabrication process, with smaller semiconductor nodes typically enabling higher transistor density and thus higher transistor counts.
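Transistor density, as used in the tables here, is the transistor count divided by the die area. The following minimal Python sketch illustrates the calculation. The helper names `transistor_density` and `round_sig` are illustrative, and rounding to four significant figures is an assumption about how the density column is presented, not a documented rule. The inputs are the GA100 and GH100 rows of the GPU table.

```python
from math import floor, log10


def transistor_density(transistor_count: int, area_mm2: float) -> float:
    """Transistors per square millimetre (count divided by die area)."""
    if area_mm2 <= 0:
        raise ValueError("area_mm2 must be positive")
    return transistor_count / area_mm2


def round_sig(value: float, digits: int = 4) -> int:
    """Round a positive value to `digits` significant figures (assumed convention)."""
    exponent = floor(log10(value))
    step = 10 ** max(exponent - digits + 1, 0)
    return round(value / step) * step


# Figures taken from the GPU table: (transistor count, die area in mm2).
ga100 = transistor_density(54_200_000_000, 826)
gh100 = transistor_density(80_000_000_000, 814)

print(round_sig(ga100))  # 65620000
print(round_sig(gh100))  # 98280000
```

Because density is a ratio, a larger die raises the transistor count without raising the density, which is why the two columns are listed separately.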

The random-access memory (RAM) that comes with GPUs (such as VRAM, SGRAM or HBM) greatly increases the total transistor count, with the memory typically accounting for the majority of transistors in a graphics card. For example, Nvidia's Tesla P100 has 15 billion FinFETs (16 nm) in the GPU in addition to 16 GB of HBM2 memory, totaling about 150 billion MOSFETs on the graphics card.[196] The following table does not include the memory. For memory transistor counts, see the Memory section below.
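The Tesla P100 estimate above can be reproduced with a short calculation. This Python sketch assumes one transistor per DRAM bit (a 1T1C cell), treats 16 GB as 16 × 2^30 bytes, and ignores peripheral circuitry in the memory dies. The function name `dram_cell_transistors` is illustrative.

```python
BITS_PER_BYTE = 8


def dram_cell_transistors(capacity_bytes: int, transistors_per_bit: int = 1) -> int:
    """Estimate the number of cell transistors in a DRAM of the given capacity."""
    return capacity_bytes * BITS_PER_BYTE * transistors_per_bit


gpu_transistors = 15_000_000_000                         # GPU die, from the text
memory_transistors = dram_cell_transistors(16 * 2**30)   # 16 GB of HBM2
card_total = gpu_transistors + memory_transistors

print(f"{card_total:,}")  # 152,438,953,472, i.e. about 150 billion
```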

| Processor | Transistor count | Year | Designer(s) | Fab(s) | Process | Area | Transistor density (tr./mm2) | Ref |

|---|---|---|---|---|---|---|---|---|

| μPD7220 GDC | 40,000 | 1982 | NEC | NEC | 5,000 nm | ? | ? | [197] |

| ARTC HD63484 | 60,000 | 1984 | Hitachi | Hitachi | ? | ? | ? | [198] |

| CBM Agnus | 21,000 | 1985 | Commodore | CSG | 5,000 nm | ? | ? | [199][200] |

| YM7101 VDP | 100,000 | 1988 | Yamaha, Sega | Yamaha | ? | ? | ? | [201] |

| Tom & Jerry | 750,000 | 1993 | Flare | IBM | ? | ? | ? | [201] |

| VDP1 | 1,000,000 | 1994 | Sega | Hitachi | 500 nm | ? | ? | [202] |

| Sony GPU | 1,000,000 | 1994 | Toshiba | LSI | 500 nm | ? | ? | [203][204][205] |

| NV1 | 1,000,000 | 1995 | Nvidia, Sega | SGS | 500 nm | 90 mm2 | 11,000 | |

| Reality Coprocessor | 2,600,000 | 1996 | SGI | NEC | 350 nm | 81 mm2 | 32,100 | [206] |

| PowerVR | 1,200,000 | 1996 | VideoLogic | NEC | 350 nm | ? | ? | [207] |

| Voodoo Graphics | 1,000,000 | 1996 | 3dfx | TSMC | 500 nm | ? | ? | [208][209] |

| Voodoo Rush | 1,000,000 | 1997 | 3dfx | TSMC | 500 nm | ? | ? | [208][209] |

| NV3 | 3,500,000 | 1997 | Nvidia | SGS, TSMC | 350 nm | 90 mm2 | 38,900 | [210][211] |

| i740 | 3,500,000 | 1998 | Intel, Real3D | Real3D | 350 nm | ? | ? | [208][209] |

| Voodoo 2 | 4,000,000 | 1998 | 3dfx | TSMC | 350 nm | ? | ? | |

| Voodoo Rush | 4,000,000 | 1998 | 3dfx | TSMC | 350 nm | ? | ? | |

| NV4 | 7,000,000 | 1998 | Nvidia | TSMC | 350 nm | 90 mm2 | 78,000 | [208][211] |

| PowerVR2 CLX2 | 10,000,000 | 1998 | VideoLogic | NEC | 250 nm | 116 mm2 | 86,200 | [212][213][214][215] |

| PowerVR2 PMX1 | 6,000,000 | 1999 | VideoLogic | NEC | 250 nm | ? | ? | [216] |

| Rage 128 | 8,000,000 | 1999 | ATI | TSMC, UMC | 250 nm | 70 mm2 | 114,000 | [209] |

| Voodoo 3 | 8,100,000 | 1999 | 3dfx | TSMC | 250 nm | ? | ? | [217] |

| Graphics Synthesizer | 43,000,000 | 1999 | Sony, Toshiba | Sony, Toshiba | 180 nm | 279 mm2 | 154,000 | [65][218][64][63] |

| NV5 | 15,000,000 | 1999 | Nvidia | TSMC | 250 nm | 90 mm2 | 167,000 | [209] |

| NV10 | 17,000,000 | 1999 | Nvidia | TSMC | 220 nm | 111 mm2 | 153,000 | [219][211] |

| NV11 | 20,000,000 | 2000 | Nvidia | TSMC | 180 nm | 65 mm2 | 308,000 | [209] |

| NV15 | 25,000,000 | 2000 | Nvidia | TSMC | 180 nm | 81 mm2 | 309,000 | [209] |

| Voodoo 4 | 14,000,000 | 2000 | 3dfx | TSMC | 220 nm | ? | ? | [208][209] |

| Voodoo 5 | 28,000,000 | 2000 | 3dfx | TSMC | 220 nm | ? | ? | [208][209] |

| R100 | 30,000,000 | 2000 | ATI | TSMC | 180 nm | 97 mm2 | 309,000 | [209] |

| Flipper | 51,000,000 | 2000 | ArtX | NEC | 180 nm | 106 mm2 | 481,000 | [65][220] |

| PowerVR3 KYRO | 14,000,000 | 2001 | Imagination | ST | 250 nm | ? | ? | [208][209] |

| PowerVR3 KYRO II | 15,000,000 | 2001 | Imagination | ST | 180 nm | |||

| NV2A | 60,000,000 | 2001 | Nvidia | TSMC | 150 nm | ? | ? | [208][221] |

| NV20 | 57,000,000 | 2001 | Nvidia | TSMC | 150 nm | 128 mm2 | 445,000 | [209] |

| NV25 | 63,000,000 | 2002 | Nvidia | TSMC | 150 nm | 142 mm2 | 444,000 | |

| NV28 | 36,000,000 | 2002 | Nvidia | TSMC | 150 nm | 101 mm2 | 356,000 | |

| NV17/18 | 29,000,000 | 2002 | Nvidia | TSMC | 150 nm | 65 mm2 | 446,000 | |

| R200 | 60,000,000 | 2001 | ATI | TSMC | 150 nm | 68 mm2 | 882,000 | |

| R300 | 107,000,000 | 2002 | ATI | TSMC | 150 nm | 218 mm2 | 490,800 | |

| R360 | 117,000,000 | 2003 | ATI | TSMC | 150 nm | 218 mm2 | 536,700 | |

| NV34 | 45,000,000 | 2003 | Nvidia | TSMC | 150 nm | 124 mm2 | 363,000 | |

| NV34b | 45,000,000 | 2004 | Nvidia | TSMC | 140 nm | 91 mm2 | 495,000 | |

| NV30 | 125,000,000 | 2003 | Nvidia | TSMC | 130 nm | 199 mm2 | 628,000 | |

| NV31 | 80,000,000 | 2003 | Nvidia | TSMC | 130 nm | 121 mm2 | 661,000 | |

| NV35/38 | 135,000,000 | 2003 | Nvidia | TSMC | 130 nm | 207 mm2 | 652,000 | |

| NV36 | 82,000,000 | 2003 | Nvidia | IBM | 130 nm | 133 mm2 | 617,000 | |

| R480 | 160,000,000 | 2004 | ATI | TSMC | 130 nm | 297 mm2 | 538,700 | |

| NV40 | 222,000,000 | 2004 | Nvidia | IBM | 130 nm | 305 mm2 | 727,900 | |

| NV44 | 75,000,000 | 2004 | Nvidia | IBM | 130 nm | 110 mm2 | 681,800 | |

| NV41 | 222,000,000 | 2005 | Nvidia | TSMC | 110 nm | 225 mm2 | 986,700 | [209] |

| NV42 | 198,000,000 | 2005 | Nvidia | TSMC | 110 nm | 222 mm2 | 891,900 | |

| NV43 | 146,000,000 | 2005 | Nvidia | TSMC | 110 nm | 154 mm2 | 948,100 | |

| G70 | 303,000,000 | 2005 | Nvidia | TSMC, Chartered | 110 nm | 333 mm2 | 909,900 | |

| Xenos | 232,000,000 | 2005 | ATI | TSMC | 90 nm | 182 mm2 | 1,275,000 | [222][223] |

| RSX Reality Synthesizer | 300,000,000 | 2005 | Nvidia, Sony | Sony | 90 nm | 186 mm2 | 1,613,000 | [224][225] |

| R520 | 321,000,000 | 2005 | ATI | TSMC | 90 nm | 288 mm2 | 1,115,000 | [209] |

| RV530 | 157,000,000 | 2005 | ATI | TSMC | 90 nm | 150 mm2 | 1,047,000 | |

| RV515 | 107,000,000 | 2005 | ATI | TSMC | 90 nm | 100 mm2 | 1,070,000 | |

| R580 | 384,000,000 | 2006 | ATI | TSMC | 90 nm | 352 mm2 | 1,091,000 | |

| G71 | 278,000,000 | 2006 | Nvidia | TSMC | 90 nm | 196 mm2 | 1,418,000 | |

| G72 | 112,000,000 | 2006 | Nvidia | TSMC | 90 nm | 81 mm2 | 1,383,000 | |

| G73 | 177,000,000 | 2006 | Nvidia | TSMC | 90 nm | 125 mm2 | 1,416,000 | |

| G80 | 681,000,000 | 2006 | Nvidia | TSMC | 90 nm | 480 mm2 | 1,419,000 | |

| G86 Tesla | 210,000,000 | 2007 | Nvidia | TSMC | 80 nm | 127 mm2 | 1,654,000 | |

| G84 Tesla | 289,000,000 | 2007 | Nvidia | TSMC | 80 nm | 169 mm2 | 1,710,000 | |

| RV560 | 330,000,000 | 2006 | ATI | TSMC | 80 nm | 230 mm2 | 1,435,000 | |

| R600 | 700,000,000 | 2007 | ATI | TSMC | 80 nm | 420 mm2 | 1,667,000 | |

| RV610 | 180,000,000 | 2007 | ATI | TSMC | 65 nm | 85 mm2 | 2,118,000 | [209] |

| RV630 | 390,000,000 | 2007 | ATI | TSMC | 65 nm | 153 mm2 | 2,549,000 | |

| G92 | 754,000,000 | 2007 | Nvidia | TSMC, UMC | 65 nm | 324 mm2 | 2,327,000 | |

| G94 Tesla | 505,000,000 | 2008 | Nvidia | TSMC | 65 nm | 240 mm2 | 2,104,000 | |

| G96 Tesla | 314,000,000 | 2008 | Nvidia | TSMC | 65 nm | 144 mm2 | 2,181,000 | |

| G98 Tesla | 210,000,000 | 2008 | Nvidia | TSMC | 65 nm | 86 mm2 | 2,442,000 | |

| GT200[226] | 1,400,000,000 | 2008 | Nvidia | TSMC | 65 nm | 576 mm2 | 2,431,000 | |

| RV620 | 181,000,000 | 2008 | ATI | TSMC | 55 nm | 67 mm2 | 2,701,000 | [209] |

| RV635 | 378,000,000 | 2008 | ATI | TSMC | 55 nm | 135 mm2 | 2,800,000 | |

| RV710 | 242,000,000 | 2008 | ATI | TSMC | 55 nm | 73 mm2 | 3,315,000 | |

| RV730 | 514,000,000 | 2008 | ATI | TSMC | 55 nm | 146 mm2 | 3,521,000 | |

| RV670 | 666,000,000 | 2008 | ATI | TSMC | 55 nm | 192 mm2 | 3,469,000 | |

| RV770 | 956,000,000 | 2008 | ATI | TSMC | 55 nm | 256 mm2 | 3,734,000 | |

| RV790 | 959,000,000 | 2008 | ATI | TSMC | 55 nm | 282 mm2 | 3,401,000 | [227][209] |

| G92b Tesla | 754,000,000 | 2008 | Nvidia | TSMC, UMC | 55 nm | 260 mm2 | 2,900,000 | [209] |

| G94b Tesla | 505,000,000 | 2008 | Nvidia | TSMC, UMC | 55 nm | 196 mm2 | 2,577,000 | |

| G96b Tesla | 314,000,000 | 2008 | Nvidia | TSMC, UMC | 55 nm | 121 mm2 | 2,595,000 | |

| GT200b Tesla | 1,400,000,000 | 2008 | Nvidia | TSMC, UMC | 55 nm | 470 mm2 | 2,979,000 | |

| GT218 Tesla | 260,000,000 | 2009 | Nvidia | TSMC | 40 nm | 57 mm2 | 4,561,000 | [209] |

| GT216 Tesla | 486,000,000 | 2009 | Nvidia | TSMC | 40 nm | 100 mm2 | 4,860,000 | |

| GT215 Tesla | 727,000,000 | 2009 | Nvidia | TSMC | 40 nm | 144 mm2 | 5,049,000 | |

| RV740 | 826,000,000 | 2009 | ATI | TSMC | 40 nm | 137 mm2 | 6,029,000 | |

| Cypress RV870 | 2,154,000,000 | 2009 | ATI | TSMC | 40 nm | 334 mm2 | 6,449,000 | |

| Juniper RV840 | 1,040,000,000 | 2009 | ATI | TSMC | 40 nm | 166 mm2 | 6,265,000 | |

| Redwood RV830 | 627,000,000 | 2010 | AMD (ATI) | TSMC | 40 nm | 104 mm2 | 6,029,000 | [209] |

| Cedar RV810 | 292,000,000 | 2010 | AMD | TSMC | 40 nm | 59 mm2 | 4,949,000 | |

| Cayman RV970 | 2,640,000,000 | 2010 | AMD | TSMC | 40 nm | 389 mm2 | 6,789,000 | |

| Barts RV940 | 1,700,000,000 | 2010 | AMD | TSMC | 40 nm | 255 mm2 | 6,667,000 | |

| Turks RV930 | 716,000,000 | 2011 | AMD | TSMC | 40 nm | 118 mm2 | 6,068,000 | |

| Caicos RV910 | 370,000,000 | 2011 | AMD | TSMC | 40 nm | 67 mm2 | 5,522,000 | |

| GF100 Fermi | 3,200,000,000 | 2010 | Nvidia | TSMC | 40 nm | 526 mm2 | 6,084,000 | [228] |

| GF110 Fermi | 3,000,000,000 | 2010 | Nvidia | TSMC | 40 nm | 520 mm2 | 5,769,000 | [228] |

| GF104 Fermi | 1,950,000,000 | 2011 | Nvidia | TSMC | 40 nm | 332 mm2 | 5,873,000 | [209] |

| GF106 Fermi | 1,170,000,000 | 2010 | Nvidia | TSMC | 40 nm | 238 mm2 | 4,916,000 | [209] |

| GF108 Fermi | 585,000,000 | 2011 | Nvidia | TSMC | 40 nm | 116 mm2 | 5,043,000 | [209] |

| GF119 Fermi | 292,000,000 | 2011 | Nvidia | TSMC | 40 nm | 79 mm2 | 3,696,000 | [209] |

| Tahiti GCN1 | 4,312,711,873 | 2011 | AMD | TSMC | 28 nm | 365 mm2 | 11,820,000 | [229] |

| Cape Verde GCN1 | 1,500,000,000 | 2012 | AMD | TSMC | 28 nm | 123 mm2 | 12,200,000 | [209] |

| Pitcairn GCN1 | 2,800,000,000 | 2012 | AMD | TSMC | 28 nm | 212 mm2 | 13,210,000 | [209] |

| GK110 Kepler | 7,080,000,000 | 2012 | Nvidia | TSMC | 28 nm | 561 mm2 | 12,620,000 | [230][231] |

| GK104 Kepler | 3,540,000,000 | 2012 | Nvidia | TSMC | 28 nm | 294 mm2 | 12,040,000 | [232] |

| GK106 Kepler | 2,540,000,000 | 2012 | Nvidia | TSMC | 28 nm | 221 mm2 | 11,490,000 | [209] |

| GK107 Kepler | 1,270,000,000 | 2012 | Nvidia | TSMC | 28 nm | 118 mm2 | 10,760,000 | [209] |

| GK208 Kepler | 1,020,000,000 | 2013 | Nvidia | TSMC | 28 nm | 79 mm2 | 12,910,000 | [209] |

| Oland GCN1 | 1,040,000,000 | 2013 | AMD | TSMC | 28 nm | 90 mm2 | 11,560,000 | [209] |

| Bonaire GCN2 | 2,080,000,000 | 2013 | AMD | TSMC | 28 nm | 160 mm2 | 13,000,000 | |

| Durango (Xbox One) | 4,800,000,000 | 2013 | AMD | TSMC | 28 nm | 375 mm2 | 12,800,000 | [233][234] |

| Liverpool (PlayStation 4) | ? | 2013 | AMD | TSMC | 28 nm | 348 mm2 | ? | [235] |

| Hawaii GCN2 | 6,300,000,000 | 2013 | AMD | TSMC | 28 nm | 438 mm2 | 14,380,000 | [209] |

| GM200 Maxwell | 8,000,000,000 | 2015 | Nvidia | TSMC | 28 nm | 601 mm2 | 13,310,000 | |

| GM204 Maxwell | 5,200,000,000 | 2014 | Nvidia | TSMC | 28 nm | 398 mm2 | 13,070,000 | |

| GM206 Maxwell | 2,940,000,000 | 2014 | Nvidia | TSMC | 28 nm | 228 mm2 | 12,890,000 | |

| GM107 Maxwell | 1,870,000,000 | 2014 | Nvidia | TSMC | 28 nm | 148 mm2 | 12,640,000 | |

| Tonga GCN3 | 5,000,000,000 | 2014 | AMD | TSMC, GlobalFoundries | 28 nm | 366 mm2 | 13,660,000 | |

| Fiji GCN3 | 8,900,000,000 | 2015 | AMD | TSMC | 28 nm | 596 mm2 | 14,930,000 | |

| Durango 2 (Xbox One S) | 5,000,000,000 | 2016 | AMD | TSMC | 16 nm | 240 mm2 | 20,830,000 | [236] |

| Neo (PlayStation 4 Pro) | 5,700,000,000 | 2016 | AMD | TSMC | 16 nm | 325 mm2 | 17,540,000 | [237] |

| Ellesmere/Polaris 10 GCN4 | 5,700,000,000 | 2016 | AMD | Samsung, GlobalFoundries | 14 nm | 232 mm2 | 24,570,000 | [238] |

| Baffin/Polaris 11 GCN4 | 3,000,000,000 | 2016 | AMD | Samsung, GlobalFoundries | 14 nm | 123 mm2 | 24,390,000 | [209][239] |

| Lexa/Polaris 12 GCN4 | 2,200,000,000 | 2017 | AMD | Samsung, GlobalFoundries | 14 nm | 101 mm2 | 21,780,000 | [209][239] |

| GP100 Pascal | 15,300,000,000 | 2016 | Nvidia | TSMC, Samsung | 16 nm | 610 mm2 | 25,080,000 | [240][241] |

| GP102 Pascal | 11,800,000,000 | 2016 | Nvidia | TSMC, Samsung | 16 nm | 471 mm2 | 25,050,000 | [209][241] |

| GP104 Pascal | 7,200,000,000 | 2016 | Nvidia | TSMC | 16 nm | 314 mm2 | 22,930,000 | [209][241] |

| GP106 Pascal | 4,400,000,000 | 2016 | Nvidia | TSMC | 16 nm | 200 mm2 | 22,000,000 | [209][241] |

| GP107 Pascal | 3,300,000,000 | 2016 | Nvidia | Samsung | 14 nm | 132 mm2 | 25,000,000 | [209][241] |

| GP108 Pascal | 1,850,000,000 | 2017 | Nvidia | Samsung | 14 nm | 74 mm2 | 25,000,000 | [209][241] |

| Scorpio (Xbox One X) | 6,600,000,000 | 2017 | AMD | TSMC | 16 nm | 367 mm2 | 17,980,000 | [233][242] |

| Vega 10 GCN5 | 12,500,000,000 | 2017 | AMD | Samsung, GlobalFoundries | 14 nm | 484 mm2 | 25,830,000 | [243] |

| GV100 Volta | 21,100,000,000 | 2017 | Nvidia | TSMC | 12 nm | 815 mm2 | 25,890,000 | [244] |

| TU102 Turing | 18,600,000,000 | 2018 | Nvidia | TSMC | 12 nm | 754 mm2 | 24,670,000 | [245] |

| TU104 Turing | 13,600,000,000 | 2018 | Nvidia | TSMC | 12 nm | 545 mm2 | 24,950,000 | |

| TU106 Turing | 10,800,000,000 | 2018 | Nvidia | TSMC | 12 nm | 445 mm2 | 24,270,000 | |

| TU116 Turing | 6,600,000,000 | 2019 | Nvidia | TSMC | 12 nm | 284 mm2 | 23,240,000 | [246] |

| TU117 Turing | 4,700,000,000 | 2019 | Nvidia | TSMC | 12 nm | 200 mm2 | 23,500,000 | [247] |

| Vega 20 GCN5 | 13,230,000,000 | 2018 | AMD | TSMC | 7 nm | 331 mm2 | 39,970,000 | [209] |

| Navi 10 RDNA | 10,300,000,000 | 2019 | AMD | TSMC | 7 nm | 251 mm2 | 41,040,000 | [248] |

| Navi 12 RDNA | ? | 2020 | AMD | TSMC | 7 nm | ? | ? | |

| Navi 14 RDNA | 6,400,000,000 | 2019 | AMD | TSMC | 7 nm | 158 mm2 | 40,510,000 | [249] |

| Arcturus CDNA | 25,600,000,000 | 2020 | AMD | TSMC | 7 nm | 750 mm2 | 34,100,000 | [250] |

| GA100 Ampere | 54,200,000,000 | 2020 | Nvidia | TSMC | 7 nm | 826 mm2 | 65,620,000 | [251][252] |

| GA102 Ampere | 28,300,000,000 | 2020 | Nvidia | Samsung | 8 nm | 628 mm2 | 45,035,000 | [253][254] |

| GA103 Ampere | 22,000,000,000 | 2022 | Nvidia | Samsung | 8 nm | 496 mm2 | 44,400,000 | [255] |

| GA104 Ampere | 17,400,000,000 | 2020 | Nvidia | Samsung | 8 nm | 392 mm2 | 44,390,000 | [256] |

| GA106 Ampere | 12,000,000,000 | 2021 | Nvidia | Samsung | 8 nm | 276 mm2 | 43,480,000 | [257] |

| GA107 Ampere | 8,700,000,000 | 2021 | Nvidia | Samsung | 8 nm | 200 mm2 | 43,500,000 | [258] |

| Navi 21 RDNA2 | 26,800,000,000 | 2020 | AMD | TSMC | 7 nm | 520 mm2 | 51,540,000 | |

| Navi 22 RDNA2 | 17,200,000,000 | 2021 | AMD | TSMC | 7 nm | 335 mm2 | 51,340,000 | |

| Navi 23 RDNA2 | 11,060,000,000 | 2021 | AMD | TSMC | 7 nm | 237 mm2 | 46,670,000 | |

| Navi 24 RDNA2 | 5,400,000,000 | 2022 | AMD | TSMC | 6 nm | 107 mm2 | 50,470,000 | |

| Aldebaran CDNA2 | 58,200,000,000 (MCM) | 2021 | AMD | TSMC | 6 nm | 1,448–1,474 mm2[259], 1,480 mm2[260], 1,490–1,580 mm2[261] | 39,500,000–40,200,000, 39,200,000, 36,800,000–39,100,000 | [262] |

| GH100 Hopper | 80,000,000,000 | 2022 | Nvidia | TSMC | 4 nm | 814 mm2 | 98,280,000 | [263] |

| AD102 Ada Lovelace | 76,300,000,000 | 2022 | Nvidia | TSMC | 4 nm | 608.4 mm2 | 125,411,000 | [264] |

| AD103 Ada Lovelace | 45,900,000,000 | 2022 | Nvidia | TSMC | 4 nm | 378.6 mm2 | 121,240,000 | [265] |

| AD104 Ada Lovelace | 35,800,000,000 | 2022 | Nvidia | TSMC | 4 nm | 294.5 mm2 | 121,560,000 | [265] |

| AD106 Ada Lovelace | ? | 2023 | Nvidia | TSMC | 4 nm | 190 mm2 | ? | [266][267] |

| AD107 Ada Lovelace | ? | 2023 | Nvidia | TSMC | 4 nm | 146 mm2 | ? | [266][268] |

| Navi 31 RDNA3 | 57,700,000,000 (MCM), 45,400,000,000 (GCD), 6×2,050,000,000 (MCD) | 2022 | AMD | TSMC | 5 nm (GCD), 6 nm (MCD) | 531 mm2 (MCM), 306 mm2 (GCD), 6×37.5 mm2 (MCD) | 109,200,000 (MCM), 132,400,000 (GCD), 54,640,000 (MCD) | [269][270][271] |

| Navi 32 RDNA3 | 28,100,000,000 (MCM) | 2023 | AMD | TSMC | 5 nm (GCD), 6 nm (MCD) | 350 mm2 (MCM), 200 mm2 (GCD), 4×37.5 mm2 (MCD) | 80,200,000 (MCM) | [272] |

| Navi 33 RDNA3 | 13,300,000,000 | 2023 | AMD | TSMC | 6 nm | 204 mm2 | 65,200,000 | [273] |

| Aqua Vanjaram CDNA3 | 153,000,000,000 (MCM) | 2023 | AMD | TSMC | 5 nm (GCD), 6 nm (MCD) | ? | ? | [274][275] |

| GB200 Grace Blackwell | 208,000,000,000 (MCM) | 2024 | Nvidia | TSMC | 4 nm | ? | ? | [276] |

| GB202 Blackwell | 92,200,000,000 | 2025 | Nvidia | TSMC | 4 nm | 750 mm2 | 122,600,000 | [277] |

| GB203 Blackwell | 45,600,000,000 | 2025 | Nvidia | TSMC | 4 nm | 378 mm2 | 120,600,000 | [278] |

| GB205 Blackwell | 31,100,000,000 | 2025 | Nvidia | TSMC | 4 nm | 263 mm2 | 118,300,000 | [279] |

| GB206 Blackwell | 21,900,000,000 | 2025 | Nvidia | TSMC | 4 nm | 181 mm2 | 121,000,000 | [280] |

| GB207 Blackwell | 16,900,000,000 | 2025 | Nvidia | TSMC | 4 nm | 149 mm2 | 113,400,000 | [281] |

| Navi 44 RDNA4 | 29,700,000,000 | 2025 | AMD | TSMC | 4 nm | 199 mm2 | 149,200,000 | [282] |

| Navi 48 RDNA4 | 53,900,000,000 | 2025 | AMD | TSMC | 4 nm | 357 mm2 | 151,000,000 | [283] |

| Processor | Transistor count | Year | Designer(s) | Fab(s) | MOS process | Area | Transistor density (tr./mm2) | Ref |

FPGA

A field-programmable gate array (FPGA) is an integrated circuit designed to be configured by a customer or a designer after manufacturing.

| FPGA | Transistor count | Date of introduction | Designer | Manufacturer | Process | Area | Transistor density, tr./mm2 | Ref |

|---|---|---|---|---|---|---|---|---|

| Virtex | 70,000,000 | 1997 | Xilinx | |||||

| Virtex-E | 200,000,000 | 1998 | Xilinx | |||||

| Virtex-II | 350,000,000 | 2000 | Xilinx | | 130 nm | | | |

| Virtex-II PRO | 430,000,000 | 2002 | Xilinx | |||||

| Virtex-4 | 1,000,000,000 | 2004 | Xilinx | | 90 nm | | | |

| Virtex-5 | 1,100,000,000 | 2006 | Xilinx | TSMC | 65 nm | | | [284] |

| Stratix IV | 2,500,000,000 | 2008 | Altera | TSMC | 40 nm | | | [285] |

| Stratix V | 3,800,000,000 | 2011 | Altera | TSMC | 28 nm | | | [citation needed] |

| Arria 10 | 5,300,000,000 | 2014 | Altera | TSMC | 20 nm | | | [286] |

| Virtex-7 2000T | 6,800,000,000 | 2011 | Xilinx | TSMC | 28 nm | | | [287] |

| Stratix 10 SX 2800 | 17,000,000,000 | TBD | Intel | Intel | 14 nm | 560 mm2 | 30,400,000 | [288][289] |

| Virtex-Ultrascale VU440 | 20,000,000,000 | Q1 2015 | Xilinx | TSMC | 20 nm | | | [290][291] |

| Virtex-Ultrascale+ VU19P | 35,000,000,000 | 2020 | Xilinx | TSMC | 16 nm | 900 mm2[h] | 38,900,000 | [292][293][294] |

| Versal VC1902 | 37,000,000,000 | 2H 2019 | Xilinx | TSMC | 7 nm | | | [295][296][297] |

| Stratix 10 GX 10M | 43,300,000,000 | Q4 2019 | Intel | Intel | 14 nm | 1,400 mm2[h] | 30,930,000 | [298][299] |

| Versal VP1802 | 92,000,000,000 | 2021 ?[i] | Xilinx | TSMC | 7 nm | | | [300][301] |

Memory

Semiconductor memory is an electronic data storage device, often used as computer memory, implemented on integrated circuits. Nearly all semiconductor memories since the 1970s have used MOSFETs (MOS transistors), replacing earlier bipolar junction transistors. There are two major types of semiconductor memory: random-access memory (RAM) and non-volatile memory (NVM). In turn, there are two major RAM types: dynamic random-access memory (DRAM) and static random-access memory (SRAM), as well as two major NVM types: flash memory and read-only memory (ROM).

Typical CMOS SRAM uses six transistors per cell. For DRAM, the 1T1C structure (one transistor and one capacitor per cell) is common; whether the capacitor is charged or not stores a 1 or a 0. In flash memory, data is stored in floating gates, and the resistance of the transistor is sensed to read the stored value. Depending on how finely the resistance levels can be distinguished, one transistor can store several bits; three bits, for example, require eight distinct resistance levels per transistor. However, finer levels come at the cost of repeatability, and hence reliability. Typically, low-grade 2-bit MLC flash is used for flash drives, so a 16 GB flash drive contains roughly 64 billion transistors.

For SRAM chips, six-transistor cells (six transistors per bit) were the standard.[302] DRAM chips during the early 1970s had three-transistor cells (three transistors per bit), before single-transistor cells (one transistor per bit) became standard from the era of 4 Kb DRAM in the mid-1970s.[303][304] In single-level flash memory, each cell contains one floating-gate MOSFET (one transistor per bit),[305] whereas multi-level flash stores 2, 3 or 4 bits per transistor.
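As a rough illustration of the per-cell figures above, the Python sketch below estimates cell transistor counts from capacity. The dictionary keys and function names are illustrative, and only storage cells are counted (no decoders, sense amplifiers or other peripheral logic). The examples correspond to the 256-bit Intel 1101 SRAM and 1 Kb Intel 1103 DRAM rows of the table below, and to the 16 GB flash drive mentioned earlier, taking 1 GB as 10^9 bytes.

```python
TRANSISTORS_PER_BIT = {
    "sram_6t": 6,  # six-transistor SRAM cell
    "dram_3t": 3,  # early-1970s three-transistor DRAM cell
    "dram_1t": 1,  # single-transistor (1T1C) DRAM cell
}


def ram_cell_transistors(capacity_bits: int, cell: str) -> int:
    """Cell transistors for a RAM of the given capacity and cell type."""
    return capacity_bits * TRANSISTORS_PER_BIT[cell]


def flash_cell_transistors(capacity_bits: int, bits_per_transistor: int) -> int:
    """Cell transistors for flash storing several bits per floating-gate MOSFET."""
    # Ceiling division: a partly used cell still needs a whole transistor.
    return -(-capacity_bits // bits_per_transistor)


print(ram_cell_transistors(256, "sram_6t"))        # 1536
print(ram_cell_transistors(1024, "dram_3t"))       # 3072
print(flash_cell_transistors(16 * 10**9 * 8, 2))   # 64000000000
```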

Flash memory chips are commonly stacked in layers: up to 128 layers in production,[306] 136 layers achieved,[307] and up to 69 layers in end-user devices available from manufacturers.

| Chip name | Capacity (bits) | RAM type | Transistor count | Date of introduction | Manufacturer(s) | Process | Area | Transistor density (tr./mm2) | Ref |

|---|---|---|---|---|---|---|---|---|---|

| — | 1-bit | SRAM (cell) | 6 | 1963 | Fairchild | — | — | ? | [308] |

| — | 1-bit | DRAM (cell) | 1 | 1965 | Toshiba | — | — | ? | [309][310] |

| ? | 8-bit | SRAM (bipolar) | 48 | 1965 | SDS, Signetics | ? | ? | ? | [308] |

| SP95 | 16-bit | SRAM (bipolar) | 80 | 1965 | IBM | ? | ? | ? | [311] |

| TMC3162 | 16-bit | SRAM (TTL) | 96 | 1966 | Transitron | — | ? | ? | [304] |

| ? | ? | SRAM (MOS) | ? | 1966 | NEC | ? | ? | ? | [303] |

| ? | 256-bit | DRAM (IC) | 256 | 1968 | Fairchild | ? | ? | ? | [304] |

| ? | 64-bit | SRAM (PMOS) | 384 | 1968 | Fairchild | ? | ? | ? | [303] |

| ? | 144-bit | SRAM (NMOS) | 864 | 1968 | NEC | | | | |

| 1101 | 256-bit | SRAM (PMOS) | 1,536 | 1969 | Intel | 12,000 nm | ? | ? | [312][313][314] |

| 1102 | 1 Kb | DRAM (PMOS) | 3,072 | 1970 | Intel, Honeywell | ? | ? | ? | [303] |

| 1103 | 1 Kb | DRAM (PMOS) | 3,072 | 1970 | Intel | 8,000 nm | 10 mm2 | 307 | [315][302][316][304] |

| μPD403 | 1 Kb | DRAM (NMOS) | 3,072 | 1971 | NEC | ? | ? | ? | [317] |

| ? | 2 Kb | DRAM (PMOS) | 6,144 | 1971 | General Instrument | ? | 12.7 mm2 | 484 | [318] |

| 2102 | 1 Kb | SRAM (NMOS) | 6,144 | 1972 | Intel | ? | ? | ? | [312][319] |

| ? | 8 Kb | DRAM (PMOS) | 8,192 | 1973 | IBM | ? | 18.8 mm2 | 436 | [318] |

| 5101 | 1 Kb | SRAM (CMOS) | 6,144 | 1974 | Intel | ? | ? | ? | [312] |

| 2116 | 16 Kb | DRAM (NMOS) | 16,384 | 1975 | Intel | ? | ? | ? | [320][304] |

| 2114 | 4 Kb | SRAM (NMOS) | 24,576 | 1976 | Intel | ? | ? | ? | [312][321] |

| ? | 4 Kb | SRAM (CMOS) | 24,576 | 1977 | Toshiba | ? | ? | ? | [313] |

| ? | 64 Kb | DRAM (NMOS) | 65,536 | 1977 | NTT | ? | 35.4 mm2 | 1,851 | [318] |

| ? | 64 Kb | DRAM (VMOS) | 65,536 | 1979 | Siemens | ? | 25.2 mm2 | 2,601 | [318] |

| ? | 16 Kb | SRAM (CMOS) | 98,304 | 1980 | Hitachi, Toshiba | ? | ? | ? | [322] |

| ? | 256 Kb | DRAM (NMOS) | 262,144 | 1980 | NEC | 1,500 nm | 41.6 mm2 | 6,302 | [318] |

| ? | 256 Kb | DRAM (NMOS) | 262,144 | 1980 | NTT | 1,000 nm | 34.4 mm2 | 7,620 | [318] |

| ? | 64 Kb | SRAM (CMOS) | 393,216 | 1980 | Matsushita | ? | ? | ? | [322] |

| ? | 288 Kb | DRAM | 294,912 | 1981 | IBM | ? | 25 mm2 | 11,800 | [323] |

| ? | 64 Kb | SRAM (NMOS) | 393,216 | 1982 | Intel | 1,500 nm | ? | ? | [322] |

| ? | 256 Kb | SRAM (CMOS) | 1,572,864 | 1984 | Toshiba | 1,200 nm | ? | ? | [322][314] |

| ? | 8 Mb | DRAM | 8,388,608 | January 5, 1984 | Hitachi | ? | ? | ? | [324][325] |

| ? | 16 Mb | DRAM (CMOS) | 16,777,216 | 1987 | NTT | 700 nm | 148 mm2 | 113,400 | [318] |

| ? | 4 Mb | SRAM (CMOS) | 25,165,824 | 1990 | NEC, Toshiba, Hitachi, Mitsubishi | ? | ? | ? | [322] |

| ? | 64 Mb | DRAM (CMOS) | 67,108,864 | 1991 | Matsushita, Mitsubishi, Fujitsu, Toshiba | 400 nm | | | |

| KM48SL2000 | 16 Mb | SDRAM | 16,777,216 | 1992 | Samsung | ? | ? | ? | [326][327] |

| ? | 16 Mb | SRAM (CMOS) | 100,663,296 | 1992 | Fujitsu, NEC | 400 nm | ? | ? | [322] |

| ? | 256 Mb | DRAM (CMOS) | 268,435,456 | 1993 | Hitachi, NEC | 250 nm | | | |

| ? | 1 Gb | DRAM | 1,073,741,824 | January 9, 1995 | NEC | 250 nm | ? | ? | [328][329] |

| ? | 1 Gb | DRAM | 1,073,741,824 | January 9, 1995 | Hitachi | 160 nm | ? | ? | |

| ? | 1 Gb | SDRAM | 1,073,741,824 | 1996 | Mitsubishi | 150 nm | ? | ? | [322] |

| ? | 1 Gb | SDRAM (SOI) | 1,073,741,824 | 1997 | Hyundai | ? | ? | ? | [330] |

| ? | 4 Gb | DRAM (4-bit) | 1,073,741,824 | 1997 | NEC | 150 nm | ? | ? | [322] |

| ? | 4 Gb | DRAM | 4,294,967,296 | 1998 | Hyundai | ? | ? | ? | [330] |

| ? | 8 Gb | SDRAM (DDR3) | 8,589,934,592 | April 2008 | Samsung | 50 nm | ? | ? | [331] |

| ? | 16 Gb | SDRAM (DDR3) | 17,179,869,184 | 2008 | | | | | |

| ? | 32 Gb | SDRAM (HBM2) | 34,359,738,368 | 2016 | Samsung | 20 nm | ? | ? | [332] |

| ? | 64 Gb | SDRAM (HBM2) | 68,719,476,736 | 2017 | | | | | |

| ? | 128 Gb | SDRAM (DDR4) | 137,438,953,472 | 2018 | Samsung | 10 nm | ? | ? | [333] |

| ? | ? | RRAM[334] (3DSoC)[335] | ? | 2019 | SkyWater Technology[336] | 90 nm | ? | ? | |

| Chip name | Capacity (bits) | Flash type | FGMOS transistor count | Date of introduction | Manufacturer(s) | Process | Area | Transistor density (tr./mm2) | Ref |

|---|---|---|---|---|---|---|---|---|---|

| ? | 256 Kb | NOR | 262,144 | 1985 | Toshiba | 2,000 nm | ? | ? | [322] |

| ? | 1 Mb | NOR | 1,048,576 | 1989 | Seeq, Intel | ? | | | |

| ? | 4 Mb | NAND | 4,194,304 | 1989 | Toshiba | 1,000 nm | | | |

| ? | 16 Mb | NOR | 16,777,216 | 1991 | Mitsubishi | 600 nm | | | |

| DD28F032SA | 32 Mb | NOR | 33,554,432 | 1993 | Intel | ? | 280 mm2 | 120,000 | [312][337] |

| ? | 64 Mb | NOR | 67,108,864 | 1994 | NEC | 400 nm | ? | ? | [322] |

| ? | 64 Mb | NAND | 67,108,864 | 1996 | Hitachi | | | | |

| ? | 128 Mb | NAND | 134,217,728 | 1996 | Samsung, Hitachi | ? | | | |

| ? | 256 Mb | NAND | 268,435,456 | 1999 | Hitachi, Toshiba | 250 nm | | | |

| ? | 512 Mb | NAND | 536,870,912 | 2000 | Toshiba | ? | ? | ? | [338] |

| ? | 1 Gb | 2-bit NAND | 536,870,912 | 2001 | Samsung | ? | ? | ? | [322] |

| ? | 1 Gb | 2-bit NAND | 536,870,912 | 2001 | Toshiba, SanDisk | 160 nm | ? | ? | [339] |

| ? | 2 Gb | NAND | 2,147,483,648 | 2002 | Samsung, Toshiba | ? | ? | ? | [340][341] |

| ? | 8 Gb | NAND | 8,589,934,592 | 2004 | Samsung | 60 nm | ? | ? | [340] |

| ? | 16 Gb | NAND | 17,179,869,184 | 2005 | Samsung | 50 nm | ? | ? | [342] |

| ? | 32 Gb | NAND | 34,359,738,368 | 2006 | Samsung | 40 nm | | | |

| THGAM | 128 Gb | Stacked NAND | 128,000,000,000 | April 2007 | Toshiba | 56 nm | 252 mm2 | 507,900,000 | [343] |

| THGBM | 256 Gb | Stacked NAND | 256,000,000,000 | 2008 | Toshiba | 43 nm | 353 mm2 | 725,200,000 | [344] |

| THGBM2 | 1 Tb | Stacked 4-bit NAND | 256,000,000,000 | 2010 | Toshiba | 32 nm | 374 mm2 | 684,500,000 | [345] |

| KLMCG8GE4A | 512 Gb | Stacked 2-bit NAND | 256,000,000,000 | 2011 | Samsung | ? | 192 mm2 | 1,333,000,000 | [346] |

| KLUFG8R1EM | 4 Tb | Stacked 3-bit V-NAND | 1,365,333,333,504 | 2017 | Samsung | ? | 150 mm2 | 9,102,000,000 | [347] |

| eUFS (1 TB) | 8 Tb | Stacked 4-bit V-NAND | 2,048,000,000,000 | 2019 | Samsung | ? | 150 mm2 | 13,650,000,000 | [348][349] |

| ? | 1 Tb | 232L TLC NAND die | 333,333,333,333 | 2022 | Micron | ? | 68.5 mm2 (memory array) | 4,870,000,000 (14.6 Gbit/mm2) | [350][351][352][353] |

| ? | 16 Tb | 232L package | 5,333,333,333,333 | 2022 | Micron | ? | 68.5 mm2 (memory array) | 77,900,000,000 (16×14.6 Gbit/mm2) | |

| Chip name | Capacity (bits) | ROM type | Transistor count | Date of introduction | Manufacturer(s) | Process | Area | Ref |

|---|---|---|---|---|---|---|---|---|

| ? | ? | PROM | ? | 1956 | Arma | — | ? | [354][355] |

| ? | 1 Kb | ROM (MOS) | 1,024 | 1965 | General Microelectronics | ? | ? | [356] |

| 3301 | 1 Kb | ROM (bipolar) | 1,024 | 1969 | Intel | — | ? | [356] |

| 1702 | 2 Kb | EPROM (MOS) | 2,048 | 1971 | Intel | ? | 15 mm2 | [357] |

| ? | 4 Kb | ROM (MOS) | 4,096 | 1974 | AMD, General Instrument | ? | ? | [356] |

| 2708 | 8 Kb | EPROM (MOS) | 8,192 | 1975 | Intel | ? | ? | [312] |

| ? | 2 Kb | EEPROM (MOS) | 2,048 | 1976 | Toshiba | ? | ? | [358] |

| μCOM-43 ROM | 16 Kb | PROM (PMOS) | 16,000 | 1977 | NEC | ? | ? | [359] |

| 2716 | 16 Kb | EPROM (TTL) | 16,384 | 1977 | Intel | — | ? | [315][360] |

| EA8316F | 16 Kb | ROM (NMOS) | 16,384 | 1978 | Electronic Arrays | ? | 436 mm2 | [356][361] |

| 2732 | 32 Kb | EPROM | 32,768 | 1978 | Intel | ? | ? | [312] |

| 2364 | 64 Kb | ROM | 65,536 | 1978 | Intel | ? | ? | [362] |

| 2764 | 64 Kb | EPROM | 65,536 | 1981 | Intel | 3,500 nm | ? | [312][322] |

| 27128 | 128 Kb | EPROM | 131,072 | 1982 | Intel | ? | ||

| 27256 | 256 Kb | EPROM (HMOS) | 262,144 | 1983 | Intel | ? | ? | [312][363] |

| ? | 256 Kb | EPROM (CMOS) | 262,144 | 1983 | Fujitsu | ? | ? | [364] |

| ? | 512 Kb | EPROM (NMOS) | 524,288 | 1984 | AMD | 1,700 nm | ? | [322] |

| 27512 | 512 Kb | EPROM (HMOS) | 524,288 | 1984 | Intel | ? | ? | [312][365] |

| ? | 1 Mb | EPROM (CMOS) | 1,048,576 | 1984 | NEC | 1,200 nm | ? | [322] |

| ? | 4 Mb | EPROM (CMOS) | 4,194,304 | 1987 | Toshiba | 800 nm | | |

| ? | 16 Mb | EPROM (CMOS) | 16,777,216 | 1990 | NEC | 600 nm | | |

| ? | 16 Mb | MROM | 16,777,216 | 1995 | AKM, Hitachi | ? | ? | [329] |

Transistor computers


Before transistors were invented, relays were used in commercial tabulating machines and experimental early computers. The world's first working programmable, fully automatic digital computer,[366] the 1941 Z3 (22-bit word length), had 2,600 relays and operated at a clock frequency of about 4–5 Hz. The 1940 Complex Number Computer had fewer than 500 relays,[367] but it was not fully programmable. The earliest practical computers used vacuum tubes and solid-state diode logic. ENIAC had 18,000 vacuum tubes, 7,200 crystal diodes, and 1,500 relays, with many of the vacuum tubes containing two triode elements.

The second generation of computers were transistor computers that featured boards filled with discrete transistors, solid-state diodes and magnetic memory cores. The experimental 1953 48-bit Transistor Computer, developed at the University of Manchester, is widely believed to be the first transistor computer to come into operation anywhere in the world (the prototype had 92 point-contact transistors and 550 diodes).[368] A later version, the 1955 machine, had a total of 250 junction transistors and 1,300 point-contact diodes. The computer also used a small number of tubes in its clock generator, so it was not the first fully transistorized computer. The ETL Mark III, developed at the Electrotechnical Laboratory in 1956, may have been the first transistor-based electronic computer using the stored-program method. Its logic elements used about 130 point-contact transistors and about 1,800 germanium diodes, "housed on 300 plug-in packages which could be slipped in and out."[369] The 1958 decimal-architecture IBM 7070 was the first transistor computer to be fully programmable. It had about 30,000 alloy-junction germanium transistors and 22,000 germanium diodes, on approximately 14,000 Standard Modular System (SMS) cards. The 1959 MOBIDIC, short for "MOBIle DIgital Computer", weighed 12,000 pounds (6.0 short tons) and was mounted in the trailer of a semi-trailer truck; it was a transistorized computer for battlefield data.

The third generation of computers used integrated circuits (ICs).[370] The 1962 15-bit Apollo Guidance Computer used "about 4,000 'Type-G' (3-input NOR gate) circuits", for about 12,000 transistors plus 32,000 resistors.[371] The IBM System/360, introduced in 1964, used discrete transistors in hybrid circuit packs.[370] The 1965 12-bit PDP-8 CPU had 1,409 discrete transistors and over 10,000 diodes, on many cards. Later versions, starting with the 1968 PDP-8/I, used integrated circuits. The PDP-8 was later reimplemented as a microprocessor, the Intersil 6100 (see below).[372]

The next generation of computers were the microcomputers, starting with the 1971 Intel 4004, which used MOS transistors. These were used in home computers or personal computers (PCs).

This list includes early transistorized computers (second generation) and IC-based computers (third generation) from the 1950s and 1960s.

| Computer | Transistor count | Year | Manufacturer | Notes | Ref |

|---|---|---|---|---|---|

| Transistor Computer | 92 | 1953 | University of Manchester | Point-contact transistors, 550 diodes. Lacked stored program capability. | [368] |

| TRADIC | 700 | 1954 | Bell Labs | Point-contact transistors | [368] |

| Transistor Computer (full size) | 250 | 1955 | University of Manchester | Discrete point-contact transistors, 1,300 diodes | [368] |

| IBM 608 | 3,000 | 1955 | IBM | Germanium transistors | [373] |

| ETL Mark III | 130 | 1956 | Electrotechnical Laboratory | Point-contact transistors, 1,800 diodes, stored program capability | [368][369] |

| Metrovick 950 | 200 | 1956 | Metropolitan-Vickers | Discrete junction transistors | |

| NEC NEAC-2201 | 600 | 1958 | NEC | Germanium transistors | [374] |

| Hitachi MARS-1 | 1,000 | 1958 | Hitachi | | [375] |

| IBM 7070 | 30,000 | 1958 | IBM | Alloy-junction germanium transistors, 22,000 diodes | [376] |

| Matsushita MADIC-I | 400 | 1959 | Matsushita | Bipolar transistors | [377] |

| NEC NEAC-2203 | 2,579 | 1959 | NEC | | [378] |

| Toshiba TOSBAC-2100 | 5,000 | 1959 | Toshiba | | [379] |

| IBM 7090 | 50,000 | 1959 | IBM | Discrete germanium transistors | [380] |

| PDP-1 | 2,700 | 1959 | Digital Equipment Corporation | Discrete transistors | |

| Olivetti Elea 9003 | ? | 1959 | Olivetti | 300,000 (?) discrete transistors and diodes | [381] |

| Mitsubishi MELCOM 1101 | 3,500 | 1960 | Mitsubishi | Germanium transistors | [382] |

| M18 FADAC | 1,600 | 1960 | Autonetics | Discrete transistors | |

| CPU of IBM 7030 Stretch | 169,100 | 1961 | IBM | World's fastest computer from 1961 to 1964 | [383] |

| D-17B | 1,521 | 1962 | Autonetics | Discrete transistors | |

| NEC NEAC-L2 | 16,000 | 1964 | NEC | Ge transistors | [384] |

| CDC 6600 (entire computer) | 400,000 | 1964 | Control Data Corporation | World's fastest computer from 1964 to 1969 | [385] |

| IBM System/360 | ? | 1964 | IBM | Hybrid circuits | |

| PDP-8 "Straight-8" | 1,409[372] | 1965 | Digital Equipment Corporation | Discrete transistors, 10,000 diodes | |

| PDP-8/S | 1,001[386][387][388] | 1966 | Digital Equipment Corporation | Discrete transistors, diodes | |

| PDP-8/I | 1,409[citation needed] | 1968[389] | Digital Equipment Corporation | 74 series TTL circuits[390] | |

| Apollo Guidance Computer Block I | 12,300 | 1966 | Raytheon / MIT Instrumentation Laboratory | 4,100 ICs, each containing a 3-transistor, 3-input NOR gate. (Block II had 2,800 dual 3-input NOR gate ICs.) | |

Logic functions

Transistor count for generic logic functions is based on static CMOS implementation.[391]

| Function | Transistor count | Ref. |

|---|---|---|

| NOT | 2 | |

| Buffer | 4 | |

| NAND 2-input | 4 | |

| NOR 2-input | 4 | |

| AND 2-input | 6 | |

| OR 2-input | 6 | |

| NAND 3-input | 6 | |

| NOR 3-input | 6 | |

| XOR 2-input | 6 | |

| XNOR 2-input | 8 | |

| MUX 2-input with TG | 6 | |

| MUX 4-input with TG | 18 | |

| NOT MUX 2-input | 8 | |

| MUX 4-input | 24 | |

| 1-bit full adder | 24 | |

| 1-bit adder–subtractor | 48 | |

| AND-OR-INVERT | 6 | [392] |

| Latch, D gated | 8 | |

| Flip-flop, edge triggered dynamic D with reset | 12 | |

| 8-bit multiplier | 3,000 | |

| 16-bit multiplier | 9,000 | |

| 32-bit multiplier | 21,000 | [citation needed] |

| small-scale integration | 2–100 | [393] |

| medium-scale integration | 100–500 | [393] |

| large-scale integration | 500–20,000 | [393] |

| very-large-scale integration | 20,000–1,000,000 | [393] |

| ultra-large-scale integration | >1,000,000 | |
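
The simplest entries in the table follow a regular pattern that can be sketched in a few lines of Python. The sketch assumes the usual static CMOS construction, in which an n-input NAND or NOR gate uses one PMOS and one NMOS transistor per input, and an AND or OR gate is the inverting gate followed by an inverter. The function names, the SSI/MSI/LSI/VLSI/ULSI abbreviations and the choice to treat each upper bound of the integration-scale ranges as inclusive are assumptions made for illustration, not part of the cited sources.

```python
INVERTER = 2  # one PMOS plus one NMOS transistor (the NOT row)


def nand_or_nor(inputs: int) -> int:
    """Transistors in a static CMOS NAND or NOR gate: two per input."""
    if inputs < 2:
        raise ValueError("a NAND/NOR gate needs at least two inputs")
    return 2 * inputs


def and_or_or(inputs: int) -> int:
    """AND/OR built as NAND/NOR followed by an inverter."""
    return nand_or_nor(inputs) + INVERTER


def buffer() -> int:
    """A buffer modelled as two inverters in series."""
    return 2 * INVERTER


# Upper bounds of the integration-scale ranges in the table.
# Treating each bound as inclusive is an assumption of this sketch.
SCALES = [
    (100, "SSI"),
    (500, "MSI"),
    (20_000, "LSI"),
    (1_000_000, "VLSI"),
]


def integration_scale(transistors: int) -> str:
    """Map a transistor count to the integration-scale label from the table."""
    if transistors < 2:
        raise ValueError("the table starts at 2 transistors")
    for upper, name in SCALES:
        if transistors <= upper:
            return name
    return "ULSI"


if __name__ == "__main__":
    print("NAND 2-input:", nand_or_nor(2))
    print("NOR 3-input:", nand_or_nor(3))
    print("AND 2-input:", and_or_or(2))
    print("Buffer:", buffer())
    print("16-bit multiplier (9,000):", integration_scale(9_000))
```

The pattern reproduces the NOT, Buffer, NAND, NOR, AND and OR rows; the remaining rows (XOR, multiplexers, adders, latches) depend on the specific circuit topology and are not derived here.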

Parallel systems

Historically, each processing element in earlier parallel systems, like all CPUs of that time, was a serial computer built out of multiple chips. As transistor counts per chip increased, each processing element could be built out of fewer chips, and later each multi-core processor chip could contain more processing elements.[394]

Goodyear MPP: (1983?) 8 pixel processors per chip, 3,000 to 8,000 transistors per chip.[394]

Brunel University Scape (single-chip array-processing element): (1983) 256 pixel processors per chip, 120,000 to 140,000 transistors per chip.[394]

Cell Broadband Engine: (2006) 9 cores per chip, 234 million transistors per chip.[395]
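
As a rough illustration of the trend, the figures above can be divided out to give an average transistor budget per processing element. This is only a sketch: the helper name `per_element` is hypothetical, and the average includes any shared logic, memory and I/O on the chip, so it overstates the transistors in the processing elements themselves.

```python
def per_element(transistors_per_chip: float, elements_per_chip: int) -> float:
    """Average transistors per processing element on one chip."""
    return transistors_per_chip / elements_per_chip


# (low, high) transistors per chip and processing elements per chip,
# taken from the three examples listed above.
chips = {
    "Goodyear MPP": ((3_000, 8_000), 8),
    "Brunel University Scape": ((120_000, 140_000), 256),
    "Cell Broadband Engine": ((234_000_000, 234_000_000), 9),
}

if __name__ == "__main__":
    for name, ((low, high), elements) in chips.items():
        low_avg = per_element(low, elements)
        high_avg = per_element(high, elements)
        print(f"{name}: {low_avg:,.0f} to {high_avg:,.0f} transistors per element")
```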

Other devices

| Device type | Device name | Transistor count | Date of introduction | Designer(s) | Manufacturer(s) | MOS process | Area | Transistor density, tr./mm2 | Ref |

|---|---|---|---|---|---|---|---|---|---|

| Deep learning engine / IPU[j] | Colossus GC2 | 23,600,000,000 | 2018 | Graphcore | TSMC | 16 nm | ~800 mm2 | 29,500,000 | [396][397][398][better source needed] |

| Deep learning engine / IPU | Wafer Scale Engine | 1,200,000,000,000 | 2019 | Cerebras | TSMC | 16 nm | 46,225 mm2 | 25,960,000 | [1][2][3][4] |

| Deep learning engine / IPU | Wafer Scale Engine 2 | 2,600,000,000,000 | 2020 | Cerebras | TSMC | 7 nm | 46,225 mm2 | 56,250,000 | [5][399][400] |

| Network switch | NVLink4 NVSwitch | 25,100,000,000 | 2022 | Nvidia | TSMC | N4 (4 nm) | 294 mm2 | 85,370,000 | [401] |
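
The density column in this table is the transistor count divided by the die area. The short Python sketch below recomputes it from the other two columns; the tuple layout, the function names and the rounding to the nearest 10,000 transistors per mm2 are assumptions made for the comparison, and the Colossus GC2 area is taken as exactly 800 mm2 although the table gives it only approximately.

```python
def density(transistor_count: int, area_mm2: float) -> float:
    """Transistors per square millimetre."""
    return transistor_count / area_mm2


def round_to_10k(value: float) -> int:
    """Round to the nearest 10,000, the precision used in the table."""
    return int(round(value / 10_000)) * 10_000


# (name, transistor count, area in mm2, density listed in the table)
devices = [
    ("Colossus GC2", 23_600_000_000, 800, 29_500_000),
    ("Wafer Scale Engine", 1_200_000_000_000, 46_225, 25_960_000),
    ("Wafer Scale Engine 2", 2_600_000_000_000, 46_225, 56_250_000),
    ("NVLink4 NVSwitch", 25_100_000_000, 294, 85_370_000),
]

if __name__ == "__main__":
    for name, count, area, listed in devices:
        computed = round_to_10k(density(count, area))
        status = "matches" if computed == listed else "differs from"
        print(f"{name}: {computed:,} tr./mm2 ({status} the table)")
```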

Transistor density

The transistor density is the number of transistors that are fabricated per unit area, typically measured as the number of transistors per square millimeter (mm2). The transistor density usually correlates with the gate length of a semiconductor node (also known as a semiconductor manufacturing process), typically measured in nanometers (nm). As of 2019[update], the semiconductor node with the highest transistor density is TSMC's 5 nanometer node, with 171.3 million transistors per square millimeter (note that this corresponds to a transistor-to-transistor spacing of 76.4 nm, far greater than the relatively meaningless "5 nm" in the node name).[402]
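
The 76.4 nm spacing quoted above can be reproduced with a short calculation. The sketch assumes the transistors sit on a uniform square grid, so that each one occupies an area of 1/density and the spacing is the square root of that area; real layouts are not uniform, and the function name is hypothetical.

```python
import math

NM_PER_MM = 1_000_000  # 1 mm = 1,000,000 nm


def average_spacing_nm(density_per_mm2: float) -> float:
    """Centre-to-centre spacing on a square grid, in nanometres."""
    if density_per_mm2 <= 0:
        raise ValueError("density must be positive")
    # Side length of the square occupied by one transistor, in mm,
    # converted to nm.
    return NM_PER_MM / math.sqrt(density_per_mm2)


if __name__ == "__main__":
    spacing = average_spacing_nm(171_300_000)
    print(f"TSMC 5 nm node: about {spacing:.1f} nm between transistors")
```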

MOSFET nodes

| Node name | Transistor density (transistors/mm2) | Production year | Process | MOSFET | Manufacturer(s) | Ref |

|---|---|---|---|---|---|---|

| ? | ? | 1960 | 20,000 nm | PMOS | Bell Labs | [403][404] |

| ? | ? | 1960 | 20,000 nm | NMOS | | |

| ? | ? | 1963 | ? | CMOS | Fairchild | [405] |

| ? | ? | 1964 | ? | PMOS | General Microelectronics | [406] |

| ? | ? | 1968 | 20,000 nm | CMOS | RCA | [407] |

| ? | ? | 1969 | 12,000 nm | PMOS | Intel | [322][314] |

| ? | ? | 1970 | 10,000 nm | CMOS | RCA | [407] |

| ? | 300 | 1970 | 8,000 nm | PMOS | Intel | [316][304] |

| ? | ? | 1971 | 10,000 nm | PMOS | Intel | [408] |

| ? | 480 | 1971 | ? | PMOS | General Instrument | [318] |

| ? | ? | 1973 | ? | NMOS | Texas Instruments | [318] |

| ? | 220 | 1973 | ? | NMOS | Mostek | [318] |

| ? | ? | 1973 | 7,500 nm | NMOS | NEC | [18][17] |

| ? | ? | 1973 | 6,000 nm | PMOS | Toshiba | [19][409] |

| ? | ? | 1976 | 5,000 nm | NMOS | Hitachi, Intel | [318] |

| ? | ? | 1976 | 5,000 nm | CMOS | RCA | |

| ? | ? | 1976 | 4,000 nm | NMOS | Zilog | |

| ? | ? | 1976 | 3,000 nm | NMOS | Intel | [410] |

| ? | 1,850 | 1977 | ? | NMOS | NTT | [318] |

| ? | ? | 1978 | 3,000 nm | CMOS | Hitachi | [411] |

| ? | ? | 1978 | 2,500 nm | NMOS | Texas Instruments | [318] |

| ? | ? | 1978 | 2,000 nm | NMOS | NEC, NTT | |

| ? | 2,600 | 1979 | ? | VMOS | Siemens | |

| ? | 7,280 | 1979 | 1,000 nm | NMOS | NTT | |

| ? | 7,620 | 1980 | 1,000 nm | NMOS | NTT | |

| ? | ? | 1983 | 2,000 nm | CMOS | Toshiba | [322] |

| ? | ? | 1983 | 1,500 nm | CMOS | Intel | [318] |

| ? | ? | 1983 | 1,200 nm | CMOS | Intel | |

| ? | ? | 1984 | 800 nm | CMOS | NTT | |

| ? | ? | 1987 | 700 nm | CMOS | Fujitsu | |

| ? | ? | 1989 | 600 nm | CMOS | Mitsubishi, NEC, Toshiba | [322] |

| ? | ? | 1989 | 500 nm | CMOS | Hitachi, Mitsubishi, NEC, Toshiba | |

| ? | ? | 1991 | 400 nm | CMOS | Matsushita, Mitsubishi, Fujitsu, Toshiba | |

| ? | ? | 1993 | 350 nm | CMOS | Sony | |

| ? | ? | 1993 | 250 nm | CMOS | Hitachi, NEC | |

| 3LM | 32,000 | 1994 | 350 nm | CMOS | NEC | [206] |

| ? | ? | 1995 | 160 nm | CMOS | Hitachi | [322] |

| ? | ? | 1996 | 150 nm | CMOS | Mitsubishi | |

| TSMC 180 nm | ? | 1998 | 180 nm | CMOS | TSMC | [412] |

| CS80 | ? | 1999 | 180 nm | CMOS | Fujitsu | [413] |

| ? | ? | 1999 | 180 nm | CMOS | Intel, Sony, Toshiba | [312][218] |

| CS85 | ? | 1999 | 170 nm | CMOS | Fujitsu | [414] |

| Samsung 140 nm | ? | 1999 | 140 nm | CMOS | Samsung | [322] |

| ? | ? | 2001 | 130 nm | CMOS | Fujitsu, Intel | [413][312] |

| Samsung 100 nm | ? | 2001 | 100 nm | CMOS | Samsung | [322] |

| ? | ? | 2002 | 90 nm | CMOS | Sony, Toshiba, Samsung | [218][340] |

| CS100 | ? | 2003 | 90 nm | CMOS | Fujitsu | [413] |

| Intel 90 nm | 1,450,000 | 2004 | 90 nm | CMOS | Intel | [415][312] |

| Samsung 80 nm | ? | 2004 | 80 nm | CMOS | Samsung | [416] |

| ? | ? | 2004 | 65 nm | CMOS | Fujitsu, Toshiba | [417] |

| Samsung 60 nm | ? | 2004 | 60 nm | CMOS | Samsung | [340] |

| TSMC 45 nm | ? | 2004 | 45 nm | CMOS | TSMC | |

| Elpida 90 nm | ? | 2005 | 90 nm | CMOS | Elpida Memory | [418] |

| CS200 | ? | 2005 | 65 nm | CMOS | Fujitsu | [419][413] |

| Samsung 50 nm | ? | 2005 | 50 nm | CMOS | Samsung | [342] |

| Intel 65 nm | 2,080,000 | 2006 | 65 nm | CMOS | Intel | [415] |

| Samsung 40 nm | ? | 2006 | 40 nm | CMOS | Samsung | [342] |

| Toshiba 56 nm | ? | 2007 | 56 nm | CMOS | Toshiba | [343] |

| Matsushita 45 nm | ? | 2007 | 45 nm | CMOS | Matsushita | [81] |

| Intel 45 nm | 3,300,000 | 2008 | 45 nm | CMOS | Intel | [420] |

| Toshiba 43 nm | ? | 2008 | 43 nm | CMOS | Toshiba | [344] |

| TSMC 40 nm | ? | 2008 | 40 nm | CMOS | TSMC | [421] |

| Toshiba 32 nm | ? | 2009 | 32 nm | CMOS | Toshiba | [422] |

| Intel 32 nm | 7,500,000 | 2010 | 32 nm | CMOS | Intel | [420] |

| ? | ? | 2010 | 20 nm | CMOS | Hynix, Samsung | [423][342] |

| Intel 22 nm | 15,300,000 | 2012 | 22 nm | CMOS | Intel | [420] |

| IMFT 20 nm | ? | 2012 | 20 nm | CMOS | IMFT | [424] |

| Toshiba 19 nm | ? | 2012 | 19 nm | CMOS | Toshiba | |

| Hynix 16 nm | ? | 2013 | 16 nm | FinFET | SK Hynix | [423] |

| TSMC 16 nm | 28,880,000 | 2013 | 16 nm | FinFET | TSMC | [425][426] |

| Samsung 10 nm | 51,820,000 | 2013 | 10 nm | FinFET | Samsung | [427][428] |

| Intel 14 nm | 37,500,000 | 2014 | 14 nm | FinFET | Intel | [420] |

| 14LP | 32,940,000 | 2015 | 14 nm | FinFET | Samsung | [427] |

| TSMC 10 nm | 52,510,000 | 2016 | 10 nm | FinFET | TSMC | [425][429] |

| 12LP | 36,710,000 | 2017 | 12 nm | FinFET | GlobalFoundries, Samsung | [239] |

| N7FF | 96,500,000; 101,850,000[430] | 2017 | 7 nm | FinFET | TSMC | [431][432][433] |

| 8LPP | 61,180,000 | 2018 | 8 nm | FinFET | Samsung | [427] |

| 7LPE | 95,300,000 | 2018 | 7 nm | FinFET | Samsung | [432] |

| Intel 10 nm | 100,760,000; 106,100,000[430] | 2018 | 10 nm | FinFET | Intel | [434] |

| 5LPE | 126,530,000 | 2018 | 5 nm | FinFET | Samsung | [436][437] |

| N7FF+ | 113,900,000 | 2019 | 7 nm | FinFET | TSMC | [431][432] |

| CLN5FF | 171,300,000; 185,460,000[430] | 2019 | 5 nm | FinFET | TSMC | [402] |

| Intel 7 | 100,760,000; 106,100,000[430] | 2021 | 7 nm | FinFET | Intel | |

| 4LPE | 145,700,000[435] | 2021 | 4 nm | FinFET | Samsung | [438][439][440] |

| N4 | 196,600,000[430][441] | 2021 | 4 nm | FinFET | TSMC | [442] |

| N4P | 196,600,000[430][441] | 2022 | 4 nm | FinFET | TSMC | [443] |

| 3GAE | 202,850,000[430] | 2022 | 3 nm | MBCFET | Samsung | [444][438][445] |

| N3 | 314,730,000[430] | 2022 | 3 nm | FinFET | TSMC | [446][447] |

| N4X | ? | 2023 | 4 nm | FinFET | TSMC | [448][449][450] |
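
The density figures in this table can be used to estimate how quickly transistor density has doubled between two nodes. The sketch below assumes smooth exponential growth between the two data points, which is a simplification, and uses the production years as listed; the function name is hypothetical. For the Intel 90 nm (2004) and Intel 14 nm (2014) rows it gives a doubling time of a little over two years, in line with the Moore's law figure quoted in the introduction.

```python
import math


def doubling_time_years(
    density_start: float, year_start: int, density_end: float, year_end: int
) -> float:
    """Years per doubling, assuming exponential growth between two points."""
    if density_start <= 0 or density_end <= density_start:
        raise ValueError("densities must be positive and increasing")
    if year_end <= year_start:
        raise ValueError("end year must be after start year")
    doublings = math.log2(density_end / density_start)
    return (year_end - year_start) / doublings


if __name__ == "__main__":
    # Intel 90 nm (2004) to Intel 14 nm (2014), densities from the table.
    intel = doubling_time_years(1_450_000, 2004, 37_500_000, 2014)
    print(f"Intel 90 nm to 14 nm: {intel:.2f} years per doubling")

    # TSMC 16 nm (2013) to N3 (2022), densities from the table.
    tsmc = doubling_time_years(28_880_000, 2013, 314_730_000, 2022)
    print(f"TSMC 16 nm to N3: {tsmc:.2f} years per doubling")
```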